Calibration Workflows For Field Recalibration Of CSACs

AUG 29, 2025 | 9 MIN READ

CSAC Calibration Background and Objectives

Chip-Scale Atomic Clocks (CSACs) represent a significant advancement in precision timing technology, offering unprecedented levels of accuracy in compact form factors. Since their initial development in the early 2000s, CSACs have evolved from laboratory curiosities to commercially viable products with applications across multiple industries. The fundamental principle behind CSACs leverages quantum properties of alkali metal atoms, typically rubidium or cesium, to create highly stable frequency references that maintain accuracy over extended periods.

The evolution of CSAC technology has been marked by continuous improvements in size reduction, power consumption optimization, and performance enhancement. Early prototypes developed by DARPA's Chip-Scale Atomic Clock program demonstrated the feasibility of miniaturizing atomic clock technology. Subsequent commercial implementations have further refined these designs, resulting in low-power devices whose nominal accuracy is often described as roughly one second of deviation over hundreds of years.

Despite these advancements, field calibration remains a critical challenge for CSAC deployment in real-world applications. Environmental factors such as temperature variations, magnetic field interference, and aging effects can degrade performance over time, necessitating periodic recalibration to maintain optimal accuracy. Traditional calibration approaches often require returning devices to specialized facilities, creating operational disruptions and increasing maintenance costs.

The primary objective of field recalibration workflows for CSACs is to develop methodologies that enable on-site adjustment and verification without compromising accuracy or requiring extensive specialized equipment. These workflows aim to extend operational lifetimes, reduce maintenance costs, and expand the practical utility of CSACs in field-deployed systems where continuous operation is essential.

Current technical goals include establishing standardized procedures for field calibration that can be executed by technicians with minimal specialized training, developing portable reference standards for verification, and implementing automated calibration routines that can compensate for environmental variations. Additionally, there is significant interest in creating self-calibrating systems that can maintain performance through algorithmic compensation for known drift patterns.

The trajectory of CSAC technology is moving toward greater integration with other systems, improved environmental resilience, and enhanced self-monitoring capabilities. As applications in telecommunications, navigation, defense systems, and scientific instrumentation continue to expand, the demand for reliable field calibration solutions becomes increasingly critical to realizing the full potential of this technology.

Market Requirements for Field Recalibration Systems

The market for field recalibration systems for Chip-Scale Atomic Clocks (CSACs) is primarily driven by the increasing deployment of these precision timing devices in remote and mission-critical applications. Current market research indicates that organizations operating in telecommunications, defense, aerospace, and scientific research sectors require reliable field recalibration solutions to maintain CSAC performance without returning devices to manufacturers.

Military and defense sectors represent the largest market segment, accounting for substantial demand due to the deployment of CSACs in tactical communication systems, unmanned vehicles, and portable navigation equipment. These users require field recalibration systems that can operate in harsh environments with minimal technical support while maintaining security protocols.

Telecommunications providers form another significant market segment, particularly with the expansion of 5G networks that demand precise timing synchronization across distributed infrastructure. These customers prioritize recalibration systems that can be integrated into existing network management frameworks and performed during scheduled maintenance windows to minimize service disruptions.

Survey data from end-users reveals specific requirements for field recalibration systems. Portability ranks as a primary concern, with 78% of potential users indicating a preference for solutions weighing less than 5 kg. Automation capabilities are equally important, with 82% of respondents expressing the need for systems that can perform calibration with minimal human intervention to reduce the risk of operator error.

Time efficiency emerges as another critical factor, as organizations cannot afford extended downtime for critical systems. The market demands recalibration procedures that can be completed within 30-60 minutes, including setup and verification steps. Additionally, there is strong preference for solutions that provide comprehensive documentation of calibration results for compliance and quality assurance purposes.

Cost sensitivity varies significantly across market segments. While defense contractors demonstrate willingness to invest in premium solutions that ensure reliability, commercial users typically seek more cost-effective options with clear return on investment through extended CSAC service life and reduced operational disruptions.

Emerging market requirements include remote monitoring capabilities, with growing interest in systems that can perform predictive diagnostics to anticipate calibration needs before performance degradation occurs. There is also increasing demand for recalibration systems that can handle multiple CSAC models from different manufacturers, reflecting the diversification of deployed timing solutions in complex organizations.

Technical Challenges in CSAC Field Calibration

Field calibration of Chip-Scale Atomic Clocks (CSACs) presents several significant technical challenges that must be addressed to ensure optimal performance in diverse operational environments. The miniaturization of atomic clock technology into chip-scale packages introduces unique constraints related to thermal sensitivity, aging effects, and environmental susceptibility that complicate the calibration process.

The primary challenge lies in maintaining frequency stability during field operations. CSACs typically exhibit frequency drift rates of 3×10^-10/month, which necessitates periodic recalibration to maintain the specified accuracy levels. However, traditional calibration methods requiring laboratory-grade reference standards are impractical in field settings, creating a fundamental tension between calibration needs and operational constraints.
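To make the recalibration need concrete, the quoted drift rate can be turned into an accumulated time error. The sketch below is illustrative only: it assumes the aging is linear and constant, treats a month as 30 days, and starts from zero initial frequency offset. The function names are hypothetical.

```python
import math

SECONDS_PER_DAY = 86_400.0
DAYS_PER_MONTH = 30.0  # assumption: one "month" is treated as 30 days


def time_error_from_aging(days, aging_per_month=3e-10, initial_offset=0.0):
    """Return the accumulated time error in seconds after `days` of free running.

    Uses x(t) = y0 * t + 0.5 * a * t**2, where y0 is the initial fractional
    frequency offset and a is the (assumed constant) aging rate per second.
    """
    t = days * SECONDS_PER_DAY
    aging_per_second = aging_per_month / (DAYS_PER_MONTH * SECONDS_PER_DAY)
    return initial_offset * t + 0.5 * aging_per_second * t * t


def days_until_error(limit_seconds, aging_per_month=3e-10):
    """Return the days until aging alone accumulates `limit_seconds` of error."""
    aging_per_second = aging_per_month / (DAYS_PER_MONTH * SECONDS_PER_DAY)
    return math.sqrt(2.0 * limit_seconds / aging_per_second) / SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"error after 30 days: {time_error_from_aging(30) * 1e6:.1f} us")
    print(f"days to reach 1 us:  {days_until_error(1e-6):.2f}")
```

Under these assumptions, 30 days of uncorrected aging accumulates roughly 389 µs of time error, and a 1 µs error budget is consumed in about a day and a half. Real devices may drift more slowly or non-linearly, so the numbers indicate scale rather than a specification.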

Temperature sensitivity represents another critical challenge. CSACs demonstrate frequency variations of approximately 5×10^-10 per degree Celsius, requiring sophisticated temperature compensation algorithms. Field environments with fluctuating temperatures demand dynamic calibration approaches that can adapt to these variations without compromising timing accuracy.
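A first-order version of such compensation can be sketched as follows. This is a minimal illustration that assumes the frequency shift is linear in temperature around a 25 °C reference point; both the linear model and the reference temperature are assumptions, and real devices generally need a per-unit characterization curve.

```python
def temperature_offset(temp_c, ref_temp_c=25.0, coeff_per_degc=5e-10):
    """Predicted fractional frequency shift at `temp_c` (linear model, assumed)."""
    return coeff_per_degc * (temp_c - ref_temp_c)


def compensated_offset(measured_offset, temp_c, ref_temp_c=25.0, coeff_per_degc=5e-10):
    """Remove the predicted temperature contribution from a measured offset."""
    return measured_offset - temperature_offset(temp_c, ref_temp_c, coeff_per_degc)


if __name__ == "__main__":
    # Hypothetical reading: 7e-9 fractional offset measured at 35 degC.
    residual = compensated_offset(7e-9, 35.0)
    print(f"residual after compensation: {residual:.2e}")
```

With the quoted coefficient, a 10 °C excursion alone predicts a shift of 5×10^-9, which is why the residual, rather than the raw reading, is the quantity a field calibration should act on.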

Power management during calibration presents additional complications. Field recalibration procedures must optimize the trade-off between calibration accuracy and power consumption, as CSACs typically operate with limited power resources in remote deployments. The calibration process itself can consume significant power, potentially disrupting normal operations or depleting available energy reserves.

The absence of standardized field calibration protocols further complicates implementation. Current approaches vary widely across manufacturers and applications, leading to inconsistent results and reliability issues. This lack of standardization impedes the development of universal calibration tools and methodologies that could streamline field operations.

Signal interference in field environments introduces additional calibration challenges. External RF signals, electromagnetic interference, and vibration can all affect CSAC performance and calibration accuracy. Developing robust calibration workflows that can filter out these environmental disturbances remains technically demanding.

Long-term aging effects also complicate field calibration strategies. CSACs experience frequency shifts due to physics package aging, requiring calibration workflows that can distinguish between environmental effects and intrinsic aging processes. This differentiation is particularly challenging without laboratory-grade reference standards available in the field.
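One possible way to separate the two contributions is to log frequency offset together with temperature over several days and fit both terms jointly. The sketch below assumes a model that is linear in elapsed days and in temperature deviation. The model, the function names, and the 25 °C reference are illustrative assumptions, not a documented vendor procedure.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_aging_and_temperature(days, temps_c, offsets, ref_temp_c=25.0):
    """Least-squares fit of y = y0 + aging * day + tempco * (T - Tref).

    Returns (y0, aging_per_day, tempco_per_degc).
    """
    rows = [[1.0, float(d), t - ref_temp_c] for d, t in zip(days, temps_c)]
    # Build and solve the 3x3 normal equations (A^T A) p = A^T y.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, offsets)) for i in range(3)]
    y0, aging, tempco = solve_linear(ata, aty)
    return y0, aging, tempco


if __name__ == "__main__":
    # Synthetic log: 1e-11/day aging plus 5e-10/degC temperature sensitivity.
    days = list(range(30))
    temps = [20.0 + (d * 7 % 11) for d in days]
    offsets = [1e-10 + 1e-11 * d + 5e-10 * (t - 25.0) for d, t in zip(days, temps)]
    print(fit_aging_and_temperature(days, temps, offsets))
```

The fit only separates the terms when temperature does not simply track elapsed time. If the site warms steadily over the logging period, aging and temperature become nearly collinear and the estimates are unreliable.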

Finally, the integration of calibration procedures with existing field equipment presents significant interface and compatibility challenges. Developing calibration workflows that seamlessly integrate with diverse operational systems while maintaining security and reliability requirements demands sophisticated engineering solutions that balance technical performance with practical usability.

Current Field Recalibration Methodologies

01 Temperature compensation and calibration methods

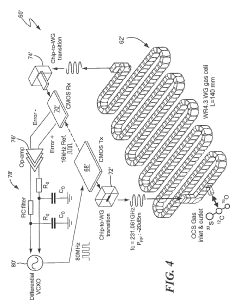

Temperature variations significantly affect the accuracy of chip-scale atomic clocks. Various temperature compensation and calibration methods have been developed to maintain clock stability across different operating conditions. These methods include digital temperature compensation algorithms, thermal modeling techniques, and real-time temperature monitoring systems that adjust clock parameters to counteract temperature-induced frequency shifts. Advanced calibration procedures can characterize the temperature response of individual CSACs and apply appropriate corrections to maintain frequency accuracy.

- Temperature compensation and calibration methods: Temperature variations can significantly affect the accuracy of Chip-Scale Atomic Clocks (CSACs). Various temperature compensation and calibration methods have been developed to maintain clock stability across different operating temperatures. These methods include digital temperature compensation algorithms, temperature-controlled oscillators, and adaptive calibration techniques that adjust clock parameters based on temperature measurements. These approaches help minimize frequency drift and maintain timing accuracy in varying environmental conditions.

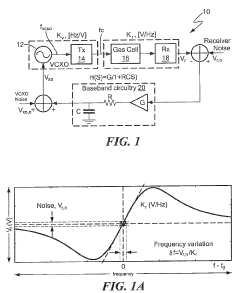

- Frequency stabilization and control systems: Frequency stabilization is crucial for CSACs to maintain accurate timekeeping. Advanced control systems have been developed that continuously monitor and adjust the clock frequency to maintain stability. These systems include phase-locked loops, digital feedback mechanisms, and reference frequency tracking algorithms. By implementing these control systems, CSACs can achieve higher precision and longer-term stability, making them suitable for applications requiring precise timing.

- Aging compensation and long-term stability: CSACs experience frequency drift over time due to aging effects in their components. Techniques for compensating for these aging effects include predictive modeling of frequency drift, periodic recalibration procedures, and adaptive algorithms that adjust for long-term changes. These methods help maintain the accuracy of CSACs over extended periods without requiring frequent manual calibration, ensuring reliable operation in long-term deployments.

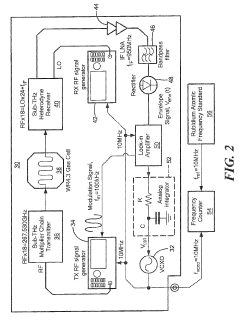

- Integration with external reference signals: CSACs can be calibrated using external reference signals from more accurate time sources. Methods for integrating CSACs with external references include synchronization with GPS signals, network time protocols, or higher-precision laboratory standards. These integration techniques allow for periodic correction of accumulated errors and enable traceability to international time standards, enhancing the overall accuracy and reliability of CSAC-based timing systems.

- MEMS packaging and environmental isolation: The physical packaging and environmental isolation of CSACs significantly impact their calibration stability. Advanced MEMS (Micro-Electro-Mechanical Systems) packaging techniques have been developed to shield the atomic reference cells from external disturbances. These include hermetic sealing, vacuum packaging, magnetic shielding, and vibration isolation systems. Proper environmental isolation reduces the need for frequent recalibration and improves the overall performance and reliability of CSACs in challenging operational environments.
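The external-reference comparison mentioned in the list above usually reduces to estimating a fractional frequency offset from a series of time-difference readings, for example CSAC 1PPS versus GPS 1PPS. The sketch below fits a least-squares slope to such readings. The sign convention (device minus reference) and the function name are assumptions made for illustration.

```python
def frequency_offset_from_phase(times_s, phase_s):
    """Estimate fractional frequency offset as the slope of phase versus time.

    times_s : elapsed time of each reading, in seconds
    phase_s : time difference (device minus reference, assumed), in seconds
    """
    n = len(times_s)
    if n < 2 or n != len(phase_s):
        raise ValueError("need at least two paired readings")
    mean_t = sum(times_s) / n
    mean_p = sum(phase_s) / n
    num = sum((t - mean_t) * (p - mean_p) for t, p in zip(times_s, phase_s))
    den = sum((t - mean_t) ** 2 for t in times_s)
    if den == 0.0:
        raise ValueError("readings must span a non-zero time interval")
    return num / den


if __name__ == "__main__":
    # Synthetic one-hour run: 200 ns fixed offset, device running fast by 1e-9.
    times = [100.0 * i for i in range(37)]
    phase = [2e-7 + 1e-9 * t for t in times]
    offset = frequency_offset_from_phase(times, phase)
    print(f"estimated offset: {offset:.3e}, correction to apply: {-offset:.3e}")
```

Fitting a slope over the whole run, rather than differencing the first and last readings, averages down reference jitter. This matters when the reference is a GPS receiver whose individual 1PPS edges are noisy.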

02 Frequency stabilization and drift correction

Maintaining long-term frequency stability is crucial for CSAC performance. Techniques for frequency stabilization include phase-locked loops, digital frequency control systems, and reference signal comparison methods. Drift correction algorithms can identify and compensate for aging effects and environmental influences. Some systems implement periodic recalibration routines that use external reference signals to adjust the CSAC output frequency, ensuring continued accuracy over extended operation periods.

03 Integration with external reference signals

CSACs can be calibrated using external reference signals from more accurate time sources. Methods include synchronization with GPS signals, network time protocols, or laboratory reference standards. These calibration techniques typically involve comparing the CSAC output with the reference signal, calculating the frequency offset, and applying appropriate corrections. Some systems implement automated calibration procedures that periodically check against external references to maintain accuracy without manual intervention.

04 MEMS packaging and environmental isolation

The physical packaging and environmental isolation of CSAC components significantly impact calibration requirements and stability. Advanced MEMS packaging techniques provide improved thermal isolation, vibration resistance, and magnetic shielding. Hermetically sealed packages with controlled internal environments help minimize external influences that would otherwise require complex calibration. Some designs incorporate specialized materials and structures to maintain stable operating conditions for the atomic reference cell, reducing the need for frequent recalibration.

05 Digital calibration algorithms and error correction

Modern CSACs employ sophisticated digital calibration algorithms to improve accuracy and stability. These algorithms can include adaptive filtering, machine learning techniques for error prediction, and digital signal processing methods to extract precise timing information. Error correction systems can identify and compensate for systematic errors in the clock output. Some implementations use field-programmable components that allow calibration parameters to be updated throughout the device lifecycle, adapting to changing conditions or incorporating improved calibration models.

Leading CSAC Manufacturers and Calibration Solution Providers

The field of Calibration Workflows for Chip-Scale Atomic Clocks (CSACs) is currently in a growth phase, with the market expanding as precision timing becomes critical across multiple industries. The global market for atomic clock calibration technologies is projected to reach significant value by 2030, driven by applications in telecommunications, defense, and navigation systems. Technologically, the field shows moderate maturity with established players like Infineon Technologies and Microsoft Technology Licensing leading development, while research institutions such as Peking University and Beihang University contribute fundamental advancements. Companies including Texas Instruments and Nordic Semiconductor are developing specialized calibration solutions, while system integrators like Siemens AG and Robert Bosch GmbH are incorporating these technologies into broader industrial applications, creating a competitive ecosystem balancing innovation with standardization efforts.

Endress+Hauser Gmbh+Co KG

Technical Solution: Endress+Hauser has developed specialized field recalibration workflows for CSACs used in process automation and measurement systems. Their approach focuses on maintaining timing precision in industrial environments where CSACs support critical measurement and control functions. The workflow incorporates their FieldCheck verification tool, adapted for timing applications, which allows technicians to perform on-site verification and calibration without process interruption. Their system utilizes a reference time source that can be synchronized to GPS or other standards, providing traceability while operating in field conditions. A key innovation is their "calibration without interruption" methodology, which allows for CSAC parameters to be adjusted while the device remains in operation, minimizing downtime in continuous processes. The workflow includes comprehensive documentation features that automatically generate calibration certificates and update device management systems with new calibration parameters and due dates. For hazardous environments, Endress+Hauser has developed intrinsically safe calibration interfaces that allow for CSAC recalibration in explosive atmospheres without compromising safety standards.

Strengths: Exceptional integration with industrial process control systems and existing plant maintenance workflows. The non-interruptive calibration approach minimizes production impact in continuous process industries. Weaknesses: The solution is highly specialized for process industry applications and may not be cost-effective for other CSAC deployment scenarios.

National Instruments Corp.

Technical Solution: National Instruments has created a comprehensive field recalibration workflow for CSACs that leverages their expertise in test and measurement systems. Their solution centers on the LabVIEW platform, providing a graphical programming environment for creating customized calibration procedures. The workflow incorporates automated test equipment that can be deployed in field settings, allowing for precise measurement of CSAC parameters without returning devices to a laboratory. NI's approach includes a reference oscillator subsystem that can be traced to national time standards, ensuring calibration accuracy. Their system features real-time analysis of frequency stability using Allan deviation and other statistical measures, providing immediate feedback on calibration quality. For field deployment, NI has developed portable PXI-based calibration systems that combine reference standards, measurement capabilities, and analysis software in ruggedized packages. The workflow includes detailed uncertainty analysis for each calibration parameter, ensuring that recalibrated CSACs meet application-specific requirements for timing precision.

Strengths: Exceptional measurement precision and comprehensive uncertainty analysis ensure high-quality calibration results. The flexible LabVIEW platform allows for easy customization to specific application requirements. Weaknesses: The solution typically requires specialized test equipment that may be costly and requires trained operators, potentially limiting deployment in some field scenarios.
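The Allan deviation analysis referred to in this workflow can be illustrated with the standard overlapping estimator computed from phase (time-error) samples. This is a generic sketch in Python, not National Instruments' implementation. The function name and the sampling assumptions (evenly spaced phase samples in seconds) are illustrative.

```python
import math


def overlapping_adev(phase_s, tau0_s, m):
    """Overlapping Allan deviation at averaging time tau = m * tau0_s.

    phase_s : evenly spaced time-error samples, in seconds
    tau0_s  : sampling interval, in seconds
    m       : averaging factor (positive integer)
    """
    n = len(phase_s)
    if m < 1 or n < 2 * m + 1:
        raise ValueError("need at least 2*m + 1 phase samples")
    tau = m * tau0_s
    terms = n - 2 * m
    # Sum of squared second differences of phase taken at stride m.
    total = sum(
        (phase_s[i + 2 * m] - 2.0 * phase_s[i + m] + phase_s[i]) ** 2
        for i in range(terms)
    )
    return math.sqrt(total / (2.0 * tau * tau * terms))


if __name__ == "__main__":
    # Synthetic data: pure linear frequency drift of 1e-12 per second.
    drift = 1e-12
    phase = [0.5 * drift * i * i for i in range(100)]
    print(f"ADEV at tau = 10 s: {overlapping_adev(phase, 1.0, 10):.3e}")
```

A constant frequency offset contributes nothing to this statistic, while a linear frequency drift D contributes D·τ/√2. This is one reason Allan deviation is a useful post-calibration check: it reports stability independently of the offset that the calibration just removed.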

Key Patents and Research in CSAC Calibration

Molecular clock

Patent | Active | US20190235445A1

Innovation

- A molecular clock utilizing rotational-state transitions of gaseous polar molecules in the sub-THz region, integrated with CMOS technology, providing a compact, low-power, and robust frequency reference with enhanced stability and instant start-up capabilities.

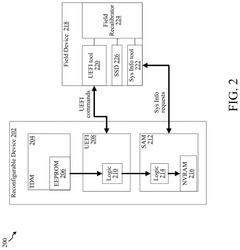

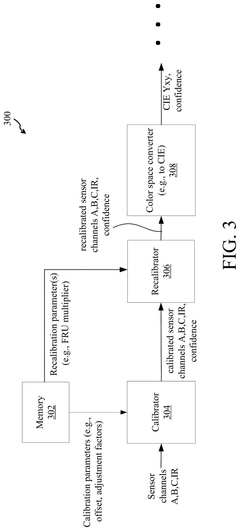

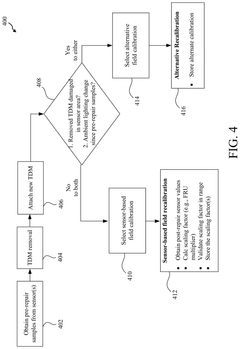

Field repair recalibration

Patent | Pending | US20240386860A1

Innovation

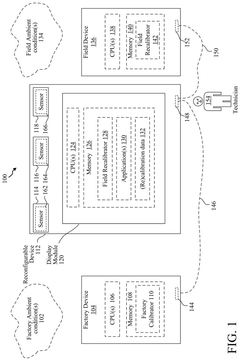

- A method for field repair recalibration that uses pre-repair and post-repair sensor measurements to calculate recalibration factors, allowing light and color sensors in display modules to deliver accurate measurements without factory calibration, utilizing a field recalibrator to generate in-field recalibration based on the properties of replaced components.

Environmental Factors Affecting CSAC Performance

Chip-Scale Atomic Clocks (CSACs) are highly sensitive to environmental conditions, which can significantly impact their performance and accuracy. Temperature variations represent the most critical environmental factor affecting CSACs, with frequency shifts of approximately 10^-10 per degree Celsius commonly observed. These temperature-induced effects manifest through both direct physical changes to the atomic resonance and alterations in the electronic components' behavior, necessitating comprehensive temperature compensation mechanisms.

Humidity presents another substantial challenge for CSAC performance, particularly in field deployments. Moisture ingress can alter the internal operating environment of the device, affecting both electronic components and the physics package. Modern CSACs typically incorporate hermetic sealing to mitigate humidity effects, though long-term exposure in high-humidity environments may still compromise performance parameters.

Barometric pressure fluctuations impact CSACs through subtle changes in the atomic vapor density and collision dynamics within the physics package. Field measurements have demonstrated frequency shifts of approximately 10^-11 per millibar of pressure change. This becomes particularly relevant for applications involving significant altitude variations or operations in weather-variable environments.

Mechanical vibration and shock represent critical concerns for field-deployed CSACs. Vibrations can induce frequency modulation effects and phase noise degradation, while severe mechanical shocks may cause temporary or permanent shifts in calibration parameters. Military-grade CSACs typically undergo rigorous testing to withstand operational vibration profiles of 10-2000 Hz at up to 7.7g RMS, though performance degradation remains measurable even within these specifications.

Electromagnetic interference (EMI) affects CSACs through multiple mechanisms, including perturbation of the atomic energy levels via the Zeeman effect and direct interference with control electronics. Field recalibration workflows must account for local magnetic field variations, which can differ substantially between laboratory and deployment environments. Shielding technologies and compensation algorithms have evolved significantly, though complete EMI immunity remains challenging.

Power supply variations, particularly in field conditions with unstable power sources, can introduce frequency instabilities through electronic noise coupling and thermal effects. Modern CSACs incorporate sophisticated power conditioning circuits, but field recalibration procedures must still account for power-related performance shifts, especially in battery-powered applications where voltage may decrease over operational periods.
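The temperature and pressure sensitivities quoted in this section can be combined into a rough environmental shift budget for a planned deployment. The sketch below uses the approximate coefficients given above. Combining the terms by root-sum-square assumes the two effects are independent, which is an assumption for illustration rather than a measured property.

```python
import math

TEMP_COEFF_PER_DEGC = 1e-10      # approximate value quoted in this section
PRESSURE_COEFF_PER_MBAR = 1e-11  # approximate value quoted in this section


def environmental_shift_budget(delta_temp_c, delta_pressure_mbar):
    """Return expected fractional frequency shifts for given excursions."""
    temp_term = TEMP_COEFF_PER_DEGC * abs(delta_temp_c)
    pressure_term = PRESSURE_COEFF_PER_MBAR * abs(delta_pressure_mbar)
    return {
        "temperature": temp_term,
        "pressure": pressure_term,
        "worst_case": temp_term + pressure_term,     # terms add linearly
        "rss": math.hypot(temp_term, pressure_term),  # independent terms (assumed)
    }


if __name__ == "__main__":
    # Hypothetical move from a lab to a site 10 degC warmer and 100 mbar lower.
    for name, value in environmental_shift_budget(10.0, -100.0).items():
        print(f"{name:12s} {value:.2e}")
```

For a 10 °C and 100 mbar excursion the two terms are each about 1×10^-9, so neither can be neglected when a calibration performed in one environment is carried over to another.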

Humidity presents another substantial challenge for CSAC performance, particularly in field deployments. Moisture ingress can alter the internal operating environment of the device, affecting both electronic components and the physics package. Modern CSACs typically incorporate hermetic sealing to mitigate humidity effects, though long-term exposure in high-humidity environments may still compromise performance parameters.

Barometric pressure fluctuations impact CSACs through subtle changes in the atomic vapor density and collision dynamics within the physics package. Field measurements have demonstrated frequency shifts of approximately 10^-11 per millibar of pressure change. This becomes particularly relevant for applications involving significant altitude variations or operations in weather-variable environments.

Mechanical vibration and shock represent critical concerns for field-deployed CSACs. Vibrations can induce frequency modulation effects and phase noise degradation, while severe mechanical shocks may cause temporary or permanent shifts in calibration parameters. Military-grade CSACs typically undergo rigorous testing to withstand operational vibration profiles of 10-2000 Hz at up to 7.7g RMS, though performance degradation remains measurable even within these specifications.

Electromagnetic interference (EMI) affects CSACs through multiple mechanisms, including perturbation of the atomic energy levels via the Zeeman effect and direct interference with control electronics. Field recalibration workflows must account for local magnetic field variations, which can differ substantially between laboratory and deployment environments. Shielding technologies and compensation algorithms have evolved significantly, though complete EMI immunity remains challenging.

Standardization and Certification Requirements

Standardizing and certifying CSAC field recalibration workflows is critical to ensuring that these atomic clocks maintain their precision and reliability across applications. Calibration methodologies are currently fragmented, with different manufacturers and industries employing different approaches. This lack of uniformity creates challenges for cross-platform compatibility and quality assurance.

Standards and metrology organizations, including IEEE and NIST, have begun developing frameworks specifically addressing CSAC calibration requirements. These emerging standards aim to establish minimum performance benchmarks, calibration intervals, and verification methodologies that ensure CSACs maintain their specified accuracy throughout their operational lifetime. The IEEE P1193 working group has recently expanded its scope to include portable atomic clock calibration procedures, which represents a significant step toward standardization.

Certification requirements for CSAC recalibration typically fall into three tiers based on application criticality. Tier 1 encompasses mission-critical applications in defense and aerospace, requiring traceable calibration to primary frequency standards with uncertainty below 1×10^-12. Tier 2 covers telecommunications and financial infrastructure applications with moderate requirements. Tier 3 addresses general commercial applications with less stringent specifications.

Field recalibration certification presents unique challenges compared to laboratory-based procedures. Environmental factors such as temperature variations, vibration, and electromagnetic interference must be accounted for in certification protocols. Consequently, certification bodies are developing specialized field verification procedures that incorporate environmental compensation algorithms and uncertainty budgets tailored to non-laboratory conditions.
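A field uncertainty budget of this kind is commonly built by combining independent contributions in quadrature (root-sum-square) and comparing the result with the applicable limit. The sketch below does this against the Tier 1 figure quoted above. The component names and values are hypothetical placeholders, and the assumption that the contributions are uncorrelated is a simplification.

```python
import math
from typing import Mapping

# Tier 1 uncertainty ceiling stated in the text.
TIER1_LIMIT = 1e-12


def combined_uncertainty(components: Mapping[str, float]) -> float:
    """Root-sum-square of standard uncertainties, assuming uncorrelated components."""
    return math.sqrt(sum(u * u for u in components.values()))


def meets_limit(components: Mapping[str, float], limit: float = TIER1_LIMIT) -> bool:
    """True when the combined uncertainty is strictly below the limit."""
    return combined_uncertainty(components) < limit


if __name__ == "__main__":
    # Hypothetical field budget, expressed as fractional frequency uncertainties.
    field_budget = {
        "reference_transfer": 3e-13,
        "temperature": 5e-13,
        "vibration": 2e-13,
        "magnetic_field": 4e-13,
    }
    total = combined_uncertainty(field_budget)
    print(f"combined uncertainty: {total:.2e}")
    print(f"meets Tier 1 limit: {meets_limit(field_budget)}")
```

Keeping each environmental contribution as a named entry makes it easy to see which term dominates and therefore where compensation effort pays off most.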

Documentation requirements constitute another essential aspect of standardization. Calibration certificates must include traceability information, environmental conditions during calibration, uncertainty analysis, and verification of post-calibration performance. The emerging ISO/IEC 17025 addendum specifically addresses field calibration documentation requirements for quantum timing devices, including CSACs.
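The documentation items listed above can be captured in a small record type that flags anything missing before a certificate is issued. The following is a minimal sketch. The class name, field names, and completeness rules are illustrative assumptions and are not drawn from any published standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FieldCalibrationCertificate:
    """Hypothetical record covering the four documentation items named in the text."""
    device_id: str
    traceability: str = ""  # reference standard and chain of comparison
    environment: Dict[str, float] = field(default_factory=dict)  # e.g. temperature, pressure
    uncertainty_components: Dict[str, float] = field(default_factory=dict)
    post_calibration_offset: Optional[float] = None  # verified fractional offset after adjustment

    def missing_items(self) -> List[str]:
        """Names of required items that have not been filled in yet."""
        missing = []
        if not self.traceability.strip():
            missing.append("traceability")
        if not self.environment:
            missing.append("environment")
        if not self.uncertainty_components:
            missing.append("uncertainty_components")
        if self.post_calibration_offset is None:
            missing.append("post_calibration_offset")
        return missing


if __name__ == "__main__":
    # Hypothetical, partially completed certificate.
    cert = FieldCalibrationCertificate(
        device_id="CSAC-0001",
        traceability="GPS-disciplined reference, site A",
        environment={"temperature_c": 31.5, "pressure_mbar": 948.0},
    )
    print("missing:", cert.missing_items())
```

A check like this can run on the field unit itself, so an incomplete record is caught while the technician is still on site.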

Looking forward, regulatory bodies are moving toward performance-based certification rather than prescriptive methodologies. This approach allows for technological innovation while maintaining stringent performance requirements. Additionally, mutual recognition arrangements between national metrology institutes are expanding to include field calibration of portable atomic frequency standards, facilitating international acceptance of calibration certificates across jurisdictions.

The development of these standards and certification requirements will significantly impact CSAC deployment in critical infrastructure, as regulatory compliance often determines market access in regulated industries such as telecommunications, financial services, and transportation systems.
