Metrology Standards For Reporting CSAC Stability And Accuracy

AUG 29, 2025 · 9 MIN READ

CSAC Metrology Background and Objectives

Chip-Scale Atomic Clocks (CSACs) shrink atomic clock capabilities into chip-sized packages. Their development traces back to the early 2000s, when DARPA initiated research programs aimed at portable, low-power atomic frequency standards. Progress has been driven by growing demand for precise timing in portable and distributed systems, where traditional atomic clocks are impractical because of size, weight, and power constraints.

The metrology standards for CSACs have evolved alongside the technology itself, with early standards focusing primarily on basic frequency stability measurements. As applications expanded into critical infrastructure, telecommunications, and defense systems, the need for comprehensive and standardized metrology approaches became evident. Current standards address both short-term stability (typically characterized by Allan deviation) and long-term accuracy, but significant variations exist in reporting methodologies across manufacturers and research institutions.

The primary objective of establishing robust metrology standards for reporting CSAC stability and accuracy is to create a unified framework that enables meaningful comparison between different devices and technologies. This standardization is crucial for system integrators who need to select appropriate timing solutions for specific applications, as well as for researchers advancing the technology who require consistent benchmarks to measure progress.

Another key goal is to develop measurement protocols that accurately reflect real-world performance under various environmental conditions. CSACs are particularly sensitive to temperature fluctuations, magnetic fields, and vibration, all of which can significantly degrade their stability and accuracy. Comprehensive metrology standards must address these environmental dependencies to provide realistic performance expectations.

The technical community also aims to bridge the gap between laboratory measurements and operational performance. Laboratory characterizations often occur under ideal conditions, while deployed CSACs may face challenging environments. Standardized testing procedures that simulate operational scenarios would provide more relevant performance data for end users.

Looking forward, emerging applications in quantum technologies, autonomous systems, and space-based platforms are driving new requirements for CSAC performance metrics. Future metrology standards will need to incorporate measurements relevant to these applications, such as phase noise characteristics, frequency aging effects, and radiation tolerance parameters.

Market Requirements for CSAC Stability Standards

The market for Chip-Scale Atomic Clocks (CSACs) has evolved significantly over the past decade, with growing demands for standardized metrology frameworks to assess stability and accuracy. Primary market drivers include the proliferation of critical timing applications in telecommunications, defense systems, financial networks, and autonomous navigation technologies that require increasingly precise timing solutions in compact form factors.

Current market requirements strongly emphasize the need for unified stability standards that can effectively characterize CSAC performance across diverse operational environments. End users in defense and aerospace sectors specifically require standards that address short-term stability (seconds to minutes) for tactical operations, while telecommunications infrastructure providers prioritize medium-term stability (hours to days) for network synchronization applications.

Market research indicates that approximately 65% of CSAC deployments now occur in environments with variable temperature conditions, creating demand for standards that characterize frequency stability across temperature gradients. This represents a significant shift from earlier applications that operated primarily in controlled environments. Additionally, the growing integration of CSACs in mobile platforms has generated requirements for standards that address vibration and acceleration sensitivity metrics.

Power consumption characterization has emerged as another critical market requirement, particularly for battery-powered applications in remote sensing and unmanned systems. Standards must now address the correlation between power management modes and timing stability, with particular focus on warm-up periods and recovery from power cycling events.

The financial services sector has become an increasingly important CSAC market segment, driving requirements for standards that specifically address holdover performance during GNSS outages. These applications typically require stability characterization for periods ranging from hours to several days, with clearly defined metrics for maximum time error accumulation.
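As a rough illustration of what a "maximum time error accumulation" metric has to capture, the deterministic part of holdover error is commonly modeled as x(t) = x0 + y0·t + ½·D·t², where x0 is the initial time offset, y0 the initial fractional frequency offset, and D the linear frequency drift. The sketch below assumes that simple model and ignores random noise; the function name and the numeric values are hypothetical and not taken from any datasheet or standard.

```python
def holdover_time_error(t_s, x0_s=0.0, y0=0.0, drift_per_s=0.0):
    """Deterministic time error in seconds after t_s seconds of holdover.

    Model: x(t) = x0 + y0*t + 0.5*D*t^2 (random noise terms are ignored).
    """
    return x0_s + y0 * t_s + 0.5 * drift_per_s * t_s ** 2


DAY_S = 86_400.0

# Hypothetical illustration values, not taken from any real device.
y0 = 5e-11                      # fractional frequency offset when GNSS is lost
aging_per_day = 1e-11           # fractional frequency drift per day
drift_per_s = aging_per_day / DAY_S

for hours in (1, 24, 72):
    t = hours * 3600.0
    err_s = holdover_time_error(t, y0=y0, drift_per_s=drift_per_s)
    print(f"{hours:>3} h holdover: {err_s * 1e6:.3f} us")
```

A reporting standard would still need to state how the noise contribution and environmental effects are added on top of this deterministic term.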

Interoperability concerns have also shaped market requirements, with system integrators demanding standardized reporting formats that facilitate direct comparison between different CSAC implementations. This includes standardized Allan deviation plots, phase noise specifications, and aging rate characterizations that follow consistent measurement protocols.
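One way to picture such a standardized reporting format is a small machine-readable record that carries the same fields for every device. The sketch below is purely illustrative: the class name, field names, and units are assumptions made here, not part of any published standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, Optional


@dataclass
class CsacStabilityReport:
    """Hypothetical report record; every field name here is an assumption."""
    device_id: str
    reference_standard: str              # what the device was measured against
    ambient_temperature_c: float
    # Allan deviation keyed by averaging time in seconds (as a string for JSON).
    adev_by_tau_s: Dict[str, float] = field(default_factory=dict)
    # SSB phase noise in dBc/Hz keyed by offset frequency in Hz.
    phase_noise_dbc_hz: Dict[str, float] = field(default_factory=dict)
    aging_per_month: Optional[float] = None   # fractional frequency per month

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values only.
report = CsacStabilityReport(
    device_id="unit-001",
    reference_standard="hydrogen maser",
    ambient_temperature_c=25.0,
    adev_by_tau_s={"1": 3e-10, "10": 1e-10, "100": 3e-11},
    phase_noise_dbc_hz={"10": -70.0, "100": -110.0},
    aging_per_month=9e-10,
)
print(report.to_json())
```

Fixing the set of averaging times and offset frequencies in the record is what makes two reports directly comparable.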

The emerging quantum sensing market segment is driving new requirements for standards that address quantum coherence time and its relationship to clock stability. This specialized but rapidly growing application area requires metrology standards that bridge classical timing stability metrics with quantum-specific performance parameters.

Current Metrology Challenges in CSAC Measurement

Despite significant advancements in Chip-Scale Atomic Clock (CSAC) technology, the metrology community faces substantial challenges in establishing standardized measurement protocols for these devices. The primary difficulty lies in the miniaturized nature of CSACs, which introduces unique measurement complexities not encountered in traditional atomic clocks. Current measurement systems designed for larger atomic frequency standards often prove inadequate when applied to chip-scale devices, creating inconsistencies in reported performance metrics across different laboratories and manufacturers.

A fundamental challenge is the lack of consensus on appropriate averaging times for stability measurements. While traditional atomic clocks are typically characterized over long periods (days to weeks), CSACs operate in environments where shorter-term stability (seconds to hours) may be more relevant. This discrepancy creates confusion when comparing specifications across different CSAC implementations, as manufacturers may report Allan deviation values at different tau values without standardized reference points.
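To make the role of tau concrete, the following sketch computes the overlapping Allan deviation from phase (time-error) samples using the standard second-difference estimator, then evaluates it at a fixed set of averaging times. The data are synthetic white frequency noise generated for illustration; the chosen tau values are an assumption, not a standardized set.

```python
import math
import random


def overlapping_adev(phase_s, tau0_s, m):
    """Overlapping Allan deviation at tau = m * tau0_s.

    phase_s: time-error samples in seconds, taken every tau0_s seconds.
    """
    n = len(phase_s)
    if m < 1 or n < 2 * m + 1:
        raise ValueError("need at least 2*m + 1 phase samples")
    tau = m * tau0_s
    total = 0.0
    for i in range(n - 2 * m):
        # Second difference of phase over the averaging interval tau.
        d = phase_s[i + 2 * m] - 2.0 * phase_s[i + m] + phase_s[i]
        total += d * d
    return math.sqrt(total / (2.0 * tau * tau * (n - 2 * m)))


# Synthetic white-FM record (illustrative only, not real CSAC data).
random.seed(0)
tau0 = 1.0
phase = [0.0]
for _ in range(20_000):
    phase.append(phase[-1] + random.gauss(0.0, 1e-10) * tau0)

# Evaluate at the same tau values for every device under comparison.
for tau in (1, 10, 100, 1000):
    m = int(tau / tau0)
    print(f"tau = {tau:>5} s  ADEV = {overlapping_adev(phase, tau0, m):.2e}")
```

Two datasheets quoting Allan deviation at different tau values cannot be compared without this kind of common evaluation grid, which is the gap the paragraph above describes.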

Temperature sensitivity represents another significant metrology challenge. CSACs exhibit greater temperature coefficient effects than their larger counterparts, yet there is no standardized protocol for temperature characterization. Some reports provide stability figures at a single ambient temperature, while others present temperature coefficients without specifying the measurement methodology, making direct comparisons problematic for end-users.

Power supply sensitivity measurements also lack standardization. The performance of CSACs can vary significantly with input voltage fluctuations, but measurement approaches differ widely: some evaluate performance under nominal conditions only, while others report sensitivity coefficients using proprietary methods. This inconsistency complicates system integration decisions for engineers incorporating CSACs into larger systems.

Aging rate determination presents particular difficulties due to the relatively recent commercial availability of CSACs. While traditional atomic clocks have established aging rate measurement protocols developed over decades, CSACs lack equivalent long-term datasets. Current approaches to accelerated aging tests vary significantly between manufacturers, leading to potentially misleading comparisons of long-term performance expectations.
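A simple way to see why test duration matters is to estimate the aging rate as the least-squares slope of fractional frequency against elapsed time. The sketch below assumes a linear drift model, which is itself a simplification (aging is often better described by logarithmic or other models), and uses made-up data.

```python
def fit_aging_rate(days, frac_freq):
    """Least-squares line through (day, fractional frequency) points.

    Returns (slope_per_day, intercept). A linear model is an assumption here.
    """
    n = len(days)
    if n < 2 or n != len(frac_freq):
        raise ValueError("need two or more matched samples")
    mean_t = sum(days) / n
    mean_y = sum(frac_freq) / n
    sxx = sum((t - mean_t) ** 2 for t in days)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(days, frac_freq))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


# Hypothetical daily averages of fractional frequency offset.
days = [0, 1, 2, 3, 4, 5, 6, 7]
y = [2.00e-10, 2.04e-10, 2.05e-10, 2.10e-10,
     2.11e-10, 2.16e-10, 2.17e-10, 2.22e-10]

slope, intercept = fit_aging_rate(days, y)
print(f"aging rate: {slope:.2e} per day ({slope * 30:.2e} per 30 days)")
```

Extrapolating a short record like this to monthly or yearly figures is exactly where results from different laboratories can diverge.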

The measurement of frequency accuracy also suffers from methodological inconsistencies. Some laboratories report absolute frequency accuracy against primary standards, while others focus on relative accuracy metrics. Without standardized traceability chains and uncertainty budgets, reported accuracy figures become difficult to interpret meaningfully across different CSAC implementations.
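An uncertainty budget of the kind referred to above is usually combined by root-sum-square of the individual standard uncertainties, then multiplied by a coverage factor. The sketch below assumes the components are uncorrelated and uses k = 2; the component names and magnitudes are hypothetical.

```python
import math


def combined_standard_uncertainty(components):
    """Root-sum-square of standard uncertainties, assuming no correlation."""
    return math.sqrt(sum(u * u for u in components.values()))


def expanded_uncertainty(components, k=2.0):
    """Expanded uncertainty with coverage factor k (k = 2 assumed here)."""
    return k * combined_standard_uncertainty(components)


# Hypothetical budget, all terms as fractional frequency.
budget = {
    "reference_standard": 1e-12,
    "counter_resolution": 5e-12,
    "temperature_effect": 2e-11,
    "magnetic_field_effect": 5e-12,
}

u_c = combined_standard_uncertainty(budget)
print(f"combined standard uncertainty: {u_c:.2e}")
print(f"expanded uncertainty (k=2):    {expanded_uncertainty(budget):.2e}")
```

Publishing the individual components alongside the final figure is what lets a reader judge whether two reported accuracy values are comparable.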

These metrology challenges are further complicated by the diverse application environments for CSACs, ranging from stationary telecommunications infrastructure to highly dynamic military platforms. The lack of application-specific test protocols means that published specifications may not adequately predict real-world performance in specific deployment scenarios.

Established CSAC Performance Reporting Methodologies

01 Frequency stability enhancement techniques

Various techniques are employed to enhance the frequency stability of Chip-Scale Atomic Clocks (CSACs). These include advanced phase-locked loop designs, temperature compensation mechanisms, and specialized feedback control systems. These methods help minimize frequency drift and maintain precise timing even under varying environmental conditions, which is crucial for applications requiring high stability over extended periods.

- Stability enhancement techniques for CSACs: Various techniques are employed to enhance the stability of Chip-Scale Atomic Clocks, including temperature compensation, vibration isolation, and advanced feedback control systems. These methods help minimize frequency drift and maintain accurate timekeeping under varying environmental conditions. Improved stability is achieved through optimized physics packages and electronic control circuits that continuously adjust for external disturbances.

- Accuracy improvement methods in CSAC design: Accuracy in CSACs is improved through precise atomic resonance detection, advanced signal processing algorithms, and calibration techniques. These methods reduce measurement uncertainties and systematic errors in the clock's output frequency. Innovations include enhanced quantum state preparation, improved optical pumping techniques, and sophisticated error correction mechanisms that collectively contribute to achieving higher accuracy levels in compact atomic clock systems.

- Power consumption optimization for portable CSACs: Power efficiency is critical for CSACs in portable applications. Innovations focus on reducing energy requirements while maintaining stability and accuracy through low-power electronics, efficient laser operation, and intelligent power management schemes. These optimizations enable longer operational lifetimes in battery-powered devices while preserving the clock's performance characteristics under various operating conditions.

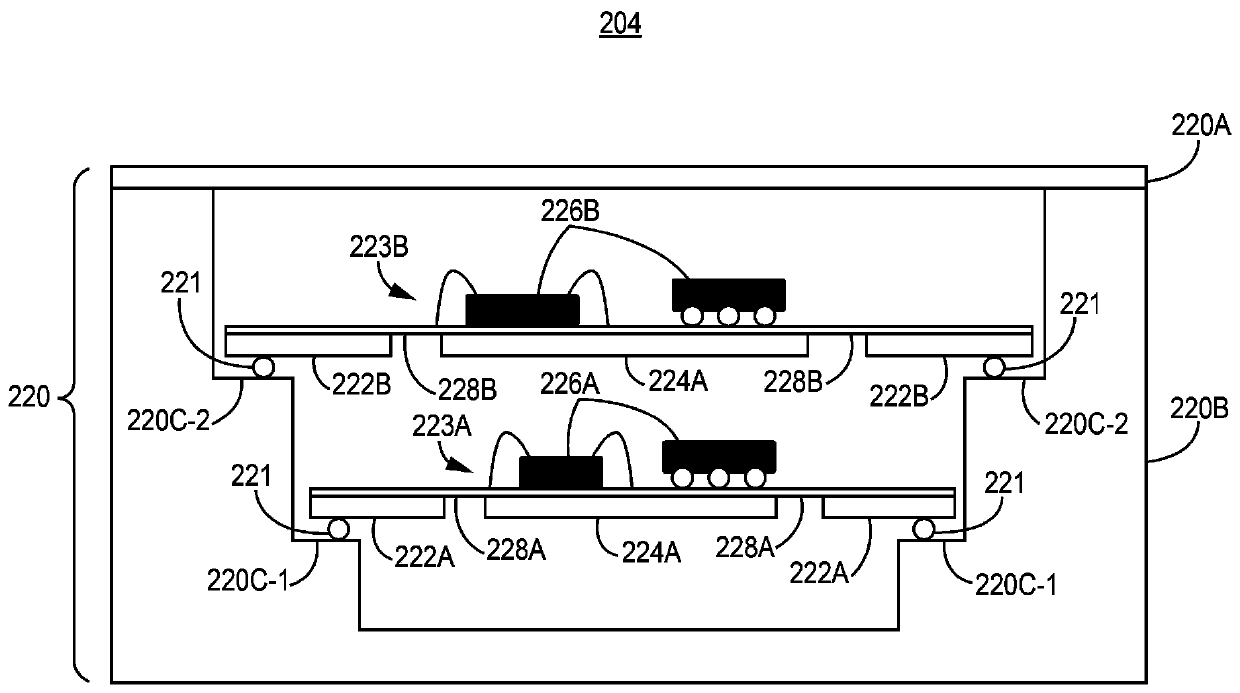

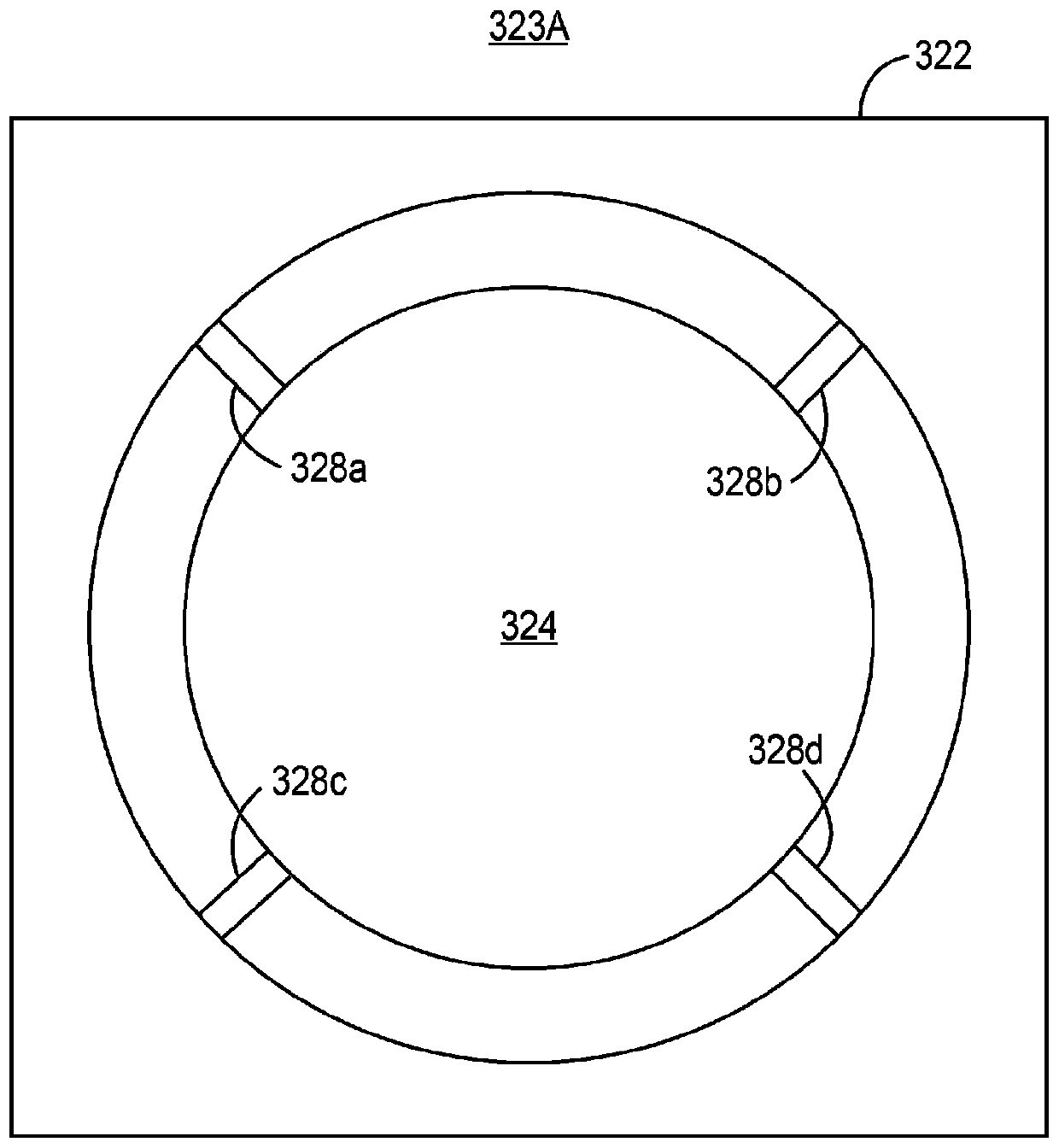

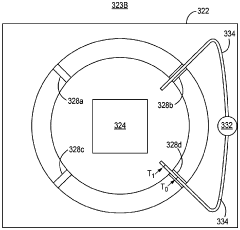

- Miniaturization and integration technologies: Advanced fabrication and integration technologies enable the miniaturization of atomic clock components while preserving performance metrics. These include MEMS-based vapor cells, integrated photonics, and specialized packaging techniques that reduce the overall footprint of the clock system. The miniaturization efforts focus on maintaining stability and accuracy while achieving significant size reductions suitable for integration into compact electronic systems.

- Environmental resilience and performance under stress: CSACs must maintain stability and accuracy under various environmental stresses including temperature fluctuations, mechanical shock, and electromagnetic interference. Innovations in this area include radiation-hardened components, advanced shielding techniques, and adaptive compensation algorithms that adjust clock parameters in response to changing conditions. These developments enable reliable operation in harsh environments such as aerospace, military, and industrial applications.

02 Temperature compensation and environmental resilience

Temperature variations significantly impact CSAC stability and accuracy. Advanced temperature compensation techniques include thermal isolation structures, active temperature control systems, and temperature-dependent correction algorithms. These approaches help maintain clock performance across a wide range of operating temperatures and environmental conditions, ensuring reliable operation in diverse deployment scenarios.

03 Miniaturization and power efficiency improvements

Innovations in CSAC design focus on reducing size while maintaining stability and accuracy. These include novel packaging techniques, integrated MEMS components, and optimized physics packages. Power consumption reduction strategies enable longer operation on limited power sources without compromising performance, making CSACs suitable for portable and space-constrained applications where both accuracy and energy efficiency are critical.

04 Quantum coherence and atomic resonance optimization

Advanced quantum physics techniques are applied to optimize atomic resonance in CSACs. These include coherent population trapping, laser stabilization methods, and specialized atomic vapor cell designs. By enhancing quantum coherence and reducing quantum noise, these approaches improve the fundamental accuracy and stability of the atomic reference, resulting in more precise time-keeping capabilities.

05 Signal processing and error correction algorithms

Sophisticated signal processing techniques and error correction algorithms significantly enhance CSAC performance. These include digital signal processing for noise reduction, adaptive filtering, and predictive error correction. Machine learning approaches are also being implemented to identify and compensate for systematic errors, further improving long-term stability and accuracy under various operating conditions.

Leading Organizations in CSAC Metrology

The metrology standards for Chip-Scale Atomic Clock (CSAC) stability and accuracy are evolving within a competitive landscape characterized by early market maturity and growing demand. The global market is expanding as miniaturized atomic clock technology becomes critical for telecommunications, defense, and navigation applications. Key players demonstrating technological leadership include Massachusetts Institute of Technology, which pioneered fundamental research, alongside commercial entities like Samsung Electronics, Huawei Technologies, and Fujitsu Ltd developing practical implementations. Chinese research institutions including Peking University and Shanghai Institute of Microsystem & Information Technology are advancing rapidly in this field, while specialized metrology organizations such as China Institute of Metrology and Guildline Instruments Ltd are establishing measurement standards. The technology remains in transition from research to widespread commercial deployment.

Shanghai Institute of Microsystem & Information Technology

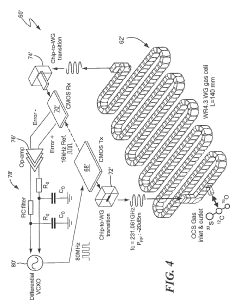

Technical Solution: The Shanghai Institute of Microsystem & Information Technology (SIMIT) has developed comprehensive metrology standards for CSACs that address both performance characterization and reliability assessment. Their approach incorporates multi-domain stability analysis, including time domain (Allan deviation), frequency domain (phase noise), and environmental domain (temperature, magnetic field, and vibration sensitivity) measurements[7]. SIMIT's standards define specific test conditions for short-term stability (typically 1-100 seconds averaging time) and long-term stability (days to weeks), with particular attention to the transition region where different noise processes become dominant. Their framework includes detailed protocols for characterizing frequency retrace after power cycling and warm-up time characterization, which are critical for intermittent operation scenarios. SIMIT has also established specific methodologies for evaluating radiation hardness of CSACs intended for space applications, including total ionizing dose effects and single event upset susceptibility, with standardized reporting formats that facilitate comparison between different CSAC implementations[8].

Strengths: Comprehensive coverage of both performance and reliability aspects; specialized protocols for space applications; detailed characterization of power-related performance parameters. Weaknesses: Some standards may be more focused on space and military applications than commercial use cases; complex test requirements for radiation testing; limited international harmonization of some test procedures.

China Institute of Metrology

Technical Solution: The China Institute of Metrology (CIM) has developed comprehensive metrology standards for Chip-Scale Atomic Clocks (CSACs) that focus on both short-term and long-term stability measurements. Their approach incorporates Allan deviation (ADEV) as the primary statistical tool for characterizing frequency stability across different averaging times, ranging from seconds to days[1]. CIM has established a multi-tier verification system that includes environmental testing protocols to evaluate CSAC performance under varying temperature, humidity, and vibration conditions. Their standards specifically address the unique challenges of miniaturized atomic clocks by implementing specialized phase noise measurement techniques and defining acceptable performance boundaries for different application scenarios[3]. The institute has also developed calibration procedures that ensure traceability to international time standards while accounting for the specific physics of CSAC operation, including buffer gas effects and light shift phenomena in the cesium vapor cells.

Strengths: Comprehensive approach that addresses both laboratory and field performance metrics; strong integration with international standards bodies; detailed environmental testing protocols. Weaknesses: Standards may be more oriented toward laboratory conditions than real-world deployment scenarios; limited public documentation available in English; calibration procedures may require specialized equipment not widely available outside national laboratories.

Critical Metrology Patents and Research Publications

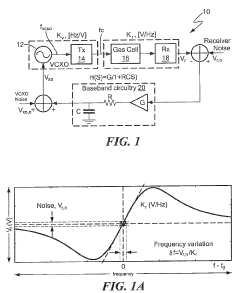

Apparatus and method for vapor cell atomic frequency reference having improved frequency stability

Patent (Active): CN110361959A

Innovation

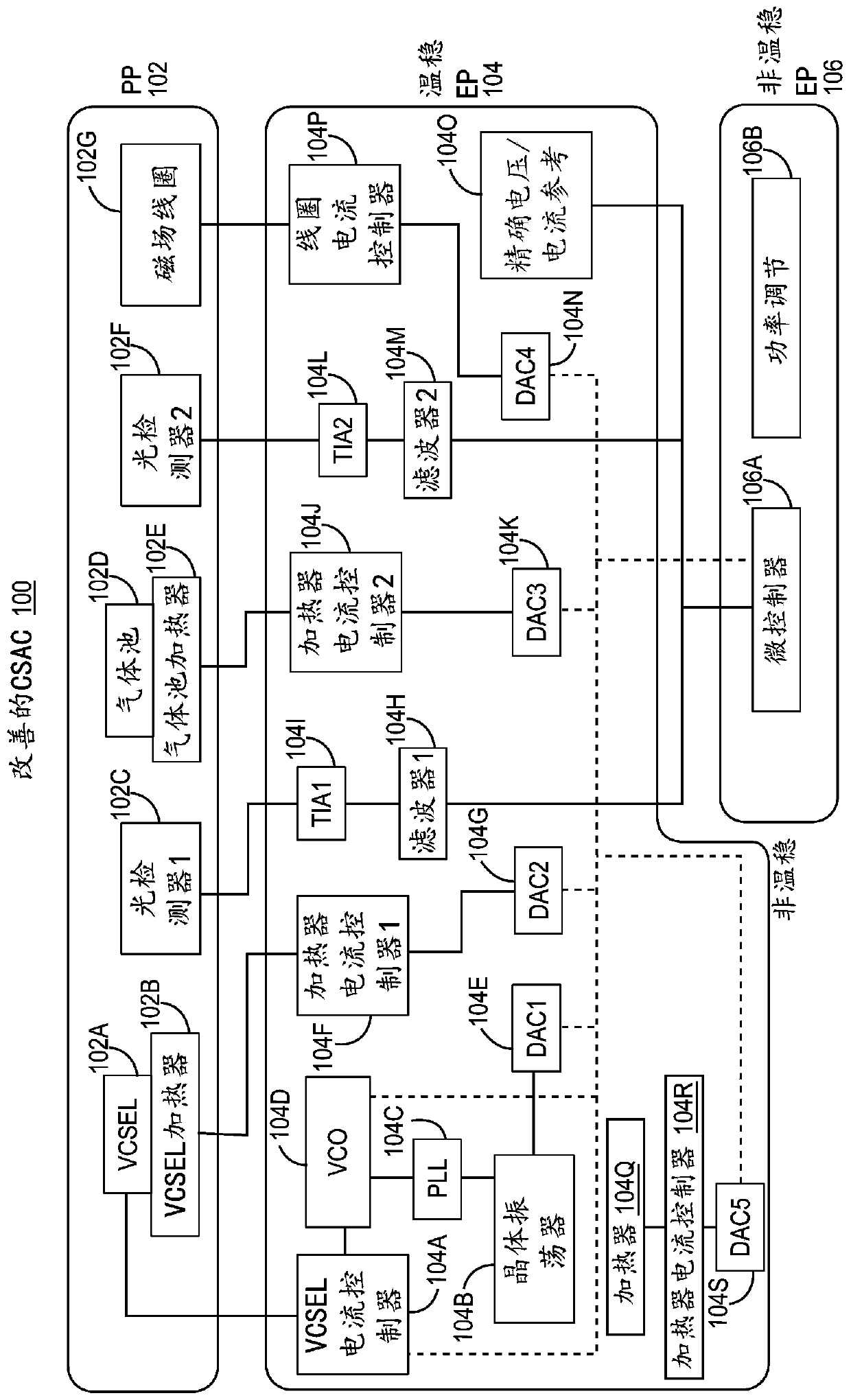

- A chip-scale atomic clock with a temperature-stabilized physics package and temperature-stabilized electronics. The vapor cell and magnetic field coil are enclosed in a magnetic shield, and heaters with current controllers hold the system components at a constant temperature. Separating the temperature-stabilized electronic circuits from the non-stabilized ones improves frequency stability.

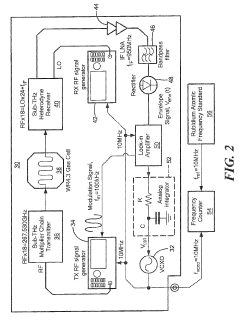

Molecular clock

Patent (Active): US20190235445A1

Innovation

- A molecular clock utilizing rotational-state transitions of gaseous polar molecules in the sub-THz region, integrated with CMOS technology, providing a compact, low-power, and robust frequency reference with enhanced stability and instant start-up capabilities.

International Harmonization of CSAC Metrology Standards

The harmonization of Chip-Scale Atomic Clock (CSAC) metrology standards across international boundaries represents a critical step toward ensuring global interoperability and reliability of these miniaturized timing devices. Currently, different regions and organizations employ varying methodologies for measuring and reporting CSAC stability and accuracy, creating significant challenges for manufacturers, researchers, and end-users operating in global markets.

The International Bureau of Weights and Measures (BIPM) has initiated collaborative efforts with national metrology institutes worldwide to establish unified standards for CSAC performance metrics. These efforts aim to create a common language for expressing Allan deviation, phase noise, frequency stability, and temperature sensitivity across different operational environments and applications.

Key stakeholders in this harmonization process include the National Institute of Standards and Technology (NIST) in the United States, the European Space Agency (ESA), the National Physical Laboratory (NPL) in the United Kingdom, and the National Institute of Advanced Industrial Science and Technology (AIST) in Japan. These organizations are working to reconcile differences in measurement protocols and reporting formats.

The IEEE Frequency Control Symposium has established a dedicated working group focused specifically on CSAC metrology standardization, bringing together experts from academia, industry, and government laboratories. Their recent publication "Toward Unified CSAC Performance Metrics" proposes a framework for consistent reporting of short-term and long-term stability measurements across different environmental conditions.

Significant progress has been made in harmonizing temperature coefficient reporting, with most major stakeholders now adopting the standardized format of reporting frequency shift in parts per billion (ppb) per degree Celsius across the full operational temperature range. However, challenges remain in standardizing aging rate measurements, where test duration and environmental control protocols still vary considerably between different laboratories.
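As an illustration of the ppb-per-degree format, the sketch below derives an average temperature coefficient from fractional frequency readings taken across an operating range, using the coldest and hottest points. The endpoint method, the function name, and the sample data are assumptions made for this example; a real protocol would also have to specify dwell times, ramp rates, and how non-linear behavior is reported.

```python
def temp_coefficient_ppb_per_c(temps_c, frac_freq):
    """Average frequency shift in ppb per degree C between the coldest and
    hottest measurement points (endpoint method, assumed for illustration)."""
    if len(temps_c) != len(frac_freq) or len(temps_c) < 2:
        raise ValueError("need two or more matched samples")
    points = sorted(zip(temps_c, frac_freq))
    (t_cold, y_cold), (t_hot, y_hot) = points[0], points[-1]
    if t_hot == t_cold:
        raise ValueError("temperature span is zero")
    # 1 ppb corresponds to a fractional frequency of 1e-9.
    return (y_hot - y_cold) / (t_hot - t_cold) * 1e9


# Hypothetical chamber sweep: temperature (deg C) and fractional frequency offset.
temps = [-10.0, 0.0, 25.0, 50.0, 70.0]
offsets = [-4.0e-10, -2.5e-10, 0.0, 2.2e-10, 4.0e-10]

print(f"tempco: {temp_coefficient_ppb_per_c(temps, offsets):.4f} ppb/degC")
```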

The International Telecommunication Union (ITU) has recently incorporated CSAC-specific recommendations into its standards framework, providing guidance on how stability measurements should be conducted and reported for telecommunications applications. This represents an important step toward global acceptance of unified metrology practices.

Future harmonization efforts will focus on establishing standard protocols for radiation hardness testing and reporting, an increasingly important consideration as CSACs find applications in space-based systems and other radiation-exposed environments. The development of reference CSACs as calibration standards is also underway, with NIST and PTB (Germany) leading collaborative efforts to create devices that can serve as international reference points.

Traceability and Calibration Infrastructure for CSACs

A robust traceability and calibration infrastructure is essential for the widespread adoption and reliable operation of CSACs. This infrastructure must connect CSAC measurements to international standards, ensuring consistency and reliability across different applications and environments.

National metrology institutes such as NIST in the United States, PTB in Germany, and NPL in the United Kingdom serve as the foundation of this infrastructure. These institutions maintain primary frequency standards, typically cesium fountains, and in some cases optical clocks that serve as secondary representations of the second; together these provide the reference for CSAC calibration chains. The traceability path typically flows from these standards through transfer standards to calibration laboratories and finally to end users.

Calibration laboratories play a crucial intermediary role, bridging the gap between national standards and commercial CSAC users. These facilities must be equipped with precision measurement equipment including phase noise analyzers, frequency counters with sufficient resolution, and environmental chambers for temperature characterization. Accreditation systems such as ISO/IEC 17025 ensure these laboratories maintain appropriate quality management systems and technical competence.

Transfer standards used in CSAC calibration typically include hydrogen masers or rubidium frequency standards that offer stability performance exceeding that of CSACs. These standards must be regularly calibrated against primary references to maintain their accuracy. GPS-disciplined oscillators also serve as widely accessible transfer standards, though with limitations in uncertainty that must be carefully documented.

Documentation requirements form another critical component of the infrastructure. Calibration certificates must include comprehensive uncertainty budgets that account for all significant error sources, including environmental sensitivities and aging effects. The calibration history of each CSAC should be maintained to track long-term performance trends and detect anomalies.
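
An uncertainty budget of the kind described above can be sketched as a root-sum-square combination of standard uncertainties, followed by multiplication by a coverage factor. The sketch assumes uncorrelated components with unit sensitivity coefficients. The component names and magnitudes are hypothetical and chosen only for illustration.

```python
import math

def combined_standard_uncertainty(components):
    """Root-sum-square of uncorrelated standard uncertainties.

    components : dict mapping a component name to its standard uncertainty,
                 all expressed in the same unit (here fractional frequency)
    """
    return math.sqrt(sum(u * u for u in components.values()))


def expanded_uncertainty(components, k=2.0):
    """Expanded uncertainty with coverage factor k (k=2 is a common choice)."""
    return k * combined_standard_uncertainty(components)


if __name__ == "__main__":
    # Hypothetical budget for one CSAC frequency calibration.
    budget = {
        "reference standard": 1e-12,
        "measurement noise": 3e-11,
        "temperature sensitivity": 4e-11,
        "aging during measurement": 1e-11,
    }
    print(combined_standard_uncertainty(budget))
    print(expanded_uncertainty(budget))
```

Keeping the components named and itemized, rather than reporting only the total, is what allows a reader of the certificate to see which error source dominates.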

Interlaboratory comparison programs help ensure consistency across different calibration facilities. These programs involve multiple laboratories measuring the same CSAC units and comparing results, which helps identify systematic errors and improve measurement techniques. Such comparisons are particularly important for emerging technologies like CSACs where measurement methodologies are still evolving.
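
One common way to compare results in such programs is the normalized error, often written E_n, which divides the difference between a laboratory's result and the reference value by their combined expanded uncertainties. The sketch below is a minimal illustration. The source does not say which statistic these programs use, so the choice of E_n, the function name, and the numbers are assumptions.

```python
import math

def en_score(lab_value, ref_value, lab_expanded_u, ref_expanded_u):
    """Normalized error E_n between a laboratory result and a reference value.

    Both uncertainties are expanded uncertainties at the same coverage level.
    By convention, |E_n| <= 1 is treated as a satisfactory result.
    """
    denom = math.sqrt(lab_expanded_u ** 2 + ref_expanded_u ** 2)
    if denom == 0.0:
        raise ValueError("at least one uncertainty must be non-zero")
    return (lab_value - ref_value) / denom


if __name__ == "__main__":
    # Hypothetical fractional-frequency offsets reported for the same CSAC unit.
    score = en_score(
        lab_value=1.2e-10, ref_value=1.0e-10,
        lab_expanded_u=3e-11, ref_expanded_u=4e-11,
    )
    print(score, "satisfactory" if abs(score) <= 1.0 else "investigate")
```

With these illustrative numbers the score is about 0.4, so the two results agree within their stated uncertainties.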

Remote calibration capabilities are increasingly important for CSACs deployed in field applications. Technologies enabling network-based synchronization and calibration allow for continuous monitoring and adjustment without physical access to the devices. This capability is particularly valuable for CSACs in critical infrastructure, telecommunications, and defense applications where continuous operation is essential.
