Compare DDR5 Bandwidth in Data Center Operations

SEP 17, 2025 · 9 MIN READ

DDR5 Evolution and Performance Objectives

DDR5 memory technology represents a significant evolution in data center memory architecture, building on the foundations established by previous DDR generations. Each DDR generation has focused on increasing bandwidth, reducing latency, and improving power efficiency to meet the escalating demands of data-intensive applications. DDR5, introduced in 2021, marks a substantial leap forward, with data rates reaching up to 6400 MT/s in its initial specification, double the 3200 MT/s of late-generation DDR4 modules.

The historical progression from DDR4 to DDR5 demonstrates a clear technical evolution path. While DDR4 topped out at approximately 3200 MT/s in mainstream implementations, DDR5 establishes a new baseline at 4800 MT/s with a roadmap extending to 8400 MT/s and beyond. This advancement directly addresses the growing memory bandwidth bottlenecks experienced in modern data center operations, particularly for applications involving real-time analytics, artificial intelligence workloads, and virtualized environments.
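To put the MT/s figures above into bandwidth terms, here is a minimal sketch that converts a data rate into peak theoretical bandwidth per module. It assumes a 64-bit (8-byte) data path per module, which the text does not state, and the function name `peak_bandwidth_gbs` is illustrative. Real sustained bandwidth is lower than these peaks.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Peak theoretical bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumes one transfer moves bus_width_bits of data; the 64-bit
    default is an assumption, not a figure from the article.
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Data rates quoted in the paragraph above.
for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-8400", 8400)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

Under that assumption, the DDR5 baseline of 4800 MT/s gives a 1.5x peak improvement over DDR4-3200, and 8400 MT/s gives roughly 2.6x.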

A critical architectural change in DDR5 is the split of each memory module into two independent subchannels. This lets the memory controller service two accesses concurrently, improving effective bandwidth without increasing pin count or widening the module's data bus. Combined with a longer burst length (BL16 compared to DDR4's BL8), each subchannel still delivers a full cache line per burst, enabling significantly higher data transfer rates while maintaining signal integrity across the memory subsystem.
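The interaction between channel width and burst length can be checked with a short calculation. This sketch assumes the commonly described layout of one 64-bit data channel per DDR4 module and two 32-bit data subchannels per DDR5 module. Those widths are assumptions not given in the text; only the burst lengths (BL8 and BL16) come from the paragraph above.

```python
def bytes_per_burst(channel_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst on one (sub)channel."""
    return channel_width_bits * burst_length // 8


# Assumed widths: 64-bit DDR4 channel, 32-bit DDR5 subchannel.
ddr4_burst = bytes_per_burst(channel_width_bits=64, burst_length=8)
ddr5_subchannel_burst = bytes_per_burst(channel_width_bits=32, burst_length=16)

print(f"DDR4 channel, BL8:      {ddr4_burst} bytes per burst")
print(f"DDR5 subchannel, BL16:  {ddr5_subchannel_burst} bytes per burst")
```

Under these assumptions both cases move 64 bytes per burst, so the narrower DDR5 subchannel still delivers the same amount of data per access while the module can run two such accesses independently.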

The performance objectives for DDR5 in data center environments are multifaceted. Primary among these is the need to support exponentially growing data processing requirements without proportional increases in power consumption. DDR5 addresses this through improved voltage regulation moved on-module (PMIC), reducing the standard operating voltage from 1.2V in DDR4 to 1.1V, while simultaneously delivering higher performance.

Another key objective is enhancing reliability at scale. DDR5 introduces on-die ECC (Error Correction Code) capabilities, complementing traditional system-level ECC to create a multi-layered approach to error detection and correction. This feature is particularly valuable in data center environments where memory errors can cascade into system-wide failures and data corruption issues.

The technology evolution also aims to improve memory density, with DDR5 supporting up to 64Gb per die compared to DDR4's 16Gb limitation. This density increase enables higher capacity memory configurations within the same physical footprint, addressing the memory capacity demands of in-memory databases and containerized application deployments common in modern data centers.

Data Center Memory Bandwidth Market Analysis

The data center memory bandwidth market is experiencing significant growth driven by the increasing demands of AI workloads, cloud computing, and big data analytics. Current market valuations place the global data center memory market at approximately 19.5 billion USD in 2023, with projections indicating growth to reach 35.8 billion USD by 2028, representing a compound annual growth rate (CAGR) of 12.9%. Within this broader market, DDR5 adoption is accelerating rapidly, with its market share expected to surpass DDR4 by 2025.
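The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula. This is a minimal sketch; the function name `cagr` is illustrative, and the inputs are the 2023 and 2028 market values from the paragraph.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# 19.5 billion USD in 2023 growing to 35.8 billion USD in 2028.
rate = cagr(start_value=19.5, end_value=35.8, years=2028 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

The result is consistent with the 12.9% figure cited in the text.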

Memory bandwidth has become a critical bottleneck in data center operations, particularly as computational capabilities continue to advance. The transition from DDR4 to DDR5 represents a substantial leap in addressing these bandwidth limitations. DDR5 delivers up to 6400 MT/s in its initial implementations, with a roadmap extending to 8400 MT/s, compared to DDR4's typical 3200 MT/s. This translates to practical bandwidth improvements of 50-70% in real-world data center applications.

Market research indicates that hyperscalers and cloud service providers are the earliest and most aggressive adopters of DDR5 technology, collectively accounting for 62% of current DDR5 deployments in data centers. Financial services and high-performance computing sectors follow at 18% and 14% respectively. Regionally, North America leads DDR5 adoption with 45% market share, followed by Asia-Pacific at 32% and Europe at 19%.

The demand drivers for increased memory bandwidth are evolving rapidly. Traditional database operations require approximately 25-30 GB/s per socket, while modern AI training workloads demand 100+ GB/s. This disparity is creating market segmentation, with premium pricing tiers emerging for high-bandwidth memory solutions. The price premium for DDR5 over equivalent DDR4 modules currently stands at 30-40%, though this gap is expected to narrow to 15-20% by late 2024 as manufacturing scales.
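As a rough illustration of what these per-socket figures imply, the sketch below estimates how many memory channels are needed to reach a target bandwidth. It assumes a 64-bit data path per channel and lets the caller apply a hypothetical efficiency factor for sustained versus peak bandwidth. Neither the channel width nor any efficiency value comes from the text.

```python
import math


def channels_needed(target_gbs: float, data_rate_mts: int, efficiency: float = 1.0) -> int:
    """Minimum number of channels to reach target_gbs.

    Assumes 8 bytes per transfer per channel (an assumption).
    efficiency scales peak to sustained bandwidth (hypothetical value).
    """
    peak_per_channel_gbs = data_rate_mts * 8 / 1000
    return math.ceil(target_gbs / (peak_per_channel_gbs * efficiency))


for workload, target in [("database (30 GB/s)", 30), ("AI training (100 GB/s)", 100)]:
    ddr4 = channels_needed(target, 3200)
    ddr5 = channels_needed(target, 4800)
    print(f"{workload}: DDR4-3200 needs {ddr4} channel(s), DDR5-4800 needs {ddr5}")
```

At peak rates the gap looks small, but passing a lower `efficiency` value shows how quickly the channel count grows once sustained bandwidth falls below the theoretical figure.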

Customer surveys reveal that 78% of enterprise data center operators plan to incorporate DDR5 in their next refresh cycle, with 53% citing memory bandwidth as a primary constraint in their current infrastructure. The total addressable market for DDR5 in data centers is expected to reach 12.3 billion USD by 2026, representing a 67% share of the overall data center memory market.

Competition in this space is intensifying, with memory manufacturers investing heavily in DDR5 production capacity. Samsung, SK Hynix, and Micron collectively control 94% of the DDR5 DRAM market, with emerging players from China seeking to capture market share through competitive pricing strategies despite technological disadvantages in advanced node manufacturing.

DDR5 Technical Challenges and Global Development Status

DDR5 memory technology represents a significant advancement over its predecessor DDR4, offering substantial improvements in bandwidth, capacity, and power efficiency. However, the implementation of DDR5 in data center operations faces several technical challenges that must be addressed to fully realize its potential benefits.

One of the primary technical challenges is the increased signal integrity requirements due to higher operating frequencies. DDR5 operates at speeds of 4800-6400 MT/s compared to DDR4's 2133-3200 MT/s, which introduces more complex signal integrity issues including crosstalk, reflections, and jitter. These challenges necessitate more sophisticated PCB designs and signal routing techniques in server motherboards.
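One way to see why signal integrity gets harder is to look at the unit interval, the time window available for each transferred bit on a data line. The sketch below derives it directly from the data rates quoted above; the function name `unit_interval_ps` is illustrative.

```python
def unit_interval_ps(data_rate_mts: float) -> float:
    """Time per transfer on one data line, in picoseconds."""
    transfers_per_second = data_rate_mts * 1e6
    return 1e12 / transfers_per_second


for name, rate in [("DDR4-2133", 2133), ("DDR4-3200", 3200),
                   ("DDR5-4800", 4800), ("DDR5-6400", 6400)]:
    print(f"{name}: {unit_interval_ps(rate):.2f} ps per bit")
```

Going from 3200 MT/s to 6400 MT/s halves the window per bit, so a fixed amount of jitter, crosstalk, or reflection consumes twice the share of the timing budget.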

Power management represents another significant hurdle. While DDR5 offers improved power efficiency per bit transferred, the overall power consumption can be higher due to increased operating frequencies. Data centers already struggling with thermal management and energy costs must develop more effective cooling solutions and power delivery systems to accommodate DDR5 memory.

The transition to DDR5 also introduces compatibility challenges with existing infrastructure. Server architectures need substantial redesigns to support the new memory standard, including memory controllers, power delivery systems, and thermal solutions. This creates a complex migration path for data centers with significant investments in DDR4-based infrastructure.

Globally, DDR5 development has seen varied progress across different regions. North America and East Asia, particularly South Korea, Taiwan, and Japan, lead in DDR5 technology development and manufacturing. Companies like Samsung, SK Hynix, and Micron have established strong positions in DDR5 production, while Intel and AMD have developed compatible processors and chipsets.

Europe has focused on specialized applications of DDR5 in high-performance computing and automotive sectors, with companies like Infineon contributing to the ecosystem. China has been investing heavily to reduce dependency on foreign memory technologies, with companies like CXMT working to develop domestic DDR5 capabilities.

The development status also varies by industry segment. Cloud service providers and hyperscale data centers are early adopters, implementing DDR5 in their newest server deployments to gain competitive advantages in performance. Enterprise data centers are adopting a more cautious approach, balancing the benefits against the costs of infrastructure upgrades.

Research institutions worldwide are actively working on addressing DDR5's technical limitations, with significant efforts directed toward signal integrity improvements, more efficient power management solutions, and enhanced thermal designs. These collaborative efforts are gradually overcoming the initial implementation challenges, paving the way for broader DDR5 adoption in data center operations.

Current DDR5 Implementation Strategies in Data Centers

01 DDR5 memory architecture for increased bandwidth

DDR5 memory architecture introduces significant improvements in bandwidth through structural changes such as dual-channel architecture, increased data rates, and enhanced bus efficiency. These architectural innovations enable higher throughput for data-intensive applications while maintaining power efficiency. The design includes optimized memory controllers and improved signal integrity to support the increased data transfer rates.

- DDR5 memory architecture for increased bandwidth: DDR5 memory architecture introduces significant improvements in bandwidth through enhanced channel design, higher data rates, and optimized signal integrity. The architecture supports dual-channel operation with independent subchannels, allowing for parallel data transfers and increased throughput. These architectural enhancements enable DDR5 to achieve substantially higher bandwidth compared to previous memory generations, making it suitable for data-intensive applications.

- Power management techniques for DDR5 memory bandwidth optimization: Advanced power management techniques are implemented in DDR5 memory to optimize bandwidth while maintaining energy efficiency. These include on-module voltage regulation (PMIC), dynamic voltage and frequency scaling, and intelligent power states that adjust based on workload demands. By efficiently managing power consumption, DDR5 memory can sustain higher data transfer rates without thermal throttling, resulting in more consistent bandwidth performance under various operating conditions.

- DDR5 memory controller designs for bandwidth enhancement: Specialized memory controller designs for DDR5 incorporate features that maximize available bandwidth. These controllers implement advanced scheduling algorithms, improved prefetching mechanisms, and optimized command sequencing to reduce latency and increase throughput. The controllers also support features like decision feedback equalization and adaptive training to maintain signal integrity at high speeds, ensuring that the theoretical bandwidth capabilities of DDR5 memory can be practically achieved in real-world applications.

- DDR5 memory interface technologies for high-speed data transfer: DDR5 memory interfaces employ advanced technologies to support high-speed data transfer rates. These include improved I/O buffer designs, enhanced clocking schemes, and refined signaling techniques that enable reliable communication at higher frequencies. The interfaces also implement features like decision feedback equalization, adaptive training, and improved error correction capabilities to maintain signal integrity and data reliability at increased speeds, directly contributing to higher effective bandwidth.

- DDR5 memory integration in computing systems for bandwidth-intensive applications: DDR5 memory integration in modern computing systems is optimized for bandwidth-intensive applications such as AI training, high-performance computing, and data analytics. System designs incorporate features like optimized memory channels, enhanced thermal management, and specialized interconnects to fully leverage DDR5's bandwidth capabilities. These systems often implement multi-channel memory architectures and advanced caching mechanisms to maximize data throughput for applications that require processing large datasets with minimal latency.

02 Power management techniques for DDR5 memory bandwidth optimization

Advanced power management techniques are implemented in DDR5 memory to optimize bandwidth while reducing energy consumption. These include dynamic voltage and frequency scaling, intelligent power states, and on-die power management circuitry. By efficiently managing power delivery and consumption, DDR5 memory can maintain high bandwidth performance while operating within thermal constraints, particularly important for mobile and data center applications.

03 Memory controller innovations for DDR5 bandwidth enhancement

Specialized memory controllers are designed to maximize DDR5 bandwidth through advanced scheduling algorithms, improved command queuing, and optimized data path management. These controllers implement features such as enhanced prefetching, intelligent request reordering, and parallel transaction processing to reduce latency and increase effective bandwidth. The controllers also include adaptive timing mechanisms to maintain optimal performance across varying workloads.

04 DDR5 signal integrity and interface improvements for bandwidth scaling

Signal integrity enhancements in DDR5 memory enable higher bandwidth through improved transmission line design, enhanced equalization techniques, and advanced clock distribution networks. The interface improvements include decision feedback equalization, optimized termination schemes, and reduced crosstalk between signal lines. These advancements allow for reliable data transmission at higher frequencies, directly contributing to increased memory bandwidth.

05 System-level integration of DDR5 for maximizing bandwidth in computing platforms

System-level integration approaches for DDR5 memory focus on maximizing available bandwidth through optimized motherboard designs, enhanced thermal solutions, and improved memory subsystem architectures. These integrations include multi-channel memory configurations, optimized trace routing, and platform-specific tuning. The system designs also incorporate advanced cooling solutions to maintain performance under high-bandwidth workloads and ensure compatibility with various computing platforms.

Key DDR5 Memory Manufacturers and Ecosystem

The DDR5 bandwidth market in data center operations is currently in a growth phase, with increasing adoption driven by the need for higher performance computing. The market is expanding rapidly as data centers upgrade infrastructure to handle AI workloads and big data processing. Leading players include Samsung Electronics, SK Hynix, and Micron Technology, which dominate DRAM production. Server manufacturers such as Huawei, Inspur, and IBM are integrating DDR5 into their enterprise solutions. The technology is approaching maturity, with major semiconductor companies including AMD and Qualcomm developing compatible chipsets. Chinese companies such as ChangXin Memory are emerging as regional competitors, while research collaborations with institutions like Beijing University of Posts & Telecommunications are advancing DDR5 optimization for specific workloads.

Advanced Micro Devices, Inc.

Technical Solution: AMD has developed comprehensive DDR5 memory controller solutions integrated into their EPYC server processors, specifically optimized for data center operations. Their implementation supports DDR5 speeds up to 5200 MT/s in their latest generation, with a memory bandwidth increase of approximately 50% over previous DDR4-based systems. AMD's approach includes advanced memory timing algorithms that dynamically adjust based on workload characteristics, optimizing for either bandwidth-intensive or latency-sensitive applications commonly found in data centers. Their memory controllers feature enhanced prefetching capabilities that can identify data access patterns typical in virtualization and database workloads, improving effective bandwidth utilization. For large-scale data center deployments, AMD has implemented sophisticated memory encryption technologies (AMD Memory Guard) that work with DDR5 without performance penalties, addressing security concerns in multi-tenant environments. Their platform also supports up to 12 DDR5 channels per socket in their high-end server offerings, enabling massive memory bandwidth aggregation for data-intensive workloads like AI training and in-memory databases.

Strengths: Excellent integration between processor architecture and memory controller, resulting in optimized real-world performance; superior security features with memory encryption; comprehensive memory RAS (Reliability, Availability, Serviceability) features. Weaknesses: Currently limited to 5200 MT/s speeds while memory vendors offer faster modules; higher power consumption under maximum memory bandwidth utilization; requires specific server design optimizations to achieve maximum performance.
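The channel count and data rate quoted for AMD's platform can be combined into a peak per-socket figure. This is a back-of-the-envelope sketch that assumes each channel carries 64 bits (8 bytes) of data per transfer, which the text does not state. The function name `socket_peak_bandwidth_gbs` is illustrative, and sustained bandwidth in practice will be lower.

```python
def socket_peak_bandwidth_gbs(channels: int, data_rate_mts: int,
                              bytes_per_transfer: int = 8) -> float:
    """Aggregate peak bandwidth per socket in GB/s (1 GB = 1e9 bytes).

    bytes_per_transfer=8 assumes a 64-bit data path per channel.
    """
    return channels * data_rate_mts * bytes_per_transfer / 1000


# 12 channels at 5200 MT/s, as described for the EPYC platform above.
peak = socket_peak_bandwidth_gbs(channels=12, data_rate_mts=5200)
print(f"Peak per-socket bandwidth: {peak:.1f} GB/s")
```

Under that assumption the platform's theoretical ceiling is just under 500 GB/s per socket.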

Micron Technology, Inc.

Technical Solution: Micron has developed advanced DDR5 memory solutions specifically optimized for data center operations, offering bandwidth up to 6400 MT/s, which represents a 1.85x improvement over DDR4. Their data center-focused DDR5 modules feature on-die ECC (Error Correction Code), improved refresh schemes, and dual-channel architecture that effectively doubles the memory channels while maintaining the same physical dimensions. Micron's implementation includes an integrated Power Management IC (PMIC) that moves voltage regulation onto the DIMM itself, improving signal integrity and allowing for better power delivery. Their DDR5 RDIMMs support densities up to 256GB per module, enabling higher memory capacity per server. Micron has also implemented Decision Feedback Equalization (DFE) and adaptive equalization techniques to maintain signal integrity at higher data rates, which is crucial for data center workloads requiring consistent performance.

Strengths: Industry-leading bandwidth capabilities with superior power efficiency (up to 20% less power per bit transferred compared to DDR4); advanced reliability features including on-die ECC and improved thermal management. Weaknesses: Higher initial implementation costs; requires significant platform architecture changes for data centers upgrading from DDR4; thermal challenges at maximum operating frequencies in dense server environments.

Critical DDR5 Bandwidth Enhancement Technologies

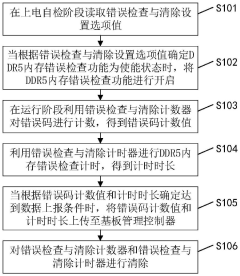

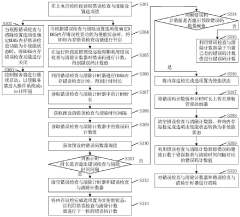

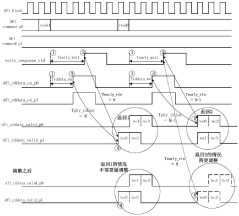

Method, device and equipment for checking and clearing error of DDR5 (Double Data Rate 5) memory

Patent · Pending · CN118260112A

Innovation

- Error checking and clearing counters and timers are set in the DDR5 memory. The option values are read during the power-on self-test phase and the error checking function is turned on. During the running phase, error codes are counted and the timing is recorded. When the preset conditions are met, the results are uploaded to the baseboard management controller, and the counters and timers are cleared for subsequent counting.
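The count, report, and clear cycle described in this patent summary can be sketched in a few lines. This is an illustrative model only, not the patented implementation: the summary does not say what the preset conditions are, so the error threshold and time window below are hypothetical, as are the class name `ErrorCheckMonitor` and the `report` callback that stands in for the upload to the baseboard management controller.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ErrorCheckMonitor:
    error_threshold: int                     # hypothetical preset condition: error count
    window_seconds: float                    # hypothetical preset condition: elapsed time
    report: Callable[[int, float], None]     # stand-in for the upload to the BMC
    error_count: int = 0
    window_start: Optional[float] = None

    def record_error(self, now: float) -> None:
        """Count one detected error and start the timer if it is not running."""
        if self.window_start is None:
            self.window_start = now
        self.error_count += 1
        self._report_if_due(now)

    def tick(self, now: float) -> None:
        """Periodic check so the time condition fires without new errors."""
        self._report_if_due(now)

    def _report_if_due(self, now: float) -> None:
        if self.window_start is None:
            return
        elapsed = now - self.window_start
        if self.error_count >= self.error_threshold or elapsed >= self.window_seconds:
            self.report(self.error_count, elapsed)
            # Clear the counter and timer for subsequent counting.
            self.error_count = 0
            self.window_start = None


uploads = []
monitor = ErrorCheckMonitor(error_threshold=3, window_seconds=60.0,
                            report=lambda count, elapsed: uploads.append((count, elapsed)))
for timestamp in (0.0, 1.0, 2.0):
    monitor.record_error(timestamp)   # third error reaches the threshold
monitor.record_error(10.0)
monitor.tick(75.0)                    # time window expires with one error pending
print(uploads)
```

Timestamps are passed in explicitly rather than read from a clock, which keeps the sketch deterministic and easy to test.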

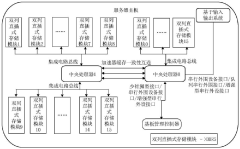

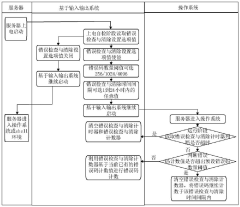

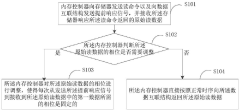

Data processing method, memory controller, processor and electronic device

Patent · Active · CN112631966B

Innovation

- The memory controller adjusts the phase of the returned original read data so that the delay from sending the early response signal to receiving the first data in the original read data is fixed each time, thus supporting simultaneous use of the early response function and the read cyclic redundancy check (CRC) function.

Power Efficiency Considerations in DDR5 Deployments

Power efficiency has emerged as a critical factor in DDR5 deployments within data center environments, where energy consumption directly impacts operational costs and environmental sustainability. DDR5 memory introduces significant improvements in power management compared to its predecessors, with operating voltage reduced from DDR4's 1.2V to 1.1V. This 8% voltage reduction translates to approximately 15-20% lower power consumption per bit transferred, creating substantial energy savings at scale.
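The link between the 8% voltage reduction and the 15-20% power figure can be illustrated with a first-order model. This sketch assumes dynamic switching power scales with the square of the supply voltage at fixed capacitance and frequency. That is a standard simplification and not something the text states, and it ignores static power and other architectural changes.

```python
def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Ratio of dynamic power after a voltage change, assuming P is proportional to V^2."""
    return (v_new / v_old) ** 2


voltage_drop = 1 - 1.1 / 1.2
power_drop = 1 - relative_dynamic_power(v_new=1.1, v_old=1.2)

print(f"Voltage reduction:       {voltage_drop:.1%}")
print(f"Dynamic power reduction: {power_drop:.1%}")
```

Under this model the voltage change alone accounts for roughly a 16% reduction, which falls inside the 15-20% range quoted above.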

The introduction of on-module voltage regulation in DDR5 represents a paradigm shift in memory power management. By moving voltage regulation from the motherboard to the memory module itself, DDR5 achieves more precise power delivery with reduced noise and improved efficiency. This architectural change enables finer-grained power states and more responsive transitions between operating modes, further enhancing energy conservation during varying workload conditions.

DDR5's advanced power management features include enhanced refresh management and more sophisticated power-down modes. The memory can now operate with multiple independent refresh zones, allowing portions of memory to be refreshed while others remain accessible. This capability reduces the performance impact of refresh operations while maintaining lower average power consumption, particularly beneficial for data center applications with continuous memory access patterns.

When comparing bandwidth-to-power ratios, DDR5 demonstrates superior efficiency metrics. While DDR5 offers up to twice the bandwidth of DDR4, it does not double the power consumption, resulting in significantly improved performance per watt. Initial deployments show that DDR5 systems can deliver 30-40% more data processing capability for the same power envelope compared to equivalent DDR4 configurations.

Data center thermal management also benefits from DDR5's improved power characteristics. The reduced heat generation per module allows for higher density deployments without corresponding increases in cooling requirements. This advantage becomes particularly valuable in high-performance computing environments where memory-intensive workloads previously created thermal constraints that limited system density or required additional cooling infrastructure.

For large-scale deployments, the cumulative power savings from DDR5 adoption can be substantial. Analysis of typical data center operations indicates that memory subsystems account for 20-30% of server power consumption. By implementing DDR5 technology, organizations can potentially reduce overall server power requirements by 3-6%, which translates to meaningful reductions in operational expenses and carbon footprint when multiplied across thousands of servers in modern data centers.
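The 3-6% server-level figure follows from multiplying the memory share of server power by the memory power reduction. The sketch below uses the 20-30% share from the paragraph above and the 15-20% per-bit reduction cited at the start of this section. Treating the per-bit reduction as a reduction in total memory power is a simplifying assumption.

```python
def server_power_saving(memory_share: float, memory_saving: float) -> float:
    """Fraction of total server power saved when only memory power changes."""
    return memory_share * memory_saving


low = server_power_saving(memory_share=0.20, memory_saving=0.15)
high = server_power_saving(memory_share=0.30, memory_saving=0.20)

print(f"Estimated server power saving: {low:.0%} to {high:.0%}")
```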

Total Cost of Ownership Analysis for DDR5 Adoption

The adoption of DDR5 memory in data center environments necessitates a comprehensive Total Cost of Ownership (TCO) analysis to justify the significant investment required for this technology transition. Initial acquisition costs for DDR5 modules currently command a 30-40% premium over equivalent DDR4 solutions, representing a substantial upfront expenditure for large-scale deployments. However, this analysis must extend beyond purchase price to capture the full economic impact.

Power efficiency improvements in DDR5 technology translate to an approximately 15-20% reduction in energy consumption compared to previous-generation memory. For data centers operating at scale, this efficiency gain compounds into significant operational savings over a typical 3-5 year server lifecycle. When cooling is factored in, the reduced thermal output of DDR5 modules further decreases HVAC demand, potentially reducing cooling costs by 8-12% for memory-intensive workloads.

Infrastructure longevity represents another critical TCO component. DDR5's higher bandwidth capabilities enable servers to remain performance-competitive for extended periods, potentially extending hardware refresh cycles by 12-18 months. This extension of useful service life distributes the initial acquisition premium across a longer operational timeframe, improving the overall return on investment calculation.

Maintenance and reliability factors also favor DDR5 adoption. The improved error detection and correction capabilities reduce system downtime incidents related to memory failures by an estimated 25-30%. Given that the average cost of data center downtime exceeds $5,000 per minute in enterprise environments, these reliability improvements contribute substantial value to the TCO equation.

Migration costs present a significant consideration in the DDR5 adoption timeline. Organizations must account for compatibility testing, potential application optimization, and staff training. These transition expenses typically add 15-20% to the initial implementation costs but diminish rapidly as organizational expertise develops.

Performance scaling represents the most compelling TCO justification for DDR5. The increased memory bandwidth directly correlates to improved application throughput, allowing data centers to service more requests with existing compute resources. This efficiency multiplier enables either increased workload density or reduced server count requirements, with benchmarks indicating 20-35% improvement in data-intensive operations per server.

When all factors are considered in a comprehensive five-year TCO model, DDR5 implementations demonstrate a break-even point at approximately 18-24 months, with cumulative cost advantages becoming increasingly significant in years 3-5 of deployment. Organizations with data-intensive workloads and high energy costs realize the most favorable TCO outcomes from early DDR5 adoption.
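A break-even point of this kind can be sketched with a deliberately simple model: a one-time extra cost (acquisition premium plus migration) recovered by a constant monthly saving. The dollar figures below are hypothetical inputs chosen for illustration, not values from the analysis above, and the model ignores discounting, price changes, and extended refresh cycles.

```python
import math
from typing import Optional


def break_even_months(extra_upfront_cost: float, monthly_savings: float) -> Optional[int]:
    """First whole month in which cumulative savings cover the extra upfront cost.

    Returns None when there are no savings, since break-even is never reached.
    """
    if monthly_savings <= 0:
        return None
    return math.ceil(extra_upfront_cost / monthly_savings)


def cumulative_advantage(extra_upfront_cost: float, monthly_savings: float, months: int) -> float:
    """Net cost advantage of DDR5 after a number of months (negative before break-even)."""
    return monthly_savings * months - extra_upfront_cost


# Hypothetical per-server inputs (assumptions for illustration only).
EXTRA_UPFRONT_COST = 1000.0  # DDR5 premium plus migration cost, in dollars
MONTHLY_SAVINGS = 50.0       # energy, cooling, and throughput gains, in dollars

months = break_even_months(EXTRA_UPFRONT_COST, MONTHLY_SAVINGS)
print(f"Break-even after {months} months")
for year in (1, 3, 5):
    net = cumulative_advantage(EXTRA_UPFRONT_COST, MONTHLY_SAVINGS, year * 12)
    print(f"Year {year}: net advantage ${net:,.0f} per server")
```

Varying the two inputs shows how sensitive the break-even month is to energy prices and workload intensity, which is why data-intensive deployments with high energy costs reach it soonest.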
