Compare DDR5 with CXL: Memory Interconnect Efficiency

SEP 17, 2025 | 9 MIN READ

DDR5 and CXL Evolution Background and Objectives

The evolution of memory technologies has been a critical factor in the advancement of computing systems, with DDR (Double Data Rate) memory serving as the backbone of system memory architecture for decades. DDR5, standardized by JEDEC in 2020 and available in mainstream platforms from 2021, is the fifth generation of DDR technology and brings significant improvements in bandwidth, capacity, and power efficiency over its predecessor, DDR4. This evolution was driven by the increasing demands of data-intensive applications, cloud computing, and artificial intelligence workloads that require higher memory performance and capacity.

Concurrently, Compute Express Link (CXL) emerged as a new open industry-standard interconnect technology designed to address the limitations of traditional memory architectures. First announced in 2019, CXL is built upon the PCIe 5.0 physical layer and aims to provide high-bandwidth, low-latency connectivity between the CPU and devices such as accelerators, memory expanders, and smart I/O devices. The development of CXL was motivated by the need for more flexible and scalable memory architectures that could overcome the constraints of traditional DDR-based memory systems.

The primary objective of comparing DDR5 with CXL is to evaluate their respective efficiencies in memory interconnect technologies, particularly in addressing the growing memory demands of modern computing workloads. DDR5 focuses on enhancing traditional memory performance metrics, while CXL introduces a new paradigm for memory expansion and disaggregation, enabling more flexible resource allocation in data centers and high-performance computing environments.

Both technologies represent different approaches to solving the memory wall problem – the growing disparity between processor and memory performance. DDR5 continues the evolutionary path of traditional memory technologies with incremental improvements, whereas CXL introduces a revolutionary approach by enabling memory pooling, sharing, and coherent memory access across different compute resources.

Understanding the technical characteristics, performance implications, and use cases for both DDR5 and CXL is essential for system architects and technology strategists to make informed decisions about future memory architectures. This comparison aims to provide insights into how these technologies complement or compete with each other in various application scenarios, and how they might coexist in future computing systems to optimize memory interconnect efficiency.

Market Demand Analysis for Advanced Memory Interconnects

The demand for advanced memory interconnect technologies has surged dramatically in recent years, driven primarily by the exponential growth in data-intensive applications. Cloud computing, artificial intelligence, machine learning, and big data analytics have collectively created unprecedented requirements for memory bandwidth, capacity, and efficiency. Market research indicates that the global server memory market is expected to grow at a compound annual growth rate of 19.4% from 2021 to 2026, reaching a value of $26.8 billion.

Traditional memory architectures are increasingly struggling to meet these demands, creating a significant market opportunity for advanced interconnect technologies like DDR5 and CXL. Enterprise data centers represent the largest market segment, accounting for approximately 42% of the total addressable market, followed by hyperscale cloud providers at 31% and high-performance computing at 18%.

The shift toward disaggregated memory architectures is becoming a critical market driver. Organizations are seeking solutions that allow memory resources to be pooled and allocated dynamically across computing resources, rather than being permanently attached to specific processors. This trend has accelerated the adoption timeline for CXL technology, with 67% of enterprise IT decision-makers in a recent industry survey indicating plans to implement CXL-based memory solutions within the next three years.

Memory cost optimization has emerged as another significant market factor. As data volumes continue to expand, organizations are increasingly sensitive to the total cost of memory ownership. Technologies that enable more efficient memory utilization can deliver substantial economic benefits. Market analysis shows that enterprises can reduce memory-related infrastructure costs by up to 30% through optimized memory interconnect technologies.

Latency requirements vary significantly across market segments. Financial services and high-frequency trading applications demand ultra-low latency solutions, while cloud service providers prioritize consistent performance at scale. This diversity in requirements is creating distinct market niches for different memory interconnect technologies, with DDR5 maintaining strength in traditional computing environments while CXL gains traction in composable infrastructure deployments.

Sustainability considerations are also influencing market demand. Data centers now account for approximately 1% of global electricity consumption, with memory systems representing a significant portion of this energy usage. Technologies that improve memory interconnect efficiency can substantially reduce power consumption, aligning with corporate environmental goals and regulatory requirements in key markets.

Current Technical Landscape and Challenges in Memory Interconnect

The memory interconnect landscape is currently experiencing a significant transformation driven by the increasing demands of data-intensive applications. DDR5, as the latest iteration of the traditional memory standard, represents an evolutionary advancement with substantial improvements over DDR4, offering higher bandwidth, improved power efficiency, and enhanced reliability. Initial DDR5 implementations deliver data rates of 4800-6400 MT/s, with a roadmap extending to 8400 MT/s, effectively doubling DDR4's performance ceiling while reducing operating voltage to 1.1V.
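The data rates quoted above translate into theoretical peak bandwidth by simple arithmetic. The sketch below assumes a 64-bit data path per DIMM (ECC bits excluded) and decimal gigabytes. The function name is illustrative, and sustained bandwidth in a real system will be lower than these peak figures.

```python
def peak_bandwidth_gbs(data_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth of one DIMM in GB/s (decimal gigabytes).

    Assumes a 64-bit data path by default; ECC bits are not counted.
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in [
        ("DDR4-3200", 3200),
        ("DDR5-4800", 4800),
        ("DDR5-6400", 6400),
        ("DDR5-8400", 8400),
    ]:
        print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s per DIMM")
```

Under these assumptions DDR5-6400 has exactly twice the peak bandwidth of DDR4-3200, which is where the "doubling" claim comes from.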

Simultaneously, Compute Express Link (CXL) has emerged as a memory interconnect technology that changes the traditional memory architecture paradigm. CXL is an open industry standard that maintains memory coherency between CPU and device memory, enabling a shared memory pool that can be dynamically allocated. The CXL 3.0 specification supports up to 64 GT/s per lane by adopting the PCIe 6.0 physical layer.

Despite these advancements, significant technical challenges persist in both technologies. DDR5 faces inherent limitations in scaling beyond socket boundaries, with memory capacity constrained by DIMM slot availability and channel architecture. Signal integrity issues become increasingly problematic at higher frequencies, necessitating complex equalization techniques and more sophisticated memory controllers.

CXL confronts its own set of challenges, primarily centered around latency concerns. While offering unprecedented flexibility, the protocol introduces additional overhead compared to direct DDR connections. Current implementations show latency penalties of approximately 100-150 nanoseconds compared to local DRAM access, which can impact performance in latency-sensitive applications.
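How much this penalty matters depends on how many accesses actually reach CXL-attached memory. A minimal sketch of the average access latency follows. It assumes a simple two-tier system, an 80 ns local DRAM latency chosen purely for illustration, and no overlap between requests. Real workloads also depend on caching and memory-level parallelism, which this model ignores.

```python
def average_latency_ns(local_ns: float, cxl_penalty_ns: float, cxl_fraction: float) -> float:
    """Average memory access latency for a two-tier (local DRAM + CXL) system.

    cxl_fraction is the share of accesses served from CXL-attached memory.
    Equivalent to (1 - f) * local + f * (local + penalty).
    """
    if not 0.0 <= cxl_fraction <= 1.0:
        raise ValueError("cxl_fraction must be between 0 and 1")
    return local_ns + cxl_fraction * cxl_penalty_ns


if __name__ == "__main__":
    LOCAL_NS = 80.0  # assumed local DRAM latency, for illustration only
    for penalty in (100.0, 150.0):  # penalty range quoted in the text
        for fraction in (0.1, 0.25, 0.5):
            avg = average_latency_ns(LOCAL_NS, penalty, fraction)
            print(f"penalty={penalty:.0f} ns, CXL share={fraction:.0%}: {avg:.1f} ns average")
```

The model makes the trade-off explicit: keeping the CXL share of accesses small keeps the average close to local latency even when the per-access penalty is large.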

Power consumption remains a critical concern for both technologies. DDR5's higher frequencies demand more power despite voltage reductions, while CXL's complex protocol stack and additional components in the data path increase overall system power requirements. Thermal management becomes increasingly challenging as data rates climb, particularly in dense server environments.

Software ecosystem maturity presents another significant hurdle, especially for CXL. Operating systems, hypervisors, and applications require substantial modifications to fully leverage CXL's memory pooling capabilities. Memory management algorithms must be redesigned to account for the heterogeneous memory landscape with varying latency and bandwidth characteristics.

Standardization efforts continue to evolve, with industry consortiums working to address interoperability challenges. The CXL Consortium has expanded significantly, now including over 200 member companies, while JEDEC continues refining the DDR5 specification to address emerging requirements and technical limitations.

Comparative Analysis of DDR5 and CXL Solutions

01 DDR5 memory architecture and performance enhancements

DDR5 memory technology offers significant improvements over previous generations, including higher data rates, improved power efficiency, and enhanced reliability. The architecture incorporates on-die ECC (Error Correction Code), better channel utilization, and higher density capabilities. These enhancements enable more efficient data processing and reduced latency in memory operations, contributing to overall system performance improvements in data-intensive applications.

- DDR5 memory architecture and performance improvements: DDR5 memory technology offers significant performance improvements over previous generations, including higher data rates, improved power efficiency, and enhanced reliability. The architecture incorporates features such as decision feedback equalization, on-die termination, and improved command/address bus design that collectively contribute to higher bandwidth and reduced latency. These advancements enable more efficient data transfer between memory and processors, making DDR5 particularly beneficial for data-intensive applications.

- CXL memory interconnect protocol and architecture: Compute Express Link (CXL) is a memory interconnect technology that provides high-bandwidth, low-latency connectivity between processors and memory devices. The CXL protocol supports coherent memory access, allowing processors to directly access memory attached to other devices while maintaining cache coherency. This architecture enables memory pooling, expansion, and sharing across computing resources, significantly improving memory utilization efficiency and flexibility in data center environments.

- Integration of DDR5 and CXL for memory expansion and pooling: The integration of DDR5 and CXL technologies enables advanced memory expansion and pooling capabilities. This combination allows systems to extend memory capacity beyond physical limitations of traditional architectures while maintaining performance. Memory pooling through CXL with DDR5 modules provides dynamic resource allocation, allowing memory to be shared across multiple processors or computing nodes. This integration supports disaggregated memory architectures where memory resources can be allocated independently from computing resources, improving overall system efficiency.

- Power efficiency and thermal management in DDR5 and CXL systems: Advanced power management features in DDR5 and CXL memory systems significantly improve energy efficiency. DDR5 incorporates voltage regulation modules directly on memory modules, allowing for more precise power control. CXL implementations include sophisticated power state management that can dynamically adjust power consumption based on workload demands. Together, these technologies enable better thermal management through intelligent power distribution, reduced operating voltages, and improved signal integrity, resulting in more energy-efficient memory operations especially in high-performance computing environments.

- Latency optimization and bandwidth management in hybrid memory systems: Hybrid memory systems leveraging both DDR5 and CXL technologies implement sophisticated latency optimization and bandwidth management techniques. These include intelligent data placement algorithms that position frequently accessed data in lower-latency memory regions, dynamic bandwidth allocation based on application requirements, and advanced caching mechanisms. Memory controllers in these systems can prioritize critical transactions and optimize memory access patterns to reduce contention. These optimizations collectively enhance system responsiveness and throughput, particularly for applications with diverse memory access characteristics.
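The data placement idea in the last item can be sketched as a simple hot/cold split: rank pages by access count and keep the hottest ones in the lower-latency tier. This is a minimal illustration under assumed inputs, not how any particular operating system or memory controller implements tiering. The function names and the sample access counts are hypothetical.

```python
def place_pages(access_counts: dict, fast_tier_pages: int) -> tuple:
    """Split pages into a fast tier (e.g. local DDR5) and a slow tier (e.g. CXL).

    Pages are ranked by access count, hottest first; ties are broken by page id
    so the result is deterministic.
    """
    ranked = sorted(access_counts, key=lambda page: (-access_counts[page], page))
    fast = set(ranked[:fast_tier_pages])
    slow = set(ranked[fast_tier_pages:])
    return fast, slow


def fast_tier_hit_ratio(access_counts: dict, fast: set) -> float:
    """Share of all accesses that land in the fast tier."""
    total = sum(access_counts.values())
    if total == 0:
        return 0.0
    return sum(access_counts[page] for page in fast) / total


if __name__ == "__main__":
    # Hypothetical per-page access counts collected over one sampling interval.
    counts = {0: 900, 1: 5, 2: 300, 3: 40}
    fast, slow = place_pages(counts, fast_tier_pages=2)
    print("fast tier:", sorted(fast))
    print("slow tier:", sorted(slow))
    print(f"fast-tier hit ratio: {fast_tier_hit_ratio(counts, fast):.1%}")
```

A production policy would also need to account for migration cost and changing access patterns, which this sketch leaves out.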

02 CXL memory pooling and resource sharing capabilities

Compute Express Link (CXL) technology enables efficient memory pooling and resource sharing across multiple computing devices. This architecture allows for dynamic allocation of memory resources, improving utilization and reducing waste. CXL supports coherent memory access between CPUs, GPUs, and other accelerators, enabling a unified memory space that can be flexibly allocated based on workload demands, resulting in better resource utilization and system efficiency.

03 Integration of DDR5 and CXL for heterogeneous computing

The integration of DDR5 and CXL technologies creates powerful solutions for heterogeneous computing environments. This combination allows systems to leverage both the high bandwidth of DDR5 and the flexible memory expansion capabilities of CXL. The integrated approach enables more efficient data movement between different types of processors and memory, reducing bottlenecks and improving overall system performance for AI, machine learning, and other compute-intensive workloads.

04 Power efficiency optimizations in memory interconnect technologies

Advanced power management features in DDR5 and CXL technologies significantly improve energy efficiency in memory systems. These include dynamic voltage and frequency scaling, improved power states, and more granular control over memory components. The technologies implement sophisticated power gating techniques and optimize data transfer paths to reduce energy consumption during both active operation and idle states, resulting in lower operational costs and improved thermal management in data centers.

05 Latency reduction and bandwidth optimization techniques

DDR5 and CXL technologies implement various techniques to reduce latency and optimize bandwidth utilization. These include improved prefetching algorithms, enhanced caching mechanisms, and optimized memory controller designs. The technologies also feature advanced scheduling algorithms that prioritize critical data paths and implement more efficient data compression methods. These optimizations collectively improve data throughput, reduce memory access times, and enhance overall system responsiveness for data-intensive applications.

Key Industry Players in DDR5 and CXL Ecosystems

The memory interconnect technology landscape is evolving rapidly, with DDR5 and CXL representing different approaches to addressing data center memory challenges. The market is in a transitional phase, with DDR5 reaching maturity while CXL is still emerging but gaining momentum. Major semiconductor players like Intel, Micron, Samsung, and Huawei are heavily invested in both technologies, with Intel particularly championing CXL development. Chinese companies including Inspur and Alibaba Cloud are increasingly contributing to this ecosystem. The global memory interconnect market is projected to grow significantly as data-intensive applications drive demand for higher bandwidth and more flexible memory architectures. While DDR5 offers evolutionary improvements in traditional memory access, CXL represents a revolutionary approach enabling disaggregated memory pools and heterogeneous computing, positioning it as potentially transformative for future data center architectures.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed advanced solutions for both DDR5 and CXL memory technologies. Their DDR5 modules deliver data transfer rates up to 7200MT/s, doubling DDR4 performance while operating at lower voltage (1.1V vs 1.2V), resulting in 20% power efficiency improvement. Samsung's DDR5 architecture implements dual 32-bit channels per module instead of a single 64-bit bus, enabling higher bandwidth utilization through independent channel operation. For CXL, Samsung has created memory expander modules that connect via CXL 2.0 interface, allowing memory capacity expansion beyond traditional DIMM limitations. Their CXL memory solution incorporates hardware-level memory pooling technology that enables dynamic allocation of memory resources across multiple servers. Samsung has also developed specialized CXL controllers that maintain cache coherency between host processors and expanded memory with optimized latency management techniques to minimize the performance gap compared to direct-attached DDR5.

Strengths: Samsung's vertical integration as both memory manufacturer and system designer enables optimized solutions across both technologies. Their DDR5 modules offer industry-leading bandwidth and power efficiency, while their CXL implementation provides flexible memory expansion with sophisticated coherency management.

Weaknesses: Their CXL solutions still face inherent latency penalties compared to direct DDR5 connections, and full implementation requires significant platform-level changes to existing server architectures.

Micron Technology, Inc.

Technical Solution: Micron has developed comprehensive solutions for both DDR5 and CXL memory technologies. Their DDR5 DRAM modules deliver up to 6400MT/s data rates with significantly improved power efficiency through on-module voltage regulation and lower operating voltage (1.1V). Micron's DDR5 architecture implements dual 40-bit channels per module (32 data + 8 ECC bits each), enabling more efficient parallel operations, with reliability further improved through on-die ECC. Their DDR5 modules feature enhanced RAS (Reliability, Availability, Serviceability) capabilities with advanced error detection and correction. For CXL, Micron has developed memory expansion solutions based on the CXL 2.0 protocol that enable memory capacity scaling beyond traditional DIMM constraints. Their CXL memory devices maintain cache coherency with host processors while providing flexible memory pooling capabilities. Micron's CXL implementation includes specialized buffer designs that optimize the latency characteristics of CXL connections, bringing performance closer to direct-attached memory while enabling significantly greater capacity scaling and resource utilization efficiency in data center environments.

Strengths: Micron's expertise in memory technology enables highly optimized implementations of both DDR5 and CXL solutions. Their DDR5 modules offer excellent reliability features and power efficiency, while their CXL memory expansion technology provides flexible scaling with optimized latency characteristics.

Weaknesses: CXL implementations still face inherent bandwidth and latency disadvantages compared to direct DDR5 connections, and require significant ecosystem development to achieve widespread adoption.

Technical Deep Dive: DDR5 and CXL Architecture

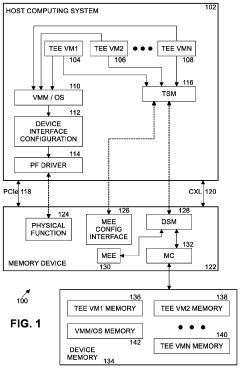

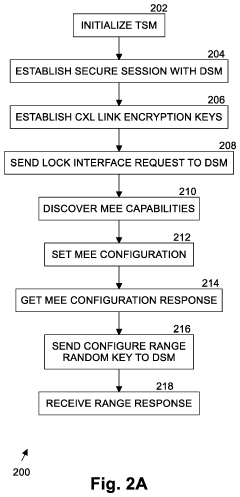

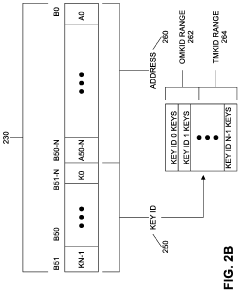

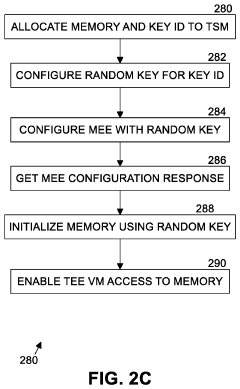

Memory encryption engine interface in compute express link (CXL) attached memory controllers

Patent | Active | US20210311643A1

Innovation

- The implementation of a memory encryption engine (MEE) with a memory mapped I/O-based configuration and capability enumeration interface supports memory encryption and integrity for CXL devices, using cryptographic ciphers like AES-XTS and message authentication codes to ensure confidentiality and integrity, and a device security manager to lock down memory device configurations and verify their security.

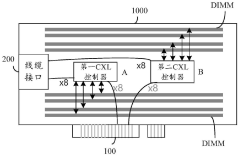

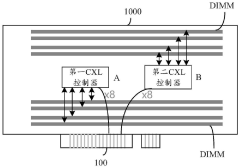

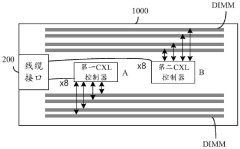

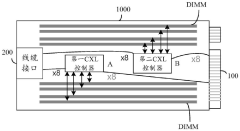

Storage expansion device and computing device

Patent | Pending | CN117992374A

Innovation

- A storage expansion device built around two CXL controllers. The interface of each controller is divided into two parts, each connected to multiple DIMMs. The device provides both cable interfaces and PCIe interfaces, and any interface can access the memory space of all DIMMs. Interface forking (bifurcation) combined with mapping tables lets the server access all memory through different interfaces.

Performance Benchmarking and Efficiency Metrics

Comprehensive benchmarking of DDR5 and CXL memory interconnect technologies reveals significant differences in their efficiency profiles. DDR5 demonstrates raw bandwidth improvements of approximately 85% over DDR4, achieving up to 6400 MT/s in current implementations with roadmaps extending to 8400 MT/s. Latency measurements show DDR5 maintaining 75-85 nanoseconds for random access operations, representing only a modest improvement over previous generations despite the bandwidth gains.

CXL memory interconnect, operating over the PCIe 5.0 physical layer, delivers bandwidth that varies by implementation type. CXL.mem configurations achieve 32-64 GB/s bidirectional bandwidth while adding 50-120 nanoseconds of latency relative to local DDR5 memory access. This latency penalty stems from protocol translation and the inherent characteristics of the PCIe transport layer.
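For orientation, the raw link rate of a PCIe-based connection follows from the per-lane signaling rate and the lane count. The sketch below uses the PCIe 5.0 rate of 32 GT/s per lane, which is not stated in the text above, and treats x8 and x16 link widths as assumptions. It ignores encoding and protocol overhead, so delivered bandwidth is lower, and it reports per-direction figures only. The text does not specify the link widths behind its 32-64 GB/s range.

```python
def raw_link_bandwidth_gbs(gt_per_s: float, lanes: int) -> float:
    """Raw per-direction link bandwidth in GB/s.

    One transfer carries one bit per lane, so GT/s * lanes / 8 gives GB/s.
    Encoding and protocol overhead are deliberately ignored.
    """
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return gt_per_s * lanes / 8


if __name__ == "__main__":
    PCIE5_GT_PER_S = 32.0  # PCIe 5.0 per-lane signaling rate
    for lanes in (8, 16):  # assumed link widths
        bw = raw_link_bandwidth_gbs(PCIE5_GT_PER_S, lanes)
        print(f"PCIe 5.0 x{lanes}: {bw:.0f} GB/s raw, per direction")
```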

Power efficiency metrics demonstrate DDR5's improvements through operating voltage reduction to 1.1V (from DDR4's 1.2V), resulting in approximately 20% lower power consumption per bit transferred. CXL implementations show higher absolute power consumption but superior scaling efficiency when measured against total memory capacity expansion, particularly in disaggregated memory architectures.
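The contribution of the voltage reduction alone can be estimated with the first-order CMOS model, in which dynamic power scales with the square of the supply voltage at fixed frequency and capacitance. That model is an assumption introduced here, not something the benchmark figures above are derived from. Under it, the voltage drop accounts for roughly 16% of the saving, so the approximately 20% figure would have to include other factors.

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Ratio of dynamic power after a supply-voltage change.

    First-order model: P is proportional to C * V^2 * f, with C and f held constant.
    """
    if v_new <= 0 or v_old <= 0:
        raise ValueError("voltages must be positive")
    return (v_new / v_old) ** 2


if __name__ == "__main__":
    ratio = dynamic_power_ratio(1.1, 1.2)  # DDR5 vs DDR4 operating voltage
    print(f"relative dynamic power: {ratio:.3f}")
    print(f"reduction from voltage alone: {1 - ratio:.1%}")
```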

Memory utilization efficiency represents a critical differentiator, with CXL enabling 85-95% effective memory utilization across pooled resources compared to traditional DDR5 deployments that typically achieve only 40-60% utilization due to stranded memory constraints. This translates to substantial TCO advantages for CXL in large-scale deployments despite its higher access latency.
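The capacity effect of these utilization figures is straightforward to work through. The sketch below assumes a fixed aggregate demand of 100 TB and takes mid-range utilization values (50% and 90%) from the ranges quoted above. The demand figure and function name are illustrative.

```python
def provisioned_capacity_tb(demand_tb: float, utilization: float) -> float:
    """Capacity that must be installed to serve a given demand at a given utilization."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return demand_tb / utilization


if __name__ == "__main__":
    DEMAND_TB = 100.0  # hypothetical aggregate working-set demand
    direct = provisioned_capacity_tb(DEMAND_TB, 0.50)  # mid-range of 40-60%
    pooled = provisioned_capacity_tb(DEMAND_TB, 0.90)  # mid-range of 85-95%
    print(f"direct-attached: {direct:.1f} TB installed")
    print(f"pooled:          {pooled:.1f} TB installed")
    print(f"capacity saved:  {1 - pooled / direct:.1%}")
```

With these inputs the pooled configuration needs a little over half the installed capacity, which is the mechanism behind the TCO argument.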

Scalability testing reveals DDR5's limitations in density-per-channel configurations, with practical implementations capped at 4-8 DIMMs per memory controller. CXL memory pooling architectures demonstrate near-linear scaling to hundreds of devices across fabric topologies, though with increasing latency penalties as network complexity grows.

Workload-specific benchmarking shows DDR5 maintaining superior performance for latency-sensitive applications with small working sets, outperforming CXL by 15-30% in OLTP database transactions and HPC applications with high locality. Conversely, CXL demonstrates 40-60% better performance-per-dollar metrics in memory-intensive analytics workloads, virtualized environments, and applications with dynamic memory requirements that benefit from memory pooling capabilities.

Data Center Implementation Considerations

Implementing DDR5 and CXL technologies in data centers requires careful consideration of several critical factors that impact overall system performance, cost-effectiveness, and future scalability. The physical infrastructure must be evaluated thoroughly, as CXL implementations typically demand more sophisticated cooling solutions due to the increased power density compared to traditional DDR5 deployments. This necessitates redesigning rack layouts and power distribution systems to accommodate the thermal requirements of CXL-enabled servers.

Network topology considerations become paramount when implementing CXL, as its fabric-based memory pooling capabilities require low-latency, high-bandwidth connections between compute nodes. Data centers must evaluate their existing network infrastructure to determine if upgrades are necessary to support the increased east-west traffic patterns that emerge in CXL-based memory disaggregation scenarios.

Operational complexity represents another significant consideration. While DDR5 follows familiar deployment models, CXL introduces new management paradigms requiring specialized expertise. IT teams need comprehensive training on memory pooling concepts, dynamic resource allocation, and troubleshooting techniques specific to CXL environments. This operational shift may necessitate restructuring of IT teams and responsibilities.

Cost analysis must extend beyond initial hardware investments to include total cost of ownership calculations. Although CXL components currently command premium pricing compared to DDR5, the potential for improved memory utilization through pooling may yield superior long-term economics, particularly in environments with variable workload characteristics. Data centers should model various workload scenarios to determine the break-even point for CXL investments.
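One way to frame the break-even modeling described above is a simple cost-per-usable-GB comparison. The sketch below is a minimal illustration, not a full TCO model: it ignores power, latency impact, and operational costs. All prices and the fixed fabric cost are hypothetical placeholders, and the utilization values are midpoints of the ranges cited in the benchmark discussion.

```python
from typing import Optional


def break_even_demand_gb(
    ddr5_price_per_gb: float,
    cxl_price_per_gb: float,
    ddr5_utilization: float,
    cxl_utilization: float,
    cxl_fixed_cost: float,
) -> Optional[float]:
    """Usable-memory demand (GB) above which the CXL option costs less.

    Cost model (an assumption of this sketch):
      DDR5 cost = demand / ddr5_utilization * ddr5_price_per_gb
      CXL cost  = cxl_fixed_cost + demand / cxl_utilization * cxl_price_per_gb
    Returns None when CXL never becomes cheaper under this model.
    """
    ddr5_per_usable_gb = ddr5_price_per_gb / ddr5_utilization
    cxl_per_usable_gb = cxl_price_per_gb / cxl_utilization
    saving_per_gb = ddr5_per_usable_gb - cxl_per_usable_gb
    if saving_per_gb <= 0:
        return None
    return cxl_fixed_cost / saving_per_gb


# Hypothetical inputs: replace with real quotes before drawing conclusions.
break_even = break_even_demand_gb(
    ddr5_price_per_gb=4.0,    # hypothetical $/GB
    cxl_price_per_gb=5.0,     # hypothetical $/GB, including a price premium
    ddr5_utilization=0.50,    # midpoint of the 40-60% range
    cxl_utilization=0.90,     # midpoint of the 85-95% range
    cxl_fixed_cost=50_000.0,  # hypothetical switch and fabric cost in $
)

if break_even is None:
    print("CXL does not break even under these assumptions")
else:
    print(f"Break-even usable demand: {break_even:,.0f} GB")
```

Running the same function across several workload scenarios, with different utilization and pricing inputs, shows how sensitive the break-even point is to the utilization gap between the two approaches.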

Migration strategies represent a critical implementation consideration. Most organizations cannot perform wholesale infrastructure replacements, necessitating phased approaches that allow DDR5 and CXL technologies to coexist. Hybrid architectures that strategically deploy CXL for memory-intensive workloads while maintaining DDR5 for general-purpose computing may optimize capital expenditure during transition periods.

Security implications must also be evaluated, as memory pooling introduces new attack vectors not present in traditional architectures. Data centers must implement robust isolation mechanisms, encryption protocols, and access controls specifically designed for shared memory resources to maintain compliance with data protection regulations.
