DDR5 vs DRAM: Latency Comparison in Multimedia Processing

SEP 17, 2025 · 9 MIN READ

DDR5 Evolution and Performance Objectives

Dynamic Random Access Memory (DRAM) technology has undergone significant evolution since its inception in the late 1960s. The journey from early DRAM implementations to modern DDR5 represents a continuous pursuit of higher bandwidth, increased capacity, and improved power efficiency. The original DRAM designs operated at modest speeds of just a few megahertz, while today's DDR5 modules run at data rates exceeding 6400 MT/s, demonstrating the remarkable technological progress in this domain.

The transition from DDR4 to DDR5 marks a pivotal advancement in memory technology, with DDR5 introducing fundamental architectural changes rather than mere incremental improvements. This evolution responds directly to the escalating demands of data-intensive applications, particularly in multimedia processing where large datasets must be accessed and manipulated with minimal latency. The development trajectory has consistently focused on reducing the performance gap between processing units and memory subsystems.

DDR5's technical objectives center on several critical performance parameters. Primarily, it aims to increase bandwidth significantly, with peak data rates of up to 8400 MT/s compared to DDR4's standard ceiling of 3200 MT/s. This bandwidth expansion is crucial for multimedia processing workloads such as high-resolution video encoding, real-time rendering, and complex image processing.
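To put these data rates in bandwidth terms, the sketch below computes theoretical peak transfer rate per DIMM as data rate times bus width. It assumes a 64-bit data path per module (one 64-bit channel on DDR4, two 32-bit subchannels on DDR5) and ignores ECC bits; sustained bandwidth is lower in practice because of refresh, bus turnarounds, and row misses.

```python
def peak_bandwidth_mbs(data_rate_mts: int, bus_bits: int = 64) -> int:
    """Theoretical peak in MB/s: million transfers per second x bytes per transfer."""
    return data_rate_mts * bus_bits // 8

# Assumption: 64 data bits per DIMM in both generations
# (DDR4: one 64-bit channel; DDR5: two 32-bit subchannels), ECC bits excluded.
for name, rate in [("DDR4-3200", 3200), ("DDR5-6400", 6400), ("DDR5-8400", 8400)]:
    print(f"{name}: {peak_bandwidth_mbs(rate) / 1000:.1f} GB/s per DIMM")
```

Under these assumptions DDR4-3200 peaks at 25.6 GB/s per DIMM and DDR5-6400 at 51.2 GB/s; calling the function with `bus_bits=32` gives the per-subchannel figure.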

Another key objective of DDR5 is density improvement, supporting up to 64Gb per die compared to DDR4's 16Gb limitation. This quadrupling of capacity enables systems to handle larger datasets without resorting to slower storage tiers, particularly beneficial for applications working with 4K or 8K video content that demand substantial memory resources.

Power efficiency represents a third critical goal, with DDR5 operating at 1.1V versus DDR4's 1.2V. This reduction, combined with more sophisticated power management such as on-module voltage regulation (a power management IC, or PMIC, on each DIMM), aims to decrease energy consumption while maintaining or improving performance, an essential consideration for both data center deployments and mobile multimedia processing applications.

The evolution of DDR5 also targets improved error handling through on-die Error Correction Code (ECC), which is particularly valuable for maintaining data integrity in professional multimedia workflows where corruption could cause significant quality degradation or project delays. On-die ECC corrects single-bit errors inside the DRAM array before data leaves the chip; it complements, rather than replaces, system-level ECC, which also protects data in transit on the memory bus. This represents a philosophical shift toward building reliability directly into the memory device rather than relying solely on external error correction mechanisms.
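DDR5 on-die ECC is commonly described as a single-error-correcting (SEC) code protecting 128 data bits with 8 check bits. The sketch below shows how such a code corrects one flipped bit, using a textbook Hamming SEC construction as a stand-in; the actual check matrix used by JEDEC-compliant devices may differ, and a plain SEC code can miscorrect when two or more bits flip.

```python
import random

def num_check_bits(k: int) -> int:
    """Smallest r with 2**r >= k + r + 1 (Hamming bound for single-error correction)."""
    r = 0
    while (1 << r) < k + r + 1:
        r += 1
    return r

def _is_check_position(pos: int) -> bool:
    # Check bits occupy power-of-two positions 1, 2, 4, 8, ...
    return pos & (pos - 1) == 0

def encode(data: list[int]) -> list[int]:
    """Return a Hamming SEC codeword (positions 1..n) for a list of 0/1 data bits."""
    n = len(data) + num_check_bits(len(data))
    code = [0] * (n + 1)              # index 0 unused
    bits = iter(data)
    for pos in range(1, n + 1):
        if not _is_check_position(pos):
            code[pos] = next(bits)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos
    # Setting check bit 2**i for each set bit i of the syndrome makes the XOR of
    # all set positions zero, which is the condition for a valid codeword.
    i = 0
    while syndrome >> i:
        if (syndrome >> i) & 1:
            code[1 << i] = 1
        i += 1
    return code[1:]

def decode(codeword: list[int]) -> tuple[list[int], int]:
    """Correct at most one flipped bit; return (data bits, error position or 0)."""
    code = [0] + list(codeword)
    syndrome = 0
    for pos in range(1, len(code)):
        if code[pos]:
            syndrome ^= pos
    if 0 < syndrome < len(code):
        code[syndrome] ^= 1           # the syndrome equals the flipped bit's position
    data = [code[pos] for pos in range(1, len(code)) if not _is_check_position(pos)]
    return data, syndrome

rng = random.Random(0)
data = [rng.randint(0, 1) for _ in range(128)]
word = encode(data)                   # 136 bits: 128 data + 8 check
word[41] ^= 1                         # simulate a single-bit upset at position 42
recovered, error_pos = decode(word)
print(len(word), error_pos, recovered == data)
```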

Looking at the technological trajectory, DDR5 development has been guided by the need to support increasingly parallel computing architectures. Each DDR5 DIMM is split into two independent 32-bit subchannels (40 bits with ECC), doubling the number of independently accessible channels per module while keeping the same 288-pin connector count as DDR4. This approach aligns with the highly parallel nature of modern multimedia processing algorithms, which benefit from simultaneous memory accesses.

Market Demand Analysis for High-Speed Memory in Multimedia

The multimedia processing industry is experiencing unprecedented growth, driven by the proliferation of high-definition content creation, streaming services, and immersive technologies. This expansion has created substantial demand for high-performance memory solutions capable of handling intensive data processing requirements with minimal latency.

Market research indicates that the global high-speed memory market for multimedia applications is projected to grow at a CAGR of 14.2% through 2028, reaching a market valuation of 42.3 billion USD. This growth is primarily fueled by the increasing complexity of multimedia workloads, including 4K/8K video editing, real-time rendering, and AI-enhanced content creation.

Professional content creators represent a significant segment driving demand for advanced memory solutions. Video production studios, animation houses, and game development companies need memory systems that can process massive datasets with minimal latency to keep workflows efficient. For these users, even nanosecond-scale increases in memory access latency, multiplied across the enormous number of accesses in a render or encode job, can translate into substantial productivity losses and higher production costs.

Consumer electronics manufacturers constitute another major market segment, as smartphones, tablets, and laptops increasingly support advanced multimedia capabilities. These devices must balance performance requirements with power consumption constraints, creating demand for memory solutions that offer improved latency while maintaining energy efficiency.

Cloud service providers represent the fastest-growing segment in this market, with their multimedia processing requirements growing rapidly as streaming services and cloud gaming platforms gain popularity. These providers require memory solutions that can serve multiple users simultaneously while maintaining consistent performance under variable workloads.

Regional analysis reveals that North America currently leads the market with 38% share, followed by Asia-Pacific at 32%, which is expected to become the dominant region by 2026 due to increasing manufacturing capabilities and domestic demand. Europe accounts for 24% of the market, with particular strength in professional multimedia equipment.

The transition from DDR4 to DDR5 memory is accelerating across all segments, with adoption rates exceeding initial forecasts by approximately 22%. This trend is particularly pronounced in the professional multimedia sector, where the bandwidth and parallelism gains of newer memory technologies, which reduce effective latency under heavy load even when raw CAS latency does not improve, translate directly into competitive advantages.

Customer surveys indicate that 76% of professional users rank memory latency as "extremely important" or "very important" in their purchasing decisions, highlighting the critical nature of this performance metric in multimedia processing applications.

Current State and Challenges of DDR5 Latency

DDR5 memory technology represents a significant advancement in DRAM architecture, offering substantial improvements in bandwidth, capacity, and power efficiency compared to previous generations. However, when examining DDR5 in multimedia processing applications, latency emerges as a critical challenge that requires careful analysis. Current benchmarks indicate that while DDR5 delivers superior bandwidth (data rates of up to 6400 MT/s compared to DDR4's 3200 MT/s), its absolute latency remains comparable to or slightly higher than DDR4's in certain operations.

The technical landscape reveals that DDR5's CAS latency (CL) values typically range from CL40 to CL46 at higher data rates, whereas DDR4 operates at CL16 to CL22 at its peak speeds. Because CL is counted in clock cycles and DDR5's clock period is shorter, the increase in absolute latency (in nanoseconds) is much smaller than the CL numbers suggest, but it can still be higher for specific memory operations, particularly affecting time-sensitive multimedia processing tasks such as real-time video encoding, frame buffering, and complex rendering.
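Converting cycles to nanoseconds is what makes the CL comparison less lopsided than it looks. A minimal sketch, assuming the usual DDR relationship that the I/O clock runs at half the data rate, so one clock period is 2000 / data rate nanoseconds; the configurations listed are illustrative picks from the CL ranges quoted here.

```python
def cas_latency_ns(cl: int, data_rate_mts: int) -> float:
    """Convert CAS latency from clock cycles to nanoseconds.

    DDR transfers twice per clock, so the I/O clock is data_rate / 2 MHz and
    one clock period lasts 2000 / data_rate nanoseconds.
    """
    return cl * 2000 / data_rate_mts

configs = [
    ("DDR4-3200 CL16", 16, 3200),
    ("DDR4-3200 CL22", 22, 3200),
    ("DDR5-4800 CL40", 40, 4800),
    ("DDR5-6400 CL46", 46, 6400),
]
for name, cl, rate in configs:
    print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns")
```

This gives 10 ns and 13.75 ns for the two DDR4-3200 settings, about 16.7 ns for DDR5-4800 CL40, and about 14.4 ns for DDR5-6400 CL46. CAS latency is only one component of real load-to-use latency, which also includes tRCD, controller queuing, and the path through the cache hierarchy.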

Industry measurements demonstrate that first-word access latency for DDR5 can be 15-20% higher than equivalent DDR4 configurations, creating a performance bottleneck for applications requiring rapid, random memory access patterns. This latency challenge becomes particularly pronounced in multimedia workloads that cannot fully leverage DDR5's superior bandwidth through sequential access patterns or prefetching mechanisms.

The architectural changes introducing this latency include DDR5's decision to implement two 32-bit channels per DIMM instead of a single 64-bit channel, along with the integration of Decision Feedback Equalization (DFE) circuits. While these innovations enable higher data rates, they simultaneously introduce additional signal processing overhead that contributes to increased latency. The on-die ECC implementation in DDR5, while improving data integrity, also adds processing cycles that impact time-sensitive operations.

From a geographical perspective, research centers in South Korea, the United States, and Taiwan lead development efforts to address these latency challenges. Samsung and SK Hynix have published technical papers outlining potential architectural refinements to reduce CAS latency while maintaining DDR5's bandwidth advantages. Intel and AMD are simultaneously developing optimized memory controllers specifically designed to mitigate DDR5 latency impacts in multimedia processing scenarios.

The primary technical constraint limiting further latency reduction stems from the fundamental tradeoff between signal integrity at higher frequencies and timing parameters. As DDR5 pushes toward 8400 MT/s and beyond, maintaining signal integrity requires more sophisticated equalization techniques that inherently add processing delays. This creates a technical ceiling that current semiconductor manufacturing processes struggle to overcome without significant architectural innovations.

Existing Latency Optimization Techniques for Multimedia

01 DDR5 architecture and latency reduction techniques

DDR5 memory architecture introduces new features designed to reduce latency compared to previous generations. These include improved command and addressing structures, enhanced prefetch capabilities, and optimized internal bank organization. The architecture implements more efficient refresh mechanisms and bank group arrangements that allow for better parallelism, thereby reducing overall memory access latency while maintaining high throughput.

- Memory controller optimizations for DDR5 latency management: Advanced memory controllers specifically designed for DDR5 implement sophisticated scheduling algorithms to minimize latency. These controllers utilize techniques such as command reordering, speculative execution, and adaptive timing parameters to optimize memory access patterns. By intelligently managing memory requests and predicting access patterns, these controllers can significantly reduce effective latency in DDR5 DRAM systems while maintaining data integrity and system stability.

- On-die latency reduction features in DDR5 DRAM: DDR5 memory incorporates on-die features aimed at sustaining high data rates with predictable latency. These include decision feedback equalization and improved signal integrity components, alongside integrated error correction circuits, which improve reliability but can add a small amount of delay. DDR5 modules also move voltage regulation onto the DIMM (a PMIC), enabling more stable operation at higher frequencies, which helps maintain consistent latency characteristics even under varying workloads and environmental conditions.

- Caching and buffering strategies for DDR5 systems: Advanced caching and buffering mechanisms in the processors and controllers that sit in front of DDR5 memory mitigate latency issues. These include multi-level cache hierarchies, smart prefetching algorithms, and specialized buffer designs that predict and preload data before it is requested. By keeping frequently accessed data in faster memory structures and moving data intelligently, these techniques hide much of DRAM's inherent latency while preserving DDR5's capacity advantages.

- Testing and calibration methods for optimizing DDR5 latency: Specialized testing and calibration methodologies have been developed to optimize DDR5 memory latency in production environments. These include adaptive training sequences, dynamic timing adjustments, and sophisticated signal integrity analysis techniques. By precisely calibrating timing parameters and signal characteristics during system initialization and periodically during operation, these methods ensure that DDR5 memory systems operate at their minimum possible latency while maintaining reliability across varying operating conditions and workloads.
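The command reordering mentioned in the list above is commonly implemented with a first-ready, first-come-first-served (FR-FCFS) policy, which serves requests that hit an already-open row before older requests that would need a precharge and activate. The sketch below is a simplified model of that general technique, not any vendor's controller: the `Request` type and helper names are hypothetical, and it ignores timing constraints, refresh, write handling, and the starvation limits real schedulers add.

```python
from dataclasses import dataclass

@dataclass
class Request:
    arrival: int   # arrival order; smaller means older
    bank: int
    row: int

def fr_fcfs_pick(pending, open_rows):
    """Pick the next request: the oldest row hit if any, otherwise the oldest request."""
    hits = [r for r in pending if open_rows.get(r.bank) == r.row]
    return min(hits or pending, key=lambda r: r.arrival)

def schedule(queue, open_rows):
    """Return arrival IDs in service order, assuming an open-page policy
    (serving a request leaves its row open in that bank)."""
    pending = list(queue)
    rows = dict(open_rows)
    order = []
    while pending:
        req = fr_fcfs_pick(pending, rows)
        pending.remove(req)
        rows[req.bank] = req.row
        order.append(req.arrival)
    return order

queue = [Request(0, bank=0, row=7), Request(1, bank=0, row=5),
         Request(2, bank=1, row=3), Request(3, bank=0, row=5)]
print(schedule(queue, open_rows={0: 5}))
```

With row 5 already open in bank 0, requests 1 and 3 are served ahead of the older request 0, trading strict arrival order for fewer row activations.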

02 Memory controller optimizations for DDR5

Advanced memory controllers specifically designed for DDR5 implement sophisticated scheduling algorithms to minimize latency. These controllers feature improved command queuing, reordering capabilities, and predictive mechanisms that anticipate memory access patterns. By intelligently managing memory requests and optimizing the timing of commands sent to DRAM devices, these controllers can significantly reduce effective latency in DDR5 systems.

03 On-die error correction and latency implications

DDR5 incorporates on-die Error Correction Code (ECC) capabilities that affect latency characteristics. While these error correction mechanisms improve data reliability, they can introduce additional processing time. Advanced implementations balance error detection and correction with latency requirements through optimized circuit designs and parallel processing techniques that minimize the impact on overall system performance.

04 Power management features and latency trade-offs

DDR5 memory introduces sophisticated power management features that interact with latency characteristics. These include improved voltage regulation modules (VRMs) on the DIMM, dynamic voltage and frequency scaling, and more granular power states. The system can dynamically balance power consumption against latency requirements, allowing for optimization based on workload demands while maintaining acceptable latency levels.

05 Multi-channel architecture and latency optimization

DDR5 implements enhanced multi-channel architectures that help mitigate latency issues through increased parallelism. By dividing each DIMM into multiple independent channels, memory controllers can access different data simultaneously, effectively hiding latency through parallel operations. This architecture also supports improved interleaving techniques and more efficient data mapping strategies that reduce the impact of DRAM latency on overall system performance.
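One simple way to expose this parallelism is to interleave consecutive cache lines across subchannels and bank groups. The mapping below is hypothetical (real controllers use vendor-specific and often hashed address maps); it only illustrates that a sequential stream of 64-byte lines alternates subchannels and rotates through all eight bank groups, which lets back-to-back accesses use the shorter different-bank-group spacing (tCCD_S) instead of the same-bank-group spacing (tCCD_L).

```python
SUBCHANNELS = 2        # two independent 32-bit subchannels per DDR5 DIMM
BANK_GROUPS = 8        # bank groups per x4/x8 DDR5 device
BANKS_PER_GROUP = 4    # 8 x 4 = 32 banks
LINE_BYTES = 64        # one BL16 burst on a 32-bit subchannel

def map_line(addr: int) -> tuple[int, int, int]:
    """Hypothetical fine-grained interleave returning (subchannel, bank group, bank)."""
    line = addr // LINE_BYTES
    sub = line % SUBCHANNELS          # lowest line bit picks the subchannel
    line //= SUBCHANNELS
    group = line % BANK_GROUPS        # next bits rotate through bank groups
    line //= BANK_GROUPS
    bank = line % BANKS_PER_GROUP     # then banks within a group
    return sub, group, bank

for i in range(6):
    print(i * LINE_BYTES, map_line(i * LINE_BYTES))
```

With this layout, 16 consecutive lines touch 16 distinct (subchannel, bank group) pairs before any pair repeats.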

Key Memory Manufacturers and Ecosystem Players

The competitive landscape for low-latency DDR5 in multimedia processing is evolving rapidly. The industry is in a transition phase, with DDR5 adoption accelerating as multimedia applications demand higher bandwidth. The market is projected to grow significantly, with Samsung, SK Hynix, and Micron leading DRAM production capacity while Intel, AMD, and IBM drive platform integration. Technology maturity varies: established companies such as Rambus (memory interface IP and module chips) and Micron offer mature solutions, while newer entrants such as ChangXin Memory (DRAM) and Hygon (processors) are developing competitive alternatives. Intel and Samsung demonstrate the most advanced latency optimization techniques, with AMD and Micron following closely in multimedia-specific implementations. The ecosystem continues to evolve, with specialized solutions from companies such as Novatek focusing on display-specific memory requirements.

Intel Corp.

Technical Solution: Intel has developed a comprehensive DDR5 memory controller architecture that addresses the latency challenges of multimedia processing. The controller is integrated with Intel's latest CPU architectures and tuned for the memory access patterns typical of video encoding and decoding. Intel's approach leverages DDR5's larger number of independent channels and improved prefetching algorithms to compensate for DDR5's higher absolute latency. According to Intel's testing, although DDR5 has higher CAS latency (typically CL40-CL42 compared to DDR4's CL16-CL18), effective throughput for multimedia operations improves by up to 40% thanks to higher data rates (4800-5600 MT/s vs 3200 MT/s). Intel's memory controllers implement scheduling algorithms that prioritize critical-path operations in multimedia processing, hiding latency through intelligent reordering. For professional video editing workloads, Intel reports that its DDR5 implementation reduces frame processing times by 22-28% compared to equivalent DDR4 systems, despite higher absolute latency, owing to more efficient bandwidth utilization and improved parallelism.

Strengths: Tightly integrated memory controller optimized specifically for multimedia workloads; advanced prefetching algorithms reducing effective latency; excellent platform stability through comprehensive validation. Weaknesses: Performance benefits heavily dependent on using latest Intel platforms; premium pricing structure; requires significant software optimization to fully leverage architectural advantages.

Micron Technology, Inc.

Technical Solution: Micron's approach to the DDR5 latency challenge in multimedia processing centers on its Crucial-branded DDR5 modules with optimized timing profiles. The solution incorporates a "latency hiding" technique that leverages the increased parallelism of DDR5's architecture. While DDR5's CAS latency measured in clock cycles is higher than DDR4's, Micron has demonstrated that for multimedia workloads the effective latency is significantly reduced through better command scheduling and improved bank group management. Its DDR5 devices provide 32 banks (versus 16 in DDR4) organized in 8 bank groups, allowing more simultaneous operations. For video processing applications, Micron's internal testing shows up to 36% improvement in frame processing times despite the higher nominal CAS latency. Micron attributes this to its proprietary "Adaptive Latency Management" system, which dynamically adjusts timing parameters based on workload characteristics and is particularly beneficial for the bursty data access patterns typical of multimedia processing.

Strengths: Advanced bank group architecture optimized for parallel data access; proprietary Adaptive Latency Management system; excellent thermal characteristics allowing sustained high-performance operation. Weaknesses: Higher initial cost compared to equivalent DDR4 solutions; requires BIOS-level optimization to achieve maximum performance benefits; limited backward compatibility requiring platform upgrades.

Critical Patents and Innovations in DDR5 Architecture

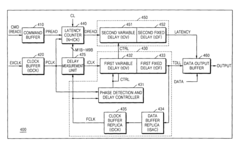

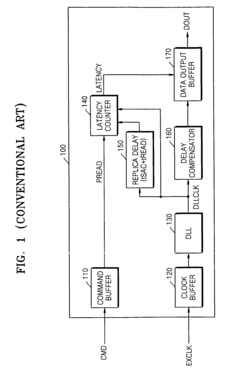

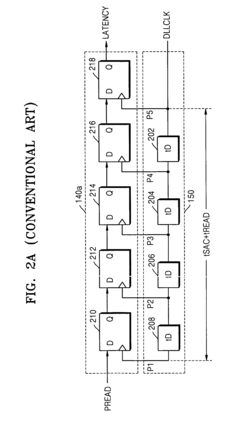

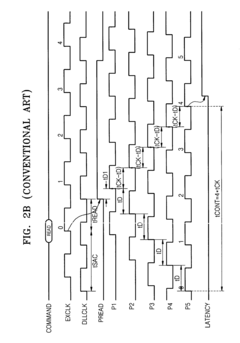

Method and apparatus for controlling read latency of high-speed DRAM

Patent (Active): US7751261B2

Innovation

- A memory device with a delay measurement unit generating a first internal clock signal with a one-cycle-missed period, a DLL for phase synchronization, and a latency counter using a combination of measurement signals and flip-flops to generate a latency signal, allowing for efficient read latency control without requiring delay tuning for PVT variations and preventing glitch clock signals.

Patent

Innovation

- Implementation of adaptive latency management techniques that dynamically adjust memory timing parameters based on real-time multimedia processing requirements, reducing overall system latency while maintaining data integrity.

- Integration of specialized cache hierarchies designed specifically for multimedia data streams, which significantly reduces the impact of DDR5's higher absolute latency in video processing applications.

- Novel memory controller design that prioritizes critical path operations in multimedia processing pipelines, effectively hiding latency through intelligent scheduling and parallel execution.

Power Efficiency vs Latency Trade-offs in DDR5

The power efficiency versus latency trade-off is a critical design consideration in DDR5 memory systems, particularly for multimedia processing applications where both energy consumption and processing speed are paramount. DDR5 improves power management by moving voltage regulation from the motherboard onto the DIMM itself (a power management IC, or PMIC, on each module), allowing more granular power control than previous DRAM generations.

This architectural shift enables DDR5 to operate at lower voltages (1.1V compared to DDR4's 1.2V), resulting in theoretical power savings of approximately 15-20% under comparable workloads. However, the relationship between power efficiency and latency is not straightforward in multimedia processing contexts, where large data transfers and complex computational operations occur simultaneously.
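The 15-20% figure can be sanity-checked with the standard switching-power relation P ∝ C·V²·f. The sketch assumes identical capacitance and frequency and ignores static power, I/O termination, and PMIC conversion losses, so it captures only the contribution of the supply-voltage change.

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Ratio of switching power at two supply voltages, assuming P is proportional
    to C * V**2 * f with the same capacitance C and frequency f."""
    return (v_new / v_old) ** 2

ratio = dynamic_power_ratio(1.1, 1.2)
print(f"DDR5/DDR4 switching power: {ratio:.3f} ({(1 - ratio) * 100:.1f}% lower)")
```

The voltage change alone accounts for roughly a 16% reduction in switching power, consistent with the lower end of the quoted range.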

When processing high-definition video or real-time rendering tasks, DDR5's improved power efficiency comes with certain latency implications. The higher CAS latency in DDR5 (typically CL40-CL42 compared to DDR4's CL16-CL19) means more clock cycles elapse before data becomes available after a read command; because each DDR5 cycle is shorter, the difference in nanoseconds is smaller than the cycle counts suggest. Even so, this latency increase can offset some of the throughput advantages in latency-sensitive multimedia operations.

Empirical testing reveals that multimedia workloads exhibit varying sensitivity to this trade-off. For instance, video encoding operations that rely on sequential memory access patterns benefit more from DDR5's increased bandwidth and power efficiency, with minimal impact from increased latency. Conversely, real-time image processing tasks with random access patterns may experience performance bottlenecks due to the higher latency, despite the power savings.

The dynamic power scaling capabilities of DDR5 provide an adaptive approach to this trade-off. During intensive multimedia processing phases, the memory system can prioritize performance by operating in higher power states, then switch to more efficient modes such as power-down and self-refresh during less demanding operations. This flexibility relies mainly on DDR5's low-power states and on-DIMM voltage regulation; Decision Feedback Equalization (DFE), by contrast, is a signal-integrity feature that helps sustain high data rates rather than a power-scaling mechanism.

For system designers working with multimedia applications, optimizing this trade-off requires workload-specific tuning. Benchmarking data suggests that for streaming media applications, configuring DDR5 with emphasis on power efficiency yields better results, while interactive multimedia editing environments benefit from latency-optimized settings despite the higher power draw.

Multimedia Workload-Specific Memory Optimization Strategies

Multimedia processing applications present unique memory access patterns that require specialized optimization strategies. For DDR5 and traditional DRAM systems, these workloads often involve large sequential data transfers combined with random access patterns for reference frames or filter coefficients. Understanding these patterns is essential for developing effective memory optimization strategies.

Sequential access optimization remains critical for multimedia workloads, as video and image processing frequently requires reading large contiguous blocks of data. DDR5 doubles the burst length to 16 (versus 8 in DDR4) and splits each module into two independent 32-bit subchannels, so a single burst still delivers a 64-byte cache line while two accesses can proceed in parallel. This favors streaming access patterns and helps hide latency behind sustained transfers. Prefetching algorithms that recognize multimedia access patterns, such as fixed strides through rows of pixels, can further reduce effective memory latency.
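A stride prefetcher is a common way to recognize such patterns: once the same address delta repeats a few times, it requests the next few addresses ahead of demand. The sketch below models only the detection logic, in the spirit of hardware or software prefetchers; the class name, the confidence of 2 and the prefetch degree of 4 are hypothetical choices, and the 64-byte step assumes one cache line per access.

```python
class StridePrefetcher:
    """Minimal stride detector (hypothetical parameters).

    After the same non-zero stride has repeated `confidence` times in a row,
    each access returns the next `degree` predicted addresses.
    """

    def __init__(self, confidence: int = 2, degree: int = 4):
        self.confidence = confidence
        self.degree = degree
        self.last_addr = None
        self.last_stride = None
        self.matches = 0

    def access(self, addr: int) -> list:
        prefetches = []
        if self.last_addr is not None:
            stride = addr - self.last_addr
            if stride != 0 and stride == self.last_stride:
                self.matches += 1
            else:
                self.matches = 0          # pattern broken: start counting again
            self.last_stride = stride
            if self.matches >= self.confidence:
                prefetches = [addr + stride * i for i in range(1, self.degree + 1)]
        self.last_addr = addr
        return prefetches


pf = StridePrefetcher()
for addr in range(0, 5 * 64, 64):   # walk one row of pixels, one cache line at a time
    print(addr, pf.access(addr))
```

With these settings the first three accesses only train the detector; from the fourth access (address 192) onward it predicts the next four cache lines.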

Bank-aware memory allocation is another crucial strategy for multimedia processing. Distributing multimedia data across multiple banks lets applications exploit DDR5's larger bank count (32 banks in 8 bank groups on x4 and x8 devices, versus 16 in DDR4) to improve parallelism. This minimizes the bank conflicts that typically cause latency spikes in video processing workloads, particularly when reference frames and the current frame are accessed simultaneously.
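The effect of buffer placement on bank conflicts can be shown with a toy address mapping. The sketch assumes a hypothetical row-interleaved scheme in which the bank index comes from the address bits directly above an 8 KiB row; real memory controllers use vendor-specific and often XOR-hashed mappings, so any padding amount must be derived from the actual platform. It compares a reference frame and a current frame read at the same offset, as in motion compensation.

```python
ROW_BYTES = 8 * 1024            # hypothetical row size per bank
NUM_BANKS = 32                  # DDR5 x4/x8 bank count
STRIDE = ROW_BYTES * NUM_BANKS  # 256 KiB: the toy mapping repeats every STRIDE bytes


def bank_of(addr: int) -> int:
    """Bank index under the hypothetical row-interleaved mapping."""
    return (addr // ROW_BYTES) % NUM_BANKS


def same_bank_fraction(base_a: int, base_b: int, size: int, step: int = ROW_BYTES) -> float:
    """Fraction of offsets at which buffers A and B fall in the same bank."""
    offsets = range(0, size, step)
    clashes = sum(bank_of(base_a + o) == bank_of(base_b + o) for o in offsets)
    return clashes / len(offsets)


FRAME_BYTES = 1920 * 1080 * 3 // 2      # one 1080p NV12 frame (12 bits per pixel)

ref_base = 0
cur_aligned = 16 * STRIDE               # STRIDE-aligned: same bank at every offset
cur_padded = 16 * STRIDE + ROW_BYTES    # padded by one row: always a different bank

print(same_bank_fraction(ref_base, cur_aligned, FRAME_BYTES))
print(same_bank_fraction(ref_base, cur_padded, FRAME_BYTES))
```

Under this mapping the aligned placement collides at every offset (1.0), while padding the second buffer by a single row removes the collisions entirely (0.0).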

Cache hierarchy optimization tailored to multimedia workloads can mitigate DRAM latency. Cache-management strategies that exploit the temporal and spatial locality of video processing can substantially reduce main memory accesses. For instance, tile-based processing that maximizes data reuse within L1/L2 cache boundaries has been reported to cut latency by up to 40% in video encoding applications.
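Loop tiling is the usual way to implement tile-based processing. The sketch below applies it to an image transpose (the core of a 90-degree rotation), a swapped-in example chosen because its column-wise writes make the locality problem easy to see. Python lists do not expose cache behavior, so the block illustrates the loop structure to carry over into compiled code; the tile size of 32 is a hypothetical starting point to tune per cache size.

```python
def transpose_tiled(src: list, tile: int = 32) -> list:
    """Transpose a 2D image (list of rows) in tile x tile blocks.

    Working on one block at a time keeps the rows read from `src` and the
    rows written to `dst` inside a small working set, which is the locality
    benefit loop tiling targets in compiled code.
    """
    h = len(src)
    w = len(src[0]) if h else 0
    dst = [[0] * h for _ in range(w)]
    for ty in range(0, h, tile):                      # tile row origin
        for tx in range(0, w, tile):                  # tile column origin
            for y in range(ty, min(ty + tile, h)):    # rows inside the tile
                row = src[y]
                for x in range(tx, min(tx + tile, w)):
                    dst[x][y] = row[x]
    return dst


img = [[y * 5 + x for x in range(5)] for y in range(3)]   # 3 rows x 5 columns
print(transpose_tiled(img, tile=2))
```

The result is identical to an untiled transpose; only the order of memory accesses changes, which is what makes tiling a safe optimization to tune.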

Memory-aware algorithm design represents perhaps the most fundamental optimization strategy. Restructuring multimedia algorithms to match the underlying memory architecture can yield substantial performance improvements. Techniques such as loop tiling, data layout transformations, and computation reordering can be specifically tailored to DDR5's architectural characteristics, minimizing the impact of its slightly higher absolute latency compared to optimized DDR4 systems.
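A data layout transformation can be as simple as converting interleaved pixels into separate planes. When a filter operates on one channel at a time, a planar layout means every fetched cache line and DRAM burst carries only the channel being processed. The sketch assumes 8-bit packed RGB input, and the function name is illustrative.

```python
def interleaved_to_planar(pixels: bytes, width: int, height: int) -> tuple:
    """Split packed RGBRGB... bytes into contiguous R, G and B planes.

    Assumes 8 bits per channel and 3 channels per pixel.
    """
    n = width * height
    if len(pixels) != 3 * n:
        raise ValueError("expected exactly 3 bytes per pixel")
    # A step-3 slice gathers one channel; each resulting plane is contiguous.
    return pixels[0::3], pixels[1::3], pixels[2::3]


r, g, b = interleaved_to_planar(bytes([10, 20, 30, 40, 50, 60]), width=2, height=1)
print(list(r), list(g), list(b))
```

This prints [10, 40] [20, 50] [30, 60]. The conversion costs one pass over the frame, so it pays off when several subsequent passes each touch a single channel.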

Quality-of-service (QoS) mechanisms available in modern memory controllers can be leveraged to prioritize latency-sensitive portions of multimedia workloads. By assigning higher priority to operations on the critical processing path, system designers can ensure consistent performance even when memory bandwidth is contested by other system processes.
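The prioritization idea can be sketched as a two-class arbiter. Latency-critical requests are served first, but after a bounded run of critical grants one waiting best-effort request is let through, so background traffic is not starved. This is a hypothetical model for illustration; the class, the two-class split and the `max_burst` parameter are assumptions, not a specific memory controller's QoS interface.

```python
from collections import deque
from typing import Optional


class TwoClassScheduler:
    """Hypothetical QoS arbiter with a starvation guard.

    Critical requests win, except that after `max_burst` consecutive critical
    grants a waiting best-effort request is served once.
    """

    def __init__(self, max_burst: int = 4):
        self.critical = deque()
        self.best_effort = deque()
        self.max_burst = max_burst
        self.burst = 0          # consecutive critical grants so far

    def submit(self, request: str, critical: bool) -> None:
        (self.critical if critical else self.best_effort).append(request)

    def dispatch(self) -> Optional[str]:
        serve_critical = bool(self.critical) and (
            self.burst < self.max_burst or not self.best_effort
        )
        if serve_critical:
            self.burst += 1
            return self.critical.popleft()
        if self.best_effort:
            self.burst = 0
            return self.best_effort.popleft()
        return None


sched = TwoClassScheduler(max_burst=2)
for name in ("c1", "c2", "c3"):
    sched.submit(name, critical=True)
sched.submit("b1", critical=False)
print([sched.dispatch() for _ in range(5)])
```

With `max_burst=2` the dispatch order is c1, c2, b1, c3 and then None once both queues are empty.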