High-Throughput Experimentation in Microbial Bioprocessing Technologies

SEP 25, 2025

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Microbial Bioprocessing Evolution and Objectives

Microbial bioprocessing has evolved significantly over the past century, from traditional fermentation practices to sophisticated biotechnological applications. The field began with empirical fermentation in the early 20th century, followed by industrial-scale antibiotic production in the mid-20th century. The development of recombinant DNA technology in the 1970s revolutionized the field, enabling microorganisms to be engineered for specific purposes.

The advent of systems biology and omics technologies in the early 2000s further accelerated progress, allowing researchers to characterize microbial metabolism in unprecedented detail. During this period metabolic engineering, which had emerged as a discipline in the 1990s, matured into a mainstream approach for optimizing microbial strains for industrial applications. Computational tools and modeling approaches have been instrumental in predicting microbial behavior and designing more efficient bioprocesses.

In recent years the field has shifted toward high-throughput experimentation (HTE). This approach enables many conditions to be tested in parallel, substantially reducing development time and resource consumption. The convergence of automation, miniaturization, and data analytics has made HTE increasingly accessible and powerful for industrial applications.

The primary objective of high-throughput experimentation in microbial bioprocessing is to accelerate the development cycle from concept to commercial implementation. This includes rapid screening of microbial strains, optimization of culture conditions, and process parameter identification. By generating large datasets quickly, HTE aims to identify optimal conditions that might be missed using traditional sequential experimentation.
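Parallel screening of this kind usually starts from a structured experimental design laid out on a microplate. The sketch below is illustrative only. It assumes a standard 96-well plate, and the factor names and levels (`temperature_C`, `pH`, `inducer_mM`) are hypothetical. It enumerates a full-factorial design and assigns each condition to a well in row-major order.

```python
from itertools import product
from string import ascii_uppercase

# Hypothetical factor levels for a culture-condition screen (illustrative only).
FACTORS = {
    "temperature_C": [30, 34, 37],
    "pH": [6.0, 6.5, 7.0, 7.5],
    "inducer_mM": [0.0, 0.1, 0.5, 1.0],
}

def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]

def well_ids(rows=8, cols=12):
    """Yield plate well IDs in row-major order: A1, A2, ..., H12."""
    for r in ascii_uppercase[:rows]:
        for c in range(1, cols + 1):
            yield f"{r}{c}"

def plate_map(conditions, rows=8, cols=12):
    """Assign each condition to a well; fail loudly if the plate is too small."""
    if len(conditions) > rows * cols:
        raise ValueError(f"{len(conditions)} conditions exceed {rows * cols} wells")
    return dict(zip(well_ids(rows, cols), conditions))

design = full_factorial(FACTORS)  # 3 * 4 * 4 = 48 conditions
layout = plate_map(design)
```

The 48 conditions fill rows A to D, which leaves rows E to H free for replicates and controls. A real layout would also randomize well positions to limit edge and position effects.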

Another critical goal is to enhance process robustness and scalability. HTE allows for systematic exploration of parameter spaces, identifying conditions that maintain performance across different scales and environmental variations. This addresses one of the most significant challenges in bioprocessing: the translation of laboratory results to industrial-scale production.

The field is increasingly focused on sustainability objectives, with HTE being deployed to develop microbial processes that reduce environmental footprints. This includes optimizing resource utilization, minimizing waste generation, and developing alternatives to petrochemical-based products. The ultimate vision is to establish microbial bioprocessing as a cornerstone of the circular bioeconomy.

Looking forward, the technical trajectory points toward integrating artificial intelligence and machine learning with HTE platforms to create self-optimizing bioprocesses. This represents the next frontier in the evolution of microbial bioprocessing technologies and could substantially improve the efficiency and pace of innovation in biomanufacturing.

Market Analysis for High-Throughput Bioprocessing Solutions

The global market for high-throughput bioprocessing solutions is experiencing robust growth, driven by increasing demand for biopharmaceuticals, sustainable bioproducts, and the need for more efficient microbial strain development. Current market valuations indicate that the high-throughput experimentation (HTE) segment in bioprocessing technologies reached approximately $4.2 billion in 2022, with projections suggesting a compound annual growth rate of 12.7% through 2028.
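Taken at face value, these two figures imply the segment roughly doubles over the forecast window. The check below assumes the 12.7% rate compounds annually from the 2022 base. Under that assumption the 2028 value is 4.2 × 1.127^6 billion USD.

```python
def project_market(value_start, cagr, years):
    """Compound a starting market value at a constant annual growth rate."""
    return value_start * (1 + cagr) ** years

# Figures quoted in the text: ~$4.2 billion (2022), 12.7% CAGR through 2028.
projected_2028 = project_market(4.2, 0.127, 2028 - 2022)
print(f"Implied 2028 market: ${projected_2028:.1f} billion")
```

Under that assumption, the implied 2028 market is about $8.6 billion.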

Biopharmaceutical companies represent the largest market segment, accounting for nearly 45% of the total market share. These organizations are increasingly adopting high-throughput technologies to accelerate drug discovery and development processes, reducing time-to-market for novel therapeutics. The industrial biotechnology sector follows closely, with growing applications in enzyme production, biofuels, and specialty chemicals manufacturing.

Regionally, North America dominates the market with approximately 38% share, followed by Europe at 31% and Asia-Pacific at 24%. However, the Asia-Pacific region is demonstrating the fastest growth rate at 15.3% annually, primarily driven by expanding biotechnology sectors in China, India, and South Korea, coupled with increasing government investments in bioprocessing infrastructure.

Key market drivers include the rising prevalence of chronic diseases necessitating new therapeutic approaches, growing pressure to reduce pharmaceutical development costs, and increasing adoption of personalized medicine approaches requiring more flexible manufacturing solutions. Additionally, sustainability initiatives across industries are creating demand for bio-based alternatives to traditional chemical processes.

Market restraints include high initial investment costs for high-throughput systems, technical challenges in data management and integration, and regulatory complexities associated with novel bioprocessing methods. The average payback period for comprehensive high-throughput bioprocessing platforms ranges from 2.5 to 3.5 years, a significant barrier for smaller organizations.
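A simple payback period is the capital investment divided by the annual net benefit it generates. The sketch below uses hypothetical figures, chosen so the result falls inside the 2.5 to 3.5 year range quoted above. It is undiscounted, so it understates the payback period when financing costs or the time value of money matter.

```python
def simple_payback_years(capital_cost, annual_net_benefit):
    """Years until cumulative (undiscounted) net benefit recovers the investment."""
    if annual_net_benefit <= 0:
        raise ValueError("annual net benefit must be positive")
    return capital_cost / annual_net_benefit

# Hypothetical example: a $1.5M platform returning $500k per year in
# labor, reagent, and development-time savings.
print(simple_payback_years(1_500_000, 500_000))  # 3.0
```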

Customer segmentation reveals three primary buyer categories: large pharmaceutical corporations seeking comprehensive integrated solutions, mid-sized biotechnology companies requiring modular and scalable systems, and academic/research institutions focusing on specialized high-throughput applications for specific research domains.

The market demonstrates a clear trend toward integrated solutions that combine automated liquid handling, advanced bioreactors, real-time analytics, and artificial intelligence-powered data processing capabilities. End-users increasingly demand systems that can seamlessly transition from laboratory-scale experimentation to production-scale implementation, highlighting the importance of scalability in product development strategies.

Current Landscape and Bottlenecks in Microbial HTE

High-throughput experimentation (HTE) in microbial bioprocessing has evolved significantly over the past decade, transforming from traditional sequential experimentation to parallel processing methodologies. Currently, the landscape encompasses various technological platforms including microbioreactors, microfluidic devices, and automated robotic systems that enable simultaneous testing of multiple parameters and strains. Leading research institutions and biotechnology companies have established dedicated HTE facilities, with notable implementations at MIT, Ginkgo Bioworks, and Amyris demonstrating the industrial relevance of these approaches.

Despite these advances, several critical bottlenecks impede the widespread adoption and effectiveness of microbial HTE. Scalability remains a primary challenge: results obtained in microscale experiments often fail to translate accurately to industrial production scales. The discrepancy stems from differences in mixing dynamics, oxygen transfer rates, and substrate availability between microscale and production-scale environments, creating significant hurdles for process engineers attempting to bridge this gap.
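Oxygen supply is a standard example of this mismatch. The oxygen transfer rate is commonly written OTR = kLa (C* - C_L), where kLa is the volumetric mass-transfer coefficient, C* the saturation concentration of dissolved oxygen, and C_L its actual concentration in the broth. If kLa differs between a microwell and a production vessel, the same strain receives a different oxygen supply. Matching kLa or OTR across scales is one widely used scale-up criterion. The sketch below assumes the saturation value, the dissolved-oxygen setpoint, and both kLa values; none of these numbers come from the text.

```python
def oxygen_transfer_rate(kla_per_h, c_sat_mmol_l, c_liquid_mmol_l):
    """OTR in mmol O2 per liter per hour: OTR = kLa * (C* - C_L)."""
    if not 0 <= c_liquid_mmol_l <= c_sat_mmol_l:
        raise ValueError("dissolved O2 must lie between 0 and saturation")
    return kla_per_h * (c_sat_mmol_l - c_liquid_mmol_l)

C_SAT = 0.21   # approx. air-saturated O2 in water near 37 °C, mmol/L (assumed)
C_L = 0.042    # dissolved O2 held at 20% of saturation (assumed)

# Two hypothetical kLa values standing in for different cultivation scales.
for label, kla in [("microwell", 100.0), ("production tank", 300.0)]:
    print(label, round(oxygen_transfer_rate(kla, C_SAT, C_L), 1), "mmol/L/h")
```

Because OTR scales linearly with kLa at a fixed driving force, a threefold kLa gap translates directly into a threefold difference in oxygen supply.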

Data management presents another substantial obstacle. The sheer volume of data generated through HTE approaches—often terabytes per experimental campaign—overwhelms traditional laboratory information management systems. Many facilities lack integrated data pipelines capable of efficiently processing, analyzing, and extracting meaningful insights from these massive datasets, resulting in underutilized experimental potential and delayed decision-making processes.
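One common first step toward an integrated pipeline is to store every reading in a long, one-row-per-measurement format keyed by plate, well, and time, rather than as per-plate spreadsheets. Records of this shape can be appended to a database table and joined with the condition metadata for each well. The sketch assumes an 8 × 12 matrix export; the field names and OD values are hypothetical.

```python
from string import ascii_uppercase

def plate_to_records(matrix, plate_id, measurement, timepoint_h):
    """Flatten an 8x12 plate-reader matrix into one record per well."""
    records = []
    for r, row in enumerate(matrix):
        for c, value in enumerate(row):
            records.append({
                "plate_id": plate_id,
                "well": f"{ascii_uppercase[r]}{c + 1}",
                "measurement": measurement,
                "timepoint_h": timepoint_h,
                "value": value,
            })
    return records

# Hypothetical OD600 readings; a real export would be parsed from the reader's file.
od = [[0.05 + 0.01 * (r * 12 + c) for c in range(12)] for r in range(8)]
rows = plate_to_records(od, plate_id="P001", measurement="OD600", timepoint_h=4.0)
```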

Standardization issues further complicate the HTE landscape. The absence of universally accepted protocols and metrics for microbial cultivation in high-throughput systems leads to inconsistent reporting and difficulties in comparing results across different laboratories and platforms. This fragmentation hinders collaborative efforts and slows the collective advancement of the field.
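One platform-independent metric already in wide use for screening assays is the Z'-factor (Zhang et al., 1999). It scores the separation between positive and negative control wells, so assay quality can be compared across plates, instruments, and laboratories. It is defined as Z' = 1 - 3(σp + σn) / |μp - μn|, and values of 0.5 or above are conventionally read as an excellent assay window. The control readings below are hypothetical.

```python
from statistics import mean, stdev

def z_prime(positive, negative):
    """Z'-factor: 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    separation = abs(mean(positive) - mean(negative))
    if separation == 0:
        raise ValueError("control means are identical")
    return 1 - 3 * (stdev(positive) + stdev(negative)) / separation

# Hypothetical control-well readings from one plate.
positive_controls = [1.0, 1.1, 0.9, 1.0]
negative_controls = [0.10, 0.12, 0.08, 0.10]
score = z_prime(positive_controls, negative_controls)
print(f"Z' = {score:.2f}")
```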

Technical limitations in sensing technologies represent another significant bottleneck. While optical measurements for cell density and fluorescence are well-established in HTE platforms, real-time monitoring of critical parameters such as metabolite concentrations, gene expression, and protein production remains challenging at microscale. This analytical gap restricts the depth of information obtainable from high-throughput experiments and limits their application in complex bioprocess development scenarios.
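Even the well-established optical readouts become comparable across platforms only once they are converted into a derived quantity such as the specific growth rate μ. During exponential growth ln(OD) rises linearly with time, so μ is the slope of that line and the doubling time is ln 2 / μ. The sketch assumes the caller has already blank-corrected the readings and selected the exponential-phase window. The data are synthetic.

```python
import math

def specific_growth_rate(times_h, od_values):
    """Estimate mu (1/h) as the least-squares slope of ln(OD) versus time.

    Assumes every supplied point lies in exponential phase.
    """
    if len(times_h) != len(od_values) or len(times_h) < 2:
        raise ValueError("need at least two paired points")
    logs = [math.log(od) for od in od_values]
    t_mean = sum(times_h) / len(times_h)
    y_mean = sum(logs) / len(logs)
    sxx = sum((t - t_mean) ** 2 for t in times_h)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times_h, logs))
    return sxy / sxx

# Synthetic exponential-phase readings generated with mu = 0.5 1/h.
times = [0.0, 0.5, 1.0, 1.5, 2.0]
ods = [0.05 * math.exp(0.5 * t) for t in times]
mu = specific_growth_rate(times, ods)
print(f"mu = {mu:.3f} 1/h, doubling time = {math.log(2) / mu:.2f} h")
```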

Workforce constraints also affect implementation, as the interdisciplinary nature of microbial HTE requires expertise spanning microbiology, automation engineering, data science, and bioprocess development. Organizations frequently struggle to assemble teams with the necessary skill combinations, creating operational inefficiencies and underutilization of sophisticated HTE infrastructure.

Regulatory considerations add another layer of complexity, particularly for pharmaceutical and food applications. Validation of HTE methodologies for quality control and process verification remains underdeveloped, which creates uncertainty in the regulatory pathways for products developed using these approaches.

Contemporary HTE Platforms for Microbial Cultivation

01 Automated laboratory systems for high-throughput experimentation

Automated laboratory systems can significantly increase experimental throughput by integrating robotics, liquid handling systems, and computerized control. These systems process many samples in parallel, reducing manual intervention and increasing efficiency. Advanced automation platforms can operate continuously, performing standardized protocols with precision and consistency, which is essential for high-throughput screening applications in drug discovery, materials science, and chemical synthesis.

- Automated laboratory systems for high-throughput experimentation: Automated laboratory systems are designed to increase experimental throughput by integrating robotics, liquid handling systems, and data management tools. These systems can perform multiple experiments simultaneously, reducing manual intervention and increasing efficiency. They typically include sample preparation stations, reaction modules, and analysis equipment that work together to accelerate the research and development process.

- Parallel processing techniques for data analysis: Parallel processing techniques enable the simultaneous analysis of multiple experimental datasets, significantly increasing throughput in high-throughput experimentation. These methods distribute computational tasks across multiple processors or computing nodes, allowing for faster data processing and analysis. Advanced algorithms optimize resource allocation and minimize processing time, enabling researchers to handle large volumes of experimental data efficiently.

- Microfluidic technologies for experimental throughput enhancement: Microfluidic technologies enable the miniaturization of experimental processes, allowing for higher throughput with reduced sample volumes. These systems use microscale channels and chambers to perform reactions and analyses at significantly smaller scales than traditional methods. By integrating multiple experimental steps on a single chip, microfluidic platforms can dramatically increase the number of experiments performed simultaneously while reducing reagent consumption and waste generation.

- Machine learning and AI for experimental optimization: Machine learning and artificial intelligence techniques are increasingly applied to high-throughput experimentation to optimize experimental design and increase throughput. These computational approaches can predict experimental outcomes, identify promising experimental conditions, and reduce the number of experiments needed to achieve desired results. By learning from previous experimental data, AI systems can guide researchers toward more efficient experimental pathways, significantly enhancing overall throughput.

- Networked instrumentation and cloud-based data management: Networked instrumentation and cloud-based data management systems enable seamless integration of experimental equipment and data analysis tools, enhancing throughput in high-throughput experimentation. These systems allow for real-time data collection, remote monitoring of experiments, and collaborative analysis across different locations. By eliminating data transfer bottlenecks and enabling automated workflows, these technologies significantly increase the speed and efficiency of experimental processes.
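Much of this automation comes down to translating an experimental design into instrument instructions. Many liquid-handling robots can import a transfer list, often called a worklist, with one row per pipetting step. The sketch below generates such a list as CSV. The column layout is a hypothetical stand-in; each vendor defines its own worklist format.

```python
import csv
import io

def build_worklist(transfers):
    """Render (src_plate, src_well, dst_plate, dst_well, volume_uL) tuples as CSV.

    Column names are illustrative, not any specific vendor's schema.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["SourcePlate", "SourceWell", "DestPlate", "DestWell", "Volume_uL"])
    for src_plate, src_well, dst_plate, dst_well, volume in transfers:
        if volume <= 0:
            raise ValueError(f"non-positive volume for {dst_well}")
        writer.writerow([src_plate, src_well, dst_plate, dst_well, f"{volume:.1f}"])
    return buffer.getvalue()

# Hypothetical step: inoculate column 1 of a culture plate from a seed plate.
transfers = [("Seed", f"{row}1", "Culture", f"{row}1", 5.0) for row in "ABCDEFGH"]
worklist = build_worklist(transfers)
```

Generating worklists programmatically from the same design table used for analysis keeps the plate map and the executed transfers consistent.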

02 Data processing and analysis methods for high-throughput experiments

Specialized data processing algorithms and analysis methods are crucial for handling the large volumes of data generated in high-throughput experimentation. These methods include machine learning approaches, statistical analysis tools, and pattern recognition techniques that can rapidly process experimental results. Advanced software solutions enable real-time data analysis, visualization of complex datasets, and automated decision-making to guide subsequent experiments, thereby maximizing the efficiency and value of high-throughput workflows.

03 Parallel processing technologies for increased experimental throughput

Parallel processing technologies enable multiple experiments to be conducted simultaneously, dramatically increasing throughput compared to sequential approaches. These technologies include microfluidic devices, multi-well plate systems, and array-based platforms that allow for miniaturization and parallelization of reactions. By conducting numerous experiments under identical conditions, researchers can rapidly explore large parameter spaces, accelerate optimization processes, and identify promising candidates for further investigation.

04 Network and computing infrastructure for high-throughput experimentation

Advanced network and computing infrastructure is essential for supporting high-throughput experimentation systems. This includes high-performance computing clusters, cloud-based resources, and specialized hardware accelerators that can process experimental data at scale. Distributed computing architectures enable real-time monitoring, remote operation of equipment, and seamless integration between experimental platforms and data analysis pipelines, creating efficient workflows that maximize experimental throughput.

05 Integrated workflow management systems for optimizing experimental throughput

Integrated workflow management systems coordinate the various components of high-throughput experimentation platforms to optimize overall throughput. These systems provide scheduling algorithms, resource allocation tools, and process optimization capabilities that minimize bottlenecks and maximize equipment utilization. By intelligently managing experimental workflows, sample tracking, and data handling, these systems ensure continuous operation with minimal downtime, enabling laboratories to achieve maximum experimental throughput while maintaining data quality and experimental reproducibility.
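The scheduling component can be illustrated with the simplest dispatch policy, greedy list scheduling, in which each queued job goes to whichever instrument becomes free first. This is a minimal sketch with hypothetical plate-read durations. Production schedulers also handle incubation windows, step dependencies, and plate hand-offs between devices, and none of these are modeled here.

```python
import heapq

def schedule(jobs, n_instruments):
    """Greedy list scheduling: each job goes to the instrument free soonest.

    jobs: list of (job_id, duration_min), dispatched in the given order.
    Returns {job_id: (instrument_index, start_min, end_min)}.
    """
    if n_instruments < 1:
        raise ValueError("need at least one instrument")
    free_at = [(0, i) for i in range(n_instruments)]  # (time instrument is free, index)
    heapq.heapify(free_at)
    plan = {}
    for job_id, duration in jobs:
        start, inst = heapq.heappop(free_at)
        plan[job_id] = (inst, start, start + duration)
        heapq.heappush(free_at, (start + duration, inst))
    return plan

# Hypothetical plate-reader queue: read durations in minutes.
jobs = [("P1", 30), ("P2", 20), ("P3", 10), ("P4", 25)]
plan = schedule(jobs, n_instruments=2)
makespan = max(end for _, _, end in plan.values())
```

With two readers the four reads finish after 55 minutes, compared with 85 minutes on a single reader. Sorting jobs longest-first before dispatch is a common refinement of this policy.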

Leading Organizations in Bioprocessing Automation

High-Throughput Experimentation (HTE) in microbial bioprocessing is currently in a growth phase, transitioning from early adoption to mainstream implementation. The global market is expanding rapidly, estimated at $1.5-2 billion with projected annual growth of 12-15% through 2030. Technologically, the field shows varying maturity levels across different applications. Industry leaders such as DSM IP Assets BV and Novozymes A/S have established robust HTE platforms for strain development, while companies such as Isolation Bio, Microfluidx, and Cytiva are advancing microfluidic and automation technologies. Academic institutions including Huazhong University of Science & Technology and the University of California are driving fundamental research. The competitive landscape features both established biotech corporations and specialized startups, with increasing collaboration between technology providers, academic institutions, and end-users to accelerate innovation and standardization.

DSM IP Assets BV

Technical Solution: DSM has developed an integrated high-throughput experimentation platform for microbial bioprocessing that combines advanced microbioreactor technology with sophisticated analytics and computational modeling. Their system features parallel miniaturized bioreactors with precise control of critical parameters including temperature, pH, dissolved oxygen, and nutrient feeding strategies. DSM's platform incorporates automated sampling and rapid analytics capabilities, allowing real-time monitoring of metabolites, substrate utilization, and product formation across hundreds of experimental conditions simultaneously. A key innovation in their approach is the development of scale-down models that accurately predict industrial-scale performance from microscale experiments, addressing one of the most significant challenges in bioprocess development. The platform employs machine learning algorithms to analyze complex datasets and identify optimal process conditions and microbial strain characteristics. DSM has also integrated high-throughput genome editing capabilities to rapidly generate and screen microbial strain libraries with targeted genetic modifications, accelerating the strain improvement process significantly compared to traditional methods.

Strengths: Extensive experience in industrial biotechnology with deep understanding of scale-up challenges; comprehensive integration of strain engineering and bioprocess development workflows; strong track record of commercial implementation across multiple industries. Weaknesses: Complex technology requiring significant expertise to operate effectively; substantial capital investment needed for full implementation; primarily optimized for DSM's specific industrial applications rather than general-purpose use.

Microfluidx Ltd.

Technical Solution: Microfluidx has developed a high-throughput experimentation platform for microbial bioprocessing built around microfluidic technology. Their system utilizes proprietary microfluidic chips capable of creating thousands of isolated microbial culture compartments, each functioning as a miniature bioreactor with precisely controlled environmental conditions. The platform incorporates sophisticated fluid handling capabilities that enable automated nutrient delivery, sampling, and process interventions across all culture compartments simultaneously. A distinctive feature of Microfluidx's technology is their integrated optical sensing system that provides real-time monitoring of multiple parameters including cell growth, metabolite production, and gene expression through fluorescent reporter systems. The company has developed specialized surface treatments for their microfluidic channels that minimize biofilm formation and ensure consistent cell behavior across experiments. Their platform includes automated image analysis software that extracts quantitative data from microscopy observations, enabling high-content phenotypic screening of microbial populations under various bioprocessing conditions. Microfluidx's system is particularly valuable for studying population heterogeneity and cellular responses to environmental fluctuations that occur in industrial bioprocesses.

Strengths: Very high throughput, with thousands of parallel experiments possible; exceptional control over microenvironments at single-cell resolution; minimal reagent consumption compared to conventional methods. Weaknesses: Relatively new technology with limited track record in industrial applications; challenges in directly translating microfluidic results to conventional bioreactor systems; specialized expertise required for operation and maintenance of sophisticated microfluidic systems.

Breakthrough Technologies in Microbial Screening

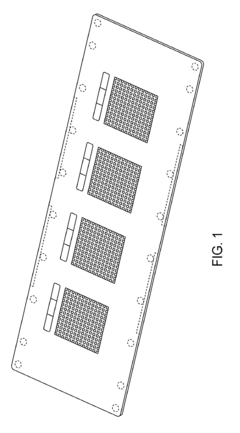

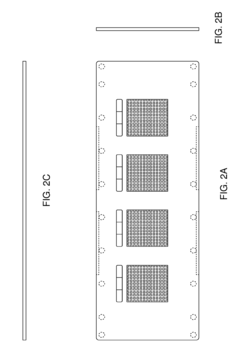

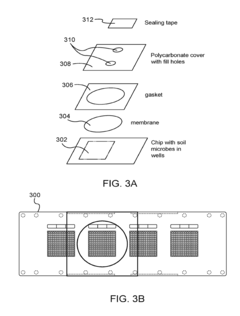

High resolution systems, kits, apparatus, and methods for bacterial community relationship determination and other high throughput microbiology applications

Patent (Active): US20180024096A1

Innovation

- A microfabricated device with an array of microwells, each containing a unique tag nucleic acid molecule for identifying biological entities, allows for high-throughput cultivation and screening by loading samples, incubating, amplifying genetic material, and sequencing to determine the presence and relationships of biological entities.
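When a cell suspension is distributed randomly across a microwell array, the number of cells per well is commonly modeled as Poisson-distributed with mean λ cells per well. Diluting to a low λ keeps most occupied wells clonal, but it leaves many wells empty. The sketch below assumes ideal Poisson loading and a hypothetical 10,000-well array. It is not the loading protocol described in the patent.

```python
import math

def poisson_pmf(k, lam):
    """Probability that a well receives exactly k cells at mean loading lam."""
    return lam ** k * math.exp(-lam) / math.factorial(k)

def loading_summary(lam, n_wells):
    """Expected numbers of empty, single-occupancy, and multi-occupancy wells."""
    p0 = poisson_pmf(0, lam)
    p1 = poisson_pmf(1, lam)
    return {
        "empty": n_wells * p0,
        "single": n_wells * p1,
        "multiple": n_wells * (1 - p0 - p1),
    }

summary = loading_summary(lam=0.1, n_wells=10_000)  # hypothetical array size
```

At λ = 0.1 about 9% of wells hold exactly one cell, and roughly 5% of occupied wells hold more than one.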

Method for micro-volume, one-pot, high-throughput protein production

Patent: WO2022167661A1

Innovation

- A micro-volume, one-pot method involving ligase cycling reaction (LCR), rolling-circle amplification (RCA), and cell-free protein synthesis (CFPS) in a single reaction container at volumes of 5 pl or less, using minimal DNA starting material and optimized buffer conditions, significantly reducing cycles and interruptions.

Regulatory Framework for Accelerated Bioprocess Development

The regulatory landscape for high-throughput experimentation (HTE) in microbial bioprocessing is evolving rapidly to accommodate technological advancements while ensuring product safety and efficacy. Current regulatory frameworks from agencies such as the FDA, EMA, and NMPA have begun incorporating provisions for accelerated development pathways that leverage HTE data, though significant gaps remain in standardization.

Key regulatory considerations for HTE implementation include validation requirements for miniaturized systems, data integrity standards for automated platforms, and acceptance criteria for parallelized experiments. Regulatory bodies increasingly recognize the value of Quality by Design (QbD) principles when applied to HTE methodologies, allowing for more flexible regulatory approaches when robust design space characterization is demonstrated through high-throughput methods.
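In QbD terms, a design space is the region of process inputs within which the predicted output meets its acceptance criterion. Once HTE data have been fitted with a response model, that region can be mapped directly. The sketch below is hypothetical throughout: the quadratic model, its coefficients, the factor grid, and the 9.0 g/L specification are all assumptions. A real submission would also account for model uncertainty, for example through prediction intervals.

```python
from itertools import product

def predicted_titer(temp_c, ph):
    """Hypothetical fitted quadratic response surface (g/L); not real data."""
    return 10.0 - 0.08 * (temp_c - 32.0) ** 2 - 4.0 * (ph - 6.8) ** 2

def design_space(temps, phs, spec_min):
    """Grid points where the model predicts the response meets the spec."""
    return [(t, p) for t, p in product(temps, phs)
            if predicted_titer(t, p) >= spec_min]

temps = [28, 30, 32, 34, 36]
phs = [6.2, 6.5, 6.8, 7.1, 7.4]
acceptable = design_space(temps, phs, spec_min=9.0)
```

Here the acceptable region is the central 3 × 3 block of the grid, spanning 30 to 34 °C and pH 6.5 to 7.1.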

The FDA's Emerging Technology Program and similar initiatives from other regulatory bodies provide frameworks for early engagement on novel HTE technologies, offering opportunities for regulatory feedback before significant resources are committed. These programs have facilitated several successful case studies where HTE data supported accelerated approval timelines, particularly for microbial-derived biopharmaceuticals and industrial enzymes.

Harmonization efforts across international regulatory agencies remain incomplete, creating challenges for global implementation of HTE-based development strategies. The International Council for Harmonisation (ICH) has initiated working groups specifically addressing high-throughput methodologies in bioprocess development, though consensus guidelines are still under development. Regional differences in acceptance of HTE data for regulatory submissions persist, requiring tailored approaches for different markets.

Documentation requirements for HTE-derived data present unique challenges due to the volume and complexity of information generated. Regulatory expectations increasingly focus on demonstrating the relationship between miniaturized, high-throughput systems and traditional scale processes, with emphasis on establishing reliable scale-translation models. Successful regulatory submissions typically include comprehensive comparability studies between HTE and conventional process development data.

Looking forward, regulatory trends indicate movement toward more adaptive frameworks that can accommodate the rapid iteration enabled by HTE approaches. Risk-based regulatory strategies are gaining prominence, where the level of regulatory scrutiny is proportional to the identified risks rather than following rigid predetermined pathways. This evolution promises to better align regulatory processes with the accelerated timelines made possible through high-throughput experimentation in microbial bioprocessing.

Key regulatory considerations for HTE implementation include validation requirements for miniaturized systems, data integrity standards for automated platforms, and acceptance criteria for parallelized experiments. Regulatory bodies increasingly recognize the value of Quality by Design (QbD) principles when applied to HTE methodologies, allowing for more flexible regulatory approaches when robust design space characterization is demonstrated through high-throughput methods.

The FDA's Emerging Technology Program and similar initiatives from other regulatory bodies provide frameworks for early engagement on novel HTE technologies, offering opportunities for regulatory feedback before significant resources are committed. These programs have facilitated several successful case studies where HTE data supported accelerated approval timelines, particularly for microbial-derived biopharmaceuticals and industrial enzymes.

Data Management Strategies for High-Throughput Bioprocessing

The exponential growth of data generated by high-throughput experimentation in microbial bioprocessing necessitates robust data management strategies. Current approaches integrate specialized laboratory information management systems (LIMS) with automated data acquisition systems to handle the volume, velocity, and variety of experimental data. These systems typically employ relational databases for structured data and NoSQL solutions for unstructured data, creating a hybrid architecture that balances performance with flexibility.
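
The hybrid architecture pairs a relational schema for structured measurements with a document store for unstructured payloads. As a minimal single-process sketch, the code below uses Python's built-in sqlite3 module for both roles, storing instrument documents as JSON text. The table names, fields, and reader payload are hypothetical, and a production system would typically keep the documents in a dedicated NoSQL database.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
# Structured time-series measurements in a relational table.
conn.execute("""
    CREATE TABLE measurement (
        plate_id TEXT NOT NULL,
        well     TEXT NOT NULL,
        time_h   REAL NOT NULL,
        od600    REAL,
        PRIMARY KEY (plate_id, well, time_h)
    )
""")
# Instrument-specific payloads kept as JSON documents, standing in
# for a document (NoSQL) store in this sketch.
conn.execute("CREATE TABLE instrument_doc (plate_id TEXT, doc TEXT)")

def record_measurement(plate_id, well, time_h, od600):
    conn.execute("INSERT INTO measurement VALUES (?, ?, ?, ?)",
                 (plate_id, well, time_h, od600))

def record_document(plate_id, payload: dict):
    conn.execute("INSERT INTO instrument_doc VALUES (?, ?)",
                 (plate_id, json.dumps(payload)))

record_measurement("P001", "A1", 0.0, 0.05)
record_measurement("P001", "A1", 4.0, 0.42)
record_document("P001", {"reader": "hypothetical-reader", "gain": 80,
                         "raw_spectrum": [0.41, 0.42, 0.43]})

# Join the structured time series with the unstructured plate context.
rows = conn.execute("""
    SELECT m.well, m.time_h, m.od600, d.doc
    FROM measurement AS m JOIN instrument_doc AS d USING (plate_id)
    WHERE m.plate_id = ? ORDER BY m.time_h
""", ("P001",)).fetchall()
for well, t, od, doc in rows:
    print(well, t, od, json.loads(doc)["gain"])
```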

Cloud-based storage solutions have emerged as the predominant infrastructure choice, offering scalability and accessibility advantages over traditional on-premises systems. Major bioprocessing organizations have reported storage requirements growing at 50-70% annually, making elastic cloud resources particularly valuable. These platforms typically implement hierarchical data organization schemes that preserve experimental context while facilitating rapid retrieval.
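
The cited 50-70% annual growth compounds quickly. The sketch below projects capacity as C_n = C_0 (1 + g)^n and shows one hypothetical hierarchical key layout (program / campaign / plate / well / file) that keeps experimental context in the storage path; the 100 TB starting point and the path fields are assumptions.

```python
def project_storage(current_tb: float, annual_growth: float, years: int) -> float:
    """Compound growth: capacity after `years` at a fixed annual growth rate."""
    return current_tb * (1 + annual_growth) ** years

def data_path(program, campaign, plate_id, well, kind):
    """Hypothetical hierarchical key that preserves experimental context."""
    return f"{program}/{campaign}/{plate_id}/{well}/{kind}"

for rate in (0.50, 0.70):
    projected = project_storage(100, rate, 3)
    print(f"{rate:.0%} growth: 100 TB -> {projected:.1f} TB after 3 years")
print(data_path("strain-eng", "C2025-07", "P001", "A1", "od600.csv"))
```

At these rates a 100 TB footprint grows to roughly 338 TB (50%) or 491 TB (70%) within three years, the kind of trajectory that favors elastic capacity over fixed on-premises provisioning.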

Data standardization remains a critical challenge in the field. Adoption of the FAIR (Findable, Accessible, Interoperable, Reusable) data principles has improved interoperability, though implementation remains inconsistent across the industry. Leading organizations have developed custom ontologies and schemas that extend existing standards such as SBML (Systems Biology Markup Language) to accommodate the specific requirements of high-throughput microbial bioprocessing.
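
The FAIR principles do not prescribe a schema, so organizations typically enforce them through required metadata fields. The following sketch assumes a minimal record (persistent identifier, title, access URL, license, and ontology terms) and reports any required field that is empty. The field set is an assumption and the identifier and URL are placeholders; the organism term uses the NCBI Taxonomy identifier for Escherichia coli (562) in OBO PURL form.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class DatasetRecord:
    identifier: str   # persistent identifier (Findable)
    title: str
    access_url: str   # retrieval location (Accessible)
    license: str      # reuse conditions (Reusable)
    organism: str
    # Ontology IRIs for key terms (Interoperable).
    vocabulary_terms: dict = field(default_factory=dict)

REQUIRED = ("identifier", "title", "access_url", "license")

def missing_fair_fields(record: DatasetRecord) -> list:
    """Return required fields that are empty; an empty list means the check passes."""
    data = asdict(record)
    return [name for name in REQUIRED if not data[name]]

record = DatasetRecord(
    identifier="hypothetical-id:P001",
    title="OD600 time courses, plate P001",
    access_url="https://data.example.org/P001",
    license="CC-BY-4.0",
    organism="Escherichia coli",
    vocabulary_terms={"organism": "http://purl.obolibrary.org/obo/NCBITaxon_562"},
)
print(missing_fair_fields(record))
print(json.dumps(asdict(record), indent=2))
```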

Real-time data processing pipelines have become essential components of modern bioprocessing operations. These systems employ edge computing architectures to perform initial data filtering and quality control at the instrument level, reducing network bandwidth requirements and enabling faster decision-making. Machine learning algorithms increasingly augment these pipelines, automatically identifying anomalous experimental conditions and suggesting corrective actions.
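
The edge-level QC step can be illustrated with a simple statistical filter in place of a trained machine-learning model. The sketch below flags readings whose z-score against a trailing window exceeds a threshold and keeps flagged points out of the baseline; the window size, threshold, and dissolved-oxygen values are hypothetical.

```python
from collections import deque
from statistics import mean, stdev

def flag_anomalies(readings, window=5, z_threshold=3.0):
    """Return indices of readings that deviate strongly from the trailing window.

    A rolling z-score filter standing in for edge-level QC; the window
    and threshold are illustrative assumptions.
    """
    history = deque(maxlen=window)
    flags = []
    for index, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                flags.append(index)
                continue  # keep the outlier out of the baseline
        history.append(value)
    return flags

# Hypothetical dissolved-oxygen readings (% saturation) with one spike.
do_readings = [40.1, 40.3, 39.9, 40.2, 40.0, 40.1, 55.0, 40.2, 39.8, 40.0]
print(flag_anomalies(do_readings))  # [6]
```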

Data security and compliance considerations significantly influence management strategies, particularly for organizations operating under GMP (Good Manufacturing Practice) regulations. Comprehensive audit trails, electronic signatures, and role-based access controls are standard features in enterprise-grade systems. Encryption protocols for both data in transit and at rest have evolved to address the specific requirements of bioprocessing environments.
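
One common way to make an audit trail tamper-evident is to chain entries by hash, so that each record commits to the one before it. The sketch below uses SHA-256 from Python's hashlib. It illustrates the integrity idea only and is not, by itself, a GMP-compliant system (electronic signatures, access control, and validated storage are out of scope); user names and actions are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(entry: dict) -> str:
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

class AuditTrail:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, user: str, action: str, detail: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = _entry_hash(entry)  # hash covers all fields above
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _entry_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

trail = AuditTrail()
trail.append("analyst1", "UPDATE", "plate P001 well A1 od600 0.42 -> 0.44")
trail.append("qa_reviewer", "SIGN", "approved plate P001 results")
print(trail.verify())  # True
trail.entries[0]["detail"] = "tampered"
print(trail.verify())  # False
```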

Long-term data preservation strategies typically implement tiered storage approaches, where frequently accessed data resides on high-performance media while historical data migrates to lower-cost archival solutions. Metadata management systems maintain relationships between archived datasets, ensuring that valuable historical context is not lost despite physical separation of the underlying data.
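
A tiering policy can be expressed as rules on time since last access, with a catalog that records lineage independently of where each dataset is physically stored. In this sketch the 30-day and 365-day thresholds, the tier names, and the dataset identifiers are assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Illustrative thresholds: (maximum days since last access, tier name).
TIER_RULES = [(30, "hot"), (365, "warm")]
ARCHIVE_TIER = "archive"

@dataclass
class DatasetInfo:
    dataset_id: str
    last_accessed: date
    parent_id: Optional[str] = None  # lineage link kept in the metadata catalog

def assign_tier(info: DatasetInfo, today: date) -> str:
    """Pick the first tier whose age limit the dataset satisfies."""
    age_days = (today - info.last_accessed).days
    for max_age, tier in TIER_RULES:
        if age_days <= max_age:
            return tier
    return ARCHIVE_TIER

def build_catalog(datasets, today):
    """Keep tier and lineage together, wherever the underlying bytes live."""
    return {d.dataset_id: {"tier": assign_tier(d, today), "parent": d.parent_id}
            for d in datasets}

catalog = build_catalog([
    DatasetInfo("P001-raw", date(2025, 9, 20)),
    DatasetInfo("P001-processed", date(2025, 3, 1), parent_id="P001-raw"),
    DatasetInfo("P000-raw", date(2022, 6, 1)),
], today=date(2025, 9, 25))
for dataset_id, entry in catalog.items():
    print(dataset_id, entry)
```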

Integration with laboratory automation systems represents another frontier in bioprocessing data management. APIs (Application Programming Interfaces) and middleware solutions enable bidirectional communication between data repositories and experimental equipment, creating closed-loop systems that can autonomously adjust experimental parameters based on real-time analysis results.
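
A closed loop of this kind amounts to repeated read, analyse, and actuate steps. The sketch below uses a proportional controller that sets a feed rate from the glucose setpoint error and clamps it to pump limits, driving a toy plant model. Here read_glucose and write_feed_rate are hypothetical stand-ins for instrument APIs, and the gain, bias, and mass balance are illustrative assumptions.

```python
def proportional_feed_rate(measured, setpoint, bias=0.5, kp=0.4,
                           min_rate=0.0, max_rate=2.0):
    """Proportional control: bias plus gain times setpoint error, clamped."""
    error = setpoint - measured          # positive when glucose is too low
    rate = bias + kp * error
    return min(max(rate, min_rate), max_rate)  # respect pump limits

# Toy plant standing in for a real bioreactor (hypothetical dynamics).
plant = {"glucose": 2.0}  # g/L

def read_glucose():
    return plant["glucose"]

def write_feed_rate(rate):
    # Each step the feed adds 0.5 * rate g/L and the cells consume 0.25 g/L.
    plant["glucose"] += 0.5 * rate - 0.25

def run_closed_loop(read, write, setpoint, steps):
    """Repeat read -> compute -> actuate and record (measurement, rate) pairs."""
    history = []
    for _ in range(steps):
        measured = read()
        rate = proportional_feed_rate(measured, setpoint)
        write(rate)
        history.append((measured, rate))
    return history

history = run_closed_loop(read_glucose, write_feed_rate, setpoint=1.0, steps=20)
for measured, rate in history[:3]:
    print(f"glucose {measured:.3f} g/L -> feed rate {rate:.3f}")
print(f"glucose after 20 steps: {plant['glucose']:.3f} g/L")
```

Because the bias (0.5) exactly balances the toy uptake term, this loop settles at the setpoint without offset; in a real process a purely proportional controller generally leaves a steady-state error, which is why integral action is commonly added.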
