How Neuromorphic Chips Enhance Cognitive Computing Functions

OCT 9, 2025 | 10 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This field emerged in the late 1980s when Carver Mead first coined the term "neuromorphic," proposing electronic systems that mimic neuro-biological architectures. Over the past three decades, neuromorphic computing has evolved from theoretical concepts to practical implementations, with significant acceleration in development during the last decade due to the limitations of traditional von Neumann architectures in handling cognitive tasks efficiently.

The fundamental principle behind neuromorphic computing lies in its departure from conventional computing paradigms. While traditional computers process information sequentially through separate memory and processing units, neuromorphic systems integrate memory and computation, enabling parallel processing and event-driven operations. This architectural difference allows neuromorphic chips to excel at pattern recognition, sensory processing, and adaptive learning—capabilities that align closely with cognitive computing functions.

Current technological trends indicate a convergence of neuromorphic engineering with advances in materials science, nanotechnology, and artificial intelligence. The development trajectory shows increasing integration density, lower power consumption, and enhanced learning capabilities. Notable milestones include IBM's TrueNorth chip (2014), Intel's Loihi (2017), and BrainChip's Akida (2019), each representing significant advancements in neuromorphic architecture implementation.

The primary objective of neuromorphic computing research is to create hardware that can perform cognitive tasks with the efficiency and adaptability of biological neural systems. Specific goals include developing chips that operate at extremely low power (approaching the efficiency of the human brain, which runs on roughly 20 watts), learn in real time without extensive training datasets, and support fault-tolerant computation through distributed processing.

Beyond technical objectives, neuromorphic computing aims to address fundamental limitations in artificial intelligence by providing hardware specifically designed for neural network operations. This includes overcoming the von Neumann bottleneck—the limited data transfer between memory and processing units—which constrains traditional computing architectures when implementing cognitive functions.

The field is increasingly focused on creating neuromorphic systems capable of unsupervised and reinforcement learning, allowing devices to adapt to new information without explicit programming. This direction aligns with the broader goal of developing more autonomous and intelligent systems that can operate effectively in dynamic, unpredictable environments—a critical requirement for next-generation cognitive computing applications in robotics, autonomous vehicles, healthcare diagnostics, and intelligent edge devices.

Market Analysis for Cognitive Computing Solutions

The cognitive computing solutions market is experiencing robust growth, driven by increasing demand for AI-powered decision support systems across multiple industries. Current market valuations place the global cognitive computing sector at approximately $20 billion, with projections indicating a compound annual growth rate of 25-30% over the next five years. This accelerated growth trajectory is particularly evident in healthcare, finance, retail, and manufacturing sectors where complex data analysis requirements are creating substantial market opportunities.

Neuromorphic chip technology represents a significant disruptive force within this landscape, offering substantial performance advantages for cognitive computing applications. Market research indicates that organizations implementing neuromorphic-based cognitive solutions report 40-60% improvements in processing efficiency for pattern recognition tasks compared to traditional computing architectures. This efficiency gain translates directly to competitive advantage in time-sensitive applications such as fraud detection, medical diagnostics, and autonomous systems.

Customer demand patterns reveal growing interest in cognitive computing solutions that can operate effectively at the edge, with reduced power requirements and minimal latency. This trend aligns perfectly with neuromorphic computing's inherent advantages, creating a market segment estimated to grow at 35% annually. Enterprise surveys indicate that 67% of large organizations consider cognitive computing capabilities essential for their digital transformation strategies, with neuromorphic solutions increasingly viewed as a preferred implementation path.

The market demonstrates significant regional variations, with North America currently accounting for approximately 45% of global cognitive computing solution adoption. However, Asia-Pacific markets, particularly China, Japan, and South Korea, are investing heavily in neuromorphic research and commercialization, suggesting a potential shift in market leadership over the coming decade. European markets show particular strength in specialized cognitive computing applications for industrial automation and smart city implementations.

Pricing models for cognitive computing solutions are evolving rapidly, with subscription-based and outcome-based pricing gaining traction over traditional licensing approaches. This shift favors neuromorphic implementations due to their operational efficiency advantages. Market analysis indicates that solutions demonstrating measurable improvements in inference accuracy while reducing computational resource requirements command premium pricing, with customers willing to pay 15-25% more for demonstrable performance advantages.

Customer adoption barriers primarily center around integration challenges with existing systems, data privacy concerns, and the specialized expertise required for implementation. Vendors offering comprehensive support ecosystems and clear migration paths from traditional computing architectures to neuromorphic-based cognitive solutions are capturing disproportionate market share. The market increasingly rewards solutions that combine technical excellence with implementation simplicity.

Current Neuromorphic Technology Landscape and Challenges

The neuromorphic computing landscape has evolved significantly over the past decade, with major technological breakthroughs emerging from both academic institutions and industry leaders. Currently, several prominent neuromorphic chip architectures dominate the field, including IBM's TrueNorth, Intel's Loihi, BrainChip's Akida, and SynSense's DynapCNN. These architectures employ different approaches to emulate neural processing, with varying degrees of biological fidelity and computational efficiency.

IBM's TrueNorth is one of the earliest large-scale neuromorphic architectures, featuring 1 million digital neurons and 256 million synapses organized across 4,096 neurosynaptic cores. Intel's Loihi, now in its second generation, has advanced the field with on-chip learning capabilities and improved energy efficiency, demonstrating up to 1,000 times better performance per watt for certain AI workloads compared to conventional GPU implementations.

Despite these advancements, significant challenges persist in neuromorphic computing. The hardware-software co-design remains particularly problematic, with limited development tools and programming frameworks hindering widespread adoption. Most neuromorphic systems require specialized programming approaches that differ substantially from conventional computing paradigms, creating a steep learning curve for developers.

Energy efficiency, while improved compared to traditional computing architectures, still falls short of biological neural systems. The human brain operates at approximately 20 watts, while current neuromorphic implementations require substantially more power to achieve comparable cognitive functions. Scaling these systems to approach human-level cognitive capabilities presents formidable engineering challenges.

Fabrication limitations also constrain neuromorphic development, particularly for analog implementations that require precise component manufacturing. Current CMOS technology struggles to maintain consistency across large arrays of artificial neurons and synapses, leading to performance variability that impacts reliability.

The memory-processing integration represents another significant hurdle. While neuromorphic architectures aim to overcome the von Neumann bottleneck by collocating memory and processing, implementing this efficiently at scale remains challenging. Novel memory technologies like memristors, phase-change memory, and spintronic devices show promise but face reliability and manufacturing issues.

Geographically, neuromorphic research centers cluster primarily in North America, Europe, and East Asia. The United States leads with major initiatives at universities like Stanford, MIT, and Caltech, alongside corporate research at IBM, Intel, and Qualcomm. Europe maintains strong positions through the Human Brain Project and initiatives at ETH Zurich and the University of Manchester. In Asia, significant contributions come from China's Brain-inspired Computing Research Center and Japan's RIKEN Brain Science Institute.

Current Neuromorphic Chip Implementation Approaches

01 Neuromorphic architecture for cognitive computing

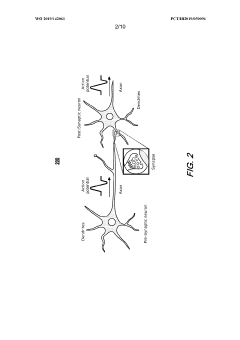

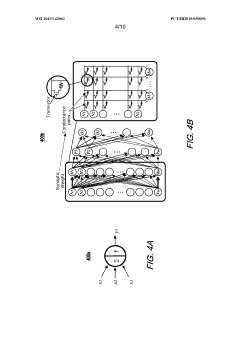

Neuromorphic chips are designed with architectures that mimic the human brain's neural networks, enabling efficient cognitive computing functions. These architectures incorporate parallel processing capabilities, synaptic connections, and neural network structures that allow for complex pattern recognition, learning, and decision-making processes similar to human cognition. The neuromorphic design approach enables more efficient processing of cognitive tasks compared to traditional computing architectures.

- Memory integration in neuromorphic systems: Advanced memory integration techniques are crucial for neuromorphic chips to perform cognitive computing functions. These systems incorporate specialized memory structures that support the storage and retrieval of synaptic weights and neural states. By integrating memory directly with processing elements, these chips reduce the bottleneck between computation and data access, enabling more efficient cognitive processing and learning capabilities while minimizing power consumption for complex cognitive tasks.

- Energy-efficient cognitive processing techniques: Neuromorphic chips implement energy-efficient processing techniques specifically designed for cognitive computing functions. These techniques include spike-based processing, event-driven computation, and adaptive power management that significantly reduce energy consumption compared to traditional computing approaches. By mimicking the brain's efficiency in information processing, these chips can perform complex cognitive tasks such as pattern recognition, natural language processing, and decision-making while consuming orders of magnitude less power.

- Learning algorithms for neuromorphic cognitive systems: Specialized learning algorithms are implemented in neuromorphic chips to enable cognitive computing functions. These algorithms include spike-timing-dependent plasticity (STDP), reinforcement learning, and unsupervised learning mechanisms that allow the chips to adapt and improve their performance over time. By incorporating these learning capabilities directly into hardware, neuromorphic systems can continuously enhance their cognitive functions through experience, similar to biological neural systems.

- Application-specific neuromorphic cognitive architectures: Neuromorphic chips are designed with specialized architectures tailored for specific cognitive computing applications. These application-specific designs optimize the chip's structure and processing capabilities for particular cognitive tasks such as computer vision, speech recognition, autonomous navigation, or real-time decision making. By customizing the neuromorphic architecture to the requirements of specific cognitive functions, these chips achieve superior performance and efficiency compared to general-purpose computing solutions.
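The spike-based, event-driven processing named in the list above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common textbook abstraction. The following is a minimal sketch, not the circuit of any particular chip; the function name `simulate_lif` and the parameters `threshold`, `leak`, and `reset` are assumptions chosen for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: one input value per time step (hypothetical units).
    Returns the list of time steps at which the neuron emitted a spike.
    """
    membrane = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak and integrate: the old potential decays, new input is added.
        membrane = leak * membrane + current
        # Fire: crossing the threshold emits a spike and resets the potential.
        if membrane >= threshold:
            spike_times.append(t)
            membrane = reset
    return spike_times


if __name__ == "__main__":
    # A weak constant drive needs several steps to reach the threshold.
    print(simulate_lif([0.3] * 20))
    # No input means no spikes, so nothing downstream is triggered.
    print(simulate_lif([0.0] * 20))
```

The neuron communicates only through the spike times it returns, which is what lets downstream circuitry stay idle when the input is quiet.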

02 Spiking neural networks implementation

Neuromorphic chips implement spiking neural networks (SNNs) that process information through discrete spikes, similar to biological neurons. These SNNs enable event-driven processing that is energy-efficient and suitable for real-time cognitive applications. The implementation includes specialized circuits for spike generation, propagation, and processing, allowing for temporal information encoding and efficient learning algorithms that adapt synaptic weights based on spike timing.

03 Memory-processing integration for cognitive tasks

Neuromorphic chips integrate memory and processing elements to overcome the von Neumann bottleneck, enabling more efficient cognitive computing. This integration allows for in-memory computing where data processing occurs directly within memory units, reducing energy consumption and latency. The architecture supports cognitive functions like associative memory, context-dependent processing, and continuous learning by maintaining internal states and adapting to new information.

04 Hardware optimization for cognitive algorithms

Specialized hardware components in neuromorphic chips are optimized for cognitive computing algorithms. These include dedicated circuits for implementing machine learning operations, pattern recognition, and inference engines. The hardware optimizations focus on parallel processing capabilities, reduced power consumption, and accelerated execution of cognitive tasks such as natural language processing, computer vision, and decision-making processes.

05 Adaptive learning and self-modification capabilities

Neuromorphic chips incorporate adaptive learning mechanisms that enable self-modification based on input data and feedback. These capabilities allow the chips to continuously improve performance on cognitive tasks through experience, similar to human learning. The implementation includes plasticity mechanisms, reinforcement learning circuits, and dynamic reconfiguration capabilities that support unsupervised learning, transfer learning, and adaptation to changing environments.
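One plasticity mechanism named earlier in this section is spike-timing-dependent plasticity (STDP), in which a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened in the opposite case. The sketch below uses the standard pair-based exponential form of the rule. The amplitudes `a_plus` and `a_minus`, the time constants, and the weight bounds are illustrative assumptions, not values taken from any chip discussed here.

```python
import math


def stdp_delta_w(delta_t, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (ms)."""
    if delta_t > 0:
        # Pre fired before post: potentiation, decaying with the time gap.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Post fired before pre: depression, decaying with the time gap.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(weight, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Accumulate STDP updates over all pre/post spike pairs, then clip."""
    for t_pre in pre_times:
        for t_post in post_times:
            weight += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    print(apply_stdp(0.5, pre_times=[10.0], post_times=[15.0]))  # strengthened
    print(apply_stdp(0.5, pre_times=[15.0], post_times=[10.0]))  # weakened
```

Because the update depends only on locally observed spike times, a rule of this kind can in principle be evaluated at each synapse without a global training pass, which is why it is often associated with on-chip learning.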

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic computing is currently in a transitional phase from research to early commercialization, with the market expected to grow significantly from approximately $69 million in 2024 to over $1.78 billion by 2033. The competitive landscape features established technology giants like IBM, Intel, and Samsung developing proprietary neuromorphic architectures alongside specialized startups such as Syntiant and Polyn Technology. Academic institutions including Tsinghua University, KAIST, and the University of California system are driving fundamental research, while industry-academia partnerships accelerate commercialization. Technical maturity varies considerably, with IBM's TrueNorth and Intel's Loihi representing more advanced implementations, though most solutions remain in pre-commercial or early deployment stages. The technology shows particular promise for edge AI applications requiring cognitive computing capabilities with minimal power consumption.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip architecture is one of the best-known implementations in cognitive computing. The chip contains one million digital neurons and 256 million synapses organized into 4,096 neurosynaptic cores. TrueNorth uses an event-driven, parallel, and fault-tolerant design that mimics the brain's architecture rather than the traditional von Neumann computing paradigm[1]. IBM has demonstrated TrueNorth performing complex cognitive tasks such as real-time object recognition, pattern detection, and sensory processing while consuming only about 70 mW, approximately 1/10,000th the power consumption of conventional chips performing similar functions[2]. TrueNorth was developed under the DARPA-funded SyNAPSE program (Systems of Neuromorphic Adaptive Plastic Scalable Electronics), which targeted more efficient processing of unstructured data and cognitive workloads[3].

Strengths: Extremely low power consumption (70 mW) while maintaining high computational capability; scalable architecture allowing for system expansion; proven performance in real-world cognitive applications.

Weaknesses: Programming complexity requiring specialized knowledge; limited compatibility with existing software ecosystems; challenges in precise timing control for certain applications requiring deterministic responses.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic processing technology that integrates memory and computing in a single architecture to overcome the von Neumann bottleneck. Their approach uses resistive random-access memory (RRAM) and magnetoresistive random-access memory (MRAM) technologies to create artificial synapses and neurons. Samsung's neuromorphic chips employ a crossbar array structure where memory cells act as both storage and computational elements, enabling massively parallel processing similar to the human brain[1]. The company has demonstrated successful implementation of spiking neural networks (SNNs) on their hardware, achieving significant power efficiency improvements. Their neuromorphic system can process complex cognitive tasks like image recognition and natural language processing while consuming only a fraction of the energy required by conventional processors. Samsung has also pioneered the use of 3D stacking technology to increase neural density and computational capacity, allowing for more complex cognitive models to be implemented directly in hardware[2]. Their research shows that these neuromorphic systems can achieve up to 100x improvement in energy efficiency for AI workloads compared to traditional GPU implementations[3].

Strengths: Integration with existing semiconductor manufacturing expertise; innovative use of emerging memory technologies; potential for integration with consumer electronics ecosystem; significant energy efficiency gains.

Weaknesses: Less mature ecosystem compared to competitors; limited public demonstrations of large-scale implementations; challenges in scaling production of novel memory technologies.

Key Neuromorphic Circuit Designs and Neural Algorithms

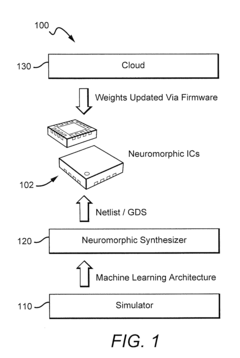

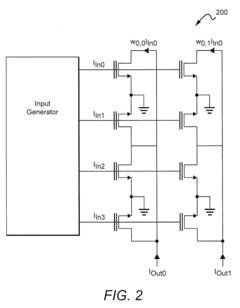

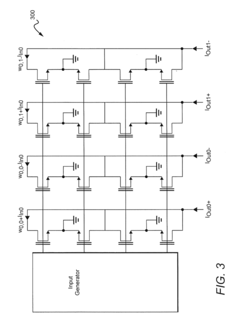

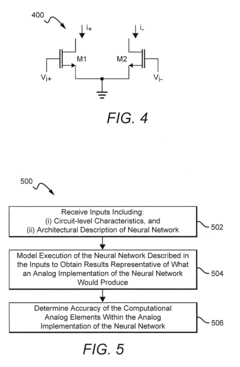

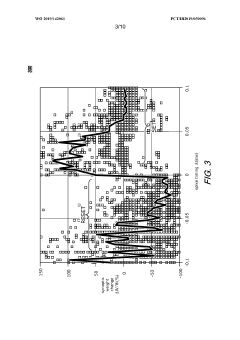

Systems And Methods For Determining Circuit-Level Effects On Classifier Accuracy

Patent | Active | US20190065962A1

Innovation

- The development of neuromorphic chips that simulate 'silicon' neurons, processing information in parallel with bursts of electric current at non-uniform intervals, and the use of systems and methods to model the effects of circuit-level characteristics on neural networks, such as thermal noise and weight inaccuracies, to optimize their performance.
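To make the idea of modeling circuit-level effects on classifier accuracy concrete, the sketch below perturbs the weights of a small linear classifier with Gaussian noise and measures how accuracy degrades. This is a generic Monte Carlo illustration, not the method claimed in the patent. The helper names (`classify`, `make_dataset`, `accuracy_under_weight_noise`), the synthetic dataset, and the noise model are all assumptions.

```python
import random


def classify(weights, features):
    """Linear threshold classifier: 1 if the weighted sum is non-negative."""
    score = sum(w * x for w, x in zip(weights, features))
    return 1 if score >= 0 else 0


def make_dataset(weights, n=100, seed=1):
    """Synthetic points in [-1, 1]^d, labeled by the ideal (noise-free) weights."""
    rng = random.Random(seed)
    samples = [[rng.uniform(-1, 1) for _ in weights] for _ in range(n)]
    labels = [classify(weights, x) for x in samples]
    return samples, labels


def accuracy_under_weight_noise(weights, samples, labels, sigma,
                                trials=200, seed=0):
    """Mean accuracy when each weight is perturbed by Gaussian noise N(0, sigma)."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        # Each trial models one chip instance with its own weight inaccuracies.
        noisy = [w + rng.gauss(0.0, sigma) for w in weights]
        correct += sum(classify(noisy, x) == y for x, y in zip(samples, labels))
    return correct / (trials * len(samples))


if __name__ == "__main__":
    ideal = [1.0, -1.0]
    xs, ys = make_dataset(ideal)
    for sigma in (0.0, 0.1, 0.5, 2.0):
        print(sigma, accuracy_under_weight_noise(ideal, xs, ys, sigma))
```

Sweeping `sigma` in this way gives a rough tolerance curve: the largest weight inaccuracy a design can absorb before accuracy falls below a chosen target.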

Neuromorphic chip for updating precise synaptic weight values

Patent | WO2019142061A1

Innovation

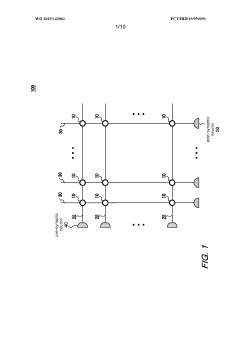

- A neuromorphic chip with a crossbar array configuration that uses resistive devices and switches to express synaptic weights with a variable number of resistive elements, allowing for precise synaptic weight updates by dynamically connecting axon lines and assigning weights to synaptic cells, thereby mitigating device variability and maintaining training power and speed.
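The crossbar principle behind this kind of design can be sketched numerically: each input line applies a voltage, each synaptic cell contributes a current proportional to its conductance (Ohm's law), and each output line sums those currents (Kirchhoff's current law). The code below is a simplified illustration, not the patented circuit. It assumes each cell's weight is set by how many identical resistive elements are switched in, and that those elements are connected in parallel so their conductances add. The names and the `unit_conductance` value are hypothetical.

```python
def cell_conductance(n_elements, unit_conductance=1e-6):
    """Conductance in siemens of a cell built from n identical elements.

    Assumes the elements are connected in parallel, so conductances add.
    """
    return n_elements * unit_conductance


def crossbar_currents(input_voltages, element_counts, unit_conductance=1e-6):
    """Column output currents I_j = sum_i V_i * G_ij for a resistive crossbar.

    input_voltages: one voltage per input (row) line.
    element_counts: element_counts[i][j] is the number of resistive elements
                    connected in the cell at row i, column j.
    """
    n_cols = len(element_counts[0])
    currents = [0.0] * n_cols
    for v, row in zip(input_voltages, element_counts):
        for j, n in enumerate(row):
            # Ohm's law per cell; summing per column follows Kirchhoff's law.
            currents[j] += v * cell_conductance(n, unit_conductance)
    return currents


if __name__ == "__main__":
    volts = [0.2, 0.0, 0.1]
    counts = [[1, 4],
              [2, 2],
              [3, 0]]
    print(crossbar_currents(volts, counts))
```

Under these assumptions a weight update amounts to changing an integer element count, which hints at why such a scheme can be less sensitive to the variability of any single device.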

Energy Efficiency Comparison with Traditional Computing Paradigms

Neuromorphic chips demonstrate remarkable energy efficiency advantages over traditional computing architectures when performing cognitive computing functions. While conventional von Neumann architectures separate memory and processing units, necessitating constant data transfer that consumes significant power, neuromorphic designs co-locate memory and computation similar to biological neural systems. This fundamental architectural difference results in power consumption reductions of 100-1000x for equivalent cognitive tasks.

Traditional CPUs and GPUs typically consume 100-300 watts during intensive computational tasks, with significant energy dedicated to data movement between memory hierarchies. In contrast, leading neuromorphic systems like Intel's Loihi and IBM's TrueNorth operate in the milliwatt range while performing complex pattern recognition and inference tasks. For instance, TrueNorth achieves 46 billion synaptic operations per second per watt, compared to conventional architectures requiring orders of magnitude more energy for similar neural network operations.
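The throughput-per-watt figure quoted above converts directly into energy per operation, because one watt is one joule per second. The short sketch below does that conversion; the function names are illustrative, and the only input taken from the text is the 46 billion synaptic operations per second per watt figure.

```python
def energy_per_synaptic_op_pj(sops_per_watt):
    """Convert synaptic ops per second per watt into picojoules per op."""
    # (ops/s) / W equals ops per joule, so invert it and scale joules to pJ.
    return 1e12 / sops_per_watt


def power_for_rate_mw(ops_per_second, sops_per_watt):
    """Power in milliwatts needed to sustain a given synaptic op rate."""
    return 1e3 * ops_per_second / sops_per_watt


if __name__ == "__main__":
    truenorth = 46e9  # synaptic ops per second per watt, as quoted in the text
    print(round(energy_per_synaptic_op_pj(truenorth), 1), "pJ per synaptic op")
    print(round(power_for_rate_mw(1e9, truenorth), 1), "mW for 1e9 ops/s")
```

At the quoted efficiency, each synaptic operation costs roughly 22 pJ, and a workload of one billion synaptic operations per second would draw about 22 mW, consistent with the milliwatt range described above.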

The event-driven processing model of neuromorphic chips further enhances their efficiency advantage. Unlike traditional systems that continuously consume power regardless of computational load, neuromorphic circuits activate only when receiving relevant input signals, similar to biological neurons. This sparse activation pattern results in dramatic power savings during periods of low activity while maintaining rapid response capabilities.
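A rough way to see the effect of sparse activation is to count synaptic operations for the same layer under two schemes: a dense update that touches every synapse at every time step, and an event-driven update that touches only the synapses of inputs that actually spiked. The sketch below counts operations only and does not model energy; the layer sizes and spike pattern are made-up illustrative values.

```python
def dense_update_ops(n_inputs, n_outputs, n_steps):
    """Operations when every synapse is evaluated at every time step."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains, n_outputs):
    """Operations when only the synapses of spiking inputs are evaluated.

    spike_trains: one list per time step holding the indices of the inputs
                  that spiked during that step.
    """
    return sum(len(active) * n_outputs for active in spike_trains)


if __name__ == "__main__":
    # Five time steps, 100 inputs, 10 outputs, and only three spikes in total.
    spikes = [[3], [], [7, 42], [], []]
    print(dense_update_ops(100, 10, len(spikes)))
    print(event_driven_ops(spikes, 10))
```

In this toy case the event-driven count is 30 operations against 5,000 for the dense update. The gap narrows as input activity rises, so the benefit depends on how sparse the workload really is.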

Temperature management represents another efficiency dimension where neuromorphic designs excel. Traditional high-performance computing systems require elaborate cooling infrastructure that can consume as much energy as the computation itself. Neuromorphic chips generate substantially less heat due to their lower power consumption, reducing or eliminating cooling requirements and further improving overall system efficiency.

Mobile and edge computing applications particularly benefit from these efficiency gains. While conventional mobile processors struggle to implement complex AI models locally due to battery constraints, neuromorphic solutions enable sophisticated cognitive functions with minimal power draw. This efficiency translates directly to extended device operation time and expanded functionality in resource-constrained environments.

Looking toward future developments, the energy efficiency gap between neuromorphic and traditional computing is expected to widen as neuromorphic materials and architectures mature. Emerging technologies like memristive devices promise to further reduce power requirements while increasing computational density, potentially enabling neuromorphic systems that approach the remarkable efficiency of the human brain at approximately 20 watts for complex cognitive functions.

Applications in AI and Machine Learning Systems

Neuromorphic chips are reshaping AI and machine learning systems by introducing brain-inspired architectures that change how cognitive tasks are processed. Compared with traditional computing architectures, this specialized hardware offers significant advantages in pattern recognition, natural language processing, and real-time decision-making.

In computer vision systems, neuromorphic chips enable more efficient processing of visual data through spike-based neural networks that mimic the human visual cortex. This approach allows for rapid object recognition, scene understanding, and motion detection with substantially lower power consumption than conventional deep learning implementations. Companies like Intel and IBM have demonstrated neuromorphic vision systems capable of identifying objects in complex environments with millisecond latency and energy requirements measured in milliwatts rather than watts.
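
One common way to feed visual data into a spike-based network is to emit events only where the image changes, in the manner of an event camera. The sketch below illustrates that idea on plain nested lists. It is an assumption-laden example rather than the method used in the Intel or IBM demonstrations: the function name `frame_to_events`, the brightness threshold, and the `(row, col, polarity)` event format are hypothetical.

```python
def frame_to_events(prev_frame, frame, threshold=10):
    """Convert the change between two grayscale frames into sparse events.

    Each frame is a list of rows of integer brightness values.
    Returns a list of (row, col, polarity) tuples, where polarity is +1 for
    a brightness increase and -1 for a decrease of at least `threshold`.
    """
    events = []
    for r, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for c, (old, new) in enumerate(zip(prev_row, row)):
            delta = new - old
            # Unchanged or slightly changed pixels produce no event at all.
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


if __name__ == "__main__":
    previous = [[0, 0, 0], [0, 100, 0], [0, 0, 0]]
    current = [[0, 0, 0], [0, 0, 100], [0, 0, 0]]
    # A bright spot moving one pixel to the right yields two events.
    print(frame_to_events(previous, current))
```

A static scene produces no events under this encoding, so downstream spiking layers have nothing to process until something moves, which is where the latency and power benefits described above originate.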

Natural language processing is another frontier where neuromorphic computing is beginning to make inroads. The temporal processing capabilities of these chips align naturally with sequential language tasks and may enable more efficient implementations of recurrent neural networks and attention mechanisms. Early implementations show promising results in speech recognition, language translation, and sentiment analysis, with performance comparable to traditional approaches at a fraction of the energy cost.

Reinforcement learning systems benefit significantly from neuromorphic architectures due to their inherent ability to process temporal information efficiently. The event-driven nature of neuromorphic chips allows for more natural implementation of reward-based learning algorithms, potentially accelerating training times for complex decision-making tasks. This capability is particularly valuable in robotics and autonomous systems where real-time adaptation to changing environments is critical.
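
Reward-based learning on spiking hardware is often described in terms of a three-factor rule, in which coincident pre- and post-synaptic spikes leave a decaying eligibility trace and a later reward signal converts that trace into a weight change. The following sketch shows one timestep of such a rule for a single synapse. It is a simplified illustration, not the learning rule of any specific chip: `update_weight`, `trace_decay`, and `learning_rate` are hypothetical names and values.

```python
def update_weight(weight, eligibility, pre_spike, post_spike, reward,
                  trace_decay=0.9, learning_rate=0.1):
    """Apply one timestep of a reward-modulated (three-factor) update.

    Returns the new (weight, eligibility) pair for a single synapse.
    """
    # The trace decays each step and grows when both neurons spike together.
    coincidence = 1.0 if (pre_spike and post_spike) else 0.0
    eligibility = trace_decay * eligibility + coincidence
    # The weight changes only when a nonzero reward signal arrives.
    weight = weight + learning_rate * reward * eligibility
    return weight, eligibility


if __name__ == "__main__":
    w, e = 0.5, 0.0
    # Step 1: pre and post neurons fire together, but no reward yet.
    w, e = update_weight(w, e, pre_spike=True, post_spike=True, reward=0.0)
    # Step 2: no spikes, but a delayed reward reinforces the recent pairing.
    w, e = update_weight(w, e, pre_spike=False, post_spike=False, reward=1.0)
    print(f"weight={w:.3f}, eligibility={e:.3f}")
```

Because the update depends only on local spike events and a broadcast reward, it fits the event-driven model naturally, which is the property the paragraph above points to for robotics and autonomous systems.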

Edge AI applications are perhaps the most promising near-term opportunity for neuromorphic computing. The energy efficiency of these chips enables sophisticated AI capabilities on battery-powered devices without requiring cloud connectivity. As a result, machine learning models can process sensitive data locally rather than transmitting it to remote servers, which helps preserve privacy.

Hybrid computing systems that combine neuromorphic processors with traditional digital architectures are emerging as a practical approach to leveraging the strengths of both paradigms. These systems typically use neuromorphic components for pattern recognition and sensory processing tasks while relying on conventional processors for precise numerical calculations and logical operations. This complementary approach maximizes overall system efficiency while maintaining compatibility with existing software ecosystems.
