How to Enhance DDR5 Data Transfer Rates in Cloud Infrastructure

SEP 17, 2025 | 9 MIN READ

DDR5 Evolution and Performance Targets

DDR5 represents a significant leap forward in DRAM technology, building on the foundations established by its predecessors. Since the introduction of DDR4 in 2014, memory bandwidth requirements have grown rapidly, particularly in cloud infrastructure environments where data processing demands continue to intensify. DDR5 emerged as the natural progression: JEDEC published the initial specification in 2020, and commercial availability began in late 2021.

The evolutionary path of DDR5 has been characterized by substantial improvements in several key performance metrics. Data rates have increased from DDR4's 1600-3200 MT/s to a DDR5 starting point of 4800 MT/s, with a roadmap extending to 8400 MT/s and beyond. At the top of that roadmap, peak per-module bandwidth is more than double that of DDR4-3200, addressing one of the primary bottlenecks in modern computing architectures.
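
A quick way to check these ratios is to compute peak theoretical bandwidth, which is the data rate multiplied by the data-bus width. The minimal sketch below assumes a 64-bit data path per DIMM (ECC bits excluded) and decimal gigabytes; it yields theoretical peaks, not measured throughput.

```python
# Peak theoretical bandwidth = transfers per second x bytes per transfer.
# Assumption: a 64-bit (8-byte) data path per DIMM, ECC bits excluded.
DATA_BUS_BYTES = 8

def peak_bandwidth_gbs(data_rate_mts: int, bus_bytes: int = DATA_BUS_BYTES) -> float:
    """Return peak bandwidth in decimal GB/s for a data rate given in MT/s."""
    return data_rate_mts * 1e6 * bus_bytes / 1e9

speed_grades = {
    "DDR4-3200": 3200,
    "DDR5-4800": 4800,
    "DDR5-6400": 6400,
    "DDR5-8400": 8400,
}

baseline = peak_bandwidth_gbs(speed_grades["DDR4-3200"])
for name, rate in speed_grades.items():
    bw = peak_bandwidth_gbs(rate)
    print(f"{name}: {bw:.1f} GB/s ({bw / baseline:.2f}x DDR4-3200)")
```

Under these assumptions DDR5-4800 is 1.5 times DDR4-3200's peak and DDR5-8400 is about 2.6 times, which is where the "more than double" comparison applies.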

Channel architecture has also been redesigned: each DDR5 DIMM is split into two independent 32-bit subchannels (plus ECC bits on server RDIMMs) instead of DDR4's single 64-bit channel. The total data width is unchanged, so this does not by itself double peak bandwidth; instead, it doubles the number of independently scheduled channels without requiring additional memory channels on the CPU side. More independent channels allow more requests to be in flight at once, which raises effective bandwidth for cloud workloads with high concurrency requirements.

Burst length has doubled from BL8 in DDR4 to BL16 in DDR5, where burst length counts transfers per burst rather than bytes. Because each DDR5 subchannel is 32 bits wide, one BL16 burst delivers 64 bytes, a full cache line on most server processors, just as a BL8 burst does on DDR4's 64-bit channel. This lets each subchannel satisfy a complete cache-line request on its own, improving bus efficiency for the many concurrent, cache-line-sized accesses generated by the processors and accelerators commonly deployed in cloud infrastructure.
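
The cache-line arithmetic can be checked directly: the data moved by one burst equals the burst length (in transfers) times the width of the channel or subchannel. This sketch assumes a 64-byte cache line and ignores ECC bits.

```python
# Bytes delivered by one burst = burst length (transfers) x bus width (bytes).
CACHE_LINE_BYTES = 64  # assumed cache-line size

def burst_bytes(burst_length: int, bus_width_bits: int) -> int:
    """Return the number of data bytes moved by a single burst."""
    return burst_length * bus_width_bits // 8

ddr4 = burst_bytes(burst_length=8, bus_width_bits=64)   # one 64-bit DDR4 channel
ddr5 = burst_bytes(burst_length=16, bus_width_bits=32)  # one 32-bit DDR5 subchannel

for label, size in (("DDR4 BL8 x 64-bit", ddr4), ("DDR5 BL16 x 32-bit", ddr5)):
    lines = size / CACHE_LINE_BYTES
    print(f"{label}: {size} bytes per burst = {lines:.0f} cache line(s)")
```

Both configurations move exactly one cache line per burst; the DDR5 gain comes from having two such subchannels operating independently on each DIMM.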

Power efficiency is another critical area of evolution, with DDR5 operating at a reduced supply voltage of 1.1V compared to DDR4's 1.2V. Power management has also been redesigned: voltage regulation moves from the motherboard to a power management IC (PMIC) on the DIMM itself, allowing more precise power delivery and reduced supply noise, factors that become increasingly important at higher transfer rates.

The performance targets for DDR5 in cloud infrastructure are ambitious but necessary to address growing computational demands. Current implementations reportedly aim for effective bandwidth utilization of 85-90% of the theoretical maximum, compared with the 70-75% typically realized in DDR4 systems. Latency optimization focuses on maintaining or improving effective access times despite higher data rates, with particular emphasis on reducing the latency variability caused by the mixed access patterns common in virtualized environments.
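
To put the utilization targets in concrete terms, the sketch below scales peak per-DIMM bandwidth by the quoted efficiency ranges. It assumes a 64-bit data path and compares DDR5-4800 with DDR4-3200, taking the 85-90% and 70-75% figures at face value; real sustained bandwidth depends heavily on access pattern, read/write mix, and refresh.

```python
def sustained_gbs(data_rate_mts: float, utilization: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth in decimal GB/s (MT/s x bytes / 1000) scaled by utilization."""
    return data_rate_mts * bus_bytes / 1000 * utilization

# (label, data rate in MT/s, assumed utilization range taken from the text)
cases = [
    ("DDR4-3200", 3200, (0.70, 0.75)),
    ("DDR5-4800", 4800, (0.85, 0.90)),
]
for name, rate, (low, high) in cases:
    print(f"{name}: {sustained_gbs(rate, low):.1f}-{sustained_gbs(rate, high):.1f} GB/s sustained")
```

Under these assumptions DDR5-4800 sustains roughly 32.6-34.6 GB/s per DIMM against 17.9-19.2 GB/s for DDR4-3200, so the utilization gain compounds with the raw data-rate increase.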

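Error detection and correction capabilities have been substantially enhanced in DDR5. On-die ECC is standard in every DDR5 device: each 128-bit data word stored in the array is protected by 8 check bits, allowing single-bit errors inside the DRAM to be corrected before data leaves the chip. Because on-die ECC does not protect data on the bus, it complements rather than replaces system-level ECC, and DDR5 platforms pair it with more robust RAS (Reliability, Availability, Serviceability) features. These improvements are intended to reduce uncorrectable errors by an order of magnitude compared to DDR4, a critical requirement for maintaining data integrity in high-density cloud deployments.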
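
On-die ECC is a single-error-correcting (SEC) code, and a textbook Hamming construction happens to need exactly 8 check bits for 128 data bits. The sketch below shows that generic construction to illustrate the principle only; it does not reproduce the parity-check matrix JEDEC specifies, and real on-die ECC runs in hardware inside the DRAM.

```python
import random

def check_bits_needed(data_len: int) -> int:
    """Smallest r with 2**r >= data_len + r + 1 (the Hamming bound for SEC)."""
    r = 0
    while (1 << r) < data_len + r + 1:
        r += 1
    return r

def encode(data: list[int]) -> list[int]:
    """Return a Hamming SEC codeword (parity bits at power-of-two positions)."""
    r = check_bits_needed(len(data))
    n = len(data) + r
    code = [0] * (n + 1)  # 1-indexed; code[0] is unused
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):  # not a power of two, so this is a data position
            code[pos] = next(bits)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos
    # Setting parity bit 2**i flips bit i of the syndrome, driving it to zero.
    for i in range(r):
        if syndrome & (1 << i):
            code[1 << i] = 1
    return code[1:]

def decode(code: list[int]) -> tuple[list[int], int]:
    """Correct at most one flipped bit; return (data bits, syndrome)."""
    syndrome = 0
    for pos, bit in enumerate(code, start=1):
        if bit:
            syndrome ^= pos
    fixed = list(code)
    # A single error makes the syndrome equal to the flipped bit's position.
    # Syndromes past the end cannot come from one error and are left alone.
    if 0 < syndrome <= len(fixed):
        fixed[syndrome - 1] ^= 1
    data = [b for pos, b in enumerate(fixed, start=1) if pos & (pos - 1)]
    return data, syndrome

random.seed(0)
word = [random.randint(0, 1) for _ in range(128)]
codeword = encode(word)
print(len(codeword), "stored bits for", len(word), "data bits")
corrupted = list(codeword)
corrupted[57] ^= 1  # simulate a single-bit upset at position 58
recovered, syn = decode(corrupted)
print("corrected:", recovered == word, "syndrome:", syn)
```

Any single flipped bit in the 136-bit codeword produces a syndrome equal to its position and is corrected; two flipped bits can be miscorrected, which is one reason on-die ECC is paired with system-level ECC.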

Cloud Infrastructure Memory Demand Analysis

The cloud computing industry has witnessed exponential growth over the past decade, with global cloud infrastructure spending reaching $178 billion in 2021 and projected to exceed $277 billion by 2025. This rapid expansion has placed unprecedented demands on memory systems, particularly in data centers where performance, reliability, and efficiency are paramount. The evolution of cloud services from basic storage solutions to complex AI workloads, real-time analytics, and high-performance computing has fundamentally transformed memory requirements.

Current cloud workloads exhibit distinct memory demand characteristics that directly influence DDR5 implementation strategies. Big data analytics applications typically process terabytes to petabytes of information, requiring high-bandwidth memory access with sustained transfer rates. These workloads benefit significantly from DDR5's improved data transfer capabilities but often face bottlenecks during peak processing periods.

Virtualization environments present another critical use case, with hypervisors managing thousands of virtual machines across server clusters. Memory allocation efficiency becomes essential, as does the ability to handle rapid context switching between virtual instances. DDR5's increased channel efficiency and improved command rates provide substantial benefits in these scenarios, reducing latency during VM migrations and resource reallocation.

AI and machine learning workloads represent perhaps the most demanding memory consumers in modern cloud infrastructure. Training sophisticated neural networks requires massive parallel data processing capabilities with minimal latency. The memory subsystem must support both high bandwidth for model training and low latency for inference operations. DDR5's enhanced capabilities address these needs, though current implementations still face challenges with extremely large model training.

Database operations in cloud environments demonstrate yet another memory demand profile, with transaction processing requiring consistent performance under varying load conditions. In-memory databases particularly benefit from DDR5's increased capacity and reliability features, though they remain sensitive to latency fluctuations during peak usage periods.

Market analysis indicates that memory bandwidth requirements in cloud infrastructure are growing at approximately 35% annually, which compounds to a doubling roughly every 2.3 years, close to the classic Moore's Law cadence and a significant technical challenge. This growth trajectory is driven by increasing dataset sizes, more complex computational models, and the proliferation of real-time processing requirements across industries.
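
Compounding makes a growth rate easier to compare with Moore's Law, conventionally stated as a doubling about every two years: the doubling time for an annual growth rate g is ln 2 / ln(1 + g). The sketch below takes the 35% figure from the text and the two-year period as the usual approximation.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log1p(annual_growth)

def annual_rate_for_doubling(years: float) -> float:
    """Compound annual growth rate that doubles a quantity in `years` years."""
    return 2 ** (1 / years) - 1

print(f"35%/yr doubles every {doubling_time_years(0.35):.2f} years")
print(f"Doubling every 2 years = {annual_rate_for_doubling(2):.1%} per year")
```

At 35% per year, demand doubles about every 2.3 years, while a two-year doubling corresponds to roughly 41% per year.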

The financial implications of memory performance are substantial, with studies showing that even millisecond improvements in response times can translate to millions in revenue for cloud service providers. This economic reality drives continuous investment in memory technology advancement, with DDR5 representing a critical component in maintaining competitive advantage in the cloud marketplace.

DDR5 Implementation Challenges in Data Centers

The implementation of DDR5 memory in data center environments presents several significant challenges that must be addressed to fully leverage its enhanced data transfer capabilities. One of the primary obstacles is thermal management. Although DDR5 runs at a lower DRAM supply voltage than DDR4, its modules operate at higher data rates and now carry their own PMIC, so voltage-regulation losses are dissipated on the module itself, and each DIMM generates considerably more heat. In densely packed server environments, this excess heat can lead to performance throttling, reduced component lifespan, and increased cooling costs. Data centers must redesign their cooling systems to accommodate these thermal demands without compromising energy efficiency goals.

Signal integrity becomes increasingly critical as data rates climb to 4800 MT/s and beyond. The higher frequencies of DDR5 make transmission lines more susceptible to noise, crosstalk, and electromagnetic interference. Server motherboard designs require meticulous attention to trace routing, impedance matching, and shielding to maintain signal quality. Without proper signal integrity management, bit error rates increase dramatically, negating the performance benefits of faster memory.

Power delivery infrastructure presents another significant challenge. DDR5 shifts voltage regulation from the motherboard to the memory module itself through Power Management Integrated Circuits (PMICs). While this improves power efficiency and signal integrity, it requires server manufacturers to redesign power distribution networks and ensure adequate current delivery to support multiple high-performance memory channels simultaneously.

Compatibility with existing data center infrastructure poses practical deployment challenges. Many facilities have standardized server form factors and cooling solutions that may not accommodate the different thermal profiles and power requirements of DDR5 systems. This necessitates careful planning for gradual infrastructure upgrades or hybrid deployments that can support both DDR4 and DDR5 technologies during transition periods.

Cost considerations remain a significant barrier to widespread DDR5 adoption in data centers. The premium pricing of DDR5 modules, combined with the need for new server platforms and potential infrastructure modifications, creates substantial capital expenditure requirements. Data center operators must carefully evaluate the total cost of ownership, balancing the performance benefits against implementation expenses and expected service lifetimes.

Reliability and error handling mechanisms also present implementation challenges. While DDR5 introduces improved error correction capabilities through on-die ECC, integrating these features with server-level RAS (Reliability, Availability, Serviceability) systems requires sophisticated memory controllers and management software. Ensuring data integrity across high-speed memory channels while maintaining performance remains a delicate balancing act for system designers.

Current DDR5 Optimization Techniques

01 DDR5 memory data transfer rates and bandwidth improvements

DDR5 memory technology offers significantly improved data transfer rates compared to previous generations. These improvements are achieved through higher clock frequencies, a redesigned channel architecture, and more efficient data handling protocols. DDR5 memory achieves data transfer rates from 4800 MT/s up to 8400 MT/s and beyond, roughly doubling the peak bandwidth of DDR4-3200 at 6400 MT/s while improving power efficiency.

- DDR5 memory architecture for increased data transfer rates: DDR5 memory architecture introduces significant improvements to achieve higher data transfer rates compared to previous generations. The architecture includes enhanced memory controllers, improved signaling techniques, and optimized data paths that collectively enable faster data movement between memory and processors. These architectural enhancements support the increased bandwidth requirements of modern computing systems while maintaining signal integrity at higher speeds.

- Advanced clock synchronization techniques for DDR5: DDR5 memory implements advanced clock synchronization techniques to support higher data transfer rates. These include improved clock distribution networks, enhanced phase-locked loops (PLLs), and more precise delay-locked loops (DLLs) that minimize clock skew and jitter. By maintaining tighter synchronization between memory components, these techniques enable reliable data transfers at significantly higher frequencies than previous memory generations.

- Power management innovations for high-speed DDR5 operation: DDR5 memory incorporates advanced power management features that support higher data transfer rates while optimizing energy consumption. These innovations include on-module voltage regulation through a PMIC on each DIMM, improved power delivery networks, and intelligent power state management. By providing more stable power delivery and reducing power-related signal noise, these features enable memory to operate reliably at higher frequencies while maintaining reasonable thermal profiles.

- Enhanced signal integrity solutions for DDR5 high-speed transfers: To achieve higher data transfer rates, DDR5 memory implements enhanced signal integrity solutions that minimize interference and maintain clean signal paths. These include improved termination schemes, equalization techniques, and advanced I/O buffer designs. Additionally, DDR5 employs decision feedback equalization and other signal processing techniques to compensate for channel impairments at higher frequencies, ensuring reliable data transmission despite increased speeds.

- DDR5 memory controller optimizations for maximizing bandwidth: DDR5 memory controllers feature significant optimizations to fully leverage the increased data transfer capabilities of the memory technology. These include more sophisticated prefetching algorithms, improved command scheduling, and enhanced burst operations. The controllers also implement advanced error correction capabilities and more efficient refresh mechanisms, allowing for higher sustained bandwidth while maintaining data integrity at the elevated transfer rates characteristic of DDR5.
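
Decision feedback equalization, listed among the signal integrity techniques above, subtracts the inter-symbol interference (ISI) that already-decided bits are expected to leave on the current sample, then slices the cleaned-up value. The toy model below assumes a noiseless channel with two hypothetical post-cursor taps (0.6 and 0.5) that the equalizer knows exactly, and NRZ symbols of +1/-1; it sketches the principle, not any DDR5 receiver.

```python
def channel(symbols, post_taps):
    """Add post-cursor ISI: y[n] = x[n] + sum_k post_taps[k] * x[n-1-k]."""
    out = []
    for n, x in enumerate(symbols):
        y = float(x)
        for k, h in enumerate(post_taps):
            if n - 1 - k >= 0:
                y += h * symbols[n - 1 - k]
        out.append(y)
    return out

def slicer(value):
    """Binary decision for NRZ symbols of +1/-1."""
    return 1 if value >= 0 else -1

def dfe(samples, taps):
    """Subtract ISI predicted from past decisions, then slice the result."""
    decisions = []
    for n, y in enumerate(samples):
        feedback = 0.0
        for k, w in enumerate(taps):
            if n - 1 - k >= 0:
                feedback += w * decisions[n - 1 - k]
        decisions.append(slicer(y - feedback))
    return decisions

TAPS = [0.6, 0.5]  # hypothetical post-cursor ISI, assumed known exactly
tx = [-1, -1, 1, 1, -1, -1, 1, -1, 1, 1]
rx = channel(tx, TAPS)

plain = [slicer(v) for v in rx]
equalized = dfe(rx, TAPS)
print("bit errors without DFE:", sum(a != b for a, b in zip(plain, tx)))
print("bit errors with DFE:   ", sum(a != b for a, b in zip(equalized, tx)))
```

Three of the ten bits are misread when the ISI is ignored, while the DFE recovers all of them. Because feedback is built from past decisions, a wrong decision would corrupt the next few corrections as well (error propagation).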

02 Memory controller architecture for high-speed data transfer

Specialized memory controller architectures are essential for managing the high-speed data transfers in DDR5 systems. These controllers implement advanced timing mechanisms, improved command scheduling, and optimized interface protocols to handle the increased data rates. The controllers also incorporate features for managing multiple channels simultaneously, reducing latency, and ensuring data integrity at higher transfer speeds.

03 Signal integrity and clock synchronization for high-speed memory

Maintaining signal integrity at DDR5's high data transfer rates requires advanced techniques for clock synchronization, noise reduction, and impedance matching. These systems employ sophisticated timing control mechanisms, improved equalization techniques, and enhanced phase-locked loops to ensure reliable data transmission. The designs also address challenges related to signal reflection, crosstalk, and jitter that become more pronounced at higher frequencies.

04 Power management for high-speed memory operations

DDR5 memory incorporates advanced power management features to support higher data transfer rates while maintaining efficiency. These include on-module voltage regulation via a PMIC on each DIMM, improved power delivery networks, and dynamic voltage scaling. The technology implements more granular power states, allowing portions of the memory to enter low-power modes when not in active use, which helps manage the increased power demands associated with higher transfer rates.

05 Memory interface protocols and buffering techniques

Enhanced interface protocols and buffering techniques are crucial for achieving DDR5's high data transfer rates. These include improved prefetch buffers, more efficient command/address signaling, and optimized data bus utilization. DDR5 implements decision feedback equalization, advanced training sequences, and enhanced error correction capabilities to maintain data integrity at higher speeds. The memory architecture also splits each DIMM into two independent subchannels, each with its own command and address bus, to increase throughput.
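
The training sequences mentioned above typically sweep a delay setting, record which settings read back a known pattern correctly, and then park the delay in the middle of the widest passing window for maximum margin. The sketch below illustrates only that centering step on a hypothetical pass/fail sweep; the 16-tap range, the sweep data, and the function name are illustrative assumptions, not any controller's actual training algorithm.

```python
from typing import List, Optional

def center_of_widest_window(pass_map: List[bool]) -> Optional[int]:
    """Return the delay tap at the center of the longest run of passing taps."""
    best_start, best_len = None, 0
    run_start = None
    for tap, ok in enumerate(pass_map + [False]):  # sentinel closes a final run
        if ok and run_start is None:
            run_start = tap
        elif not ok and run_start is not None:
            if tap - run_start > best_len:
                best_start, best_len = run_start, tap - run_start
            run_start = None
    if best_start is None:
        return None  # no setting passed: training failed
    return best_start + (best_len - 1) // 2

# Hypothetical sweep of 16 delay taps: '#' means the test pattern read back correctly.
sweep = "..##.######....."
pass_map = [c == "#" for c in sweep]
print("selected delay tap:", center_of_widest_window(pass_map))
```

Here the six-tap window spanning taps 5-10 wins over the narrower window at taps 2-3, and the function returns tap 7; choosing the center rather than the first passing tap leaves margin on both sides for voltage and temperature drift.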

Leading DDR5 Manufacturers and Cloud Providers

The DDR5 data transfer rate enhancement in cloud infrastructure is currently in a growth phase, with the market expanding rapidly due to increasing demand for high-performance computing. The global market size is projected to grow significantly as cloud providers upgrade their infrastructure to support data-intensive applications. Technologically, this field is advancing from early maturity to mainstream adoption, with key players demonstrating varying levels of innovation. Companies like Micron Technology and Rambus lead with established DDR5 solutions, while Huawei, ZTE, and AMD are making significant advancements in memory controller technologies. Chinese manufacturers such as ChangXin Memory Technologies and Hygon are rapidly closing the technology gap, focusing on specialized cloud-optimized DDR5 implementations. Emerging players like Inspur and New H3C are integrating DDR5 into comprehensive cloud solutions, creating a competitive landscape that balances established expertise with innovative approaches.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed a comprehensive DDR5 enhancement solution for cloud infrastructure centered around their Kunpeng server processors and associated memory subsystem designs. Their approach implements advanced memory controller architectures featuring multi-phase training algorithms that optimize timing parameters across different operating conditions. Huawei's technology incorporates proprietary signal integrity enhancements including adaptive equalization circuits that dynamically compensate for channel characteristics at high frequencies, enabling stable operation at data rates exceeding 6400 MT/s. Their server platforms feature custom-designed power delivery networks with multiple power planes and sophisticated filtering to minimize noise that typically limits maximum data rates. Huawei has also implemented advanced thermal management solutions specifically for high-speed memory operations, including dynamic frequency scaling based on temperature monitoring and workload characteristics. Additionally, their memory subsystems incorporate enhanced reliability features such as extended ECC protection and memory rank sparing that maintain data integrity at higher transfer rates where error rates naturally increase. Huawei's solution also includes firmware-level optimizations that adjust memory timing parameters based on application workload patterns, dynamically balancing between maximum bandwidth, latency, and power efficiency.

Strengths: Highly integrated system-level approach covering processor, memory controller, and platform design; excellent power efficiency characteristics; strong reliability features suitable for mission-critical cloud applications. Weaknesses: Ecosystem limited primarily to Huawei's own server platforms; less third-party validation compared to more open industry solutions; potential supply chain challenges in some markets.

Micron Technology, Inc.

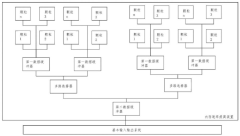

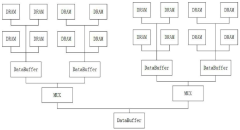

Technical Solution: Micron has developed advanced DDR5 memory solutions specifically optimized for cloud infrastructure with data transfer rates exceeding 6400 MT/s. Their technology implements on-die ECC (Error Correction Code) that significantly reduces system errors and improves data integrity during high-speed transfers. Micron's DDR5 modules feature two independent 32-bit subchannels per DIMM instead of a single 64-bit channel, doubling the number of independent channels and improving memory access efficiency. They have also implemented decision feedback equalization (DFE) and feed-forward equalization (FFE) techniques to maintain signal integrity at higher frequencies. Additionally, Micron has developed proprietary RCD (Registering Clock Driver) components that enable better timing control and signal management across multiple memory ranks, allowing cloud servers to maintain stability at higher transfer rates. Their DDR5 solutions incorporate integrated power management ICs (PMICs) on each DIMM, moving voltage regulation from the motherboard to the memory module for more precise power delivery and reduced noise.

Strengths: Superior signal integrity at high frequencies through advanced equalization techniques; improved power efficiency with on-DIMM voltage regulation; enhanced reliability with on-die ECC. Weaknesses: Higher initial implementation costs compared to DDR4 solutions; requires significant server architecture modifications to fully leverage the dual-channel per DIMM design; thermal management challenges at maximum transfer rates.

Key Patents in High-Speed Memory Interface Design

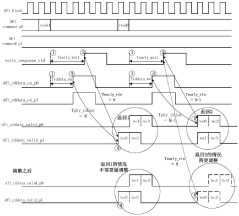

Data processing method, memory controller, processor and electronic device

Patent | Active | CN112631966B

Innovation

- The memory controller adjusts the phase of the returned raw read data so that the timing from sending the early response signal to receiving the first data of the raw read data is fixed every time. This allows the early response function and the read cyclic redundancy check (CRC) function to be used simultaneously.

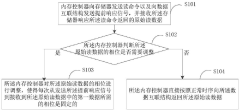

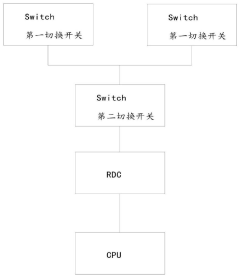

Memory rate improving device and method, equipment and storage medium

Patent | Pending | CN117724659A

Innovation

- A first data buffer connects multiple memory chips, a multiplexer connects the two data buffers, and a second data buffer connects to the BIOS (basic input/output system) to carry out data transfer. Adding memory chips increases memory capacity, while a switch and a switch strobe chip handle data transmission and switching.

Thermal Management Solutions for High-Speed DDR5

Thermal management has become a critical challenge in DDR5 memory systems, particularly as data transfer rates continue to increase in cloud infrastructure environments. The higher data rates of DDR5 modules, together with the PMIC now mounted on each module, generate significantly more heat than previous generations, and thermal output rises steeply as speeds approach and exceed 6400 MT/s. Without proper thermal solutions, this excess heat can lead to performance throttling, reduced reliability, and shortened component lifespan.

Advanced cooling technologies are emerging as essential components for high-performance DDR5 implementations. Passive cooling solutions utilizing aluminum and copper heat spreaders with optimized fin designs remain the foundation of memory thermal management. These solutions have evolved to incorporate phase-change thermal interface materials that significantly improve heat transfer efficiency between memory chips and heat dissipation components.

Active cooling approaches are gaining prominence in data center environments where memory density and utilization rates are exceptionally high. Direct airflow optimization techniques employ computational fluid dynamics to create targeted cooling channels across memory modules. Some advanced systems now incorporate micro-fans integrated directly into DIMM designs, providing localized cooling precisely where thermal hotspots develop.

Liquid cooling solutions represent the cutting edge for extreme performance scenarios. Cold plate technologies that directly contact memory modules can reduce operating temperatures by 15-20°C compared to conventional air cooling. Immersion cooling, while more complex to implement, offers even greater thermal efficiency by submerging entire server components in dielectric fluid, enabling sustained operation at maximum DDR5 transfer rates.

Thermal monitoring and dynamic management systems have become increasingly sophisticated, with embedded temperature sensors providing real-time data to memory controllers. This enables intelligent frequency scaling and refresh rate adjustments based on thermal conditions, maintaining optimal performance while preventing overheating. Some advanced implementations now incorporate predictive thermal modeling that anticipates workload-induced temperature spikes before they occur.
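
A temperature-driven policy of the kind described can be sketched as a lookup from sensor reading to a refresh multiplier and a bandwidth cap. All thresholds and values below are hypothetical placeholders; the grounded element is that DRAM refresh is doubled in the extended range above roughly 85°C because cells leak charge faster when hot. Real controllers read on-module temperature sensors and apply vendor-specific tables.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ThermalAction:
    refresh_multiplier: int  # 1 = normal refresh rate, 2 = refresh twice as often
    bandwidth_cap: float     # fraction of peak bandwidth the controller allows

# Hypothetical policy table: (upper temperature bound in C, action), ascending.
POLICY = [
    (85.0, ThermalAction(refresh_multiplier=1, bandwidth_cap=1.00)),
    (90.0, ThermalAction(refresh_multiplier=2, bandwidth_cap=1.00)),
    (95.0, ThermalAction(refresh_multiplier=2, bandwidth_cap=0.75)),
]
CRITICAL = ThermalAction(refresh_multiplier=2, bandwidth_cap=0.25)

def thermal_action(temp_c: float) -> ThermalAction:
    """Pick the first policy row whose upper bound exceeds the reading."""
    for upper, action in POLICY:
        if temp_c < upper:
            return action
    return CRITICAL  # at or above the last bound: throttle hard

for reading in (70.0, 87.5, 92.0, 98.0):
    act = thermal_action(reading)
    print(f"{reading:5.1f} C -> refresh x{act.refresh_multiplier}, cap {act.bandwidth_cap:.0%}")
```

A table-driven design keeps the policy easy to audit and tune per platform; a predictive variant could feed a forecast temperature into the same lookup instead of the current reading.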

Material science innovations are also contributing to thermal management improvements. New ceramic-based substrates with superior thermal conductivity are replacing traditional PCB materials in premium DDR5 modules. Carbon nanotube-enhanced thermal interface materials demonstrate up to 60% better heat transfer efficiency compared to conventional compounds, while maintaining the necessary electrical isolation properties.
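
The payoff from a better thermal interface material can be estimated with a series thermal-resistance model, in which the temperature rise above ambient equals power times the sum of the resistances along the heat path. The sketch below uses hypothetical values for DRAM package power and each resistance, and models "60% better heat transfer" as the interface resistance shrinking by a factor of 1.6; the numbers are placeholders, not measurements.

```python
def dram_temperature(ambient_c: float, power_w: float, resistances_c_per_w: list) -> float:
    """Steady-state temperature for heat flowing through resistances in series."""
    return ambient_c + power_w * sum(resistances_c_per_w)

# Hypothetical per-package values (degrees C per watt).
R_TIM = 4.0       # DRAM package to heat spreader, conventional compound
R_SPREADER = 1.0  # conduction through the spreader
R_AIR = 8.0       # spreader surface to airflow

AMBIENT_C = 45.0  # hypothetical inlet air temperature near the DIMM
POWER_W = 0.5     # hypothetical power of one DRAM package under load

baseline = dram_temperature(AMBIENT_C, POWER_W, [R_TIM, R_SPREADER, R_AIR])
improved = dram_temperature(AMBIENT_C, POWER_W, [R_TIM / 1.6, R_SPREADER, R_AIR])
print(f"baseline {baseline:.2f} C, improved TIM {improved:.2f} C")
```

With these placeholder numbers the package runs 0.75°C cooler. Because the air-side resistance dominates the stack in this example, the interface improvement matters less than its headline percentage suggests, which is why airflow and liquid cooling remain central.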

Advanced cooling technologies are emerging as essential components for high-performance DDR5 implementations. Passive cooling solutions utilizing aluminum and copper heat spreaders with optimized fin designs remain the foundation of memory thermal management. These solutions have evolved to incorporate phase-change thermal interface materials that significantly improve heat transfer efficiency between memory chips and heat dissipation components.

Active cooling approaches are gaining prominence in data center environments where memory density and utilization rates are exceptionally high. Direct airflow optimization techniques employ computational fluid dynamics to create targeted cooling channels across memory modules. Some advanced systems now incorporate micro-fans integrated directly into DIMM designs, providing localized cooling precisely where thermal hotspots develop.

Liquid cooling solutions represent the cutting edge for extreme performance scenarios. Cold plate technologies that directly contact memory modules can reduce operating temperatures by 15-20°C compared to conventional air cooling. Immersion cooling, while more complex to implement, offers even greater thermal efficiency by submerging entire server components in dielectric fluid, enabling sustained operation at maximum DDR5 transfer rates.

Thermal monitoring and dynamic management systems have become increasingly sophisticated, with embedded temperature sensors providing real-time data to memory controllers. This enables intelligent frequency scaling and refresh rate adjustments based on thermal conditions, maintaining optimal performance while preventing overheating. Some advanced implementations now incorporate predictive thermal modeling that anticipates workload-induced temperature spikes before they occur.


Power Efficiency Considerations for DDR5 Deployment

Power efficiency has emerged as a critical factor in DDR5 deployment within cloud infrastructure, directly affecting both operational costs and achievable performance. DDR5 modules deliver significantly higher data transfer rates than previous generations, but sustaining those rates makes power harder to manage. A typical DDR5 module operates at 1.1V compared to DDR4's 1.2V, a nominal voltage reduction of about 8%, yet the higher frequencies and the doubled number of bank groups create new power management complexities.
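The voltage figures above can be checked with the standard first-order CMOS relation for dynamic power, P ≈ C·V²·f. The sketch assumes the switched capacitance C is unchanged between generations and uses data rate as a stand-in for toggle frequency; both are simplifications. It shows why an 8% voltage cut does not settle the power question: energy per transition falls by about 16%, but moving from 3200 to 4800 MT/s more than offsets that at full activity.

```python
# First-order CMOS dynamic power scaling: P_dyn ~ C * V^2 * f.
# Assumption: switched capacitance C is identical for both generations.

def voltage_reduction(v_old: float, v_new: float) -> float:
    """Fractional reduction in supply voltage."""
    return (v_old - v_new) / v_old


def dynamic_power_ratio(v_old: float, v_new: float, f_old: float, f_new: float) -> float:
    """Ratio P_new / P_old with capacitance held constant."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


print(f"{voltage_reduction(1.2, 1.1):.1%}")                # 8.3%
print(f"{dynamic_power_ratio(1.2, 1.1, 1.0, 1.0):.3f}")    # 0.840 (energy per transition)
print(f"{dynamic_power_ratio(1.2, 1.1, 3200, 4800):.3f}")  # 1.260 (DDR4-3200 -> DDR5-4800)
```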

Advanced power management features in DDR5 include on-module voltage regulation: a power management IC (PMIC) on the DIMM itself takes over voltage regulation from the motherboard. This architectural change enables more precise voltage control and reduces losses in the voltage conversion process. Multiple power-saving states (PSx) let memory adjust its power consumption dynamically to workload demand, significantly reducing idle power consumption in large-scale deployments.
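Choosing among low-power states is essentially a break-even calculation: a deeper state saves more power while resident but costs more energy and time to enter and exit. The sketch below picks the lowest-energy state for an expected idle window, subject to a wake-up latency budget. The state names, power figures, transition energies, and exit latencies are hypothetical placeholders, not values from the DDR5 specification or any vendor datasheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerState:
    name: str
    power_mw: float         # hypothetical power while resident in the state
    transition_uj: float    # hypothetical energy to enter and exit the state
    exit_latency_ns: float  # hypothetical wake-up latency


# Illustrative numbers only; real values come from vendor datasheets.
STATES = [
    PowerState("active-idle", 400.0, 0.0, 0.0),
    PowerState("power-down", 150.0, 0.5, 30.0),
    PowerState("self-refresh", 40.0, 5.0, 700.0),
]


def pick_state(idle_us: float, max_exit_latency_ns: float) -> PowerState:
    """Choose the state with the lowest energy over the expected idle window
    whose wake-up latency still fits the workload's latency budget."""
    def energy_uj(s: PowerState) -> float:
        # mW * us = nJ; divide by 1000 to convert to uJ.
        return s.power_mw * idle_us / 1000.0 + s.transition_uj

    allowed = [s for s in STATES if s.exit_latency_ns <= max_exit_latency_ns]
    return min(allowed, key=energy_uj)


for idle in (1.0, 10.0, 100.0):
    print(idle, pick_state(idle, max_exit_latency_ns=1000.0).name)
# 1.0 active-idle
# 10.0 power-down
# 100.0 self-refresh
```

Tightening the latency budget removes deep states from consideration, so latency-sensitive tenants trade some idle power for faster wake-up.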

Decision feedback equalization (DFE) and adaptive voltage scaling further optimize power efficiency. DFE is primarily a signal-integrity mechanism: the DDR5 receiver subtracts the inter-symbol interference predicted from previously decided bits, which keeps high transfer rates reliable, while adaptive voltage scaling tunes operating voltages at runtime. Together these techniques are reported to reduce power consumption by 15-20% during active operation while maintaining data integrity at high transfer rates. Additionally, DDR5's improved refresh management mechanisms reduce the impact of power-intensive refresh operations without compromising data retention.
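To make the DFE mechanism concrete, here is a toy two-tap decision feedback equalizer on a binary (+1/-1) signal. It assumes a noise-free channel whose post-cursor coefficients are known exactly; a real DDR5 receiver trains its tap weights and works on analog samples, so this illustrates the principle rather than the receiver design. The key property is that the equalizer subtracts interference estimated from past decisions, not from past raw samples.

```python
def channel_with_isi(symbols: list[int], post_cursors: list[float]) -> list[float]:
    """Toy channel: each received sample picks up a weighted fraction of the
    preceding symbols (post-cursor inter-symbol interference)."""
    received = []
    for n, s in enumerate(symbols):
        isi = sum(h * symbols[n - 1 - k]
                  for k, h in enumerate(post_cursors) if n - 1 - k >= 0)
        received.append(s + isi)
    return received


def slicer(samples: list[float]) -> list[int]:
    """Plain threshold detector with no equalization."""
    return [1 if x >= 0 else -1 for x in samples]


def dfe(samples: list[float], taps: list[float]) -> list[int]:
    """Decision feedback equalizer: subtract ISI estimated from previous
    decisions, then slice the corrected sample to +1/-1."""
    decisions: list[int] = []
    for x in samples:
        isi = sum(t * decisions[-1 - k]
                  for k, t in enumerate(taps) if k < len(decisions))
        decisions.append(1 if x - isi >= 0 else -1)
    return decisions


sent = [1, 1, -1, -1, 1, -1, 1, 1, -1]
rx = channel_with_isi(sent, [0.6, 0.5])  # hypothetical channel response
print("slicer errors:", sum(a != b for a, b in zip(slicer(rx), sent)))       # 3
print("DFE errors:   ", sum(a != b for a, b in zip(dfe(rx, [0.6, 0.5]), sent)))  # 0
```

Because the feedback uses decisions, a single wrong decision can propagate into the next few corrections; this error propagation is the classic trade-off of DFE.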

Cloud infrastructure providers implementing DDR5 must consider the thermal design power (TDP) implications, as higher data rates generate more heat. Enhanced cooling solutions or reduced DIMM density may be necessary in high-performance environments to prevent thermal throttling, which would otherwise negate the performance benefits. Power capping technologies at both the hardware and software levels provide mechanisms to balance performance needs against power constraints.
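One software-level capping strategy is to throttle memory bandwidth until estimated power fits the budget. The sketch inverts a deliberately simple, hypothetical linear power model (a fixed idle power per DIMM plus a term proportional to data rate and bandwidth utilization) to find the highest utilization allowed under a cap. Real platforms rely on measured or vendor-characterized power models; the coefficients here are placeholders.

```python
def estimated_power_w(dimms: int, rate_mts: int, utilization: float,
                      idle_w_per_dimm: float = 3.0, w_per_gts: float = 1.5) -> float:
    """Hypothetical linear model: idle power plus a term that grows with
    data rate (in GT/s) and bandwidth utilization (0..1)."""
    return dimms * (idle_w_per_dimm + w_per_gts * (rate_mts / 1000) * utilization)


def max_utilization_under_cap(cap_w: float, dimms: int, rate_mts: int,
                              idle_w_per_dimm: float = 3.0, w_per_gts: float = 1.5) -> float:
    """Invert the model above: the highest utilization whose estimated power
    stays within cap_w. Returns 0.0 if the cap is below the idle floor."""
    headroom_per_dimm = cap_w / dimms - idle_w_per_dimm
    if headroom_per_dimm <= 0:
        return 0.0
    return min(1.0, headroom_per_dimm / (w_per_gts * rate_mts / 1000))


# Example: 8 DDR5-6400 DIMMs under a hypothetical 80 W memory power budget.
limit = max_utilization_under_cap(80.0, 8, 6400)
print(f"throttle to {limit:.1%} of peak bandwidth")                   # 72.9%
print(f"estimated power: {estimated_power_w(8, 6400, limit):.1f} W")  # 80.0 W
```

When the cap falls below the idle floor, throttling alone cannot help; that is where reduced DIMM density or additional cooling and power provisioning come in.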

Total cost of ownership (TCO) analyses suggest that, despite higher initial acquisition costs, DDR5's improved power efficiency can yield significant operational savings in large-scale deployments. Reported estimates put the potential energy savings at 25-30% per data transaction compared to DDR4 at equivalent workloads, with the largest gains in read-intensive applications typical of cloud database operations.
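To see how a per-transaction saving turns into an annual figure, the calculation below multiplies transaction rate, baseline energy per transaction, and the savings fraction over a year, then scales by power usage effectiveness (PUE) and an electricity price. The 25% savings fraction is the low end of the range quoted above; the transaction rate, energy per transaction, PUE, and price are hypothetical inputs.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
JOULES_PER_KWH = 3.6e6


def annual_memory_savings(tx_per_sec: float, ddr4_joules_per_tx: float,
                          savings_fraction: float, pue: float,
                          price_per_kwh: float) -> tuple[float, float]:
    """Return (kWh saved at the facility level, cost saved) per year.
    PUE scales IT energy up to total facility energy (cooling, distribution)."""
    it_joules_saved = tx_per_sec * SECONDS_PER_YEAR * ddr4_joules_per_tx * savings_fraction
    facility_kwh = it_joules_saved * pue / JOULES_PER_KWH
    return facility_kwh, facility_kwh * price_per_kwh


# Hypothetical fleet: 1e12 memory transactions/s at 2 nJ each on DDR4.
kwh, cost = annual_memory_savings(1e12, 2e-9, 0.25, pue=1.4, price_per_kwh=0.10)
print(f"{kwh:,.0f} kWh/year, about ${cost:,.0f}/year")  # 6,132 kWh/year, about $613/year
```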

For optimal deployment, cloud infrastructure architects should adopt DDR5 in stages, prioritizing workloads with high memory bandwidth requirements and sensitivity to latency under load. Power monitoring and management systems should be upgraded to take full advantage of DDR5's granular power control capabilities, so that efficiency gains translate into operational cost reductions while supporting higher data transfer rates.
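A staged rollout needs a way to rank workloads. One simple approach, assumed here rather than taken from the text, compares each workload's measured per-channel bandwidth demand with the theoretical peak of the DDR4 channel it runs on today. Peak bandwidth is data rate times bus width: a DDR5 DIMM's two 32-bit subchannels give 8 data bytes per transfer (excluding ECC bits), the same 64-bit width as a DDR4 channel. The workload names, demand figures, and the 60% threshold are hypothetical.

```python
def peak_gb_per_s(rate_mts: int, data_bits: int) -> float:
    """Theoretical peak bandwidth: transfers per second times bytes per transfer."""
    return rate_mts * (data_bits / 8) / 1000


DDR4_3200 = peak_gb_per_s(3200, 64)        # 25.6 GB/s per channel
DDR5_4800 = peak_gb_per_s(4800, 64)        # 38.4 GB/s per DIMM
DDR5_SUBCHANNEL = peak_gb_per_s(4800, 32)  # 19.2 GB/s per 32-bit subchannel


def ddr5_candidates(demand_gb_per_s: dict[str, float], baseline_peak: float,
                    threshold: float = 0.6) -> list[str]:
    """Workloads whose sustained demand is at least `threshold` of the
    baseline channel peak, most bandwidth-bound first (hypothetical threshold)."""
    ratio = {name: bw / baseline_peak for name, bw in demand_gb_per_s.items()}
    hungry = [name for name, r in ratio.items() if r >= threshold]
    return sorted(hungry, key=lambda name: ratio[name], reverse=True)


measured = {"analytics": 22.0, "web-frontend": 4.0, "in-memory-db": 18.0, "ci-runners": 9.0}
print(ddr5_candidates(measured, DDR4_3200))  # ['analytics', 'in-memory-db']
```

Workloads well below the threshold gain little from extra bandwidth and can stay on DDR4 until later phases.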

