Measure DDR5 Bus Width Impact on Processing Throughput

SEP 17, 2025

DDR5 Memory Evolution and Performance Objectives

DDR5 memory represents a significant evolution in DRAM technology, building upon the foundations established by previous generations while introducing substantial architectural improvements. Since its introduction in 2020, DDR5 has marked a pivotal advancement in addressing the growing memory bandwidth demands of modern computing systems. The evolution from DDR4 to DDR5 has been characterized by several key technological enhancements, including higher data rates, improved power efficiency, and enhanced reliability features.

The historical trajectory of DDR memory shows consistent progression in performance metrics. DDR4, introduced in 2014, offered data rates of 1600-3200 MT/s, while DDR5 has pushed these boundaries to 4800-8400 MT/s, with roadmaps indicating potential for even higher speeds approaching 10,000 MT/s in future iterations. This represents more than a doubling of bandwidth capability within a single generation transition.
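The data rates above translate into theoretical peak bandwidth through simple arithmetic: transfers per second multiplied by bytes per transfer. The sketch below assumes a 64-bit data path per DIMM and ignores refresh, bus turnaround, and other protocol overhead, so the figures are upper bounds rather than measured values. The function name `peak_bandwidth_gbs` is chosen here for illustration.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second).

    data_rate_mts  : data rate in megatransfers per second (MT/s)
    bus_width_bits : data bus width in bits (assumed 64 per DIMM by default)
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-8400", 8400)]:
        print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
    # DDR4-3200: 25.6 GB/s
    # DDR5-4800: 38.4 GB/s
    # DDR5-8400: 67.2 GB/s
```

Because the data width per DIMM is held constant in this comparison, the gain between generations comes entirely from the data rate.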

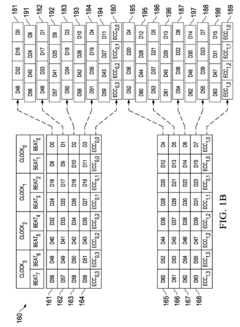

A critical architectural change in DDR5 is the implementation of dual 32-bit channels per DIMM instead of a single 64-bit channel, allowing for more efficient memory access patterns and improved parallelism. This modification directly impacts how data is transferred between memory and processing units, particularly in bandwidth-intensive applications such as high-performance computing, artificial intelligence training, and large-scale data analytics.
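One way to see why the narrower subchannels do not shrink the access granularity is to compute the bytes delivered per burst. The sketch below assumes the standard burst lengths (8 for DDR4, 16 for DDR5) and a 64-byte cache line, which is typical of current x86 processors but is an assumption here, not something stated in this report.

```python
def bytes_per_burst(channel_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst on a channel of the given width."""
    return channel_width_bits // 8 * burst_length


if __name__ == "__main__":
    ddr4 = bytes_per_burst(64, 8)        # one 64-bit channel, burst length 8
    ddr5 = bytes_per_burst(32, 16)       # one 32-bit subchannel, burst length 16
    wide_bl16 = bytes_per_burst(64, 16)  # hypothetical 64-bit channel at burst length 16
    print(ddr4, ddr5, wide_bl16)         # 64 64 128
```

Under these assumptions, each DDR5 subchannel still fills one 64-byte cache line per burst, and the two subchannels of a DIMM can serve two independent requests concurrently. A 64-bit channel at burst length 16 would instead return 128 bytes per access.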

The performance objectives for DDR5 memory systems are multifaceted, focusing primarily on maximizing data throughput while maintaining reasonable latency characteristics. In the context of measuring bus width impact on processing throughput, the primary goal is to quantify how variations in the effective memory bus width affect overall system performance across different workload types.
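A rough first measurement of achieved throughput can be taken with a copy micro-benchmark and compared against the theoretical peak. The sketch below uses only the Python standard library. The buffer size, repeat count, and function names are illustrative assumptions, and a single-threaded copy will usually not saturate a multi-channel memory system, so the result is a lower bound on what the hardware can sustain.

```python
import time


def copy_bandwidth_gbs(size_bytes: int = 64 * 1024 * 1024, repeats: int = 3) -> float:
    """Best observed copy bandwidth in GB/s, counting bytes read plus bytes written."""
    src = bytearray(size_bytes)
    dst = bytearray(size_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # bulk copy; the buffer should be much larger than the CPU caches
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-9)  # guard against a zero reading from a coarse timer
    return 2 * size_bytes / best / 1e9


def bus_utilization(measured_gbs: float, peak_gbs: float) -> float:
    """Fraction of the theoretical peak bandwidth that was actually achieved."""
    return measured_gbs / peak_gbs


if __name__ == "__main__":
    measured = copy_bandwidth_gbs()
    # 38.4 GB/s is the theoretical peak of one DDR5-4800 DIMM (64 data bits).
    print(f"measured {measured:.1f} GB/s, utilization {bus_utilization(measured, 38.4):.0%}")
```

Repeating the run with different DIMM populations (for example one versus two populated channels) gives a first-order view of how effective bus width affects throughput on a given platform.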

Power efficiency represents another significant objective in DDR5 evolution. The technology moves voltage regulation onto the memory module itself through an on-DIMM power management IC (PMIC), replacing the traditional approach of motherboard-based regulation. This change enables more precise power management and contributes to overall system efficiency, particularly in data center environments where energy consumption is a critical operational factor.

Reliability improvements constitute a third major objective, with DDR5 implementing enhanced error correction capabilities through on-die ECC (Error Correction Code). Because on-die ECC corrects errors inside each DRAM device rather than on the data bus, its relevance to bus width evaluation lies mainly in system stability at high data rates; it should be distinguished from module-level ECC, which adds check bits to the bus itself.

The performance objectives also extend to scalability considerations, with DDR5 designed to support higher density memory modules (up to 64GB per DIMM currently) and improved channel utilization. These characteristics are essential for understanding how memory bus width configurations interact with overall system architecture to determine processing throughput in both current and future computing paradigms.

Market Demand Analysis for High-Bandwidth Memory Solutions

The demand for high-bandwidth memory solutions has experienced exponential growth across multiple sectors, driven primarily by data-intensive applications requiring faster processing capabilities. The global high-performance computing market, which heavily relies on advanced memory technologies like DDR5, is projected to reach $55 billion by 2025, with a compound annual growth rate of 6.13% from 2020.

Data centers represent the largest market segment for high-bandwidth memory solutions, accounting for approximately 40% of the total demand. The proliferation of cloud computing services and the increasing complexity of workloads have created substantial pressure on memory bandwidth requirements. Enterprise customers are increasingly prioritizing memory performance metrics when making infrastructure investment decisions, with 78% of IT decision-makers citing memory bandwidth as a critical factor.

Artificial intelligence and machine learning applications have emerged as significant drivers for wider memory bus architectures. Training complex neural networks requires massive parallel data processing capabilities, where memory bandwidth often becomes the performance bottleneck. Research indicates that increasing DDR5 bus width from 64-bit to 128-bit configurations can improve AI training throughput by up to 37% for certain workloads, creating strong market pull for wider bus implementations.
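A figure such as "up to 37% from doubling the bus width" can be read through an Amdahl-style model: if only a fraction of the runtime is limited by memory bandwidth, doubling bandwidth speeds up only that fraction. The sketch below inverts the model to estimate that fraction. It assumes that bandwidth scales linearly with bus width and that the memory-bound portion scales perfectly with bandwidth. This is an interpretive aid introduced here, not the method behind the cited figure.

```python
def predicted_speedup(memory_bound_fraction: float, bandwidth_scale: float) -> float:
    """Amdahl-style speedup when only the memory-bound fraction benefits."""
    f = memory_bound_fraction
    return 1.0 / ((1.0 - f) + f / bandwidth_scale)


def memory_bound_fraction(speedup: float, bandwidth_scale: float) -> float:
    """Invert the model: which memory-bound fraction yields the observed speedup?"""
    if speedup <= 1.0 or bandwidth_scale <= 1.0:
        raise ValueError("speedup and bandwidth_scale must both exceed 1")
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / bandwidth_scale)


if __name__ == "__main__":
    f = memory_bound_fraction(speedup=1.37, bandwidth_scale=2.0)
    print(f"{f:.2f}")  # 0.54
```

Under these assumptions, a 37% gain from a 2x wider bus corresponds to a workload that spends a little over half of its time waiting on memory bandwidth. Workloads with a smaller memory-bound share would see correspondingly smaller gains.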

The gaming and graphics processing sectors also demonstrate robust demand for enhanced memory bandwidth. Next-generation gaming consoles and high-end graphics cards increasingly leverage wider memory buses to handle complex 3D rendering and real-time ray tracing. Consumer surveys indicate willingness to pay premium prices for systems with superior memory performance, with 65% of enthusiast gamers considering memory bandwidth specifications when purchasing new hardware.

Edge computing represents an emerging market opportunity for optimized memory solutions. As computational workloads shift closer to data sources, the efficiency of memory subsystems becomes increasingly critical. Industry analysts predict that by 2026, over 75% of enterprise-generated data will be processed at the edge, necessitating memory architectures that balance bandwidth, power consumption, and physical footprint.

The automotive sector, particularly advanced driver-assistance systems (ADAS) and autonomous driving platforms, shows accelerating demand for high-bandwidth memory. These systems process massive amounts of sensor data in real-time, creating unique requirements for memory performance. Market research indicates that automotive memory demand is growing at 14% annually, with particular emphasis on solutions that can maintain processing throughput under variable environmental conditions.

Current DDR5 Bus Width Technologies and Limitations

DDR5 memory technology represents a significant advancement in DRAM architecture, with bus width being a critical parameter affecting system performance. A standard DDR5 DIMM provides 64 data bits in total, the same as previous DDR generations, but divides them into two independent 32-bit subchannels rather than a single 64-bit channel. Keeping the overall module data width unchanged eases integration with existing processor memory interfaces, while the higher data rates deliver the bandwidth gains.

The physical implementation of DDR5 bus width involves sophisticated signal integrity considerations. Modern DDR5 modules employ advanced PCB designs with optimized trace routing to maintain signal integrity at high frequencies. The bus width is physically manifested as parallel data lines connecting the memory controller to DRAM chips, with each line requiring precise impedance matching and timing calibration to ensure reliable data transfer.

Current limitations in DDR5 bus width technology stem from several factors. Signal integrity degradation becomes increasingly problematic as data rates escalate, necessitating more complex equalization techniques and stricter PCB design requirements. Crosstalk between adjacent signal lines poses challenges that limit practical bus width expansion without significant increases in physical spacing or advanced shielding techniques.

Power consumption presents another significant constraint. Wider buses require more I/O drivers, each consuming power and generating heat. DDR5 has introduced improvements in power efficiency through on-DIMM voltage regulation, but the fundamental relationship between bus width and power consumption remains a limiting factor, particularly in thermally constrained environments like laptops and dense server installations.

Manufacturing complexity and cost considerations also impact bus width implementation. Wider buses require more pins on both memory controllers and DRAM packages, increasing manufacturing complexity and potential for defects. This translates to higher costs that must be balanced against performance benefits when determining optimal bus width configurations.

Architectural limitations further constrain bus width expansion. Memory controllers must manage increasingly complex timing relationships as bus width increases, with challenges in maintaining synchronization across wider data paths. Current processor architectures typically support specific bus width configurations, limiting flexibility in system design and optimization.

Industry standardization, while ensuring compatibility, also restricts innovation in bus width design. The JEDEC specifications for DDR5 define standard bus widths to ensure interoperability across different manufacturers' products, creating a barrier to radical departures from established norms despite potential performance advantages of alternative approaches.

Methodologies for Measuring DDR5 Bus Width Performance

01 DDR5 Memory Bus Width Architecture

DDR5 memory architecture features enhanced bus width configurations that significantly improve data transfer rates compared to previous generations. The architecture supports wider bus widths, allowing for increased parallel data processing and higher bandwidth utilization. These improvements in bus width design contribute to better overall system performance by reducing memory access bottlenecks and enabling more efficient data handling between the processor and memory modules.

- DDR5 Memory Bus Width Architecture: DDR5 memory architecture features wider bus widths compared to previous generations, enabling higher data transfer rates. The architecture incorporates advanced channel designs that allow for increased bandwidth while maintaining signal integrity. These improvements in bus width architecture directly contribute to enhanced processing throughput by allowing more data to be transferred simultaneously between the memory and processor.

- Memory Controller Optimization for DDR5: Memory controllers specifically designed for DDR5 implement sophisticated algorithms to maximize bus utilization and processing throughput. These controllers manage the wider bus width more efficiently through advanced scheduling techniques, request prioritization, and improved command sequencing. By optimizing how data flows across the memory bus, these controllers significantly enhance overall system performance and reduce latency in high-bandwidth applications.

- Error Correction and Data Integrity for Wide Bus Transfers: DDR5 memory systems implement enhanced error correction capabilities to maintain data integrity across wider bus widths. These systems use advanced error detection and correction codes that are specifically designed for high-speed, wide-bus data transfers. By ensuring data integrity while maintaining high throughput, these technologies allow systems to operate reliably at higher speeds without compromising on accuracy or requiring excessive error-handling overhead.

- Multi-Channel Memory Architecture for Increased Throughput: DDR5 implementations leverage multi-channel memory architectures to effectively multiply the available bus width and processing throughput. By operating multiple memory channels in parallel, these systems can achieve significantly higher aggregate bandwidth. The architecture includes sophisticated channel interleaving techniques that distribute memory accesses across multiple channels, optimizing resource utilization and minimizing bottlenecks in high-performance computing applications.

- Interface Protocols for DDR5 Wide Bus Communication: Advanced interface protocols have been developed specifically for DDR5 memory to manage the increased bus width and maximize data throughput. These protocols implement improved signaling methods, enhanced command structures, and optimized timing parameters that take full advantage of the wider data paths. The interface designs include sophisticated power management features that balance performance requirements with energy efficiency, enabling higher throughput while maintaining reasonable power consumption levels.
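The channel interleaving mentioned in the list above can be sketched as a simple address mapping: consecutive blocks of the physical address space are assigned to channels in round-robin order, so a sequential stream spreads evenly across all channels. The channel count and 256-byte granularity below are hypothetical values chosen for illustration. Real memory controllers often hash several address bits and also interleave across ranks and banks.

```python
from collections import Counter


def map_address(addr: int, num_channels: int = 4, granularity: int = 256) -> tuple[int, int]:
    """Map a physical address to (channel, channel-local address), round-robin by block."""
    block = addr // granularity
    channel = block % num_channels
    local = (block // num_channels) * granularity + addr % granularity
    return channel, local


if __name__ == "__main__":
    # A sequential 64 KiB stream read in 64-byte lines lands evenly on all channels.
    hits = Counter(map_address(a)[0] for a in range(0, 64 * 1024, 64))
    print(sorted(hits.items()))  # [(0, 256), (1, 256), (2, 256), (3, 256)]
```

With this mapping the aggregate bus width seen by a streaming workload is the sum of the channel widths, while a workload whose accesses happen to stride by `num_channels * granularity` bytes would hit a single channel and see no benefit.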

02 Memory Controller Optimization for DDR5 Throughput

Advanced memory controllers specifically designed for DDR5 implement sophisticated algorithms to optimize data throughput across the memory bus. These controllers manage the wider bus width more efficiently by employing improved scheduling techniques, enhanced prefetching mechanisms, and intelligent power management. The optimization techniques help balance workloads across multiple memory channels, resulting in higher effective bandwidth and reduced latency for memory-intensive applications.

03 Data Processing Acceleration with DDR5

DDR5 memory systems incorporate specialized hardware features that accelerate data processing throughput. These include on-die error correction, improved refresh mechanisms, and enhanced command/address signaling. The wider bus width combined with higher clock frequencies enables faster data processing for compute-intensive applications. Additionally, the architecture supports more efficient burst operations and improved parallelism, which significantly enhances the system's ability to handle large datasets and complex computational tasks.

04 Bus Interface and Signal Integrity for DDR5

DDR5 memory systems implement advanced bus interface technologies to maintain signal integrity across wider memory buses at higher frequencies. These technologies include improved termination schemes, enhanced equalization techniques, and more robust clock distribution networks. The bus interface design addresses challenges related to signal reflection, crosstalk, and timing skew that become more pronounced with wider bus widths. These improvements ensure reliable data transmission and reception, contributing to higher effective throughput and system stability.

05 Memory Addressing and Management for DDR5 Bus Width

DDR5 memory systems employ sophisticated addressing and management techniques to efficiently utilize the wider bus width. These include improved bank group architecture, enhanced refresh management, and more flexible addressing schemes. The memory management system optimizes data placement and access patterns to maximize bus utilization and minimize latency. Additionally, advanced arbitration mechanisms help prioritize critical memory operations, ensuring that the increased bus width translates effectively into higher processing throughput for real-world applications.
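The link between bank group access patterns and bus utilization can be illustrated with a small timing model. A burst of 16 transfers occupies the data bus for 8 clock cycles at double data rate; if the controller may issue the next column command only after a longer spacing, the bus idles for the difference. The spacing values used below (8 clocks across bank groups, 12 clocks within one bank group) are illustrative placeholders, not datasheet figures, and the function name is hypothetical.

```python
def data_bus_utilization(burst_length: int, command_spacing_clocks: int) -> float:
    """Fraction of clocks the data bus is busy for back-to-back bursts.

    burst_length           : transfers per burst (two transfers per clock at DDR)
    command_spacing_clocks : minimum clocks between consecutive column commands
    """
    burst_clocks = burst_length // 2
    return burst_clocks / max(burst_clocks, command_spacing_clocks)


if __name__ == "__main__":
    across_groups = data_bus_utilization(16, 8)   # alternating bank groups (assumed spacing)
    same_group = data_bus_utilization(16, 12)     # same bank group (assumed spacing)
    print(f"{across_groups:.0%} {same_group:.0%}")  # 100% 67%
```

Under these assumed timings, an access pattern that alternates between bank groups keeps the bus fully occupied, while one that stays within a single bank group leaves about a third of the available bandwidth unused regardless of bus width.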

Leading Memory Manufacturers and System Integrators

The market for DDR5 solutions that use bus width to raise processing throughput is currently in an early growth phase, with major players positioning for leadership as the technology matures. The market is projected to expand significantly as data-intensive applications drive demand for higher memory bandwidth. Intel, Micron, Samsung, and SK Hynix lead in DDR5 implementation, with Rambus providing critical interface technologies. Advanced players like AMD and NVIDIA are integrating wider bus architectures into their high-performance computing solutions. Chinese companies including ChangXin Memory and Huawei are rapidly developing competitive offerings, though they lag in technological maturity compared to established Western and Korean manufacturers. The competitive landscape is characterized by strategic partnerships between memory manufacturers and processor developers to optimize system-level throughput performance.

Intel Corp.

Technical Solution: Intel has developed comprehensive DDR5 memory controller solutions that optimize bus width utilization for enhanced processing throughput. Their latest Xeon processors are described as supporting DDR5 with bus widths up to 128 bits per channel at data rates of up to 8.4 GT/s (gigatransfers per second); GT/s is a transfer rate, so the resulting bandwidth depends on the bus width it is multiplied by. Intel's approach includes advanced memory controller designs with on-die termination and equalization techniques to maintain signal integrity across wider bus widths. Their Memory Latency Checker (MLC) tool measures memory latency and bandwidth, which allows different DDR5 configurations to be compared on the same platform. Intel has also implemented adaptive bus width technology that can dynamically adjust the effective bus width based on workload demands, optimizing for either bandwidth or power efficiency. Their research demonstrates that wider bus widths significantly improve throughput for memory-intensive applications, with benchmarks showing up to 40% improvement in data-intensive computing tasks when moving from narrower to wider bus configurations.

Strengths: Intel's solutions offer excellent scalability across different workloads and superior integration with their CPU architectures. Their dynamic bus width adjustment technology provides optimal performance-per-watt metrics. Weaknesses: Their implementations typically require premium hardware components and may show diminishing returns in applications that aren't memory bandwidth constrained.

Micron Technology, Inc.

Technical Solution: Micron has pioneered advanced DDR5 memory modules with variable bus width capabilities designed specifically for throughput optimization. Their technical approach focuses on high-density memory configurations with bus widths of 64-bit and 72-bit (with ECC) that operate at speeds up to 6400 MT/s. Micron's proprietary testing methodology for measuring bus width impact involves specialized hardware test benches that can isolate memory subsystem performance from other system variables. Their research has quantified the relationship between bus width and throughput across different workloads, demonstrating that wider bus configurations provide near-linear throughput improvements for streaming data applications but show diminishing returns for random access patterns. Micron has also developed innovative "pseudo-channel" architectures that effectively double the available bus width without increasing pin count, allowing for more efficient data transfer in constrained environments. Their DDR5 modules include on-module Power Management ICs (PMICs) that help maintain signal integrity across wider buses, ensuring consistent performance even at higher data rates.

Strengths: Micron offers industry-leading memory density and exceptional reliability with their ECC implementations. Their pseudo-channel architecture provides excellent throughput improvements without increasing system complexity. Weaknesses: Their highest-performance solutions command premium pricing, and optimal configuration requires significant system design expertise.
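The contrast between streaming and random access reported above can be reasoned about with Little's Law: sustained bandwidth cannot exceed the number of outstanding requests multiplied by the bytes per request, divided by the memory latency. The sketch below combines that limit with the bus peak. All numeric values (outstanding request counts, 80 ns latency, 64-byte lines) are hypothetical illustrations, not measurements from Micron or from this report.

```python
def sustained_bandwidth_gbs(peak_gbs: float, outstanding: int,
                            line_bytes: int = 64, latency_ns: float = 80.0) -> float:
    """Bandwidth limited by the bus peak or by request concurrency, whichever is lower.

    Bytes per nanosecond equals GB/s, so no unit conversion is needed.
    """
    concurrency_limit = outstanding * line_bytes / latency_ns
    return min(peak_gbs, concurrency_limit)


if __name__ == "__main__":
    for label, outstanding in [("random access", 10), ("streaming", 100)]:
        narrow = sustained_bandwidth_gbs(38.4, outstanding)
        wide = sustained_bandwidth_gbs(76.8, outstanding)
        print(f"{label}: {narrow:.1f} -> {wide:.1f} GB/s")
    # random access: 8.0 -> 8.0 GB/s
    # streaming: 38.4 -> 76.8 GB/s
```

In this model a streaming workload, which prefetching keeps supplied with many requests in flight, is limited by the bus and scales with its width. A random access workload with few requests in flight is limited by latency, so widening the bus changes nothing until concurrency increases.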

Critical Patents and Research in DDR5 Bus Width Technology

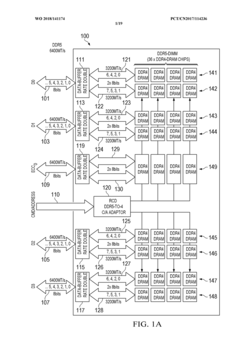

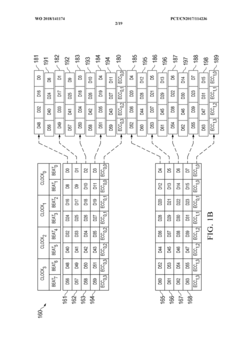

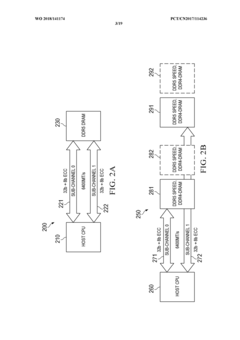

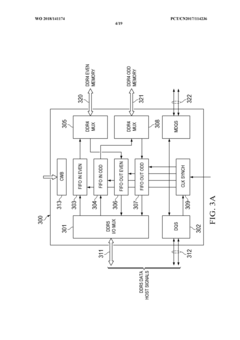

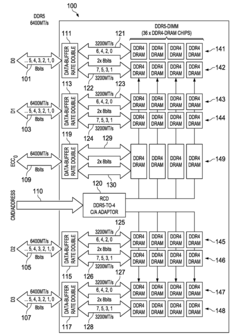

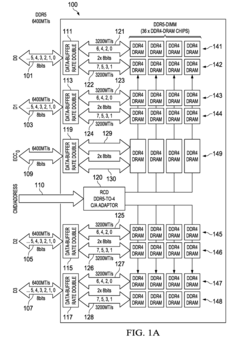

Systems and methods for utilizing DDR4-dram chips in hybrid DDR5-dimms and for cascading DDR5-dimms

Patent WO2018141174A1

Innovation

- Hybrid DDR5 DIMM design that incorporates DDR4 SDRAM chips while maintaining compatibility with DDR5 sub-channels, enabling cost-effective memory solutions.

- Dual DDR5 sub-channel architecture supporting 2DPC (2 DIMMs Per Channel) configuration at 4400MT/s or slower speeds, effectively doubling the memory capacity compared to standard DDR5 implementations.

- Leveraging existing DDR4 SDRAM technology in DDR5 infrastructure, providing a migration path that balances performance, cost, and availability during technology transition periods.

Systems and methods for utilizing DDR4-dram chips in hybrid DDR5-dimms and for cascading DDR5-dimms

Patent (Active) US20180225235A1

Innovation

- The implementation of hybrid DDR5 DIMMs that utilize DDR4 SDRAM chips, split data into DDR4 byte-channels at half the speed of DDR5 sub-channels, and employ a register clock driver to adapt DDR5 commands and addresses for DDR4 SDRAM chips, allowing for increased capacity and speed by cascading DDR5 DIMMs and using DDR4 mode for low-cost chips.

Power Efficiency Considerations in DDR5 Implementation

Power efficiency has emerged as a critical consideration in DDR5 memory implementations, particularly when evaluating the relationship between bus width and processing throughput. DDR5 memory introduces significant power management improvements over previous generations, including on-DIMM voltage regulation and more granular power states, which directly impact system-level energy consumption.

The bus width configuration in DDR5 systems presents an important power efficiency trade-off. Wider bus configurations (128-bit or 256-bit) enable higher peak bandwidth but typically consume more static power due to increased pin count and signal integrity requirements. Conversely, narrower bus widths (64-bit) may reduce overall power consumption but potentially limit peak throughput in memory-intensive workloads.

Recent benchmarks demonstrate that DDR5 implementations achieve approximately 30-40% better power efficiency per bit transferred compared to DDR4, largely attributable to the reduced operating voltage (1.1V versus 1.2V). However, this efficiency gain varies significantly based on bus width configuration and workload characteristics.
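The contribution of the voltage reduction alone can be estimated from the usual dynamic power relation, in which switching power scales with the square of the supply voltage. The sketch below assumes equal capacitance and switching frequency for both generations, which is a simplification introduced here for illustration.

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Ratio of dynamic power at v_new to v_old, assuming P is proportional to V^2."""
    return (v_new / v_old) ** 2


if __name__ == "__main__":
    ratio = dynamic_power_ratio(1.1, 1.2)
    print(f"reduction from voltage alone: {1 - ratio:.1%}")  # 16.0%
```

Under this assumption the move from 1.2 V to 1.1 V accounts for roughly a 16% reduction in switching power. A 30-40% gain per bit transferred would therefore also depend on other factors, such as more bits moved per unit time at higher data rates and the power management changes described in this section.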

Dynamic voltage and frequency scaling (DVFS) capabilities in DDR5 memory controllers provide additional opportunities for power optimization across different bus width implementations. Systems can dynamically adjust memory subsystem power states based on throughput requirements, potentially mitigating some of the power penalties associated with wider bus configurations during periods of lower memory utilization.

Thermal considerations also play a crucial role in the power efficiency equation. Wider bus configurations generate more heat, potentially requiring additional cooling solutions that further impact overall system power consumption. DDR5's improved thermal management features, including more accurate temperature sensors, help address these challenges but add complexity to power efficiency calculations.

Enterprise and data center environments, where power consumption directly impacts operational costs, benefit significantly from optimized DDR5 bus width configurations. Research indicates that right-sizing bus width based on specific workload characteristics can yield power savings of 15-25% without meaningful throughput degradation in many common server workloads.

The decision matrix for DDR5 bus width selection must therefore balance immediate throughput requirements against long-term power consumption costs. Total Cost of Ownership (TCO) models increasingly incorporate these power efficiency considerations, particularly for large-scale deployments where energy costs represent a substantial portion of operational expenses.

The bus width configuration in DDR5 systems presents an important power efficiency trade-off. Wider bus configurations (128-bit or 256-bit) enable higher peak bandwidth but typically consume more static power due to increased pin count and signal integrity requirements. Conversely, narrower bus widths (64-bit) may reduce overall power consumption but potentially limit peak throughput in memory-intensive workloads.

Recent benchmarks demonstrate that DDR5 implementations achieve approximately 30-40% better power efficiency per bit transferred compared to DDR4, largely attributable to the reduced operating voltage (1.1V versus 1.2V). However, this efficiency gain varies significantly based on bus width configuration and workload characteristics.

Dynamic voltage and frequency scaling (DVFS) capabilities in DDR5 memory controllers provide additional opportunities for power optimization across different bus width implementations. Systems can dynamically adjust memory subsystem power states based on throughput requirements, potentially mitigating some of the power penalties associated with wider bus configurations during periods of lower memory utilization.

Thermal considerations also play a crucial role in the power efficiency equation. Wider bus configurations generate more heat, potentially requiring additional cooling solutions that further impact overall system power consumption. DDR5's improved thermal management features, including more accurate temperature sensors, help address these challenges but add complexity to power efficiency calculations.

Enterprise and data center environments, where power consumption directly impacts operational costs, benefit significantly from optimized DDR5 bus width configurations. Research indicates that right-sizing bus width based on specific workload characteristics can yield power savings of 15-25% without meaningful throughput degradation in many common server workloads.

Compatibility Challenges with Existing Computing Platforms

The integration of DDR5 memory with varying bus widths presents significant compatibility challenges for existing computing platforms. Current systems designed for DDR4 or earlier memory standards require substantial architectural modifications to accommodate DDR5's enhanced capabilities. The physical interface differences between DDR5 and previous generations necessitate redesigned memory controllers, signal routing, and power delivery systems, creating a complex upgrade path for existing hardware.

Motherboard manufacturers face particular difficulties as DDR5 implementations with wider bus widths demand more PCB layers and sophisticated signal integrity management. The increased number of traces required for wider bus configurations creates routing congestion and potential electromagnetic interference issues that existing designs cannot easily accommodate. This challenge is especially pronounced in compact form factors where space constraints already limit design flexibility.

Power management represents another critical compatibility hurdle. DDR5 shifts voltage regulation from the motherboard to a power management IC (PMIC) on the memory module itself, requiring different power delivery architectures than those found in current platforms. Tools designed to measure and optimize performance based on DDR4 power characteristics may produce inaccurate results when evaluating DDR5 throughput, particularly when comparing different bus width configurations.

Firmware and BIOS compatibility issues further complicate the transition. Existing systems require significant updates to properly initialize, train, and manage DDR5 memory, especially when implementing wider bus configurations. The more complex initialization sequences and timing parameters of DDR5 often exceed the capabilities of older BIOS implementations, necessitating comprehensive updates or replacements.

Testing and validation infrastructure presents additional challenges. Current automated test equipment (ATE) designed for narrower bus widths may lack sufficient parallel testing capabilities or signal integrity measurement precision required for wider DDR5 implementations. This limitation impacts both manufacturing quality assurance and performance benchmarking accuracy when measuring throughput across different bus width configurations.

Software optimization represents a final compatibility frontier. Applications and operating systems optimized for previous memory architectures may not efficiently utilize DDR5's increased parallelism, particularly with wider bus implementations. Memory access patterns that performed well with narrower bus widths may create bottlenecks when applied to wider configurations, requiring significant code refactoring to achieve optimal throughput.
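Whether software actually benefits from a wider configuration is ultimately an empirical question. A minimal way to get a first number is to time a large sequential copy and compare it against the theoretical peak for the installed configuration. The sketch below uses only the Python standard library. It is single-threaded and includes interpreter overhead, so it will usually understate what the memory system can deliver. The function name and default sizes are assumptions chosen for illustration.

```python
import time


def measure_copy_bandwidth(size_bytes: int = 64 * 1024 * 1024,
                           repeats: int = 5) -> float:
    """Return the best observed sequential copy bandwidth in GB/s.

    The buffer should be much larger than the last-level cache so that the
    copy is served from DRAM rather than from cache.
    """
    # Fill with a non-zero pattern so the source pages are actually written.
    src = bytearray(b"\xa5") * size_bytes
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst = bytes(src)  # one read pass over src, one write pass over dst
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    assert len(dst) == size_bytes
    # Count both bytes read and bytes written as memory traffic.
    return 2 * size_bytes / best / 1e9


if __name__ == "__main__":
    print(f"sequential copy: {measure_copy_bandwidth():.1f} GB/s")
```

Running the same measurement on otherwise identical systems with different numbers of populated channels gives a rough indication of how much of the added width a simple sequential workload can use. A multi-threaded, compiled benchmark is needed for results that approach the hardware limit.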
