Neuromorphic AI Systems vs Traditional Systems: Utility Comparison

SEP 8, 2025 · 9 MIN READ

Neuromorphic AI Evolution and Objectives

Neuromorphic computing represents a paradigm shift in artificial intelligence, drawing inspiration from the structure and function of the human brain. This bio-inspired approach to computing has evolved significantly since its conceptual inception in the late 1980s with Carver Mead's pioneering work. The fundamental premise of neuromorphic systems lies in their ability to mimic neural networks through specialized hardware architectures that process information in a manner analogous to biological neural systems.

The evolution of neuromorphic AI has been marked by several key milestones. Initially, research focused on creating electronic circuits that could emulate basic neuronal functions. By the early 2000s, advancements in semiconductor technology enabled the development of more sophisticated neuromorphic chips capable of simulating larger neural networks. The last decade has witnessed exponential growth in this field, with major technological breakthroughs from both academic institutions and industry leaders.

Current neuromorphic systems represent a convergence of neuroscience, computer engineering, and materials science. These systems typically employ spiking neural networks (SNNs) that communicate through discrete events or "spikes" rather than continuous signals, closely resembling biological neural communication. This event-driven processing offers potential advantages in energy efficiency compared to traditional computing architectures that operate on a clock-based paradigm.
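To make the idea of spike-based communication concrete, here is a minimal sketch of a discrete-time leaky integrate-and-fire (LIF) neuron, one common textbook model of a spiking unit. The article does not name a specific neuron model, so the model choice, the function name `simulate_lif`, and the parameter values (`threshold`, `leak`, `v_reset`) are illustrative assumptions rather than a description of any particular chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of time steps at which the neuron emitted a spike.
    All parameter values are hypothetical and chosen only for illustration.
    """
    v = v_reset              # membrane potential
    spike_times = []
    for t, current in enumerate(input_current):
        v = leak * v + current   # decay the old potential, then add the input
        if v >= threshold:       # threshold crossed: emit a discrete event
            spike_times.append(t)
            v = v_reset          # reset after the spike
    return spike_times


inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.9, 0.0]
spikes = simulate_lif(inputs)
print(spikes)  # [2, 6]
```

The output is a short list of event times rather than a value at every step, which is the property that event-driven hardware can exploit: downstream work is only needed when a spike occurs.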

The primary objectives of neuromorphic AI development are multifaceted. First, these systems aim to achieve unprecedented energy efficiency by leveraging the brain's remarkable ability to perform complex computations with minimal power consumption. Second, they seek to enable real-time processing of sensory data, facilitating more natural human-machine interactions. Third, neuromorphic systems target improved adaptability and learning capabilities that more closely mirror biological neural networks.

Looking forward, the trajectory of neuromorphic computing points toward increasingly sophisticated systems capable of addressing complex cognitive tasks while maintaining energy efficiency. Researchers are actively exploring novel materials and architectures to overcome current limitations in scalability and integration with conventional computing systems. The ultimate goal remains the development of artificial intelligence systems that can approach the human brain's remarkable efficiency, adaptability, and cognitive capabilities.

As traditional AI systems face increasing challenges related to energy consumption and computational bottlenecks, neuromorphic approaches offer a promising alternative path. The comparative utility of these systems versus traditional approaches represents a critical area of investigation for the future of computing and artificial intelligence technologies.

Market Demand Analysis for Brain-Inspired Computing

The market for brain-inspired computing technologies has grown significantly in recent years, driven by increasing demand for more efficient AI processing. Current projections indicate the neuromorphic computing market will reach approximately $8.9 billion by 2025, with a compound annual growth rate exceeding 20% between 2020 and 2025. This growth trajectory reflects expanding recognition of the potential advantages of neuromorphic systems over traditional computing architectures.
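As a quick sanity check on the projection above, the compound annual growth rate formula can be inverted to see what 2020 market size the figures imply. This is only a sketch: the text says the rate "exceeds" 20%, so using exactly 20% gives an upper bound on the implied 2020 base, and the function names below are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def implied_start_value(end_value, rate, years):
    """Starting value that grows to end_value at the given annual rate."""
    return end_value / (1.0 + rate) ** years


# Figures from the text: $8.9 billion by 2025, CAGR of (at least) 20% over 2020-2025.
base_2020 = implied_start_value(8.9, 0.20, 5)
print(round(base_2020, 2))  # 3.58 (billion USD, an upper bound if the rate exceeds 20%)
```

A higher growth rate over the same period would imply a smaller 2020 base for the same 2025 figure.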

Primary market demand stems from applications requiring real-time processing of unstructured data with energy constraints. The autonomous vehicle sector represents a particularly promising market, where neuromorphic systems can process visual inputs and make decisions with lower latency and power consumption than conventional systems. Industry analysts estimate that implementing neuromorphic processors could reduce power requirements for certain AI functions in vehicles by up to 95% compared to GPU-based solutions.

Healthcare presents another substantial market opportunity, particularly in medical imaging analysis and brain-computer interfaces. The ability of neuromorphic systems to process sensory data efficiently aligns perfectly with requirements for portable medical devices and implantable neural interfaces. Market research indicates healthcare applications could constitute approximately 18% of the total neuromorphic computing market by 2025.

Edge computing applications represent perhaps the most immediate commercial opportunity. As IoT device deployments continue to accelerate, the need for on-device intelligence that operates within strict power budgets becomes increasingly critical. Industry surveys indicate that 73% of enterprises implementing edge AI cite power consumption as a primary concern, creating a natural fit for neuromorphic solutions that can deliver 50-100x improvements in energy efficiency for certain workloads.

The defense and security sectors also demonstrate strong demand signals, with government agencies investing substantially in neuromorphic research for applications ranging from autonomous drones to signal intelligence. Public records show defense-related neuromorphic computing research grants exceeding $450 million globally in 2021 alone.

Despite this promising outlook, market adoption faces challenges related to software ecosystem maturity and integration with existing AI workflows. Enterprise surveys indicate that 62% of potential adopters cite concerns about compatibility with existing machine learning frameworks as a barrier to implementation. This highlights the need for neuromorphic computing providers to develop robust software stacks and demonstrate clear migration paths from traditional computing paradigms.

Current Neuromorphic Technology Landscape and Challenges

The global neuromorphic computing landscape has witnessed significant advancements in recent years, with research institutions and technology companies making substantial investments in this emerging field. Current neuromorphic systems attempt to mimic the brain's neural architecture and information processing mechanisms, offering potential advantages in energy efficiency, parallel processing, and adaptive learning capabilities compared to traditional von Neumann computing architectures.

Major research initiatives such as the EU's Human Brain Project, along with chips such as IBM's TrueNorth and Intel's Loihi, have demonstrated promising results in neuromorphic hardware development. These systems implement neural networks directly in hardware, with architectures that incorporate spiking neurons and plastic synapses to process information in ways fundamentally different from conventional computing systems.

Despite these advancements, neuromorphic technology faces significant challenges that limit widespread adoption. The hardware implementation of neural networks remains complex and expensive, with current manufacturing processes not optimized for the unique requirements of neuromorphic architectures. Scaling these systems to match the capabilities of the human brain (with approximately 86 billion neurons and 100 trillion synapses) presents formidable engineering challenges.

Programming paradigms represent another substantial hurdle. Traditional software development approaches are ill-suited for neuromorphic systems, necessitating new programming models that can effectively leverage spike-based computation and temporal information processing. The lack of standardized development frameworks and tools further complicates the creation of applications for neuromorphic hardware.

Energy efficiency, while theoretically superior to traditional systems, has not yet reached its full potential in practical implementations. Current neuromorphic chips still consume more power than biological neural systems when performing comparable computational tasks, though they offer significant improvements over conventional AI accelerators for specific workloads.

The integration of neuromorphic systems with existing computing infrastructure presents additional challenges. Interface protocols, data formatting, and system compatibility issues must be addressed to enable seamless operation within current technology ecosystems. This integration challenge extends to software stacks and development environments that must bridge conventional and neuromorphic computing paradigms.

Geographically, neuromorphic research centers are concentrated in North America, Europe, and East Asia, with the United States, European Union, China, and Japan leading development efforts. This distribution reflects both the significant investment requirements and the strategic importance various nations place on advancing neuromorphic technology as a potential paradigm shift in computing architecture.

Current Neuromorphic vs Traditional AI Architectures

01 Neuromorphic Computing for Energy Efficiency

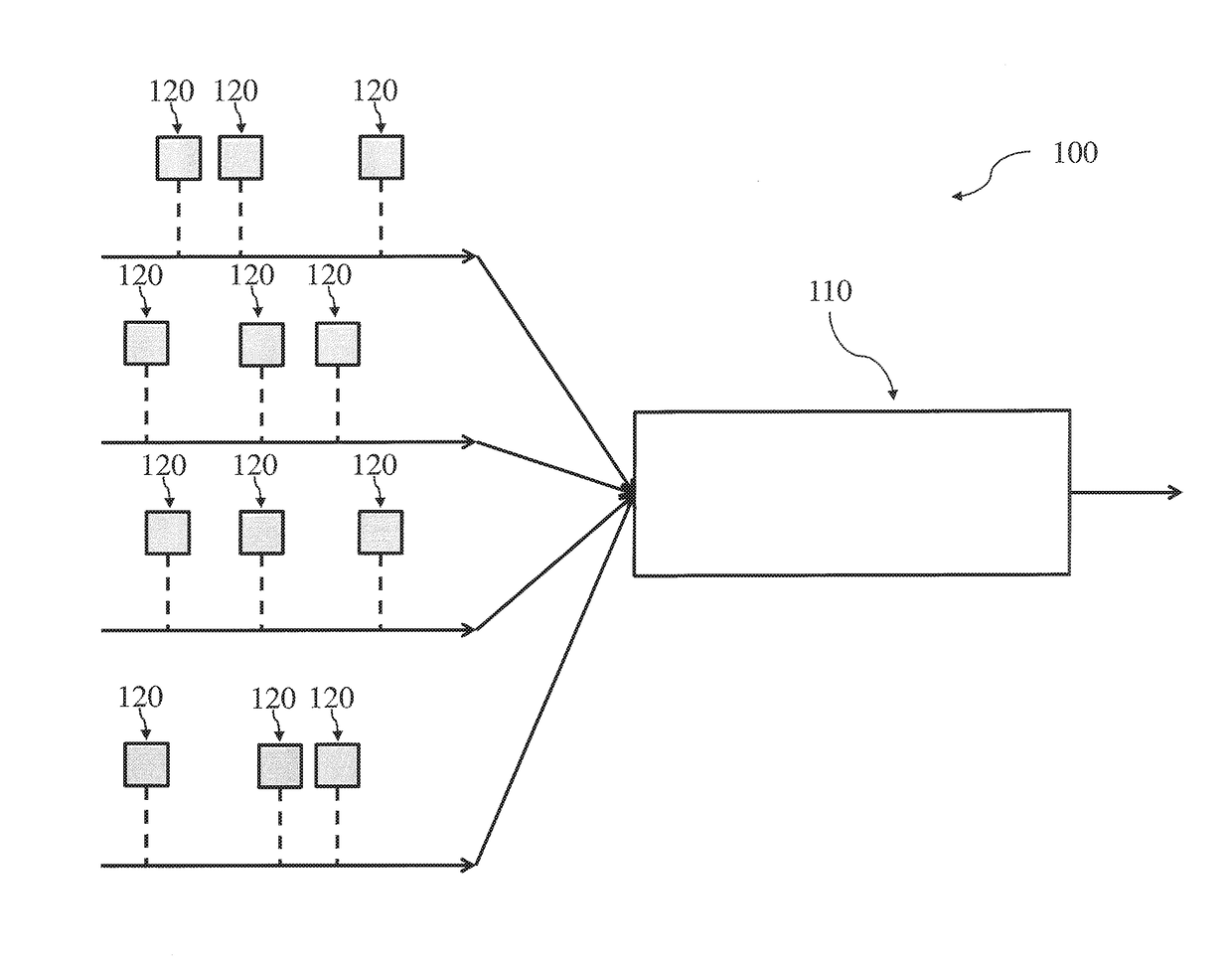

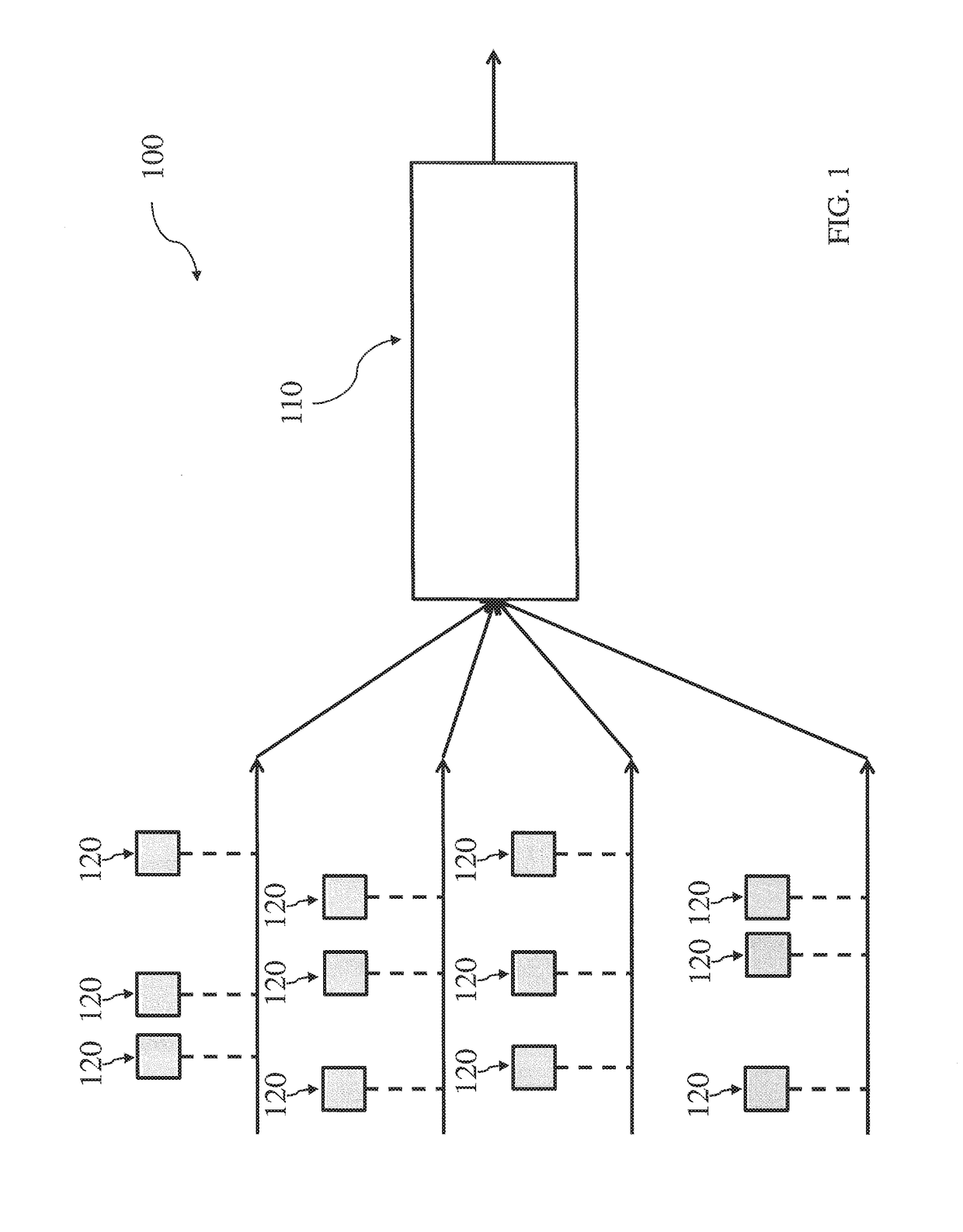

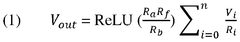

Neuromorphic AI systems are designed to mimic the structure and function of the human brain, offering significant advantages in energy efficiency compared to traditional computing architectures. These systems utilize specialized hardware that operates with lower power consumption while maintaining high computational capabilities, making them suitable for edge computing applications where energy resources are limited. The brain-inspired design allows for parallel processing and reduced energy requirements while performing complex AI tasks.

- Neuromorphic computing architectures for AI applications: Neuromorphic computing architectures mimic the structure and function of the human brain to enable more efficient AI processing. These systems utilize specialized hardware designs that incorporate neural networks with spiking neurons and synaptic connections. By emulating biological neural systems, these architectures can achieve significant improvements in energy efficiency, processing speed, and learning capabilities compared to traditional computing approaches for AI applications.

- Energy-efficient AI processing using neuromorphic systems: Neuromorphic AI systems offer substantial energy efficiency advantages over conventional computing architectures. By implementing event-driven processing and sparse activation patterns similar to biological neural networks, these systems can perform complex AI tasks while consuming significantly less power. This energy efficiency makes neuromorphic systems particularly valuable for edge computing applications, mobile devices, and other scenarios where power consumption is a critical constraint.

- Real-time learning and adaptation in neuromorphic systems: Neuromorphic AI systems can perform continuous learning and adaptation in real-time environments. Unlike traditional AI systems that require separate training and inference phases, these systems can modify their internal representations and processing based on incoming data streams. This capability enables applications in dynamic environments where conditions change rapidly and the system must adapt without explicit retraining, such as autonomous vehicles, robotics, and adaptive control systems.

- Sensory processing and pattern recognition applications: Neuromorphic systems excel at processing sensory data and performing pattern recognition tasks. Their architecture is particularly well-suited for processing visual, auditory, and other sensory inputs in ways that mimic biological perception. These systems can efficiently detect patterns, recognize objects, process natural language, and interpret complex sensory scenes with high accuracy while maintaining low power consumption, making them valuable for applications in computer vision, speech recognition, and multi-modal sensing.

- Integration of neuromorphic AI in autonomous systems and robotics: Neuromorphic AI systems provide significant utility in autonomous systems and robotics applications. Their ability to process sensory information efficiently, make decisions in real-time, and adapt to changing environments makes them ideal for controlling autonomous vehicles, drones, and robotic systems. The low latency and energy efficiency of neuromorphic processing enables faster response times and extended operational durations in mobile platforms, while their learning capabilities allow for improved autonomous navigation and interaction with complex environments.

02 Hardware Implementation of Spiking Neural Networks

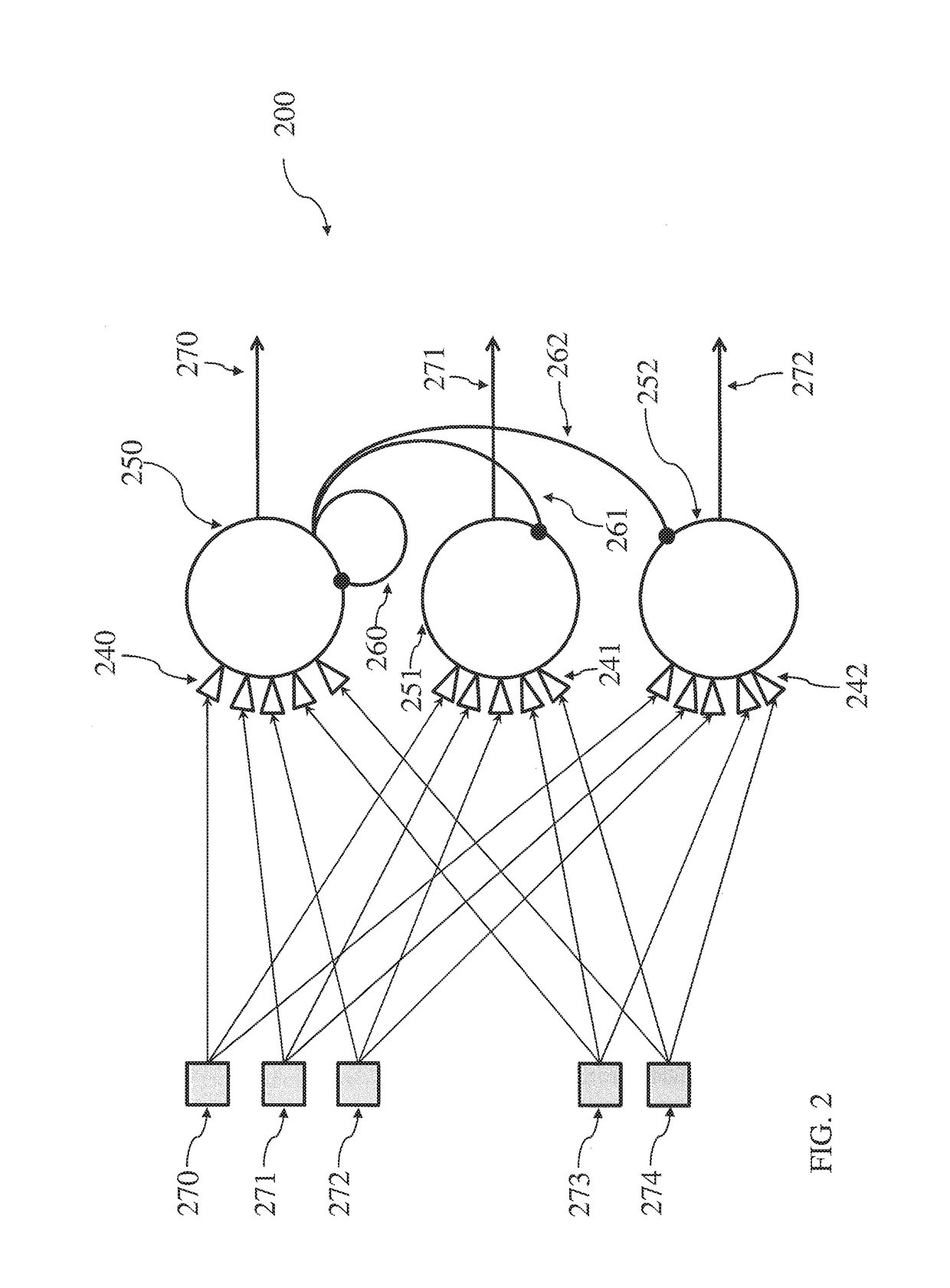

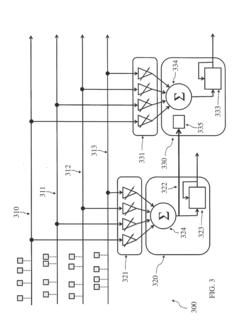

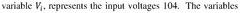

Neuromorphic systems implement spiking neural networks (SNNs) in hardware, enabling more efficient processing of temporal data patterns. These implementations use specialized circuits that process information through discrete spikes or events rather than continuous signals, similar to biological neurons. This approach allows for efficient event-driven computation where processing occurs only when needed, reducing computational overhead. Hardware implementations include memristor-based synapses, specialized neuromorphic chips, and integrated circuits designed specifically for neural processing.

03 Applications in Autonomous Systems and Robotics

Neuromorphic AI systems provide significant utility in autonomous systems and robotics by enabling real-time decision making with lower latency and power requirements. These systems can process sensory information from multiple sources simultaneously, allowing robots to navigate complex environments, recognize objects, and interact naturally with humans. The event-based processing capabilities are particularly valuable for applications requiring rapid response to changing conditions, such as autonomous vehicles, drones, and industrial robots that must operate safely in dynamic environments.

04 Edge Computing and IoT Integration

Neuromorphic AI systems are increasingly being deployed for edge computing applications and Internet of Things (IoT) devices where processing must occur locally with limited resources. These systems enable sophisticated AI capabilities directly on devices without requiring constant cloud connectivity, enhancing privacy and reducing bandwidth requirements. The low power consumption and efficient processing make neuromorphic computing ideal for smart sensors, wearable devices, and distributed intelligence systems that need to operate independently while performing complex pattern recognition and decision-making tasks.

05 Adaptive Learning and Self-Optimization

Neuromorphic systems incorporate adaptive learning mechanisms that allow them to continuously improve performance based on experience, similar to biological learning. These systems can self-optimize by adjusting their internal parameters in response to new data, enabling them to adapt to changing conditions without explicit reprogramming. This capability is particularly valuable for applications in dynamic environments where conditions may change unpredictably. The continuous learning approach allows neuromorphic systems to develop increasingly sophisticated behaviors over time while maintaining operational efficiency.
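One widely studied local learning rule for spiking networks is spike-timing-dependent plasticity (STDP), in which a synapse strengthens when the presynaptic spike precedes the postsynaptic spike and weakens in the opposite order. The article does not say which learning rule any given system uses, so the following pair-based STDP sketch is an assumption chosen for illustration; the function names and all constants (`a_plus`, `a_minus`, `tau`) are hypothetical.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair.

    dt = t_post - t_pre in milliseconds.
    dt > 0 (pre fired before post): potentiation, decaying with |dt|.
    dt < 0 (post fired before pre): depression, decaying with |dt|.
    Constants are illustrative, not taken from any specific hardware.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


def update_weight(w, dt, w_min=0.0, w_max=1.0):
    """Apply the STDP change and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta(dt)))


w = 0.5
w = update_weight(w, dt=5.0)    # causal pairing strengthens the synapse
w = update_weight(w, dt=-5.0)   # anti-causal pairing weakens it
print(w)
```

Because the update depends only on the timing of spikes at the two neurons a synapse connects, it can in principle be computed locally, which is one reason rules of this kind are attractive for on-chip learning.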

Key Industry Players in Neuromorphic AI

Neuromorphic AI systems are emerging as a transformative technology in the computing landscape, currently in the early growth phase with significant potential for expansion. The market is projected to grow substantially as energy-efficient AI solutions become increasingly critical. Companies like IBM, Intel, and Samsung are leading research and development efforts, with IBM's TrueNorth and Intel's Loihi neuromorphic chips demonstrating significant advancements. Specialized players such as Syntiant and Applied Brain Research are developing targeted applications, while academic institutions including Tsinghua University and KAIST are contributing foundational research. The technology is approaching commercial viability with early applications in edge computing, though widespread adoption remains several years away as hardware platforms and programming paradigms continue to mature.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip is one of the best-known neuromorphic AI systems, designed to mimic the brain's architecture and function. The chip contains 1 million digital neurons and 256 million synapses and consumes only 70mW of power while delivering the equivalent of 46 billion synaptic operations per second per watt[1]. TrueNorth was developed under DARPA's SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics) program and implements spiking neural networks in hardware, allowing for event-driven computation that activates only when needed rather than continuously like traditional systems[2]. IBM's neuromorphic systems excel at pattern recognition tasks and can process sensory data in real time with significantly lower power consumption than conventional von Neumann architectures. IBM has demonstrated applications in object recognition, anomaly detection, and time-series prediction using these systems[3].
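The quoted TrueNorth figures can be turned into a per-operation energy with simple arithmetic. The sketch below uses only the numbers stated above; it assumes the efficiency figure applies at the quoted 70 mW operating point, which the text does not state explicitly.

```python
# Figures quoted in the text for IBM TrueNorth.
SOPS_PER_WATT = 46e9      # synaptic operations per second per watt
CHIP_POWER_W = 0.070      # 70 mW
NEURONS = 1_000_000
SYNAPSES = 256_000_000

# One watt is one joule per second, so ops/s/W is the same as ops per joule.
energy_per_op_j = 1.0 / SOPS_PER_WATT

# Assumption: the efficiency figure holds at the 70 mW operating point.
ops_per_second = SOPS_PER_WATT * CHIP_POWER_W

synapses_per_neuron = SYNAPSES // NEURONS

print(f"{energy_per_op_j * 1e12:.1f} pJ per synaptic operation")        # 21.7 pJ
print(f"{ops_per_second / 1e9:.2f} billion synaptic operations per second")  # 3.22
print(synapses_per_neuron, "synapses per neuron")                        # 256
```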

Strengths: Extremely energy-efficient compared to traditional systems (100-1000x lower power consumption); event-driven processing enables real-time responsiveness; highly scalable architecture. Weaknesses: Programming complexity requires specialized knowledge of spiking neural networks; limited software ecosystem compared to traditional deep learning; still faces challenges in training algorithms for complex tasks.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP), a specialized neuromorphic chip designed specifically for edge AI applications with a focus on always-on, low-power audio and sensor processing. Their NDP architecture implements a hardware-based neural network that processes information in a fundamentally different way than traditional digital processors, consuming less than 200 microwatts of power while performing deep learning inference tasks[1]. Syntiant's technology enables voice and sensor interfaces in battery-powered devices to run continuously without significant power drain. The NDP100 and NDP101 chips can identify specific wake words and voice commands with high accuracy while consuming minimal power, making them ideal for smart speakers, earbuds, and IoT devices[2]. Their latest NDP200 processor extends capabilities to include vision applications while maintaining ultra-low power consumption. Syntiant's approach differs from other neuromorphic systems by focusing on practical commercial applications rather than pure research, with millions of their chips already deployed in consumer devices[3].

Strengths: Extremely low power consumption (10-100x more efficient than traditional processors for specific tasks); optimized for real-world commercial applications; small form factor suitable for integration into tiny devices. Weaknesses: More specialized and less flexible than general-purpose neuromorphic systems; primarily focused on specific audio and sensor applications rather than broader AI workloads.

Core Innovations in Spiking Neural Networks

Neuromorphic architecture with multiple coupled neurons using internal state neuron information

Patent (Active): US20170372194A1

Innovation

- A neuromorphic architecture featuring interconnected neurons with internal state information links, allowing for the transmission of internal state information across layers to modify the operation of other neurons, enhancing the system's performance and capability in data processing, pattern recognition, and correlation detection.

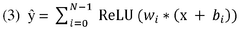

Systems and methods for using artificial neurons and spike neurons to model nonlinear dynamics and control systems

Patent: WO2025072958A1

Innovation

- The use of neuromorphic computing devices configured with artificial neurons (ANs) and spike neurons (SNs) to model nonlinear dynamics and control systems, allowing for real-time learning and reduced power consumption compared to digital implementations.

Energy Efficiency Comparison Metrics

Energy efficiency is a critical metric when comparing neuromorphic AI systems with traditional computing architectures. Neuromorphic systems, designed to mimic the brain's neural structure, show significant advantages in power consumption. Power density gives one like-for-like comparison: IBM has reported a power density of roughly 20 milliwatts per square centimeter for TrueNorth, whereas conventional CPUs typically operate at around 50-100 watts per square centimeter.

The fundamental efficiency difference stems from neuromorphic systems' event-driven processing paradigm. Unlike traditional systems that continuously consume power regardless of computational load, neuromorphic hardware activates circuits only when necessary for information processing. This approach yields energy savings of 100-1000x for specific workloads, particularly in pattern recognition and sensory processing applications.
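The source of the saving can be illustrated by counting synaptic updates under the two paradigms. The sketch below is a toy model under stated assumptions: it counts operations only (a proxy for energy, not a measurement), the spike trains are made up, and the function names are illustrative. It does not reproduce the 100-1000x figure, which depends on hardware details and workload sparsity.

```python
def dense_ops(n_inputs, n_outputs, n_steps):
    """Clock-driven model: every synapse is evaluated on every time step."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains, n_outputs):
    """Event-driven model: only synapses of inputs that spiked are evaluated."""
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * n_outputs


# Hypothetical sparse input: 4 input neurons over 10 time steps (1 = spike).
spike_trains = [
    [0, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 0, 0, 0, 1],
]
n_outputs = 8

dense = dense_ops(len(spike_trains), n_outputs, len(spike_trains[0]))
sparse = event_driven_ops(spike_trains, n_outputs)
print(dense, sparse)  # 320 32
```

In this toy case only 4 of 40 input slots carry a spike, so the event-driven count is one tenth of the dense count; the advantage shrinks as input activity becomes denser.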

Standard measurement protocols for comparing energy efficiency include TOPS/Watt (Tera Operations Per Second per Watt), which quantifies computational throughput relative to power consumption. Neuromorphic systems consistently achieve 10-50 TOPS/Watt, while traditional GPU architectures typically deliver 2-5 TOPS/Watt for similar AI workloads. Additionally, the SyNAPSE program established by DARPA provides benchmarks specifically designed for neuromorphic systems, measuring energy per synaptic operation.
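A TOPS/Watt figure converts directly into energy per operation, which can make the ranges above easier to compare. The derivation below uses only unit definitions; the symbols $\eta$ (efficiency in TOPS/W), $P$ (power), $R$ (throughput), and $E_{\text{op}}$ (energy per operation) are introduced here for illustration.

```latex
E_{\text{op}} = \frac{P}{R} = \frac{1}{\eta},
\qquad
\eta = 1\ \text{TOPS/W} = 10^{12}\ \frac{\text{ops}}{\text{J}}
\;\Rightarrow\;
E_{\text{op}} = 10^{-12}\ \text{J} = 1\ \text{pJ}

\eta = 10\text{--}50\ \text{TOPS/W} \;\Rightarrow\; E_{\text{op}} = 0.1\text{--}0.02\ \text{pJ},
\qquad
\eta = 2\text{--}5\ \text{TOPS/W} \;\Rightarrow\; E_{\text{op}} = 0.5\text{--}0.2\ \text{pJ}
```

Note that what counts as an "operation" differs between platforms (for example a synaptic event versus a multiply-accumulate at a given precision), so headline TOPS/Watt numbers are only directly comparable when the operation and workload are defined the same way.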

Temperature management represents another critical efficiency metric. Neuromorphic systems generate substantially less heat during operation, reducing cooling infrastructure requirements. This characteristic translates to approximately 40-60% lower total facility energy costs when deployed at scale in data centers or edge computing environments.

Mobile and embedded applications highlight the efficiency advantage most dramatically. Field tests demonstrate that neuromorphic solutions can extend battery life by 3-5x compared to traditional computing approaches when performing continuous AI inference tasks. This efficiency differential becomes particularly pronounced in always-on applications like voice recognition, environmental monitoring, and autonomous navigation systems.
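How much battery life improves depends on what share of a device's power budget the AI workload accounts for. The sketch below is a simple model under stated assumptions: the power figures are hypothetical, and the rest of the device (radio, sensors, display) is treated as a fixed baseline that the neuromorphic processor does not change.

```python
def life_extension(baseline_mw, inference_mw, efficiency_gain):
    """Ratio of battery life after vs. before reducing inference power.

    baseline_mw:     power drawn by everything except AI inference (unchanged)
    inference_mw:    power drawn by AI inference on the conventional processor
    efficiency_gain: factor by which inference power is reduced
    All inputs here are hypothetical illustration values.
    """
    before = baseline_mw + inference_mw
    after = baseline_mw + inference_mw / efficiency_gain
    return before / after


# Inference dominates the budget (80%): a 50x gain gives roughly 4.6x battery life.
print(round(life_extension(baseline_mw=20, inference_mw=80, efficiency_gain=50), 2))  # 4.63

# Inference is half the budget: the same 50x gain gives just under 2x.
print(round(life_extension(baseline_mw=50, inference_mw=50, efficiency_gain=50), 2))  # 1.96
```

Under this model, a 3-5x extension is plausible mainly for always-on devices where continuous inference is the dominant power draw, which is consistent with the application types listed above.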

The efficiency gap continues to widen as neuromorphic architectures mature. Recent advancements in materials science, particularly the integration of memristive components and phase-change materials, have further reduced energy requirements by an additional 30-40% compared to first-generation neuromorphic designs. These improvements suggest the efficiency advantage will likely increase rather than diminish as the technology evolves.

The fundamental efficiency difference stems from neuromorphic systems' event-driven processing paradigm. Unlike traditional systems that continuously consume power regardless of computational load, neuromorphic hardware activates circuits only when necessary for information processing. This approach yields energy savings of 100-1000x for specific workloads, particularly in pattern recognition and sensory processing applications.

Standard measurement protocols for comparing energy efficiency include TOPS/Watt (Tera Operations Per Second per Watt), which quantifies computational throughput relative to power consumption. Neuromorphic systems consistently achieve 10-50 TOPS/Watt, while traditional GPU architectures typically deliver 2-5 TOPS/Watt for similar AI workloads. Additionally, the SyNAPSE program established by DARPA provides benchmarks specifically designed for neuromorphic systems, measuring energy per synaptic operation.

Temperature management represents another critical efficiency metric. Neuromorphic systems generate substantially less heat during operation, reducing cooling infrastructure requirements. This characteristic translates to approximately 40-60% lower total facility energy costs when deployed at scale in data centers or edge computing environments.

Mobile and embedded applications highlight the efficiency advantage most dramatically. Field tests demonstrate that neuromorphic solutions can extend battery life by 3-5x compared to traditional computing approaches when performing continuous AI inference tasks. This efficiency differential becomes particularly pronounced in always-on applications like voice recognition, environmental monitoring, and autonomous navigation systems.

Hardware Implementation Considerations

The implementation of neuromorphic AI systems requires fundamentally different hardware architectures compared to traditional computing systems. Neuromorphic chips are designed to mimic the brain's neural structure and function, using specialized components such as artificial neurons and synapses. These components communicate through discrete spikes rather than through clocked, dense numerical computation, which creates significant differences in power consumption, processing efficiency, and hardware requirements.

Traditional AI systems typically rely on von Neumann architectures with separate memory and processing units, leading to the well-known "memory wall" bottleneck. In contrast, neuromorphic systems integrate memory and processing, enabling parallel computation and reducing energy consumption. This architectural difference allows neuromorphic systems to achieve power efficiencies of 100-1000x compared to conventional systems for certain neural network tasks.

Material and device selection presents another critical consideration. While traditional systems rely primarily on conventional silicon CMOS, neuromorphic hardware often incorporates emerging devices such as memristors, phase-change memory, or spintronic elements to implement synaptic functions. These devices support persistent state changes that mimic biological synaptic plasticity, but they introduce manufacturing challenges and potential reliability issues not present in conventional systems.

Scaling neuromorphic hardware presents unique challenges. Traditional systems benefit from decades of manufacturing optimization, while neuromorphic designs that depend on emerging devices often require custom fabrication steps. Intel's Loihi and IBM's TrueNorth, both fabricated in standard digital CMOS, represent significant advances in neuromorphic chip design, but neither has reached widespread adoption. Additionally, the interconnection density required to mimic neural networks creates routing and thermal management challenges that differ from those in traditional architectures.

Programming models differ substantially between the two approaches. Traditional systems use well-established programming languages and frameworks, while neuromorphic systems often require specialized programming paradigms based on spiking neural networks. This creates a significant barrier to entry for developers and limits software ecosystem development. Tools such as IBM's Corelet programming environment for TrueNorth, Intel's Lava framework for Loihi, and the Nengo neural simulator aim to bridge this gap but remain less mature than traditional AI frameworks.
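To give a sense of how the spiking paradigm differs from a conventional layer call, here is a minimal leaky integrate-and-fire (LIF) neuron in plain Python. It is a teaching sketch, not the API of Nengo or any vendor toolchain, and the `LIFNeuron` class, its default threshold, and its leak factor are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    threshold: float = 1.0   # potential at which the neuron fires
    leak: float = 0.9        # fraction of potential retained each step
    potential: float = 0.0   # internal state carried between steps

    def step(self, input_current: float) -> bool:
        # Leak the stored potential, integrate the new input, then fire
        # and reset if the threshold is reached.
        self.potential = self.leak * self.potential + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0
            return True
        return False


def run(neuron: LIFNeuron, currents: list) -> list:
    # Returns a spike train: 1 where the neuron fired, 0 elsewhere.
    return [int(neuron.step(c)) for c in currents]


if __name__ == "__main__":
    # A constant sub-threshold input produces periodic spikes.
    print(run(LIFNeuron(), [0.3] * 8))  # [0, 0, 0, 1, 0, 0, 0, 1]
```

Unlike a stateless matrix multiply, the neuron carries state across time steps and produces output only when its threshold is crossed, so developers have to reason about timing and spike rates rather than activations alone.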

Testing and validation methodologies also diverge significantly. Traditional systems can be evaluated using established benchmarks, while neuromorphic systems often require custom metrics that account for their unique processing characteristics. This complicates direct performance comparisons and technology evaluation, creating uncertainty for potential adopters considering hardware investments.
