Optimizing DDR5 Latency for Real-Time Applications

SEP 17, 2025 | 9 MIN READ

DDR5 Evolution and Performance Targets

DDR5 memory technology represents a significant evolution in the DRAM landscape, building on the foundations of previous generations while introducing substantial architectural improvements. Since reaching the market in 2021, DDR5 has made steady progress in meeting the bandwidth requirements of modern computing systems. The JEDEC specification doubles the peak data rate from DDR4's top standard speed of 3200 MT/s to 6400 MT/s (initial DDR5 products shipped at 4800 MT/s), with roadmaps extending to 8400 MT/s and beyond as the technology matures.

The primary performance targets for DDR5 optimization in real-time applications center on reducing effective latency while retaining DDR5's bandwidth advantage. Current DDR5 modules exhibit CAS latencies ranging from CL40 to CL46 at standard operating frequencies, a numerical increase over DDR4's typical CL16 to CL22. This comparison is misleading on its own, however: cycle counts must be converted to nanoseconds at each module's clock rate, and the absolute latency in nanoseconds is the metric that matters for real-time application performance.
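The conversion is simple arithmetic. A DDR interface transfers data on both clock edges, so one clock period in nanoseconds is 2000 divided by the data rate in MT/s, and the CAS delay is that period multiplied by CL. A minimal sketch, where the speed-grade and CL pairs are illustrative inputs rather than a survey of products:

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: int) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds.

    DDR memory transfers data on both clock edges, so the clock runs at half
    the data rate and one clock period is 2000 / data_rate_mts nanoseconds.
    """
    clock_period_ns = 2000.0 / data_rate_mts
    return cl_cycles * clock_period_ns


# Illustrative (speed grade, CL) pairs; substitute the values of real modules.
CONFIGS = [
    ("DDR4-3200 CL16", 16, 3200),
    ("DDR4-3200 CL22", 22, 3200),
    ("DDR5-4800 CL40", 40, 4800),
    ("DDR5-6400 CL46", 46, 6400),
]

if __name__ == "__main__":
    for name, cl, rate in CONFIGS:
        print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns")
```

DDR4-3200 CL22 works out to 13.75 ns and DDR5-4800 CL40 to about 16.7 ns, so at that speed the higher cycle count does translate into a longer absolute delay, while DDR5-6400 CL46 (about 14.4 ns) nearly closes the gap.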

Industry benchmarks indicate that first-generation DDR5 implementations demonstrate approximately 15-20% higher actual latency compared to mature DDR4 platforms. This latency gap presents a significant challenge for real-time applications where response time predictability is paramount. The technology evolution roadmap aims to progressively reduce this latency overhead while continuing to expand bandwidth capabilities.

Performance targets specifically for real-time applications include achieving sub-15ns random access latency, improving command bus efficiency by at least 30%, and implementing more sophisticated on-die error correction capabilities without introducing additional latency penalties. These targets align with the requirements of emerging real-time workloads in autonomous systems, industrial automation, and high-frequency trading platforms.

The evolution trajectory suggests a convergence point where DDR5 will eventually outperform DDR4 in both bandwidth and latency metrics, anticipated within the next 18-24 months as manufacturing processes mature and controller architectures are optimized. This convergence represents a critical milestone for widespread adoption in latency-sensitive applications.

Memory subsystem designers are increasingly focusing on the interplay between raw DRAM performance characteristics and system-level optimizations, including memory controller enhancements, intelligent prefetching algorithms, and application-specific tuning methodologies. The industry trend indicates a shift toward more holistic memory subsystem design approaches that consider workload-specific latency requirements rather than pursuing generalized performance improvements.

Real-Time Application Memory Requirements Analysis

Real-time applications operate under strict timing constraints, requiring memory systems that can deliver data with predictable and minimal latency. The memory requirements for these applications differ significantly from general-purpose computing workloads, as even millisecond delays can lead to system failures or compromised performance in critical scenarios.

For real-time systems interfacing with DDR5 memory, latency budgets for the most demanding applications are typically quoted in the 5-50 nanosecond range. Autonomous driving systems are often cited as requiring memory response times under 10 nanoseconds to process sensor data and make split-second decisions, and high-frequency trading platforms as demanding latencies below 5 nanoseconds to maintain a competitive advantage. Because the CAS delay alone is roughly 14-18 ns for JEDEC DDR5 speed bins, budgets at the low end of this range can in practice be met only from on-chip caches, with DRAM accesses kept off the critical path.

Memory bandwidth requirements vary considerably across real-time application domains. Industrial automation systems may require 10-20 GB/s for complex control algorithms, while advanced radar systems for defense applications often demand 50-100 GB/s to process incoming signal data in real time. Modern avionics systems typically require 30-40 GB/s to handle multiple sensor inputs simultaneously.
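To relate these figures to DDR5 hardware, the theoretical peak bandwidth of one DIMM is its data rate multiplied by its 64-bit (8-byte) data width, for example 38.4 GB/s at 4800 MT/s. The sketch below estimates how many DDR5-4800 channels each requirement needs. The 70% sustained-efficiency factor is an assumption for illustration only, since achievable efficiency depends heavily on the access pattern.

```python
import math

ASSUMED_EFFICIENCY = 0.7  # assumed fraction of peak bandwidth actually sustained


def peak_bandwidth_gbs(data_rate_mts: int, data_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes/s) of one DIMM.

    A DDR5 DIMM carries 64 data bits, split into two 32-bit subchannels;
    ECC bits are not counted.
    """
    return data_rate_mts * 1e6 * (data_bits / 8) / 1e9


def channels_needed(required_gbs: float, data_rate_mts: int,
                    efficiency: float = ASSUMED_EFFICIENCY) -> int:
    """Smallest number of channels whose sustained bandwidth meets the target."""
    usable_gbs = peak_bandwidth_gbs(data_rate_mts) * efficiency
    return math.ceil(required_gbs / usable_gbs)


# Upper ends of the requirement ranges quoted in the text.
REQUIREMENTS_GBS = {"industrial automation": 20, "avionics": 40, "radar": 100}

if __name__ == "__main__":
    for domain, gbs in REQUIREMENTS_GBS.items():
        print(f"{domain}: {channels_needed(gbs, 4800)} x DDR5-4800 channel(s)")
```

Under these assumptions, the upper end of the industrial range fits on one DDR5-4800 channel, avionics needs two, and a 100 GB/s radar pipeline needs four.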

Beyond raw performance metrics, real-time applications exhibit distinct access patterns that significantly impact memory optimization strategies. Many safety-critical systems display highly deterministic memory access patterns, with predictable read/write sequences that can be optimized through prefetching mechanisms. Conversely, event-driven real-time systems often generate sporadic, bursty memory traffic that challenges traditional caching strategies.
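For the deterministic case, the classic mechanism is a stride prefetcher. The sketch below is a generic, minimal version, not any specific vendor's design: once the same non-zero stride has been observed `threshold` times in a row, it predicts the next `degree` addresses. The class name, parameter names, and defaults are assumptions for illustration.

```python
from typing import List, Optional


class StridePrefetcher:
    """Minimal single-stream stride prefetcher (illustrative only)."""

    def __init__(self, threshold: int = 2, degree: int = 2) -> None:
        self.threshold = threshold  # consecutive matching strides required
        self.degree = degree        # how many addresses to prefetch ahead
        self.last_addr: Optional[int] = None
        self.last_stride: Optional[int] = None
        self.matches = 0            # times the current stride was seen in a row

    def access(self, addr: int) -> List[int]:
        """Record a demand access and return the addresses to prefetch."""
        if self.last_addr is None:
            self.last_addr = addr
            return []
        stride = addr - self.last_addr
        if stride != 0 and stride == self.last_stride:
            self.matches += 1
        else:
            # A new (or zero) stride restarts training.
            self.matches = 1 if stride != 0 else 0
        self.last_stride = stride
        self.last_addr = addr
        if self.matches >= self.threshold:
            return [addr + stride * i for i in range(1, self.degree + 1)]
        return []


if __name__ == "__main__":
    pf = StridePrefetcher()
    for a in (0, 64, 128, 192, 5000):
        print(a, pf.access(a))
```

A regular stream of 64-byte accesses trains the prefetcher by its third access, while a single irregular, event-driven access resets it, which is why prefetching helps deterministic workloads far more than bursty ones.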

Temperature sensitivity represents another critical consideration, as many real-time systems operate in harsh environments. Industrial control systems must maintain consistent memory performance across temperature ranges from -40°C to 85°C, while automotive applications face similar challenges with the added complexity of rapid temperature fluctuations.

Power constraints further complicate memory requirements analysis. Battery-powered medical devices with real-time monitoring capabilities must balance low latency needs with strict power budgets, typically limiting memory subsystem power consumption to 50-200 mW. Similarly, aerospace applications must optimize for both performance and power efficiency due to limited onboard energy resources.

Reliability requirements for real-time memory systems exceed those of consumer applications by orders of magnitude. Medical devices and aerospace systems often specify bit error rates below 10^-15, compared to consumer electronics tolerances of 10^-12. This necessitates additional error correction capabilities that must be implemented without compromising latency targets.
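A quick calculation shows why even the stricter figure still calls for error correction at DDR5 speeds. Assuming independent bit errors and a link kept fully busy (both simplifications), the expected number of raw errors is the BER multiplied by the number of bits transferred:

```python
SECONDS_PER_HOUR = 3600


def expected_errors_per_hour(ber: float, bandwidth_gbs: float) -> float:
    """Expected raw bit errors per hour at a sustained transfer rate.

    bandwidth_gbs is in GB/s (10**9 bytes per second). Assumes independent
    errors and continuous transfer, so it overstates a partly idle link.
    """
    bits_per_second = bandwidth_gbs * 1e9 * 8
    return ber * bits_per_second * SECONDS_PER_HOUR


if __name__ == "__main__":
    # 38.4 GB/s is the theoretical peak of one DDR5-4800 DIMM.
    for ber in (1e-12, 1e-15):
        print(f"BER {ber:g}: {expected_errors_per_hour(ber, 38.4):.2f} errors/hour")
```

At the peak rate of one DDR5-4800 DIMM, a BER of 10^-12 implies roughly 1,100 errors per hour, and even 10^-15 implies about one per hour.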

The analysis of these requirements reveals that DDR5 optimization for real-time applications must address not only raw latency metrics but also consistency, environmental resilience, and application-specific access patterns to deliver truly effective solutions.

DDR5 Latency Challenges and Bottlenecks

Despite the significant advancements in DDR5 memory technology, several critical latency challenges and bottlenecks persist that impede optimal performance in real-time applications. The fundamental issue lies in the inherent trade-off between increased bandwidth and latency in DDR5 architecture. While DDR5 offers substantially higher bandwidth compared to DDR4 (up to 6400 MT/s vs 3200 MT/s), its absolute latency metrics have not improved proportionally, creating a performance bottleneck for latency-sensitive applications.

The architectural changes in DDR5 introduce new latency concerns. Each DDR5 module is split into two independent 32-bit subchannels (40-bit on ECC modules) instead of a single 64-bit channel, and each subchannel uses a 16-beat burst (BL16) so that one burst still delivers a full 64-byte cache line. This improves throughput and concurrency but adds complexity to memory access patterns. The shift requires more sophisticated memory controllers and can introduce additional latency when applications require data that spans both subchannels.
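The burst arithmetic behind this change can be checked directly. Each beat takes 1000 / (data rate in MT/s) nanoseconds, so a burst of BL beats occupies the data bus for BL × 1000 / MT/s ns and carries BL × bus width / 8 bytes. The sketch compares a DDR4 BL8 access on a 64-bit channel with a DDR5 BL16 access on one 32-bit subchannel (ECC bits excluded):

```python
def burst_bytes(burst_length: int, bus_width_bits: int) -> int:
    """Bytes delivered by one burst on a bus of the given data width."""
    return burst_length * bus_width_bits // 8


def burst_time_ns(burst_length: int, data_rate_mts: int) -> float:
    """Time on the data bus for one burst: each beat lasts 1000 / MT/s ns."""
    return burst_length * 1000.0 / data_rate_mts


CASES = {
    "DDR4-3200, 64-bit channel, BL8": (8, 64, 3200),
    "DDR5-4800, 32-bit subchannel, BL16": (16, 32, 4800),
    "DDR5-6400, 32-bit subchannel, BL16": (16, 32, 6400),
}

if __name__ == "__main__":
    for name, (bl, width, rate) in CASES.items():
        print(f"{name}: {burst_bytes(bl, width)} B in {burst_time_ns(bl, rate):.2f} ns")
```

All three cases deliver one 64-byte cache line per burst. At 4800 MT/s a DDR5 subchannel needs about 3.33 ns per line versus 2.5 ns for DDR4-3200, so per-access transfer time does not improve; the throughput gain comes from two subchannels operating in parallel, and DDR5-6400 matches DDR4-3200's 2.5 ns per line.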

Command bus contention represents another significant bottleneck. As DDR5 systems handle increasingly complex workloads with multiple concurrent memory operations, the command bus becomes saturated, leading to command queuing delays. This issue is particularly problematic in real-time applications where predictable memory access timing is crucial.
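The non-linear growth of queuing delay near saturation can be illustrated with a textbook M/D/1 queue, treating the command bus as a single server with a fixed per-command service time and Poisson-distributed command arrivals. This is an idealization chosen for illustration, not a model of any specific controller, and the 1 ns service time is an assumed value.

```python
def md1_mean_wait_ns(utilization: float, service_time_ns: float) -> float:
    """Mean time a command waits in queue for an M/D/1 system.

    Pollaczek-Khinchine result for deterministic service:
        Wq = rho * S / (2 * (1 - rho))
    where rho is bus utilization and S is the fixed service time.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return utilization * service_time_ns / (2.0 * (1.0 - utilization))


SERVICE_TIME_NS = 1.0  # assumed bus occupancy per command

if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
        wait = md1_mean_wait_ns(rho, SERVICE_TIME_NS)
        print(f"utilization {rho:.2f}: mean wait {wait:.2f} ns")
```

Moving from 50% to 99% utilization raises the mean wait by roughly a factor of 100 in this model, and real-time systems must budget for the tail of the wait distribution, not just this mean.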

The increased refresh requirements in higher-density DDR5 modules create periodic unavailability windows that can cause unpredictable latency spikes. With DDR5's higher densities, these refresh operations occur more frequently and take longer to complete, potentially disrupting time-critical operations in real-time systems.
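The cost of all-bank refresh can be estimated from two datasheet parameters: tRFC, how long a rank is blocked by each refresh, and tREFI, the average interval between refresh commands. The constants below (tRFC = 295 ns for a 16 Gb device, tREFI = 3.9 µs, halved above 85°C) are assumed typical values; check the specific device datasheet before relying on them.

```python
from typing import Dict


def refresh_stats(trfc_ns: float, trefi_ns: float) -> Dict[str, float]:
    """Blocking statistics for all-bank refresh on a single rank.

    overhead       fraction of time the rank is unavailable (tRFC / tREFI)
    mean_added_ns  average extra delay for an access arriving at a uniformly
                   random time: P(hit refresh) * mean remaining refresh time
    worst_case_ns  an access arriving as a refresh starts waits the full tRFC
    """
    overhead = trfc_ns / trefi_ns
    return {
        "overhead": overhead,
        "mean_added_ns": overhead * trfc_ns / 2.0,
        "worst_case_ns": trfc_ns,
    }


TRFC_16GB_NS = 295.0      # assumed tRFC for a 16 Gb DDR5 device
TREFI_NORMAL_NS = 3900.0  # assumed tREFI at normal temperature
TREFI_HOT_NS = 1950.0     # assumed tREFI above 85 C (refresh rate doubled)

if __name__ == "__main__":
    for label, trefi in (("normal", TREFI_NORMAL_NS), ("above 85 C", TREFI_HOT_NS)):
        s = refresh_stats(TRFC_16GB_NS, trefi)
        print(label, f"{s['overhead']:.1%}", f"{s['mean_added_ns']:.1f} ns",
              f"{s['worst_case_ns']:.0f} ns")
```

Under these assumptions the average penalty is only about 11 ns, but an unlucky access can stall for the full 295 ns, which is the unpredictability that matters for real-time deadlines.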

Power management features in DDR5, while beneficial for energy efficiency, introduce latency penalties during state transitions. The deeper power-saving states in DDR5 require longer wake-up times, creating latency spikes when memory needs to transition from a low-power state to an active state. This presents a particular challenge for intermittent real-time workloads.

Signal integrity issues at higher frequencies also contribute to latency problems. Because DDR5 operates at significantly higher data rates than previous generations, maintaining signal integrity is more challenging. Signal reflections, crosstalk, and other interference can cause transmission errors that are caught by DDR5's link CRC and must be retried, adding to effective memory latency.

The increased complexity of DDR5's error correction, while improving reliability, adds processing overhead. DDR5's on-die ECC (Error Correction Code) is a single-error-correcting code that protects each 128-bit internal data word with 8 check bits; checking and correcting that word on every read takes time in the read path, contributing to overall memory access latency.
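The syndrome computation behind single-error correction can be sketched with a textbook Hamming code of the same size, 128 data bits plus 8 check bits. This is the generic Hamming construction, assumed here for illustration; the check matrix used inside actual DDR5 devices is chosen by each vendor and may differ. In hardware the syndrome is an XOR tree evaluated on every read, which is where the added read-path delay comes from.

```python
from typing import List

DATA_BITS = 128
CHECK_BITS = 8  # smallest r with 2**r >= DATA_BITS + r + 1
CODE_BITS = DATA_BITS + CHECK_BITS  # (136, 128) single-error-correcting code


def _is_power_of_two(n: int) -> bool:
    return (n & (n - 1)) == 0


# 1-based positions: powers of two hold check bits, all others hold data.
DATA_POSITIONS = [p for p in range(1, CODE_BITS + 1) if not _is_power_of_two(p)]


def syndrome(codeword: List[int]) -> int:
    """XOR of the 1-based positions of every set bit (0 means no error)."""
    s = 0
    for pos, bit in enumerate(codeword, start=1):
        if bit:
            s ^= pos
    return s


def encode(data: List[int]) -> List[int]:
    if len(data) != DATA_BITS:
        raise ValueError(f"expected {DATA_BITS} data bits")
    codeword = [0] * CODE_BITS
    for pos, bit in zip(DATA_POSITIONS, data):
        codeword[pos - 1] = bit
    s = syndrome(codeword)
    # Setting check bit 2**i for each set bit i of s cancels the syndrome.
    for i in range(CHECK_BITS):
        if (s >> i) & 1:
            codeword[(1 << i) - 1] = 1
    return codeword


def decode(codeword: List[int]) -> List[int]:
    """Correct at most one flipped bit and return the data bits.

    A double-bit error may be miscorrected: SEC guarantees only
    single-bit correction.
    """
    fixed = list(codeword)
    s = syndrome(fixed)
    if s:
        if s > CODE_BITS:
            raise ValueError("uncorrectable error pattern")
        fixed[s - 1] ^= 1  # a single error's syndrome is its own position
    return [fixed[p - 1] for p in DATA_POSITIONS]


if __name__ == "__main__":
    data = [i % 2 for i in range(DATA_BITS)]
    cw = encode(data)
    cw[42] ^= 1  # inject a single-bit error
    print("corrected:", decode(cw) == data)
```

Flipping any single bit of the 136-bit codeword produces a syndrome equal to that bit's position, so the decoder corrects it in one step.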

Temperature-dependent performance variations present another bottleneck. DDR5 memory exhibits more pronounced performance degradation at higher temperatures, requiring more aggressive thermal throttling that can unpredictably impact latency in demanding real-time applications.

Current DDR5 Latency Optimization Techniques

01 DDR5 memory architecture for latency reduction

DDR5 memory architecture incorporates design elements aimed specifically at reducing effective memory latency. These include a larger bank structure (32 banks in 8 bank groups for x4 and x8 devices, versus 16 banks in 4 bank groups for DDR4), a 16n prefetch matched to a 16-beat burst length, and enhanced command scheduling. More banks allow more accesses to proceed in parallel, which shortens the time requests spend waiting for a busy bank. These architectural improvements are intended to narrow the effective latency gap with previous DDR generations while preserving DDR5's bandwidth advantage.

DDR5 devices also incorporate on-die enhancements that support low-latency operation at high data rates. These include integrated error correction circuits, decision feedback equalization (DFE) on the data receivers, and improved signal integrity features, along with enhanced internal buffering and refresh mechanisms, such as same-bank refresh, that reduce interference with active memory operations. These on-die improvements allow reliable high-speed operation while keeping latency low for the critical access patterns common in modern computing workloads.
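The bank-level parallelism described under item 01 depends on how the memory controller maps physical addresses onto subchannels, bank groups, and banks. The sketch below uses a hypothetical bit layout (real controllers use vendor-specific, often XOR-hashed mappings) that interleaves consecutive 64-byte lines across the two subchannels and eight bank groups, so that back-to-back column commands on a subchannel target different bank groups and can use the shorter tCCD_S spacing rather than tCCD_L.

```python
from typing import NamedTuple, Tuple

# HYPOTHETICAL address layout, low bits first (illustration only).
LINE_BITS = 6    # 64-byte cache line offset
SUBCH_BITS = 1   # 2 subchannels per DIMM
BG_BITS = 3      # 8 bank groups (as in x4/x8 devices)
COL_BITS = 5     # 32 cache lines per row (assumed)
BANK_BITS = 2    # 4 banks per bank group


class DramCoord(NamedTuple):
    subchannel: int
    bank_group: int
    column: int
    bank: int
    row: int


def _take(value: int, bits: int) -> Tuple[int, int]:
    """Split off the low `bits` bits: returns (field, remaining value)."""
    return value & ((1 << bits) - 1), value >> bits


def map_address(addr: int) -> DramCoord:
    a = addr >> LINE_BITS
    sub, a = _take(a, SUBCH_BITS)
    bg, a = _take(a, BG_BITS)
    col, a = _take(a, COL_BITS)
    bank, a = _take(a, BANK_BITS)
    return DramCoord(sub, bg, col, bank, row=a)


if __name__ == "__main__":
    for line in range(6):
        print(hex(line * 64), map_address(line * 64))
```

With this layout, sixteen consecutive lines touch every (subchannel, bank group) pair once, and placing the column bits below the bank bits keeps a sequential stream within rows that are already open.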

02 Memory controller optimizations for DDR5 latency management

Specialized memory controllers are designed to manage DDR5 memory latency through various techniques such as intelligent request scheduling, command reordering, and adaptive timing control. These controllers implement sophisticated algorithms to predict memory access patterns and optimize the sequence of operations. By prioritizing critical requests and minimizing idle cycles, these controllers significantly reduce effective memory latency in DDR5 systems.

03 DDR5 timing parameters and clock synchronization techniques

DDR5 memory employs refined timing parameters and advanced clock synchronization methods to minimize latency. These include improved CAS latency management, reduced command-to-data delays, and optimized refresh timing. The memory modules use sophisticated on-die termination and signal integrity features that allow higher operating frequencies while maintaining tight timing control. These enhancements collectively contribute to lower overall memory access latency.

04 Cache hierarchy and buffer designs for DDR5 systems

DDR5 memory systems implement enhanced cache hierarchies and specialized buffer designs to mitigate latency issues. These include multi-level cache structures, intelligent buffer management, and optimized data prefetching mechanisms. The system architecture incorporates dedicated read/write buffers and transaction queues that help hide memory access latency. These buffer designs work in conjunction with the memory controller to provide faster apparent access times to the processor.

05 Power management techniques affecting DDR5 latency

Power management features in DDR5 memory can significantly impact latency characteristics. Advanced techniques include dynamic voltage and frequency scaling, selective bank activation, and intelligent power state transitions. These mechanisms balance power consumption with performance requirements, allowing systems to maintain low latency during critical operations while conserving energy during idle periods. The relationship between power states and latency is carefully managed to optimize overall system performance.
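The request prioritization described under item 02 is often built on FR-FCFS (first-ready, first-come-first-served) scheduling, which prefers requests that hit an already-open row. The sketch below adds a latency-critical class on top of that policy. It is a generic illustration, not any vendor's controller, and the `Request` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Request:
    req_id: int
    bank: int
    row: int
    arrival: int            # arrival time in controller cycles
    critical: bool = False  # marked latency-critical by software (assumed)


def pick_next(queue: List[Request], open_rows: Dict[int, int]) -> Optional[Request]:
    """Choose the next request: critical first, then row hits, then oldest."""
    if not queue:
        return None

    def priority(r: Request):
        row_hit = open_rows.get(r.bank) == r.row
        # min() picks the smallest tuple, so False (preferred) sorts first.
        return (not r.critical, not row_hit, r.arrival)

    return min(queue, key=priority)


def service_order(queue: List[Request], open_rows: Dict[int, int]) -> List[int]:
    """Drain the queue and return request ids in the order they are served."""
    pending = list(queue)
    rows = dict(open_rows)
    order: List[int] = []
    while pending:
        r = pick_next(pending, rows)
        pending.remove(r)
        rows[r.bank] = r.row  # serving a request leaves its row open
        order.append(r.req_id)
    return order


if __name__ == "__main__":
    q = [
        Request(1, bank=0, row=5, arrival=0),
        Request(2, bank=0, row=7, arrival=1),
        Request(3, bank=1, row=2, arrival=2, critical=True),
        Request(4, bank=0, row=7, arrival=3),
    ]
    print(service_order(q, open_rows={0: 7}))
```

In this example request 1 is the oldest but is served last because it misses the open row; practical controllers add an age limit so that such requests cannot starve.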

Key Memory Manufacturers and Ecosystem Players

The DDR5 latency optimization market for real-time applications is currently in a growth phase, with an estimated market size exceeding $5 billion and expanding at 15-20% annually. The competitive landscape features established memory manufacturers like Micron Technology and Samsung Electronics leading in hardware solutions, while Intel, AMD, and Qualcomm focus on memory controller optimizations. Chinese players including Huawei, ChangXin Memory, and Inspur are rapidly gaining market share through government-backed initiatives. Technology maturity varies across segments: physical memory components are highly mature, while real-time optimization techniques remain in development. Companies like Rambus and IBM are advancing proprietary low-latency architectures, while cloud providers like Google and Huawei Cloud are developing software-defined memory solutions for real-time workloads.

Micron Technology, Inc.

Technical Solution: Micron has developed DDR5 memory solutions with optimized latency for real-time applications, including products sold under its Crucial brand. Its approach pairs on-die ECC (Error Correction Code) and enhanced refresh management algorithms, which maintain data integrity, with Decision Feedback Equalization (DFE), which preserves signal margins at high data rates. Micron's DDR5 modules use two independent 40-bit subchannels (32 data bits plus 8 ECC bits on ECC modules), allowing more efficient parallel operations and reduced command bus contention. Its proprietary RCD (Registering Clock Driver) designs minimize signal propagation delays, achieving CAS latencies as low as 36 clock cycles at high frequencies. Micron has also developed specialized DIMM designs with optimized trace layouts to reduce signal reflections and crosstalk, further decreasing latency in high-speed operation.

Strengths: Industry-leading manufacturing process allowing for tighter timing specifications; extensive experience in memory optimization; strong vertical integration from design to production. Weaknesses: Premium pricing compared to competitors; some solutions require proprietary controllers for maximum performance benefits.

Intel Corp.

Technical Solution: Intel has developed comprehensive DDR5 latency optimization solutions that integrate deeply with its processor architectures. Its approach includes advanced memory controllers with prefetch algorithms that reduce effective latency by predicting data access patterns from application behavior. The Intel Memory Latency Checker (MLC) tool measures idle and loaded memory latency and bandwidth, providing the data needed to tune memory subsystems. Intel's DDR5 implementation features enhanced command rate optimization and improved bank group management to reduce conflicts and wait states. Intel Dynamic Memory Boost automatically switches memory between its JEDEC default settings and an XMP overclocking profile, with higher voltage and adjusted timings, when the system is under load. The platform-level approach also includes optimized BIOS settings and memory training algorithms that establish the lowest stable latency timings for each specific memory configuration. Additionally, Intel's Memory Latency Tuner software provides application-specific optimization for real-time workloads.

Strengths: Holistic platform-level optimization capabilities; tight integration between CPU and memory subsystems; extensive software support for memory tuning. Weaknesses: Some optimizations are limited to Intel platforms; performance benefits may vary significantly across different memory vendors.

Critical Patents and Research in DDR5 Latency Reduction

Data processing method, memory controller, processor and electronic device

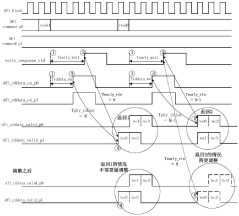

Patent | Active | CN112631966B

Innovation

- The memory controller adjusts the phase of the returned raw read data so that the delay from sending the early response signal to receiving the first beat of that read data is the same on every access. This allows the early response function and the read cyclic redundancy check (CRC) function to be used simultaneously.

Memory system and memory access interface device thereof

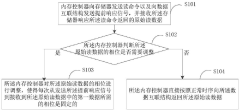

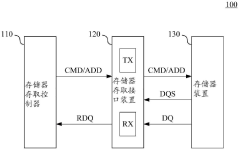

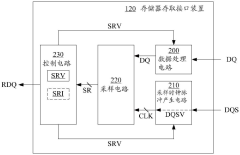

Patent | Pending | CN117055801A

Innovation

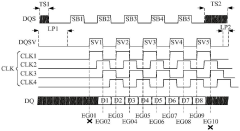

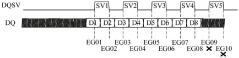

- A memory access interface device is designed that includes a data processing circuit, a sampling clock pulse generation circuit, a sampling circuit, and a control circuit. By increasing the number of strobe pulses in the effective data strobe signal, the device generates multiple sampling clock pulse signals that adjust the sampling results, reducing the timing gap and ensuring that the data signal is read correctly.

Power-Latency Tradeoffs in DDR5 Implementations

The optimization of DDR5 memory systems for real-time applications involves critical trade-offs between power consumption and latency performance. Although DDR5 runs from a lower 1.1 V supply than DDR4's 1.2 V, DDR5 modules can consume more total power than their predecessors because of higher operating frequencies, increased channel counts, and enhanced functionality. This power increase directly affects system thermal profiles and energy efficiency, creating a complex optimization challenge.

When implementing DDR5 in real-time systems, engineers face a fundamental dilemma: lower latency configurations typically demand higher power consumption. This relationship manifests in several key implementation aspects. First, aggressive timing parameters that reduce command and data latency require increased voltage levels to maintain signal integrity, directly increasing power draw. Memory controllers operating at maximum throughput similarly consume additional power while reducing effective latency.

The decision matrix becomes particularly complex when considering on-die termination (ODT) settings. Stronger termination reduces signal reflections and improves timing margins, potentially allowing lower-latency operation, but at the cost of significantly higher static power consumption. Refresh policy presents another trade-off: reducing or deferring refreshes saves power but can concentrate refresh activity into bursts that cause latency spikes during critical operations.

DDR5's power management features introduce new variables into this equation. The power management integrated circuit (PMIC) on each DDR5 DIMM provides more granular, on-module voltage regulation but adds its own conversion-efficiency considerations. Decision Support Telemetry (DST) capabilities enable dynamic power-latency optimization but require sophisticated control algorithms to be effective in real-time environments.

Recent benchmark data from high-performance computing implementations demonstrates that optimizing for minimum latency can increase DIMM power consumption by 15-30% compared to balanced configurations. Conversely, implementations prioritizing power efficiency often sacrifice 5-12% in latency performance. The relationship is non-linear, with diminishing returns observed when pushing beyond certain thresholds in either direction.

For real-time applications, the optimal implementation strategy typically involves identifying critical memory access patterns and selectively applying power-intensive optimizations only during time-sensitive operations. This approach requires sophisticated memory controllers capable of dynamically adjusting power states based on workload characteristics and timing requirements, effectively creating application-specific power-latency profiles rather than static configurations.
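One way to realize such dynamic profiles is a small state machine that switches between a power-intensive low-latency profile and a power-saving profile based on deadline slack, with hysteresis so that brief fluctuations do not cause rapid toggling. This is a hypothetical sketch: the profile names and thresholds are assumptions, and what each profile actually changes (power-down entry, timings, refresh scheduling) is platform-specific.

```python
class ProfileSelector:
    """Hysteresis switch between 'power_save' and 'low_latency' profiles."""

    def __init__(self, enter_below_ns: float, leave_above_ns: float) -> None:
        if leave_above_ns <= enter_below_ns:
            raise ValueError("leave threshold must exceed enter threshold")
        self.enter_below_ns = enter_below_ns  # slack that triggers low latency
        self.leave_above_ns = leave_above_ns  # slack that allows power saving
        self.profile = "power_save"

    def update(self, slack_ns: float) -> str:
        """Feed the current deadline slack and return the active profile."""
        if self.profile == "power_save" and slack_ns < self.enter_below_ns:
            self.profile = "low_latency"
        elif self.profile == "low_latency" and slack_ns > self.leave_above_ns:
            self.profile = "power_save"
        return self.profile


if __name__ == "__main__":
    sel = ProfileSelector(enter_below_ns=1_000, leave_above_ns=5_000)
    for slack in (8_000, 500, 3_000, 6_000, 3_000):
        print(slack, sel.update(slack))
```

A slack of 3 µs keeps whichever profile is already active; that band is the hysteresis. Without it, slack hovering near a single threshold would flip the memory subsystem between states and pay the transition penalty each time.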

Thermal Management Strategies for High-Performance DDR5

Thermal management has become a critical factor in optimizing DDR5 latency for real-time applications. DDR5 modules run at significantly higher data rates than their predecessors and, unlike DDR4, carry their own power management IC, so more heat is concentrated on the module during operation. This thermal output directly affects memory performance, particularly the latency characteristics that are crucial for time-sensitive applications.

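The relationship between temperature and latency in DDR5 systems follows a clear pattern: as temperature rises, DRAM cells leak charge faster and must be refreshed more often. Above the normal operating range (commonly 85 °C), the refresh interval is halved, so a larger share of time is spent on refresh operations that block accesses, and sustained high temperatures can also trigger throttling. Both effects raise average and worst-case latency. For real-time applications, where stalls of a few hundred nanoseconds to several microseconds can cause missed deadlines, maintaining stable thermal conditions is essential for consistent performance.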
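One well-documented path from temperature to latency is refresh. DDR5 devices are commonly specified with an average refresh interval (tREFI) of about 3.9 µs in the normal temperature range, halved above roughly 85 °C, so the share of time a rank spends refreshing doubles. The sketch below assumes those figures and a 295 ns tRFC purely for illustration; check the device datasheet for real values.

```python
def refresh_interval_ns(temp_c, t_refi_normal_ns=3900.0, threshold_c=85.0):
    """Average refresh interval (tREFI): halved above the normal-temperature range."""
    if temp_c <= threshold_c:
        return t_refi_normal_ns
    return t_refi_normal_ns / 2


def refresh_overhead(temp_c, t_rfc_ns=295.0):
    """Fraction of time a rank is busy refreshing, approximated as tRFC / tREFI."""
    return t_rfc_ns / refresh_interval_ns(temp_c)


for t in (70, 85, 90):
    print(f"{t} C: {refresh_overhead(t):.1%} of time spent refreshing")
```

With these assumptions the overhead is about 7.6% at 70 °C and 85 °C and about 15.1% at 90 °C, a step change rather than a gradual slope.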

Current thermal management approaches for high-performance DDR5 include passive cooling solutions such as enhanced heat spreaders with advanced thermal interface materials. These solutions typically utilize aluminum or copper heat spreaders with specialized thermal compounds to maximize heat dissipation from memory chips to the surrounding environment.
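A first-order way to compare passive solutions is a series thermal-resistance chain, where the steady-state temperature at the DRAM package is the ambient temperature plus power times the sum of the resistances along the heat path. The resistance and power values below are hypothetical placeholders chosen only to show the arithmetic; real figures come from package and heat-spreader datasheets or measurement.

```python
def steady_state_temp_c(ambient_c, power_w, resistances_c_per_w):
    """Steady-state temperature at the heat source for a series chain of
    thermal resistances: T = T_ambient + P * sum(R_i)."""
    return ambient_c + power_w * sum(resistances_c_per_w)


# Hypothetical per-package values (deg C per watt), for illustration only.
bare = [40.0]                     # package straight to air
with_spreader = [2.0, 0.5, 20.0]  # thermal interface, spreader, spreader-to-air

for label, chain in (("bare", bare), ("with spreader", with_spreader)):
    print(f"{label}: {steady_state_temp_c(45.0, 1.0, chain):.1f} C")
```

With these placeholders, the spreader path lowers the package temperature from 85.0 °C to 67.5 °C at 1 W, because the larger spreader surface has a much lower resistance to air than the bare package.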

Active cooling strategies have also emerged as effective solutions, particularly in data center environments where DDR5 modules operate continuously under heavy workloads. These include dedicated memory cooling fans, liquid cooling solutions that incorporate memory modules into the cooling loop, and integrated vapor chamber designs that efficiently distribute heat across larger surface areas.

Thermal monitoring and dynamic frequency scaling represent another critical aspect of DDR5 thermal management. Memory controllers poll temperature sensors on the module and, where supported, temperature status reported by the DRAM devices themselves. This lets the system raise refresh rates and, when necessary, reduce memory frequency or bandwidth early, before temperatures climb high enough to force more severe throttling that would otherwise increase latency.
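A controller-side policy of this kind is often built as a small state machine with hysteresis, so that readings hovering near a threshold do not make it toggle every sample. The sketch below assumes three hypothetical states (normal, doubled refresh, throttled) and made-up thresholds; actual thresholds and the mechanisms used to apply each state are platform- and vendor-specific.

```python
from enum import IntEnum


class ThermalState(IntEnum):
    NORMAL = 0          # normal refresh rate, full data rate
    DOUBLE_REFRESH = 1  # refresh rate doubled
    THROTTLE = 2        # doubled refresh plus reduced bandwidth or frequency


# Hypothetical thresholds in deg C: enter a state at or above ENTER[state],
# drop back out of it only once the reading falls below EXIT[state].
ENTER = {ThermalState.DOUBLE_REFRESH: 85.0, ThermalState.THROTTLE: 92.0}
EXIT = {ThermalState.DOUBLE_REFRESH: 82.0, ThermalState.THROTTLE: 89.0}


def next_state(state, temp_c):
    """Advance the policy by one temperature sample, with hysteresis."""
    # Escalate as far as the current reading requires.
    while state < ThermalState.THROTTLE and temp_c >= ENTER[ThermalState(state + 1)]:
        state = ThermalState(state + 1)
    # De-escalate only when the reading is below the current state's exit point.
    while state > ThermalState.NORMAL and temp_c < EXIT[state]:
        state = ThermalState(state - 1)
    return state


state = ThermalState.NORMAL
for reading in (80, 86, 84, 81, 95, 90, 88, 80):
    state = next_state(state, reading)
    print(reading, state.name)
```

Feeding it readings of 80, 86, 84, and 81 °C, for example, moves it to doubled refresh at 86 °C, keeps it there at 84 °C because that is still above the 82 °C exit threshold, and returns it to normal at 81 °C.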

Advanced system-level approaches include optimized airflow designs in server and workstation environments, where computational fluid dynamics modeling helps create thermal pathways that efficiently remove heat from memory modules. Some high-performance systems also implement predictive thermal management algorithms that anticipate workload patterns and proactively adjust cooling parameters.
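As one possible illustration of predictive control, the sketch below fits a least-squares line to recent temperature samples, extrapolates it a few seconds ahead, and sets fan duty from the predicted rather than the current temperature. The function names, the 10 s horizon, the 80 °C target, and the gain are all hypothetical tuning choices, not values from any shipping system.

```python
def predict_temp(samples, horizon_s, dt_s):
    """Least-squares linear fit over recent samples (taken every dt_s
    seconds), extrapolated horizon_s seconds past the last sample.
    Requires at least one sample."""
    n = len(samples)
    if n < 2:
        return float(samples[-1])
    xs = [i * dt_s for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    slope = sxy / sxx
    return mean_y + slope * (xs[-1] + horizon_s - mean_x)


def fan_duty(samples, dt_s=1.0, horizon_s=10.0, target_c=80.0, gain=0.05, base=0.3):
    """Fan duty in [0, 1]: a base level plus a proportional term on how far
    the predicted temperature exceeds the target (hypothetical tuning)."""
    predicted = predict_temp(samples, horizon_s, dt_s)
    duty = base + gain * max(0.0, predicted - target_c)
    return min(1.0, duty)


print(fan_duty([70, 71, 72, 73, 74]))
```

A steady 1 °C/s rise from 70 °C predicts 84 °C ten seconds out, so the fan is already near 50% duty while the module itself is still at 74 °C.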

For embedded real-time systems utilizing DDR5, specialized thermal solutions include thermally conductive enclosures, phase-change materials that absorb heat during peak operations, and thermally optimized PCB designs that help dissipate heat through ground planes and thermal vias.
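For phase-change materials, a useful back-of-the-envelope figure is how long a given mass can absorb a heat burst while melting: mass times latent heat divided by the excess power. The sketch assumes a paraffin-type material with a latent heat of about 200 J/g and a hypothetical 2 W excess load; it ignores sensible heating and the rate at which heat can actually reach the material.

```python
def pcm_buffer_time_s(mass_g, latent_heat_j_per_g, excess_power_w):
    """Seconds a phase-change material can soak up an excess heat load while
    melting: t = m * L / P. Ignores sensible heating and conduction limits."""
    if excess_power_w <= 0:
        raise ValueError("excess_power_w must be positive")
    return mass_g * latent_heat_j_per_g / excess_power_w


# Hypothetical: 5 g of a paraffin-type PCM (latent heat assumed ~200 J/g)
# absorbing a 2 W burst above what the enclosure can shed.
print(f"{pcm_buffer_time_s(5.0, 200.0, 2.0):.0f} s of buffering")
```

Under these assumptions, 5 g of material buffers a 2 W burst for about 500 s, after which the enclosure must shed the full load.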

The effectiveness of these thermal management strategies directly correlates with achievable latency performance in real-time applications, making thermal considerations a fundamental aspect of DDR5 memory system design rather than a secondary concern.
