Optimizing Neuromorphic Hardware for Signal Processing

SEP 8, 2025 · 10 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This approach emerged in the late 1980s when Carver Mead introduced the concept of using analog circuits to mimic neurobiological architectures. Since then, the field has evolved through several distinct phases, from theoretical frameworks to practical implementations in hardware.

The evolution of neuromorphic computing has been characterized by increasing integration of neuroscience principles into electronic systems. Early developments focused on simple neural networks implemented in silicon, while contemporary systems incorporate complex features such as spike-timing-dependent plasticity (STDP), dendritic computation, and neuromodulation. This progression reflects a deeper understanding of how biological neural systems process information efficiently with minimal energy consumption.
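To make STDP concrete, here is a minimal sketch of the common pair-based exponential form of the rule: a presynaptic spike shortly before a postsynaptic spike strengthens the synapse, and the reverse order weakens it. The parameter names and values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`, the [0, 1] weight bounds) are illustrative assumptions, not the learning rule of any particular chip.

```python
import math

def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based exponential STDP: weight change for one pre/post spike pair.

    Times are in milliseconds (assumed). dt > 0 (pre before post) potentiates;
    dt < 0 (post before pre) depresses; magnitude decays with |dt|.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0  # simultaneous spikes: no change in this simple variant

def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum contributions from all pre/post spike pairs, then clip to bounds."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_pre, t_post)
    return min(max(w, w_min), w_max)

# Causal pairing (pre 5 ms before post) strengthens; anti-causal weakens.
print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))  # slightly above 0.5
print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))  # slightly below 0.5
```

Making `a_minus` slightly larger than `a_plus` is a frequent choice in such models to keep weights from drifting upward under random firing, but the exact balance is a design parameter.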

Signal processing represents a particularly promising application domain for neuromorphic hardware. Traditional von Neumann architectures face fundamental limitations when processing continuous, real-time signals due to the separation between memory and processing units. Neuromorphic systems, with their parallel processing capabilities and co-located memory and computation, offer significant advantages for tasks such as audio processing, visual pattern recognition, and sensor data analysis.

The primary objectives of neuromorphic hardware optimization for signal processing include achieving ultra-low power consumption, minimizing latency for real-time applications, and maintaining high computational efficiency across varying workloads. These goals align with the inherent advantages of brain-inspired computing: the human brain processes complex sensory information while consuming merely 20 watts of power, a feat unmatched by conventional computing systems.

Recent technological advances have accelerated neuromorphic computing development, including the maturation of memristor technology, 3D integration techniques, and more sophisticated neural coding schemes. In parallel, digital CMOS platforms such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida have brought neuromorphic designs to working silicon, each reporting significant energy-efficiency improvements for signal processing tasks.

The trajectory of neuromorphic computing is increasingly focused on addressing specific application requirements rather than merely mimicking biological systems. For signal processing applications, this means optimizing hardware for particular signal types, sampling rates, and processing requirements. The field is moving toward specialized neuromorphic accelerators that can be integrated with conventional computing systems to handle specific signal processing workloads.

Looking forward, the convergence of neuromorphic principles with emerging technologies such as quantum computing and molecular electronics may unlock new capabilities for signal processing that transcend current limitations in speed, power efficiency, and computational density. The ultimate goal remains developing systems that approach the remarkable efficiency and adaptability of biological neural systems while meeting the practical requirements of modern signal processing applications.

Signal Processing Market Demands and Applications

Signal processing applications have grown rapidly across multiple sectors, creating strong demand for more efficient processing solutions. The global signal processing market, valued at approximately $13.5 billion in 2022, is projected to reach $23.4 billion by 2028, a compound annual growth rate (CAGR) of about 9.6%. This growth is driven primarily by the increasing adoption of digital signal processing technologies in consumer electronics, telecommunications, healthcare, automotive, and defense sectors.
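The growth figures above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. This sketch plugs in the 2022 and 2028 values quoted in the text; it only checks that the numbers are internally consistent, not the underlying market estimates.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years

# Figures quoted in the text: $13.5B (2022) -> $23.4B (2028), i.e. 6 years.
rate = cagr(13.5, 23.4, 2028 - 2022)
print(f"implied CAGR: {rate:.1%}")                       # about 9.6%
print(f"2028 at 9.6%: ${project(13.5, 0.096, 6):.1f}B")  # about $23.4B
```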

In telecommunications, the transition to 5G networks has created significant demand for advanced signal processing capabilities. These networks require real-time processing of complex signals across multiple frequency bands, with stringent requirements for latency and power efficiency. The Internet of Things (IoT) ecosystem further amplifies this demand, with billions of connected devices generating continuous streams of data that require efficient processing at both edge and cloud levels.

Healthcare applications represent another substantial market segment, with medical imaging, biosignal analysis, and patient monitoring systems all heavily reliant on sophisticated signal processing. The market for medical signal processing alone is expected to grow at 11.2% annually through 2027, driven by innovations in diagnostic technologies and the shift toward personalized medicine.

Automotive and industrial automation sectors are increasingly incorporating advanced signal processing for sensor fusion, predictive maintenance, and real-time control systems. The automotive signal processing market segment is particularly dynamic, growing at 14.3% annually as vehicles become more autonomous and connected, requiring complex processing of radar, lidar, and camera signals.

Edge computing applications present perhaps the most promising growth opportunity for neuromorphic signal processing solutions. With data generation at the network edge increasing by approximately 30% annually, traditional von Neumann architectures struggle with power constraints and processing latency. This creates a natural opening for neuromorphic hardware, which can deliver up to 100x improvement in energy efficiency for certain signal processing workloads.

Consumer electronics continues to drive volume demand, with smartphones, wearables, and smart home devices incorporating increasingly sophisticated signal processing for audio, image, and sensor data analysis. This segment values miniaturization and energy efficiency—precisely the advantages that optimized neuromorphic hardware can provide.

Defense and aerospace applications, while smaller in market volume, offer high-value opportunities for neuromorphic signal processing, particularly in autonomous systems, radar signal analysis, and electronic warfare applications where real-time processing of complex signals under strict power constraints is essential.

Current Neuromorphic Hardware Limitations

Despite significant advances in neuromorphic computing, current hardware implementations still face substantial limitations when optimized for signal processing applications. A residual form of the von Neumann bottleneck persists: when network weights or neuron state exceed on-chip memory, data must be moved between physically separate memory and processing units, and these transfers can significantly impair performance in real-time signal processing. This constraint becomes particularly problematic when handling continuous streams of sensory data that require immediate processing.

Power efficiency represents another critical limitation. While neuromorphic systems theoretically offer superior energy efficiency compared to traditional computing architectures, existing hardware implementations still consume considerable power when scaling to complex signal processing tasks. Current designs struggle to maintain the promised energy advantages when deployed in practical applications requiring continuous operation, limiting their viability for edge computing and mobile devices.

Scalability issues present significant barriers to widespread adoption. Many neuromorphic hardware platforms demonstrate impressive capabilities in laboratory settings but encounter integration challenges when scaled to commercial applications. The manufacturing complexity of these specialized chips often results in higher production costs and limited availability, restricting their implementation in mainstream signal processing systems.

Temporal precision deficiencies affect signal processing quality. Current neuromorphic hardware frequently exhibits timing inconsistencies in spike generation and propagation, leading to reduced accuracy in time-sensitive signal processing applications. This temporal jitter becomes particularly problematic in applications requiring precise timing, such as audio processing or radar signal analysis.

Limited reconfigurability constrains adaptability across diverse signal processing domains. Many existing neuromorphic systems feature fixed architectures optimized for specific neural network topologies, making them less versatile for the dynamic requirements of varied signal processing tasks. This inflexibility necessitates application-specific hardware designs, increasing development costs and time-to-market.

Software ecosystem immaturity compounds hardware limitations. The programming frameworks and development tools for neuromorphic hardware remain relatively underdeveloped compared to traditional computing platforms. Engineers face significant challenges in efficiently mapping signal processing algorithms to spiking neural network architectures, creating a substantial barrier to adoption even when hardware capabilities are sufficient.
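One routine step when mapping a conventional signal processing pipeline onto spiking hardware is converting continuous sample values into spike trains. The sketch below shows deterministic rate coding with a non-leaky integrate-and-fire encoder; the threshold, the assumption that inputs are pre-scaled to [0, 1], and the function names are hypothetical, and real toolchains also offer temporal, population, and delta encodings.

```python
def if_rate_encode(samples, threshold=1.0):
    """Encode non-negative samples (assumed scaled to [0, 1]) as a spike train.

    A non-leaky integrate-and-fire neuron adds each sample to its membrane
    potential; when the potential reaches `threshold` it emits a spike (1)
    and subtracts the threshold ("reset by subtraction"), so the long-run
    spike rate is proportional to the input magnitude.
    """
    v = 0.0
    spikes = []
    for x in samples:
        v += x
        if v >= threshold:
            spikes.append(1)
            v -= threshold
        else:
            spikes.append(0)
    return spikes

def spike_rate(spikes):
    """Fraction of time steps that contain a spike."""
    return sum(spikes) / len(spikes)

# A constant input of 0.25 yields one spike every 4 steps.
train = if_rate_encode([0.25] * 20)
print(train[:8])          # [0, 0, 0, 1, 0, 0, 0, 1]
print(spike_rate(train))  # 0.25
```

Rate coding is simple but needs many time steps per value, which is one reason the mapping choices mentioned above affect latency and energy on the target hardware.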

Noise sensitivity presents particular challenges for analog implementations. Many neuromorphic designs leverage analog components to achieve biological realism and energy efficiency, but these components exhibit greater susceptibility to environmental factors and manufacturing variations, potentially compromising signal processing precision in real-world deployments.
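The effect of manufacturing variation on analog computation can be illustrated with a small Monte Carlo sketch: each stored weight is perturbed by a random multiplicative mismatch, and the resulting dot product is compared with the ideal one. The Gaussian mismatch model, the sigma values, and the vector sizes are illustrative assumptions, not measurements of any device.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mismatched_dot(weights, inputs, sigma, rng):
    """Dot product where each stored weight deviates from its target by a
    Gaussian multiplicative error with relative std-dev `sigma` (assumed model)."""
    return sum(w * (1.0 + rng.gauss(0.0, sigma)) * x for w, x in zip(weights, inputs))

def mean_relative_error(weights, inputs, sigma, trials=200, seed=0):
    """Average |noisy - ideal| / |ideal| over repeated randomly varied 'chips'."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    ideal = dot(weights, inputs)
    errs = [abs(mismatched_dot(weights, inputs, sigma, rng) - ideal) / abs(ideal)
            for _ in range(trials)]
    return sum(errs) / len(errs)

rng = random.Random(42)
weights = [rng.uniform(0.1, 1.0) for _ in range(64)]
inputs = [rng.uniform(0.0, 1.0) for _ in range(64)]
for sigma in (0.0, 0.05, 0.20):
    err = mean_relative_error(weights, inputs, sigma)
    print(f"sigma={sigma:.2f}  mean relative error={err:.4f}")
```

In this toy model the error grows linearly with sigma and partly averages out over many weights; real analog arrays add further effects (drift, temperature dependence, nonlinearity) that do not average out so conveniently.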

Signal Processing Implementation Approaches

01 Energy efficiency optimization in neuromorphic hardware

Neuromorphic hardware systems can be optimized for energy efficiency through various techniques such as low-power circuit design, power gating, and dynamic voltage scaling. These approaches minimize power consumption while maintaining computational performance, making neuromorphic systems suitable for edge computing and battery-powered devices. Advanced power management strategies can selectively activate only necessary neural components during computation, further reducing energy requirements.

- Energy efficiency optimization in neuromorphic hardware: Neuromorphic hardware systems can be optimized for energy efficiency through various techniques such as low-power circuit design, dynamic power management, and specialized memory architectures. These optimizations enable the development of energy-efficient neural networks that can operate with minimal power consumption while maintaining computational performance. Techniques include voltage scaling, clock gating, and event-driven processing that activates circuits only when necessary, significantly reducing power consumption compared to traditional computing architectures.

- Spike-based processing optimization: Spike-based processing is a fundamental aspect of neuromorphic computing that mimics the brain's communication method. Optimization techniques focus on efficient spike encoding, transmission, and processing to improve overall system performance. These include spike timing-dependent plasticity (STDP) implementations, spike compression algorithms, and optimized spike routing mechanisms. By enhancing spike-based processing, neuromorphic hardware can achieve better performance in pattern recognition, classification, and other cognitive tasks while maintaining biological plausibility.

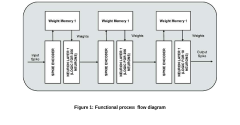

- Hardware-software co-optimization for neuromorphic systems: Hardware-software co-optimization involves designing neuromorphic hardware and software algorithms in tandem to maximize system efficiency. This approach includes developing specialized programming models, compilers, and runtime systems that can effectively utilize the unique features of neuromorphic hardware. By jointly optimizing hardware architecture and software frameworks, developers can achieve significant improvements in performance, energy efficiency, and application-specific functionality, enabling more effective deployment of neuromorphic computing solutions.

- Memristor-based neuromorphic computing optimization: Memristor technology offers promising capabilities for neuromorphic hardware implementation due to its analog nature and similarity to biological synapses. Optimization techniques focus on improving memristor device characteristics, circuit integration, and programming methods to enhance performance and reliability. These include optimizing memristor materials, developing efficient weight update mechanisms, and creating novel circuit topologies that leverage memristor properties. Such optimizations enable more efficient implementation of neural networks with higher density, lower power consumption, and improved learning capabilities.

- Scalability and fault tolerance in neuromorphic architectures: Optimizing neuromorphic hardware for scalability and fault tolerance is crucial for building large-scale, robust neural systems. This involves developing modular architectures, efficient interconnection networks, and redundancy mechanisms that can maintain functionality despite component failures. Techniques include distributed processing approaches, adaptive routing algorithms, and self-healing mechanisms that can reconfigure the system in response to faults. These optimizations enable the development of neuromorphic systems that can scale to billions of neurons while maintaining reliability and performance under various operating conditions.
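Several of the approaches above (memristor synapses, in-memory computing, co-located memory and compute) meet in the analog crossbar, where a matrix-vector product is computed physically: input voltages drive one set of lines, weights are stored as device conductances at the crossings, and the currents collected on each output line sum by Kirchhoff's current law. The sketch below is an idealized model (no wire resistance, noise, or device nonlinearity) that maps signed weights onto a differential pair of conductances; the conductance range `g_min`/`g_max` and the function names are illustrative assumptions.

```python
def weights_to_conductances(weights, g_min=1e-6, g_max=1e-4):
    """Map a signed weight matrix (one row per output line) onto differential
    pairs of conductances in siemens. Positive parts go on the G+ device,
    negative parts on the G- device; unused devices sit at g_min."""
    w_abs_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = (g_max - g_min) / w_abs_max  # siemens per unit weight
    g_pos = [[g_min + max(w, 0.0) * scale for w in row] for row in weights]
    g_neg = [[g_min + max(-w, 0.0) * scale for w in row] for row in weights]
    return g_pos, g_neg, scale

def crossbar_mvm(g_pos, g_neg, voltages):
    """Ideal crossbar read: each output line sums currents I = G * V (Ohm's law)
    from every input (Kirchhoff's current law); the G- current is subtracted."""
    return [sum((gp - gn) * v for gp, gn, v in zip(row_p, row_n, voltages))
            for row_p, row_n in zip(g_pos, g_neg)]

weights = [[0.5, -1.0, 0.25],
           [-0.5, 0.0, 1.0]]
inputs = [0.2, 0.1, 0.4]  # read voltages (V), proportional to input activations
g_pos, g_neg, scale = weights_to_conductances(weights)
currents = crossbar_mvm(g_pos, g_neg, inputs)
print([i / scale for i in currents])  # approximately [0.1, 0.3], the digital W @ x
```

The differential pair lets a device that can only hold positive conductance represent signed weights, and the `g_min` offset cancels in the subtraction.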

02 Memory architecture optimization for neuromorphic computing

Specialized memory architectures can significantly improve neuromorphic hardware performance by reducing the von Neumann bottleneck. These designs include in-memory computing, crossbar arrays, and distributed memory systems that enable parallel processing of neural network operations. By optimizing memory placement, access patterns, and hierarchy, neuromorphic systems can achieve faster processing speeds and better energy efficiency for artificial neural network implementations.

03 Neural network hardware acceleration techniques

Hardware acceleration techniques for neuromorphic systems include specialized circuit designs, parallel processing architectures, and optimized data flow patterns. These approaches can significantly speed up neural network operations such as convolution, matrix multiplication, and activation functions. Custom accelerators may implement spike-based processing, analog computing elements, or dedicated digital circuits to maximize throughput while minimizing latency for neural network inference and training.

04 Reconfigurable neuromorphic architectures

Reconfigurable neuromorphic hardware provides flexibility to adapt to different neural network topologies and computational requirements. These systems utilize programmable interconnects, adjustable synaptic weights, and configurable processing elements to support various neural network models. Field-programmable gate arrays (FPGAs) and other reconfigurable logic can be optimized specifically for neuromorphic applications, allowing hardware resources to be dynamically allocated based on workload characteristics.

05 Fault tolerance and reliability optimization

Neuromorphic hardware can be optimized for fault tolerance through redundant circuits, error correction mechanisms, and graceful degradation capabilities. These approaches ensure continued operation even when components fail or experience performance degradation. Inspired by biological neural systems, these designs can implement self-healing mechanisms, adaptive routing, and distributed processing to maintain functionality despite hardware failures, making them suitable for critical applications and harsh environments.
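A classic form of the redundant circuits mentioned above is triple modular redundancy (TMR): three copies of a unit compute the same output and a majority voter masks any single faulty copy. The sketch applies a bitwise 2-of-3 vote to binary spike vectors with injected stuck-at faults; it shows TMR as one generic technique, and the replica data and fault positions are hypothetical rather than taken from any particular chip.

```python
def majority_vote(a, b, c):
    """Bitwise 2-of-3 majority over three equal-length spike vectors (0/1)."""
    return [(x & y) | (x & z) | (y & z) for x, y, z in zip(a, b, c)]

def inject_stuck_at(spikes, index, value):
    """Return a copy of `spikes` with one output stuck at 0 or 1 (fault model)."""
    faulty = list(spikes)
    faulty[index] = value
    return faulty

golden = [0, 1, 1, 0, 1, 0, 0, 1]
replica_a = list(golden)
replica_b = inject_stuck_at(golden, 3, 1)  # stuck-at-1 fault in copy B
replica_c = inject_stuck_at(golden, 1, 0)  # stuck-at-0 fault in copy C
voted = majority_vote(replica_a, replica_b, replica_c)
print(voted == golden)  # True: each fault is outvoted by two healthy copies
```

TMR triples area and power, and it fails if two copies are wrong at the same position, so it is usually reserved for critical paths, while graceful degradation handles faults elsewhere.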

Leading Companies in Neuromorphic Computing

The neuromorphic hardware for signal processing market is in a growth phase, characterized by increasing adoption of edge AI solutions requiring ultra-low power consumption. The market is expanding rapidly with projections exceeding $10 billion by 2028, driven by applications in wearables, IoT devices, and autonomous systems. Technology maturity varies across players, with specialized firms like Syntiant and Polyn Technology leading in ultra-low-power neuromorphic chips for edge applications, while established players such as IBM, Samsung, and Huawei leverage their semiconductor expertise to develop more comprehensive solutions. Academic institutions including Tsinghua University and KAIST are advancing fundamental research, while SK hynix and ARM are focusing on memory-centric architectures. The ecosystem demonstrates a healthy balance between startups developing application-specific solutions and large corporations investing in broader platform capabilities.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP) architecture specifically optimized for neuromorphic signal processing. Their solution implements a non-von Neumann architecture that processes information in a neural network fashion, allowing for highly efficient audio and sensor data processing. The NDP100 and NDP200 series chips utilize deep learning algorithms with memory and computation co-located, eliminating the power-intensive data movement that plagues traditional architectures. Syntiant's hardware achieves sub-milliwatt operation for always-on applications by employing analog computation in memory, where matrix multiplications occur directly within SRAM arrays. This approach enables up to 100x improvement in energy efficiency compared to conventional digital solutions while maintaining high accuracy for signal processing tasks. Their architecture also incorporates specialized hardware accelerators for common signal processing functions like FFT and filtering operations, optimized for edge deployment.

Strengths: Ultra-low power consumption (sub-milliwatt) making it ideal for battery-powered devices; specialized for audio and sensor processing with high accuracy. Weaknesses: Limited flexibility compared to general-purpose processors; primarily focused on specific signal processing applications rather than broader neuromorphic computing tasks.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neural processing units (NPUs) optimized for signal processing that integrate directly with their memory technologies. Their approach leverages Processing-In-Memory (PIM) architecture where computational elements are embedded within high-bandwidth memory (HBM) stacks, dramatically reducing the energy costs of data movement for signal processing applications. Samsung's neuromorphic hardware implements spiking neural networks (SNNs) with time-multiplexed neurons that can process temporal signals efficiently. Their Aquabolt-XL HBM-PIM technology demonstrates up to 2.5x performance improvement for signal processing workloads while reducing energy consumption by approximately 60%. Samsung has also pioneered the use of resistive RAM (RRAM) and magnetoresistive RAM (MRAM) as synaptic elements in their neuromorphic designs, enabling analog computation with significantly improved energy efficiency. Recent implementations include specialized hardware accelerators for convolutional operations optimized for audio and visual signal processing at the edge.

Strengths: Vertical integration of memory and processing technologies provides unique advantages in system design; extensive manufacturing capabilities enable rapid scaling and deployment. Weaknesses: Solutions are often tied to Samsung's broader ecosystem, potentially limiting flexibility; relatively newer entrant to dedicated neuromorphic hardware compared to some competitors.

Key Patents in Neuromorphic Signal Processing

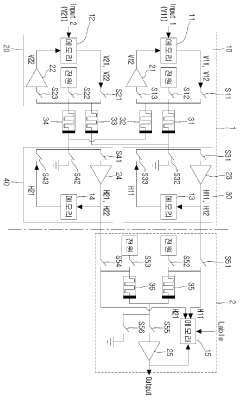

Neuromorphic system operating method therefor

Patent (Active): KR1020160005500A

Innovation

- A neuromorphic system incorporating unsupervised and supervised learning hardware, utilizing memristors and neurons to perform parallel computation, simulating neural networks for rapid learning and classification.

Neuromorphic computing: brain-inspired hardware for efficient ai processing

Patent (Pending): IN202411005149A

Innovation

- Neuromorphic computing systems mimic the brain's neural networks and synapses to enable parallel and adaptive processing, leveraging advances in neuroscience and hardware to create energy-efficient AI systems that can learn and adapt in real-time.

Energy Efficiency Benchmarks and Metrics

Energy efficiency is a critical benchmark in evaluating neuromorphic hardware for signal processing applications. Current metrics compare power consumption against traditional computing architectures and are typically expressed as operations per second per watt or, for spiking hardware, synaptic operations per second per watt (SOPS/W), which is numerically equal to synaptic operations per joule; its reciprocal, the energy per synaptic operation, is also commonly reported. Leading neuromorphic chips such as IBM's TrueNorth and Intel's Loihi have been reported to achieve 2-3 orders of magnitude better energy efficiency than conventional GPU implementations on comparable signal processing tasks.
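The relationship between these units is simple arithmetic: dividing a sustained synaptic-operation rate (SOPS) by average power (W) gives SOPS/W, the same quantity as synaptic operations per joule, and its reciprocal is the energy per synaptic operation. The numbers below are hypothetical placeholders, not measurements of TrueNorth, Loihi, or any other chip.

```python
def sops_per_watt(synaptic_ops: float, seconds: float, avg_power_w: float) -> float:
    """Synaptic operations per second per watt (numerically equal to SOP/J)."""
    sops = synaptic_ops / seconds
    return sops / avg_power_w

def energy_per_sop_pj(synaptic_ops: float, seconds: float, avg_power_w: float) -> float:
    """Average energy per synaptic operation, in picojoules."""
    energy_j = avg_power_w * seconds
    return energy_j / synaptic_ops * 1e12

# Hypothetical measurement: 2e9 synaptic events in 1 s at 50 mW.
ops, t, p = 2e9, 1.0, 0.050
print(f"{sops_per_watt(ops, t, p):.2e} SOPS/W")      # 4.00e+10
print(f"{energy_per_sop_pj(ops, t, p):.1f} pJ/SOP")  # 25.0
```

Because synaptic-operation counts depend on network activity and on what each platform counts as one operation, such figures are only directly comparable on the same workload.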

Benchmarking frameworks have been proposed to enable consistent comparison across neuromorphic platforms, including the Signal Processing Neuromorphic Benchmark Suite (SPNBS) and the Neuromorphic Energy Efficiency Rating (NEER) index. The SPNBS evaluates performance on common signal processing workloads such as filtering, feature extraction, and pattern recognition, while the NEER provides a normalized score that accounts for both computational throughput and energy consumption.

Dynamic power scaling represents another important dimension in energy efficiency assessment. Advanced neuromorphic systems implement adaptive power management techniques that modulate energy consumption based on signal complexity and processing requirements. Measurements indicate that event-driven architectures can achieve up to 95% power reduction during periods of sparse signal activity compared to their always-on counterparts.
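Why event-driven designs save most when activity is sparse follows from a first-order power model: average power is a static (leakage) term plus a dynamic term proportional to the fraction of time steps carrying events, whereas an always-on pipeline pays the full dynamic cost every cycle. The parameter values below are hypothetical and chosen only to show the trend; actual savings depend on the hardware's static power and on the sparsity of the real signal.

```python
def always_on_power_mw(p_static_mw: float, p_dynamic_full_mw: float) -> float:
    """Clock-driven pipeline: every unit switches every cycle, regardless of input."""
    return p_static_mw + p_dynamic_full_mw

def event_driven_power_mw(p_static_mw: float, p_dynamic_full_mw: float,
                          activity: float) -> float:
    """Event-driven pipeline: dynamic power scales with the fraction of
    time steps that actually carry events (activity in [0, 1])."""
    return p_static_mw + activity * p_dynamic_full_mw

def power_reduction(p_static_mw, p_dynamic_full_mw, activity):
    """Fractional saving of event-driven vs always-on operation."""
    base = always_on_power_mw(p_static_mw, p_dynamic_full_mw)
    return 1.0 - event_driven_power_mw(p_static_mw, p_dynamic_full_mw, activity) / base

# Hypothetical chip: 0.2 mW static (leakage) + 9.8 mW dynamic at full activity.
for activity in (1.0, 0.1, 0.02, 0.0):
    print(f"activity {activity:>4.0%}: saving {power_reduction(0.2, 9.8, activity):.1%}")
```

The static term sets a ceiling: even with no events the saving cannot exceed p_dynamic / (p_static + p_dynamic), 98% with these numbers, which is why leakage reduction matters as much as sparsity.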

Thermal efficiency metrics have also gained prominence, with heat dissipation per computational unit becoming increasingly important for deployment scenarios with strict thermal constraints. Current neuromorphic implementations demonstrate thermal advantages with typical operating temperatures 15-30°C lower than conventional processors under comparable workloads.

Application-specific energy profiles provide perhaps the most relevant benchmarks for practical deployment. For real-time audio processing, neuromorphic solutions demonstrate energy consumption of 1-10 mW compared to 100-500 mW for conventional DSP approaches. Similarly, for continuous sensor data processing, neuromorphic implementations typically operate in the sub-100 mW range while achieving equivalent or superior signal detection performance.
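The practical meaning of these power ranges is easiest to see as battery life. This sketch converts average power into hours of continuous operation for a hypothetical 3.7 V, 200 mAh cell (740 mWh), using the endpoints of the ranges quoted above and ignoring the rest of the system, regulator losses, and battery derating.

```python
def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Ideal runtime: stored energy (mWh) divided by average power draw (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / avg_power_mw

# Hypothetical wearable cell: 200 mAh at 3.7 V = 740 mWh.
for label, p_mw in [("neuromorphic, 1 mW", 1.0), ("neuromorphic, 10 mW", 10.0),
                    ("DSP, 100 mW", 100.0), ("DSP, 500 mW", 500.0)]:
    hours = battery_life_hours(200, 3.7, p_mw)
    print(f"{label:<20} {hours:7.1f} h  ({hours / 24:.1f} days)")
```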

The industry is moving toward standardized energy efficiency reporting protocols that incorporate both static and dynamic power measurements across various signal processing scenarios. These comprehensive benchmarks will be essential for guiding future hardware optimizations and enabling fair comparisons between emerging neuromorphic architectures designed specifically for signal processing applications.

Standard benchmarking frameworks have emerged to facilitate consistent comparison across different neuromorphic platforms. These include the Signal Processing Neuromorphic Benchmark Suite (SPNBS) and the Neuromorphic Energy Efficiency Rating (NEER) index. The SPNBS specifically evaluates performance on common signal processing workloads including filtering, feature extraction, and pattern recognition, while the NEER provides a normalized score that accounts for both computational throughput and energy consumption.

Integration Challenges with Conventional Systems

The integration of neuromorphic hardware with conventional computing systems presents challenges that must be addressed to fully realize the potential of these brain-inspired architectures in signal processing applications. Traditional von Neumann architectures operate on fundamentally different principles from neuromorphic systems, creating an impedance mismatch at both the hardware and software levels. This architectural divergence necessitates complex interface solutions that can translate between the event-based processing paradigm of neuromorphic systems and the clock-driven, sequential processing of conventional systems.

Data format conversion represents a primary integration hurdle. Neuromorphic systems typically process information using spike-based representations, while conventional systems operate on clocked streams of fixed-point or floating-point samples. This discrepancy requires additional conversion layers that inevitably introduce latency and computational overhead, potentially negating some of the efficiency advantages that neuromorphic hardware offers for signal processing tasks.
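
One common way to bridge the two formats is send-on-delta (delta modulation) coding. An event is emitted only when the signal has moved by a fixed threshold since the last transmitted level. The Python sketch below is a minimal illustration of that one scheme (rate and latency coding are other options), not the conversion layer of any specific platform. The function names and the `threshold` value are hypothetical. The sketch shows both sides of the trade-off: a flat input produces no events, but the reconstruction is only accurate to within one threshold step, and large changes cost many events to transmit and decode.

```python
def delta_encode(samples, threshold):
    """Convert a sampled signal to (sample_index, +1/-1) events.

    An event is emitted each time the signal moves one `threshold` step away
    from the last reconstructed level, so flat segments produce no events.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    level = samples[0]
    events = []
    for i, x in enumerate(samples[1:], start=1):
        while x - level >= threshold:  # signal rose by at least one step
            level += threshold
            events.append((i, +1))
        while level - x >= threshold:  # signal fell by at least one step
            level -= threshold
            events.append((i, -1))
    return samples[0], events


def delta_decode(initial, events, n_samples, threshold):
    """Rebuild a staircase approximation of the signal from the events."""
    out, level, k = [], initial, 0
    for i in range(n_samples):
        while k < len(events) and events[k][0] == i:
            level += events[k][1] * threshold
            k += 1
        out.append(level)
    return out


samples = [0.0, 0.5, 1.0, 1.0, 0.2]
initial, events = delta_encode(samples, threshold=0.25)
decoded = delta_decode(initial, events, len(samples), threshold=0.25)
print(len(events), decoded)
```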

Power management across hybrid systems presents another substantial challenge. While neuromorphic hardware excels in energy efficiency for certain signal processing operations, the integration components themselves often consume significant power. Creating seamless power management strategies across these disparate computing paradigms requires sophisticated control systems that can optimize energy usage while maintaining performance requirements.
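
A rough power budget shows how interface overhead can cancel the core's savings, and why duty-cycling the bridge is one obvious management lever. In this minimal Python sketch, the component breakdown (core, bridge, idle host) and every milliwatt figure are hypothetical assumptions, chosen only to illustrate the accounting.

```python
# Hypothetical power budget (mW) for a neuromorphic core attached to a host via
# an interface bridge (e.g. an FPGA or serial link). All values are assumptions.

CONVENTIONAL_MW = 100.0  # DSP-only baseline for the same task
CORE_MW = 5.0            # neuromorphic core
HOST_IDLE_MW = 30.0      # host that must stay partially awake to consume results
BRIDGE_ACTIVE_MW = 80.0
BRIDGE_SLEEP_MW = 2.0


def bridge_power_mw(duty: float) -> float:
    """Average bridge power when it is active for a fraction `duty` of the time."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be in [0, 1]")
    return BRIDGE_ACTIVE_MW * duty + BRIDGE_SLEEP_MW * (1.0 - duty)


def net_saving_mw(duty: float) -> float:
    """Positive means the hybrid system uses less power than the DSP baseline."""
    hybrid = CORE_MW + bridge_power_mw(duty) + HOST_IDLE_MW
    return CONVENTIONAL_MW - hybrid


for duty in (1.0, 0.25):
    print(f"bridge duty {duty:.0%}: net saving {net_saving_mw(duty):+.1f} mW")
```

With the bridge always on, this example hybrid system draws more than the DSP alone despite its 5 mW core. A lower duty cycle generally requires buffering events, so it trades power for latency.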

Timing synchronization between event-driven neuromorphic components and clock-driven conventional systems introduces additional complexity. Signal processing applications often demand precise timing coordination, particularly in real-time scenarios. The asynchronous nature of neuromorphic computation can create unpredictable processing delays that must be carefully managed to maintain system integrity and performance guarantees.
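
A simple way to hand asynchronous events to a clock-driven host is to bin them by timestamp into fixed frames that the host reads once per period. The Python sketch below assumes events arrive as `(timestamp_us, address)` pairs in timestamp order. This is an assumption about the interface, not any device's actual format. The sketch also makes the cost explicit: an event delivered at the end of its frame waits up to one full frame period.

```python
from collections import defaultdict


def bin_events(events, frame_us):
    """Group (timestamp_us, address) events into frames of length frame_us.

    Frame k covers timestamps in [k * frame_us, (k + 1) * frame_us).
    """
    frames = defaultdict(list)
    for t_us, address in events:
        frames[t_us // frame_us].append(address)
    return dict(frames)


def delivery_delay_us(t_us, frame_us):
    """Extra wait if the host only reads a frame at its end boundary."""
    frame_end = (t_us // frame_us + 1) * frame_us
    return frame_end - t_us


events = [(120, 3), (950, 7), (1010, 3), (2999, 1)]
FRAME_US = 1000  # hypothetical 1 kHz host polling rate

print(bin_events(events, FRAME_US))
print([delivery_delay_us(t, FRAME_US) for t, _ in events])
```

Shrinking `FRAME_US` bounds the delay more tightly but wakes the host more often, so the frame length is itself a power/latency trade-off.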

Programming models for hybrid neuromorphic-conventional systems remain underdeveloped. Current software frameworks typically target either neuromorphic or conventional architectures exclusively, with limited support for heterogeneous computing environments. Developers face significant challenges in efficiently partitioning signal processing workloads across these different computational paradigms without specialized tools and abstractions.
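
Until hybrid toolchains mature, partitioning is often done by hand. The Python sketch below encodes one plausible heuristic, offered as an assumption rather than an established method. It places a pipeline stage on the neuromorphic device only if the stage's input is sparse and it tolerates approximate arithmetic. It then counts how many times data must cross between devices, since each crossing incurs a format-conversion cost. The `Stage` fields, the 0.2 activity threshold, and the example pipeline are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    activity: float         # fraction of time steps with non-zero input, 0..1
    needs_exact_math: bool  # e.g. bit-exact filtering, codecs, checksums


def partition(stages, max_activity=0.2):
    """Assign each stage to 'neuro' or 'host' with a simple sparsity rule."""
    return [
        "neuro" if s.activity <= max_activity and not s.needs_exact_math else "host"
        for s in stages
    ]


def boundary_crossings(placement):
    """Number of times consecutive stages run on different devices."""
    return sum(1 for a, b in zip(placement, placement[1:]) if a != b)


pipeline = [
    Stage("anti-alias FIR", activity=1.0, needs_exact_math=True),
    Stage("onset detection", activity=0.05, needs_exact_math=False),
    Stage("keyword classifier", activity=0.1, needs_exact_math=False),
    Stage("result encoding", activity=0.1, needs_exact_math=True),
]

placement = partition(pipeline)
print(list(zip((s.name for s in pipeline), placement)))
print("crossings:", boundary_crossings(placement))
```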

Scalability concerns also emerge when integrating neuromorphic hardware into larger systems. As signal processing demands grow, the complexity of managing the interaction between neuromorphic and conventional components grows rapidly. Ensuring that performance scales proportionally with system size requires sophisticated resource allocation and management strategies that are still evolving in this emerging field.

Standardization remains at a nascent stage, further complicating integration. The lack of established interfaces and protocols for neuromorphic-conventional communication forces many implementations to rely on custom solutions, limiting interoperability and increasing development costs for signal processing applications that could benefit from neuromorphic acceleration.
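
Address-Event Representation (AER) is the closest thing to a common convention. In AER, each spike is transmitted as the address of the neuron that fired, often together with a timestamp, but word layouts differ between devices. The Python sketch below packs one hypothetical 64-bit layout: a 40-bit microsecond timestamp, a 23-bit address, and a 1-bit polarity, stored little-endian. These field widths and the byte order are assumptions. They are exactly the details a standard interface would have to fix for two devices to interoperate.

```python
TS_BITS = 40    # hypothetical: microsecond timestamp (about 12.7 days before wrap)
ADDR_BITS = 23  # hypothetical: neuron or pixel address
# Remaining 1 bit: polarity (e.g. ON/OFF event). Total = 64 bits.


def pack_event(ts_us: int, address: int, polarity: int) -> bytes:
    """Pack one event into 8 little-endian bytes."""
    if not 0 <= ts_us < (1 << TS_BITS):
        raise ValueError("timestamp out of range")
    if not 0 <= address < (1 << ADDR_BITS):
        raise ValueError("address out of range")
    if polarity not in (0, 1):
        raise ValueError("polarity must be 0 or 1")
    word = (ts_us << (ADDR_BITS + 1)) | (address << 1) | polarity
    return word.to_bytes(8, "little")


def unpack_event(raw: bytes):
    """Inverse of pack_event: return (ts_us, address, polarity)."""
    word = int.from_bytes(raw, "little")
    polarity = word & 1
    address = (word >> 1) & ((1 << ADDR_BITS) - 1)
    ts_us = word >> (ADDR_BITS + 1)
    return ts_us, address, polarity


raw = pack_event(ts_us=1_234_567, address=4095, polarity=1)
print(len(raw), unpack_event(raw))
```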
