Real-time gesture recognition using event-based cameras and SNNs.

SEP 3, 2025 · 9 MIN READ

Event-based Vision Background and Objectives

Event-based vision represents a paradigm shift in visual sensing, moving away from traditional frame-based cameras toward neuromorphic sensors that mimic biological vision. Conventional cameras capture entire frames at fixed time intervals. Event-based cameras instead monitor each pixel asynchronously: a pixel emits an event (its coordinates, a timestamp, and a polarity) only when its log intensity changes by more than a set contrast threshold. This biomimetic approach yields microsecond temporal resolution, very high dynamic range (>120 dB), and much lower data rates and power consumption than frame-based sensing.
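To make the triggering rule concrete, the following is a minimal, idealized sketch that converts a sequence of intensity frames into events, in the spirit of frame-to-event simulators. It assumes noise-free pixels, a single symmetric contrast threshold, no refractory period, and it stamps each event with the frame time instead of interpolating. `generate_events` and `Event` are hypothetical names, not part of any sensor SDK.

```python
import math
from dataclasses import dataclass

@dataclass
class Event:
    x: int
    y: int
    t: float   # timestamp in seconds
    p: int     # polarity: +1 = brighter, -1 = darker

def generate_events(frames, timestamps, threshold=0.2, eps=1e-6):
    """Idealized event generation from a sequence of intensity frames.

    A pixel emits an event each time its log intensity moves `threshold`
    away from the level stored at its previous event.
    """
    height, width = len(frames[0]), len(frames[0][0])
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)] for y in range(height)]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                log_i = math.log(frame[y][x] + eps)
                # A large change can cross the threshold several times.
                while log_i - ref[y][x] >= threshold:
                    ref[y][x] += threshold
                    events.append(Event(x, y, t, +1))
                while ref[y][x] - log_i >= threshold:
                    ref[y][x] -= threshold
                    events.append(Event(x, y, t, -1))
    return events

# One row, two pixels; the right-hand pixel doubles in brightness.
frames = [[[1.0, 1.0]], [[1.0, 2.0]]]
print(len(generate_events(frames, [0.0, 0.001])))
```

Doubling the brightness raises log intensity by ln 2 ≈ 0.69, which crosses a 0.2 threshold three times, so the example prints 3 (all ON events from the right-hand pixel), while the unchanged pixel produces nothing. Working in log intensity is what gives these sensors their wide dynamic range: the same relative contrast triggers an event in dim and bright regions alike.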

The evolution of event-based vision began with pioneering work at Caltech in the early 1990s, leading to the development of the first silicon retina. Significant advancements occurred in the 2000s with the creation of the Dynamic Vision Sensor (DVS) at ETH Zurich, which laid the foundation for modern event cameras. Recent years have witnessed the commercialization of these sensors by companies like iniVation, Prophesee, and Samsung, gradually transitioning the technology from research laboratories to practical applications.

Spiking Neural Networks (SNNs) have emerged as a natural computational counterpart to event-based vision systems. These brain-inspired networks communicate through discrete spikes rather than continuous activations, which closely matches the sparse, temporal nature of event data. This compatibility makes an end-to-end neuromorphic pipeline possible, one that can preserve the sensor's efficiency advantages throughout the processing chain.
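As an illustration of spike-based computation, the sketch below simulates a single discrete-time leaky integrate-and-fire (LIF) neuron, one of the most common SNN neuron models. The time constant, threshold, hard reset, and the function name `lif_neuron` are assumptions chosen for illustration; SNN frameworks and neuromorphic chips each use their own parameterizations.

```python
import math

def lif_neuron(input_spikes, weights, tau=20.0, v_th=1.0, v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    input_spikes: one list of 0/1 values per time step (one value per input).
    weights: synaptic weight for each input.
    Returns the output spike train as a list of 0/1 values.
    """
    decay = math.exp(-dt / tau)  # membrane leak per time step
    v = v_reset
    output = []
    for spikes in input_spikes:
        # Leak, then add the weights of only those inputs that spiked this step.
        v = v * decay + sum(w for s, w in zip(spikes, weights) if s)
        if v >= v_th:
            output.append(1)
            v = v_reset  # hard reset after a spike
        else:
            output.append(0)
    return output

print(lif_neuron([[1], [1], [0], [1]], weights=[0.6]))
```

Two closely spaced input spikes push the membrane over threshold while an isolated spike does not, so the call prints `[0, 1, 0, 0]`. With a much shorter `tau`, the leak erases the first input before the second arrives and the neuron stays silent. This sensitivity to spike timing is what lets SNNs exploit the temporal structure of event data.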

The primary objective of integrating event-based cameras with SNNs for real-time gesture recognition is to develop ultra-low-latency, energy-efficient systems that recognize human movements quickly and accurately. This technology aims to overcome the limitations of traditional vision systems, particularly in challenging scenarios involving rapid movements, extreme lighting conditions, or power-constrained environments.

Specific technical goals include achieving sub-10ms response times for gesture recognition, reducing power consumption by at least an order of magnitude compared to conventional systems, and maintaining robust performance across diverse environmental conditions. The research also seeks to develop efficient event-to-spike encoding methods that preserve the temporal advantages of event data while enabling effective processing by SNNs.
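One widely used family of event-to-spike encodings simply bins events by time and polarity, so that each time bin becomes one input step for a clock-driven SNN. The sketch below assumes events arrive as `(x, y, t, p)` tuples with `t` in seconds and `p` in {+1, -1}; `events_to_spike_tensor` is a hypothetical helper, and binary (rather than count) spikes are an illustrative choice.

```python
def events_to_spike_tensor(events, width, height, t_start, t_end, n_bins):
    """Bin (x, y, t, p) events into binary spike frames.

    Output is indexed [bin][channel][y][x], with channel 0 = ON (p > 0)
    and channel 1 = OFF (p <= 0).
    """
    if n_bins <= 0 or t_end <= t_start:
        raise ValueError("need n_bins > 0 and t_end > t_start")
    bin_len = (t_end - t_start) / n_bins
    tensor = [[[[0] * width for _ in range(height)] for _ in range(2)]
              for _ in range(n_bins)]
    for x, y, t, p in events:
        if not (t_start <= t < t_end):
            continue  # outside the encoding window
        b = min(int((t - t_start) / bin_len), n_bins - 1)
        c = 0 if p > 0 else 1
        # Several events in the same cell collapse into a single spike.
        tensor[b][c][y][x] = 1
    return tensor

events = [(0, 0, 0.0, 1), (1, 0, 0.006, -1), (1, 0, 0.007, -1)]
spikes = events_to_spike_tensor(events, width=2, height=1,
                                t_start=0.0, t_end=0.01, n_bins=2)
```

The bin width is the key design parameter: every event in a bin is treated as simultaneous, so it bounds how quickly the network can react. For a sub-10ms response target, bins would need to be well below 10 ms, at the cost of more time steps per inference.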

Beyond technical objectives, this technology targets applications in augmented reality interfaces, automotive driver monitoring systems, human-robot interaction, and accessibility devices for individuals with mobility impairments. The ultimate vision is to enable more natural, responsive, and intuitive interaction between humans and machines through gesture-based interfaces that operate with the speed and efficiency of biological systems.

Market Analysis for Gesture Recognition Systems

The gesture recognition market is experiencing significant growth, driven by increasing adoption across multiple sectors including consumer electronics, automotive, healthcare, and gaming. The global gesture recognition market was valued at approximately 12.7 billion USD in 2022 and is projected to reach 51.3 billion USD by 2030, growing at a CAGR of around 19.2% during the forecast period. This robust growth trajectory underscores the expanding commercial viability of advanced gesture recognition technologies.
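The quoted growth rate can be checked against the two market sizes using the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch assumes an eight-year horizon from 2022 to 2030, as stated above; `cagr` is an illustrative helper.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(12.7, 51.3, 2030 - 2022)  # USD billions
print(f"Implied CAGR 2022-2030: {rate:.1%}")
```

This prints about 19.1%, consistent with the quoted figure of roughly 19.2%. Small differences of this kind usually come from rounding the market sizes or from the exact base year used.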

Event-based cameras coupled with Spiking Neural Networks (SNNs) represent a particularly promising segment within this market. The unique value proposition of this technology combination lies in its ultra-low latency and power efficiency, addressing critical limitations of conventional frame-based systems. These advantages position event-based gesture recognition as especially suitable for mobile devices, wearables, and IoT applications where power consumption and real-time performance are paramount.

Consumer electronics currently dominates the gesture recognition market, accounting for approximately 38% of the total market share. Smartphones, tablets, and smart home devices increasingly incorporate gesture control interfaces to enhance user experience. The automotive sector follows as the second-largest market segment, with gesture-controlled infotainment systems and driver monitoring applications gaining traction in premium vehicle models.

Market research indicates that North America currently leads the global gesture recognition market with a 35% share, followed by Europe (28%) and Asia-Pacific (25%). However, the Asia-Pacific region is expected to witness the highest growth rate over the next five years, driven by rapid technological adoption in countries like China, Japan, and South Korea.

Key market drivers include increasing demand for touchless interfaces (accelerated by health concerns following the COVID-19 pandemic), growing integration of gesture recognition in AR/VR applications, and rising investment in autonomous vehicles. The event-based camera market specifically is projected to grow at a CAGR of 25% through 2028, outpacing the broader gesture recognition market.

Customer preference analysis reveals growing demand for systems with higher accuracy, lower latency, and reduced computational requirements, all areas in which event-based cameras and SNNs are expected to outperform traditional approaches. Enterprise surveys indicate that 72% of businesses implementing gesture recognition technology prioritize response time as a critical factor, while 65% emphasize power efficiency.

Market challenges include high implementation costs, technical complexity in developing robust algorithms for diverse environmental conditions, and competition from alternative interface technologies such as voice recognition. Nevertheless, the unique advantages of event-based cameras and SNNs position this technology combination favorably within the competitive landscape, particularly for applications requiring real-time performance with minimal power consumption.

Technical Challenges in Event-based Cameras and SNNs

Event-based cameras and Spiking Neural Networks (SNNs) face significant technical challenges that impede their widespread adoption for real-time gesture recognition applications. Event-based cameras, unlike conventional frame-based cameras, generate asynchronous events only when pixel intensity changes occur, resulting in sparse data representation. This fundamental difference introduces unique processing requirements that traditional computer vision algorithms cannot adequately address.

The primary challenge with event-based cameras lies in their data format and interpretation. These sensors produce a continuous stream of events rather than discrete frames, which requires specialized algorithms for feature extraction and pattern recognition. Their high temporal resolution comes at the cost of more complex data handling, since events must be processed in real time while preserving their temporal relationships.
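One common way to preserve the temporal relationships between events while still producing a fixed-size input is a time surface: each pixel stores the timestamp of its most recent event, and the surface is read out with an exponential decay so that recent activity dominates. The class below is a minimal sketch; the decay constant `tau` and the class name are illustrative assumptions, and practical implementations often keep a separate surface per polarity.

```python
import math

class TimeSurface:
    """Per-pixel timestamp of the latest event, read out with exponential decay."""

    def __init__(self, width, height, tau=0.05):
        self.tau = tau  # decay constant in seconds
        self.last_t = [[-math.inf] * width for _ in range(height)]
        self._prev_t = -math.inf

    def process(self, events):
        """Consume (x, y, t, p) events, which must arrive in timestamp order."""
        for x, y, t, _p in events:
            if t < self._prev_t:
                raise ValueError("events must be sorted by timestamp")
            self.last_t[y][x] = t
            self._prev_t = t

    def value(self, x, y, t_now):
        """1.0 for an event at t_now, decaying toward 0; 0.0 if the pixel never fired."""
        return math.exp(-(t_now - self.last_t[y][x]) / self.tau)
```

Because each event only overwrites one timestamp, the update cost is constant per event, and the surface can be sampled at any moment rather than at fixed frame times.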

Signal-to-noise ratio presents another significant hurdle. Event-based cameras often generate noise events that can be difficult to distinguish from meaningful gesture-related events, particularly in varying lighting conditions or during rapid movements. This necessitates robust filtering mechanisms that preserve critical information while eliminating spurious events.
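A simple and widely used remedy is a background-activity (nearest-neighbor correlation) filter. An event is kept only if one of its neighboring pixels fired shortly before it, because real edges tend to produce spatially and temporally correlated events while noise events are mostly isolated. The sketch below assumes time-sorted `(x, y, t, p)` tuples and an 8-pixel neighborhood; the function name and the 10 ms default window are illustrative choices.

```python
import math

def background_activity_filter(events, width, height, dt=0.01):
    """Drop events with no neighboring event in the preceding `dt` seconds.

    `events` must be sorted by timestamp.
    """
    last_t = [[-math.inf] * width for _ in range(height)]
    kept = []
    for x, y, t, p in events:
        supported = False
        for ny in range(max(0, y - 1), min(height, y + 2)):
            for nx in range(max(0, x - 1), min(width, x + 2)):
                # A pixel's own history does not count as support.
                if (nx, ny) != (x, y) and t - last_t[ny][nx] <= dt:
                    supported = True
        if supported:
            kept.append((x, y, t, p))
        # Every event, kept or not, can support later neighbors.
        last_t[y][x] = t
    return kept

events = [(5, 5, 0.0, 1), (6, 5, 0.002, 1), (0, 0, 0.5, 1)]
print(background_activity_filter(events, width=10, height=10))
```

The example keeps only `(6, 5, 0.002, 1)`, and it also shows the trade-off: the first event of a genuine edge has no earlier neighbor and is dropped. A window that is too short removes real signal, while one that is too long lets noise through.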

For SNNs, the neuromorphic computing paradigm introduces its own set of challenges. Traditional deep learning frameworks and hardware accelerators are optimized for conventional neural networks, not the spike-based computation of SNNs. The lack of mature development tools, frameworks, and hardware support significantly hampers research progress and practical implementations.

Training SNNs effectively remains difficult because spike generation is non-differentiable. A spike is a step function of the membrane potential, so its derivative is zero almost everywhere and undefined at the threshold, which leaves standard backpropagation without a useful gradient. Alternative training methods, such as surrogate-gradient approaches or conversion from pre-trained ANNs, introduce approximations that can compromise recognition accuracy.
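The surrogate-gradient idea can be shown on a single neuron: the forward pass keeps the hard threshold, while the backward pass substitutes a smooth pseudo-derivative. The sketch below uses a fast-sigmoid surrogate and trains one weight so that the neuron learns to fire. The slope, learning rate, squared-error loss, and function names are assumptions for illustration, not a specific framework's API.

```python
def spike_forward(v, v_th=1.0):
    """Forward pass: Heaviside step. Its true derivative is zero almost everywhere."""
    return 1.0 if v >= v_th else 0.0

def spike_surrogate_grad(v, v_th=1.0, slope=10.0):
    """Backward pass: fast-sigmoid pseudo-derivative, largest near the threshold."""
    return 1.0 / (1.0 + slope * abs(v - v_th)) ** 2

def train_single_weight(x=1.0, w=0.5, target=1.0, lr=0.5, steps=20):
    """Gradient descent on L = (spike - target)^2 for a neuron with v = w * x."""
    for _ in range(steps):
        v = w * x
        s = spike_forward(v)
        # Chain rule, with the surrogate standing in for d(spike)/dv.
        grad_w = 2.0 * (s - target) * spike_surrogate_grad(v) * x
        w -= lr * grad_w
    return w, spike_forward(w * x)

w, s = train_single_weight()
print(f"final weight {w:.3f}, spike = {s}")
```

Starting below threshold, the weight climbs over a handful of updates until the neuron fires, after which the error, and therefore the gradient, is zero. With the true derivative of the step function, `grad_w` would be zero at every step and the weight would never move.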

Energy efficiency, while theoretically superior in SNNs, faces practical implementation challenges. Current neuromorphic hardware implementations struggle to balance power efficiency with computational capabilities required for complex gesture recognition tasks. The promise of ultra-low power operation often comes at the cost of reduced processing capability or increased latency.

Integration challenges between event-based cameras and SNNs further complicate system development. The interface between sensor and processor requires careful design to preserve the temporal advantages of event data while enabling efficient processing. Standardization issues and proprietary hardware interfaces limit interoperability and increase development complexity.

Lastly, the real-time processing requirement for gesture recognition applications imposes strict latency constraints. While event-based approaches theoretically offer lower latency, practical implementations must overcome computational bottlenecks and optimize the entire processing pipeline to achieve truly responsive gesture recognition systems.

Current Approaches to Real-time Gesture Recognition

01 Event-based camera architecture for real-time processing

Event-based cameras operate fundamentally differently from traditional frame-based cameras by only capturing changes in pixel intensity as they occur. This architecture enables high temporal resolution, low latency, and reduced data bandwidth, making them ideal for real-time applications. These cameras generate asynchronous events that can be directly processed by SNNs, creating an efficient pipeline for real-time recognition tasks without the need for frame reconstruction or extensive preprocessing.

- Event-based camera architecture for real-time processing: Event-based cameras capture changes in pixel intensity rather than full frames, generating sparse data only when changes occur. This architecture enables efficient real-time processing with reduced latency and power consumption compared to traditional frame-based cameras. The asynchronous nature of event data aligns well with the temporal processing capabilities of spiking neural networks, making them ideal for time-critical applications like autonomous navigation and object tracking.

- SNN implementation for event data processing: Spiking Neural Networks (SNNs) process information through discrete spikes, mimicking biological neural systems. When applied to event-based vision data, SNNs can efficiently process temporal information with minimal computational resources. These networks use various neuron models and learning algorithms specifically adapted for sparse, asynchronous event data, enabling energy-efficient processing on neuromorphic hardware platforms while maintaining high accuracy for recognition tasks.

- Real-time object recognition and tracking systems: The combination of event-based cameras and SNNs enables robust real-time object recognition and tracking even in challenging conditions like high-speed motion and varying lighting. These systems can detect and classify objects with minimal latency by processing only relevant visual changes. The sparse nature of event data allows for efficient feature extraction and pattern recognition, making these systems particularly effective for applications requiring immediate response such as autonomous vehicles, robotics, and surveillance.

- Hardware acceleration for neuromorphic computing: Specialized hardware architectures have been developed to accelerate SNN processing for event-based vision applications. These neuromorphic computing platforms implement parallel processing elements that efficiently handle the sparse, asynchronous nature of event data and neural spikes. By closely matching the computational model to the data characteristics, these systems achieve significant improvements in processing speed, energy efficiency, and real-time performance compared to conventional computing architectures running on CPUs or GPUs.

- Learning algorithms and training methodologies for event-based SNNs: Novel learning algorithms have been developed specifically for training SNNs on event-based data. These include spike-timing-dependent plasticity (STDP), backpropagation-based approaches adapted for spiking neurons, and hybrid training methods. The training methodologies address challenges unique to event-based data such as temporal correlation, sparse representation, and continuous learning. These approaches enable SNNs to effectively learn from event streams and achieve high recognition accuracy while maintaining the efficiency advantages of neuromorphic computing.
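As a concrete example of the STDP rule mentioned above, the sketch below implements the classic pair-based form: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened when it follows, with an exponential dependence on the timing difference. The amplitudes, 20 ms time constants, all-to-all spike pairing, and weight bounds are illustrative assumptions.

```python
import math

def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=0.02, tau_minus=0.02):
    """Weight change for one pre/post spike pair (times in seconds)."""
    dt = t_post - t_pre
    if dt > 0:   # pre before post: potentiation
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post before pre: depression
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0, **params):
    """Sum the updates over all spike pairs, then clip the weight to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_pre, t_post, **params)
    return min(max(w, w_min), w_max)
```

Because the rule only needs local spike times, it suits on-chip and continual learning, although in practice gesture-recognition accuracy has generally been higher with the backpropagation-based methods listed above.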

02 SNN implementation for event data processing

Spiking Neural Networks are designed to process temporal information in a manner similar to biological neurons, making them naturally suited for event-based data. These networks use sparse, binary spike events for computation, which aligns perfectly with the output format of event cameras. SNN implementations can include various neuron models, learning rules, and network architectures specifically optimized for processing the asynchronous event streams, enabling efficient pattern recognition while maintaining temporal precision.

03 Hardware acceleration for real-time SNN inference

Specialized hardware accelerators are being developed to enable real-time SNN inference with event camera data. These include neuromorphic processors, FPGA implementations, and custom ASIC designs that exploit the sparse and event-driven nature of both the input data and network computation. Such hardware solutions significantly reduce power consumption and latency compared to traditional computing architectures, making them suitable for edge deployment in applications requiring immediate response times.

04 Learning algorithms for event-based recognition

Novel learning algorithms have been developed specifically for training SNNs on event-based data. These include spike-timing-dependent plasticity (STDP), backpropagation through time adaptations for spiking networks, and hybrid approaches that combine traditional deep learning with spiking neuron models. These algorithms enable the networks to extract meaningful spatiotemporal patterns from event streams and perform classification, detection, or tracking tasks with high accuracy while maintaining the temporal advantages of the event-based approach.

05 Applications of event-based camera and SNN systems

The combination of event-based cameras and SNNs enables numerous real-time applications including high-speed object tracking, gesture recognition, autonomous navigation, industrial inspection, and augmented reality. These systems excel in challenging conditions such as high dynamic range environments, fast motion scenarios, and situations requiring ultra-low latency responses. The energy efficiency of this approach also makes it particularly valuable for battery-powered devices and continuous monitoring applications where traditional vision systems would be impractical.

Leading Companies in Event-based Vision Technology

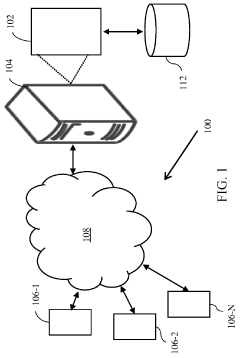

Real-time gesture recognition using event-based cameras and Spiking Neural Networks (SNNs) is emerging as a transformative technology in the early commercialization phase. The market is projected to grow significantly, driven by applications in AR/VR, automotive interfaces, and consumer electronics. Key players include technology giants such as Apple, Sony, NVIDIA, and Huawei, which are investing heavily in neuromorphic computing and event-based vision systems. Academic institutions such as National University of Singapore and Xidian University are contributing fundamental research, while specialized companies such as CelePixel Technology and SOS LAB are developing innovative hardware solutions. Commercial implementations are beginning to appear, although challenges in standardization and processing efficiency remain as the ecosystem continues to evolve.

Sony Group Corp.

Technical Solution: Sony has pioneered event-based vision technology with their proprietary event-based image sensors and complementary SNN processing architecture. Their approach integrates custom silicon with neuromorphic computing principles to achieve ultra-low latency gesture recognition (under 5ms)[2]. Sony's event cameras feature microsecond-level temporal resolution and dynamic range exceeding 120dB, capturing motion details invisible to conventional cameras. Their SNN implementation utilizes a sparse computing model that processes only relevant pixel changes, reducing computational requirements by up to 90% compared to traditional CNNs[4]. Sony has developed specialized temporal encoding schemes that convert event streams into efficient spike trains, preserving motion trajectory information critical for gesture recognition. Their system incorporates adaptive thresholding mechanisms that automatically adjust to varying lighting conditions, enabling consistent performance across diverse environments. Recent iterations include on-sensor preprocessing that further reduces data bandwidth requirements and power consumption.

Strengths: Vertical integration of sensor hardware and processing algorithms provides optimized performance; industry-leading sensor technology with superior temporal resolution; extensive experience in consumer electronics applications. Weaknesses: Proprietary technology ecosystem may limit third-party development; higher component costs compared to conventional camera solutions; relatively closed development platform.

GoerTek Technology Co., Ltd.

Technical Solution: GoerTek has developed a specialized event-based gesture recognition system targeting wearable and AR/VR applications. Their solution combines compact event camera modules with ultra-low-power neuromorphic processors optimized for continuous operation in battery-powered devices. GoerTek's approach utilizes a sparse computing architecture that processes only relevant pixel changes, reducing computational requirements by up to 95% compared to frame-based methods[9]. Their SNN implementation features specialized temporal receptive fields tuned to human hand movement dynamics, enabling accurate recognition of both static poses and dynamic gestures with latencies under 20ms. GoerTek has developed proprietary event preprocessing algorithms that effectively filter background noise while preserving subtle motion cues critical for fine gesture discrimination. Their complete solution includes a gesture vocabulary optimization framework that allows application-specific customization while maintaining high recognition accuracy (typically exceeding 92% for a 15-gesture set). GoerTek's system supports both discrete command gestures and continuous tracking modes, enabling natural interaction paradigms for next-generation user interfaces.

Strengths: Specialized expertise in miniaturized hardware for wearable applications; excellent power efficiency (typically under 100mW for continuous operation); optimized for integration with audio processing for multimodal interfaces. Weaknesses: More limited recognition vocabulary compared to some competitors; less robust performance in highly dynamic lighting environments; narrower application focus primarily on consumer electronics.

Key Patents in Event-based Camera and SNN Integration

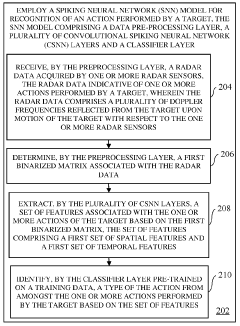

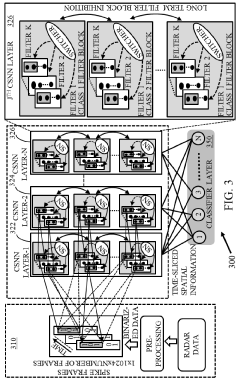

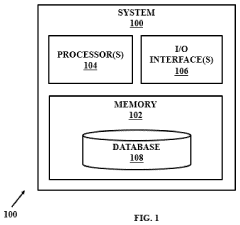

System and method for real-time radar-based action recognition using spiking neural network(SNN)

Patent · Active · IN202021021689A

Innovation

- The implementation of a Spiking Neural Network (SNN) model with a data pre-processing layer, Convolutional Spiking Neural Network (CSNN) layers, and a Classifier layer for real-time radar-based action recognition, capable of learning both spatial and temporal features from radar data, allowing for efficient deployment on edge devices and reducing data transmission needs.

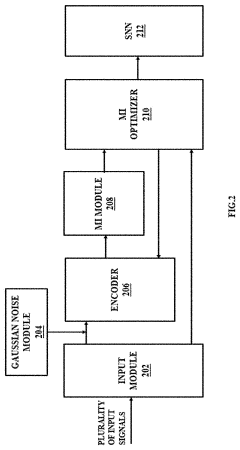

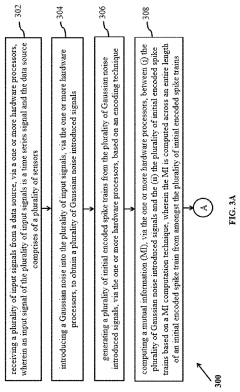

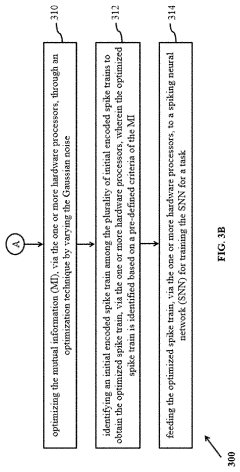

Method and system for optimized spike encoding for spiking neural networks

Patent · Active · US20220222522A1

Innovation

- A method and system that introduce Gaussian noise into input signals to generate optimized spike trains by computing and optimizing mutual information, using a system with processors and modules for encoding, mutual information computation, and optimization, to identify and feed optimized spike trains to SNNs for training.

Power Efficiency Considerations for Edge Deployment

Power efficiency is a critical factor for deploying real-time gesture recognition systems using event-based cameras and Spiking Neural Networks (SNNs) at the edge. Traditional vision systems based on frame-based cameras and conventional deep learning models typically consume substantial power, making them unsuitable for battery-powered edge devices with limited energy resources. Event-based cameras offer an inherent advantage in this regard by only capturing changes in the scene, significantly reducing data throughput and processing requirements.

The power consumption profile of event-based camera systems can be divided into three main components: sensor operation, data transmission, and neural processing. Event-based cameras such as Dynamic Vision Sensors (DVS) consume approximately 5-30 mW during operation, compared with 150-300 mW for conventional cameras. Across these ranges, that is a reduction of roughly 80-98% at the sensing stage alone, depending on which devices are compared, which provides a solid foundation for energy-efficient gesture recognition systems.
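The sensing-stage saving implied by the quoted power ranges can be bounded directly: the worst case pairs the most power-hungry event sensor with the leanest frame camera, and the best case pairs the reverse. The helper below is a hypothetical illustration that uses only the figures stated above.

```python
def reduction_range(event_mw, frame_mw):
    """Worst- and best-case fractional power saving between two (low, high) ranges in mW."""
    worst = 1.0 - event_mw[1] / frame_mw[0]  # hungriest event sensor vs. leanest frame camera
    best = 1.0 - event_mw[0] / frame_mw[1]   # leanest event sensor vs. hungriest frame camera
    return worst, best

worst, best = reduction_range((5.0, 30.0), (150.0, 300.0))
print(f"{worst:.0%} to {best:.0%}")
```

With 5-30 mW against 150-300 mW, this prints `80% to 98%`.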

Data transmission between the sensor and processing unit constitutes another significant power drain in edge systems. Event-based data representation drastically reduces bandwidth requirements: typical event rates in gesture recognition scenarios range from 10k to 100k events per second, whereas a frame-based sensor reads out millions of pixel values per second (a 640 × 480 sensor at 30 fps produces about 9.2 million). This sparse representation translates to power savings of 70-90% in the communication layer, depending on the complexity of the gestures being recognized.
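A back-of-the-envelope comparison of raw link bandwidth makes the difference concrete. The sketch assumes 64 bits per event (actual encodings vary, and vendor formats compress events further) and an uncompressed 8-bit, 640 × 480 frame sensor running at 30 fps. Both are illustrative assumptions, and link power does not scale exactly with bit rate.

```python
def event_bandwidth_bps(events_per_s, bits_per_event=64):
    """Raw bit rate of an event stream, assuming a fixed-size event word."""
    return events_per_s * bits_per_event

def frame_bandwidth_bps(width, height, fps, bits_per_pixel=8):
    """Raw bit rate of an uncompressed frame-based sensor."""
    return width * height * fps * bits_per_pixel

frame_bps = frame_bandwidth_bps(640, 480, 30)  # 73,728,000 bit/s
for rate in (10_000, 100_000):
    event_bps = event_bandwidth_bps(rate)
    print(f"{rate} ev/s: {event_bps} bit/s, "
          f"{1 - event_bps / frame_bps:.1%} less than VGA at 30 fps")
```

Under these assumptions, the event stream needs roughly 91-99% less raw bandwidth (6.4 Mbit/s at 100k events per second versus about 73.7 Mbit/s), which is the same order as the communication-layer savings quoted above. During fast or cluttered motion, event rates can rise far higher and the advantage shrinks.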

The neural processing component relies on SNNs, which can be substantially more power-efficient than conventional artificial neural networks when activity is sparse. SNNs process information through discrete spikes rather than continuous values, so computation happens only when a spike arrives. SNN implementations on neuromorphic chips such as Intel's Loihi or IBM's TrueNorth have been reported to be 1000x-10000x more energy-efficient than GPU implementations of conventional deep learning models on comparable recognition tasks.
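Where the savings come from can be sketched by counting operations for one fully connected layer: a dense ANN layer performs a multiply-accumulate (MAC) for every weight, whereas an event-driven SNN layer only adds the weights of inputs that spiked (an accumulate, AC), but repeats this over several time steps. The per-operation energies below are commonly quoted 45 nm estimates for 32-bit floating-point multiply-plus-add and add alone. They, the layer size, the 5% spike rate, and the 10 time steps are all assumptions for illustration.

```python
def dense_macs(n_in, n_out):
    """A dense layer performs one multiply-accumulate per weight per inference."""
    return n_in * n_out

def event_driven_acs(active_inputs, n_out):
    """An event-driven layer adds one weight row per input that spiked."""
    return active_inputs * n_out

# Assumed per-operation energies in joules (32-bit float, 45 nm estimates).
E_MAC_J = 4.6e-12
E_AC_J = 0.9e-12

n_in, n_out = 1024, 256
time_steps = 10        # hypothetical SNN time steps per inference
active_per_step = 51   # hypothetical: about 5% of inputs spike each step

ann_energy = dense_macs(n_in, n_out) * E_MAC_J
snn_energy = event_driven_acs(active_per_step, n_out) * time_steps * E_AC_J
print(f"ANN {ann_energy * 1e6:.3f} uJ, SNN {snn_energy * 1e6:.3f} uJ, "
      f"ratio {ann_energy / snn_energy:.1f}x")
```

Operation counts alone give roughly a 10x advantage in this toy setting, and the gap narrows quickly as spike rates or time steps grow. Larger published figures usually also reflect hardware factors, such as reduced memory traffic, that this sketch ignores.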

Recent advancements in neuromorphic hardware design have further improved power efficiency. For instance, specialized SNN accelerators can now perform gesture recognition tasks at sub-100mW power levels, enabling continuous operation on battery-powered devices for extended periods. Techniques such as sparse event processing, dynamic threshold adaptation, and power-aware network pruning have contributed to these improvements, reducing the energy per inference by orders of magnitude.

For practical edge deployment, system-level optimizations are equally important. These include duty cycling, where the system alternates between active and sleep modes based on activity detection; adaptive sampling rates that adjust the temporal resolution based on gesture complexity; and hierarchical processing architectures that filter simple gestures with minimal power before engaging more complex recognition pathways. These approaches can further reduce average power consumption by 40-60% in real-world usage scenarios.
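The effect of duty cycling can be estimated with a time-weighted average of active and sleep power, and a cheap event-rate detector can decide when to wake the full recognizer. All figures and function names in the sketch below are hypothetical: 100 mW while active (the upper bound mentioned for current SNN accelerators), 5 mW asleep, active half the time, and a 1000 mWh battery.

```python
def average_power_mw(p_active_mw, p_sleep_mw, active_fraction):
    """Time-weighted average power of a system active for `active_fraction` of the time."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * p_active_mw + (1.0 - active_fraction) * p_sleep_mw

def battery_life_hours(capacity_mwh, avg_power_mw):
    return capacity_mwh / avg_power_mw

def should_wake(event_count, window_s, rate_threshold_hz):
    """Cheap activity detector: wake the full recognizer only when the event rate is high."""
    return event_count / window_s >= rate_threshold_hz

avg = average_power_mw(100.0, 5.0, 0.5)
print(avg, battery_life_hours(1000.0, avg), battery_life_hours(1000.0, 100.0))
```

In this example, the average drops from 100 mW to 52.5 mW, a 47.5% reduction that falls within the 40-60% range quoted above, and battery life roughly doubles from 10 h to about 19 h. The achievable saving depends mostly on how often gestures actually occur and on how low the sleep-mode power can be driven.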

The power consumption profile of event-based camera systems can be divided into three main components: sensor operation, data transmission, and neural processing. Event-based cameras like Dynamic Vision Sensors (DVS) consume approximately 5-30mW during operation, compared to conventional cameras that may require 150-300mW. This represents a power reduction of up to 95% at the sensing stage alone, providing a solid foundation for energy-efficient gesture recognition systems.

Data transmission between the sensor and the processing unit is another significant power drain in edge systems. Event-based output drastically reduces bandwidth requirements: typical event rates in gesture recognition scenarios range from 10k to 100k events per second, whereas a frame-based camera streams millions of pixel values per second regardless of scene activity. This sparse representation translates into power savings of 70-90% in the communication layer, depending on the complexity of the gestures being recognized.
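
To make the bandwidth argument concrete, the sketch below compares raw data rates under stated assumptions: each event is packed into an uncompressed 64-bit word (x, y, polarity, timestamp), and the frame camera is a hypothetical 640x480, 8-bit grayscale sensor at 30 fps. The function names and parameters are illustrative only, not part of any sensor SDK.

```python
# Rough comparison of raw data rates: event stream vs frame stream.
# All parameters are illustrative assumptions, not measurements.


def event_stream_bps(events_per_sec: float, bits_per_event: int = 64) -> float:
    """Bits per second for an address-event stream.

    Assumes each event (x, y, polarity, timestamp) is packed into a fixed,
    uncompressed word of bits_per_event bits.
    """
    return events_per_sec * bits_per_event


def frame_stream_bps(width: int, height: int, fps: float, bits_per_pixel: int = 8) -> float:
    """Bits per second for an uncompressed grayscale frame stream."""
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    frames = frame_stream_bps(640, 480, 30)  # assumed VGA sensor at 30 fps, 8-bit pixels
    for rate in (10_000, 100_000):           # event-rate range quoted in the text
        events = event_stream_bps(rate)
        saving = 1 - events / frames
        print(f"{rate:>7} ev/s: {events / 1e6:.2f} Mbit/s vs "
              f"{frames / 1e6:.2f} Mbit/s for frames ({saving:.0%} less raw data)")
```

Under these assumptions the frame stream is about 73.7 Mbit/s, while 10k-100k events per second amount to 0.64-6.4 Mbit/s, roughly 91-99% less raw data. Link power does not scale exactly with bit rate (protocol overhead and idle power remain), which may help explain why the 70-90% communication-layer figure is lower than the raw data reduction.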

The neural processing component relies on SNNs, which can be considerably more power-efficient than conventional artificial neural networks. SNNs process information through discrete spikes rather than continuous values, so computation is triggered only when spikes arrive and idle neurons consume little energy. Implementations of SNNs on neuromorphic chips such as Intel's Loihi or IBM's TrueNorth have been reported to achieve 1000x-10000x better power efficiency than GPU implementations of conventional deep learning models on comparable recognition tasks.
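
The idea that spiking computation is driven by input activity can be illustrated with a minimal event-driven leaky integrate-and-fire (LIF) layer. This is a hypothetical pure-Python sketch, not Loihi or TrueNorth code: the class name, the 20 ms membrane time constant, and the unit threshold are assumptions. State is updated only when an input spike arrives, and the leak over the idle interval is applied in closed form.

```python
import math


class EventDrivenLIFLayer:
    """Hypothetical event-driven leaky integrate-and-fire (LIF) layer.

    weights[i][j] is the synaptic weight from input i to output neuron j.
    State is touched only when an input spike arrives; the membrane leak over
    the idle interval is applied lazily as exp(-dt / tau).
    """

    def __init__(self, weights, tau=20e-3, threshold=1.0):
        self.weights = weights          # dense n_in x n_out list of lists
        self.tau = tau                  # membrane time constant in seconds (assumed)
        self.threshold = threshold      # firing threshold (assumed)
        self.v = [0.0] * len(weights[0])
        self.last_t = 0.0
        self.synaptic_ops = 0           # one op per weight read on a spike

    def on_spike(self, t, i):
        """Process one input spike from input i at time t; return firing neuron ids."""
        decay = math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        fired = []
        # The weight row is dense, so every output neuron is decayed here.
        for j, w in enumerate(self.weights[i]):
            self.v[j] = self.v[j] * decay + w   # leak over the gap, then integrate
            self.synaptic_ops += 1
            if self.v[j] >= self.threshold:
                fired.append(j)
                self.v[j] = 0.0                  # reset to rest after firing
        return fired


if __name__ == "__main__":
    layer = EventDrivenLIFLayer([[0.6, 0.2], [0.6, 0.9]])
    for t, i in [(0.001, 0), (0.002, 1), (0.050, 0)]:
        print(f"t={t * 1000:.0f} ms, input {i} -> outputs {layer.on_spike(t, i)}")
    print("synaptic ops:", layer.synaptic_ops)
```

In this run, three input spikes cost six synaptic operations, and only the second spike drives both outputs over threshold. A clock-driven simulation of the same 50 ms at 1 ms steps would update all four synapses on every step (about 200 operations) whether or not anything happened, which is the sparsity advantage described above.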

Recent advancements in neuromorphic hardware design have further improved power efficiency. For instance, specialized SNN accelerators can now perform gesture recognition tasks at sub-100mW power levels, enabling continuous operation on battery-powered devices for extended periods. Techniques such as sparse event processing, dynamic threshold adaptation, and power-aware network pruning have contributed to these improvements, reducing the energy per inference by orders of magnitude.
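
Dynamic threshold adaptation can take several forms. One common variant, sketched below under assumed parameters, raises a neuron's firing threshold by a fixed amount `beta` after every spike and lets it relax back toward its baseline `theta0`. The function `simulate` and all of its defaults are hypothetical. Because each output spike triggers downstream synaptic work, fewer spikes for the same input means less computation.

```python
import math


def simulate(inputs, dt=1e-3, tau_v=20e-3, theta0=1.0, beta=0.0, tau_theta=100e-3):
    """Count spikes of one LIF neuron driven by a per-step input sequence.

    beta = 0 gives a fixed threshold. With beta > 0 the threshold jumps by beta
    after each spike and decays back toward theta0 with time constant tau_theta.
    All parameter values are illustrative assumptions.
    """
    a_v = math.exp(-dt / tau_v)          # per-step membrane decay factor
    a_th = math.exp(-dt / tau_theta)     # per-step threshold relaxation factor
    v, theta, spikes = 0.0, theta0, 0
    for x in inputs:
        v = v * a_v + x                              # leak and integrate
        theta = theta0 + (theta - theta0) * a_th     # threshold relaxes toward baseline
        if v >= theta:
            spikes += 1
            v = 0.0                                  # reset membrane
            theta += beta                            # adapt: harder to fire next time
    return spikes


if __name__ == "__main__":
    drive = [0.3] * 200  # 200 ms of constant input at 1 ms steps
    print("fixed threshold:   ", simulate(drive))
    print("adaptive threshold:", simulate(drive, beta=0.5))
```

With a constant input of 0.3 per step, the fixed-threshold neuron fires every fourth step (50 spikes in 200 steps), whereas the adaptive neuron fires progressively less often as its threshold climbs.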

For practical edge deployment, system-level optimizations are equally important. These include duty cycling, where the system alternates between active and sleep modes based on activity detection; adaptive sampling rates that adjust the temporal resolution based on gesture complexity; and hierarchical processing architectures that filter simple gestures with minimal power before engaging more complex recognition pathways. These approaches can further reduce average power consumption by 40-60% in real-world usage scenarios.
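
The arithmetic behind duty cycling and hierarchical (cascaded) processing is simple enough to sketch directly. The helper names and all power figures below are hypothetical placeholders chosen for illustration, not measurements of any particular device.

```python
def duty_cycled_power(p_active_mw, p_sleep_mw, active_fraction):
    """Average power when the system is active for active_fraction of the time."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be in [0, 1]")
    return active_fraction * p_active_mw + (1 - active_fraction) * p_sleep_mw


def cascade_power(p_stage1_mw, p_stage2_mw, escalation_rate):
    """Average processing power of a two-stage cascade.

    Stage 1 (a cheap activity/gesture detector) always runs; stage 2 (the full
    recognizer) runs only for the fraction escalation_rate of inputs that
    stage 1 passes on.
    """
    if not 0.0 <= escalation_rate <= 1.0:
        raise ValueError("escalation_rate must be in [0, 1]")
    return p_stage1_mw + escalation_rate * p_stage2_mw


if __name__ == "__main__":
    always_on = 80.0                               # hypothetical recognizer power, mW
    dc = duty_cycled_power(always_on, 2.0, 0.5)    # awake half the time, 2 mW asleep
    hc = cascade_power(5.0, always_on, 0.4)        # 5 mW detector escalates 40% of inputs
    for name, p in [("duty cycling", dc), ("cascade", hc)]:
        print(f"{name}: {p:.1f} mW ({1 - p / always_on:.0%} below always-on)")
```

With these made-up numbers, duty cycling alone gives 41 mW (about 49% below an 80 mW always-on recognizer) and the cascade gives 37 mW (about 54% below), the same order of magnitude as the 40-60% range quoted above; real savings depend on how often gestures actually occur.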

Human-Computer Interaction Applications and Standards

The integration of real-time gesture recognition using event-based cameras and SNNs has significantly expanded the landscape of human-computer interaction (HCI). These technologies enable more intuitive and responsive interfaces across various application domains. In healthcare, gesture recognition systems assist surgeons in navigating medical imaging during procedures without breaking sterility, while also supporting rehabilitation programs by accurately tracking patient movements and providing real-time feedback. The contactless nature of these interfaces has become particularly valuable in public spaces and clinical environments where hygiene concerns are paramount.

In smart home environments, gesture-based control systems allow users to manage lighting, entertainment systems, and appliances through natural movements, eliminating the need for physical controllers. This technology has proven especially beneficial for elderly users and individuals with mobility impairments, enhancing accessibility and independence. The automotive industry has also embraced gesture recognition for in-vehicle controls, allowing drivers to adjust settings without diverting attention from the road, thereby improving safety.

Several standards are relevant to interoperability and consistency across gesture recognition implementations. The IEEE P2861 working group addresses standards for neuromorphic computing systems, which underpin SNN implementations in gesture recognition. ISO/IEC 30113 provides a framework for gesture-based interfaces across devices and methods, while on the web, W3C specifications such as Pointer Events and Touch Events define the input events on which browser gesture handling is built.

Performance benchmarks for these systems typically measure recognition accuracy, latency, and power efficiency. Commonly cited targets include recognition rates of at least 95% under varying lighting conditions, latency below 50ms for real-time applications, and power consumption low enough for mobile and wearable devices. The event-based camera and SNN combination is particularly strong on the last two, since sparse, asynchronous processing keeps both response time and power draw low.
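
A test run can be checked against such targets with a few lines of code. This sketch assumes that latency is judged by its 95th percentile (nearest-rank method) rather than its mean, and that predictions and labels are comparable class identifiers; the function names are hypothetical, and the 95% / 50ms defaults simply mirror the figures in the text.

```python
import math


def nearest_rank_percentile(values, pct):
    """Nearest-rank percentile (pct in (0, 100]) of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_targets(predictions, labels, latencies_ms,
                  min_accuracy=0.95, max_latency_ms=50.0, pct=95):
    """Check one evaluation run against accuracy and tail-latency targets."""
    if not labels or not (len(predictions) == len(labels) == len(latencies_ms)):
        raise ValueError("need equal-length, non-empty inputs")
    accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
    tail_latency = nearest_rank_percentile(latencies_ms, pct)
    return {
        "accuracy": accuracy,
        f"p{pct}_latency_ms": tail_latency,
        "pass": accuracy >= min_accuracy and tail_latency <= max_latency_ms,
    }


if __name__ == "__main__":
    labels = ["swipe"] * 100
    preds = ["swipe"] * 96 + ["tap"] * 4          # 96% correct
    latencies = [12.0] * 90 + [60.0] * 10         # slow tail of 10 responses
    print(meets_targets(preds, labels, latencies))
```

Reporting a tail percentile rather than the average is a design choice: a system with a low mean latency but occasional slow responses can still feel unresponsive in interactive use, and in the example above the slow tail alone causes the run to fail.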

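Accessibility standards also cover gesture-based input. WCAG 2.1 introduced success criteria such as 2.5.1 Pointer Gestures and 2.5.4 Motion Actuation, which WCAG 2.2 retains and supplements with 2.5.7 Dragging Movements. Together they require simpler alternatives to complex gestures and, for functions triggered by device or user motion, equivalent user interface controls plus a way to disable motion response and prevent accidental activation. Offering adjustable sensitivity settings further accommodates users with different motor control capabilities.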
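
One way to honor these requirements in application code is to route every gesture through a named command that is also bound to an ordinary UI control, with a single switch that turns gesture input off. The `CommandRegistry` class below is a hypothetical sketch of that pattern, not an API from any accessibility toolkit.

```python
from typing import Callable, Dict, Optional


class CommandRegistry:
    """Hypothetical registry: each gesture maps to a named command that is also
    reachable from a conventional UI control, and gesture input can be disabled
    (in the spirit of WCAG 2.5.4 Motion Actuation)."""

    def __init__(self) -> None:
        self._commands: Dict[str, Callable[[], str]] = {}
        self._gesture_map: Dict[str, str] = {}
        self.gestures_enabled = True

    def register(self, command: str, action: Callable[[], str],
                 gesture: Optional[str] = None) -> None:
        """Register a command; optionally bind a gesture label to it."""
        self._commands[command] = action
        if gesture is not None:
            self._gesture_map[gesture] = command

    def on_ui_control(self, command: str) -> str:
        """Button/menu path: always available, independent of gesture input."""
        return self._commands[command]()

    def on_gesture(self, gesture: str) -> Optional[str]:
        """Gesture path: ignored when disabled or when the gesture is unbound."""
        if not self.gestures_enabled or gesture not in self._gesture_map:
            return None
        return self._commands[self._gesture_map[gesture]]()


if __name__ == "__main__":
    reg = CommandRegistry()
    reg.register("next_slide", lambda: "advanced to next slide", gesture="swipe_left")
    print(reg.on_gesture("swipe_left"))
    reg.gestures_enabled = False       # user turns off motion-based input
    print(reg.on_gesture("swipe_left"))
    print(reg.on_ui_control("next_slide"))
```

Because the UI path never depends on the gesture path, disabling gestures (or a recognizer failing to detect a particular user's movement) leaves every function reachable.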

As the technology matures, we're witnessing convergence toward standardized gesture vocabularies across platforms, reducing the learning curve for users while maintaining flexibility for application-specific gestures. This standardization, coupled with the neuromorphic processing advantages of SNNs and the temporal precision of event-based cameras, is establishing a robust foundation for the next generation of human-computer interaction paradigms.
