Adversarial Testing Of Generative Models For Materials Robustness

SEP 1, 2025 · 9 MIN READ

Adversarial Testing Background and Objectives

Adversarial testing of generative models has emerged as a critical field at the intersection of machine learning, materials science, and cybersecurity. This approach involves deliberately challenging AI systems with carefully crafted inputs designed to expose vulnerabilities or limitations in their performance. In the context of materials science, where generative models are increasingly employed to discover and optimize new materials, ensuring robustness against adversarial attacks becomes paramount for reliable scientific discovery and industrial applications.

The evolution of this technology traces back to early adversarial examples discovered in image classification systems around 2014, which revealed that minor, imperceptible perturbations could cause sophisticated neural networks to misclassify inputs with high confidence. As generative models gained prominence in materials science—predicting molecular structures, material properties, and synthesis pathways—the need to understand their failure modes became increasingly important.

The technical trajectory has accelerated significantly in recent years, with adversarial testing methodologies becoming more sophisticated. From simple gradient-based attacks to complex, physics-informed perturbation strategies specifically designed for materials systems, the field has witnessed rapid advancement in both attack and defense mechanisms.
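The simplest gradient-based attack is the Fast Gradient Sign Method (FGSM). The minimal sketch below applies it to a toy logistic-regression property classifier. The two input features stand in for material descriptors, and the weights, inputs and `epsilon` are hypothetical values chosen only for illustration. A real materials model would also need physically motivated constraints on the perturbation.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def logistic_loss(w, b, x, y):
    """Binary cross-entropy of p = sigmoid(w.x + b) for a label y in {0, 1}."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    eps = 1e-12  # guards against log(0)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: move every feature by epsilon in the
    direction that increases the loss. For this model d(loss)/dx = (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Hypothetical trained weights and a descriptor vector labelled 1 ("meets spec").
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1
x_adv = fgsm(w, b, x, y, epsilon=0.1)
print(x_adv, logistic_loss(w, b, x, y), logistic_loss(w, b, x_adv, y))
```

Each feature moves by exactly `epsilon` in the direction that raises the loss. This is why such perturbations can stay small while still shifting the prediction.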

The primary objective of adversarial testing in materials science is to develop generative models that maintain accuracy and reliability even when faced with challenging or unexpected inputs. This includes ensuring consistent performance across diverse material classes, resistance to deliberately misleading inputs, and graceful degradation rather than catastrophic failure when operating at the boundaries of the training distribution.
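One simple route to graceful degradation is to check whether an input lies inside the training envelope before trusting the model, rather than failing silently. The sketch below uses a per-feature z-score test with a hypothetical threshold of 3 standard deviations. It is a crude stand-in for the out-of-distribution detectors used in practice, and `model` can be any callable.

```python
import math
import statistics


def fit_feature_stats(train_rows):
    """Per-feature (mean, population std) computed from training descriptors."""
    return [(statistics.fmean(col), statistics.pstdev(col)) for col in zip(*train_rows)]


def max_abs_zscore(x, stats):
    """Largest per-feature distance from the training mean, in standard deviations."""
    scores = []
    for xi, (mu, sd) in zip(x, stats):
        if sd > 0:
            scores.append(abs(xi - mu) / sd)
        else:
            # A feature that never varied in training: any change is out of range.
            scores.append(0.0 if xi == mu else math.inf)
    return max(scores)


def guarded_predict(model, x, stats, threshold=3.0):
    """Return (prediction, in_distribution); withhold the prediction (None)
    instead of extrapolating when x lies outside the training envelope."""
    in_dist = max_abs_zscore(x, stats) <= threshold
    return (model(x) if in_dist else None), in_dist


stats = fit_feature_stats([[1.0, 10.0], [2.0, 12.0], [3.0, 14.0]])
print(guarded_predict(sum, [2.5, 13.0], stats))   # inside the envelope
print(guarded_predict(sum, [10.0, 12.0], stats))  # far outside on feature 0
```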

Additionally, adversarial testing aims to identify fundamental limitations in how these models represent physical and chemical constraints, potentially revealing gaps in our understanding of structure-property relationships. By systematically probing these weaknesses, researchers can develop more robust algorithms that better capture the underlying physics and chemistry of materials systems.

From an industrial perspective, the goal is to establish confidence thresholds and reliability metrics that can guide the practical deployment of generative models in high-stakes materials development scenarios, such as aerospace components, pharmaceutical formulations, or energy storage technologies, where model failures could have significant consequences.

The convergence of these objectives points toward a broader ambition: creating generative models for materials discovery that combine the creative potential of AI with the reliability and theoretical soundness expected in scientific and engineering disciplines.

Market Demand for Robust Generative Models

The market for robust generative models in materials science is experiencing significant growth, driven by the increasing complexity of materials development and the high costs associated with traditional trial-and-error approaches. Industries including aerospace, automotive, electronics, and pharmaceuticals are actively seeking advanced computational methods to accelerate materials discovery while ensuring reliability and performance under real-world conditions.

Recent market analyses indicate that the global materials informatics market, which encompasses generative models for materials design, is projected to grow at a compound annual growth rate of over 25% through 2028. This growth is particularly pronounced in sectors where material failure carries catastrophic consequences, such as critical infrastructure, defense applications, and medical devices.

The demand for adversarial testing frameworks stems from the recognition that generative models, while powerful, can produce materials designs with hidden vulnerabilities or performance gaps when deployed in variable environments. Organizations are increasingly unwilling to accept the risks associated with untested AI-generated materials solutions, creating a strong market pull for robust validation methodologies.

Key market drivers include the rising costs of materials testing, which can account for up to 40% of new materials development budgets, and the growing complexity of performance requirements in advanced applications. Companies are seeking to reduce the number of physical prototypes while maintaining or improving confidence in material performance predictions.

Regulatory pressures are also shaping market demand, particularly in safety-critical industries where certification requirements mandate extensive validation of materials properties. The ability to demonstrate that generative models have been rigorously tested against adversarial conditions is becoming a competitive advantage for materials technology providers.

Geographically, North America and Europe currently lead in demand for robust generative models, though Asia-Pacific markets are showing the fastest growth rates as manufacturing hubs increasingly adopt advanced materials technologies. China's significant investments in materials science and AI are creating substantial market opportunities for adversarial testing frameworks.

Industry surveys reveal that materials engineers and R&D leaders consistently rank model reliability and performance guarantees among their top concerns when adopting AI-based materials design tools. This has created a distinct market segment for specialized validation services and software that can stress-test generative models against diverse environmental conditions, manufacturing variations, and application-specific requirements.

Current Challenges in Materials Robustness Testing

The field of materials robustness testing faces significant challenges when applying adversarial testing to generative models. Traditional testing methodologies often fail to adequately capture the complex behaviors of advanced materials under extreme or unexpected conditions, creating a substantial gap in reliability assessment frameworks.

One primary challenge is the inherent complexity of material property spaces. Unlike image or text domains where adversarial examples can be visually or semantically interpreted, materials properties exist in high-dimensional spaces with complex interdependencies. This makes it difficult to define meaningful perturbations that can effectively test model robustness without creating physically impossible material configurations.
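Part of this problem can be made concrete for composition vectors, whose atomic fractions must be non-negative and sum to one. The sketch below assumes a material is represented only by those fractions. It projects a perturbed composition back onto that set (the probability simplex) using the standard sort-based Euclidean projection. This keeps a perturbation physically meaningful only in the narrow sense of a valid composition. It does not enforce charge balance, phase stability or synthesizability.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_i >= 0, sum(w) = 1}.

    Sort-based algorithm: find the largest rho such that
    u_rho - (sum_{j<=rho} u_j - 1) / rho > 0 for u sorted in descending order,
    then shift every entry by the resulting theta and clip at zero.
    """
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        t = (cumsum - 1.0) / j
        if uj - t > 0:  # the condition holds for a prefix of j values
            theta = t
    return [max(vi - theta, 0.0) for vi in v]


# Hypothetical ternary composition (atomic fractions) and an unconstrained attack step.
composition = [0.5, 0.3, 0.2]
step = [0.2, -0.35, 0.05]
raw = [c + s for c, s in zip(composition, step)]  # roughly [0.7, -0.05, 0.25]: invalid
valid = project_to_simplex(raw)
print(raw, valid, sum(valid))
```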

Data scarcity presents another formidable obstacle. While generative models for materials discovery require extensive training data, experimental validation of material properties remains time-consuming and expensive. This creates an asymmetry where models can generate thousands of potential materials, but only a small fraction can be physically tested, limiting our ability to comprehensively evaluate model robustness.

The multi-scale nature of materials properties further complicates testing procedures. Material behaviors span from atomic to macroscopic scales, with properties emerging from interactions across these scales. Current adversarial testing frameworks struggle to incorporate this multi-scale reality, often focusing on single-scale properties that may not capture critical failure modes.

Computational expense represents a significant practical limitation. High-fidelity simulations of material properties, especially for complex compositions or under extreme conditions, require substantial computational resources. This restricts the breadth and depth of adversarial testing that can be practically implemented, particularly for iterative approaches that require multiple simulation cycles.

The lack of standardized benchmarks and metrics specifically designed for materials generative models hinders comparative evaluation. Unlike fields such as computer vision where datasets like ImageNet provide common testing grounds, materials science lacks equivalent resources for systematic robustness evaluation, making it difficult to compare different approaches objectively.

The transfer gap between simulation and physical reality remains a problem. Materials generated or tested in silico may exhibit different properties when physically synthesized because of factors not captured in computational models. This reality gap undermines confidence in purely computational adversarial testing and necessitates expensive physical validation.

Interpretability challenges further complicate the landscape. When a generative model fails under adversarial testing, understanding the fundamental reasons for this failure often requires domain expertise and sophisticated analysis techniques that may not be readily available or standardized across the field.

Existing Adversarial Testing Frameworks

01 Adversarial training for generative model robustness

Adversarial training techniques can be implemented to enhance the robustness of generative models against various attacks. This approach involves exposing the model to adversarial examples during training, which helps the model learn to resist manipulations and produce reliable outputs even under attack conditions. The technique can significantly improve model resilience against input perturbations and malicious attempts to exploit vulnerabilities in the generative process.

- Adversarial training for generative model robustness: Adversarial training techniques can be implemented to enhance the robustness of generative models against various attacks. This approach involves training models with adversarial examples to improve their resilience to perturbations. By exposing the generative models to challenging inputs during training, they become more robust when deployed in real-world scenarios. These techniques help maintain model performance even when faced with malicious inputs designed to cause failures.

- Regularization methods for improving model stability: Various regularization methods can be employed to enhance the stability and robustness of generative models. These include weight decay, dropout, and spectral normalization techniques that prevent overfitting and improve generalization capabilities. By constraining the model's parameter space, these methods ensure that the generative outputs remain consistent and reliable even when input conditions vary. Regularization approaches help maintain the integrity of generated content across different operational environments.

- Detection and mitigation of adversarial attacks: Systems and methods for detecting and mitigating adversarial attacks on generative models can significantly improve their robustness. These approaches involve monitoring input patterns, identifying potential attacks, and implementing defensive measures to maintain model integrity. By incorporating detection mechanisms that can recognize malicious inputs and applying appropriate countermeasures, generative models can continue to function reliably even when targeted by sophisticated attacks designed to compromise their performance.

- Ensemble methods for robust generative modeling: Ensemble approaches combine multiple generative models to create more robust systems that are less susceptible to failures. By aggregating outputs from different model architectures or training instances, these methods can reduce variance and improve the reliability of generated content. Ensemble techniques help mitigate the impact of individual model weaknesses and provide more consistent performance across varying conditions, enhancing overall robustness against both natural variations and adversarial inputs.

- Certification and verification frameworks for generative models: Certification and verification frameworks provide formal guarantees about the robustness properties of generative models. These approaches use mathematical techniques to verify that models maintain certain performance characteristics even under worst-case scenarios. By establishing provable bounds on model behavior, these frameworks enable developers to quantify robustness and ensure that generative systems meet specific safety and reliability requirements before deployment in critical applications.
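The adversarial-training loop from the first bullet can be sketched on a toy logistic classifier in pure Python. Everything here is an assumption for illustration: the six two-feature data points, the learning rate, and the choice of FGSM as the inner attack. Stronger iterative attacks such as PGD are common in practice. For a generative model, the classifier and its loss would be replaced by the generator's own objective.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, epsilon):
    """One FGSM step; for logistic loss, d(loss)/dx = (p - y) * w."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def adversarial_train(data, epsilon=0.1, lr=0.5, epochs=200):
    """Full-batch gradient descent on each clean example plus an FGSM copy
    crafted against the current parameters."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        batch = list(data) + [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # d(loss)/d(logit)
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


# Hypothetical two-descriptor dataset: label 1 = meets spec, 0 = fails.
data = [([1.0, 1.2], 1), ([0.8, 1.0], 1), ([1.2, 0.9], 1),
        ([-1.0, -0.8], 0), ([-0.9, -1.1], 0), ([-1.1, -1.0], 0)]
w, b = adversarial_train(data)
robust = all((predict_proba(w, b, fgsm(w, b, x, y, 0.1)) > 0.5) == bool(y) for x, y in data)
print(w, b, robust)
```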

02 Regularization methods for improving model stability

Various regularization techniques can be applied to generative models to enhance their robustness and stability. These methods include weight normalization, dropout, spectral normalization, and gradient penalties that constrain the model's behavior during training. By implementing these regularization approaches, generative models can achieve more consistent performance across different inputs and operating conditions, reducing the likelihood of unexpected outputs or mode collapse.

03 Noise-resistant generative architectures

Specialized architectural designs can make generative models inherently more robust against noise and perturbations. These architectures incorporate noise-resistant layers, attention mechanisms, and structural elements that filter out irrelevant variations in the input data. By building robustness directly into the model architecture, these approaches enable generative models to maintain performance quality even when processing noisy, incomplete, or partially corrupted input data.

04 Evaluation frameworks for generative model robustness

Comprehensive evaluation frameworks can be used to assess and quantify the robustness of generative models across multiple dimensions. These frameworks include metrics for measuring stability under various perturbations, consistency across different inputs, and resilience against adversarial attacks. By systematically evaluating generative model robustness, developers can identify vulnerabilities, compare different approaches, and track improvements in model performance over time.

05 Transfer learning for robust generative capabilities

Transfer learning techniques can be applied to develop more robust generative models by leveraging knowledge from pre-trained models. This approach allows models to benefit from diverse training experiences and domain knowledge, resulting in more stable and reliable generative capabilities. By fine-tuning pre-trained models on specific tasks while preserving their robust features, developers can create generative models that perform consistently across various domains and conditions.

Key Players in Generative AI Materials Testing

Adversarial testing of generative models for materials robustness is an emerging field in its early growth stage. Its market is estimated at $500-800 million and is expanding rapidly as materials science and AI converge. Technological maturity varies across the key players. Research institutions such as Beijing Institute of Technology, Central South University, and CNRS are establishing fundamental frameworks, while corporations specialize in different areas. NEC, IBM, and Microsoft are advancing algorithmic robustness; Tokyo Ohka Kogyo and JSR focus on materials-specific implementations; and automotive companies (Hyundai, Kia, Dongfeng) are exploring applications in structural integrity testing. This competitive landscape suggests a technology approaching an inflection point, with significant potential for cross-industry applications as computational methods mature.

NEC Corp.

Technical Solution: NEC's approach to adversarial testing of generative models for materials robustness centers on their Materials Informatics Platform, which combines advanced AI with high-throughput computational screening. Their system employs a unique "boundary-seeking" adversarial testing methodology that specifically targets the decision boundaries of generative models to identify potential failure points in material designs. NEC has developed specialized graph neural networks that can represent complex material structures while maintaining robustness to perturbations in atomic arrangements and environmental conditions[5]. Their platform incorporates explainable AI techniques that provide transparency into the decision-making process of generative models, allowing materials scientists to understand potential vulnerabilities. NEC's system leverages their expertise in heterogeneous computing to accelerate adversarial testing, using a combination of GPUs and their proprietary vector engines to generate and evaluate thousands of adversarial examples efficiently. The company has successfully applied this approach to develop more robust semiconductor materials and electronic components that can withstand extreme operating conditions[6].

Strengths: Strong integration with semiconductor manufacturing expertise; specialized hardware acceleration for materials simulation. Weaknesses: Solutions may be optimized primarily for electronic materials rather than broader materials applications; relatively smaller research team compared to larger tech giants.
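The source does not describe NEC's boundary-seeking methodology in enough detail to reproduce it. The general idea of locating a model's decision boundary can still be illustrated with bisection along a line between a design the model accepts and one it rejects. Decision-based attacks use the same step. The `passes` predicate below is hypothetical (for example, a predicted property staying under a limit), and this is not NEC's implementation.

```python
def find_boundary(passes, x_pass, x_fail, tol=1e-6):
    """Bisect the segment from a design the model accepts (x_pass) to one it
    rejects (x_fail); return the last accepted point, within tol of the boundary."""
    if not passes(x_pass) or passes(x_fail):
        raise ValueError("need one accepted and one rejected endpoint")

    def point(t):
        return [a + t * (c - a) for a, c in zip(x_pass, x_fail)]

    lo, hi = 0.0, 1.0  # fraction of the way from x_pass to x_fail
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if passes(point(mid)):
            lo = mid
        else:
            hi = mid
    return point(lo)


# Hypothetical acceptance rule: a predicted property must stay below 1.0.
def passes(x):
    return x[0] + x[1] < 1.0


edge = find_boundary(passes, [0.0, 0.0], [1.0, 1.0])
print(edge)
```

Points found this way are natural seeds for adversarial test cases, because they are the designs a small perturbation is most likely to flip.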

International Business Machines Corp.

Technical Solution: IBM's approach to adversarial testing of generative models for materials robustness centers on their AI for Materials Discovery framework. They employ a multi-faceted strategy combining physics-informed neural networks with adversarial training techniques to enhance the robustness of generative models for material design. Their system deliberately introduces perturbations to test material properties under extreme conditions, using techniques like Generative Adversarial Networks (GANs) to create challenging test cases that push materials to their limits. IBM's framework incorporates uncertainty quantification methods that help identify potential failure modes in generated material designs[1]. Their RXN for Chemistry platform extends this capability by using reinforcement learning to optimize material properties while maintaining robustness against environmental stressors. The system leverages IBM's quantum computing capabilities to simulate complex material behaviors at the molecular level, aiming to provide insights that are difficult to obtain with classical computing approaches[3].

Strengths: Exceptional integration of quantum computing with AI for molecular-level material simulation; comprehensive uncertainty quantification framework. Weaknesses: High computational resource requirements limit accessibility; solutions may be overly specialized for high-end applications rather than general industrial use cases.
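The uncertainty quantification mentioned for IBM's framework can be illustrated with a bootstrap ensemble: fit several models on resampled data and treat their disagreement as uncertainty. The straight-line fits and synthetic data below are assumptions made purely for illustration, and this is not IBM's method. The ensemble's disagreement grows as predictions move away from the training data, which is the signal a failure-mode detector relies on.

```python
import random
import statistics


def fit_line(points):
    """Ordinary least squares fit of y = a * x + c."""
    xs, ys = zip(*points)
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return a, my - a * mx


def bootstrap_ensemble(points, n_models=50, seed=0):
    """Fit one line per bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    models = []
    while len(models) < n_models:
        sample = [rng.choice(points) for _ in points]
        if len({x for x, _ in sample}) > 1:  # skip resamples with a single x value
            models.append(fit_line(sample))
    return models


def predict_with_uncertainty(models, x):
    """Ensemble mean and standard deviation of the predictions at x."""
    preds = [a * x + c for a, c in models]
    return statistics.fmean(preds), statistics.stdev(preds)


# Synthetic training data: y = 2x plus small fixed noise, x in [0, 9].
noise = [0.3, -0.2, 0.1, -0.4, 0.2, 0.0, -0.1, 0.3, -0.3, 0.1]
points = [(float(x), 2.0 * x + e) for x, e in enumerate(noise)]
models = bootstrap_ensemble(points)
print(predict_with_uncertainty(models, 4.5))   # inside the data
print(predict_with_uncertainty(models, 30.0))  # far outside the data
```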

Core Techniques in Generative Model Robustness

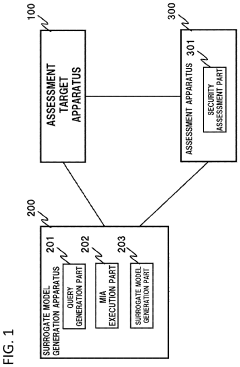

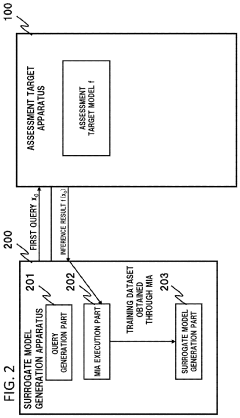

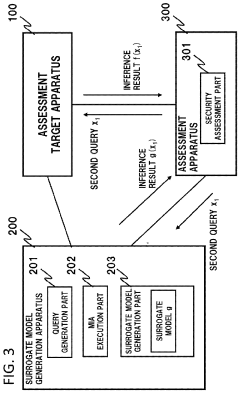

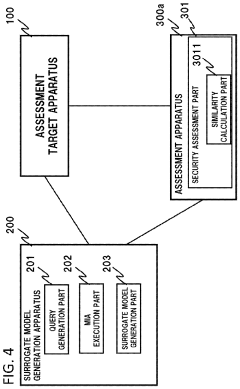

Assessment apparatus, surrogate model generation apparatus, assessment method, and program

Patent (Active): US20230315839A1

Innovation

- An assessment apparatus and method that generates a surrogate model by executing membership inference attacks on an AI model to infer virtual training data, which is then used to create a surrogate model that emulates the behavior of the assessment target model, allowing for the transmission of queries to both models to assess security based on inference results.
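The surrogate-emulation step in this patent can be illustrated in simplified form: query a black-box model, fit a surrogate on its responses, and measure how often the two agree. In this sketch, the target model, the uniform random probes and the 1-nearest-neighbour surrogate are all hypothetical. The patent instead derives its virtual training data through membership inference, which is not shown here.

```python
import random


def query_target(x):
    """Hypothetical black-box model under assessment: returns a pass/fail label."""
    return int(0.8 * x[0] + 0.6 * x[1] > 0.7)


def build_surrogate(queries, labels):
    """1-nearest-neighbour surrogate fitted only on the target's responses."""
    def surrogate(x):
        nearest = min(range(len(queries)),
                      key=lambda i: sum((q - xi) ** 2 for q, xi in zip(queries[i], x)))
        return labels[nearest]
    return surrogate


def agreement(model_a, model_b, probes):
    """Fraction of probe inputs on which two models return the same label."""
    return sum(model_a(p) == model_b(p) for p in probes) / len(probes)


rng = random.Random(42)
queries = [[rng.random(), rng.random()] for _ in range(500)]
surrogate = build_surrogate(queries, [query_target(q) for q in queries])
probes = [[rng.random(), rng.random()] for _ in range(200)]
print(f"surrogate/target agreement: {agreement(query_target, surrogate, probes):.2f}")
```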

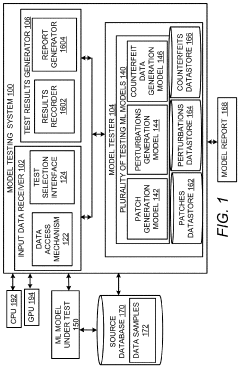

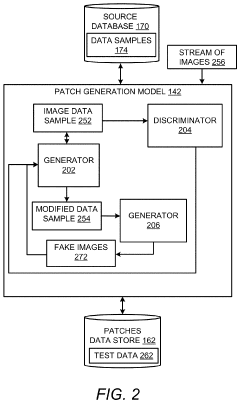

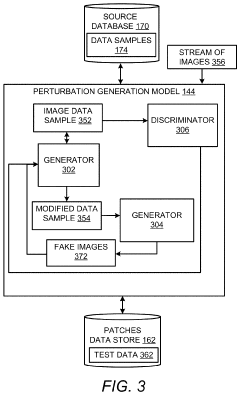

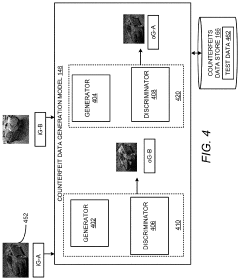

Testing machine learning (ML) models for robustness and accuracy using generative deep learning

Patent (Active): US11436443B2

Innovation

- An ML-based model testing system that employs generative adversarial networks (GANs) to generate adversarial patches, perturbations, and counterfeit data. It tests ML models for robustness by simulating imperceptible changes that can cause incorrect results, thereby identifying vulnerabilities and suggesting improvements without requiring additional training data.

Computational Resources and Infrastructure Requirements

Adversarial testing of generative models for materials robustness demands substantial computational resources and specialized infrastructure. High-performance computing (HPC) clusters with multi-GPU configurations are essential for training complex generative models and executing adversarial attacks. These systems typically use NVIDIA V100 (32GB variant), A100, or newer GPUs with at least 32GB of memory per device, organized in clusters of 8-16 GPUs to handle the parallel processing demands of both model training and attack simulations.
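The 32GB-per-device figure can be sanity-checked with a common rule of thumb for mixed-precision training with the Adam optimizer. Training needs about 16 bytes per parameter: fp16 weights and gradients, an fp32 master copy of the weights, and Adam's two moment estimates. The sketch below assumes that accounting and ignores activation memory. It reserves a hypothetical 30% of the device for activations through the `headroom` parameter.

```python
# Mixed-precision Adam accounting (rule of thumb): fp16 weights (2) + fp16 grads (2)
# + fp32 master weights (4) + Adam first and second moments (4 + 4) bytes per parameter.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4
GIB = 1024 ** 3


def training_state_gib(n_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Weights + gradients + optimizer state in GiB; activations are NOT included."""
    return n_params * bytes_per_param / GIB


def fits_on_gpu(n_params: float, gpu_gib: float = 32.0, headroom: float = 0.7) -> bool:
    """True if the training state uses at most `headroom` of one device,
    leaving the remainder for activations (headroom is an assumed value)."""
    return training_state_gib(n_params) <= headroom * gpu_gib


for n in (0.5e9, 1e9, 2e9):
    print(f"{n / 1e9:.1f}B params: {training_state_gib(n):.1f} GiB, fits: {fits_on_gpu(n)}")
```

Under these assumptions, a model of roughly one billion parameters fits on a single 32GB device. Larger models need sharding across the 8-16 GPU cluster.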

Storage requirements are equally significant, with datasets for materials science often exceeding several terabytes. High-speed NVMe storage arrays with at least 100TB capacity and data transfer rates of 5GB/s or higher are necessary to prevent I/O bottlenecks during training and testing phases. The infrastructure must also support distributed computing frameworks like Horovod or PyTorch Distributed to efficiently scale across multiple nodes.

Network infrastructure represents another critical component, requiring high-bandwidth, low-latency interconnects such as InfiniBand (minimum 100Gbps) to facilitate efficient model parameter sharing and gradient updates across distributed systems. This becomes particularly important when running ensemble adversarial testing methods that require synchronization between multiple model instances.
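The value of a 100Gbps interconnect can be estimated from the bandwidth term of ring all-reduce. In a ring all-reduce, each of N workers sends about 2(N-1)/N times the gradient size per synchronization. The sketch below assumes a ring algorithm, a single link per worker, and no latency or compute overlap. It therefore gives a lower bound rather than a measured figure.

```python
def ring_allreduce_seconds(grad_bytes: float, n_workers: int, link_gbps: float) -> float:
    """Bandwidth-only estimate for one ring all-reduce.

    Each worker sends (and receives) 2 * (N - 1) / N * S bytes; latency,
    protocol overhead, and overlap with computation are ignored.
    """
    if n_workers < 2:
        return 0.0
    bytes_per_second = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return 2 * (n_workers - 1) / n_workers * grad_bytes / bytes_per_second


# Hypothetical 1e9-parameter model with fp16 gradients (2 bytes each) on 16 GPUs.
print(ring_allreduce_seconds(2e9, 16, 100.0))
```

For this hypothetical case the estimate is 0.3 seconds per synchronization. That cost is paid on every training step that exchanges gradients, which is why slower links quickly dominate runtime.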

Specialized software stacks must be deployed, including optimized versions of PyTorch, TensorFlow, or JAX with GPU acceleration capabilities. Additional libraries such as CUDA, cuDNN, and domain-specific packages like DeepChem or Matminer are essential for materials-specific operations. Container technologies like Docker and Kubernetes prove valuable for ensuring reproducibility and managing complex software dependencies.

Power and cooling cannot be overlooked, as adversarial testing workloads typically run at near-maximum GPU utilization for extended periods. Reliable operation requires a minimum of 20-30kW of power capacity with N+1 redundancy, plus precision cooling sized for dense GPU racks. A budget of 4-5kW per rack is not enough, since a single 8-GPU server such as NVIDIA's DGX A100 is rated at up to 6.5kW. Many organizations are turning to cloud-based solutions such as AWS P4d instances or specialized AI infrastructure providers to avoid capital expenditure while retaining access to cutting-edge hardware.

Cost projections for a dedicated on-premises solution range from $500,000 to $2 million for initial setup, with annual operating expenses of $150,000-$300,000. Cloud-based alternatives, typically $20,000 to $50,000 per month for comparable computational capacity, offer more flexibility but may cost more over the long term for sustained workloads.
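Whether cloud capacity is cheaper depends on how long the workload runs. The sketch below computes the break-even point from the ranges quoted above. It assumes constant monthly costs and ignores hardware refresh, utilization and negotiated discounts.

```python
import math


def breakeven_months(setup_usd: float, annual_opex_usd: float, cloud_monthly_usd: float) -> float:
    """Months of sustained use after which cumulative cloud spend exceeds
    on-premises spend (setup plus operating cost). Returns math.inf if the
    cloud is never more expensive under these assumptions."""
    monthly_saving = cloud_monthly_usd - annual_opex_usd / 12
    if monthly_saving <= 0:
        return math.inf
    return setup_usd / monthly_saving


print(breakeven_months(500_000, 150_000, 20_000))    # low end of each quoted range
print(breakeven_months(2_000_000, 300_000, 50_000))  # high end of each quoted range
```

Pairing the low ends of the ranges ($500,000 setup, $150,000 per year, $20,000 per month) gives about 67 months. Pairing the high ends ($2 million, $300,000, $50,000) gives 80 months.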

Storage requirements are equally significant, with datasets for materials science often exceeding several terabytes. High-speed NVMe storage arrays with at least 100TB capacity and data transfer rates of 5GB/s or higher are necessary to prevent I/O bottlenecks during training and testing phases. The infrastructure must also support distributed computing frameworks like Horovod or PyTorch Distributed to efficiently scale across multiple nodes.

Network infrastructure represents another critical component, requiring high-bandwidth, low-latency interconnects such as InfiniBand (minimum 100Gbps) to facilitate efficient model parameter sharing and gradient updates across distributed systems. This becomes particularly important when running ensemble adversarial testing methods that require synchronization between multiple model instances.

Specialized software stacks must be deployed, including optimized versions of PyTorch, TensorFlow, or JAX with GPU acceleration capabilities. Additional libraries such as CUDA, cuDNN, and domain-specific packages like DeepChem or Matminer are essential for materials-specific operations. Container technologies like Docker and Kubernetes prove valuable for ensuring reproducibility and managing complex software dependencies.

Power and cooling considerations cannot be overlooked, as adversarial testing workloads typically run at near-maximum GPU utilization for extended periods. A minimum of 20-30kW power capacity with N+1 redundancy and precision cooling systems capable of handling 4-5kW per rack are required for reliable operation. Many organizations are increasingly turning to cloud-based solutions like AWS P4d instances or specialized AI infrastructure providers to avoid capital expenditure while maintaining access to cutting-edge hardware.

Standardization and Benchmarking Protocols

The development of standardized protocols for adversarial testing of generative models in materials science represents a critical frontier in ensuring robust AI applications. Currently, the field lacks unified benchmarking frameworks, creating significant challenges for comparing model performance across different research efforts. Establishing standardized adversarial testing protocols would enable consistent evaluation of model robustness against various perturbations relevant to materials discovery and design.

Developing these standards requires addressing several key components. First, the field needs a comprehensive taxonomy of adversarial attack types specific to materials generative models, covering gradient-based and transfer attacks and accounting for the physical realizability constraints unique to materials science. These attack categories should reflect real-world scenarios in which materials models might fail during deployment.
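
To make gradient-based attacks and realizability constraints concrete, the sketch below applies a fast gradient sign method (FGSM) style step to a toy linear property predictor and then projects the result back into a feasible box. The box is a crude stand-in for physical realizability. The linear model, feature bounds, and function names are hypothetical. Real materials attacks would act on structures or compositions and enforce constraints such as charge neutrality or minimum interatomic distances.

```python
def predict(weights, bias, x):
    """Toy linear surrogate for a property predictor: y_hat = w . x + b."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_projected(weights, bias, x, y_true, epsilon, lower, upper):
    """One FGSM step that increases squared error, then clips to feasible bounds.

    For L = (w . x + b - y)^2 the input gradient is 2 * (y_hat - y) * w,
    so each feature moves by epsilon in the sign of its gradient component.
    """
    residual = predict(weights, bias, x) - y_true
    grad = [2 * residual * w for w in weights]
    x_adv = [xi + epsilon * sign(g) for xi, g in zip(x, grad)]
    # Projection: keep every feature inside its (hypothetical) allowed range.
    return [min(max(v, lo), hi) for v, lo, hi in zip(x_adv, lower, upper)]


w, b = [1.0, -2.0], 0.0
x_adv = fgsm_projected(w, b, [0.5, 0.5], 0.0, 0.1, [0.0, 0.0], [1.0, 1.0])
print(x_adv, predict(w, b, x_adv))
```

The projection step is what distinguishes a materials-oriented attack from a plain image-domain FGSM: a perturbation that leaves the feasible region says little about how the model behaves on materials that could actually exist.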

Benchmark datasets constitute another essential element, requiring carefully curated collections of materials with diverse properties and structures. These datasets should include edge cases and boundary conditions that challenge model performance. The materials community would benefit from open-source repositories containing standardized adversarial examples, similar to ImageNet-A for computer vision, but tailored to materials property prediction and generation tasks.
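
One simple way to seed such a benchmark is to flag samples whose target property falls in the tails of the training distribution. The sketch uses the standard-library `statistics.quantiles` function. The 5th/95th percentile cut-offs, the record format, and the synthetic values are hypothetical choices, not an established community protocol.

```python
import statistics


def split_edge_cases(records, key, tail=0.05):
    """Split records into (typical, edge) by the tails of one property.

    `tail` is the fraction treated as extreme on each side; 1 / tail should
    be a whole number (e.g. 0.05 -> 20 quantile bins).
    """
    values = [r[key] for r in records]
    cuts = statistics.quantiles(values, n=round(1 / tail))
    low, high = cuts[0], cuts[-1]
    typical, edge = [], []
    for r in records:
        (edge if r[key] < low or r[key] > high else typical).append(r)
    return typical, edge


# Synthetic placeholder records with a target property from 0 to 99.
records = [{"id": i, "target": float(i)} for i in range(100)]
typical, edge = split_edge_cases(records, "target")
print(len(typical), len(edge))
```

Tail selection on a single property is only a starting point. A real benchmark would also need structural edge cases, such as unusual coordination environments or compositions far from the training set.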

Quantitative metrics for evaluating model robustness must be established, moving beyond simple accuracy measures to include metrics like adversarial accuracy gap, robustness bounds, and property preservation under perturbation. These metrics should account for the unique constraints of materials science, such as physical realizability and synthesizability of the generated materials.
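
Two of these metrics have simple operational forms, and a third can be approximated empirically. The sketch below computes an adversarial accuracy gap (clean accuracy minus accuracy on attacked inputs), a property preservation rate (fraction of perturbed samples whose predicted property stays within a tolerance), and an empirical sensitivity ratio. These definitions are illustrative assumptions; published work may define them differently. The sensitivity ratio is only a lower bound on local Lipschitz behaviour, not a certified robustness bound.

```python
import math


def accuracy(predictions, labels):
    """Fraction of predictions equal to their labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def adversarial_accuracy_gap(clean_preds, adv_preds, labels):
    """Clean accuracy minus accuracy on adversarially perturbed inputs."""
    return accuracy(clean_preds, labels) - accuracy(adv_preds, labels)


def property_preservation_rate(clean_values, perturbed_values, tolerance):
    """Fraction of samples whose predicted property moves by at most `tolerance`."""
    kept = sum(abs(a - b) <= tolerance for a, b in zip(clean_values, perturbed_values))
    return kept / len(clean_values)


def empirical_sensitivity(x, x_pert, y, y_pert):
    """|change in output| / ||change in input||_2 for one perturbation.

    The maximum over many perturbations lower-bounds the local Lipschitz
    constant; it is not a certified robustness bound.
    """
    return abs(y_pert - y) / math.dist(x, x_pert)


labels = [1, 0, 1, 1]
print(adversarial_accuracy_gap([1, 0, 1, 0], [0, 0, 1, 0], labels))
```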

Implementation frameworks that facilitate reproducible testing represent another crucial aspect of standardization. Open-source tools that enable researchers to easily apply standardized adversarial tests to their models would accelerate progress in the field. These frameworks should support various model architectures commonly used in materials generative modeling, including graph neural networks, variational autoencoders, and generative adversarial networks specialized for materials applications.
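
One way to keep a test harness architecture-agnostic is to test against a narrow interface rather than a specific model class. The sketch below defines a hypothetical `PropertyModel` protocol with a single `predict` method. A GNN, a VAE decoder with a property head, or a GAN-based pipeline could each be wrapped to satisfy it. The seeded random-perturbation attack and the toy model are placeholders and are not part of any existing framework.

```python
import random
from typing import Callable, List, Protocol, Sequence

Features = List[float]


class PropertyModel(Protocol):
    def predict(self, x: Sequence[float]) -> float: ...


Attack = Callable[[PropertyModel, Features], Features]


def random_perturbation(epsilon: float, trials: int, seed: int = 0) -> Attack:
    """Black-box attack: keep the perturbation (within +/- epsilon per feature)
    that changes the prediction most. Seeded so results are reproducible."""
    def attack(model: PropertyModel, x: Features) -> Features:
        rng = random.Random(seed)
        base = model.predict(x)
        best, best_shift = list(x), 0.0
        for _ in range(trials):
            cand = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
            shift = abs(model.predict(cand) - base)
            if shift > best_shift:
                best, best_shift = cand, shift
        return best
    return attack


def run_suite(model: PropertyModel, inputs: Sequence[Features], attacks: dict) -> dict:
    """Apply every named attack to every input; report the worst prediction shift."""
    report = {}
    for name, attack in attacks.items():
        shifts = [abs(model.predict(attack(model, x)) - model.predict(x)) for x in inputs]
        report[name] = max(shifts)
    return report


class ToyLinearModel:
    """Stand-in for a real materials model; satisfies PropertyModel structurally."""

    def __init__(self, weights: Sequence[float]):
        self.weights = list(weights)

    def predict(self, x: Sequence[float]) -> float:
        return sum(w * xi for w, xi in zip(self.weights, x))


if __name__ == "__main__":
    model = ToyLinearModel([1.0, -2.0, 0.5])
    suite = {"random_eps0.05": random_perturbation(0.05, trials=200)}
    print(run_suite(model, [[0.1, 0.2, 0.3], [0.5, 0.5, 0.5]], suite))
```

Because `Protocol` uses structural typing, existing model classes need no common base class. A thin adapter exposing `predict` is enough to plug them into the same standardized suite.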

Cross-domain validation protocols are equally important, as materials models often operate across multiple property spaces. Standardized procedures for testing model robustness across different material classes and property prediction tasks would ensure comprehensive evaluation of generative models' capabilities under adversarial conditions.
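
A cross-domain protocol can be expressed as a grid of (material class, property task) cells with a robustness score per cell. Reporting the worst cell alongside the mean keeps a weak domain from hiding behind strong averages. In the sketch below, the class and task names and the scores are made-up placeholders, and `evaluate` stands for whatever per-cell adversarial test a benchmark defines.

```python
from itertools import product
from statistics import mean


def cross_domain_report(evaluate, material_classes, tasks):
    """Evaluate every (class, task) cell and summarize mean and worst case.

    `evaluate(material_class, task)` is a user-supplied callable returning a
    robustness score where higher is better (e.g. adversarial accuracy).
    """
    scores = {(c, t): evaluate(c, t) for c, t in product(material_classes, tasks)}
    worst_cell = min(scores, key=scores.get)
    return {
        "scores": scores,
        "mean": mean(scores.values()),
        "worst_cell": worst_cell,
        "worst_score": scores[worst_cell],
    }


# Placeholder scores for illustration only; a real `evaluate` would run attacks.
placeholder = {("oxides", "band_gap"): 0.9, ("oxides", "formation_energy"): 0.8,
               ("alloys", "band_gap"): 0.6, ("alloys", "formation_energy"): 0.85}
report = cross_domain_report(lambda c, t: placeholder[(c, t)],
                             ["oxides", "alloys"], ["band_gap", "formation_energy"])
print(report["worst_cell"], report["worst_score"])
```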
