Model Auditing: Reproducibility Checks For Generative Materials Workflows

SEP 1, 2025 · 9 MIN READ

Generative Materials Modeling Background and Objectives

Generative materials modeling represents a transformative approach in materials science, leveraging computational methods to predict, design, and optimize materials with specific properties. This field has evolved significantly over the past three decades, transitioning from simple empirical models to sophisticated machine learning and AI-driven frameworks. The integration of quantum mechanics principles, molecular dynamics, and data science has enabled unprecedented capabilities in materials discovery and characterization.

The evolution of generative materials modeling can be traced through several key technological milestones. Early computational materials science relied heavily on density functional theory (DFT) calculations, which, while accurate, were computationally intensive and limited in scale. The emergence of machine learning techniques in the 2010s marked a significant turning point, enabling the development of surrogate models that could approximate quantum mechanical calculations at a fraction of the computational cost.

Recent advancements have seen the rise of generative adversarial networks (GANs), variational autoencoders (VAEs), and transformer-based architectures specifically tailored for materials science applications. These approaches have demonstrated remarkable success in generating novel material structures with targeted properties, accelerating the traditionally slow process of materials discovery and optimization.

The primary objective of model auditing in generative materials workflows is to establish rigorous frameworks for ensuring reproducibility, reliability, and trustworthiness of computational predictions. As these models become increasingly complex and opaque, the need for systematic validation approaches becomes paramount. This includes developing standardized benchmarks, uncertainty quantification methods, and interpretability tools that can provide insights into model behavior and limitations.

Another critical goal is to bridge the gap between computational predictions and experimental validation. Despite significant advances in computational methods, the ultimate test of any materials model remains its ability to accurately predict properties that can be verified experimentally. Establishing clear protocols for model validation against experimental data represents a key challenge in the field.

Looking forward, the trajectory of generative materials modeling points toward increasingly autonomous systems capable of closed-loop materials discovery and optimization. This vision encompasses not only the generation of candidate materials but also the ability to intelligently navigate the vast design space, prioritize promising candidates for experimental validation, and iteratively refine models based on feedback.

Market Analysis for Reproducible Materials Design

The materials design market is experiencing a significant transformation driven by the integration of generative AI and machine learning technologies. Current market size estimates place the advanced materials sector at approximately $100 billion globally, with computational materials design representing a rapidly growing segment. Industry analysts project a compound annual growth rate of 25-30% for AI-enabled materials discovery platforms over the next five years, highlighting the economic potential of reproducible generative workflows.

Key market segments benefiting from reproducible materials design include pharmaceuticals, semiconductors, energy storage, aerospace, and sustainable materials. The pharmaceutical industry alone has invested over $5 billion in AI-driven materials research in the past three years, recognizing the potential for accelerated drug discovery and reduced development costs.

Market demand for reproducibility tools is being driven by several factors. First, the increasing complexity of materials design workflows has created significant challenges in verifying and validating results across different research teams and environments. According to a recent survey by the Materials Research Society, 78% of materials scientists report difficulties reproducing computational results from published literature.

Second, regulatory pressures are intensifying, particularly in sectors like healthcare and aerospace, where material performance validation is critical. The FDA and similar agencies worldwide are implementing stricter guidelines for computational model validation, creating a $2.3 billion market opportunity for auditing and verification tools.

Third, the economic imperative for efficiency is compelling. Industry reports indicate that non-reproducible research costs the materials science sector approximately $4 billion annually in wasted resources and delayed commercialization timelines. Companies implementing robust reproducibility frameworks report 40% faster time-to-market for new materials.

The competitive landscape shows emerging specialized players focused on reproducibility platforms competing with established scientific software providers that are expanding their offerings. Venture capital investment in reproducibility-focused startups reached $850 million in 2022, a 65% increase over the previous year.

Customer segments show varying needs: large enterprises prioritize integration with existing workflows and regulatory compliance, while research institutions focus on transparency and collaborative features. Government laboratories emphasize security and standardization capabilities.

Market barriers include fragmentation of tools and methodologies, resistance to standardization, and concerns about intellectual property protection when implementing transparent workflows. Despite these challenges, the market trajectory remains strongly positive as organizations increasingly recognize reproducibility as both a scientific necessity and a competitive advantage.

Current Challenges in Model Auditing for Materials Science

The field of model auditing for materials science faces significant challenges that impede the establishment of reliable reproducibility checks for generative materials workflows. One primary challenge is the inherent complexity of materials science models, which often integrate multiple physical and chemical principles across different scales. These multiscale models frequently combine quantum mechanics, molecular dynamics, and continuum mechanics, creating intricate computational workflows that are difficult to audit comprehensively.

Data quality and availability present another substantial obstacle. Materials science relies on experimental data that is often sparse, noisy, or proprietary. The lack of standardized, open-access datasets makes it challenging to establish benchmarks for model validation. Additionally, many materials databases contain inconsistencies in measurement methodologies, units, and reporting standards, further complicating the auditing process.

Computational reproducibility remains elusive due to the sensitivity of materials simulations to initial conditions, numerical methods, and implementation details. Small variations in these parameters can lead to significantly different outcomes, making it difficult to determine whether discrepancies arise from model deficiencies or implementation differences. This challenge is exacerbated by the frequent use of proprietary software and closed-source algorithms in industrial settings.
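A minimal illustration of why "implementation details" matter: floating-point addition is not associative, so summing the same contributions in a different order (as a parallel reduction on different hardware might) can change the last bits of a result. The energy values and the `total_energy` helper are hypothetical; comparing with an explicit tolerance (`math.isclose`) or using a correctly rounded sum (`math.fsum`) are two common mitigations, not a complete reproducibility strategy.

```python
import math

def total_energy(contributions):
    """Naive left-to-right sum of per-site energy contributions (hypothetical helper)."""
    total = 0.0
    for e in contributions:
        total += e
    return total

# Hypothetical per-site energy contributions in eV; values chosen only to expose rounding.
contributions = [0.1, 0.2, 0.3]

forward = total_energy(contributions)             # (0.1 + 0.2) + 0.3
backward = total_energy(reversed(contributions))  # (0.3 + 0.2) + 0.1

print(forward == backward)  # False: exact equality is order-sensitive
print(math.isclose(forward, backward, rel_tol=1e-9, abs_tol=1e-12))  # True
print(math.fsum(contributions) == math.fsum(reversed(contributions)))  # True: correctly rounded
```

An audit that demands bit-identical outputs across environments will therefore flag differences like this one; whether that is desirable depends on the tolerance the workflow can justify.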

The interdisciplinary nature of materials science introduces additional complexity to model auditing. Effective auditing requires expertise across multiple domains including physics, chemistry, computer science, and specific application areas. This breadth of knowledge is rarely found in a single individual or even within small teams, necessitating collaborative approaches that are logistically challenging to coordinate.

Uncertainty quantification represents a critical yet underdeveloped aspect of model auditing in materials science. Many generative workflows lack robust methods for propagating uncertainties through complex computational pipelines, making it difficult to assess the reliability of predictions. Without proper uncertainty metrics, it becomes challenging to determine whether a model is performing within acceptable error bounds.
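One generic way to propagate input uncertainty through a multi-stage pipeline is Monte Carlo sampling: draw inputs from an assumed distribution, push every sample through all stages, and summarize the spread of the final output. The sketch below assumes a Gaussian uncertainty on a lattice parameter and two hypothetical stages (`predicted_band_gap`, `predicted_formation_energy`) with made-up coefficients; it illustrates the technique, not any particular materials model.

```python
import random
import statistics

def predicted_band_gap(lattice_a):
    """Hypothetical stage 1: lattice parameter (Å) -> band gap (eV). Coefficients are made up."""
    return 3.2 - 1.5 * (lattice_a - 5.43)

def predicted_formation_energy(band_gap):
    """Hypothetical stage 2: consumes stage 1's output. Coefficients are made up."""
    return -0.8 + 0.05 * band_gap ** 2

def propagate(mean_a, std_a, n_samples=10_000, seed=42):
    """Monte Carlo propagation: sample the input, run every sample through both stages."""
    rng = random.Random(seed)  # fixed seed so the uncertainty estimate is itself reproducible
    outputs = []
    for _ in range(n_samples):
        a = rng.gauss(mean_a, std_a)
        outputs.append(predicted_formation_energy(predicted_band_gap(a)))
    return statistics.fmean(outputs), statistics.stdev(outputs)

mean_e, std_e = propagate(mean_a=5.43, std_a=0.02)
print(f"formation energy: {mean_e:.4f} ± {std_e:.4f} eV/atom")
```

Sampling makes no linearity assumption about the stages, at the cost of many model evaluations; for expensive stages, analytic (first-order) propagation or surrogate models are common alternatives.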

The rapid evolution of AI and machine learning techniques in materials discovery introduces new challenges for auditing. These models often function as "black boxes," with limited interpretability and transparency. Traditional validation methods may be insufficient for assessing the reliability of AI-driven predictions, particularly when these models are applied to materials compositions or structures outside their training distribution.
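A very simple guard against silently extrapolating is to flag candidates whose descriptors fall far outside the training distribution. The sketch below uses a per-feature z-score threshold; the descriptors, training rows, and the `z_max = 3.0` cut-off are hypothetical. Per-feature checks miss unusual *combinations* of features, so distance measures in a learned or covariance-aware space are often preferred in practice.

```python
import statistics

def fit_feature_stats(training_rows):
    """Per-feature (mean, sample standard deviation) from the training set."""
    columns = list(zip(*training_rows))
    return [(statistics.fmean(c), statistics.stdev(c)) for c in columns]

def out_of_distribution(row, stats, z_max=3.0):
    """Return (feature index, z-score) for every feature more than z_max SDs from the training mean."""
    flagged = []
    for i, (x, (mu, sigma)) in enumerate(zip(row, stats)):
        if sigma > 0:
            z = abs(x - mu) / sigma
        else:
            z = 0.0 if x == mu else float("inf")  # constant feature: any change is out of range
        if z > z_max:
            flagged.append((i, round(z, 2)))
    return flagged

# Hypothetical descriptors: [mean atomic radius (Å), electronegativity difference]
training = [[1.40, 0.9], [1.45, 1.1], [1.38, 1.0], [1.50, 0.8], [1.42, 1.2]]
stats = fit_feature_stats(training)

print(out_of_distribution([1.43, 1.0], stats))  # inside the training range
print(out_of_distribution([2.10, 1.0], stats))  # radius far outside the training range
```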

Existing Auditing Methodologies for Generative Materials Models

01 Methodologies for model audit reproducibility

Various methodologies have been developed to ensure the reproducibility of model audits. These methodologies include standardized frameworks, systematic approaches, and documented procedures that allow auditors to consistently evaluate AI models. By implementing structured audit protocols, organizations can ensure that different auditors can reproduce the same results when examining the same model, which is crucial for establishing trust in AI systems.

- Standardized frameworks for model audit reproducibility: Standardized frameworks and methodologies are essential for ensuring the reproducibility of model audits. These frameworks provide structured approaches to document audit processes, validate results, and establish consistent evaluation criteria. By implementing standardized protocols, organizations can ensure that model audits are conducted systematically and their results can be reproduced by different auditors or at different times, enhancing the reliability and credibility of the audit findings.

- Automated tools for model audit documentation: Automated tools play a crucial role in documenting model audit processes and ensuring reproducibility. These tools can capture audit trails, record testing procedures, and generate comprehensive reports of audit activities. By automating documentation, organizations can reduce human error, maintain consistent records, and facilitate the reproduction of audit results. Such tools also enable version control of models and audit artifacts, making it easier to track changes and reproduce previous audit states.

- Data versioning and provenance tracking systems: Data versioning and provenance tracking systems are fundamental to model audit reproducibility. These systems maintain records of data sources, transformations, and the specific datasets used during model development and testing. By tracking data lineage and preserving dataset versions, organizations can ensure that auditors can access the exact same data used in previous audits, enabling consistent evaluation and reproducible results even as underlying data sources evolve over time.

- Collaborative platforms for audit transparency: Collaborative platforms enhance model audit reproducibility by providing transparent environments where multiple stakeholders can participate in and observe the audit process. These platforms facilitate knowledge sharing, peer review, and collective validation of audit methodologies and findings. By enabling collaboration among auditors, model developers, and other stakeholders, these systems create a more robust audit ecosystem where results can be independently verified and reproduced, increasing confidence in model performance and compliance.

- Containerization and environment management for computational reproducibility: Containerization and environment management technologies ensure computational reproducibility in model auditing. These approaches package models along with their dependencies, runtime environments, and configurations into portable containers that can be executed consistently across different computing infrastructures. By isolating the audit environment and preserving all necessary components, these technologies enable auditors to reproduce exact computational conditions, eliminating variability caused by different software versions or system configurations.
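The data-versioning idea above can be sketched with content hashing: hash every file in a dataset directory, then derive one version identifier from the sorted manifest, so any change to content produces a new version. This is a minimal, standard-library sketch; the directory layout, file name, and the 16-character truncated identifier are assumptions, and dedicated tools add storage, deduplication, and remote sharing on top of the same principle.

```python
import hashlib
import json
import tempfile
from pathlib import Path

def file_sha256(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def dataset_manifest(root):
    """Map each file under root (POSIX-style relative path) to its content hash."""
    root = Path(root)
    files = sorted(p for p in root.rglob("*") if p.is_file())
    return {p.relative_to(root).as_posix(): file_sha256(p) for p in files}

def dataset_version(manifest):
    """Derive a short version identifier from a canonical encoding of the manifest."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:16]

# Usage: version a toy dataset directory before and after an edit.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp, "structures.csv")  # hypothetical file name
    data_file.write_text("id,formula\n1,NaCl\n")
    v1 = dataset_version(dataset_manifest(tmp))
    data_file.write_text("id,formula\n1,NaCl\n2,KCl\n")
    v2 = dataset_version(dataset_manifest(tmp))

print(v1, v2, v1 != v2)
```

Storing the manifest alongside audit results lets a later auditor confirm they are evaluating byte-identical data before comparing any model outputs.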

02 Technical tools for audit verification and validation

Specialized technical tools have been created to support the verification and validation processes in model auditing. These tools enable automated testing, performance measurement, and comparison of results across multiple audit iterations. They can track changes in model behavior, document audit trails, and provide consistent metrics for evaluation, thereby enhancing the reproducibility of audit findings and ensuring that audit results can be independently verified.

03 Documentation standards for reproducible audits

Comprehensive documentation standards are essential for ensuring audit reproducibility. These standards specify how to record audit procedures, test cases, evaluation criteria, and results in a way that allows others to follow the same steps. Proper documentation includes capturing the model's version, input data, environmental configurations, and all parameters used during the audit, enabling future auditors to recreate the exact conditions under which the original audit was conducted.

04 Automated systems for continuous audit monitoring

Automated systems have been developed for continuous monitoring and auditing of models in production environments. These systems can regularly test models against predefined criteria, detect drift in performance or behavior, and trigger alerts when inconsistencies are found. By automating the audit process, these systems reduce human error and ensure that audit procedures are applied consistently over time, improving the reproducibility of results across multiple audit cycles.

05 Standardized metrics and benchmarks for model evaluation

Standardized metrics and benchmarks provide consistent criteria for evaluating model performance across different audit instances. These include quantitative measures for accuracy, fairness, robustness, and other important model characteristics. By using standardized evaluation frameworks, auditors can ensure that their assessments are comparable and reproducible, regardless of who conducts the audit or when it takes place, which is fundamental for establishing reliable model governance practices.
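For property-prediction models, accuracy against a fixed benchmark is often summarized with mean absolute error (MAE), root-mean-square error (RMSE), and the worst-case error. A minimal sketch follows; the band-gap values are hypothetical, and a real benchmark would also fix the exact structure set and reference method so the numbers are comparable between audits.

```python
import math

def benchmark_metrics(predicted, reference):
    """MAE, RMSE and maximum absolute error of predictions against reference values."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("predicted and reference must be non-empty and the same length")
    errors = [p - r for p, r in zip(predicted, reference)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    max_abs = max(abs(e) for e in errors)
    return {"mae": mae, "rmse": rmse, "max_abs_error": max_abs}

# Hypothetical band gaps (eV): model predictions vs. reference values for the same structures.
predicted = [1.10, 2.05, 0.00, 3.40]
reference = [1.12, 1.90, 0.10, 3.45]
print(benchmark_metrics(predicted, reference))
```

Reporting RMSE next to MAE is useful because RMSE weights large errors more heavily; a gap between the two hints at a few badly mispredicted materials.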

Leading Organizations in Materials Model Verification

Model auditing for generative materials workflows is emerging as a critical field at the intersection of AI and materials science and is currently in an early stage of development. The market is growing rapidly, driven by increasing demand for reproducible AI models in materials discovery. Technologically, the field is still maturing, with key players demonstrating varying levels of expertise. IBM leads with advanced AI auditing frameworks, while Google and Microsoft contribute significant machine learning validation tools. Companies like Boeing and Intel are developing specialized applications for materials verification, and academic-industry partnerships are forming to establish standards. The competitive landscape shows a mix of tech giants and specialized materials science companies working to address reproducibility challenges in generative materials design.

International Business Machines Corp.

Technical Solution: IBM has developed an advanced model auditing framework for generative materials workflows called "Materials Reproducibility Toolkit" (MRT). This solution implements a comprehensive approach to reproducibility validation through containerized execution environments that capture the complete computational context. IBM's framework leverages their expertise in quantum computing and materials science to address both classical and quantum-enhanced generative models. The MRT system implements automated differential testing that compares model outputs across multiple execution environments, identifying subtle inconsistencies that might affect reproducibility. Their approach includes specialized components for materials-specific validation, including crystal structure verification, molecular stability checks, and synthesis pathway validation. IBM's solution also implements continuous monitoring of model drift over time, automatically flagging when reproducibility metrics degrade beyond configurable thresholds. The system integrates with IBM's broader AI governance framework to provide audit trails and compliance documentation for materials discovery processes in regulated industries.
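The interface of IBM's toolkit is not described here, so the following is only a generic sketch of differential testing: run the same model in two environments, then compare outputs per candidate with explicit tolerances and report mismatches and candidates missing from either run. The candidate IDs, values, and tolerances are hypothetical.

```python
import math

def differential_test(run_a, run_b, rel_tol=1e-6, abs_tol=1e-9):
    """Compare two runs' outputs keyed by candidate id; return a list of (id, problem) pairs."""
    issues = []
    for key in sorted(set(run_a) | set(run_b)):
        if key not in run_a or key not in run_b:
            issues.append((key, "missing in one run"))
            continue
        a, b = run_a[key], run_b[key]
        if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            issues.append((key, f"{a!r} vs {b!r}"))
    return issues

# Hypothetical formation energies (eV/atom) from the same model in two environments,
# e.g. a CPU build and a GPU build.
cpu_run = {"mat-001": -1.2345678, "mat-002": -0.5000001, "mat-003": 0.1200000}
gpu_run = {"mat-001": -1.2345679, "mat-002": -0.5100000, "mat-004": 0.3000000}

for key, detail in differential_test(cpu_run, gpu_run):
    print(key, detail)
```

Here `mat-001` passes because its difference is within the relative tolerance, while `mat-002` and the two unmatched candidates are reported; choosing tolerances is itself an audit decision that should be recorded.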

Strengths: IBM's solution offers exceptional depth in handling complex materials models including those leveraging quantum computing resources. Their containerized approach ensures true reproducibility across diverse computing environments. The system provides robust governance features suitable for regulated industries. Weaknesses: The comprehensive nature of IBM's solution can introduce significant computational overhead, potentially slowing down research iteration cycles. Implementation requires specialized expertise in both materials science and advanced computing infrastructure.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has pioneered an enterprise-grade model auditing framework specifically designed for generative materials workflows called "MaterialCheck." This system implements a three-tier verification approach: (1) Deterministic reproducibility validation that ensures identical inputs produce consistent outputs across different computing environments; (2) Statistical reproducibility assessment that quantifies the variance in model predictions when subjected to controlled perturbations; and (3) Physical validation protocols that bridge computational predictions with experimental outcomes. Microsoft's solution integrates with Azure Machine Learning to provide automated regression testing for materials models, capturing detailed execution contexts including hardware specifications, library versions, and random seeds. Their framework implements continuous validation pipelines that automatically re-verify models when dependencies change, and provides confidence scoring for generated materials properties. MaterialCheck also features specialized visualization tools that highlight areas of prediction instability across different runs, helping researchers identify potential reproducibility issues before experimental validation.
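The first two tiers described above can be illustrated generically (MaterialCheck's own interface is not documented in this report). In this sketch a hypothetical toy generator stands in for a stochastic generative model. Tier 1 checks that the same seed yields bit-identical outputs via a hash; tier 2 treats different seeds as the controlled perturbation and bounds the coefficient of variation of a summary statistic. Using seeds as the only perturbation and the `max_cv = 0.25` threshold are simplifying assumptions.

```python
import hashlib
import random
import statistics

def generate_candidates(seed, n=5):
    """Hypothetical stand-in for a stochastic generative model: n lattice parameters (Å)."""
    rng = random.Random(seed)
    return [round(rng.uniform(3.0, 6.0), 6) for _ in range(n)]

def output_digest(values):
    """Hash a canonical text form of the outputs so runs can be compared cheaply."""
    canonical = ",".join(f"{v:.6f}" for v in values)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def deterministic_check(seed):
    """Tier 1: identical inputs and seed must produce identical outputs."""
    return output_digest(generate_candidates(seed)) == output_digest(generate_candidates(seed))

def statistical_check(seeds, max_cv=0.25):
    """Tier 2: across perturbed runs (here, different seeds), the coefficient of variation
    of the mean generated value must stay below max_cv."""
    means = [statistics.fmean(generate_candidates(s)) for s in seeds]
    cv = statistics.stdev(means) / abs(statistics.fmean(means))
    return cv, cv <= max_cv

print(deterministic_check(seed=7))
print(statistical_check(seeds=range(20)))
```

Tier 3, validation against experiment, cannot be reduced to a code check and is omitted here.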

Strengths: Microsoft's solution offers seamless integration with their cloud ecosystem, providing scalable validation capabilities with strong governance features. Their approach emphasizes practical reproducibility metrics that correlate with experimental outcomes rather than just computational consistency. Weaknesses: The system has higher implementation complexity for non-Azure environments and may require significant customization for specialized materials workflows not covered by standard templates. The comprehensive validation approach can introduce latency in rapid iteration cycles.

Key Innovations in Reproducibility Validation Techniques

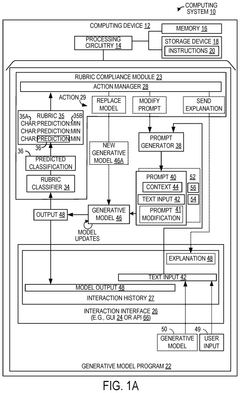

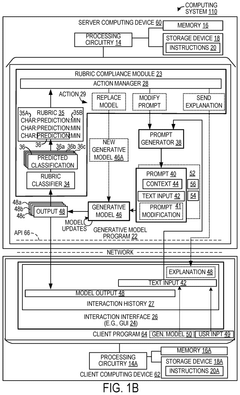

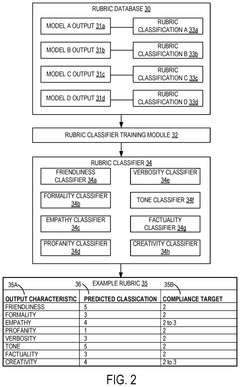

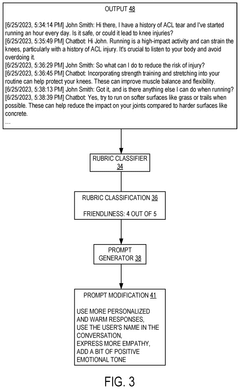

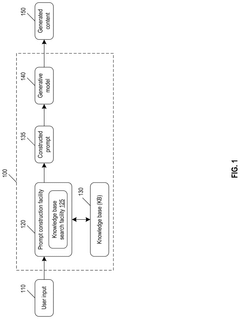

Monitoring compliance of a generative language model with an output characteristic rubric

Patent pending: US20250077795A1

Innovation

- A computing system is provided that monitors the compliance of a generative language model with a rubric of output characteristics by using a rubric classifier to generate predicted classifications for these characteristics, allowing for real-time adjustments to maintain desired output standards.

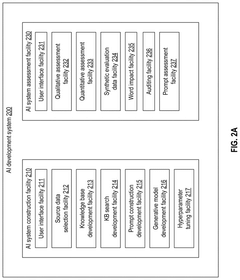

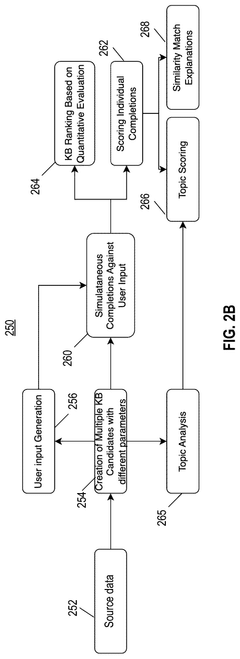

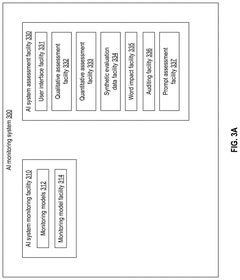

Systems and methods for development, assessment, and/or monitoring of a generative ai system

Patent pending: US20250190459A1

Innovation

- A method that constructs multiple generative AI systems from modeling blueprints, evaluates them against a set of quantitative metrics, and recommends their use based on these evaluations, while also incorporating retrieval-augmented generation and monitoring models to improve performance and prevent undesirable outputs.

Standardization Efforts for Materials Workflow Validation

The standardization of materials workflow validation represents a critical frontier in ensuring the reliability and reproducibility of generative materials research. Several international organizations have established working groups dedicated to developing comprehensive standards for validating computational materials workflows. The International Union of Pure and Applied Chemistry (IUPAC) has formed a specialized committee focused on computational chemistry validation protocols, while the Materials Research Society (MRS) has launched a dedicated task force addressing reproducibility in materials informatics and simulation.

These standardization efforts typically encompass several key components. First, they establish minimum reporting requirements for computational materials studies, including detailed documentation of algorithms, parameters, and computational environments. The Research Data Alliance (RDA) Materials Data Infrastructure Interest Group has proposed a framework requiring researchers to document hardware specifications, software versions, and parameter settings used in generative materials workflows.
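A run record covering hardware, software versions, and parameter settings can be captured with the Python standard library. The field names below are assumptions, not the RDA group's actual schema, and the parameters and package names are hypothetical examples. The fingerprint hashes only the parameters, so two machines running identical settings share it while their environment sections may differ.

```python
import hashlib
import json
import platform
import sys
from importlib import metadata

def environment_report(packages):
    """Collect hardware and software details commonly requested by reporting guidelines."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # record absence explicitly rather than dropping the key
    return {
        "python": sys.version.split()[0],
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "machine": platform.machine(),
        "processor": platform.processor(),
        "packages": versions,
    }

def run_record(parameters, packages):
    """Bundle parameter settings with the environment; fingerprint covers parameters only."""
    canonical = json.dumps(parameters, sort_keys=True).encode("utf-8")
    return {
        "parameters": parameters,
        "parameter_fingerprint": hashlib.sha256(canonical).hexdigest(),
        "environment": environment_report(packages),
    }

# Hypothetical generative-run settings; package names are examples only.
record = run_record(
    parameters={"model": "vae-demo", "latent_dim": 64, "seed": 1234, "temperature": 0.8},
    packages=["numpy", "pymatgen"],
)
print(json.dumps(record, indent=2))
```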

Second, standardization initiatives are developing benchmark datasets and reference calculations that serve as validation points for new methodologies. The Novel Materials Discovery (NOMAD) Laboratory has created a repository of reference calculations across different computational methods, enabling systematic comparison and validation of new approaches against established results.

Third, emerging standards address workflow provenance tracking: documenting the complete lineage of data transformations from raw inputs to final results. The Common Workflow Language (CWL) has been adapted specifically for materials science applications, allowing researchers to formally describe computational procedures in a machine-readable format that facilitates reproduction.
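Independently of any workflow language, the lineage idea can be sketched as a hash-chained log. Each step records hashes of its inputs and output, its parameters, and the hash of the previous entry, so editing any recorded step after the fact breaks verification. This is not CWL; the `ProvenanceLog` class and the two-step toy pipeline are hypothetical.

```python
import hashlib
import json

def digest(obj):
    """SHA-256 of a canonical JSON encoding of obj."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()

class ProvenanceLog:
    """Append-only log: each entry links to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, step, inputs, params, output):
        prev = self.entries[-1]["entry_hash"] if self.entries else None
        entry = {
            "step": step,
            "input_hashes": [digest(x) for x in inputs],
            "params": params,
            "output_hash": digest(output),
            "prev": prev,
        }
        entry["entry_hash"] = digest(entry)  # hash computed before this key is added
        self.entries.append(entry)
        return output

    def verify(self):
        prev = None
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "entry_hash"}
            if e["prev"] != prev or digest(body) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True

# Hypothetical two-step pipeline: filter raw structures, then rescale them (toy arithmetic).
log = ProvenanceLog()
raw = [{"id": 1, "a": 5.40}, {"id": 2, "a": 9.99}]
kept = log.record("filter", [raw], {"max_a": 8.0}, [s for s in raw if s["a"] <= 8.0])
scaled = log.record("rescale", [kept], {"scale": 1.01},
                    [{**s, "a": round(s["a"] * 1.01, 4)} for s in kept])
print(log.verify())  # True
log.entries[0]["params"]["max_a"] = 10.0  # tamper with a recorded parameter
print(log.verify())  # False
```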

Verification and validation metrics constitute another crucial aspect of these standardization efforts. The Molecular Sciences Software Institute (MolSSI) has proposed quantitative metrics for assessing the reliability of molecular simulations, including uncertainty quantification protocols and sensitivity analysis requirements.
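The specific MolSSI metrics are not listed here, but one widely used form of sensitivity analysis is a one-at-a-time (OAT) local analysis: perturb each input slightly around a baseline and report the normalized sensitivity (relative change in output per relative change in input). The model and baseline below are hypothetical; global methods such as variance-based indices are often preferred when inputs interact strongly.

```python
def toy_property(params):
    """Hypothetical property model of three inputs."""
    return params["x1"] ** 2 + 0.5 * params["x2"] + 0.01 * params["x3"]

def oat_sensitivities(model, baseline, rel_step=0.01):
    """Normalized local sensitivities (dY/Y)/(dX/X) from central finite differences."""
    y0 = model(baseline)
    result = {}
    for name, x0 in baseline.items():
        h = rel_step * x0 if x0 != 0 else rel_step
        up = dict(baseline, **{name: x0 + h})
        down = dict(baseline, **{name: x0 - h})
        dy_dx = (model(up) - model(down)) / (2 * h)
        result[name] = dy_dx * x0 / y0 if y0 != 0 else float("nan")
    return result

baseline = {"x1": 2.0, "x2": 4.0, "x3": 10.0}
ranked = sorted(oat_sensitivities(toy_property, baseline).items(), key=lambda kv: -abs(kv[1]))
for name, s in ranked:
    print(f"{name:4s} {s:+.4f}")
```

Ranking inputs this way tells an auditor which parameters must be reported most precisely for a result to be reproducible.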

Industry participation has accelerated standardization progress, with companies like Citrine Informatics and Materials Design contributing open-source validation tools and frameworks. The FAIR (Findable, Accessible, Interoperable, Reusable) principles have been extended specifically for materials workflows through initiatives like FAIRmat, establishing guidelines for data management practices that support reproducibility.

Despite these advances, challenges remain in harmonizing standards across different computational domains and experimental validation approaches. Current standardization efforts are increasingly focusing on integrating machine learning validation techniques with traditional computational chemistry validation protocols to address the unique challenges posed by AI-driven materials discovery workflows.

These standardization efforts typically encompass several key components. First, they establish minimum reporting requirements for computational materials studies, including detailed documentation of algorithms, parameters, and computational environments. The Research Data Alliance (RDA) Materials Data Infrastructure Interest Group has proposed a framework requiring researchers to document hardware specifications, software versions, and parameter settings used in generative materials workflows.

Second, standardization initiatives are developing benchmark datasets and reference calculations that serve as validation points for new methodologies. The Novel Materials Discovery (NOMAD) Laboratory has created a repository of reference calculations across different computational methods, enabling systematic comparison and validation of new approaches against established results.

Third, emerging standards address workflow provenance tracking - documenting the complete lineage of data transformations from raw inputs to final results. The Common Workflow Language (CWL) has been adapted specifically for materials science applications, allowing researchers to formally describe computational procedures in a machine-readable format that facilitates reproduction.

Verification and validation metrics constitute another crucial aspect of these standardization efforts. The Molecular Sciences Software Institute (MolSSI) has proposed quantitative metrics for assessing the reliability of molecular simulations, including uncertainty quantification protocols and sensitivity analysis requirements.
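
Block averaging is one widely used uncertainty-quantification technique for correlated simulation output, such as energies sampled along a molecular dynamics trajectory. The sketch below is a generic textbook version, not a protocol taken from MolSSI, and `block_standard_error` is a hypothetical helper name.

```python
import math
import statistics


def block_standard_error(series, n_blocks):
    """Standard error of the mean of a correlated series via block averaging.

    The series is cut into n_blocks contiguous, equal-length blocks (trailing
    samples that do not fill a block are dropped). If each block is much longer
    than the correlation time, the block means are roughly independent, so
    their spread gives a more honest error bar than treating every sample
    as independent.
    """
    if n_blocks < 2:
        raise ValueError("need at least two blocks")
    block_len = len(series) // n_blocks
    if block_len == 0:
        raise ValueError("series too short for the requested number of blocks")
    block_means = [
        statistics.fmean(series[i * block_len:(i + 1) * block_len])
        for i in range(n_blocks)
    ]
    return statistics.stdev(block_means) / math.sqrt(n_blocks)
```

In practice the estimate is recomputed for several block counts. It should level off once blocks are longer than the correlation time; a value that keeps growing as blocks get longer suggests the run is too short to support the reported error bar.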

Computational Infrastructure Requirements for Robust Auditing

Robust model auditing for generative materials workflows demands substantial computational infrastructure to ensure reproducibility and reliability. The infrastructure must support both the execution of complex materials simulations and the verification processes that validate model outputs. High-performance computing (HPC) clusters with hardware accelerators, particularly GPUs and TPUs, are needed both for the computationally intensive simulations themselves and for audit procedures, which often rerun the same workloads many times in parallel.
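
One audit procedure that benefits from parallel hardware is rerunning a generative step with a fixed seed and checking that the outputs match. On GPUs this check is not a formality: some parallel kernels accumulate floating-point values in a non-deterministic order, so identical seeds do not always give bitwise-identical results. The CPU-only sketch below uses a hypothetical `generate_candidates` function as a stand-in for a real generative model; `rerun_audit` hashes each output and reports whether all repeats agree.

```python
import hashlib
import json
import random


def generate_candidates(seed, n=5):
    """Hypothetical stand-in for a stochastic generative step.

    A private Random instance makes the output depend only on `seed`,
    not on global random state touched elsewhere in the process.
    """
    rng = random.Random(seed)
    elements = ["Li", "Fe", "Mn", "O", "P"]
    return [
        {"composition": sorted(rng.sample(elements, 3)), "score": round(rng.random(), 6)}
        for _ in range(n)
    ]


def fingerprint(result):
    """SHA-256 of a canonical JSON encoding of the result."""
    return hashlib.sha256(json.dumps(result, sort_keys=True).encode()).hexdigest()


def rerun_audit(fn, seed, repeats=3):
    """Run fn(seed) several times; True only if every output is identical."""
    digests = {fingerprint(fn(seed)) for _ in range(repeats)}
    return len(digests) == 1


print(rerun_audit(generate_candidates, seed=42))  # True for this deterministic stand-in
```

Comparing hashes rather than full outputs keeps the check cheap to distribute: each worker can report a 64-character digest instead of shipping its results back.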

Storage systems represent another critical component, requiring not only large capacity but also high-speed access capabilities. Distributed file systems with redundancy mechanisms are necessary to maintain audit trails and version histories of models, datasets, and simulation results. These systems should implement comprehensive metadata management to facilitate traceability across the entire workflow lifecycle.
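
Audit trails on such storage usually rely on checksums, so that any silent change to a model checkpoint or dataset can be detected later. The sketch below builds a manifest mapping each file under a directory to its SHA-256 digest, together with free-form metadata, and then re-verifies it. The function names and manifest layout are assumptions, not the format of any particular storage system.

```python
import hashlib
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large checkpoints need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root, metadata):
    """Map every file under root (as a relative POSIX path) to its SHA-256 digest."""
    root = Path(root)
    files = {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }
    return {"metadata": metadata, "files": files}


def verify_manifest(root, manifest):
    """Return sorted relative paths that were changed, deleted, or added."""
    current = build_manifest(root, manifest["metadata"])["files"]
    expected = manifest["files"]
    changed_or_deleted = [k for k, v in expected.items() if current.get(k) != v]
    added = [k for k in current if k not in expected]
    return sorted(changed_or_deleted + added)
```

Storing the manifest alongside each model version gives auditors a cheap first check before any expensive recomputation.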

Container technologies such as Docker, together with orchestration platforms such as Kubernetes, have become indispensable for ensuring consistent execution environments across different computational platforms. By encapsulating dependencies and runtime configurations, containers allow the software side of an experiment to be replicated closely, which is a fundamental requirement for meaningful auditing; differences in hardware, drivers, or kernels outside the container can still affect numerical results. Integration with workflow management systems like Airflow or Nextflow further enhances reproducibility by formalizing execution sequences and parameter settings.
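
The core of what a workflow manager formalizes can be shown with the standard-library `graphlib.TopologicalSorter` (Python 3.9+): tasks run in dependency order, and every execution is logged with its parameters. This is a toy sketch with hypothetical task names and a hypothetical `run_workflow` helper. It does not mirror the Airflow or Nextflow APIs, which add scheduling, retries, and distributed execution.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, deps, params):
    """Run tasks in dependency order and return (outputs, execution_record).

    tasks:  name -> callable(task_params, upstream_outputs) -> output
    deps:   name -> set of upstream task names (every dependency must also be
            a key of `tasks`; tasks without dependencies may be omitted)
    params: name -> dict of parameters for that task
    """
    graph = {name: set(deps.get(name, ())) for name in tasks}
    outputs, record = {}, []
    for name in TopologicalSorter(graph).static_order():
        task_params = params.get(name, {})
        upstream = {d: outputs[d] for d in sorted(graph[name])}
        outputs[name] = tasks[name](task_params, upstream)
        record.append({"task": name, "params": task_params, "upstream": sorted(upstream)})
    return outputs, record


# Hypothetical three-step pipeline: generate -> filter -> score.
tasks = {
    "generate": lambda p, up: list(range(p["n"])),
    "filter": lambda p, up: [x for x in up["generate"] if x % 2 == 0],
    "score": lambda p, up: sum(up["filter"]) * p["scale"],
}
deps = {"filter": {"generate"}, "score": {"filter"}}
params = {"generate": {"n": 5}, "score": {"scale": 10}}
outputs, record = run_workflow(tasks, deps, params)
print(outputs["score"])  # 60
```

The returned `record` is the auditable part: it states which tasks ran, in what order, and with which parameters.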

Network infrastructure considerations cannot be overlooked, particularly when auditing involves distributed teams or federated learning approaches. Low-latency, high-bandwidth connections are necessary to support real-time collaboration and efficient data transfer between computational resources. Security measures must be implemented to protect proprietary materials data while still enabling transparent auditing processes.
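
One possible way to reconcile confidentiality with transparency, assumed here for illustration rather than prescribed by any auditing standard, is a salted hash commitment. The data owner publishes a digest of the exact dataset used in an audited run and can later disclose the data and salt to an authorized auditor, who checks that nothing was swapped. The helpers `commit` and `verify_commitment` are hypothetical; this provides integrity evidence only and is not a complete security design.

```python
import hashlib
import hmac
import secrets


def commit(data: bytes):
    """Return (commitment, salt). Publish the commitment; keep data and salt private."""
    # a random salt stops outsiders from confirming guesses of low-entropy data
    salt = secrets.token_bytes(32)
    return hashlib.sha256(salt + data).hexdigest(), salt


def verify_commitment(commitment: str, data: bytes, salt: bytes) -> bool:
    """Auditor-side check once the owner discloses data and salt under agreement."""
    # constant-time comparison of the two hex digests
    return hmac.compare_digest(hashlib.sha256(salt + data).hexdigest(), commitment)


# Hypothetical proprietary dataset contents.
dataset = b"composition,target\nA,1.0\n"
commitment, salt = commit(dataset)
print(verify_commitment(commitment, dataset, salt))         # True
print(verify_commitment(commitment, dataset + b"x", salt))  # False
```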

Automated monitoring systems represent a key infrastructure component, continuously tracking resource utilization, model performance metrics, and system health indicators. These systems should incorporate anomaly detection capabilities to identify potential reproducibility issues before they compromise audit results. Integration with notification frameworks ensures timely intervention when discrepancies are detected.
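
A simple anomaly detector of the kind described above flags a metric value that deviates sharply from its recent history. The sketch below uses a trailing-window z-score, which is one swapped-in choice among many (control charts and isolation forests are common alternatives). The window size, threshold, and the `flag_anomalies` name are assumptions.

```python
import statistics


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values.

    Intended for a per-run metric such as validation MAE from repeated audit runs.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a spread")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        spread = statistics.stdev(history)
        if spread == 0:
            # perfectly stable history: any change at all is worth a look
            if values[i] != mean:
                flagged.append(i)
        elif abs(values[i] - mean) / spread > threshold:
            flagged.append(i)
    return flagged


# Illustrative metric stream; index 6 is an injected spike.
mae_per_run = [0.10, 0.11, 0.10, 0.12, 0.11, 0.10, 0.45, 0.11]
print(flag_anomalies(mae_per_run))  # [6]
```

After a spike, the outlier sits inside the window for the next few runs and inflates the spread, so a production detector might exclude flagged values from its history.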

Scalability remains a paramount concern as materials workflows grow in complexity. Infrastructure should support dynamic resource allocation, allowing audit processes to scale horizontally during peak demand periods. Cloud-based solutions offer particular advantages in this regard, providing elastic computing resources that can be provisioned on demand, though considerations regarding data sovereignty and transfer costs must be carefully evaluated.
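
Dynamic allocation ultimately needs a rule that turns demand into a worker count. The hypothetical policy below sizes the pool to drain the queue of audit jobs, clamped between a floor and a ceiling, with the ceiling serving as one way to bound cloud spend. It is similar in spirit to the proportional rules used by orchestrators such as the Kubernetes Horizontal Pod Autoscaler, but it is not that component's algorithm.

```python
import math


def desired_workers(queued_jobs, jobs_per_worker, min_workers=1, max_workers=64):
    """Hypothetical horizontal-scaling rule for a pool of audit workers.

    Provision enough workers to drain the queue, but never fewer than
    min_workers (a warm baseline) or more than max_workers (a cost cap).
    """
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    needed = math.ceil(queued_jobs / jobs_per_worker)
    return max(min_workers, min(max_workers, needed))


for queue in (0, 95, 10_000):
    # prints "0 1", then "95 10", then "10000 64"
    print(queue, desired_workers(queue, jobs_per_worker=10))
```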
