Defending Against Mode Collapse In Materials Generative Models

SEP 1, 2025 | 9 MIN READ

Materials Generative Models Background and Objectives

Materials generative models represent a transformative approach in computational materials science, leveraging artificial intelligence to accelerate the discovery and design of novel materials. These models have evolved from simple statistical approaches to sophisticated deep learning architectures capable of generating molecular structures, crystal lattices, and material properties with increasing fidelity. The field has witnessed significant growth since 2015, with generative adversarial networks (GANs), variational autoencoders (VAEs), and more recently, diffusion models emerging as dominant paradigms.

Mode collapse represents a critical challenge in materials generative models, particularly in GANs, where the generator produces limited varieties of outputs regardless of input variation. This phenomenon severely restricts the diversity of generated materials, undermining the fundamental purpose of these models in materials discovery. When mode collapse occurs, the generative model fails to capture the full distribution of possible materials, instead converging to a small subset of "safe" but unrepresentative examples.

The objective of addressing mode collapse is to ensure generative models can explore the vast chemical and structural space of materials comprehensively. This is particularly crucial in materials science where discovering novel compounds with specific properties often requires exploring unusual or previously unexplored regions of the design space. Effective generative models must balance novelty with physical realizability, generating diverse yet synthesizable material candidates.

Current research aims to develop robust techniques that can detect, prevent, and mitigate mode collapse in materials generative models. This includes architectural innovations, specialized loss functions, regularization techniques, and physics-informed constraints that guide the generative process while maintaining diversity. The goal is to create models that can generate materials spanning the entire feasible design space rather than clustering around a few modes.

The evolution of these models is closely tied to advances in computational resources and algorithmic efficiency. Early materials generative models were limited by computational constraints, but modern approaches leverage high-performance computing and specialized hardware accelerators to train increasingly complex architectures on larger materials datasets.

Looking forward, the field is moving toward multi-modal generative models that can simultaneously consider structural, compositional, and property-based aspects of materials. The ultimate objective is to develop generative frameworks that can reliably produce diverse, novel, and physically realizable materials with tailored properties for applications ranging from energy storage and catalysis to structural materials and electronics.

Market Analysis for Materials Design AI Solutions

The AI-driven materials design market is growing rapidly, fueled by increasing demand for novel materials across industries including aerospace, automotive, electronics, and pharmaceuticals. Current market valuations place the global materials informatics sector at approximately $200 million, with projections of $500 million by 2026, a compound annual growth rate of 20.3%. This expansion is driven primarily by the shorter R&D timelines and lower costs that AI solutions offer compared with traditional materials discovery approaches.

Within this expanding market, generative models for materials design represent a particularly promising segment. These models can accelerate materials discovery by orders of magnitude, reducing development cycles from years to months or even weeks. Companies implementing these solutions report average R&D cost reductions of 30-40% and time-to-market improvements of 50-60% for new materials.

The market demand is further segmented by application areas. The semiconductor industry constitutes the largest share at 28%, followed by energy materials at 22%, biomaterials at 18%, and structural materials at 15%. The remaining 17% encompasses various specialized applications including catalysts, polymers, and composites. This distribution reflects the critical need for advanced materials in high-tech and energy transition sectors.

Regional analysis reveals North America currently dominates the market with 42% share, followed by Europe (27%), Asia-Pacific (24%), and rest of world (7%). However, the Asia-Pacific region is experiencing the fastest growth rate at 25.8% annually, driven by substantial investments in materials science infrastructure in China, Japan, and South Korea.

Customer segmentation shows large corporations account for 65% of market revenue, while academic and government research institutions represent 25%. The remaining 10% comes from startups and SMEs, though this segment is growing rapidly as barriers to entry decrease through cloud-based AI platforms and open-source tools.

Key market drivers include increasing computational power, expanding materials databases, advances in machine learning algorithms, and growing pressure for sustainable materials development. However, challenges remain, particularly regarding model reliability and the "mode collapse" phenomenon, which limits the diversity of generated materials and creates significant barriers to commercial adoption. Market surveys indicate that 78% of potential enterprise customers cite concerns about AI model reliability as their primary hesitation for full-scale implementation.

Mode Collapse Challenges in Materials Generative Models

Mode collapse represents one of the most significant challenges in materials generative models, particularly in Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) applied to materials science. This phenomenon occurs when the generator produces limited varieties of outputs, failing to capture the full diversity of the target distribution. In materials science, where structural and property diversity is crucial, mode collapse severely limits the utility of generative models for materials discovery.

The fundamental mechanism behind mode collapse involves the generator identifying a small subset of material structures or properties that successfully "fool" the discriminator, leading to convergence on these limited patterns. This is particularly problematic in materials science where the property space is inherently multi-modal, with distinct clusters of materials exhibiting similar properties but different structures.
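The collapse mechanism described above can be made concrete with a toy mode-coverage check. The following is a minimal sketch, not a production diagnostic: it assumes a hypothetical 2-D descriptor space and that the mode centers (`CENTERS`) are already known, whereas in practice they would have to be estimated, for example by clustering descriptors of the training set. The function names and the `min_fraction` threshold are illustrative choices.

```python
import math
import random


def nearest_mode(sample, centers):
    """Return the index of the mode center closest to `sample` (Euclidean)."""
    return min(range(len(centers)), key=lambda k: math.dist(sample, centers[k]))


def mode_coverage(samples, centers, min_fraction=0.01):
    """Fraction of modes that receive at least `min_fraction` of the samples."""
    counts = [0] * len(centers)
    for s in samples:
        counts[nearest_mode(s, centers)] += 1
    needed = min_fraction * len(samples)
    covered = sum(1 for c in counts if c >= needed)
    return covered / len(centers), counts


def sample_around(centers, n, rng, spread=0.1):
    """Draw n points, each a Gaussian perturbation of a randomly chosen center."""
    points = []
    for _ in range(n):
        cx, cy = rng.choice(centers)
        points.append((rng.gauss(cx, spread), rng.gauss(cy, spread)))
    return points


# Hypothetical 2-D descriptor space with four well-separated modes.
CENTERS = [(0.0, 0.0), (0.0, 5.0), (5.0, 0.0), (5.0, 5.0)]
rng = random.Random(0)
healthy = sample_around(CENTERS, 400, rng)        # stand-in for a generator covering all modes
collapsed = sample_around(CENTERS[:1], 400, rng)  # stand-in for a generator stuck on one mode

healthy_cov, _ = mode_coverage(healthy, CENTERS)
collapsed_cov, collapsed_counts = mode_coverage(collapsed, CENTERS)
print(healthy_cov, collapsed_cov)
```

A coverage value well below 1 signals that some clusters of the target distribution are never produced, which is the signature of collapse in this simplified setting.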

Current detection methods for mode collapse in materials generative models include distribution-level metrics such as Fréchet Inception Distance (FID), Maximum Mean Discrepancy (MMD), and property distribution analysis. FID was designed for images and relies on features from an Inception network, so applying it to materials requires substituting a domain-appropriate feature extractor. More generally, these metrics often fail to capture the nuanced structural diversity required in materials science applications, where subtle atomic arrangements can lead to dramatically different properties.
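As an illustration of the MMD metric mentioned above, the sketch below computes a biased (V-statistic) estimate of squared MMD with a Gaussian kernel between a reference set and a generated set. It is a dependency-free toy, assuming one-dimensional features with two modes; the `bandwidth` value and the sample construction are assumptions made for the example, and real materials descriptors would be higher-dimensional and need a tuned kernel.

```python
import math
import random


def rbf_kernel(x, y, bandwidth):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mean_kernel(xs, ys, bandwidth):
    """Average kernel value over all pairs (x, y) with x in xs and y in ys."""
    total = sum(rbf_kernel(x, y, bandwidth) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(reference, generated, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two sample sets."""
    return (
        mean_kernel(reference, reference, bandwidth)
        + mean_kernel(generated, generated, bandwidth)
        - 2.0 * mean_kernel(reference, generated, bandwidth)
    )


def draw(modes, per_mode, rng, spread=0.3):
    """Draw `per_mode` one-dimensional feature vectors around each mode."""
    return [(rng.gauss(m, spread),) for m in modes for _ in range(per_mode)]


rng = random.Random(0)
reference = draw([-2.0, 2.0], 50, rng)   # hypothetical two-mode training features
healthy = draw([-2.0, 2.0], 50, rng)     # generated set covering both modes
collapsed = draw([2.0], 100, rng)        # generated set stuck on a single mode

mmd_healthy = mmd_squared(reference, healthy)
mmd_collapsed = mmd_squared(reference, collapsed)
print(mmd_healthy, mmd_collapsed)
```

In this toy setup the collapsed set yields a much larger value than the healthy one. The limitation noted in the text still applies: the result is only as informative as the features fed to the kernel.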

The consequences of mode collapse in materials discovery are severe. When generative models fail to explore the full design space, they miss potentially revolutionary materials with unique property combinations. This limitation undermines the primary advantage of using AI for materials discovery – the ability to efficiently explore vast, uncharted regions of the materials space.

Several factors exacerbate mode collapse in materials generative models. The inherent sparsity of valid materials in the vast chemical space creates imbalanced training distributions. Additionally, the multi-scale nature of materials properties, spanning from atomic to macroscopic scales, creates complex, multi-modal distributions that are difficult for generative models to capture fully.

Recent research has identified that mode collapse is particularly prevalent when generating materials with specific targeted properties, as the optimization process tends to converge on a limited set of solutions that satisfy the constraints. This becomes especially problematic in inverse design scenarios where diverse candidate materials are desired for a given set of target properties.

The challenge is further complicated by the limited availability of high-quality materials data compared to other domains where generative models have been successful, such as image generation. This data scarcity makes it difficult to train models that can generalize across the full spectrum of possible materials.

Current Strategies for Mode Collapse Mitigation

01 Techniques to address mode collapse in generative models

Various techniques have been developed to address mode collapse in generative models for materials science. These include diversity-promoting regularization methods, architectural modifications, and training strategies that encourage the model to explore the full distribution of possible material structures rather than converging on a limited subset. These approaches help ensure that generative models can produce diverse and representative samples of materials with desired properties.

- Techniques to mitigate mode collapse in generative models: Various techniques can be employed to address mode collapse in materials generative models. These include using regularization methods, implementing diversity-promoting objectives, and employing ensemble approaches. By incorporating these techniques, the generative models can better capture the full distribution of material properties and structures, preventing the model from producing limited or repetitive outputs that fail to represent the diversity of the target domain.

- Adversarial training approaches for materials generation: Adversarial training frameworks, such as GANs (Generative Adversarial Networks), can be modified to prevent mode collapse when generating material structures. These modifications include gradient penalties, spectral normalization, and alternative adversarial objectives that encourage the generator to explore diverse modes of the data distribution. These approaches help maintain diversity in the generated materials while ensuring high quality and realistic outputs.

- Latent space optimization for diverse materials discovery: Optimizing the latent space representation in generative models can help prevent mode collapse in materials discovery applications. Techniques such as disentangled representations, controlled sampling strategies, and latent space regularization ensure that the model explores different regions of the materials design space. This approach enables the discovery of diverse material candidates with desired properties rather than converging to a limited set of solutions.

- Multi-objective optimization frameworks for materials design: Multi-objective optimization frameworks can be integrated with generative models to address mode collapse in materials design. These frameworks simultaneously optimize for multiple material properties while maintaining diversity in the generated outputs. By explicitly incorporating diversity as an objective alongside performance metrics, these approaches ensure that the generative model explores various regions of the materials design space rather than converging to a single solution.

- Data augmentation and preprocessing strategies: Data augmentation and preprocessing strategies can help mitigate mode collapse in materials generative models. These strategies include synthetic data generation, feature engineering, and balanced sampling techniques that ensure the model is exposed to diverse examples during training. By improving the quality and diversity of the training data, these approaches help the generative model learn a more comprehensive representation of the materials space, reducing the likelihood of mode collapse.
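One simple form a diversity-promoting objective from the list above could take is a bonus on the mean pairwise distance within a generated batch. The sketch below is illustrative only: the function names, the `weight` value, and the example batches are assumptions, and in real training the same quantity would be computed on tensors inside an automatic-differentiation framework so that gradients reach the generator.

```python
import math
from itertools import combinations


def mean_pairwise_distance(batch):
    """Average Euclidean distance over all unordered pairs in a batch."""
    pairs = list(combinations(batch, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def regularized_generator_loss(adversarial_loss, batch, weight=0.1):
    """Subtract a diversity bonus so that spread-out batches get a lower loss."""
    return adversarial_loss - weight * mean_pairwise_distance(batch)


# Two hypothetical batches of generated descriptors with the same adversarial loss.
diverse_batch = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0), (3.0, 4.0)]
collapsed_batch = [(1.0, 1.0), (1.0, 1.0), (1.0, 1.0), (1.0, 1.0)]

loss_diverse = regularized_generator_loss(0.7, diverse_batch)
loss_collapsed = regularized_generator_loss(0.7, collapsed_batch)
print(loss_diverse, loss_collapsed)
```

The collapsed batch receives no bonus and therefore keeps the higher loss. The weight has to be balanced against sample quality, since an overly large bonus rewards spread regardless of physical plausibility.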

02 Adversarial training methods for materials generation

Adversarial training methods, particularly Generative Adversarial Networks (GANs), are used for materials discovery but are prone to mode collapse. Modified adversarial approaches incorporate specialized loss functions, gradient penalties, and spectral normalization to stabilize training and prevent the generator from producing limited varieties of materials. These modifications help maintain diversity in the generated material structures while still achieving high-quality outputs.

03 Latent space optimization for materials diversity

Latent space optimization techniques help prevent mode collapse by ensuring proper exploration of the material property space. Methods include variational approaches, disentangled representations, and regularization of the latent space to maintain smooth transitions between different material properties. These techniques create more structured latent spaces that can represent the full diversity of possible materials rather than collapsing to a few modes.

04 Multi-objective optimization for materials generation

Multi-objective optimization approaches help overcome mode collapse by simultaneously optimizing for multiple material properties or characteristics. By considering diverse objectives during the generative process, these methods naturally encourage exploration of different regions of the materials space. This approach prevents the model from focusing exclusively on optimizing a single property, which often leads to mode collapse and limited diversity in generated materials.

05 Data augmentation and curriculum learning for materials models

Data augmentation and curriculum learning strategies help prevent mode collapse by gradually increasing the complexity of the training process and enhancing the diversity of the training data. These approaches include synthetic data generation, progressive training schedules, and targeted sampling methods that focus on underrepresented regions of the materials space. By ensuring the model is exposed to diverse examples during training, these techniques help maintain generative diversity.
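Targeted sampling of underrepresented regions can be sketched with inverse-frequency weights, so that each class of material is drawn about equally often regardless of how many examples it has. The example below is a minimal illustration under stated assumptions: the structure-family labels and their counts are hypothetical, and a real pipeline would derive the groups from its own dataset, for example from cluster assignments.

```python
import random
from collections import Counter


def balanced_weights(labels):
    """Per-example weights so each class contributes equal total probability."""
    counts = Counter(labels)
    return [1.0 / (len(counts) * counts[label]) for label in labels]


# Hypothetical imbalanced dataset: one structure-family label per training example.
labels = ["perovskite"] * 90 + ["spinel"] * 9 + ["garnet"] * 1
weights = balanced_weights(labels)

# Draw a training stream with replacement using the balanced weights.
rng = random.Random(0)
drawn = rng.choices(labels, weights=weights, k=3000)
drawn_counts = Counter(drawn)
print(drawn_counts)
```

Each family ends up with roughly a third of the draws even though one of them has a single example. Oversampling this aggressively repeats rare examples many times, so it is usually paired with augmentation to limit overfitting.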

Key Industry Players in Materials AI Research

The materials generative model market is in an early growth phase, characterized by increasing research focus on addressing mode collapse challenges. The market is expanding rapidly as AI applications in materials science gain traction, though exact size remains difficult to quantify due to its emerging nature. Technologically, the field is still developing, with major semiconductor and materials companies like SK hynix, Micron Technology, and TSMC investing in research to overcome generative AI limitations. Academic institutions (Beihang University, Sichuan University) and tech giants (Google) are also contributing significantly. The competitive landscape features collaboration between traditional materials manufacturers and AI specialists, with companies like Preferred Networks and JSR Corp developing specialized solutions to enhance model stability and diversity in materials generation.

Preferred Networks Corp.

Technical Solution: Preferred Networks has developed a sophisticated approach to addressing mode collapse in materials generative models through their ChainerMaterials framework. Their solution implements energy-based generative models with carefully designed energy functions that explicitly penalize lack of diversity in generated samples. They've pioneered the use of Wasserstein distance metrics specifically calibrated for materials science applications, providing more stable gradients during training and preventing mode collapse. Preferred Networks' approach incorporates a novel form of minibatch diversity regularization that compares structural and property features within generated batches to ensure comprehensive exploration of the materials design space. Their framework includes adaptive noise injection techniques that dynamically adjust based on detected mode collapse symptoms, introducing controlled stochasticity to escape local optima. They've also developed specialized attention mechanisms for materials generative models that focus on maintaining diversity in critical structural features while allowing convergence in less important aspects.

Strengths: Strong theoretical foundation in energy-based models; excellent balance between computational efficiency and model expressiveness.

Weaknesses: Requires careful hyperparameter tuning; some techniques may be difficult to implement without specialized expertise in both materials science and deep learning.

Google LLC

Technical Solution: Google has developed advanced techniques to combat mode collapse in materials generative models through their TensorFlow Materials framework. Their approach combines adversarial training with diversity-promoting regularization techniques that penalize lack of variety in generated samples. Google's research teams have implemented spectral normalization and orthogonal regularization in their generative models to stabilize training and prevent mode collapse when generating novel material structures. They've also pioneered the use of minibatch discrimination techniques specifically tailored for materials science applications, allowing the discriminator to compare samples within a batch to identify when the generator is producing too similar outputs. Their recent work incorporates physical constraints and domain knowledge as additional loss terms to ensure generated materials maintain realistic properties while exploring diverse chemical and structural spaces.

Strengths: Extensive computational resources and AI expertise allow for implementation of complex regularization techniques; integration with broader machine learning ecosystem enables rapid deployment and testing.

Weaknesses: Solutions may be computationally expensive for smaller research groups; approaches sometimes prioritize mathematical elegance over domain-specific materials science constraints.

Technical Analysis of Anti-Collapse Algorithms

Learning method, learning device, model generation method, and program

Patent WO2020031802A1

Innovation

- The learning method updates the discriminator's parameters to concavify or regularize the generator's loss function in the region it can sample, smoothing the loss function surface and diffusing gradient vectors, thereby reducing mode collapse.

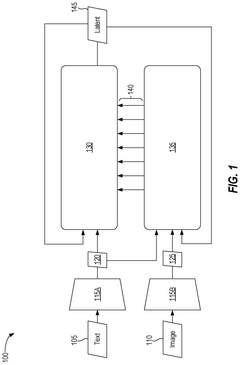

Avoiding generative mode collapse using guided image diffusion machine learning models

Patent Pending US20250200429A1

Innovation

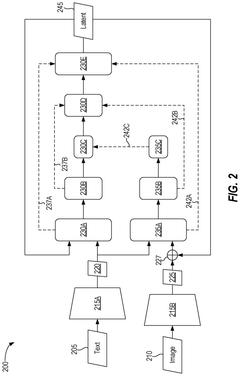

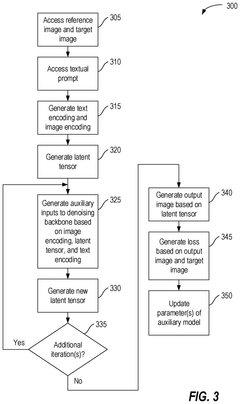

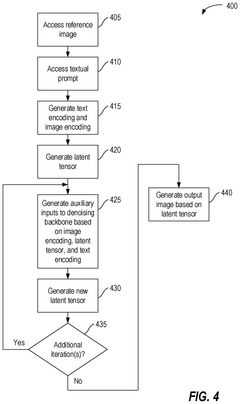

- A new architecture for diffusion machine learning models is introduced, where a diffusion model is frozen and an auxiliary machine learning model is trained to assist the denoising backbone during the inverse diffusion phase, using inputs like reference image embeddings, prompt embeddings, and noisy latent tensors to generate intermediate tensors that guide the denoising process.

Computational Resource Requirements

Addressing mode collapse in materials generative models requires substantial computational resources due to the complexity of the problem and the sophisticated algorithms involved. High-performance computing (HPC) infrastructure is essential, with most research teams utilizing clusters equipped with multiple GPUs, typically NVIDIA A100 or V100 series. These setups commonly feature 8-16 GPUs with 32-80GB memory per unit to handle the intensive training processes of generative adversarial networks (GANs) and other generative models for materials discovery.

Memory requirements are particularly demanding when implementing defensive mechanisms against mode collapse. Training datasets for materials science applications often contain millions of molecular structures or crystal configurations, requiring 100-500GB of RAM for efficient processing. Additionally, storage systems must accommodate datasets ranging from several hundred gigabytes to multiple terabytes, with high-speed SSD storage recommended for training data access.

The computational complexity increases significantly when implementing regularization techniques and diversity-promoting mechanisms. Training times for robust materials generative models typically range from several days to weeks on dedicated GPU clusters. For instance, implementing Wasserstein GANs with gradient penalty or spectral normalization techniques to prevent mode collapse can increase computational overhead by 30-50% compared to standard GAN implementations.
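To show where some of the extra work in spectral normalization comes from, the sketch below estimates a weight matrix's largest singular value by power iteration and rescales the matrix by it. This is a plain-Python illustration, not a reference implementation: the function names and the small example matrix are assumptions, and deep learning libraries typically run only one iteration per training step while reusing the vector from the previous step. It does not reproduce the overhead figures quoted above.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def transpose(matrix):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*matrix)]


def normalize(vector):
    """Scale a non-zero vector to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector]


def spectral_norm(weight, iterations=50):
    """Estimate the largest singular value of a non-zero matrix by power iteration."""
    weight_t = transpose(weight)
    v = normalize([1.0] * len(weight[0]))
    u = normalize(matvec(weight, v))
    for _ in range(iterations):
        # Each iteration costs two additional matrix-vector products.
        v = normalize(matvec(weight_t, u))
        u = normalize(matvec(weight, v))
    return sum(a * b for a, b in zip(u, matvec(weight, v)))


def spectrally_normalize(weight, iterations=50):
    """Rescale `weight` so that its largest singular value is approximately 1."""
    sigma = spectral_norm(weight, iterations)
    return [[w / sigma for w in row] for row in weight]


W = [[3.0, 0.0], [0.0, 1.0]]  # toy matrix whose singular values are 3 and 1
sigma = spectral_norm(W)
W_sn = spectrally_normalize(W)
print(sigma, W_sn)
```

The additional matrix-vector products are applied to every normalized layer at every step, which is one source of the added training cost.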

Cloud computing has emerged as a viable alternative for research teams without access to dedicated HPC resources. Major providers like AWS, Google Cloud, and Microsoft Azure offer specialized machine learning instances with costs ranging from $5-25 per GPU hour depending on the configuration. A complete training cycle for a materials generative model with mode collapse defenses typically costs between $5,000-15,000 on cloud platforms.
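The cost and rate ranges quoted above imply a range of GPU-hours, which can be checked against the training times given earlier in this section. The short calculation below is only a back-of-the-envelope sketch: it pairs the lowest cost with the highest hourly rate (and the reverse) to bound the GPU-hours, and it assumes an 8-GPU node, the lower end of the cluster sizes mentioned earlier.

```python
def implied_gpu_hours(total_cost_usd, rate_usd_per_gpu_hour):
    """GPU-hours that a given budget buys at a given hourly rate."""
    return total_cost_usd / rate_usd_per_gpu_hour


def wall_clock_days(gpu_hours, num_gpus):
    """Elapsed days if the GPU-hours are spread evenly over `num_gpus`."""
    return gpu_hours / num_gpus / 24.0


# Ranges quoted in the text.
RATE_RANGE = (5.0, 25.0)          # USD per GPU-hour
COST_RANGE = (5_000.0, 15_000.0)  # USD per complete training cycle

# Bounds: cheapest run at the priciest rate, priciest run at the cheapest rate.
min_gpu_hours = implied_gpu_hours(COST_RANGE[0], RATE_RANGE[1])
max_gpu_hours = implied_gpu_hours(COST_RANGE[1], RATE_RANGE[0])

NUM_GPUS = 8  # assumption: lower end of the 8-16 GPU setups described earlier
min_days = wall_clock_days(min_gpu_hours, NUM_GPUS)
max_days = wall_clock_days(max_gpu_hours, NUM_GPUS)
print(min_gpu_hours, max_gpu_hours, min_days, max_days)
```

Under these assumptions the quoted budgets correspond to between 200 and 3,000 GPU-hours, or roughly one to sixteen days on eight GPUs, which is broadly consistent with the "several days to weeks" training times stated earlier.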

Energy consumption represents another significant consideration, with large-scale training operations consuming 5-15 kW of power continuously. This translates to substantial operational costs and environmental impact, prompting research into more efficient algorithmic approaches. Recent advances in mixed-precision training and model distillation have shown promise in reducing computational requirements by 20-40% without significantly compromising model performance or diversity preservation capabilities.

Scalability remains a critical challenge, as defensive techniques against mode collapse often involve additional computational layers that do not parallelize efficiently. Research teams must carefully balance resource allocation between model complexity, dataset size, and implementation of diversity-preserving mechanisms to achieve optimal results within reasonable computational constraints.

Interdisciplinary Collaboration Frameworks

Addressing mode collapse in materials generative models requires strategic integration of expertise across multiple domains. The complexity of materials science, combined with the mathematical intricacies of generative models, calls for structured collaboration that crosses traditional disciplinary boundaries.

Effective frameworks must connect machine learning specialists with materials scientists through formalized communication channels. These channels should facilitate the translation of domain-specific knowledge into actionable insights for model development. Regular cross-disciplinary workshops and joint problem-solving sessions have proven particularly effective in identifying mode collapse signatures that might be overlooked by single-domain experts.

Data sharing protocols represent another critical component of these frameworks. Materials science datasets often contain complex structural information that requires specialized interpretation. Establishing standardized formats for data exchange between computational scientists and materials experts ensures that model training incorporates appropriate physical constraints, thereby reducing the likelihood of mode collapse.

Collaborative validation methodologies constitute a third essential element. By implementing multi-stage verification processes that incorporate both computational metrics and materials science principles, teams can detect early warning signs of mode collapse before it manifests fully in generative outputs. This approach has demonstrated success in recent applications involving crystal structure generation and polymer design.
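One way such a multi-stage check might look in practice is sketched below. It is a minimal example under stated assumptions, not a method described in this report: the first stage is a purely computational metric (the fraction of distinct generated samples), and the second uses a domain label supplied by materials scientists (here, crystal system) to measure how many reference categories the generator still reaches. The function names, the thresholds of 0.5, and the sample data are all hypothetical.

```python
from typing import Dict, Hashable, Sequence


def uniqueness(samples: Sequence[Hashable]) -> float:
    """Stage 1 (computational): fraction of generated samples that are distinct."""
    if not samples:
        raise ValueError("samples must not be empty")
    return len(set(samples)) / len(samples)


def mode_coverage(generated_labels: Sequence[Hashable],
                  reference_labels: Sequence[Hashable]) -> float:
    """Stage 2 (domain-informed): fraction of reference categories that appear
    at least once among the generated samples."""
    reference = set(reference_labels)
    if not reference:
        raise ValueError("reference_labels must not be empty")
    return len(reference & set(generated_labels)) / len(reference)


def collapse_warning(samples, generated_labels, reference_labels,
                     min_uniqueness: float = 0.5,
                     min_coverage: float = 0.5) -> Dict[str, object]:
    """Flag a possible collapse if either metric falls below its assumed threshold."""
    u = uniqueness(samples)
    c = mode_coverage(generated_labels, reference_labels)
    return {"uniqueness": u, "coverage": c,
            "warning": u < min_uniqueness or c < min_coverage}


# Hypothetical batch: four generated formulas, all labeled as cubic.
formulas = ["NaCl", "NaCl", "NaCl", "KCl"]
crystal_systems = ["cubic", "cubic", "cubic", "cubic"]
reference_systems = ["cubic", "hexagonal", "tetragonal", "orthorhombic"]

report = collapse_warning(formulas, crystal_systems, reference_systems)
print(report)
```

In this toy batch the generator reaches only one of four reference crystal systems, so the check raises a warning even though uniqueness alone sits at the threshold. Choosing the label set and the thresholds is exactly the kind of decision that benefits from materials expertise.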

Knowledge integration platforms serve as technological enablers for these collaborations. Cloud-based environments that support simultaneous access to materials databases, visualization tools, and model development frameworks allow real-time collaborative troubleshooting when mode collapse issues emerge. Several leading research institutions have implemented such platforms with positive outcomes for model stability.

Governance structures for these interdisciplinary efforts must balance flexibility with accountability. Successful frameworks typically establish clear roles while maintaining permeable boundaries that allow expertise to flow where needed. This hybrid approach ensures that when mode collapse occurs, the team can rapidly mobilize appropriate expertise without bureaucratic impediments.

Funding mechanisms aligned with interdisciplinary work represent the final framework component. Traditional funding structures often reinforce disciplinary silos, whereas successful defense against mode collapse requires sustained investment in boundary-spanning research. Organizations that have implemented specialized funding tracks for materials-AI integration report higher success rates in developing stable generative models.
