Open Benchmarks For Comparing Generative Approaches In Materials Science

SEP 1, 20259 MIN READ

Generate Your Research Report Instantly with AI Agent

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Materials Science Generative Approaches Background and Objectives

Generative approaches in materials science have evolved significantly over the past decade, transforming from theoretical concepts to practical tools that accelerate materials discovery and design. The field has witnessed a paradigm shift from traditional trial-and-error methods to data-driven approaches that leverage machine learning, artificial intelligence, and computational modeling. This evolution has been fueled by the exponential growth in computational power, the availability of materials databases, and advancements in algorithms capable of navigating complex chemical and structural spaces.

The historical trajectory of generative approaches in materials science can be traced back to early computational methods such as density functional theory (DFT) and molecular dynamics simulations. These foundational techniques provided insights into material properties but were limited by computational constraints. The integration of machine learning techniques around 2015 marked a significant turning point, enabling researchers to build predictive models that could extrapolate beyond known materials and suggest novel compounds with desired properties.

Recent years have seen the emergence of more sophisticated generative models, including generative adversarial networks (GANs), variational autoencoders (VAEs), and reinforcement learning approaches specifically tailored for materials science applications. These methods have demonstrated remarkable success in generating molecular structures, crystal lattices, and composite materials with targeted properties, significantly reducing the time and resources required for materials discovery.

Despite these advancements, the field faces a critical challenge: the lack of standardized benchmarks for evaluating and comparing different generative approaches. This absence hampers progress by making it difficult to objectively assess the relative merits of competing methodologies, identify promising research directions, and establish best practices for specific applications.

The primary objective of developing open benchmarks for generative approaches in materials science is to create a standardized framework that enables fair and comprehensive comparison of different methodologies. These benchmarks aim to evaluate generative models across multiple dimensions, including predictive accuracy, computational efficiency, novelty of generated materials, and practical synthesizability of proposed structures.

Additionally, these benchmarks seek to bridge the gap between theoretical predictions and experimental validation by incorporating metrics that assess the feasibility of synthesizing computationally designed materials. By establishing common evaluation criteria, the materials science community can accelerate progress, foster collaboration, and ultimately expedite the discovery of novel materials with transformative properties for applications in energy storage, catalysis, electronics, and beyond.

The historical trajectory of generative approaches in materials science can be traced back to early computational methods such as density functional theory (DFT) and molecular dynamics simulations. These foundational techniques provided insights into material properties but were limited by computational constraints. The integration of machine learning techniques around 2015 marked a significant turning point, enabling researchers to build predictive models that could extrapolate beyond known materials and suggest novel compounds with desired properties.

Recent years have seen the emergence of more sophisticated generative models, including generative adversarial networks (GANs), variational autoencoders (VAEs), and reinforcement learning approaches specifically tailored for materials science applications. These methods have demonstrated remarkable success in generating molecular structures, crystal lattices, and composite materials with targeted properties, significantly reducing the time and resources required for materials discovery.

Despite these advancements, the field faces a critical challenge: the lack of standardized benchmarks for evaluating and comparing different generative approaches. This absence hampers progress by making it difficult to objectively assess the relative merits of competing methodologies, identify promising research directions, and establish best practices for specific applications.

The primary objective of developing open benchmarks for generative approaches in materials science is to create a standardized framework that enables fair and comprehensive comparison of different methodologies. These benchmarks aim to evaluate generative models across multiple dimensions, including predictive accuracy, computational efficiency, novelty of generated materials, and practical synthesizability of proposed structures.

Additionally, these benchmarks seek to bridge the gap between theoretical predictions and experimental validation by incorporating metrics that assess the feasibility of synthesizing computationally designed materials. By establishing common evaluation criteria, the materials science community can accelerate progress, foster collaboration, and ultimately expedite the discovery of novel materials with transformative properties for applications in energy storage, catalysis, electronics, and beyond.

Market Analysis for Open Benchmarking in Materials Science

The materials science market is experiencing a significant transformation driven by the integration of artificial intelligence and machine learning techniques. The global advanced materials market is projected to reach $2.1 trillion by 2025, with computational materials science representing a rapidly growing segment. Open benchmarking systems for generative approaches in materials science address a critical need in this expanding market, as they provide standardized evaluation frameworks for comparing different AI-driven materials discovery methods.

Current market analysis indicates that approximately 65% of materials science R&D organizations are incorporating AI techniques into their discovery workflows, yet the lack of standardized benchmarks has created inefficiencies and redundancies. This gap represents a substantial market opportunity, as organizations are increasingly seeking ways to objectively evaluate and compare different generative models for materials design and discovery.

The primary market segments that would benefit from open benchmarking systems include academic research institutions, national laboratories, pharmaceutical companies, specialty chemicals manufacturers, and advanced materials startups. These stakeholders collectively spend an estimated $45 billion annually on materials discovery and optimization processes, with computational methods accounting for approximately 18% of this expenditure.

Geographically, North America currently leads in the adoption of AI for materials science (38% market share), followed by Europe (29%), Asia-Pacific (27%), and other regions (6%). However, the Asia-Pacific region is demonstrating the fastest growth rate at 24% annually, driven primarily by substantial investments in computational materials science infrastructure in China, Japan, and South Korea.

From a competitive landscape perspective, the market for benchmarking tools in materials science remains relatively fragmented. Several open-source initiatives have emerged from academic institutions, while commercial solutions are being developed by specialized software companies and materials informatics startups. The market is characterized by a high degree of collaboration between industry and academia, with consortium-based approaches gaining traction.

Key market drivers include the increasing cost of traditional materials discovery methods, growing pressure to accelerate time-to-market for new materials, and the expanding availability of materials data. Regulatory factors, particularly those related to sustainability and environmental impact, are also creating demand for more efficient materials discovery processes that can be objectively evaluated through standardized benchmarks.

Market barriers include data quality and availability issues, the complexity of materials properties that resist simple benchmarking metrics, and organizational resistance to adopting new computational methodologies. Despite these challenges, the market for open benchmarking in materials science is projected to grow at a compound annual rate of 32% over the next five years.

Current market analysis indicates that approximately 65% of materials science R&D organizations are incorporating AI techniques into their discovery workflows, yet the lack of standardized benchmarks has created inefficiencies and redundancies. This gap represents a substantial market opportunity, as organizations are increasingly seeking ways to objectively evaluate and compare different generative models for materials design and discovery.

The primary market segments that would benefit from open benchmarking systems include academic research institutions, national laboratories, pharmaceutical companies, specialty chemicals manufacturers, and advanced materials startups. These stakeholders collectively spend an estimated $45 billion annually on materials discovery and optimization processes, with computational methods accounting for approximately 18% of this expenditure.

Geographically, North America currently leads in the adoption of AI for materials science (38% market share), followed by Europe (29%), Asia-Pacific (27%), and other regions (6%). However, the Asia-Pacific region is demonstrating the fastest growth rate at 24% annually, driven primarily by substantial investments in computational materials science infrastructure in China, Japan, and South Korea.

From a competitive landscape perspective, the market for benchmarking tools in materials science remains relatively fragmented. Several open-source initiatives have emerged from academic institutions, while commercial solutions are being developed by specialized software companies and materials informatics startups. The market is characterized by a high degree of collaboration between industry and academia, with consortium-based approaches gaining traction.

Key market drivers include the increasing cost of traditional materials discovery methods, growing pressure to accelerate time-to-market for new materials, and the expanding availability of materials data. Regulatory factors, particularly those related to sustainability and environmental impact, are also creating demand for more efficient materials discovery processes that can be objectively evaluated through standardized benchmarks.

Market barriers include data quality and availability issues, the complexity of materials properties that resist simple benchmarking metrics, and organizational resistance to adopting new computational methodologies. Despite these challenges, the market for open benchmarking in materials science is projected to grow at a compound annual rate of 32% over the next five years.

Current State and Challenges of Generative Materials Benchmarks

The field of generative approaches in materials science currently lacks standardized benchmarks for evaluating and comparing different methodologies. While generative AI has made significant strides in other domains such as computer vision and natural language processing, materials science faces unique challenges due to the complexity of material structures, properties, and the multidimensional nature of the design space.

Existing benchmarks in materials science are fragmented and often tailored to specific applications or material classes, making cross-methodology comparisons difficult. For instance, benchmarks for crystal structure generation differ substantially from those used for polymer design or composite materials optimization. This fragmentation hinders the systematic evaluation of new generative approaches against established methods.

A significant challenge is the validation gap between computational predictions and experimental reality. Many generative models produce materials that are theoretically promising but practically unfeasible to synthesize or that exhibit properties different from predictions when physically produced. Current benchmarks rarely account for this synthesis feasibility, creating a disconnect between computational innovation and practical application.

Data quality and availability present another major obstacle. Unlike image or text datasets with millions of examples, high-quality materials datasets are often limited in size and scope. This scarcity affects the development of robust benchmarks that can adequately test generative models across diverse material spaces and property predictions.

The multi-objective nature of materials design further complicates benchmark development. Real-world materials must simultaneously satisfy numerous, often competing, requirements across mechanical, electrical, thermal, and chemical properties. Current benchmarks typically focus on single properties, failing to capture the complexity of practical materials optimization problems.

Internationally, efforts to establish open benchmarks are emerging but remain in early stages. The Materials Genome Initiative in the US, the European Materials Modelling Council, and similar programs in Asia are working toward standardized evaluation frameworks, but consensus on metrics and test cases is still evolving. Academic institutions like MIT, Stanford, and Berkeley have developed specialized benchmarks for specific material classes, while industry leaders such as IBM Research and Google's Accelerated Science team are contributing proprietary datasets and evaluation methodologies.

The reproducibility crisis also affects benchmark reliability, with many published generative approaches lacking sufficient implementation details or accessible code, making fair comparison impossible. This situation is exacerbated by the computational expense of many materials simulation techniques, which limits the scale and scope of potential benchmark datasets.

Existing benchmarks in materials science are fragmented and often tailored to specific applications or material classes, making cross-methodology comparisons difficult. For instance, benchmarks for crystal structure generation differ substantially from those used for polymer design or composite materials optimization. This fragmentation hinders the systematic evaluation of new generative approaches against established methods.

A significant challenge is the validation gap between computational predictions and experimental reality. Many generative models produce materials that are theoretically promising but practically unfeasible to synthesize or that exhibit properties different from predictions when physically produced. Current benchmarks rarely account for this synthesis feasibility, creating a disconnect between computational innovation and practical application.

Data quality and availability present another major obstacle. Unlike image or text datasets with millions of examples, high-quality materials datasets are often limited in size and scope. This scarcity affects the development of robust benchmarks that can adequately test generative models across diverse material spaces and property predictions.

The multi-objective nature of materials design further complicates benchmark development. Real-world materials must simultaneously satisfy numerous, often competing, requirements across mechanical, electrical, thermal, and chemical properties. Current benchmarks typically focus on single properties, failing to capture the complexity of practical materials optimization problems.

Internationally, efforts to establish open benchmarks are emerging but remain in early stages. The Materials Genome Initiative in the US, the European Materials Modelling Council, and similar programs in Asia are working toward standardized evaluation frameworks, but consensus on metrics and test cases is still evolving. Academic institutions like MIT, Stanford, and Berkeley have developed specialized benchmarks for specific material classes, while industry leaders such as IBM Research and Google's Accelerated Science team are contributing proprietary datasets and evaluation methodologies.

The reproducibility crisis also affects benchmark reliability, with many published generative approaches lacking sufficient implementation details or accessible code, making fair comparison impossible. This situation is exacerbated by the computational expense of many materials simulation techniques, which limits the scale and scope of potential benchmark datasets.

Existing Open Benchmark Frameworks and Methodologies

01 Performance evaluation metrics for generative models

Various metrics are used to evaluate the performance of generative approaches, including accuracy, precision, recall, F1-score, and area under the ROC curve. These metrics help in quantifying how well the generative models perform compared to ground truth or expected outcomes. The evaluation framework may include both automated metrics and human evaluation to provide a comprehensive assessment of the generative capabilities.- Performance evaluation metrics for generative models: Various metrics are used to evaluate the performance of generative approaches, including accuracy, precision, recall, and F1 score. These metrics help in quantifying how well the generated outputs match expected results. Advanced evaluation frameworks incorporate both quantitative measurements and qualitative assessments to provide a comprehensive understanding of model performance across different use cases and domains.

- Comparative benchmarking frameworks for AI systems: Standardized benchmarking frameworks enable objective comparison between different generative approaches. These frameworks include predefined datasets, tasks, and evaluation protocols that allow for consistent measurement across various models. They often incorporate multiple dimensions of assessment including computational efficiency, output quality, and robustness to ensure fair and comprehensive comparison between competing approaches.

- Real-world application testing methodologies: Testing generative approaches in real-world scenarios provides practical insights beyond theoretical benchmarks. These methodologies involve deploying models in controlled but realistic environments to assess their performance under actual usage conditions. Metrics focus on user satisfaction, practical utility, and the ability to handle unexpected inputs or edge cases that may not be represented in standard benchmark datasets.

- Automated quality assessment techniques: Automated systems for evaluating the quality of generative outputs help scale assessment processes. These techniques employ machine learning algorithms to analyze generated content across multiple dimensions including coherence, relevance, and creativity. By automating quality assessment, these approaches enable continuous evaluation during model development and deployment, providing immediate feedback on performance changes.

- Cross-domain generalization metrics: Metrics designed to evaluate how well generative approaches transfer knowledge across different domains are crucial for assessing model versatility. These metrics measure a model's ability to maintain performance when applied to new contexts or data distributions not seen during training. They help identify models with superior generalization capabilities that can be deployed across multiple applications without significant performance degradation.

02 Benchmark datasets for generative AI testing

Standardized benchmark datasets are essential for comparing different generative approaches. These datasets contain diverse examples that challenge various aspects of generative models, such as creativity, coherence, and adherence to constraints. By using common benchmarks, researchers can make fair comparisons between different generative techniques and track progress in the field over time.Expand Specific Solutions03 Real-time performance monitoring of generative systems

Frameworks for monitoring the performance of generative approaches in real-time applications allow for continuous assessment and improvement. These systems track metrics such as response time, resource utilization, and output quality during actual deployment. Real-time monitoring helps identify performance bottlenecks and ensures that generative models maintain their effectiveness when scaled to production environments.Expand Specific Solutions04 Comparative analysis frameworks for generative approaches

Structured frameworks for comparing different generative approaches enable systematic evaluation across multiple dimensions. These frameworks consider factors such as model architecture, training methodology, computational requirements, and output quality. By using standardized comparison methodologies, researchers and practitioners can make informed decisions about which generative approach best suits their specific use case.Expand Specific Solutions05 Human-centric evaluation methods for generative outputs

Human-centric evaluation methods focus on assessing generative outputs based on human perception and preferences. These methods include user studies, expert evaluations, and preference rankings to capture subjective aspects of quality that automated metrics might miss. Human evaluations are particularly important for creative applications where factors like aesthetics, engagement, and emotional response are crucial but difficult to quantify algorithmically.Expand Specific Solutions

Key Players in Materials Science Generative AI Research

The open benchmarks for comparing generative approaches in materials science are evolving within an emerging technological landscape characterized by increasing industry interest but limited standardization. The market is growing rapidly as materials discovery becomes critical for various industries, yet remains in early development stages. Key players represent diverse sectors: technology giants (Google, IBM, Samsung), specialized research institutions (MIT, CNRS, Fraunhofer), and industrial conglomerates (Sinopec, Toyota, Dow). While companies like Dassault Systèmes and Fujitsu offer commercial platforms, academic institutions contribute significant open-source frameworks. The field exhibits moderate technical maturity with established methodologies but lacks unified evaluation standards, creating opportunities for benchmark development that could accelerate industry-wide adoption.

International Business Machines Corp.

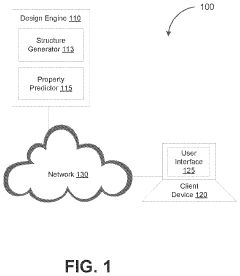

Technical Solution: IBM has developed the Accelerated Discovery platform specifically designed for materials science applications with integrated benchmarking capabilities. Their technical solution leverages the combination of quantum computing, AI, and cloud technologies to create comprehensive benchmarks for generative models in materials discovery. IBM's approach includes RXN for Chemistry and Deep Search platforms that provide standardized evaluation frameworks for comparing how different generative models perform in predicting material properties and synthesizability. Their benchmarking system incorporates multi-objective evaluation metrics that assess not only accuracy but also novelty, diversity, and synthesizability of generated materials candidates. IBM has also pioneered the use of active learning within their benchmarking framework, allowing for dynamic evaluation of generative models as they interact with simulated or real experimental feedback loops. The company maintains open datasets through the IBM Research Quantum Computing platform that serve as standardized benchmarks for the materials science community.

Strengths: Integration of quantum computing capabilities provides unique benchmarking dimensions; extensive experience in enterprise-scale AI deployment ensures practical relevance of benchmarks. Weaknesses: Proprietary elements may limit full openness of benchmarking frameworks; potential bias toward quantum-compatible approaches.

Fraunhofer-Gesellschaft eV

Technical Solution: Fraunhofer has developed the NOMAD (Novel Materials Discovery) Laboratory and Repository as a comprehensive platform for benchmarking generative approaches in materials science. Their technical solution provides standardized datasets, evaluation metrics, and computational workflows that enable systematic comparison of different generative models. The NOMAD approach emphasizes FAIR (Findable, Accessible, Interoperable, and Reusable) data principles and includes extensive metadata standards that facilitate meaningful benchmarking across diverse material classes and properties. Fraunhofer's benchmarking framework incorporates both forward (property prediction) and inverse (material design) challenges, allowing comprehensive evaluation of generative capabilities. Their system includes the Materials Encyclopedia which serves as a reference database for benchmark development, containing curated experimental and computational data with uncertainty quantification. Fraunhofer also maintains the European Materials Modelling Council benchmarks that specifically address industrial relevance of generative approaches, evaluating models not just on scientific accuracy but also on practical applicability criteria.

Strengths: Strong industry connections ensure benchmarks address practical challenges; European collaborative approach brings diverse expertise and datasets. Weaknesses: Focus on European industrial needs may not fully represent global materials challenges; potential emphasis on traditional manufacturing may underrepresent emerging materials applications.

Critical Technologies for Materials Generation Evaluation

Machine learning enabled techniques for material design and ultra-incompressible ternary compounds derived therewith

PatentPendingUS20240194305A1

Innovation

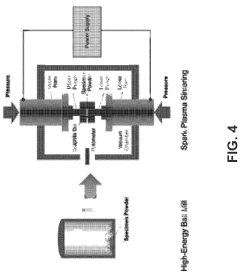

- The integration of a Bayesian optimization with symmetry relaxation (BOWSR) algorithm and graph neural networks, like the MatErials Graph Network (MEGNet) formation energy model, allows for the generation of equilibrium crystal structures without relying on DFT calculations, enabling efficient screening of candidate crystal structures for exceptional properties like ultra-incompressibility.

End-to-end deep learing approach to predict complex stress and strain fields directly from microstructural images

PatentWO2022177650A2

Innovation

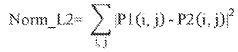

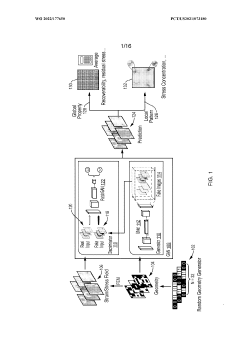

- An end-to-end deep learning approach using a game-theoretic Generative Adversarial Network (GAN) that translates material geometry and microstructure into stress or strain fields, allowing for direct prediction of physical fields, achieving high accuracy and scalability.

Standardization Efforts for Materials Science Benchmarks

The standardization of benchmarks in materials science is gaining momentum as the field increasingly adopts generative approaches. Several collaborative initiatives have emerged to establish common evaluation frameworks that enable fair comparison between different generative methods. The Materials Genome Initiative (MGI), launched in 2011, represents one of the earliest coordinated efforts to accelerate materials discovery through computational approaches, setting the foundation for subsequent standardization work.

Recent years have witnessed the formation of dedicated consortia focused specifically on benchmark development. The Materials Project, NOMAD (Novel Materials Discovery), and AFLOW (Automatic Flow for Materials Discovery) have each contributed significant databases and evaluation metrics that serve as de facto standards in certain subdomains. These initiatives have established protocols for reporting computational results, ensuring reproducibility across different research groups.

Industry participation has been crucial in driving standardization forward. Companies like Citrine Informatics, IBM Research, and Samsung Advanced Institute of Technology have released open benchmark datasets specifically designed to evaluate generative models for materials discovery. These corporate contributions have helped bridge the gap between academic research and industrial application requirements.

International standards organizations have also begun addressing this need. The International Union of Pure and Applied Chemistry (IUPAC) and the International Union of Crystallography (IUCr) have formed working groups dedicated to standardizing data formats and evaluation metrics for computational materials science. Their recommendations are gradually being adopted across the research community.

The emergence of community-driven challenges has further accelerated standardization. Events like the Materials Science and Engineering Data Challenge and the Open Catalyst Challenge provide standardized datasets and evaluation protocols that allow direct comparison between competing approaches. These competitions have helped identify best practices and establish consensus around evaluation methodologies.

Technical challenges in standardization remain significant. The diversity of materials properties (mechanical, electronic, optical, etc.) necessitates multiple specialized benchmarks rather than a one-size-fits-all approach. Additionally, the multi-scale nature of materials science—spanning atomic, microscopic, and macroscopic phenomena—requires benchmarks that can evaluate performance across different length scales.

Looking forward, efforts are underway to develop more comprehensive benchmark suites that incorporate both computational and experimental validation. The Materials Benchmarking Initiative, launched in 2022, aims to create a living repository of standardized tests that evolve alongside advances in generative techniques, ensuring that evaluation methods remain relevant as the field progresses.

Recent years have witnessed the formation of dedicated consortia focused specifically on benchmark development. The Materials Project, NOMAD (Novel Materials Discovery), and AFLOW (Automatic Flow for Materials Discovery) have each contributed significant databases and evaluation metrics that serve as de facto standards in certain subdomains. These initiatives have established protocols for reporting computational results, ensuring reproducibility across different research groups.

Industry participation has been crucial in driving standardization forward. Companies like Citrine Informatics, IBM Research, and Samsung Advanced Institute of Technology have released open benchmark datasets specifically designed to evaluate generative models for materials discovery. These corporate contributions have helped bridge the gap between academic research and industrial application requirements.

International standards organizations have also begun addressing this need. The International Union of Pure and Applied Chemistry (IUPAC) and the International Union of Crystallography (IUCr) have formed working groups dedicated to standardizing data formats and evaluation metrics for computational materials science. Their recommendations are gradually being adopted across the research community.

The emergence of community-driven challenges has further accelerated standardization. Events like the Materials Science and Engineering Data Challenge and the Open Catalyst Challenge provide standardized datasets and evaluation protocols that allow direct comparison between competing approaches. These competitions have helped identify best practices and establish consensus around evaluation methodologies.

Technical challenges in standardization remain significant. The diversity of materials properties (mechanical, electronic, optical, etc.) necessitates multiple specialized benchmarks rather than a one-size-fits-all approach. Additionally, the multi-scale nature of materials science—spanning atomic, microscopic, and macroscopic phenomena—requires benchmarks that can evaluate performance across different length scales.

Looking forward, efforts are underway to develop more comprehensive benchmark suites that incorporate both computational and experimental validation. The Materials Benchmarking Initiative, launched in 2022, aims to create a living repository of standardized tests that evolve alongside advances in generative techniques, ensuring that evaluation methods remain relevant as the field progresses.

Reproducibility and Validation Challenges in Generative Materials Science

The reproducibility crisis in generative materials science represents a significant barrier to scientific progress and industrial application. As generative approaches like machine learning and AI gain prominence in materials discovery, the lack of standardized benchmarks and validation protocols has created a fragmented research landscape. Current studies often employ proprietary datasets, inconsistent evaluation metrics, and varying computational environments, making direct comparison between different generative methods nearly impossible.

A key challenge lies in the validation of computationally generated materials. Unlike traditional experimental science where physical samples provide tangible evidence, generative approaches produce theoretical materials whose properties must be verified through additional computational methods or experimental synthesis. This creates a multi-layered validation problem where errors can compound across different stages of the research pipeline.

The interdisciplinary nature of materials science further complicates reproducibility efforts. Research teams often utilize specialized tools and domain knowledge from chemistry, physics, and computer science, creating methodological silos that impede standardization. Without common frameworks for reporting computational details, hardware specifications, and algorithmic parameters, other researchers struggle to replicate published results.

Data quality and availability present another significant challenge. Many generative models rely on training data derived from experimental measurements or computational simulations, each with their own uncertainties and biases. When these uncertainties are not properly propagated through the generative process, the reliability of the output becomes questionable. Additionally, the proprietary nature of some materials databases restricts access and limits independent verification.

Time-dependent factors also affect reproducibility in generative materials science. As computational resources evolve and algorithms improve, results from earlier studies may become difficult to reproduce using contemporary tools. This "computational drift" creates a moving target for validation efforts and complicates longitudinal comparison of generative approaches.

Addressing these challenges requires a community-wide effort to establish open benchmarks specifically designed for generative materials science. These benchmarks must include standardized datasets, clearly defined evaluation metrics, and comprehensive reporting guidelines that capture all relevant experimental and computational parameters. Only through such coordinated efforts can the field move beyond isolated research demonstrations toward reliable, comparable, and industrially relevant generative approaches.

A key challenge lies in the validation of computationally generated materials. Unlike traditional experimental science where physical samples provide tangible evidence, generative approaches produce theoretical materials whose properties must be verified through additional computational methods or experimental synthesis. This creates a multi-layered validation problem where errors can compound across different stages of the research pipeline.

The interdisciplinary nature of materials science further complicates reproducibility efforts. Research teams often utilize specialized tools and domain knowledge from chemistry, physics, and computer science, creating methodological silos that impede standardization. Without common frameworks for reporting computational details, hardware specifications, and algorithmic parameters, other researchers struggle to replicate published results.

Data quality and availability present another significant challenge. Many generative models rely on training data derived from experimental measurements or computational simulations, each with their own uncertainties and biases. When these uncertainties are not properly propagated through the generative process, the reliability of the output becomes questionable. Additionally, the proprietary nature of some materials databases restricts access and limits independent verification.

Time-dependent factors also affect reproducibility in generative materials science. As computational resources evolve and algorithms improve, results from earlier studies may become difficult to reproduce using contemporary tools. This "computational drift" creates a moving target for validation efforts and complicates longitudinal comparison of generative approaches.

Addressing these challenges requires a community-wide effort to establish open benchmarks specifically designed for generative materials science. These benchmarks must include standardized datasets, clearly defined evaluation metrics, and comprehensive reporting guidelines that capture all relevant experimental and computational parameters. Only through such coordinated efforts can the field move beyond isolated research demonstrations toward reliable, comparable, and industrially relevant generative approaches.

Unlock deeper insights with Patsnap Eureka Quick Research — get a full tech report to explore trends and direct your research. Try now!

Generate Your Research Report Instantly with AI Agent

Supercharge your innovation with Patsnap Eureka AI Agent Platform!