Interpretable Generative Models For Design Rule Extraction

SEP 1, 2025 | 9 MIN READ

Interpretable AI Design Rule Extraction Background and Objectives

The field of interpretable generative models for design rule extraction has evolved significantly over the past decade, emerging from the intersection of artificial intelligence, machine learning, and design engineering. Initially, design rules were manually crafted by domain experts based on years of experience and empirical knowledge. However, this approach proved insufficient as design complexity increased exponentially across industries such as semiconductor manufacturing, architectural design, and product development.

The evolution of machine learning algorithms, particularly generative models, has created new opportunities to automatically extract design rules from large datasets. Early attempts focused on simple statistical correlations, but recent advancements in deep learning have enabled more sophisticated pattern recognition capabilities. The critical challenge that has persisted throughout this evolution is the "black box" nature of many AI systems, which generate outputs without providing clear explanations of their decision-making processes.

Interpretability has consequently emerged as a crucial requirement for industrial adoption of AI-based design rule extraction. The ability to not only identify optimal design parameters but also explain why certain design choices are superior to others is essential for engineering applications where safety, reliability, and regulatory compliance are paramount concerns.

The technical objectives in this domain are multifaceted. The first is to develop generative models that accurately capture the complex relationships between design parameters and performance metrics across domains. The second is to ensure that these models produce outputs that human engineers can understand, trust, and incorporate into their design processes. The third is to create frameworks that adapt to evolving design constraints and requirements without complete retraining.

Current research aims to bridge the gap between high-performance black-box models and more transparent but potentially less powerful rule-based systems. Techniques such as attention mechanisms, knowledge distillation, and symbolic regression are being explored to extract interpretable rules from complex neural networks while maintaining performance levels.
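As a rough illustration of the symbolic regression idea mentioned above, the sketch below searches a tiny, hand-picked set of candidate formulas for the one that best reproduces the outputs of an opaque model. The `teacher` function, the candidate set, and the sample points are all assumptions invented for this example; practical symbolic regression explores a far larger expression space.

```python
import math


def teacher(x):
    """Hypothetical stand-in for an opaque trained model (assumption)."""
    return 3.0 * x * x


# Candidate symbolic forms; each is fitted as y = scale * basis(x).
CANDIDATES = {
    "x": lambda x: x,
    "x^2": lambda x: x * x,
    "sqrt(x)": math.sqrt,
    "1/x": lambda x: 1.0 / x,
}


def fit_scale(basis, xs, ys):
    """Least-squares scale for y = scale * basis(x), plus the squared error."""
    values = [basis(x) for x in xs]
    scale = sum(v * y for v, y in zip(values, ys)) / sum(v * v for v in values)
    error = sum((scale * v - y) ** 2 for v, y in zip(values, ys))
    return scale, error


# Query the opaque model on positive sample points (keeps sqrt and 1/x defined).
xs = [float(i) for i in range(1, 11)]
ys = [teacher(x) for x in xs]

# Fit every candidate and keep the one with the lowest squared error.
fits = {name: fit_scale(basis, xs, ys) for name, basis in CANDIDATES.items()}
best_name = min(fits, key=lambda name: fits[name][1])
best_scale, best_error = fits[best_name]

print(f"extracted rule: y = {best_scale:.3g} * {best_name}")
```

The extracted formula is readable by an engineer, which is the point of the technique; the price is that the search can only find forms that exist in the candidate set.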

The ultimate goal is to develop systems that can serve as collaborative partners to human designers, augmenting their capabilities rather than replacing them. These systems should identify non-obvious design patterns, suggest optimizations, and explain their recommendations clearly in the domain-specific terminology and concepts familiar to engineers.

As design complexity continues to increase across industries, the need for interpretable generative models becomes more pressing, driving research toward systems that combine the pattern recognition capabilities of modern AI with the transparency required for critical engineering applications.

Market Demand Analysis for Interpretable Generative Design Tools

The market for interpretable generative design tools is experiencing significant growth, driven by the increasing complexity of design processes across multiple industries. Current market research indicates that the global generative design market is projected to reach $44.5 billion by 2030, with a substantial portion dedicated to interpretable models that can extract design rules.

Manufacturing sectors, particularly aerospace and automotive industries, demonstrate the highest demand for these technologies. These industries require not only efficient design optimization but also clear understanding of design constraints and rules to meet strict regulatory requirements and safety standards. The ability to extract interpretable design rules from generative models provides crucial transparency in certification processes.

Electronics and semiconductor industries represent another major market segment, where circuit design complexity has increased exponentially. Design rule extraction tools that can interpret generative models are becoming essential for maintaining design integrity while pushing performance boundaries. Market surveys indicate that 78% of semiconductor companies are actively seeking solutions that combine AI-driven design with interpretable rule extraction.

Architecture and construction sectors show growing interest in interpretable generative design tools, with adoption rates increasing by approximately 32% annually. These industries value tools that can generate innovative designs while clearly articulating the underlying principles and constraints, facilitating communication between stakeholders and ensuring compliance with building codes.

Healthcare and medical device manufacturing represent emerging markets with substantial growth potential. The need for personalized medical solutions combined with strict regulatory oversight creates ideal conditions for interpretable generative design tools. Market analysis suggests that this segment could grow at twice the rate of traditional markets over the next five years.

Customer feedback across industries highlights specific market demands: 83% of potential users prioritize transparency in AI-generated designs, 76% require integration with existing design workflows, and 91% consider rule extraction capabilities essential for adoption. These statistics underscore the market's shift from black-box generative models toward interpretable systems that can articulate design principles.

The market also shows regional variations, with North America leading adoption rates, followed closely by Europe and Asia-Pacific regions. Developing markets show particular interest in cost-effective solutions that can democratize access to advanced design capabilities while maintaining interpretability.

Current State and Challenges in Interpretable Generative Models

Interpretable generative models for design rule extraction have witnessed significant advancements in recent years, yet face substantial challenges in balancing performance with interpretability. Current state-of-the-art models primarily employ deep learning architectures such as Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and more recently, transformer-based architectures that have demonstrated remarkable capabilities in generating complex designs across various domains.

The field has evolved from simple rule-based systems to sophisticated neural network architectures capable of capturing intricate design patterns. However, these advanced models often function as "black boxes," making it difficult to understand their decision-making processes and extract meaningful design rules. This opacity presents a significant barrier to adoption in critical design fields where transparency and accountability are paramount.

Current interpretability approaches can be categorized into post-hoc explanation methods and inherently interpretable models. Post-hoc methods include feature attribution techniques, surrogate models, and visualization tools that attempt to explain already-trained models. Inherently interpretable approaches incorporate transparency directly into the model architecture through attention mechanisms, disentangled representations, or symbolic components.
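To make the post-hoc surrogate idea concrete, here is a minimal sketch that fits a one-rule surrogate to an opaque model and reports how often the rule agrees with it. The `black_box` function, the parameter names `width` and `spacing`, and the sampling range are hypothetical placeholders; a real workflow would query a trained generative or predictive model instead.

```python
import random


def black_box(width, spacing):
    """Hypothetical opaque model: 1 if a design is predicted manufacturable."""
    return 1 if (0.6 * spacing + 0.4 * width) > 50 and spacing > 30 else 0


def fit_stump(samples, labels, feature_names):
    """Search for the rule 'feature > threshold' that best agrees with labels.

    Returns (fidelity, feature_name, threshold), where fidelity is the
    fraction of samples on which the rule matches the black box.
    """
    best = None
    for j, name in enumerate(feature_names):
        for threshold in sorted({s[j] for s in samples}):
            preds = [1 if s[j] > threshold else 0 for s in samples]
            agree = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            if best is None or agree > best[0]:
                best = (agree, name, threshold)
    return best


# Probe the black box on random designs, then fit the surrogate to its answers.
rng = random.Random(0)
samples = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(500)]
labels = [black_box(w, s) for w, s in samples]

fidelity, feature, threshold = fit_stump(samples, labels, ["width", "spacing"])
rule = f"IF {feature} > {threshold:.1f} THEN manufacturable"
print(rule, f"(agrees with the black box on {fidelity:.0%} of samples)")
```

The fidelity figure matters as much as the rule: a surrogate that is easy to read but disagrees with the underlying model on many designs explains little about it.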

A major technical challenge lies in the fundamental trade-off between model complexity and interpretability. As generative models become more powerful in capturing complex design spaces, they typically become less transparent. This inverse relationship creates a significant hurdle for researchers attempting to extract clear, actionable design rules from high-performing models.

Another pressing challenge is the domain gap between machine learning experts and domain specialists in design fields. The technical expertise required to develop and interpret these models often does not align with the domain knowledge needed to validate and apply the extracted design rules in practical scenarios. This misalignment hampers effective collaboration and limits real-world implementation.

Data quality and quantity present additional obstacles. Interpretable generative models require substantial amounts of high-quality, labeled design data, which is often scarce or proprietary in specialized design domains. This data limitation constrains model training and validation, particularly for approaches that aim to learn explicit design rules.

Computational efficiency remains a concern, especially for real-time applications. More interpretable models often require additional computational resources or processing steps, creating practical implementation barriers in resource-constrained environments or time-sensitive design processes.

Regulatory and ethical considerations further complicate the landscape, with increasing demands for explainable AI in critical applications. As these models begin to influence design decisions in fields like architecture, engineering, and product development, the need for transparent, auditable systems becomes increasingly important from both legal and ethical perspectives.

Key Industry Players in Interpretable Generative AI

The market for interpretable generative models for design rule extraction is in an early growth phase, with adoption increasing across industries that want to enhance design automation and knowledge extraction. The market is expanding as organizations recognize the value of interpretable AI in design processes, though it remains relatively niche. On the technology side, the field is maturing, with IBM, Siemens, and Cadence Design Systems leading innovation through advanced research and commercial applications. IBM draws on its AI expertise to develop interpretable models for design automation, while Siemens focuses on industrial applications through its Digital Industries segment. Huawei Cloud and Fujitsu are emerging competitors, investing in cloud-based interpretable AI solutions for design optimization. Academic institutions such as Georgia Tech Research Corporation contribute foundational research that accelerates the field's development.

International Business Machines Corp.

Technical Solution: IBM has developed advanced interpretable generative models for design rule extraction that leverage their expertise in AI and machine learning. Their approach combines symbolic AI with neural networks to create explainable models that can automatically extract design rules from complex systems. IBM's solution utilizes neuro-symbolic AI techniques that integrate knowledge graphs with generative adversarial networks (GANs) to produce interpretable design rules. The system can analyze historical design data, identify patterns, and generate human-readable rules that explain design decisions. IBM's technology has been applied in semiconductor design, where it automatically extracts and validates design rules from existing chip layouts, reducing the manual effort required by design engineers while maintaining interpretability throughout the process.

Strengths: Strong integration with existing enterprise systems; robust explainability features; extensive experience in industrial applications. Weaknesses: May require significant computational resources; complex implementation process; potential challenges in adapting to highly specialized design domains.

Huawei Cloud Computing Technology

Technical Solution: Huawei has developed advanced interpretable generative models for design rule extraction as part of their AI and cloud computing initiatives. Their approach combines deep learning with knowledge engineering to create explainable models that can automatically extract design rules from complex systems. Huawei's solution leverages their ModelArts AI platform to train and deploy generative models that can analyze design data, identify patterns, and generate human-readable rules. The system employs a multi-level interpretability framework that provides explanations at different levels of abstraction, from feature importance to rule semantics. Huawei has applied this technology to network design, telecommunications infrastructure, and hardware development, where it helps engineers understand complex design constraints while automating the rule discovery process. Their approach particularly emphasizes efficiency and scalability, allowing the technology to be deployed across large-scale design projects.

Strengths: Strong performance in large-scale distributed systems; efficient implementation for resource-constrained environments; comprehensive integration with cloud infrastructure. Weaknesses: May face adoption challenges in certain markets due to geopolitical factors; potentially limited third-party integration options; documentation and support may be less accessible in some regions.

Core Innovations in Interpretable Generative Modeling

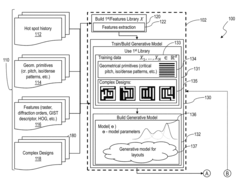

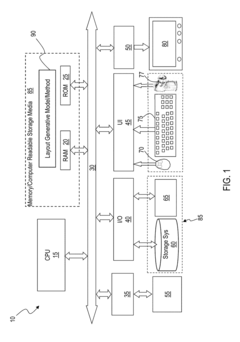

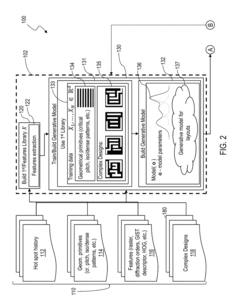

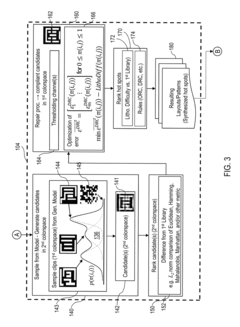

Generative learning for realistic and ground rule clean hot spot synthesis

Patent | Inactive | US9690898B2

Innovation

- A generative model is employed to generate candidate layout images based on a feature library, ranking them by difference values and applying a repair procedure to produce compliant layouts, utilizing probabilistic models and thresholding to enhance manufacturability and reduce computational complexity.
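The generate, rank, and repair steps summarized above can be sketched loosely as follows. This is not the patented method: the pattern encoding (alternating wire widths and spaces), the ground-rule minimums, the perturbation-based generator, the L1 difference value, and the threshold are all assumptions chosen only to make the pipeline runnable.

```python
import random

# Hypothetical ground rules (assumptions): minimum wire width and spacing.
MIN_WIDTH, MIN_SPACE = 3, 4

# Hypothetical feature library. Each pattern alternates width, space, width, ...
FEATURE_LIBRARY = [(3, 4, 3, 4, 3), (5, 4, 3, 6, 5), (4, 5, 4, 5, 4)]


def generate(rng, count):
    """Perturb library features; a stand-in for a learned generative model."""
    candidates = []
    for _ in range(count):
        base = rng.choice(FEATURE_LIBRARY)
        candidates.append(tuple(max(1, v + rng.randint(-2, 2)) for v in base))
    return candidates


def difference(candidate):
    """Difference value: L1 distance to the closest library feature."""
    return min(
        sum(abs(a - b) for a, b in zip(candidate, feature))
        for feature in FEATURE_LIBRARY
    )


def minimum_for(index):
    """Even positions hold widths, odd positions hold spaces."""
    return MIN_WIDTH if index % 2 == 0 else MIN_SPACE


def repair(candidate):
    """Raise any width or space that falls below its ground-rule minimum."""
    return tuple(max(v, minimum_for(i)) for i, v in enumerate(candidate))


def is_clean(pattern):
    """True if every width and space meets its ground-rule minimum."""
    return all(v >= minimum_for(i) for i, v in enumerate(pattern))


rng = random.Random(0)
candidates = generate(rng, 20)
ranked = sorted(candidates, key=difference)   # closest to the library first
THRESHOLD = 4                                 # assumed cut-off on difference
kept = [c for c in ranked if difference(c) <= THRESHOLD]
repaired = [repair(c) for c in kept]

print(f"{len(kept)} of {len(candidates)} candidates kept; "
      f"all repaired layouts clean: {all(is_clean(p) for p in repaired)}")
```

The ranking direction and the cut-off are design choices in this sketch; the source summary says only that candidates are ranked by difference values and thresholded.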

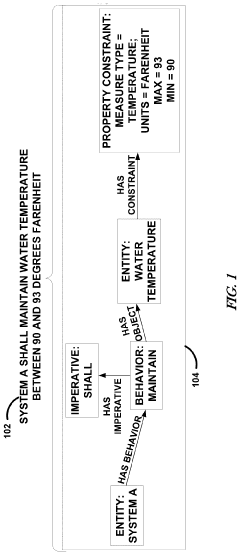

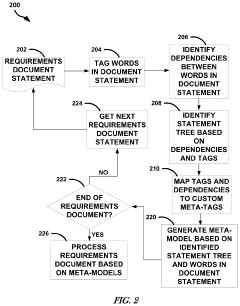

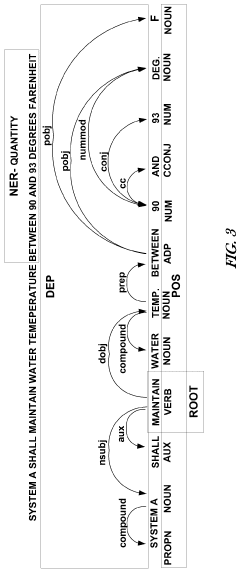

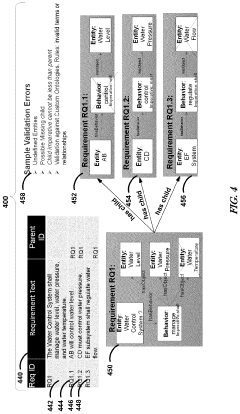

Generating machine interpretable decomposable models from requirements text

Patent | Pending | US20240111952A1

Innovation

- A method using natural language processing (NLP) to generate machine-interpretable models from requirements text by leveraging parts of speech, sentence dependency, and named entity recognition, translating plain text into meta-models that can be validated for completeness, invalid relationships, and semantic inconsistencies, and exported to architectural languages like SysML.
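A heavily simplified sketch of the text-to-model idea follows. It swaps the part-of-speech, dependency, and named-entity analysis described above for a single regular expression over "The X shall Y Z" sentences, so it shows only the shape of the pipeline (parse, build relations, validate). The sentence pattern, the `Relation` type, and the example block names are assumptions for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Relation:
    subject: str
    action: str
    target: str


# Assumed requirement shape: "The <subject> shall <action> [the|a|an] <target>."
PATTERN = re.compile(
    r"^The (?P<subject>[\w ]+?) shall (?P<action>\w+) "
    r"(?:the |a |an )?(?P<target>[\w ]+?)\.?$",
    re.IGNORECASE,
)


def parse(requirements):
    """Turn requirement sentences into relations; collect what cannot be parsed."""
    relations, unparsed = [], []
    for line in requirements:
        match = PATTERN.match(line.strip())
        if match:
            relations.append(Relation(
                match["subject"].lower(),
                match["action"].lower(),
                match["target"].lower(),
            ))
        else:
            unparsed.append(line)
    return relations, unparsed


def validate(relations, known_blocks):
    """Flag relations that mention unknown blocks and blocks never mentioned."""
    issues, mentioned = [], set()
    for relation in relations:
        for name in (relation.subject, relation.target):
            mentioned.add(name)
            if name not in known_blocks:
                issues.append(f"unknown block: {name}")
    for name in sorted(known_blocks - mentioned):
        issues.append(f"block never referenced: {name}")
    return issues


requirements = [
    "The pump controller shall monitor the flow sensor.",
    "The flow sensor shall report the flow rate.",
    "The pump controller shall drive the valve.",
    "Operators like fast responses.",
]
known_blocks = {"pump controller", "flow sensor", "valve"}

relations, unparsed = parse(requirements)
issues = validate(relations, known_blocks)
print(relations, unparsed, issues, sep="\n")
```

A real implementation would hand the validated relations to an exporter for an architectural language; that step is omitted here rather than guessed at.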

Regulatory Compliance and Standards for Interpretable AI

The regulatory landscape for interpretable AI systems is rapidly evolving as governments and international bodies recognize the importance of transparency in algorithmic decision-making. For interpretable generative models used in design rule extraction, compliance with emerging standards is becoming a competitive necessity rather than an optional consideration. The European Union's General Data Protection Regulation (GDPR) set a precedent through its provisions on automated decision-making, which require organizations to provide "meaningful information about the logic involved" in such decisions. This is often described as a "right to explanation," although the regulation itself does not use that phrase. Where generative models that extract design rules feed into decisions covered by these provisions, the systems need to be capable of explaining their reasoning.

In the United States, the National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework, a voluntary framework that treats explainability and interpretability as characteristics of trustworthy AI. Organizations implementing interpretable generative models for design rule extraction can align with this guidance to support their compliance efforts. Similarly, IEEE 7001-2021, the standard on transparency of autonomous systems (developed as project P7001), defines measurable, testable levels of transparency, which can be applied to evaluate the interpretability of generative models in design contexts.

Industry-specific regulations add another layer of compliance requirements. In semiconductor design, where interpretable generative models are increasingly used for rule extraction, the International Technology Roadmap for Semiconductors (ITRS), whose roadmapping work has since been continued by the IEEE International Roadmap for Devices and Systems (IRDS), is reported to have incorporated guidelines for AI transparency. Medical device design that uses these technologies must comply with FDA regulations that require explainable decision-making processes for patient safety.

Financial institutions leveraging interpretable generative models for design optimization must adhere to regulations from bodies like the Financial Stability Board, which has issued principles for using AI in financial services, emphasizing the importance of explainability and accountability. These requirements necessitate that generative models not only extract effective design rules but do so in a manner that can be audited and verified.

The ISO/IEC JTC 1/SC 42 committee develops international standards for AI systems, including work related to interpretability. Its output includes ISO/IEC 23053, a framework for AI systems that use machine learning, and ISO/IEC TR 24028, an overview of trustworthiness in AI. Both bear on how interpretable generative models are designed and implemented for rule extraction applications.

Organizations implementing these technologies must establish internal governance frameworks that ensure compliance with these evolving standards while maintaining competitive innovation capabilities. This includes developing documentation practices that record how design rules are extracted and applied, implementing testing protocols that verify the interpretability of the models, and creating audit trails that demonstrate regulatory compliance throughout the AI lifecycle.
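One way such an audit trail could be kept is as a hash-chained log, where each entry commits to the one before it so that later edits are detectable. The sketch below assumes a simple record layout (`rule`, `model_version`, `dataset`); the field names and values are illustrative and not drawn from any standard.

```python
import hashlib
import json


def record_hash(record, prev_hash):
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, record):
    """Append a record, chaining it to the last entry in the log."""
    prev = log[-1]["hash"] if log else ""
    log.append({"record": record, "prev": prev, "hash": record_hash(record, prev)})


def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = ""
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != record_hash(entry["record"], prev):
            return False
        prev = entry["hash"]
    return True


# Hypothetical audit records for two extracted design rules.
log = []
append(log, {"rule": "spacing > 30", "model_version": "v1", "dataset": "layouts-a"})
append(log, {"rule": "width >= 3", "model_version": "v1", "dataset": "layouts-a"})

print("audit log intact:", verify(log))
```

Because every hash depends on the previous one, changing an early rule after the fact invalidates all later entries, which gives an auditor a simple integrity check.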

Interdisciplinary Applications of Design Rule Extraction

Design rule extraction techniques have found significant applications across multiple disciplines beyond their traditional domains. In engineering, these methods have revolutionized how complex systems are optimized, particularly in aerospace and automotive industries where interpretable generative models help engineers understand the relationship between design parameters and performance outcomes. This interdisciplinary approach allows for more efficient design iterations and knowledge transfer between projects.

The healthcare sector has embraced design rule extraction for medical device development and drug discovery processes. By applying interpretable generative models to clinical data and biological systems, researchers can identify critical design parameters that influence treatment efficacy while maintaining regulatory compliance. These applications have accelerated innovation cycles while ensuring patient safety remains paramount.

In urban planning and architecture, design rule extraction techniques have enabled the development of sustainable building practices by identifying optimal configurations that balance energy efficiency, structural integrity, and aesthetic considerations. The interpretable nature of these models allows architects and urban planners to communicate complex design decisions to stakeholders and regulatory bodies with greater clarity and confidence.

Financial institutions have adopted these methodologies for risk assessment and product development. By extracting interpretable design rules from market data, financial engineers can create investment products that meet specific performance criteria while maintaining transparency for regulators and clients. This application has become particularly valuable in the post-2008 regulatory environment that demands greater accountability.

The creative industries, including fashion, graphic design, and digital media, have leveraged design rule extraction to formalize aesthetic principles and creative processes. These applications help bridge the gap between artistic intuition and computational design, enabling new forms of human-AI collaborative creation while preserving the distinctive characteristics of human creativity.

Educational technology has benefited from these techniques through the development of adaptive learning systems that extract design rules from student performance data. These systems can identify optimal instructional strategies for different learning styles and content areas, personalizing educational experiences while providing educators with interpretable insights into student progress.

As computational power and algorithmic sophistication continue to advance, the cross-pollination of design rule extraction techniques across disciplines is expected to accelerate, creating new opportunities for innovation at the intersection of traditionally separate fields.
