Comparative Analysis of High-Throughput Experimentation Techniques

SEP 25, 2025 | 9 MIN READ

HTE Evolution and Objectives

High-throughput experimentation (HTE) has evolved significantly over the past three decades, transforming from a niche methodology to a cornerstone approach in modern scientific discovery. Initially developed in the pharmaceutical industry during the 1990s for accelerating drug discovery, HTE techniques have since expanded across multiple disciplines including materials science, catalysis, and biotechnology. The fundamental principle driving this evolution has been the need to overcome the traditional "one-experiment-at-a-time" bottleneck that limited scientific progress for centuries.

The technological evolution of HTE can be traced through several distinct phases. The first generation focused primarily on miniaturization and parallelization of experiments, enabling scientists to conduct hundreds of reactions simultaneously. This was followed by the integration of robotics and automation systems in the early 2000s, which dramatically increased throughput while reducing human error. The third phase, occurring roughly between 2005 and 2015, saw the incorporation of advanced analytics and data management systems capable of handling the massive datasets generated through HTE approaches.

Most recently, HTE has entered a fourth evolutionary phase characterized by the integration of artificial intelligence and machine learning algorithms. These computational tools not only assist in experimental design but also in the interpretation of complex multidimensional datasets, enabling researchers to extract meaningful patterns and relationships that would be impossible to discern through conventional analysis methods.

The primary objectives of modern HTE techniques center around four key dimensions: speed, scale, precision, and knowledge generation. Speed refers to the acceleration of experimental cycles, reducing the time from hypothesis to validation from months to days or even hours. Scale encompasses both the number of experiments that can be conducted simultaneously and the breadth of parameter space that can be explored. Precision focuses on the reproducibility and reliability of experimental results, ensuring that high throughput does not come at the expense of data quality.

Perhaps most importantly, the fourth dimension, knowledge generation, reflects the ultimate objective of HTE: to transform the scientific discovery process itself, moving from traditional hypothesis-driven approaches to data-driven paradigms in which unexpected correlations and patterns can emerge from comprehensive exploration of experimental space. This represents a fundamental shift in how scientific knowledge is generated and validated, with HTE serving as both catalyst and enabler of this transformation.

As we look toward future developments, the objectives of HTE continue to evolve, with increasing emphasis on sustainability, resource efficiency, and accessibility. The democratization of HTE technologies through cost reduction and simplified interfaces represents a critical goal for expanding their impact across scientific disciplines and industrial applications.

Market Demand Analysis for HTE Solutions

The High-Throughput Experimentation (HTE) market has witnessed substantial growth in recent years, driven primarily by increasing R&D investments across pharmaceutical, chemical, and materials science industries. Current market assessments value the global HTE solutions market at approximately 800 million USD in 2023, with projections indicating a compound annual growth rate of 9-11% through 2030, potentially reaching 1.5 billion USD.
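The projection quoted above can be checked with the standard compound-growth relation. The following is a minimal sketch, assuming a constant annual rate and a seven-year horizon (2023 to 2030); the function name `project_market` is illustrative and not taken from any market report.

```python
def project_market(base_usd_m: float, cagr: float, years: int) -> float:
    """Compound a base market size (million USD) at a constant annual rate."""
    return base_usd_m * (1.0 + cagr) ** years


BASE_2023 = 800.0        # million USD, the 2023 value quoted in the text
YEARS = 2030 - 2023      # assumed horizon: seven compounding periods

low = project_market(BASE_2023, 0.09, YEARS)    # lower end of the 9-11% range
high = project_market(BASE_2023, 0.11, YEARS)   # upper end of the 9-11% range

print(f"2030 projection: {low:.0f} to {high:.0f} million USD")
```

Under these assumptions the result falls between roughly 1.46 and 1.66 billion USD, so the 1.5 billion USD figure corresponds to the lower end of the quoted growth range.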

Pharmaceutical and biotechnology sectors represent the largest demand segment, accounting for nearly 45% of the total market share. This dominance stems from the critical need to accelerate drug discovery processes and reduce time-to-market for new therapeutic compounds. The average cost to develop a new drug exceeds 2 billion USD, with development timelines often spanning 10-15 years. HTE technologies have demonstrated capabilities to reduce screening times by 60-80% and cut development costs by 30-40% in early-stage research.

Chemical manufacturing represents the second-largest market segment at 25%, where HTE solutions enable rapid catalyst optimization, formulation development, and process intensification. Materials science applications follow at 20%, with growing implementation in fields such as advanced polymers, nanomaterials, and renewable energy materials development.

Regionally, North America leads market consumption at 38%, followed by Europe (32%) and Asia-Pacific (25%). However, the Asia-Pacific region demonstrates the fastest growth trajectory at 12-14% annually, driven by expanding R&D infrastructure in China, Japan, and South Korea, alongside increasing government initiatives to boost innovation capabilities.

Key market drivers include intensifying pressure to reduce product development cycles, the growing complexity of research challenges requiring multi-parameter optimization, and the increasing integration of artificial intelligence and machine learning into HTE workflows. Industry surveys indicate that 78% of R&D executives consider HTE implementation a strategic priority for maintaining competitive advantage.

Customer demand increasingly focuses on integrated solutions that combine hardware automation with sophisticated data management and analysis capabilities. End-users report that data handling and interpretation represent significant bottlenecks, with 65% of organizations citing challenges in extracting actionable insights from high-throughput data streams. This has created substantial market opportunities for software solutions that enable seamless data integration, visualization, and predictive analytics.

Emerging application areas showing rapid demand growth include personalized medicine (15-18% annual growth), sustainable materials development (14-16%), and agricultural biotechnology (12-14%). These sectors are particularly attracted to HTE's ability to efficiently explore vast experimental spaces while minimizing resource consumption.

Current HTE Landscape and Technical Barriers

High-throughput experimentation (HTE) has emerged as a transformative approach in scientific research, enabling rapid and parallel testing of multiple variables. The current landscape of HTE technologies spans across pharmaceutical, materials science, biotechnology, and chemical industries, with varying levels of adoption and sophistication.

In the pharmaceutical sector, HTE platforms have reached considerable maturity, with automated systems capable of conducting thousands of reactions daily. Companies like Merck, Pfizer, and Novartis have established dedicated HTE facilities that integrate robotics, microfluidics, and advanced analytics. These systems typically operate at microscale (10-1000 μL) and can reduce experimental timelines from months to days.

Materials science applications have seen significant growth, particularly in catalyst discovery and optimization. Academic institutions and corporations like BASF and Dow Chemical utilize parallel reactors and high-throughput characterization techniques. However, the complexity of materials properties often requires more sophisticated analytical methods than those used in pharmaceutical screening.

Despite impressive advances, several technical barriers limit the full potential of HTE technologies. Sample miniaturization, while beneficial for throughput, creates challenges in analytical sensitivity and reproducibility. Many existing analytical techniques were not designed for microscale samples, leading to detection limitations and potential data quality issues.

Data management represents another significant challenge. HTE generates massive datasets that require sophisticated informatics infrastructure. Current systems often struggle with data integration across different experimental platforms and analytical techniques. The lack of standardized data formats and protocols further complicates cross-platform comparisons and collaborative research efforts.

Automation compatibility presents ongoing challenges, particularly for complex experimental workflows. While liquid handling and basic reaction setup have been successfully automated, more complex operations such as heterogeneous catalysis studies or multi-phase reactions remain difficult to implement in high-throughput formats.

Translation of results from HTE to conventional scale remains problematic. Phenomena observed at microscale may not directly translate to production scale due to differences in mixing, heat transfer, and other physical parameters. This "scale-up gap" necessitates additional validation experiments that can diminish the efficiency gains of HTE.

Resource requirements constitute a significant barrier to wider adoption. Comprehensive HTE platforms require substantial capital investment in specialized equipment and software, as well as trained personnel. This creates accessibility disparities between large corporations and smaller research entities, potentially limiting innovation in the field.

Contemporary HTE Methodologies and Platforms

01 Parallel processing systems for high-throughput experimentation

Parallel processing systems enable simultaneous execution of multiple experiments, significantly increasing throughput in research environments. These systems utilize advanced computing architectures to distribute experimental workloads across multiple processors or experimental stations. By running experiments concurrently rather than sequentially, researchers can generate larger datasets in shorter timeframes, accelerating discovery and development processes in fields such as materials science, chemistry, and biotechnology.

- Automated laboratory systems for high-throughput experimentation: Automated laboratory systems enable high-throughput experimentation by integrating robotics, liquid handling devices, and analytical instruments. These systems can perform multiple experiments simultaneously, significantly increasing experimental throughput while reducing manual intervention. The automation includes sample preparation, reaction execution, and data collection processes, allowing researchers to conduct large numbers of experiments efficiently and consistently.

- Parallel processing techniques for experimental data analysis: High-throughput experimentation generates massive amounts of data that require efficient processing methods. Parallel processing techniques distribute computational tasks across multiple processors or computing nodes, enabling simultaneous analysis of experimental data. These techniques include distributed computing frameworks, cloud-based solutions, and specialized algorithms designed to handle large datasets, significantly reducing analysis time and increasing overall experimental throughput.

- Microfluidic platforms for accelerated experimentation: Microfluidic technologies enable high-throughput experimentation by miniaturizing reaction vessels and integrating multiple experimental steps on a single chip. These platforms allow for precise control of small fluid volumes, rapid mixing, and parallel processing of numerous samples. The reduced reagent consumption, faster reaction times, and ability to automate complex workflows make microfluidic systems particularly valuable for screening applications and optimization studies where large experimental matrices need to be explored.

- Advanced screening methodologies for compound libraries: High-throughput screening methodologies enable rapid evaluation of large compound libraries against specific targets. These techniques incorporate automated sample handling, multiplexed assays, and sophisticated detection systems to quickly identify promising candidates. Advanced screening approaches include label-free detection methods, cell-based assays, and phenotypic screening, which can process thousands of compounds daily while generating rich datasets that facilitate the identification of structure-activity relationships.

- Machine learning integration for experimental design and optimization: Machine learning algorithms enhance high-throughput experimentation by optimizing experimental design and predicting outcomes. These computational approaches can identify patterns in complex datasets, suggest promising experimental conditions, and reduce the number of experiments needed to achieve desired results. The integration of machine learning with high-throughput platforms creates feedback loops where experimental results inform subsequent experiments, leading to more efficient exploration of experimental space and accelerated discovery processes.
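The feedback loop described in the last item, where each round of results informs the next batch of experiments, can be sketched without any machine learning library. The example below is a deliberately simple successive-narrowing search, not a machine learning model and not the method of any particular platform. The response surface `simulated_yield`, the temperature range, and the batch size are hypothetical stand-ins for a real plate run.

```python
def simulated_yield(temp_c: float) -> float:
    """Hypothetical response surface standing in for a real experiment."""
    return max(0.0, 100.0 - 0.05 * (temp_c - 72.0) ** 2)


def run_batch(temps):
    """Run one parallel batch and return (condition, result) pairs."""
    return [(t, simulated_yield(t)) for t in temps]


def refine(low: float, high: float, batch_size: int = 8, rounds: int = 4):
    """Repeatedly test an evenly spaced batch, then narrow around the best result."""
    history = []
    best = None
    for _ in range(rounds):
        step = (high - low) / (batch_size - 1)
        temps = [low + i * step for i in range(batch_size)]
        history.extend(run_batch(temps))
        # The best result seen so far decides where the next batch is placed.
        best = max(history, key=lambda r: r[1])
        low, high = best[0] - step, best[0] + step
    return best, history


best, history = refine(20.0, 120.0)
print(f"best temperature: {best[0]:.2f} C after {len(history)} experiments")
```

In practice the narrowing rule would usually be replaced by a surrogate model that proposes the next batch, but the structure of the loop (run a batch, update the record, choose the next conditions) stays the same.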

02 Automated laboratory equipment and robotics

Automated laboratory systems incorporate robotics and precision instrumentation to perform experimental procedures with minimal human intervention. These systems can handle sample preparation, reagent dispensing, and analytical measurements at high speeds and with greater consistency than manual methods. Robotic platforms can operate continuously, enabling round-the-clock experimentation and dramatically increasing throughput while reducing human error and variability in experimental conditions.

03 Microfluidic and miniaturized experimental platforms

Microfluidic technologies enable experimentation at microscale dimensions, allowing for significant reductions in sample volumes and reaction times. These miniaturized platforms can accommodate hundreds or thousands of parallel reactions on a single chip or plate, dramatically increasing experimental throughput. The reduced scale also enhances reaction efficiency through improved heat and mass transfer, while requiring less reagent consumption and generating less waste compared to conventional methods.

04 Data management and analysis systems for high-throughput experiments

Specialized software platforms and algorithms are essential for managing and analyzing the massive datasets generated by high-throughput experimentation. These systems provide automated data processing, pattern recognition, and statistical analysis capabilities that can rapidly extract meaningful insights from experimental results. Advanced machine learning approaches can identify trends and correlations that might be missed by conventional analysis, further accelerating the discovery process and enabling researchers to make data-driven decisions about subsequent experiments.

05 Integrated workflow systems for experimental design and execution

Comprehensive workflow management systems coordinate the entire experimental process from design to execution and analysis. These integrated platforms enable researchers to design experimental arrays, control automated equipment, track samples, and analyze results within a unified environment. By streamlining the experimental workflow and eliminating bottlenecks between different stages, these systems maximize throughput while maintaining experimental quality and reproducibility. They often incorporate design of experiments (DOE) methodologies to optimize experimental parameters and resource utilization.
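To make the idea of designing an experimental array concrete, here is a minimal sketch of a full factorial design laid out on a 96-well plate. Full factorial is only one of several DOE approaches, and the factor names, levels, and row-major well assignment are hypothetical choices for illustration.

```python
from itertools import product
from string import ascii_uppercase


def full_factorial(factors: dict) -> list:
    """Enumerate every combination of factor levels as a list of run dicts."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


def assign_wells(runs: list, rows: int = 8, cols: int = 12) -> dict:
    """Map runs onto plate wells in row-major order (A1, A2, ..., H12)."""
    if len(runs) > rows * cols:
        raise ValueError("design does not fit on one plate")
    layout = {}
    for i, run in enumerate(runs):
        r, c = divmod(i, cols)
        layout[f"{ascii_uppercase[r]}{c + 1}"] = run
    return layout


# Hypothetical factors: 3 x 4 x 2 x 2 = 48 runs, half of a 96-well plate.
factors = {
    "catalyst": ["Pd", "Ni", "Cu"],
    "solvent": ["DMF", "THF", "MeCN", "toluene"],
    "temperature_c": [25, 60],
    "base": ["K2CO3", "Et3N"],
}

runs = full_factorial(factors)
layout = assign_wells(runs)
print(len(runs), "runs; well A1 ->", layout["A1"])
```

Keeping the design as plain dictionaries keyed by well makes it straightforward to hand the same layout to both the liquid-handling step and the later analysis step, which is the kind of sample tracking the paragraph above describes.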

Leading Organizations in HTE Research and Implementation

High-Throughput Experimentation (HTE) techniques are evolving rapidly in a market transitioning from early adoption to growth phase. The global HTE market is expanding at approximately 15% CAGR, driven by semiconductor, pharmaceutical, and materials science applications. Key players demonstrate varying technological maturity: established leaders like Intermolecular (Synopsys) and Accelergy offer comprehensive HTE platforms; semiconductor giants including Intel, Samsung, SMIC, and GlobalFoundries integrate HTE into R&D workflows; while specialized innovators such as Ningbo Galaxy Materials Technology and Halo-Bio focus on niche applications. Academic institutions (Shanghai University, UNC Chapel Hill) contribute fundamental research, while equipment manufacturers (Advantest, National Instruments) provide enabling technologies. The competitive landscape reflects a balance between established industrial players and emerging specialized solution providers across multiple industry verticals.

Dow Global Technologies LLC

Technical Solution: Dow has developed an advanced High-Throughput Research (HTR) platform that integrates robotics, microreactors, and automated analytics to accelerate materials discovery across multiple chemical domains. Their system employs parallel batch reactors with capacities ranging from microliters to milliliters, enabling thousands of experiments to be conducted simultaneously under precisely controlled conditions. Dow's platform incorporates in-line analytical techniques including FTIR, GC-MS, and HPLC for real-time reaction monitoring and product characterization. A distinguishing feature is their "Scale-Bridging" methodology, which ensures that discoveries made at microscale can be reliably translated to production scale. Their proprietary experimental design software utilizes machine learning algorithms to navigate complex chemical spaces efficiently, identifying optimal formulations with minimal experimental iterations. This integrated approach has been successfully applied across polymer science, catalysis, and formulation chemistry, reducing development cycles from years to months.

Strengths: Exceptional chemical diversity capabilities spanning multiple industries; robust scale-up methodology ensuring industrial relevance; sophisticated machine learning integration for experimental design. Weaknesses: System complexity requires significant expertise; high capital and operational costs; primarily optimized for Dow's specific chemistry domains.

Intermolecular, Inc.

Technical Solution: Intermolecular has pioneered the High Productivity Combinatorial (HPC) platform, which integrates advanced physical vapor deposition, atomic layer deposition, and wet processing tools with proprietary informatics systems. Their platform enables parallel synthesis and characterization of hundreds to thousands of unique material compositions on a single substrate. The system incorporates automated handling systems that can process multiple substrates simultaneously while maintaining precise control over experimental variables. Their proprietary workflow management software coordinates the experimental design, execution, and data analysis, allowing for rapid iteration through materials discovery cycles. Intermolecular's approach has demonstrated up to 100x acceleration in materials development timelines compared to traditional sequential methods, particularly in semiconductor and electronic materials research.

Strengths: Exceptional throughput capacity with parallel processing capabilities; sophisticated informatics integration for experimental design and analysis; proven track record in semiconductor materials discovery. Weaknesses: High capital investment requirements; primarily focused on inorganic materials and thin films rather than broader chemical space; requires specialized expertise to operate effectively.

Critical HTE Innovations and Intellectual Property

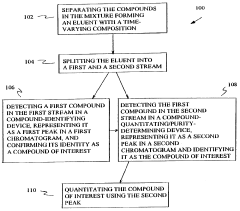

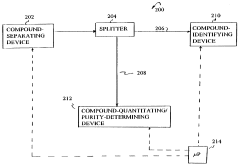

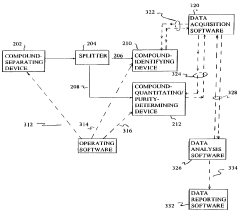

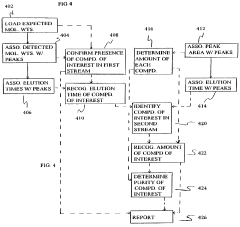

High-throughput apparatus for identifying, quantitating and determining the purity of chemical compounds in mixtures

Patent (inactive): US6090280A

Innovation

- A method and apparatus that utilizes chromatographic separation to split a product stream into two streams for simultaneous detection by a compound-identifying device (e.g., mass spectrograph) and a compound-quantitating/purity-determining device (e.g., evaporative light scattering detector), allowing for contemporaneous confirmation and quantitation of compounds, with peak correlation across devices to ensure accurate identification and purity determination.
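The peak correlation step mentioned above can be illustrated with a small sketch. This is not the patented procedure: it assumes peaks from the two detectors are matched by retention time within a tolerance, and that the area fraction in the quantitating detector serves as a purity estimate. All class names, tolerances, and data values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MsPeak:
    rt_min: float   # retention time in minutes
    mz: float       # observed mass-to-charge ratio


@dataclass(frozen=True)
class ElsdPeak:
    rt_min: float   # retention time in minutes
    area: float     # integrated detector response


def correlate(ms_peaks, elsd_peaks, rt_tol=0.05):
    """Pair each ELSD peak with the nearest MS peak within rt_tol minutes."""
    pairs = []
    for e in elsd_peaks:
        near = [m for m in ms_peaks if abs(m.rt_min - e.rt_min) <= rt_tol]
        if near:
            best = min(near, key=lambda m: abs(m.rt_min - e.rt_min))
            pairs.append((best, e))
    return pairs


def purity_percent(pairs, elsd_peaks, target_mz, mz_tol=0.5):
    """Area of peaks identified as the target, as a share of total ELSD area."""
    total = sum(e.area for e in elsd_peaks)
    target = sum(e.area for m, e in pairs if abs(m.mz - target_mz) <= mz_tol)
    return 100.0 * target / total if total else 0.0


# Hypothetical data: an impurity at 1.2 min and the expected product at 2.5 min.
ms = [MsPeak(1.20, 181.1), MsPeak(2.48, 305.2)]
elsd = [ElsdPeak(1.22, 50.0), ElsdPeak(2.50, 450.0)]

pairs = correlate(ms, elsd)
purity = purity_percent(pairs, elsd, target_mz=305.2)
print(f"matched peaks: {len(pairs)}, estimated purity: {purity:.1f}%")
```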

Method for performing high-throughput analyses and device for carrying out this method

Patent (inactive): EP1523682A1

Innovation

- A method and device utilizing a biochip arrangement with multiple spot arrays on a common flat carrier, allowing for simultaneous and parallel processing of tests, with spatial separation of spot arrays for independent treatment and reduced material usage, enabling clocked work steps for sample application, temperature control, and reagent management.

Cost-Benefit Analysis of HTE Implementation

Implementing High-Throughput Experimentation (HTE) technologies requires substantial initial investment, making a thorough cost-benefit analysis essential for organizations considering adoption. The capital expenditure for HTE platforms typically ranges from $500,000 to several million dollars, depending on the scale and sophistication of the system. This includes costs for robotic systems, analytical instruments, data management software, and laboratory infrastructure modifications.

Beyond the initial investment, organizations must account for ongoing operational expenses including maintenance contracts (typically 10-15% of equipment cost annually), consumables, specialized personnel, and periodic system upgrades. Training costs are also significant, as staff require specialized knowledge to operate complex HTE systems effectively.

The benefits of HTE implementation can be quantified across several dimensions. Most notably, experimental throughput increases by orders of magnitude—from tens of experiments per week using traditional methods to hundreds or thousands with HTE. This acceleration directly translates to reduced time-to-market for new products, with industry case studies showing development cycle reductions of 30-50%.

Quality improvements represent another significant benefit, as HTE systems offer superior reproducibility and precision compared to manual experimentation. This leads to more reliable data and fewer failed experiments, reducing material waste and rework costs. The standardization of experimental protocols further enhances data quality and facilitates more meaningful comparisons across experimental sets.

Long-term ROI calculations from pharmaceutical and materials science industries demonstrate that despite high initial costs, HTE investments typically achieve break-even within 2-3 years. Companies report ROI figures ranging from 150-300% over five-year periods, with the highest returns observed in R&D-intensive sectors where innovation speed directly impacts market position.
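The break-even and ROI figures above depend on how costs and benefits are counted. The following is a minimal undiscounted sketch under stated assumptions: ROI is taken as cumulative net gain after recovering the capital cost, divided by that capital cost, and the input values are hypothetical numbers chosen only to fall within the ranges quoted in this section.

```python
def cumulative_net(capex, annual_benefit, maintenance_rate, other_opex, years):
    """Cumulative net cash position at the end of each year (undiscounted)."""
    annual_net = annual_benefit - (maintenance_rate * capex + other_opex)
    return [-capex + annual_net * y for y in range(1, years + 1)]


def payback_year(series):
    """First year in which the cumulative position is no longer negative."""
    for year, value in enumerate(series, start=1):
        if value >= 0:
            return year
    return None


def roi_percent(capex, series):
    """Net gain at the end of the period as a percentage of the capital cost."""
    return 100.0 * series[-1] / capex


# Hypothetical inputs (USD), not taken from any case study.
CAPEX = 2_000_000
series = cumulative_net(
    capex=CAPEX,
    annual_benefit=1_500_000,   # assumed value of faster development
    maintenance_rate=0.125,     # within the 10-15% range quoted earlier
    other_opex=250_000,         # assumed consumables, staff, and training
    years=5,
)

print("payback year:", payback_year(series))
print("five-year ROI:", roi_percent(CAPEX, series), "%")
```

With these inputs the platform breaks even in year 2 and reaches a five-year ROI of 150%. A fuller analysis would discount future cash flows and include upgrade costs.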

Risk mitigation factors should also be considered in the analysis. Organizations implementing HTE reduce dependency on individual researcher expertise and create more robust experimental workflows. However, they also face risks including technology obsolescence, integration challenges with existing systems, and potential disruption to established research processes.

The optimal implementation strategy often involves phased adoption, beginning with targeted application in high-value research areas before expanding to broader deployment. This approach allows organizations to demonstrate value, develop internal expertise, and refine implementation strategies while managing financial exposure and organizational change.

Beyond the initial investment, organizations must account for ongoing operational expenses including maintenance contracts (typically 10-15% of equipment cost annually), consumables, specialized personnel, and periodic system upgrades. Training costs are also significant, as staff require specialized knowledge to operate complex HTE systems effectively.

The benefits of HTE implementation can be quantified across several dimensions. Most notably, experimental throughput increases by orders of magnitude—from tens of experiments per week using traditional methods to hundreds or thousands with HTE. This acceleration directly translates to reduced time-to-market for new products, with industry case studies showing development cycle reductions of 30-50%.

Quality improvements represent another significant benefit, as HTE systems offer superior reproducibility and precision compared to manual experimentation. This leads to more reliable data and fewer failed experiments, reducing material waste and rework costs. The standardization of experimental protocols further enhances data quality and facilitates more meaningful comparisons across experimental sets.

Data Management Challenges in HTE

High-throughput experimentation (HTE) generates very large volumes of data, creating data management challenges that traditional tools were not designed for. The output of parallel experiments, often thousands of data points per day, can overwhelm conventional laboratory information management systems. As datasets accumulate across campaigns and instruments, organizations need storage infrastructure that scales, in the largest deployments to petabytes, while keeping the data accessible and intact.

Data heterogeneity presents another critical challenge in HTE environments. Researchers must integrate diverse data types including spectroscopic measurements, chromatographic analyses, visual observations, and metadata from varying experimental conditions. This diversity complicates standardization efforts and requires sophisticated data models that can accommodate multiple formats while preserving relationships between different data elements.
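One way to picture such a data model is a small set of typed records in which every measurement, whatever its format, carries the identifier of the experiment that produced it. The sketch below is illustrative only: the class names, field names, and `kind` labels are assumptions, not a schema from any particular LIMS or HTE platform.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Measurement:
    experiment_id: str            # link back to the originating experiment
    kind: str                     # e.g. "spectroscopy", "chromatography", "observation"
    payload: Any                  # raw values, a file path, or free text
    units: Optional[str] = None


@dataclass
class Experiment:
    experiment_id: str
    conditions: dict              # metadata such as temperature or solvent
    measurements: list = field(default_factory=list)


def attach(experiments, measurements):
    """Attach each measurement to its experiment; return those with no match."""
    index = {e.experiment_id: e for e in experiments}
    orphans = []
    for m in measurements:
        target = index.get(m.experiment_id)
        if target is None:
            orphans.append(m)
        else:
            target.measurements.append(m)
    return orphans
```

Returning the unmatched measurements instead of silently dropping them keeps broken links visible, which matters when instruments write their results independently of the experiment registry.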

Real-time data processing capabilities have become essential as HTE platforms evolve toward continuous operation. Systems must handle streaming data from automated instruments, perform preliminary analysis, and flag anomalies without human intervention. The computational requirements for such real-time processing often exceed the capabilities of standard laboratory computing resources, necessitating edge computing solutions or cloud integration.
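As a rough illustration of unattended anomaly flagging on a stream, the sketch below compares each incoming reading with a rolling window of recent accepted readings. The function name, window size, and z-score threshold are assumptions chosen for the example; a production system would tune them per instrument and would likely use more robust statistics.

```python
from collections import deque
import statistics


def flag_anomalies(stream, window=20, z_threshold=3.0, min_history=5):
    """Yield (index, value) for readings far from the rolling baseline."""
    history = deque(maxlen=window)
    for index, value in enumerate(stream):
        if len(history) >= min_history:
            mean = statistics.fmean(history)
            spread = statistics.pstdev(history)
            if spread > 0 and abs(value - mean) / spread > z_threshold:
                yield index, value
                continue  # keep flagged readings out of the baseline
        history.append(value)
```

Because the function is a generator, it can consume an instrument feed one reading at a time without holding the whole run in memory. Excluding flagged values from the baseline is a design choice: it stops a single spike from inflating the spread, at the cost of adapting more slowly to a genuine level shift.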

Data quality assurance represents a persistent challenge in high-throughput workflows. With minimal human oversight during automated processes, systematic errors can propagate undetected throughout large datasets. Advanced validation algorithms and statistical methods must be implemented to identify outliers, instrument drift, and calibration issues that might compromise experimental conclusions.
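Two of the checks named above can be sketched with the standard library alone. The example uses a modified z-score based on the median absolute deviation for outliers, and the least-squares slope of control readings against run order as a simple drift indicator. Both are common choices substituted here for illustration, not methods prescribed by the source, and the function names and thresholds are assumptions.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Indices whose modified z-score (MAD-based) exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to scale by; nothing can be judged
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]


def drift_slope(control_readings):
    """Least-squares slope of control readings against run order."""
    n = len(control_readings)
    if n < 2:
        raise ValueError("need at least two control readings")
    x_mean = (n - 1) / 2
    y_mean = statistics.fmean(control_readings)
    numerator = sum(
        (i - x_mean) * (y - y_mean) for i, y in enumerate(control_readings)
    )
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator
```

A slope close to zero on repeated control samples suggests a stable instrument, while a consistent positive or negative slope points to drift worth investigating before the affected wells are interpreted.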

Long-term data preservation strategies must address both regulatory compliance and scientific reproducibility concerns. HTE datasets often contain valuable information that may become relevant for future research questions beyond the original experimental scope. Implementing FAIR (Findable, Accessible, Interoperable, Reusable) data principles requires significant investment in metadata standards, ontologies, and persistent identifiers.
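At the level of a single dataset, the metadata side of FAIR can be pictured as a record with a persistent identifier and a small set of required descriptive fields. The sketch below is a simplified illustration: the field list is an assumption, not a published metadata standard, and a UUID-based URN stands in for an identifier that would in practice be minted by a registry such as a DOI service.

```python
import json
import uuid

# Hypothetical minimum field set; real projects would follow a community standard.
REQUIRED_FIELDS = ("identifier", "title", "created", "license", "format", "instrument")


def new_record(title, created, license_name, data_format, instrument, **extra):
    """Build a metadata record with a locally generated identifier."""
    record = {
        "identifier": uuid.uuid4().urn,   # placeholder for a registered identifier
        "title": title,
        "created": created,               # ISO 8601 date string
        "license": license_name,
        "format": data_format,
        "instrument": instrument,
    }
    record.update(extra)
    return record


def missing_fields(record):
    """Names of required fields that are absent or empty."""
    return [name for name in REQUIRED_FIELDS if not record.get(name)]


def serialize(record):
    """Stable JSON text suitable for storing alongside the data file."""
    return json.dumps(record, indent=2, sort_keys=True)
```

Checking `missing_fields` at ingestion time, before a dataset is archived, is far cheaper than reconstructing provenance years later when the original operators may no longer be available.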

Knowledge extraction from massive HTE datasets is perhaps the most demanding challenge. Analysis methods designed for small-scale experiments often fail to reveal patterns that span thousands of experiments. Machine learning and artificial intelligence approaches show promise, but they require specialized expertise and computational resources that many research organizations struggle to acquire and maintain.

Cross-organizational data sharing compounds these challenges, as proprietary concerns must be balanced against the scientific benefits of collaborative analysis. Secure data exchange protocols, differential privacy techniques, and federated learning approaches are emerging as potential solutions, though standardization across the industry remains elusive.
