Design Of Photonic Training Hardware For Online Learning Applications

AUG 29, 2025 · 9 MIN READ

Photonic Hardware Evolution and Training Objectives

Photonic computing has emerged as a promising alternative to traditional electronic systems, particularly for machine learning applications that demand high computational power and energy efficiency. The evolution of photonic hardware for training neural networks has undergone significant transformation over the past decade, moving from theoretical concepts to practical implementations that leverage the inherent advantages of light-based computation.

The initial development phase of photonic neural networks focused primarily on demonstrating basic optical computing principles using bulk optical components. These early systems, while proving the concept, were limited by their size, stability requirements, and integration challenges. The field subsequently progressed toward integrated photonic circuits, which offered improved scalability and reliability through silicon photonics and related technologies.

Recent advancements have led to the development of specialized photonic tensor cores and optical processing units (OPUs) specifically designed for matrix multiplication and other operations central to neural network training. These components exploit wavelength division multiplexing, coherent light manipulation, and nonlinear optical effects to achieve parallel processing capabilities that significantly outpace electronic counterparts in specific computational tasks.

The primary objective of modern photonic training hardware is to address the computational bottlenecks in online learning applications, where models must be continuously updated with new data. This requires systems capable of performing forward and backward propagation operations with high throughput and minimal latency, while maintaining energy efficiency that surpasses electronic alternatives by orders of magnitude.
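To make the online-learning requirement concrete, the sketch below shows the per-sample pattern of forward pass, error, and weight update for a single linear layer in plain Python. It illustrates the update loop only and does not model photonic hardware; the function name `online_step`, the learning rate, and the synthetic data stream are all assumptions made for illustration.

```python
import random

def online_step(weights, x, target, lr=0.05):
    """One online-learning update for a linear model y = w . x."""
    # Forward pass: the dot product is the operation a photonic core would accelerate.
    y = sum(w * xi for w, xi in zip(weights, x))
    error = y - target
    # Backward pass: gradient of 0.5 * error**2 with respect to each weight.
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return new_weights, error

random.seed(0)
true_w = [0.5, -1.0, 2.0]     # hypothetical target that the stream is drawn from
weights = [0.0, 0.0, 0.0]
for _ in range(2000):         # each iteration stands in for one newly arrived sample
    x = [random.uniform(-1, 1) for _ in true_w]
    target = sum(t * xi for t, xi in zip(true_w, x))
    weights, _ = online_step(weights, x, target)
```

Every arriving sample triggers one forward and one backward computation, which is why throughput and latency of both directions matter for online learning.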

Another critical goal is to develop architectures that seamlessly integrate with existing machine learning frameworks and workflows. This includes creating hardware-software interfaces that abstract the complexity of the underlying photonic systems, allowing AI developers to leverage optical acceleration without specialized knowledge of photonics.

Scalability represents a fundamental objective for photonic training hardware, as practical applications demand systems capable of handling increasingly complex neural network architectures. This necessitates advances in photonic integration density, multi-chip module technologies, and optical interconnect solutions that can support the massive parallelism required for large-scale model training.

Error resilience and precision control have emerged as essential requirements, particularly as photonic systems must contend with noise, fabrication variations, and thermal effects that can impact computational accuracy. Developing robust calibration techniques and error correction mechanisms is therefore crucial to ensuring that photonic hardware can deliver the reliability needed for production-grade machine learning applications.

The convergence of these evolutionary trends and objectives is driving the field toward hybrid electro-photonic systems that combine the strengths of both domains, potentially revolutionizing how neural networks are trained for online learning scenarios across various industries.

Market Analysis for Photonic Neural Network Solutions

The photonic neural network (PNN) solutions market is experiencing rapid growth, driven by increasing demands for energy-efficient computing architectures capable of handling complex AI workloads. Current market valuations place this sector at approximately $425 million in 2023, with projections indicating growth to reach $3.8 billion by 2030, representing a compound annual growth rate (CAGR) of 37.2% during this forecast period.
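As a quick sanity check on the figures above, the compound annual growth rate implied by the two valuations can be computed directly. This is a minimal sketch; the helper name `cagr` is an assumption, and it uses only the dollar amounts and years quoted in the text.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two valuations."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# $425 million in 2023 growing to $3.8 billion by 2030 (seven compounding years).
implied = cagr(425e6, 3.8e9, 2030 - 2023)
print(f"implied CAGR: {implied:.1%}")
```

The result lands slightly below the quoted 37.2%, at close to 36.7%. A gap of this size may come from rounding of the endpoint valuations or from a different base-year convention.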

The primary market segments for photonic neural networks include data centers, telecommunications, autonomous vehicles, healthcare diagnostics, and scientific research. Data centers represent the largest current market share at 42%, as operators seek solutions to address the exponential growth in energy consumption from traditional electronic AI accelerators. The telecommunications sector follows at 27%, leveraging PNNs for real-time signal processing and network optimization.

Market demand is being fueled by several key factors. First, photonic computing offers an energy efficiency advantage, potentially reducing power consumption by 90% compared to electronic counterparts for certain workloads. Second, the inherent parallelism of light-based computation aligns well with neural network architectures. Third, the increasing complexity of AI models has created computational bottlenecks that conventional electronic systems struggle to overcome efficiently.

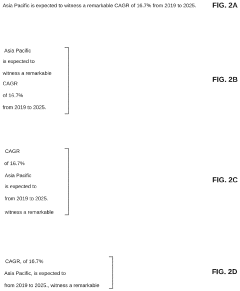

Regional analysis reveals North America currently dominates the market with 45% share, followed by Europe (28%) and Asia-Pacific (22%). However, the Asia-Pacific region is expected to witness the fastest growth rate of 42% CAGR through 2030, driven by substantial investments in China, Japan, and South Korea.

Customer segmentation shows enterprise-level organizations as the primary adopters (68%), followed by research institutions (18%) and government agencies (14%). The high initial investment requirements currently limit adoption among smaller organizations, though this barrier is expected to diminish as the technology matures and economies of scale develop.

Market challenges include high manufacturing costs, integration complexities with existing electronic infrastructure, and the need for specialized expertise. Additionally, standardization remains an ongoing issue, with multiple competing architectures vying for market dominance.

The competitive landscape features both established technology corporations and specialized startups. Key market players include Lightmatter, Lightelligence, Luminous Computing, and Intel's Silicon Photonics division. Recent strategic partnerships between photonics specialists and AI software developers indicate a trend toward creating end-to-end solutions that bridge the hardware-software gap, potentially accelerating market adoption.

Current Challenges in Photonic Computing for ML

Despite the significant advancements in photonic computing for machine learning applications, several critical challenges continue to impede widespread implementation and adoption. The integration of photonic elements with traditional electronic systems presents substantial interface complications, particularly in managing the conversion between optical and electrical signals. This conversion process introduces latency and energy overhead, potentially negating the speed and efficiency advantages inherent to photonic systems.

Thermal stability represents another significant obstacle in photonic computing platforms. Optical components are highly sensitive to temperature fluctuations, which can cause wavelength drift and affect computational accuracy. Current solutions involving temperature control mechanisms add complexity, cost, and energy consumption to photonic systems, undermining their efficiency benefits.

Manufacturing scalability remains problematic for photonic integrated circuits (PICs). While electronic integrated circuits benefit from decades of manufacturing refinement, photonic fabrication processes lack comparable maturity. Variations in manufacturing processes can lead to device-to-device inconsistencies, affecting the reliability and reproducibility of photonic computing systems for machine learning applications.

The limited dynamic range and precision of photonic components present fundamental challenges for implementing high-precision machine learning algorithms. Traditional deep learning models often require 32-bit or 16-bit floating-point precision, whereas current photonic systems typically operate at much lower effective bit resolutions. This precision gap necessitates novel algorithmic approaches specifically tailored to photonic hardware constraints.
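The effect of limited bit resolution can be sketched by rounding weights to a fixed number of evenly spaced levels. The example below is a simplified uniform quantizer, not a model of any specific photonic device; the function names, the weight values, and the `[-1, 1]` full-scale range are assumptions.

```python
def quantize(value, bits, full_scale=1.0):
    """Round value to the nearest of 2**bits evenly spaced levels in [-full_scale, full_scale]."""
    levels = 2 ** bits - 1
    step = 2 * full_scale / levels
    clipped = max(-full_scale, min(full_scale, value))
    return round((clipped + full_scale) / step) * step - full_scale

def worst_weight_error(weights, bits):
    """Largest absolute rounding error over a list of weights."""
    return max(abs(quantize(w, bits) - w) for w in weights)

weights = [0.73, -0.21, 0.05, -0.88]   # hypothetical layer weights
for bits in (4, 6, 8):
    half_step = 1.0 / (2 ** bits - 1)  # worst-case rounding error for full_scale = 1
    print(bits, worst_weight_error(weights, bits), half_step)
```

The worst-case rounding error is half a quantization step, so each added bit of effective resolution roughly halves it. That is the gap low-precision training algorithms have to tolerate.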

Power consumption, while theoretically advantageous in photonic systems, faces practical limitations. Laser sources, modulators, and detectors still consume significant energy, particularly in systems requiring multiple wavelengths or high optical power. The energy efficiency promised by photonic computing has yet to be fully realized in practical implementations for online learning applications.

Programming models and software frameworks for photonic computing remain underdeveloped compared to their electronic counterparts. The lack of standardized programming interfaces and optimization tools creates barriers for machine learning practitioners seeking to leverage photonic hardware. This software gap slows adoption and limits the exploration of photonic solutions for complex machine learning tasks.

Noise management presents another critical challenge, as photonic systems are susceptible to various noise sources including shot noise, thermal noise, and crosstalk between waveguides. These noise factors can significantly impact the signal-to-noise ratio, potentially degrading the performance of machine learning algorithms that require high computational precision.
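For shot noise specifically, the link between optical signal level and achievable precision follows from Poisson statistics: a detected signal of N photons has a standard deviation of sqrt(N), so the signal-to-noise ratio is sqrt(N). The sketch below applies this, together with a rule of thumb (an assumption here, not a claim from the text) that resolving 2^b amplitude levels needs an SNR of about 2^b. The 1550 nm wavelength is likewise an assumed, typical telecom value.

```python
import math

PLANCK = 6.62607015e-34        # Planck constant, J*s
LIGHT_SPEED = 299_792_458.0    # speed of light, m/s

def shot_noise_snr(mean_photons):
    # Poisson statistics: signal N, noise sqrt(N), so SNR = sqrt(N).
    return math.sqrt(mean_photons)

def photons_for_bits(bits):
    # Rule of thumb (assumption): SNR of about 2**bits is needed, so N = (2**bits)**2.
    return (2 ** bits) ** 2

def optical_energy_joules(photons, wavelength_m=1550e-9):
    # Energy of one photon is h * c / wavelength.
    return photons * PLANCK * LIGHT_SPEED / wavelength_m

for bits in (4, 6, 8):
    n = photons_for_bits(bits)
    print(bits, n, optical_energy_joules(n))
```

Under these assumptions the photon budget grows fourfold per additional bit, which is one reason high-precision operation erodes the energy advantage of optical computation.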

State-of-the-Art Photonic Training Architectures

01 Optical Neural Networks and Photonic Computing

Photonic hardware designed for neural network training and implementation, utilizing optical components to perform matrix operations and neural network computations. These systems leverage light's properties for parallel processing, offering advantages in speed and energy efficiency compared to traditional electronic systems. The architecture includes optical elements for weight representation, nonlinear activation functions, and optical signal processing for forward and backward propagation during training.

- Optical Neural Networks and Photonic Computing: Photonic hardware designed for neural network training and implementation, utilizing optical components to perform computations. These systems leverage light's properties for parallel processing, offering advantages in speed and energy efficiency compared to electronic systems. The architecture typically includes optical elements for matrix operations, nonlinear activation functions, and specialized photonic integrated circuits for machine learning applications.

- Photonic Tensor Processing Units: Specialized photonic hardware designed specifically for tensor operations in machine learning training. These systems use optical components to accelerate matrix multiplications and other tensor operations critical for training deep neural networks. The architecture incorporates photonic integrated circuits with specialized waveguides, modulators, and detectors to enable high-throughput processing of complex mathematical operations required for model training.

- Integrated Photonic-Electronic Training Systems: Hybrid systems that combine photonic and electronic components to leverage the advantages of both technologies for machine learning training. These architectures use photonics for data-intensive operations while electronic components handle control logic and certain computational tasks. The integration includes interfaces between optical and electronic domains, with specialized hardware for converting between signals and coordinating processing across both paradigms.

- Reconfigurable Photonic Training Platforms: Adaptable photonic hardware systems that can be reconfigured for different machine learning training tasks. These platforms feature programmable optical elements that can be adjusted to implement various neural network architectures and learning algorithms. The hardware includes tunable components such as phase shifters, variable optical attenuators, and reconfigurable optical circuits that can be modified through software control to optimize training for specific applications.

- Coherent Optical Processing for Training: Training hardware that utilizes coherent optical processing techniques to perform machine learning computations. These systems leverage the phase and amplitude properties of light for complex calculations. The architecture includes coherent light sources, phase modulators, beam splitters, and coherent detection systems to implement matrix operations and other computations required for neural network training, offering potential advantages in processing density and energy efficiency.
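The phase shifters and beam splitters named in the list above are commonly combined into a Mach-Zehnder interferometer (MZI), a tunable 2x2 building block for coherent matrix operations. The sketch below builds its transfer matrix under one common convention: two ideal 50:50 couplers, an internal phase `theta`, and an input phase `phi`. Other conventions place the phase shifters differently, and the function names are assumptions.

```python
import cmath
import math

def matmul2(a, b):
    """Product of two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mzi(theta, phi):
    """Transfer matrix of an ideal, lossless MZI: splitter * inner * splitter * outer."""
    s = 1 / math.sqrt(2)
    splitter = [[s, 1j * s], [1j * s, s]]            # ideal 50:50 coupler
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]     # internal phase shifter
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]       # input phase shifter
    return matmul2(splitter, matmul2(inner, matmul2(splitter, outer)))

u = mzi(math.pi / 3, 0.7)
bar_power = abs(u[0][0]) ** 2    # equals sin^2(theta / 2) in this convention
cross_power = abs(u[1][0]) ** 2  # equals cos^2(theta / 2)
print(bar_power, cross_power)
```

Tuning `theta` sets the power split between the two outputs, and `phi` sets a relative phase. Meshes of such blocks can be arranged to realize larger unitary matrices, which is the basis of many reconfigurable coherent designs.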

02 Integrated Photonic Training Circuits

Specialized integrated photonic circuits designed specifically for machine learning training applications. These circuits integrate multiple optical components on a single chip, including waveguides, modulators, photodetectors, and phase shifters to enable efficient on-chip training of neural networks. The integration allows for compact, scalable systems that can perform both inference and training operations while maintaining low power consumption and high processing speeds.

03 Quantum Photonic Training Systems

Hardware systems that combine quantum computing principles with photonic technologies for training machine learning models. These systems utilize quantum properties of light such as superposition and entanglement to perform complex training operations. The architecture includes quantum light sources, quantum gates implemented with optical components, and quantum measurement devices that enable quantum advantage for specific training algorithms and optimization problems.

04 Reconfigurable Photonic Training Hardware

Adaptable photonic systems that can be dynamically reconfigured for different training tasks and neural network architectures. These systems feature programmable optical elements such as spatial light modulators, tunable beam splitters, and reconfigurable optical interconnects that allow for flexible implementation of various training algorithms. The reconfigurability enables optimization of the hardware for specific applications and facilitates hardware-software co-design for machine learning systems.

05 Hybrid Electronic-Photonic Training Platforms

Systems that combine electronic and photonic components to leverage the advantages of both technologies for neural network training. These hybrid platforms use photonics for high-speed matrix operations and data movement while employing electronic components for control, memory, and certain computational tasks. The architecture includes interfaces between electronic and optical domains, co-designed training algorithms that optimize the workload distribution, and specialized hardware for efficient conversion between electronic and optical signals.

Leading Companies and Research Institutions in Photonics

The photonic training hardware for online learning applications market is in its early growth stage, characterized by increasing research activity but limited commercial deployment. The market size is expanding as AI and machine learning applications drive demand for more energy-efficient computing solutions. Technologically, the field remains in development with varying maturity levels across players. Leading companies like Qualcomm and Lightmatter are pioneering commercial photonic computing solutions, while academic institutions including Oxford University, Peking University, and Huazhong University of Science & Technology contribute fundamental research. Traditional technology corporations such as NEC, Hitachi, and Siemens are exploring integration opportunities, while specialized startups like Beijing Lingxi Technology and Beijing Galaxy RainTai are developing niche applications targeting specific market segments.

Huazhong University of Science & Technology

Technical Solution: Huazhong University has pioneered an integrated photonic neural network architecture specifically optimized for online learning applications. Their approach utilizes phase-change materials (PCMs) embedded within silicon photonic waveguides to create tunable synaptic weights that can be rapidly updated during training. The system implements a novel optical backpropagation mechanism that directly modulates optical signals for weight updates, eliminating the electronic bottleneck in traditional systems. Their latest prototype demonstrates on-chip optical gradient calculation with sub-nanosecond response times, enabling real-time adaptation to new data. The architecture incorporates specialized optical interference units that perform matrix multiplications at the speed of light while consuming minimal power. For online learning scenarios, they've developed a hybrid electro-optical control system that balances update precision with energy efficiency.

Strengths: Ultra-fast weight update capability (nanosecond-scale) enabling true online learning; highly energy-efficient compared to electronic implementations; compact integration potential for edge devices.

Weaknesses: Current implementations face challenges with optical crosstalk limiting scalability; precision of optical weight storage still lags behind digital counterparts.

NEC Corp.

Technical Solution: NEC has developed an advanced photonic accelerator platform specifically targeting online learning applications. Their system utilizes coherent optical processing units (COPUs) that leverage phase and amplitude modulation of light to perform neural network computations with unprecedented energy efficiency. For online learning scenarios, NEC's architecture implements a specialized optical feedback path that enables rapid weight updates based on incoming data streams. The system employs proprietary optical memory elements based on phase-change materials that can maintain weight values between updates while allowing fast reconfiguration. Their latest prototype demonstrates the ability to process over 100 billion multiply-accumulate operations per second while consuming less than 2W of power. NEC's architecture also incorporates specialized optical nonlinear elements that implement activation functions directly in the optical domain, eliminating costly optical-electrical-optical conversions that plague many competing designs.

Strengths: Exceptional energy efficiency (approximately 100x better than electronic equivalents); high throughput enabling real-time processing of complex data streams; compact form factor suitable for edge deployment.

Weaknesses: Current implementation requires precise temperature control adding system complexity; limited precision compared to digital systems may affect learning convergence for some applications.
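The throughput and power figures quoted for the NEC prototype can be turned into an energy-per-operation estimate by dividing power by operation rate. Because the text gives both numbers only as limits ("over 100 billion" operations per second, "less than 2W"), the result below is an upper bound rather than a measured value; the helper name is an assumption.

```python
def energy_per_mac_joules(power_watts, macs_per_second):
    """Average energy per multiply-accumulate: power divided by operation rate."""
    return power_watts / macs_per_second

# 2 W is the stated power ceiling; 100e9 MAC/s is the stated throughput floor.
upper_bound = energy_per_mac_joules(2.0, 100e9)
print(f"{upper_bound * 1e12:.0f} pJ per MAC (upper bound)")
```

This works out to at most about 20 pJ per MAC. The true figure could be considerably lower, since the text does not state how far the prototype sits from either limit.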

Key Patents and Innovations in Optical Neural Networks

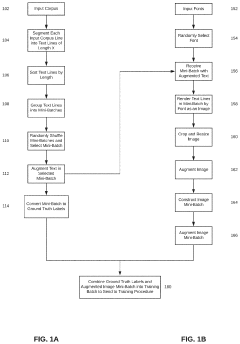

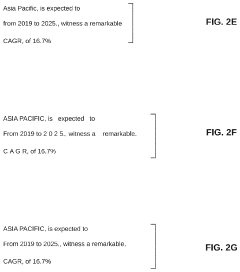

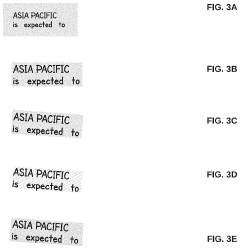

Online training data generation for optical character recognition

Patent · Active · US20210319246A1

Innovation

- The method involves generating training images in CPU memory using asynchronous multi-processing, parallel to the training process in the GPU, allowing for online generation of training data without relying on disk storage, enabling real-time training with varied fonts and image augmentations, thereby reducing overhead and storage needs.

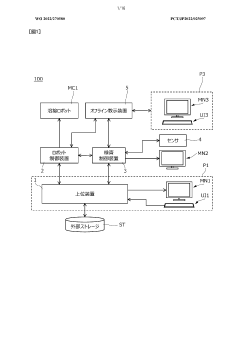

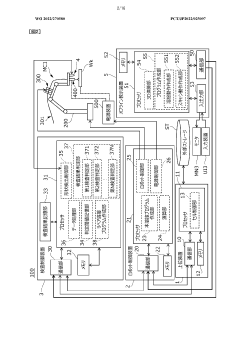

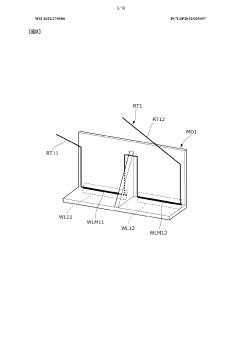

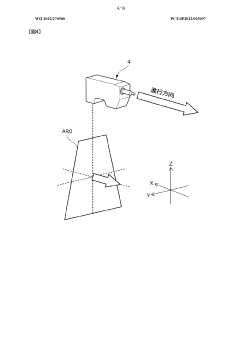

Offline teaching device and offline teaching method

Patent · WO2022270580A1

Innovation

- An offline teaching device and method that include an input unit for operator operations, an acquisition unit for three-dimensional shape data of workpieces, and a generation unit to create a teaching program for welding robots, allowing for the visualization of the scannable range and efficient generation of scan areas based on sensor capabilities.

Energy Efficiency Comparison with Electronic Solutions

Photonic computing systems demonstrate significant energy efficiency advantages over their electronic counterparts, particularly in the context of online learning applications. When comparing the energy consumption metrics, photonic neural networks (PNNs) can achieve theoretical energy efficiencies in the femtojoule per operation range, while state-of-the-art electronic GPUs and TPUs typically operate in the picojoule to nanojoule per operation range. This represents a potential improvement of 2-3 orders of magnitude in energy efficiency.

The fundamental physics behind this efficiency advantage lies in the nature of light propagation. Photonic systems can perform matrix multiplications—the core operation in neural network training—with minimal energy dissipation as photons pass through optical elements. In contrast, electronic systems must move charges through resistive components, inevitably generating heat and consuming energy according to Joule's law.

Recent experimental demonstrations have shown that photonic accelerators can achieve energy efficiencies of approximately 10-100 femtojoules per multiply-accumulate operation (MAC), compared to 1-10 picojoules per MAC in cutting-edge electronic AI accelerators. This efficiency becomes particularly pronounced in online learning scenarios where continuous model updates require sustained computational throughput.
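The size of the gap between the two quoted ranges depends on which ends are compared. A short calculation, using only the per-MAC figures above and a hypothetical helper name, makes the spread explicit.

```python
import math

def orders_of_magnitude(electronic_joules, photonic_joules):
    """Base-10 logarithm of the energy ratio between two per-MAC figures."""
    return math.log10(electronic_joules / photonic_joules)

# Best case for photonics: 10 pJ electronic against 10 fJ photonic.
best_case = orders_of_magnitude(10e-12, 10e-15)
# Worst case for photonics: 1 pJ electronic against 100 fJ photonic.
worst_case = orders_of_magnitude(1e-12, 100e-15)
print(worst_case, best_case)
```

With these ranges the advantage spans roughly one to three orders of magnitude, so a single headline ratio should be read with the underlying ranges in mind.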

The energy scaling characteristics also favor photonic solutions as network complexity increases. While electronic systems face a quadratic increase in energy consumption with matrix dimension, driven by the number of operations and the associated data movement, photonic systems demonstrate more favorable scaling properties, with energy requirements growing closer to linearly with the matrix dimension because the dominant optical costs lie in modulating inputs and detecting outputs rather than in each individual multiplication.
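A toy model helps show the scaling argument for an N x N matrix-vector product. It assumes, purely for illustration, that electronic energy is proportional to the N^2 multiply-accumulates and that photonic energy is dominated by N modulated inputs plus N detected outputs. Laser power, weight programming, and optical loss are ignored, and the per-unit energies are placeholder values.

```python
def electronic_energy(n, e_mac=1e-12):
    # Assumed model: one unit of energy per multiply-accumulate, n * n of them.
    return e_mac * n * n

def photonic_energy(n, e_io=1e-12):
    # Assumed model: energy spent only on n input modulators and n detectors.
    return e_io * 2 * n

def photonic_energy_per_mac(n, e_io=1e-12):
    # The same n * n operations are performed, so cost per MAC falls as 2 / n.
    return photonic_energy(n, e_io) / (n * n)

for n in (64, 256, 1024):
    print(n, electronic_energy(n), photonic_energy(n), photonic_energy_per_mac(n))
```

In this simplified picture, doubling the matrix dimension quadruples the electronic energy but only doubles the photonic energy, so the effective energy per operation shrinks as the matrix grows.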

Temperature management represents another significant advantage for photonic systems. Electronic neural network accelerators often require elaborate cooling solutions that can consume as much energy as the computation itself. Photonic systems generate substantially less heat, reducing or eliminating the need for active cooling in many deployment scenarios, which further widens the total energy efficiency gap.

However, it is important to note that current photonic training hardware still requires electronic components for control, conversion, and certain processing steps. These hybrid electro-optical systems show reduced efficiency compared to theoretical pure-optical implementations. The energy overhead of electro-optical conversions can be substantial, sometimes accounting for 30-50% of the total system energy budget.
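The impact of the conversion overhead follows from simple arithmetic: if conversions take a fraction f of the total system energy, the total is the remaining energy divided by (1 - f). The sketch below applies this to the 30% and 50% figures quoted above; the function name and the normalized energy of 1.0 are assumptions.

```python
def total_system_energy(non_conversion_energy, conversion_fraction):
    """Total energy when conversions consume the given fraction of the total budget."""
    if not 0.0 <= conversion_fraction < 1.0:
        raise ValueError("conversion_fraction must be in [0, 1)")
    return non_conversion_energy / (1.0 - conversion_fraction)

for fraction in (0.3, 0.5):
    # Normalized so that everything other than conversion costs 1.0 unit.
    print(fraction, total_system_energy(1.0, fraction))
```

At a 30% share the system uses about 1.43 times the energy of its non-conversion parts, and at 50% it uses twice as much.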

As manufacturing processes mature and integration density increases, the energy efficiency advantage of photonic solutions is expected to grow further. Emerging technologies such as integrated silicon photonics and novel nonlinear optical materials promise to reduce optical losses and improve energy metrics by another order of magnitude in the next generation of photonic training hardware.

The fundamental physics behind this efficiency advantage lies in the nature of light propagation. Photonic systems can perform matrix multiplications—the core operation in neural network training—with minimal energy dissipation as photons pass through optical elements. In contrast, electronic systems must move charges through resistive components, inevitably generating heat and consuming energy according to Joule's law.

Recent experimental demonstrations have shown that photonic accelerators can achieve energy efficiencies of approximately 10-100 femtojoules per multiply-accumulate operation (MAC), compared to 1-10 picojoules per MAC in cutting-edge electronic AI accelerators. This efficiency becomes particularly pronounced in online learning scenarios where continuous model updates require sustained computational throughput.

The energy scaling characteristics also favor photonic solutions as network complexity increases. While electronic systems face a quadratic increase in energy consumption with matrix size due to data movement costs, photonic systems demonstrate more favorable scaling properties, with energy requirements growing more linearly with the number of network parameters.

Temperature management represents another significant advantage for photonic systems. Electronic neural network accelerators often require elaborate cooling solutions that can consume as much energy as the computation itself. Photonic systems generate substantially less heat, reducing or eliminating the need for active cooling in many deployment scenarios, which further widens the total energy efficiency gap.

However, current photonic training hardware still relies on electronic components for control, signal conversion, and certain processing steps. These hybrid electro-optical systems are less efficient than theoretical all-optical implementations. The energy overhead of electro-optical conversion can be substantial, sometimes accounting for 30-50% of the total system energy budget.
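
The effect of this overhead on the per-operation figure can be estimated with simple arithmetic. The sketch below is a simplified model, not a description of any specific device: it assumes that everything outside conversion counts as core compute energy, and it takes the 10 fJ lower bound quoted earlier as a hypothetical core-only value. The function and constant names are illustrative.

```python
def system_energy_per_mac(core_j_per_mac, conversion_fraction):
    """Total energy per MAC when conversion takes a share of the TOTAL budget.

    Simplifying assumption: total = core + conversion, and
    conversion = conversion_fraction * total, so total = core / (1 - fraction).
    """
    if not 0.0 <= conversion_fraction < 1.0:
        raise ValueError("conversion_fraction must be in [0, 1)")
    return core_j_per_mac / (1.0 - conversion_fraction)


# Assumed for illustration: 10 fJ per MAC treated as core-only energy.
CORE_J_PER_MAC = 10e-15

for fraction in (0.30, 0.50):
    total = system_energy_per_mac(CORE_J_PER_MAC, fraction)
    print(f"conversion share {fraction:.0%}: {total * 1e15:.1f} fJ per MAC")
```

In this model a 30% conversion share raises the total to about 1.43 times the core energy, and a 50% share doubles it.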

Integration Challenges with Existing Digital Infrastructure

The integration of photonic training hardware with existing digital infrastructure presents significant challenges that must be addressed for successful implementation in online learning applications. Current digital systems are predominantly based on electronic components and traditional computing architectures, creating a fundamental compatibility gap with photonic technologies. This disparity necessitates the development of specialized interfaces and conversion mechanisms to enable seamless communication between electronic and photonic domains.

Signal conversion represents a primary challenge, as electronic signals must be efficiently transformed into optical signals and vice versa. This conversion process introduces latency and potential signal degradation, which can compromise the performance advantages inherent to photonic systems. The development of high-speed, low-loss electro-optical converters is therefore critical to maintaining the computational benefits of photonic hardware.
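
One source of the signal degradation mentioned here is the finite resolution of the converters at the electronic-optical boundary. The following Python sketch models this with uniform quantization on both sides of an idealized, noise-free optical dot product. The bit depths, the function names, and the choice of converter full-scale range are hypothetical, and conversion latency is not modeled.

```python
def quantize(value, bits, full_scale=1.0):
    """Snap value to the nearest level of a uniform converter.

    The converter has 2**bits levels spread over [-full_scale, full_scale];
    inputs outside that range are clipped.
    """
    intervals = 2 ** bits - 1
    step = 2.0 * full_scale / intervals
    clipped = max(-full_scale, min(full_scale, value))
    return round((clipped + full_scale) / step) * step - full_scale


def hybrid_dot(weights, inputs, dac_bits, adc_bits):
    """Dot product with inputs sent through a DAC and the result read by an ADC."""
    # Electronic -> optical: inputs are quantized before modulation.
    optical_inputs = [quantize(x, dac_bits) for x in inputs]
    # Idealized optical multiply-accumulate (no loss or noise assumed).
    analog_sum = sum(w * x for w, x in zip(weights, optical_inputs))
    # Optical -> electronic: ADC range chosen to cover the largest possible sum.
    full_scale = sum(abs(w) for w in weights) or 1.0
    return quantize(analog_sum, adc_bits, full_scale)


weights = [0.5, -0.25, 0.75, 0.1]
inputs = [0.3, 0.9, -0.6, 0.2]
exact = sum(w * x for w, x in zip(weights, inputs))

for bits in (4, 8, 12):
    approx = hybrid_dot(weights, inputs, bits, bits)
    print(f"{bits:2d}-bit converters: error = {abs(approx - exact):.6f}")
```

In this model the worst-case error shrinks roughly by half for each added bit of converter resolution, which illustrates why converter quality matters for preserving the accuracy of the photonic computation.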

Data format compatibility poses another significant obstacle. Existing machine learning frameworks and software libraries are designed for electronic computing systems and may not readily support the unique processing capabilities of photonic hardware. Substantial modifications to these frameworks or the development of new middleware solutions are required to translate conventional digital data structures into formats optimized for photonic processing.

Power management considerations also complicate integration efforts. While photonic systems offer potential energy efficiency advantages for specific computational tasks, they may require different power delivery systems and thermal management solutions compared to traditional electronic infrastructure. These differences can necessitate substantial modifications to existing data center designs and cooling systems.

Networking infrastructure presents additional challenges, as photonic training hardware may require specialized optical communication channels that standard electronic networking protocols do not cover. Hybrid networks capable of supporting both electronic and photonic data transmission therefore become essential for maintaining system-wide performance.

Scalability concerns emerge when considering the deployment of photonic training hardware across distributed learning environments. The ability to scale photonic solutions in parallel with existing digital infrastructure requires standardized interfaces and protocols that currently remain underdeveloped. This standardization gap impedes the seamless expansion of photonic capabilities within established digital ecosystems.

Maintenance and reliability considerations further complicate integration efforts. Technical personnel typically possess expertise in electronic systems but may lack specialized knowledge in photonic technologies. This skills gap necessitates comprehensive training programs and the development of diagnostic tools specifically designed for photonic hardware maintenance.
