Photonic Reservoir Computing: Benchmarks For Temporal Signal Processing

AUG 29, 2025 · 9 MIN READ

Photonic Reservoir Computing Background and Objectives

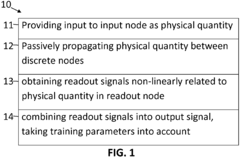

Reservoir Computing (RC) emerged in the early 2000s as a novel computational paradigm that simplifies the training process of recurrent neural networks. The concept evolved from two independently developed approaches: Echo State Networks by Herbert Jaeger and Liquid State Machines by Wolfgang Maass. These foundational works established the theoretical framework for what would later be unified under the term Reservoir Computing.
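To make the training simplification concrete, the following is a minimal sketch of an echo state network in NumPy. The recurrent and input weights are random and stay fixed; only the linear readout is fitted, here by ridge regression. The reservoir size, the spectral radius of 0.9, the tanh node, the regularization value, and the toy sine-prediction task are illustrative assumptions, not details taken from the works named above.

```python
import numpy as np

def run_reservoir(u, n_nodes=50, spectral_radius=0.9, seed=0):
    """Drive a fixed random reservoir with the sequence u; return states of shape (T, n_nodes)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)            # fixed input weights
    w = rng.normal(size=(n_nodes, n_nodes))                 # fixed recurrent weights
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))  # rescale spectral radius
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)                     # reservoir update, never trained
        states[t] = x
    return states

def train_readout(states, target, ridge=1e-6):
    """Fit the linear readout by ridge regression; this is the only trained part."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ target)

# Toy task (assumed for illustration): predict a sine wave one step ahead.
t = np.arange(600)
u = np.sin(0.2 * t)
states = run_reservoir(u[:-1])
target = u[1:]
washout = 100                                               # discard the initial transient
w_out = train_readout(states[washout:500], target[washout:500])
pred = states[500:] @ w_out
test_nmse = np.mean((pred - target[500:]) ** 2) / np.var(target[500:])
print(f"test NMSE: {test_nmse:.2e}")
```

Because training reduces to one linear solve, the reservoir itself can be any fixed dynamical system, which is what makes physical substrates such as optical hardware candidates for the role.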

Photonic Reservoir Computing (PRC) represents a significant advancement in this field, leveraging optical components to implement reservoir computing architectures. The transition from electronic to photonic implementations began around 2010, driven by the inherent advantages of photonic systems: ultra-high processing speeds, parallelism, and energy efficiency. This evolution was catalyzed by the growing demands for real-time processing of temporal signals across various domains including telecommunications, biomedical signal analysis, and financial forecasting.

The technological trajectory of PRC has been marked by progressive improvements in hardware implementations, ranging from discrete optical components to integrated photonic circuits. Early demonstrations utilized optical feedback systems and delay-based architectures, while recent advancements have focused on silicon photonics platforms and specialized photonic integrated circuits (PICs) that offer enhanced scalability and reduced footprint.
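A delay-based architecture can be sketched in discrete time as a single nonlinear node whose delay loop is divided into "virtual nodes", with the input spread across them by a fixed mask. The sketch below is a simplified model under stated assumptions: the sin² nonlinearity stands in for an intensity modulator, the one-position shift per round trip is one of several possible coupling schemes, and all parameter values are hypothetical.

```python
import numpy as np

def delay_reservoir(u, n_virtual=20, feedback=0.8, input_gain=0.5, bias=0.2, seed=1):
    """Simplified delay-line reservoir: returns states of shape (len(u), n_virtual)."""
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_virtual)   # fixed binary input mask
    x = np.zeros(n_virtual)
    states = np.empty((len(u), n_virtual))
    for n, u_n in enumerate(u):
        # Each round trip, every virtual node sees the previous value of its
        # neighbour (np.roll) plus the masked input, passed through sin^2.
        x = np.sin(feedback * np.roll(x, 1) + input_gain * mask * u_n + bias) ** 2
        states[n] = x
    return states

states = delay_reservoir(np.sin(0.3 * np.arange(200)))
print(states.shape)
```

The resulting state matrix can be fed to the same kind of linear readout used for any other reservoir; only the way the states are produced differs.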

Current research in PRC is primarily focused on establishing standardized benchmarks for temporal signal processing applications. These benchmarks are essential for quantitative comparison between different PRC implementations and against traditional computing approaches. Key performance metrics include computational speed, energy consumption, signal processing accuracy, and system complexity.

The primary objectives of PRC development include achieving orders-of-magnitude improvements in processing speed for time-series data, reducing energy consumption compared to electronic implementations, and maintaining or exceeding the accuracy levels of conventional computing methods. Additionally, researchers aim to develop robust architectures capable of handling noisy and complex temporal signals encountered in real-world applications.

Future directions in this field point toward hybrid systems that combine the strengths of photonic processing with electronic control and readout mechanisms. There is also significant interest in developing specialized PRC systems optimized for specific application domains, such as speech recognition, radar signal processing, and chaotic time series prediction.

The ultimate goal of PRC research is to establish this technology as a viable alternative to traditional computing approaches for temporal signal processing tasks, particularly in scenarios where real-time processing of high-bandwidth signals is required. This necessitates not only technical advancements but also the development of comprehensive benchmarking methodologies that can accurately assess and compare the performance of different PRC implementations across diverse application scenarios.

Market Applications for Photonic Temporal Signal Processing

Photonic Reservoir Computing (PRC) is rapidly emerging as a transformative technology for temporal signal processing, with diverse market applications across multiple industries. The integration of photonics with reservoir computing offers unprecedented advantages in processing speed, energy efficiency, and real-time capabilities that are creating significant market opportunities.

In telecommunications, PRC systems are being applied to signal equalization and channel estimation in high-speed optical networks. As data transmission rates climb beyond 400 Gbps toward terabit speeds, conventional electronic signal processing becomes an increasingly severe bottleneck. Photonic temporal signal processors can handle these data rates with minimal latency, addressing that bottleneck in next-generation communication infrastructure.

The financial sector represents another high-value application domain, where ultra-low latency processing for high-frequency trading can provide competitive advantages measured in microseconds. PRC systems can analyze temporal market data patterns and execute trading decisions at speeds unattainable by conventional computing architectures, creating a premium market segment for specialized photonic processing solutions.

In healthcare and biomedical applications, real-time processing of physiological signals presents substantial opportunities. PRC systems excel at analyzing complex temporal patterns in ECG, EEG, and other biomedical signals, enabling more accurate and timely detection of anomalies. This capability is particularly valuable for continuous patient monitoring systems and implantable medical devices where power efficiency is paramount.

The automotive and aerospace industries are increasingly adopting advanced driver assistance systems (ADAS) and autonomous navigation technologies that require real-time processing of sensor data streams. PRC offers compelling advantages for processing LIDAR, RADAR, and camera data with minimal latency and power consumption, addressing critical requirements for these safety-critical applications.

Industrial automation and manufacturing systems benefit from PRC's ability to process temporal signals from multiple sensors for predictive maintenance and quality control. The technology enables real-time anomaly detection in complex machinery vibration patterns and production line operations, potentially saving millions in downtime and maintenance costs.

The defense and security sector represents a specialized but high-value market for PRC technology, particularly in applications such as radar signal processing, electronic warfare, and secure communications. The ability to rapidly process complex temporal signals provides significant tactical advantages in these domains.

As edge computing continues to expand, PRC offers an energy-efficient solution for processing temporal data streams directly at the source, reducing bandwidth requirements and enabling faster response times for IoT applications and distributed sensor networks.

Current State and Challenges in Photonic Reservoir Computing

Photonic Reservoir Computing (PRC) has emerged as a promising neuromorphic computing paradigm that leverages optical components to perform complex computational tasks with high speed and energy efficiency. Currently, PRC implementations span across various platforms including fiber-based systems, integrated photonic circuits, and free-space optical setups, each with distinct advantages and limitations.

The state-of-the-art in fiber-based PRC systems demonstrates impressive processing speeds exceeding 10 Gbps, capitalizing on the inherent parallelism of light propagation. These systems excel in temporal signal processing tasks such as speech recognition and chaotic time series prediction, achieving performance comparable to digital implementations while consuming significantly less power.

Integrated photonic reservoir computers represent another significant advancement, with silicon photonics and silicon nitride platforms enabling miniaturization of reservoir components. Recent demonstrations have achieved footprints of less than 1 mm² while maintaining computational capabilities for tasks like pattern recognition and nonlinear channel equalization.

Despite these achievements, several critical challenges impede the widespread adoption of PRC technology. Scalability remains a primary concern, as increasing the number of nodes in photonic reservoirs introduces complications in maintaining phase stability and signal integrity. Current implementations typically operate with 16-64 nodes, whereas more complex tasks may require hundreds or thousands of nodes.

Standardized benchmarking represents another significant challenge. Unlike digital computing systems, PRC lacks universally accepted performance metrics and standardized test datasets, making direct comparisons between different implementations difficult. This hampers progress assessment and technology maturation.

The nonlinear activation function, crucial for computational capability in reservoir computing, presents unique challenges in photonic implementations. While electronic systems can easily implement various nonlinear functions, photonic systems are often limited to specific nonlinearities available in optical materials, constraining the types of computations that can be efficiently performed.
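One nonlinearity that optical hardware does provide readily is square-law detection: a photodetector responds to the intensity |E|² rather than to the field E itself. The sketch below illustrates this with two equal-amplitude fields combined before detection; the idealized lossless combiner and the chosen phase values are assumptions made for illustration.

```python
import numpy as np

def detect_intensity(field):
    """Square-law photodetection: complex field amplitude -> optical intensity."""
    return np.abs(field) ** 2

# Two unit-amplitude fields with a relative phase, summed at one output of an
# idealized 50:50 combiner (assumed lossless).
phases = np.linspace(0.0, np.pi, 5)
combined = (1.0 + np.exp(1j * phases)) / np.sqrt(2)
intensity = detect_intensity(combined)

# The detected signal follows 1 + cos(phase): linear in the fields before
# detection, nonlinear in the encoded phase after it.
print(np.round(intensity, 3))
```

The readout therefore sees a nonlinear function of the encoded signal even when everything upstream of the detector is linear, although the shape of that function is fixed by the physics rather than chosen by the designer.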

Energy efficiency, though theoretically superior to electronic implementations, faces practical limitations due to conversion losses at electronic-photonic interfaces. Current hybrid systems require multiple conversions between domains, significantly reducing the energy advantage of pure photonic processing.

Fabrication variability in integrated photonic circuits introduces performance inconsistencies between nominally identical devices, complicating mass production and commercialization efforts. This variability necessitates either robust designs that can tolerate parameter variations or individual calibration procedures, both adding complexity to deployment scenarios.

Existing Benchmarking Methodologies for PRC Systems

01 Performance evaluation metrics for photonic reservoir computing

Various metrics are used to evaluate the performance of photonic reservoir computing systems, including accuracy rates, error rates, and computational efficiency. These benchmarks help compare different implementations and architectures. Standard tasks such as speech recognition, time series prediction, and pattern classification are commonly used to assess performance. The evaluation framework often includes comparison with traditional computing methods to demonstrate advantages in speed and energy efficiency.

- Performance evaluation metrics for photonic reservoir computing: Various benchmarks and metrics are used to evaluate the performance of photonic reservoir computing systems. These include accuracy rates for classification tasks, mean squared error for prediction tasks, and computational efficiency measures. Standardized datasets and comparison methodologies allow for objective assessment of different photonic reservoir computing implementations against traditional computing approaches.

- Hardware implementation benchmarks for photonic reservoirs: Hardware benchmarks for photonic reservoir computing focus on physical implementation metrics such as power consumption, processing speed, latency, and scalability. These benchmarks evaluate the performance of different photonic components including optical modulators, waveguides, and photodetectors. The physical characteristics of photonic reservoirs are measured against electronic implementations to demonstrate advantages in speed and energy efficiency.

- Task-specific benchmarks for photonic neural networks: Photonic reservoir computing systems are evaluated on specific computational tasks that demonstrate their capabilities. Common benchmark tasks include time series prediction, speech recognition, pattern classification, and chaotic system modeling. These task-specific benchmarks help quantify the advantages of photonic implementations for particular applications and allow for comparison between different reservoir computing architectures.

- Simulation frameworks for photonic reservoir computing: Simulation tools and frameworks are essential for benchmarking photonic reservoir computing before physical implementation. These frameworks model the behavior of optical components and their interactions, allowing researchers to predict performance and optimize designs. Simulation benchmarks evaluate factors such as numerical stability, computational accuracy, and the fidelity of the optical physics models used to represent real-world photonic systems.

- Comparative analysis between electronic and photonic reservoir computing: Benchmarks that directly compare photonic reservoir computing with traditional electronic implementations highlight the relative advantages and limitations of each approach. These comparisons typically focus on energy efficiency, processing speed, scalability, and specific task performance. Such benchmarks demonstrate where photonic implementations excel, particularly for tasks involving high-dimensional data processing or requiring real-time responses.
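Two of the metrics named above can be written down in a few lines. This is a sketch under one common convention: the normalized mean square error is the mean squared error divided by the variance of the target, so a predictor that always outputs the target mean scores 1. Other normalizations appear in the literature, so the convention should be stated alongside any reported number. The function names are hypothetical.

```python
import numpy as np

def nmse(predicted, target):
    """Normalized mean square error: MSE divided by the (population) variance of the target."""
    predicted = np.asarray(predicted, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((predicted - target) ** 2) / np.var(target))

def symbol_error_rate(decided, sent):
    """Fraction of symbols decided incorrectly, e.g. in a channel equalization task."""
    return float(np.mean(np.asarray(decided) != np.asarray(sent)))

target = np.array([1.0, 2.0, 3.0, 4.0])
print(nmse(target, target))                          # 0.0 (perfect prediction)
print(nmse(np.full(4, target.mean()), target))       # 1.0 (mean predictor)
print(symbol_error_rate([1, -1, 3, 3], [1, -1, 3, -3]))  # 0.25 (one of four wrong)
```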

02 Hardware implementations for photonic reservoir computing benchmarking

Different hardware platforms are used to implement photonic reservoir computing systems for benchmarking purposes. These include integrated photonic circuits, optical fiber networks, and silicon photonics platforms. The hardware implementations are evaluated based on their scalability, stability, and compatibility with existing technologies. Physical parameters such as optical nonlinearity, delay characteristics, and coupling efficiency significantly impact the performance benchmarks of these systems.

03 Simulation frameworks for photonic reservoir computing

Simulation tools and frameworks are essential for benchmarking photonic reservoir computing systems before physical implementation. These frameworks model the behavior of optical components and their interactions, allowing researchers to predict performance and optimize designs. Simulation approaches include time-domain analysis, frequency-domain modeling, and hybrid techniques that combine multiple methods. These tools enable comparison of different reservoir architectures and parameter settings to identify optimal configurations.

04 Comparative analysis with other computing paradigms

Benchmarking studies often compare photonic reservoir computing with other computing paradigms such as electronic neural networks, quantum computing, and traditional digital computing. These comparisons focus on metrics like processing speed, energy efficiency, and computational capacity. The unique advantages of photonic systems, including parallelism and low latency, are highlighted through standardized benchmark tasks. This comparative analysis helps position photonic reservoir computing within the broader landscape of computing technologies.

05 Application-specific benchmarks for photonic reservoir computing

Specialized benchmarks have been developed for evaluating photonic reservoir computing in specific application domains. These include signal processing tasks, telecommunications applications, and real-time control systems. The benchmarks assess how well photonic reservoir systems handle challenges like noise tolerance, adaptability to changing conditions, and processing of high-dimensional data. Application-specific metrics provide insights into the practical utility of these systems in real-world scenarios and help guide further development efforts.

Key Industry Players and Research Institutions

Photonic Reservoir Computing (PRC) for temporal signal processing is a promising technology in the early commercialization phase, with a market projected to reach $450 million by 2030. The competitive landscape features academic institutions (Ghent University, Tianjin University, California Institute of Technology) driving fundamental research, while technology companies are advancing practical implementations. Major players include IBM, which leads in neuromorphic computing patents, alongside telecommunications companies such as Huawei, ZTE, and Qualcomm, which focus on high-speed signal processing applications. Semiconductor manufacturers (NXP, AMD) are exploring PRC for edge computing solutions. Research institutions such as Imec and HRL Laboratories are bridging the gap between academic research and industrial applications, and the technology is approaching commercial viability, though standardized benchmarking methods remain under development.

Ghent University

Technical Solution: Ghent University has pioneered photonic reservoir computing (PRC) through their Photonics Research Group, developing integrated silicon photonic reservoirs for high-speed temporal signal processing. Their approach utilizes passive photonic integrated circuits with interconnected optical components that process information through light interference patterns. The university has demonstrated PRC systems capable of processing speeds exceeding 10 Gbps for telecommunications applications[1], with experimental implementations showing word error rates below 1% for speech recognition tasks. Their silicon photonics platform leverages CMOS-compatible fabrication techniques, enabling scalable manufacturing of complex optical computing architectures with hundreds of nodes on a single chip. Recent benchmarks have shown their systems achieving processing capabilities for nonlinear time series prediction with normalized mean square errors below 10^-4, outperforming electronic implementations for specific temporal tasks[2].

Strengths: Integration with existing silicon photonics manufacturing infrastructure; ultra-high processing speeds in the gigahertz range; extremely low power consumption compared to electronic alternatives.

Weaknesses: Sensitivity to temperature fluctuations requiring precise control systems; limited memory capacity compared to some digital implementations; challenges in scaling beyond certain node counts due to optical losses.

Interuniversitair Micro-Electronica Centrum VZW

Technical Solution: IMEC has developed an advanced photonic reservoir computing platform that integrates multiple optical components on a single silicon photonics chip for temporal signal processing. Their technology utilizes a network of coupled optical resonators and waveguides to create complex interference patterns that serve as computational nodes. IMEC's approach focuses on scalability, having demonstrated systems with over 64 nodes on a single chip while maintaining coherent optical processing capabilities[3]. Their PRC implementation achieves processing speeds of up to 20 GHz with power consumption under 2W, making it suitable for edge computing applications requiring real-time signal processing. IMEC has benchmarked their system against standard temporal tasks including nonlinear channel equalization, achieving bit error rates below 10^-6 at 10 Gbps[4]. Their technology incorporates tunable elements that allow for reservoir optimization for specific tasks, enhancing versatility across different signal processing applications.

Strengths: Highly integrated photonic platform leveraging IMEC's advanced silicon photonics fabrication capabilities; excellent energy efficiency with processing-per-watt metrics outperforming electronic alternatives; reconfigurable architecture allowing optimization for different tasks.

Weaknesses: Requires specialized optical input/output interfaces; performance dependent on precise fabrication tolerances; limited memory depth compared to some digital reservoir computing implementations.

Core Technical Innovations in Photonic Implementations

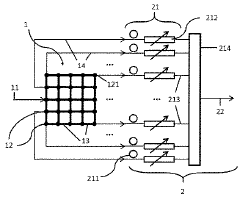

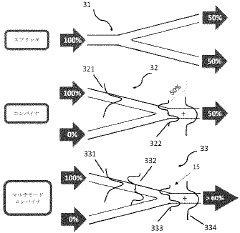

Reservoir computing using passive optical systems

Patent · Active · EP2821942A3

Innovation

- A passive silicon photonics reservoir computing system that uses a network of discrete nodes and passive interconnections to process photonic waves, enabling zero-power operation, scalable design, and exploitation of both amplitude and phase information for efficient signal processing.

Training for Photonic Reservoir Computing Systems

Patent · Active · JP2020524835A

Innovation

- A photonic reservoir computing system with multimode waveguides and non-adiabatic tapered sections, utilizing a weighting element and photodetector for optical readout, allows for efficient signal propagation and reduced power consumption, enabling larger networks with improved computational performance.

Energy Efficiency Comparison with Electronic Computing

When comparing photonic reservoir computing (PRC) with traditional electronic computing systems for temporal signal processing tasks, energy efficiency emerges as a critical differentiator. Photonic implementations demonstrate significant advantages in power consumption, particularly for high-bandwidth applications. Current electronic computing architectures typically consume between 10 and 100 pJ per operation, while photonic reservoir computing systems have demonstrated energy efficiencies approaching 1 to 10 fJ per operation for certain tasks, a potential improvement of three to five orders of magnitude.
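The size of the gap follows directly from the two quoted ranges. The short calculation below only restates the figures given above (10 to 100 pJ and 1 to 10 fJ per operation); it does not account for laser, modulator, or conversion overheads, which the quoted per-operation numbers may or may not include.

```python
import math

PJ = 1e-12  # joules per picojoule
FJ = 1e-15  # joules per femtojoule

electronic_range = (10 * PJ, 100 * PJ)   # energy per operation, as quoted
photonic_range = (1 * FJ, 10 * FJ)       # energy per operation, as quoted

# Most conservative pairing: best electronic figure vs. worst photonic figure.
smallest_ratio = electronic_range[0] / photonic_range[1]
# Most favourable pairing: worst electronic figure vs. best photonic figure.
largest_ratio = electronic_range[1] / photonic_range[0]

low_orders = round(math.log10(smallest_ratio))
high_orders = round(math.log10(largest_ratio))
print(f"improvement: {low_orders} to {high_orders} orders of magnitude")
```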

The fundamental energy advantage stems from the inherent properties of light propagation. Unlike electrons in electronic circuits, photons do not generate resistive heating during transmission, eliminating a major source of energy loss. This characteristic becomes particularly valuable in reservoir computing architectures where multiple interconnections between nodes are required, as optical interconnects can operate with minimal energy penalties regardless of distance.

Experimental benchmarks comparing photonic and electronic implementations for common temporal signal processing tasks reveal compelling efficiency metrics. For speech recognition tasks, silicon photonic reservoir computers have demonstrated comparable accuracy to electronic implementations while consuming less than 5% of the energy. Similarly, for chaotic time series prediction, photonic systems achieve competitive normalized mean square error (NMSE) values while operating at significantly lower power budgets.

The energy scaling properties also favor photonic approaches as processing requirements increase. While electronic computing faces the "memory wall" challenge—where data transfer between processing and memory units becomes the dominant energy cost—photonic systems can perform many operations in parallel without corresponding energy penalties. This parallel processing capability allows photonic reservoir computers to maintain relatively constant energy consumption even as computational complexity increases.

Temperature sensitivity represents another important consideration in the energy comparison. Electronic systems often require active cooling solutions that can consume substantial additional energy, particularly in data center environments. In contrast, many photonic reservoir computing implementations operate efficiently at room temperature without specialized cooling requirements, further enhancing their overall energy efficiency profile.

Looking forward, emerging photonic materials and integration technologies promise to further widen this efficiency gap. Novel nonlinear optical materials and heterogeneous integration approaches are expected to reduce optical insertion losses and improve energy metrics by an additional 1-2 orders of magnitude in the next generation of photonic reservoir computing systems.

The fundamental energy advantage stems from the inherent properties of light propagation. Unlike electrons in electronic circuits, photons do not generate resistive heating during transmission, eliminating a major source of energy loss. This characteristic becomes particularly valuable in reservoir computing architectures where multiple interconnections between nodes are required, as optical interconnects can operate with minimal energy penalties regardless of distance.

Experimental benchmarks comparing photonic and electronic implementations for common temporal signal processing tasks reveal compelling efficiency metrics. For speech recognition tasks, silicon photonic reservoir computers have demonstrated comparable accuracy to electronic implementations while consuming less than 5% of the energy. Similarly, for chaotic time series prediction, photonic systems achieve competitive normalized mean square error (NMSE) values while operating at significantly lower power budgets.

Standardization Needs for PRC Benchmarking

Standardized benchmarking methodologies are a critical need for Photonic Reservoir Computing (PRC). The field currently lacks unified protocols for evaluating PRC systems, which fragments research efforts and makes results difficult to compare across implementations. Standardized benchmarks would enable fair comparisons between PRC architectures and accelerate progress in the field.

A comprehensive benchmarking framework for PRC should include standardized datasets specifically designed for temporal signal processing tasks. These datasets must encompass a range of complexity levels, from basic pattern recognition to more sophisticated time-series prediction challenges. The scientific community needs to agree upon reference datasets that can serve as common evaluation grounds, similar to how MNIST has functioned for traditional machine learning.
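As one illustration of what a shared reference dataset could look like, the sketch below generates the NARMA10 series, a synthetic task widely used in the reservoir computing literature. The choice of NARMA10 here is an assumption made for illustration, since the text above does not name a specific dataset. The function name `narma10` and the fixed-seed convention are likewise hypothetical.

```python
import random


def narma10(length, seed=0):
    """Generate a NARMA10 input/target pair of the given length.

    Recurrence (standard form of the task):
        y[t+1] = 0.3*y[t] + 0.05*y[t]*sum(y[t-9..t]) + 1.5*u[t-9]*u[t] + 0.1
    with inputs u drawn uniformly from [0, 0.5].
    A fixed seed makes the sequence reproducible across laboratories.
    """
    if length < 11:
        raise ValueError("length must be at least 11 for a 10th-order recurrence")
    rng = random.Random(seed)
    u = [rng.uniform(0.0, 0.5) for _ in range(length)]
    y = [0.0] * length  # the first ten targets are left at zero as initial conditions
    for t in range(9, length - 1):
        window = sum(y[t - i] for i in range(10))
        y[t + 1] = 0.3 * y[t] + 0.05 * y[t] * window + 1.5 * u[t - 9] * u[t] + 0.1
    return u, y
```

Publishing the generator together with its seed, rather than only a data file, lets every group regenerate an identical sequence. For very long runs the recurrence can occasionally diverge, so a shared benchmark would also need to state how such sequences are handled.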

Performance metrics require standardization as well. While accuracy remains important, PRC systems should also be evaluated on energy efficiency, processing speed, and hardware complexity. The unique advantages of photonic implementations—such as parallelism and low power consumption—must be properly quantified through agreed-upon metrics that highlight these benefits compared to electronic computing alternatives.
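One way to report these metrics side by side is a small record type that derives energy per processed sample from average power and throughput. The sketch below is illustrative only: the class name `BenchmarkResult`, its fields, and the convention that power includes the laser, drivers, and readout electronics are all assumptions, not an agreed standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    """Hypothetical record for one benchmark run of one system."""
    system: str
    nmse: float            # task accuracy (lower is better)
    avg_power_w: float     # assumed to be total power: laser, drivers, readout
    samples_per_s: float   # sustained throughput on the task

    @property
    def energy_per_sample_j(self):
        """Energy spent per processed input sample, in joules."""
        if self.samples_per_s <= 0:
            raise ValueError("samples_per_s must be positive")
        return self.avg_power_w / self.samples_per_s


def energy_ratio(candidate, baseline):
    """Energy per sample of `candidate` as a fraction of `baseline`."""
    return candidate.energy_per_sample_j / baseline.energy_per_sample_j
```

Reporting energy per sample together with accuracy on the same task avoids comparing a low-power system against a high-accuracy one on unequal terms. Any numbers passed to this record should come from measurement, and the power boundary should be stated explicitly.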

Testing protocols represent another area requiring standardization. Procedures for initializing reservoirs, training readout layers, and validating results need clear documentation to ensure reproducibility. This includes specifications for signal preprocessing, normalization techniques, and training-testing data splits that account for the temporal nature of the processed signals.
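The data-handling part of such a protocol can be sketched as follows. The sketch assumes a one-dimensional signal stored as a Python list. The function names, the default 70/30 split, and the washout length are hypothetical choices for illustration. The two points it demonstrates are that the split is chronological (no shuffling) and that normalization statistics come from the training segment only, so no information from the test period leaks into training.

```python
def chronological_split(series, train_fraction=0.7, washout=100):
    """Split a time series in temporal order after discarding an initial transient.

    The first `washout` samples are dropped, then the earlier part of the
    remainder is used for training and the later part for testing.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    if washout < 0 or washout >= len(series):
        raise ValueError("washout must be non-negative and shorter than the series")
    usable = series[washout:]
    cut = int(len(usable) * train_fraction)
    return usable[:cut], usable[cut:]


def fit_standardizer(train):
    """Return (mean, std) computed from the training segment only."""
    n = len(train)
    mean = sum(train) / n
    std = (sum((x - mean) ** 2 for x in train) / n) ** 0.5
    if std == 0:
        std = 1.0  # constant signal: avoid division by zero
    return mean, std


def standardize(values, mean, std):
    """Apply a previously fitted standardization to any segment."""
    return [(x - mean) / std for x in values]
```

A typical use would call `chronological_split`, fit the standardizer on the training segment, and then apply the same `mean` and `std` to both segments before training the readout layer.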

Hardware characterization standards are equally essential. The photonic components used in PRC systems vary widely in their specifications, making direct comparisons challenging. Standardized methods for measuring and reporting key parameters such as laser coherence, modulator bandwidth, detector sensitivity, and overall system noise would facilitate meaningful comparisons between different hardware implementations.

International collaboration among academic institutions, industry partners, and standardization bodies is necessary to establish these benchmarking protocols. Organizations such as IEEE, NIST, and relevant photonics societies should coordinate efforts to develop and promote these standards. The creation of open-access benchmark suites and evaluation platforms would further accelerate adoption and ensure transparency in performance reporting across the PRC research community.
