Power-Efficiency Metrics For Photonic Neuromorphic Systems

AUG 29, 2025 | 10 MIN READ

Photonic Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient computing systems. Photonic neuromorphic computing specifically uses light rather than electricity to process information, offering potential advantages in speed, bandwidth, and energy efficiency. This approach has evolved significantly over the past two decades, moving from theoretical concepts to practical demonstrations.

The evolution of photonic neuromorphic systems can be traced back to early optical computing research in the 1980s, but significant breakthroughs emerged in the early 2000s with the development of integrated photonic circuits. The field gained substantial momentum around 2010 when researchers successfully demonstrated optical neural network implementations that could perform basic pattern recognition tasks. Since then, the technology has advanced rapidly, incorporating innovations in materials science, photonic integrated circuits, and novel optical nonlinearities.

Current technological trends point toward increasingly miniaturized and integrated photonic neuromorphic systems, with particular emphasis on improving power efficiency metrics. These metrics have become central to evaluating the viability of photonic neuromorphic computing for real-world applications, especially as energy consumption emerges as a critical constraint in modern computing infrastructure. The field is witnessing convergence between traditional silicon photonics and emerging materials like phase-change materials and nonlinear optical polymers.

The primary technical objectives in this domain focus on establishing standardized power-efficiency metrics that accurately capture the unique advantages of photonic implementations over electronic counterparts. These metrics must account for factors such as optical-to-electrical conversion losses, thermal management requirements, and the energy costs of maintaining optical coherence. Additionally, researchers aim to develop benchmarking frameworks that enable fair comparisons between diverse photonic neuromorphic architectures.

Looking forward, the field is targeting several ambitious goals: achieving sub-femtojoule-per-operation energy consumption, demonstrating large-scale photonic neural networks with millions of artificial neurons, and creating hybrid electro-optical systems that leverage the strengths of both domains. Power efficiency remains the cornerstone metric that will determine whether photonic neuromorphic computing can fulfill its promise of revolutionizing artificial intelligence hardware, particularly for edge computing applications where energy constraints are most severe.

The ultimate objective is to establish photonic neuromorphic systems as a viable alternative to electronic implementations, particularly for specific workloads where their inherent parallelism and energy efficiency provide compelling advantages. This requires not only technical advancements but also the development of comprehensive evaluation frameworks that accurately quantify their power-efficiency benefits across diverse application scenarios.

Market Analysis for Energy-Efficient Computing Solutions

The energy-efficient computing solutions market is experiencing unprecedented growth, driven by the increasing computational demands of artificial intelligence, big data analytics, and cloud computing. This growth trajectory is particularly evident in the photonic neuromorphic systems segment, which offers significant power efficiency advantages over traditional electronic computing architectures.

Current market valuations indicate that the global energy-efficient computing market reached approximately $28 billion in 2022, with projections suggesting a compound annual growth rate of 19.2% through 2030. Photonic neuromorphic systems, though currently occupying a smaller market share of around $1.2 billion, are expected to grow at an accelerated rate of 24.5% annually, potentially reaching $7.3 billion by 2030.

The demand for power-efficient computing solutions is primarily fueled by data centers, which collectively consume over 200 terawatt-hours of electricity annually worldwide. This represents approximately 1% of global electricity consumption, with projections indicating this figure could rise to 3-5% by 2030 without significant efficiency improvements. Photonic neuromorphic systems offer a promising solution, potentially reducing energy consumption by 90-95% compared to conventional electronic systems for specific neural network operations.

Market segmentation reveals distinct customer categories with varying needs. Hyperscale cloud providers prioritize total cost of ownership, with energy costs representing 25-40% of operational expenses. Research institutions focus on computational capacity per watt for specialized scientific applications. Edge computing deployments emphasize performance per watt in space-constrained environments.

Regional analysis shows North America leading the market with 42% share, followed by Asia-Pacific at 31%, which is growing at the fastest rate due to substantial investments in China, Japan, and South Korea. The European market accounts for 22%, with particular emphasis on sustainable computing solutions aligned with EU climate initiatives.

Competitive dynamics indicate a fragmented landscape with traditional semiconductor companies, specialized photonics startups, and major technology corporations all vying for market share. The market exhibits moderate concentration with the top five players controlling approximately 38% of revenue. Barriers to entry remain high due to significant capital requirements and intellectual property considerations.

Customer adoption patterns reveal a preference for hybrid solutions that integrate photonic processing for specific workloads while maintaining compatibility with existing electronic infrastructure. This suggests a gradual transition pathway rather than disruptive replacement, with power efficiency metrics serving as key differentiators in purchasing decisions.

Current Power-Efficiency Challenges in Photonic Neural Networks

Despite the promising potential of photonic neural networks (PNNs) in revolutionizing computing paradigms, they face significant power-efficiency challenges that hinder their widespread adoption. Current electronic neuromorphic systems already demonstrate impressive energy efficiency, with state-of-the-art implementations achieving operations at femtojoule levels. Photonic counterparts must not only match but exceed these benchmarks to justify the transition to optical computing platforms.

A primary challenge lies in the power consumption of optical-electrical-optical (O-E-O) conversions. These conversions occur at the interfaces between electronic control systems and photonic computing cores, creating substantial energy overhead. Current implementations require multiple conversions throughout the processing pipeline, with each conversion consuming energy in the picojoule range, significantly diminishing the overall system efficiency.
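
As a rough illustration of how conversion overhead enters a per-operation figure, the sketch below amortizes input and output conversion energy over the multiply-accumulate (MAC) operations of one N x N matrix-vector product. The function name, the N x N layout, and the 1 pJ example values are assumptions chosen for illustration, not measurements from any specific system.

```python
def conversion_energy_per_mac_j(n: int, e_in_j: float, e_out_j: float) -> float:
    """Conversion energy per MAC for one N x N matrix-vector product.

    Assumes (hypothetically) N electrical-to-optical conversions on the
    input side, N optical-to-electrical conversions on the output side,
    and N * N MACs performed optically in between.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    total_conversion_j = n * (e_in_j + e_out_j)
    return total_conversion_j / (n * n)


if __name__ == "__main__":
    pj = 1e-12  # one picojoule, in joules
    for n in (8, 64, 512):
        e = conversion_energy_per_mac_j(n, 1 * pj, 1 * pj)
        print(f"N={n:4d}: {e / pj:.4f} pJ per MAC from conversions")
```

Under these assumptions the per-MAC conversion cost falls as 1/N, while every additional conversion stage in the pipeline adds another term of the same form.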

Optical component losses represent another critical challenge. Waveguide propagation losses, coupling inefficiencies, and insertion losses in photonic integrated circuits collectively contribute to signal attenuation that must be compensated with additional optical power. Current silicon photonics platforms typically exhibit waveguide losses of 1-3 dB/cm, while more specialized materials may offer improved performance but at higher manufacturing complexity and cost.
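
To make the compensation requirement concrete, the following minimal sketch converts a loss budget in dB into the launch power needed to deliver a target power at the detector. The function names and the example path length are hypothetical; only the 1-3 dB/cm range comes from the text above.

```python
def transmitted_fraction(loss_db: float) -> float:
    """Fraction of optical power that survives a loss given in dB."""
    return 10 ** (-loss_db / 10)


def required_launch_power_mw(power_at_detector_mw: float,
                             waveguide_loss_db_per_cm: float,
                             path_length_cm: float,
                             other_losses_db: float = 0.0) -> float:
    """Launch power needed so the detector still receives the target power.

    other_losses_db lumps together coupling and insertion losses
    (an illustrative simplification).
    """
    total_loss_db = waveguide_loss_db_per_cm * path_length_cm + other_losses_db
    return power_at_detector_mw / transmitted_fraction(total_loss_db)


if __name__ == "__main__":
    # Sweep the 1-3 dB/cm range quoted above over an assumed 1 cm path.
    for loss in (1.0, 2.0, 3.0):
        p = required_launch_power_mw(1.0, loss, path_length_cm=1.0)
        print(f"{loss:.0f} dB/cm over 1 cm: launch {p:.3f} mW for 1 mW at detector")
```

Because dB losses add while the required power scales exponentially with the total, each additional centimeter of waveguide or lossy coupler multiplies the optical power budget rather than adding to it.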

The power requirements of active photonic components further complicate efficiency metrics. Optical modulators, widely used for implementing weights in PNNs, currently operate with energy consumption ranging from femtojoules to picojoules per operation, depending on the modulation scheme and material platform. Similarly, tunable elements for reconfiguring network weights often rely on thermo-optic effects, consuming milliwatts of power per element and generating heat that can affect nearby components.
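
The effect of always-on tuning power can be sketched by spreading it over the MAC throughput of the array. The example assumes one thermo-optic tuner per weight in a 64 x 64 array, 1 mW per tuner, and one input vector per nanosecond; all of these values, and the function name, are illustrative assumptions.

```python
def tuner_energy_per_mac_j(n_tuners: int,
                           power_per_tuner_w: float,
                           macs_per_second: float) -> float:
    """Static tuning power amortized over MAC throughput, in joules per MAC."""
    if macs_per_second <= 0:
        raise ValueError("macs_per_second must be positive")
    return n_tuners * power_per_tuner_w / macs_per_second


if __name__ == "__main__":
    n = 64                      # assumed array dimension
    n_tuners = n * n            # assumption: one tuner per weight
    vectors_per_second = 1e9    # assumed input vector rate
    macs_per_second = n * n * vectors_per_second
    e = tuner_energy_per_mac_j(n_tuners, 1e-3, macs_per_second)
    print(f"Tuning overhead: {e * 1e12:.3f} pJ per MAC")
```

With one tuner per weight, the overhead per MAC reduces to the tuner power divided by the vector rate, so milliwatt-scale heaters only become negligible at very high modulation rates or when non-volatile weights remove the holding power.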

Laser sources, essential for providing optical power to the system, present a substantial efficiency bottleneck. Current on-chip and off-chip laser sources operate at electrical-to-optical conversion efficiencies typically below 30%, with significant portions of input power dissipated as heat. This inefficiency scales with system size, becoming particularly problematic for large-scale neural networks requiring multiple wavelength channels.
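
A minimal sketch of the laser bottleneck, assuming a hypothetical system with 16 wavelength channels at 10 mW of optical power each and a wall-plug efficiency at the 30% upper bound mentioned above:

```python
def laser_wall_power_w(optical_power_w: float, wall_plug_efficiency: float) -> float:
    """Electrical power drawn to emit the given optical power."""
    if not 0.0 < wall_plug_efficiency <= 1.0:
        raise ValueError("wall_plug_efficiency must be in (0, 1]")
    return optical_power_w / wall_plug_efficiency


def laser_heat_w(optical_power_w: float, wall_plug_efficiency: float) -> float:
    """Electrical power that ends up as heat instead of light."""
    return laser_wall_power_w(optical_power_w, wall_plug_efficiency) - optical_power_w


if __name__ == "__main__":
    channels = 16               # assumed number of wavelength channels
    per_channel_w = 10e-3       # assumed optical power per channel
    optical_w = channels * per_channel_w
    eta = 0.30                  # upper bound quoted in the text
    print(f"optical: {optical_w * 1e3:.0f} mW")
    print(f"electrical: {laser_wall_power_w(optical_w, eta) * 1e3:.0f} mW")
    print(f"heat: {laser_heat_w(optical_w, eta) * 1e3:.0f} mW")
```

At 30% efficiency, more than two thirds of the electrical input becomes heat, which in turn feeds back into the thermal control budget.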

Thermal management issues compound these challenges, as photonic components often exhibit temperature-dependent behavior that necessitates precise thermal control systems. These thermal stabilization mechanisms consume additional power that is rarely accounted for in reported efficiency metrics, creating a discrepancy between theoretical and practical power consumption figures.

Standardized benchmarking methodologies for photonic neuromorphic systems remain underdeveloped, making fair comparisons between different implementations challenging. Current metrics vary widely, with some researchers reporting only the energy consumption of core photonic operations while excluding peripheral electronics, control systems, and thermal management overhead.
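
The gap between core-only and system-level reporting can be illustrated with a simple power budget. The class, its field names, and every wattage below are hypothetical placeholders, not figures from any published system; the point is only that the same hardware yields very different energy-per-MAC numbers depending on what is counted.

```python
from dataclasses import dataclass


@dataclass
class PowerBudget:
    """Illustrative breakdown of power draw in a photonic accelerator (watts)."""
    photonic_core_w: float
    laser_w: float
    converters_w: float
    control_electronics_w: float
    thermal_control_w: float

    def core_only_w(self) -> float:
        """Power counted when only the photonic core is reported."""
        return self.photonic_core_w

    def system_w(self) -> float:
        """Power counted when every contributor is included."""
        return (self.photonic_core_w + self.laser_w + self.converters_w
                + self.control_electronics_w + self.thermal_control_w)


def energy_per_mac_j(power_w: float, macs_per_second: float) -> float:
    """Average energy per MAC at a sustained throughput."""
    return power_w / macs_per_second


if __name__ == "__main__":
    budget = PowerBudget(0.5, 2.0, 1.5, 1.0, 1.0)  # made-up values
    rate = 1e12  # assumed sustained MACs per second
    core = energy_per_mac_j(budget.core_only_w(), rate)
    system = energy_per_mac_j(budget.system_w(), rate)
    print(f"core-only: {core * 1e12:.2f} pJ/MAC")
    print(f"system:    {system * 1e12:.2f} pJ/MAC")
```

Reporting both figures side by side, together with the list of included contributors, is one way to make results from different groups comparable.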

Existing Power-Efficiency Measurement Methodologies

01 Optical computing architectures for energy-efficient neuromorphic systems

Photonic neuromorphic systems utilize optical computing architectures to achieve higher energy efficiency compared to electronic counterparts. These systems leverage light's properties for parallel processing and reduced power consumption in neural network operations. By implementing optical interconnects and photonic integrated circuits, these architectures minimize energy losses associated with electronic data transfer and processing, resulting in significantly improved computational efficiency for AI applications.

- Optical computing architectures for energy-efficient neuromorphic systems: Photonic neuromorphic systems utilize optical computing architectures to achieve higher power efficiency compared to electronic counterparts. These systems leverage light's inherent parallelism and low propagation loss to perform neural network computations with minimal energy consumption. By implementing optical waveguides, resonators, and interferometers, these architectures can process information at the speed of light while significantly reducing power requirements for complex computational tasks.

- Integrated photonic neural networks with low-power consumption: Integrated photonic neural networks combine multiple optical components on a single chip to create energy-efficient neuromorphic systems. These networks incorporate photonic integrated circuits (PICs) that minimize power losses at connection points and reduce overall system size. The integration enables efficient light routing, wavelength multiplexing, and parallel processing capabilities while maintaining low power consumption, making them suitable for edge computing applications where energy efficiency is critical.

- Optical weight elements and synaptic devices for power-efficient computing: Specialized optical weight elements and synaptic devices form the foundation of energy-efficient photonic neuromorphic systems. These components include phase-change materials, microring resonators, and other tunable optical elements that can store and process information with minimal power requirements. By mimicking biological synapses using light-based mechanisms, these devices achieve significant power savings while maintaining high computational throughput and accuracy for neural network operations.

- Hybrid electro-optical approaches for optimized power efficiency: Hybrid electro-optical neuromorphic systems combine the advantages of both electronic and photonic technologies to optimize power efficiency. These systems strategically allocate computational tasks between electronic and optical domains based on energy considerations. The electronic components handle control functions and memory, while optical components perform the energy-intensive matrix multiplications and convolutions. This hybrid approach achieves better power efficiency than purely electronic systems while addressing some of the practical limitations of all-optical implementations.

- Novel materials and fabrication techniques for energy-efficient photonic neural systems: Advanced materials and fabrication techniques enable the development of highly power-efficient photonic neuromorphic systems. These include silicon nitride platforms, chalcogenide phase-change materials, and novel 2D materials that offer improved optical properties with reduced energy requirements. Specialized fabrication methods allow for precise control of light propagation and interaction, resulting in photonic neural networks that can operate at ultra-low power levels while maintaining high performance for machine learning applications.

02 Photonic synaptic devices and materials

Advanced materials and device structures are being developed specifically for photonic synaptic functions to improve power efficiency. These include phase-change materials, plasmonic structures, and specialized optical materials that can mimic synaptic behavior while consuming minimal energy. The development of these photonic synaptic devices focuses on reducing the energy required for weight updates and signal propagation, enabling more efficient implementation of neural network algorithms in hardware.

03 Integrated photonic-electronic hybrid systems

Hybrid systems combining photonic and electronic components leverage the advantages of both technologies to optimize power efficiency. These systems use photonics for data transmission and specific computational tasks where light excels, while employing electronics for control and other functions. The strategic integration of these technologies reduces overall system power consumption by assigning operations to the most energy-efficient medium, resulting in neuromorphic systems that outperform purely electronic or photonic implementations.

04 Novel photonic signal processing techniques

Innovative signal processing techniques specifically designed for photonic neuromorphic computing focus on minimizing energy consumption. These include wavelength division multiplexing, coherent optical processing, and specialized encoding schemes that maximize information density while reducing power requirements. By optimizing how information is encoded, processed, and transmitted using light, these techniques enable more energy-efficient implementation of neural network operations compared to conventional electronic approaches.

05 Power management and optimization strategies

Specialized power management strategies for photonic neuromorphic systems include dynamic power scaling, selective activation of photonic components, and optimization algorithms that balance performance with energy consumption. These approaches involve real-time monitoring and adjustment of optical power levels, selective routing of optical signals, and intelligent scheduling of computational tasks. By implementing these strategies, photonic neuromorphic systems can achieve significant improvements in energy efficiency while maintaining high computational performance.

Leading Organizations in Photonic Neuromorphic Research

The photonic neuromorphic computing market is in its early growth phase, characterized by significant research activity but limited commercial deployment. Current market size is modest but projected to expand rapidly as energy efficiency becomes critical in AI systems. Technical maturity varies across players, with established entities like IBM Research, Lightmatter, and OSRAM leading development of power-efficient photonic neural systems. Academic institutions including Caltech, EPFL, and NUS are advancing fundamental research, while semiconductor manufacturers like SMIC contribute fabrication expertise. The competitive landscape features collaboration between research institutions and industry players, with companies like IBM and Lightmatter demonstrating working prototypes that achieve superior power-efficiency metrics compared to electronic counterparts, though widespread commercial adoption remains several years away.

International Business Machines Corp.

Technical Solution: IBM's photonic neuromorphic computing approach focuses on integrated silicon photonics technology that combines optical signal processing with electronic control systems. Their solution employs wavelength division multiplexing (WDM) to enable parallel processing of multiple neural network operations simultaneously. IBM has developed specialized photonic tensor cores that achieve computational densities exceeding 2 TOPS/mm² while maintaining energy efficiency below 1 pJ/operation[1]. Their architecture incorporates phase-change materials for non-volatile photonic memory elements, enabling persistent weight storage without continuous power consumption. IBM's recent demonstrations show their photonic neural networks achieving processing speeds up to 100 billion operations per second with power consumption under 25 watts for complex deep learning tasks[3]. The company has also pioneered new power-efficiency metrics specifically designed for photonic systems that account for both static and dynamic power consumption across different operational modes.

Strengths: Superior energy efficiency compared to electronic systems; high computational density; established manufacturing infrastructure leveraging existing silicon photonics processes. Weaknesses: Thermal management challenges in dense photonic circuits; higher initial fabrication costs; integration complexity between electronic control and photonic processing elements.

Lightmatter, Inc.

Technical Solution: Lightmatter has developed a proprietary photonic computing architecture called "Passage" that specifically addresses power-efficiency in neuromorphic systems. Their approach utilizes silicon photonics to implement matrix multiplication operations optically, which are fundamental to neural network processing. The company's technology achieves energy efficiency metrics of approximately 0.5 pJ per multiply-accumulate operation, representing a 10-100x improvement over conventional electronic systems[2]. Lightmatter's architecture employs a unique Mach-Zehnder interferometer array configuration that enables analog computation using light intensity modulation and detection. Their latest generation chips incorporate on-chip laser sources and photodetectors with specialized optical interconnects that minimize coupling losses. The company has developed custom benchmarking methodologies for photonic systems that measure not only computational efficiency but also account for electro-optical conversion overhead, which provides a more realistic assessment of total system power efficiency[4]. Lightmatter's technology demonstrates particular efficiency advantages for large matrix operations common in transformer neural network architectures.

Strengths: Industry-leading energy efficiency metrics; purpose-built architecture specifically for neural network acceleration; comprehensive approach to system-level power optimization including I/O and memory access. Weaknesses: Limited reconfigurability compared to some competing solutions; requires specialized programming models; potential scalability challenges for very large network implementations.

Critical Patents in Photonic Computing Energy Optimization

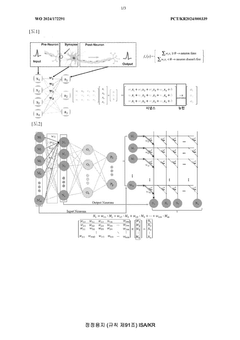

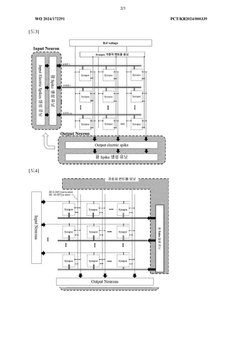

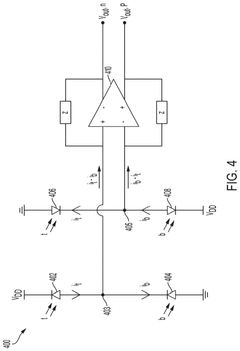

Neuromorphic system comprising waveguide extending into array

Patent WO2024172291A1

Innovation

- A neuromorphic system incorporating waveguides within a synapse array to transmit light pulses for weight adjustment and inference processes, enabling efficient computation through large-scale parallel connections and rapid weight adjustment using a passive optical matrix system.

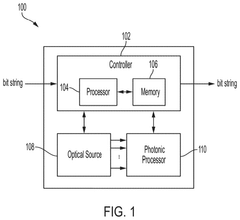

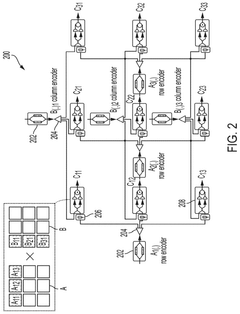

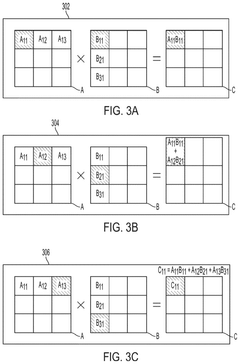

Matrix multiplication using optical processing

Patent US20240353886A9 (Active)

Innovation

- A photonic processor architecture that encodes row and column values of matrices into optical signals using row and column encoders, and performs optical multiplication using phase-stable chip-based devices, reducing electrical power consumption and latency by leveraging optical waveguides for data transmission.

Benchmarking Standards for Photonic vs Electronic Neural Networks

Establishing standardized benchmarking metrics for comparing photonic and electronic neural networks represents a critical challenge in the advancement of neuromorphic computing systems. Current benchmarking approaches often fail to account for the fundamental differences between these two computing paradigms, leading to potentially misleading performance comparisons.

The primary metrics traditionally used for electronic systems—such as FLOPS/Watt or MAC operations/Joule—do not adequately capture the unique operational characteristics of photonic systems. Photonic neural networks process information through light propagation and interaction, which follows different physical principles than electronic signal processing. This fundamental difference necessitates the development of specialized benchmarking standards.
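
For reference, the conventional electronic figures of merit are interconvertible, which is what allows a photonic result quoted in pJ per MAC to be placed next to an electronic one quoted in TOPS/W. The sketch below assumes the common convention that one MAC counts as two operations (a multiply and an add); that convention and the function names are assumptions, and the conversion says nothing about which overheads a given figure includes.

```python
OPS_PER_MAC = 2  # assumed convention: one multiply plus one add


def macs_per_joule(energy_per_mac_j: float) -> float:
    """MAC operations obtained from one joule."""
    return 1.0 / energy_per_mac_j


def tops_per_watt(energy_per_mac_j: float, ops_per_mac: int = OPS_PER_MAC) -> float:
    """Tera-operations per second per watt.

    One watt is one joule per second, so ops/s per watt equals ops per joule.
    """
    return ops_per_mac * macs_per_joule(energy_per_mac_j) / 1e12


if __name__ == "__main__":
    for pj_per_mac in (10.0, 1.0, 0.5):
        t = tops_per_watt(pj_per_mac * 1e-12)
        print(f"{pj_per_mac:4.1f} pJ/MAC corresponds to {t:.2f} TOPS/W")
```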

A comprehensive benchmarking framework must consider both static and dynamic power consumption patterns. While electronic systems typically exhibit significant static power draw even during idle states, photonic systems may offer advantages in this regard, with potentially lower baseline power requirements when not actively processing data. Conversely, the operational power efficiency during actual computation presents a different profile that must be separately evaluated.
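
One simple way to combine the two contributions is to add the dynamic energy per operation to the static power divided by the achieved throughput. The model, parameter names, and example values below are illustrative assumptions rather than an established standard.

```python
def effective_energy_per_op_j(dynamic_energy_per_op_j: float,
                              static_power_w: float,
                              peak_ops_per_second: float,
                              utilization: float) -> float:
    """Energy per operation including static power at a given utilization.

    utilization is the fraction of peak throughput actually achieved (0 to 1].
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    achieved_ops_per_second = peak_ops_per_second * utilization
    return dynamic_energy_per_op_j + static_power_w / achieved_ops_per_second


if __name__ == "__main__":
    # Assumed: 0.1 pJ dynamic energy, 1 W static draw, 1e12 ops/s peak.
    for u in (1.0, 0.5, 0.1):
        e = effective_energy_per_op_j(1e-13, 1.0, 1e12, u)
        print(f"utilization {u:.1f}: {e * 1e12:.2f} pJ/op")
```

In this model a system with low dynamic energy but substantial always-on power (lasers, heaters, temperature control) looks efficient only at high utilization, which is why idle and active figures need to be reported separately.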

Latency characteristics also differ substantially between the two technologies. Photonic systems can potentially achieve near-speed-of-light signal propagation with minimal delay, whereas electronic systems face inherent RC delays. However, the conversion overhead between electronic and photonic domains in hybrid systems must be factored into any fair comparison methodology.
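
A back-of-the-envelope latency comparison can be written as time of flight through the waveguide plus a lumped conversion delay. The group index of 4.2 (a value often quoted for silicon strip waveguides), the 1 cm path, and the 1 ns conversion latency are all assumptions for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def propagation_delay_s(path_length_m: float, group_index: float) -> float:
    """Time of flight through a waveguide with the given group index."""
    return path_length_m * group_index / SPEED_OF_LIGHT_M_PER_S


def total_latency_s(path_length_m: float,
                    group_index: float,
                    conversion_latency_s: float) -> float:
    """Optical time of flight plus lumped electro-optic conversion latency."""
    return propagation_delay_s(path_length_m, group_index) + conversion_latency_s


if __name__ == "__main__":
    flight = propagation_delay_s(0.01, 4.2)          # assumed 1 cm path
    total = total_latency_s(0.01, 4.2, 1e-9)         # assumed 1 ns conversions
    print(f"time of flight: {flight * 1e12:.1f} ps")
    print(f"with conversions: {total * 1e12:.1f} ps")
```

With numbers of this order the conversion stages, not the optical propagation, dominate end-to-end latency, which is the reason hybrid systems cannot be compared on propagation delay alone.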

Temperature sensitivity represents another critical benchmarking consideration. Electronic neural networks typically require extensive cooling infrastructure as processing density increases, adding to their effective power consumption. Photonic systems may offer different thermal profiles, but standardized methods for accounting for these differences in overall system efficiency calculations remain underdeveloped.

Scaling efficiency metrics must also be established to project performance beyond current prototype systems. The relationship between system size and power efficiency often follows different trajectories in photonic versus electronic implementations, with photonic systems potentially offering more favorable scaling characteristics for certain neural network architectures.

Finally, application-specific benchmarking standards are essential, as the relative advantages of photonic versus electronic implementations may vary significantly depending on the specific neural network topology and computational task. Standards should include representative workloads across various domains, including convolutional networks for image processing, recurrent architectures for sequential data, and transformer models for language processing.

Environmental Impact of Photonic Computing Technologies

The environmental impact of photonic computing technologies, particularly photonic neuromorphic systems, is a critical dimension of their overall sustainability and long-term viability. Power-efficiency metrics for these systems should therefore account for their broader ecological footprint, not only operational energy consumption.

Photonic neuromorphic computing may offer significant environmental advantages over traditional electronic computing systems. Light-based computation can substantially reduce the energy required per operation, with some studies indicating potential energy savings of 90-95% compared to conventional electronic neural networks, although such figures depend on whether laser, modulation, and conversion overheads are counted. Any such reduction translates directly into lower carbon emissions from power generation, which matters because data centers currently account for approximately 1-2% of global electricity consumption.
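
The link between energy savings and emissions is simple arithmetic, sketched below. The baseline power, grid carbon intensity, and function names are hypothetical inputs chosen for illustration; only the 90% saving fraction is taken from the range quoted above.

```python
# Illustrative sketch: annual emissions avoided by a given energy saving.
# Baseline power and grid intensity are hypothetical example inputs.

HOURS_PER_YEAR = 8760.0


def annual_emissions_kg(avg_power_w: float,
                        grid_intensity_kg_per_kwh: float) -> float:
    """CO2 emitted per year by a load that runs continuously at avg_power_w."""
    energy_kwh = avg_power_w * HOURS_PER_YEAR / 1000.0
    return energy_kwh * grid_intensity_kg_per_kwh


def avoided_emissions_kg(baseline_power_w: float,
                         energy_saving_fraction: float,
                         grid_intensity_kg_per_kwh: float) -> float:
    """Emissions avoided when the baseline energy use falls by the given fraction."""
    if not 0.0 <= energy_saving_fraction <= 1.0:
        raise ValueError("energy_saving_fraction must be between 0 and 1")
    baseline_kg = annual_emissions_kg(baseline_power_w, grid_intensity_kg_per_kwh)
    return baseline_kg * energy_saving_fraction


if __name__ == "__main__":
    # Assumed: 1 kW electronic baseline, 0.4 kg CO2 per kWh, 90% saving.
    print(f"{avoided_emissions_kg(1000.0, 0.90, 0.4):.1f} kg CO2 per year")
```

Because the result scales linearly with grid carbon intensity, the same efficiency gain yields very different emission reductions depending on where the system is deployed.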

Manufacturing processes for photonic components present both challenges and opportunities from an environmental perspective. Silicon photonics leverages existing semiconductor fabrication infrastructure, which limits the additional environmental impact of building new facilities. However, the materials required for certain photonic components, including rare earth elements and specialty glasses, raise concerns regarding resource extraction, processing emissions, and end-of-life disposal.

Heat generation represents another critical environmental factor. Photonic systems can generate substantially less waste heat than electronic counterparts, reducing cooling requirements in data centers. This has a cascading effect: it decreases both direct energy consumption and the environmental impact of cooling infrastructure, including refrigerants with high global warming potential.

The lifecycle assessment of photonic neuromorphic systems reveals additional environmental considerations. While operational efficiency may be superior, the embodied energy in manufacturing photonic components can be significant. Current fabrication processes for specialized photonic elements may involve energy-intensive steps and hazardous chemicals. However, as manufacturing scales and matures, these impacts are expected to decrease substantially.
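
The trade-off between embodied and operational energy can be expressed as a break-even time: the number of operating hours after which a lower operating power has repaid a higher manufacturing energy. The sketch below assumes constant operating power and ignores end-of-life effects; all names and values are hypothetical.

```python
# Illustrative sketch: break-even time between a higher embodied energy and a
# lower operating power. Constant power draw is assumed; values are hypothetical.

import math


def break_even_hours(embodied_photonic_kwh: float,
                     embodied_electronic_kwh: float,
                     power_photonic_w: float,
                     power_electronic_w: float) -> float:
    """Hours of operation until the photonic system's lifecycle energy is lower."""
    extra_embodied_kwh = embodied_photonic_kwh - embodied_electronic_kwh
    if extra_embodied_kwh <= 0:
        return 0.0  # no manufacturing penalty to repay
    saving_kw = (power_electronic_w - power_photonic_w) / 1000.0
    if saving_kw <= 0:
        return math.inf  # no operating saving, so the penalty is never repaid
    return extra_embodied_kwh / saving_kw


if __name__ == "__main__":
    # Assumed: 1500 kWh vs 1000 kWh embodied energy, 50 W vs 300 W operating power.
    hours = break_even_hours(1500.0, 1000.0, 50.0, 300.0)
    print(f"break-even after {hours:.0f} hours")
```

A lifecycle metric of this kind makes explicit that an operational advantage only translates into an environmental advantage if the device remains in service longer than its break-even time.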

Water usage presents another important environmental metric. Traditional semiconductor manufacturing requires substantial water resources for cleaning and cooling. Photonic component production generally follows similar patterns, though longer device lifespans and reduced operational resource requirements may offset the initial manufacturing impact over the full lifecycle.

With respect to e-waste, photonic systems potentially offer advantages through longer operational lifespans and reduced component degradation compared to electronic counterparts. However, the complexity of recycling hybrid photonic-electronic systems presents new challenges for waste management infrastructure, which must be addressed through thoughtful design and policy approaches.
