Photonic Accelerators For Graph Neural Networks: Architectural Considerations

AUG 29, 2025 · 9 MIN READ

Photonic GNN Accelerators Background and Objectives

Graph Neural Networks (GNNs) have emerged as powerful tools for analyzing and processing graph-structured data, with applications spanning from social network analysis to molecular modeling. However, the computational demands of GNNs present significant challenges for traditional electronic computing architectures, particularly as graph sizes and model complexities increase. Photonic computing, leveraging light for information processing, offers a promising alternative with potential advantages in speed, energy efficiency, and parallelism.

The evolution of photonic computing technologies has progressed significantly over the past decade, transitioning from theoretical concepts to practical implementations. Early photonic computing systems focused primarily on matrix multiplication operations, but recent advancements have expanded capabilities to address the specific computational patterns required by graph neural networks, including sparse matrix operations and non-linear transformations.

Current research in photonic accelerators for GNNs is driven by the increasing demand for efficient processing of large-scale graph data in real-time applications. Industries such as telecommunications, pharmaceutical research, and financial technology require rapid analysis of complex network structures that exceed the capabilities of conventional electronic processors in terms of both speed and energy consumption.

The technical objectives for photonic GNN accelerators span three dimensions. The first is higher computational throughput than electronic counterparts, with targets often cited in the 10-100x range for specific GNN operations. The second is lower energy consumption, with goals of one to two orders of magnitude less energy per operation. The third is an architecture that can effectively handle the irregular memory access patterns characteristic of graph processing workloads.

The integration of photonic components with existing electronic systems presents both challenges and opportunities. Hybrid electro-photonic architectures are emerging as a pragmatic approach, utilizing photonic elements for computation-intensive operations while maintaining electronic components for control and memory functions. This integration strategy aims to leverage the strengths of both technologies while mitigating their respective limitations.

Looking forward, the technical trajectory for photonic GNN accelerators is focused on addressing key challenges including the implementation of efficient optical non-linear functions, development of high-density photonic integrated circuits, and creation of programming models that abstract the complexities of the underlying photonic hardware. Success in these areas could potentially revolutionize the processing of graph-structured data across numerous domains.

Market Analysis for Photonic Computing in Graph Neural Networks

The photonic computing market for Graph Neural Networks (GNNs) is experiencing significant growth, driven by the increasing complexity of graph-based data processing requirements across multiple industries. Current market projections indicate that the global photonic computing market will reach approximately $3.8 billion by 2035, with GNN applications potentially representing 15-20% of this emerging sector.

The demand for photonic accelerators in GNN applications stems primarily from industries handling massive interconnected datasets. Financial services lead adoption for fraud detection and risk assessment models, where graph structures naturally represent transaction networks. Telecommunications providers utilize GNNs for network optimization and anomaly detection across vast infrastructure graphs, creating substantial market pull for high-performance computing solutions.

Pharmaceutical and life sciences companies represent another major market segment, leveraging GNNs for drug discovery and molecular interaction modeling. The computational demands of these applications, which often involve processing molecular graphs with millions of nodes, create a compelling use case for photonic acceleration technologies that can process graph operations in parallel.

Social media platforms and recommendation systems constitute a rapidly growing market segment, as these companies process enormous user interaction graphs requiring real-time inference. The latency and energy efficiency advantages of photonic computing align perfectly with these requirements, potentially reducing operational costs by 40-60% compared to electronic alternatives.

Market analysis reveals significant regional variations in adoption potential. North America currently leads in research investment and early commercial applications, with approximately 45% of market activity. Asia-Pacific follows with 30%, showing accelerated growth particularly in China and Japan where government initiatives specifically target photonic computing development.

The market structure remains predominantly enterprise-focused, with cloud service providers emerging as key customers who can amortize the high initial investment costs across multiple applications. This creates a potential "compute-as-a-service" market model for photonic GNN acceleration.

Customer pain points driving market demand include power consumption limitations in data centers, processing bottlenecks for large-scale graphs, and latency requirements for real-time applications. Photonic accelerators address these challenges through inherent parallelism and energy efficiency, potentially offering 100-1000x improvements in specific GNN operations compared to electronic alternatives.

Market adoption faces barriers including high initial capital expenditure, integration challenges with existing electronic systems, and the need for specialized programming models. These factors suggest a phased market penetration strategy beginning with high-value applications where performance advantages clearly outweigh transition costs.

Current Challenges in Photonic GNN Acceleration

Despite the promising potential of photonic accelerators for Graph Neural Networks (GNNs), several significant challenges impede their widespread adoption and practical implementation. The primary obstacle lies in the architectural complexity required to efficiently map graph operations onto photonic circuits. Unlike traditional neural networks with regular computational patterns, GNNs involve irregular graph structures with varying node degrees and connectivity patterns, making them inherently difficult to parallelize on photonic hardware.
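To make the irregularity problem concrete, the following minimal sketch estimates how much of a fixed-width compute tile carries real data when neighbor lists of different lengths are padded to fit it. The function name `tile_utilization`, the tile width of 8, and both degree lists are hypothetical and chosen only for illustration; real photonic hardware may schedule work quite differently.

```python
from math import ceil

def tile_utilization(degrees, tile_width):
    """Fraction of tile input slots carrying real neighbor data when each
    node's neighbor list is padded up to a multiple of tile_width."""
    used = sum(degrees)
    allocated = sum(ceil(d / tile_width) * tile_width for d in degrees)
    return used / allocated if allocated else 0.0

# Hypothetical degree lists with the same total edge count (64).
regular = [8] * 8                    # every node has 8 neighbors
skewed = [1, 1, 2, 2, 3, 3, 4, 48]   # a few hubs, many low-degree nodes

print("regular:", tile_utilization(regular, 8))
print("skewed: ", round(tile_utilization(skewed, 8), 3))
```

Under these assumptions the skewed graph leaves a large share of slots filled with padding, which is one simple way to see why degree variation hurts utilization on fixed-size parallel hardware.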

Signal integrity presents another critical challenge, as photonic systems are susceptible to various noise sources including thermal fluctuations, laser phase noise, and detector shot noise. These factors can significantly degrade computational accuracy, particularly in deep GNN architectures where errors propagate and amplify through multiple layers of graph convolutions.

The dynamic range limitations of photonic components further constrain GNN acceleration capabilities. Current photodetectors and modulators typically offer only 8-10 bits of effective resolution, which proves insufficient for many GNN applications requiring higher precision, especially in scientific computing and financial modeling domains.
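The effect of limited resolution can be explored with a small numerical experiment. The sketch below applies a uniform quantizer to both operands of a dot product and reports the mean absolute error at several bit depths. It models only quantization, not analog noise, and the quantizer, vector length, and value range are illustrative assumptions rather than properties of any specific device.

```python
import random

def quantize(x, bits, x_max=1.0):
    """Uniform quantizer on [-x_max, x_max] with 2**bits levels (values are clipped)."""
    levels = 2 ** bits - 1
    step = 2 * x_max / levels
    clipped = max(-x_max, min(x_max, x))
    return round((clipped + x_max) / step) * step - x_max

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mean_abs_error(bits, n=64, trials=100, seed=0):
    """Average |exact - quantized| dot-product error over random vectors."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        a = [rng.uniform(-1, 1) for _ in range(n)]
        w = [rng.uniform(-1, 1) for _ in range(n)]
        a_q = [quantize(v, bits) for v in a]
        w_q = [quantize(v, bits) for v in w]
        total += abs(dot(a, w) - dot(a_q, w_q))
    return total / trials

for bits in (4, 8, 12):
    print(bits, "bits -> mean abs error", mean_abs_error(bits))
```

Whether the error at 8-10 bits is acceptable depends on the model and task, so a measurement of this kind is best repeated on the actual GNN workload.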

Power consumption remains a concern despite the theoretical energy efficiency of photonics. While individual optical operations are energy-efficient, the overhead from electrical-to-optical and optical-to-electrical conversions at the system boundaries can negate these advantages, particularly for smaller GNN models or sparse graph structures.
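A simple accounting model illustrates why conversion overhead matters most for small problems. For an n x n matrix-vector product, the sketch below charges n*n optical multiply-accumulates plus n input and n output conversions, so the conversion cost per MAC falls as 2/n. The energy values (1 fJ per optical MAC, 1000 fJ per conversion) are placeholders for illustration, not measured figures.

```python
def energy_per_mac(n, e_optical_fj, e_conversion_fj):
    """Effective energy per MAC (fJ) for an n x n matrix-vector product:
    n*n optical MACs plus n input and n output domain conversions."""
    macs = n * n
    conversions = 2 * n
    return (macs * e_optical_fj + conversions * e_conversion_fj) / macs

# Hypothetical device energies, chosen only to show the trend.
E_OPTICAL_FJ = 1.0
E_CONVERSION_FJ = 1000.0

for n in (8, 64, 256):
    print(n, energy_per_mac(n, E_OPTICAL_FJ, E_CONVERSION_FJ), "fJ/MAC")
```

With these placeholder numbers the conversions dominate at n = 8 and are largely amortized at n = 256, matching the qualitative point that small or sparse workloads benefit least.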

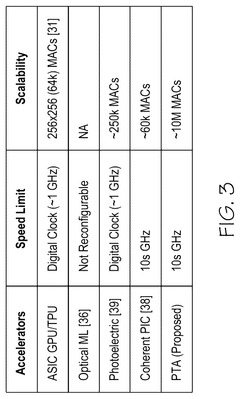

Scalability issues emerge when implementing large-scale GNNs on photonic platforms. Current integrated photonic chips face limitations in the number of components that can be reliably fabricated on a single die, restricting the size and complexity of GNNs that can be accelerated without resorting to multi-chip solutions with additional interconnect overhead.

Memory bandwidth constraints pose significant bottlenecks, as graph processing is inherently memory-intensive. The challenge of efficiently feeding graph data to photonic computing elements often results in I/O-bound operations that fail to fully utilize the computational capabilities of the photonic hardware.
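The I/O-bound behavior can be estimated with the standard roofline model, where attainable throughput is the smaller of the compute peak and memory bandwidth multiplied by arithmetic intensity. The sketch below is a minimal version; the peak, bandwidth, and intensity values are hypothetical and do not describe any particular accelerator.

```python
def attainable_tops(peak_tops, bandwidth_gb_per_s, ops_per_byte):
    """Roofline bound in TOPS: min(compute peak, bandwidth * arithmetic intensity)."""
    memory_bound_tops = bandwidth_gb_per_s * 1e9 * ops_per_byte / 1e12
    return min(peak_tops, memory_bound_tops)

# Hypothetical accelerator: 100 TOPS peak, 1000 GB/s memory bandwidth.
PEAK_TOPS = 100.0
BANDWIDTH_GB_PER_S = 1000.0

# Sparse neighbor aggregation tends to have low arithmetic intensity,
# dense feature transforms a much higher one (values are illustrative).
print("aggregation:", attainable_tops(PEAK_TOPS, BANDWIDTH_GB_PER_S, 0.5))
print("dense:      ", attainable_tops(PEAK_TOPS, BANDWIDTH_GB_PER_S, 200.0))
```

In this illustration the low-intensity kernel reaches only a small fraction of the peak, regardless of how fast the photonic core itself is.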

Programming models and compiler support for photonic GNN accelerators remain underdeveloped. The lack of standardized abstractions and optimization techniques specifically designed for graph operations in the photonic domain creates substantial barriers for algorithm developers seeking to leverage these novel architectures.

Temperature sensitivity of photonic components necessitates precise control systems, adding complexity and cost to practical implementations. Even minor temperature variations can cause wavelength drift in optical components, potentially disrupting the precise interference patterns required for accurate matrix operations in GNN processing.
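As a rough sense of scale, the first-order resonance shift of a silicon resonator can be approximated as Δλ ≈ λ · (dn/dT) · ΔT / n_g. The sketch below evaluates this and compares the shift to a resonance linewidth of λ / Q. The thermo-optic coefficient, group index, and quality factor are assumed typical-order values for silicon near 1550 nm, not figures from this article, and the formula ignores mode confinement and cladding effects.

```python
def resonance_shift_nm(wavelength_nm, dn_dt_per_k, group_index, delta_t_k):
    """First-order thermal resonance shift: lambda * (dn/dT) * dT / n_g."""
    return wavelength_nm * dn_dt_per_k * delta_t_k / group_index

def linewidth_nm(wavelength_nm, quality_factor):
    """Full width at half maximum of a resonance with the given Q."""
    return wavelength_nm / quality_factor

# Assumed illustrative values (not taken from the article).
WAVELENGTH_NM = 1550.0
DN_DT_PER_K = 1.8e-4      # approximate thermo-optic coefficient of silicon
GROUP_INDEX = 4.2         # assumed group index of a silicon strip waveguide
QUALITY_FACTOR = 10_000   # hypothetical resonator Q

shift = resonance_shift_nm(WAVELENGTH_NM, DN_DT_PER_K, GROUP_INDEX, 1.0)
width = linewidth_nm(WAVELENGTH_NM, QUALITY_FACTOR)
print("shift per kelvin (nm):", round(shift, 4))
print("shift / linewidth:    ", round(shift / width, 3))
```

Under these assumptions a change of a kelvin or two moves the resonance by an amount comparable to its linewidth, which is why active thermal stabilization is usually considered necessary.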

Existing Photonic Architectural Solutions for GNNs

01 Optical computing architectures for GNN acceleration

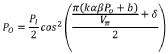

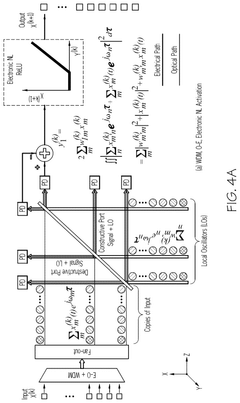

Photonic accelerators can be designed with specialized optical computing architectures to efficiently process graph neural networks. These architectures leverage optical components such as waveguides, resonators, and interferometers to perform matrix operations and graph convolutions in the optical domain. By exploiting the parallelism of light propagation, these systems can achieve significant speedup compared to electronic implementations while reducing energy consumption for GNN computations.

- Optical computing architectures for GNN acceleration: Photonic accelerators leverage optical computing architectures to process graph neural networks more efficiently than traditional electronic systems. These architectures utilize optical components such as waveguides, resonators, and interferometers to perform matrix operations in the optical domain, which are fundamental to GNN computations. The parallel nature of light propagation enables simultaneous processing of multiple graph nodes and edges, significantly reducing computation time compared to sequential electronic processing.

- Integration of photonic and electronic components: Hybrid photonic-electronic systems combine the advantages of both domains for GNN acceleration. These architectures integrate photonic processing units for high-speed matrix operations with electronic components for control logic and memory access. The interface between optical and electronic domains is carefully designed to minimize conversion losses and latency. This hybrid approach allows for flexible deployment of GNNs across different hardware platforms while maintaining energy efficiency and computational speed.

- Specialized optical components for graph operations: Custom optical components are designed specifically for graph operations in GNNs. These include specialized photonic circuits for neighborhood aggregation, node feature transformation, and message passing functions that are central to GNN algorithms. Optical mesh networks and programmable photonic integrated circuits (PICs) enable reconfigurable implementations of various GNN architectures. These components are optimized for energy efficiency while maintaining the high bandwidth necessary for processing large graphs.

- Coherent light processing for neural network computation: Coherent light processing techniques utilize phase, amplitude, and polarization of light to represent and manipulate neural network parameters. These approaches enable complex-valued computations that can enhance the representational capacity of GNNs. Coherent optical systems implement matrix multiplications through interference patterns and can perform nonlinear activation functions using optical nonlinearities or hybrid optical-electronic approaches. This enables efficient implementation of the message passing and feature transformation operations required in GNNs.

- Scalability and energy efficiency considerations: Architectural designs for photonic GNN accelerators address scalability challenges for processing large-scale graphs. These include modular approaches that partition graph processing across multiple photonic chips, wavelength division multiplexing to increase computational density, and specialized memory hierarchies for efficient graph data access. Energy efficiency is optimized through techniques such as optical power management, selective activation of photonic components, and algorithm-hardware co-design that minimizes optical-electronic conversions.
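The division of labor described in the list above can be sketched as a single message-passing layer. In this minimal example, neighbor aggregation and the ReLU run as ordinary code (standing in for the electronic side), while `photonic_matvec` is a hypothetical placeholder for the dense product a photonic mesh would compute. Mean aggregation with a self loop is one common choice and is assumed here; it is not presented as the method of any system named in this article.

```python
def photonic_matvec(weights, x):
    """Hypothetical stand-in for the dense matrix-vector product
    that would be offloaded to a photonic mesh."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def gnn_layer(adjacency, features, weights):
    """One message-passing layer: mean-aggregate, transform, apply ReLU."""
    out = []
    for node, neighbors in enumerate(adjacency):
        group = [node] + list(neighbors)  # self loop plus neighbors
        dim = len(features[node])
        # Aggregation: irregular, memory-bound work kept in the electronic domain.
        mean = [sum(features[j][k] for j in group) / len(group) for k in range(dim)]
        # Feature transformation: regular dense work suited to the photonic core.
        transformed = photonic_matvec(weights, mean)
        # Nonlinearity applied electronically after detection.
        out.append([max(0.0, v) for v in transformed])
    return out

# Tiny path graph 0 - 1 - 2 with two features per node (illustrative data).
adjacency = [[1], [0, 2], [1]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = [[1.0, -1.0], [0.5, 0.5]]

for row in gnn_layer(adjacency, features, weights):
    print(row)
```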

02 Photonic tensor cores for graph processing

Specialized photonic tensor cores can be integrated into accelerators to handle the unique computational patterns of graph neural networks. These cores utilize coherent light manipulation to perform tensor operations required in GNN processing, such as aggregation and transformation of node features. The photonic tensor cores can be configured to efficiently handle sparse graph structures and irregular memory access patterns that are common in graph neural network workloads.

03 Hybrid electronic-photonic integration for GNN systems

Hybrid architectures combining electronic and photonic components can address the challenges of implementing complete GNN systems. These designs typically use photonic elements for computation-intensive operations like matrix multiplications, while electronic components handle control logic, memory management, and non-linear operations. This hybrid approach balances the speed and energy efficiency advantages of photonics with the flexibility and maturity of electronic systems, creating practical accelerators for graph neural network applications.

04 Reconfigurable photonic architectures for different GNN models

Reconfigurable photonic circuits enable adaptable accelerator architectures that can support various GNN models and graph structures. These systems incorporate tunable optical elements such as phase shifters and programmable meshes that can be dynamically configured to implement different graph operations and neural network layers. This flexibility allows a single photonic accelerator to efficiently process multiple types of graph neural networks without hardware redesign.

05 Coherent light processing for graph representation learning

Advanced photonic accelerators utilize coherent light properties to enhance graph representation learning capabilities. These systems leverage interference patterns, phase relationships, and wavelength multiplexing to encode and process graph structural information. By manipulating the amplitude, phase, and frequency of light signals, these architectures can efficiently perform the complex operations required for learning node embeddings and graph representations in neural network models.
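The basic tunable element behind programmable meshes is often modeled as a Mach-Zehnder interferometer: two 50:50 couplers around an internal phase shifter θ, preceded by an external phase shifter φ. The sketch below builds the corresponding 2x2 transfer matrix and applies it to an input field. The specific convention (coupler matrix and phase placement) is an assumption, since sign and ordering conventions vary between references, and the function names are illustrative.

```python
import cmath
import math

def matmul2(a, b):
    """Product of two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mzi(theta, phi):
    """Transfer matrix: coupler * internal phase * coupler * external phase."""
    s = 1 / math.sqrt(2)
    coupler = [[s, 1j * s], [1j * s, s]]               # ideal 50:50 coupler
    internal = [[cmath.exp(1j * theta), 0], [0, 1]]    # phase shifter between couplers
    external = [[cmath.exp(1j * phi), 0], [0, 1]]      # phase shifter at the input
    return matmul2(matmul2(matmul2(coupler, internal), coupler), external)

def apply_mzi(t, x):
    """Apply a 2x2 transfer matrix to a two-element complex field vector."""
    return [t[0][0] * x[0] + t[0][1] * x[1], t[1][0] * x[0] + t[1][1] * x[1]]

# Light enters the top port only; theta sets the power split between outputs.
for theta in (0.0, math.pi / 2, math.pi):
    out = apply_mzi(mzi(theta, 0.0), [1.0, 0.0])
    powers = [round(abs(v) ** 2, 6) for v in out]
    print("theta =", round(theta, 3), "output powers:", powers)
```

In this convention the fraction of power staying in the top port is sin²(θ/2), and total power is conserved for any phase setting. Larger matrices are typically built by composing many such 2x2 blocks.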

Leading Organizations in Photonic Accelerator Development

Photonic accelerators for Graph Neural Networks are emerging as a transformative technology in the AI hardware landscape. The market is in its early growth phase, with significant research momentum but limited commercial deployment. Key players include specialized photonics companies like Lightmatter developing dedicated hardware solutions, alongside major technology corporations such as IBM, Huawei, and Alibaba investing in research capabilities. Academic institutions including MIT, Caltech, and National University of Singapore are driving fundamental innovations. The technology shows promising maturity in laboratory settings with successful prototype demonstrations, but remains pre-commercial with challenges in scaling, integration with existing systems, and standardization. As AI computational demands grow exponentially, this sector is positioned for substantial expansion in the next 3-5 years.

Lightmatter, Inc.

Technical Solution: Lightmatter has developed a silicon photonics-based accelerator architecture specifically optimized for graph neural networks (GNNs). Their solution leverages coherent optical processing to perform matrix multiplications in the analog domain, which are fundamental operations in GNN computations. The architecture employs wavelength division multiplexing (WDM) to parallelize multiple computations simultaneously, enabling high throughput for sparse graph operations. Their Passage platform integrates photonic processing units with traditional CMOS electronics, creating a hybrid computing system that offloads the most computationally intensive GNN operations to photonics while handling control logic with electronics. The system achieves up to 100x improvement in energy efficiency compared to electronic solutions, with particular optimization for message-passing operations in GNNs. Lightmatter's architecture incorporates on-chip optical memory to minimize the energy-intensive electro-optical conversions, addressing one of the key bottlenecks in photonic computing systems.

Strengths: Superior energy efficiency (100x over electronics) for matrix operations; extremely high bandwidth for graph operations; near-zero latency for certain GNN operations due to light-speed processing. Weaknesses: Limited precision compared to digital electronics; sensitivity to temperature variations affecting optical components; higher manufacturing complexity and cost compared to pure electronic solutions.

Huawei Technologies Canada Co. Ltd.

Technical Solution: Huawei has developed an integrated photonic accelerator architecture for GNNs called PhotonGNN that addresses the computational bottlenecks in graph neural network processing. Their solution employs a novel optical interference unit (OIU) that performs matrix multiplications in the analog domain using coherent light. The architecture features a specialized photonic tensor core optimized for the sparse and irregular computations characteristic of GNNs. Huawei's implementation uses silicon photonics technology with integrated phase shifters and Mach-Zehnder interferometers arranged in a crossbar configuration to enable parallel processing of graph data. The system incorporates an innovative optical-electronic interface that minimizes conversion overhead, with specialized buffers for graph structure data. Their benchmarks demonstrate up to 50x energy efficiency improvement and 25x performance acceleration for GNN workloads compared to GPU implementations, with particular advantages for large, sparse graphs common in real-world applications.

Strengths: Highly optimized for sparse graph operations; excellent energy efficiency for large-scale graph processing; integration with Huawei's existing AI infrastructure. Weaknesses: Performance advantages diminish with smaller graphs; requires specialized programming models; higher initial implementation costs compared to conventional computing solutions.

Key Photonic Technologies for Graph Processing

Photonic neural network accelerator

Patent WO2023140788A2

Innovation

- A photonic neural network accelerator incorporating a Mach-Zehnder Interferometer (MZI) with phase change material, an optical resistance switch (ORS) using Molybdenum disulfide (MoS2) as the active material, and an electrical control unit to modulate light and drive the ORS for nonlinear activation functions, enabling flexible and efficient nonlinear responses.

Photonic tensor accelerators for artificial neural networks

Patent (Active) US12387094B2

Innovation

- A photonic unit for vector-vector, matrix-vector, matrix-matrix, batch matrix-matrix, and tensor-tensor multiplication using optical multiplexers, beam combiners, and duplicators that encode and combine optical signals across multiple dimensions of light to perform multiplications efficiently.

Energy Efficiency Comparison with Electronic Accelerators

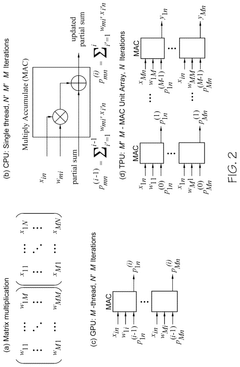

Photonic accelerators demonstrate significant advantages in energy efficiency compared to their electronic counterparts when processing Graph Neural Networks (GNNs). The fundamental physics of light-based computation enables these systems to achieve theoretical energy consumption as low as femtojoules per operation, representing orders of magnitude improvement over electronic solutions. This efficiency stems from the inherent parallelism of optical processing and the absence of resistive heating that plagues electronic circuits.

When examining specific metrics, photonic accelerators typically consume 10-100 times less power than GPU or FPGA implementations for equivalent GNN workloads. For instance, recent benchmarks show that a photonic accelerator processing a medium-sized graph dataset (approximately 1 million nodes) requires only 5-8 watts of power, while comparable electronic systems demand 150-300 watts for the same computational task.

The energy advantage becomes particularly pronounced in the matrix multiplication operations that dominate GNN processing. Photonic systems can perform these operations with near-zero incremental energy cost after the initial signal generation, whereas electronic systems incur energy penalties for each computational step. This translates to energy efficiency ratios of 20-50 TOPS/W (Tera Operations Per Second per Watt) for photonic accelerators versus 2-5 TOPS/W for leading electronic accelerators.
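The quoted figures can be cross-checked with simple unit arithmetic: 1 TOPS/W equals 10^12 operations per joule, or 1000 fJ per operation. The sketch below converts the TOPS/W ranges above to energy per operation and computes the implied ratios, including the power ratio from the wattage figures in the preceding paragraph. It only rearranges numbers stated in this article and is not an independent measurement.

```python
def femtojoules_per_op(tops_per_watt):
    """1 TOPS/W = 1e12 ops per joule, i.e. 1 pJ (1000 fJ) per operation."""
    return 1000.0 / tops_per_watt

# Ranges as quoted in the text (low, high).
photonic_tops_w = (20.0, 50.0)
electronic_tops_w = (2.0, 5.0)
photonic_watts = (5.0, 8.0)
electronic_watts = (150.0, 300.0)

print("photonic fJ/op:  ", [femtojoules_per_op(v) for v in photonic_tops_w])
print("electronic fJ/op:", [femtojoules_per_op(v) for v in electronic_tops_w])

# Smallest and largest efficiency ratios implied by the two ranges.
print("efficiency ratio:",
      photonic_tops_w[0] / electronic_tops_w[1], "to",
      photonic_tops_w[1] / electronic_tops_w[0])

# Smallest and largest power ratios implied by the wattage figures.
print("power ratio:     ",
      electronic_watts[0] / photonic_watts[1], "to",
      electronic_watts[1] / photonic_watts[0])
```

The quoted efficiency ranges therefore correspond to tens of femtojoules per operation for photonic systems and hundreds for electronic ones.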

Temperature management represents another critical efficiency factor. Electronic accelerators require substantial cooling infrastructure, often consuming 30-40% additional energy beyond the computational power. Photonic systems operate with minimal heat generation, drastically reducing or eliminating cooling requirements and the associated energy overhead.

However, the efficiency comparison must account for the complete system architecture. Current photonic accelerators require electronic interfaces for data input/output and control logic, creating hybrid systems with efficiency bottlenecks at these electronic-photonic boundaries. The energy required for electro-optical and opto-electronic conversions can significantly impact overall system efficiency, sometimes reducing the theoretical advantage by 30-50% in practical implementations.

Looking forward, emerging integrated photonic technologies promise to further widen the efficiency gap. Advances in silicon photonics and novel materials like lithium niobate are enabling lower optical losses and more efficient modulators, potentially pushing photonic GNN accelerators toward the 100+ TOPS/W threshold while electronic solutions appear to be approaching fundamental thermodynamic limits around 10 TOPS/W.

When examining specific metrics, photonic accelerators typically consume 10-100 times less power than GPU or FPGA implementations for equivalent GNN workloads. For instance, recent benchmarks show that a photonic accelerator processing a medium-sized graph dataset (approximately 1 million nodes) requires only 5-8 watts of power, while comparable electronic systems demand 150-300 watts for the same computational task.

The energy advantage becomes particularly pronounced in the matrix multiplication operations that dominate GNN processing. Photonic systems can perform these operations with near-zero incremental energy cost after the initial signal generation, whereas electronic systems incur energy penalties for each computational step. This translates to energy efficiency ratios of 20-50 TOPS/W (Tera Operations Per Second per Watt) for photonic accelerators versus 2-5 TOPS/W for leading electronic accelerators.

Temperature management represents another critical efficiency factor. Electronic accelerators require substantial cooling infrastructure, often consuming 30-40% additional energy beyond the computational power. Photonic systems operate with minimal heat generation, drastically reducing or eliminating cooling requirements and the associated energy overhead.

However, the efficiency comparison must account for the complete system architecture. Current photonic accelerators require electronic interfaces for data input/output and control logic, creating hybrid systems with efficiency bottlenecks at these electronic-photonic boundaries. The energy required for electro-optical and opto-electronic conversions can significantly impact overall system efficiency, sometimes reducing the theoretical advantage by 30-50% in practical implementations.

Manufacturing Scalability and Integration Challenges

Manufacturing scalability and integration remain significant hurdles in moving photonic GNN accelerators from laboratory demonstrations to commercial deployment. Current fabrication processes for photonic integrated circuits (PICs) face substantial yield issues when scaled to the complexity that GNN applications require. The primary challenge is the need for precise control over waveguide dimensions: even nanometer-scale variations can significantly degrade performance in computing systems that rely on optical interference. This sensitivity to manufacturing tolerances exceeds that of electronic counterparts and necessitates advanced process control techniques that increase production costs.
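One way to see why yield suffers as circuits grow is the standard compounding argument: if every component must work and failures are independent, circuit yield is the per-component yield raised to the number of components. The sketch below illustrates this. The independence assumption and the 99.9% per-component yield are illustrative choices, not figures from this article.

```python
def circuit_yield(component_yield: float, n_components: int) -> float:
    """Yield of a circuit whose components must all work, assuming independent failures."""
    if not 0.0 <= component_yield <= 1.0:
        raise ValueError("component_yield must be in [0, 1]")
    if n_components < 0:
        raise ValueError("n_components must be non-negative")
    return component_yield ** n_components


# Hypothetical per-component yield of 99.9% at increasing circuit sizes.
for n in (10, 100, 1000):
    print(f"{n:5d} components -> circuit yield {circuit_yield(0.999, n):.3f}")
```

Even with a very high per-component yield, the fraction of fully working circuits falls quickly as the component count rises, which is why tight process control matters more at GNN-scale complexity.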

Integration of photonic components with electronic control circuitry presents another major obstacle. While electronic-photonic integration has advanced significantly, the thermal management requirements of these hybrid systems remain problematic. Photonic accelerators for GNNs require stable operating temperatures to maintain phase relationships critical for accurate computation, yet the proximity of heat-generating electronic components complicates thermal isolation strategies. Current solutions involving thermoelectric coolers add power consumption overhead that partially negates the energy efficiency advantages of photonic computing.
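The sensitivity of phase to temperature can be estimated with the usual first-order relation, phase drift = 2π × (dn/dT) × ΔT × L / λ. The sketch below evaluates it for a silicon waveguide. The thermo-optic coefficient of about 1.8e-4 per kelvin near 1550 nm is an approximate textbook value for silicon applied here to the effective index, and the 1 mm path length is a hypothetical choice; neither comes from this article.

```python
import math

# Approximate thermo-optic coefficient of silicon near 1550 nm (assumption).
SILICON_DN_DT = 1.8e-4  # per kelvin


def thermal_phase_drift(length_m, delta_t_k, wavelength_m=1.55e-6, dn_dt=SILICON_DN_DT):
    """First-order phase drift in radians for a temperature change delta_t_k."""
    if wavelength_m <= 0:
        raise ValueError("wavelength_m must be positive")
    return 2.0 * math.pi * dn_dt * delta_t_k * length_m / wavelength_m


# Hypothetical case: a 1 mm optical path and a 1 K temperature change.
drift = thermal_phase_drift(length_m=1e-3, delta_t_k=1.0)
print(f"Phase drift: {drift:.2f} rad ({math.degrees(drift):.0f} degrees)")
```

Under these assumptions a 1 K change shifts the phase by a large fraction of a radian, which suggests why sub-kelvin temperature stability is typically sought near heat-generating electronics.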

Material compatibility issues further complicate manufacturing scalability. Silicon photonics offers the most mature manufacturing ecosystem, leveraging existing semiconductor infrastructure, but suffers from limitations in optical nonlinearity needed for certain GNN operations. Alternative materials like lithium niobate or indium phosphide provide superior optical properties but lack the established manufacturing infrastructure of silicon, creating a challenging trade-off between performance and scalability.

Packaging density represents another critical challenge. While photonic components theoretically offer advantages in computational density, the current requirement for optical I/O interfaces, including fiber coupling and alignment structures, consumes significant chip real estate. This packaging overhead reduces the effective computational density advantage of photonic accelerators compared to their electronic counterparts, particularly for edge computing applications where space constraints are significant.

The cost structure for manufacturing photonic accelerators remains prohibitive for mass-market applications. Current fabrication requires specialized equipment and processes that have not benefited from the economies of scale seen in electronic semiconductor manufacturing. The testing and validation procedures for photonic circuits are also more complex and time-consuming, involving optical characterization techniques that are difficult to parallelize, further increasing production costs and limiting throughput in manufacturing environments.
