How to Reduce Noise in FTIR Spectra Processing

SEP 22, 2025 · 9 MIN READ

FTIR Spectroscopy Background and Noise Reduction Goals

Fourier Transform Infrared (FTIR) spectroscopy has evolved significantly since its inception in the mid-20th century and has become an indispensable analytical technique across numerous scientific and industrial domains. The technique exploits the interaction between infrared radiation and a sample to produce a spectral fingerprint that reveals molecular composition and structure. Over recent decades, infrared instrumentation has moved from dispersive (grating- or prism-based) spectrometers to interferometer-based FTIR systems with increasingly capable interferometers and detectors, greatly improving resolution and sensitivity.

Despite these technological advancements, noise remains a persistent challenge in FTIR spectroscopy, often compromising data quality and analytical accuracy. Noise sources in FTIR spectra are diverse, including detector noise, optical interference, environmental fluctuations, and sample-related artifacts. The presence of these noise components can obscure critical spectral features, complicate peak identification, and introduce errors in quantitative analyses, ultimately limiting the technique's effectiveness in applications requiring high precision.

The evolution of FTIR technology has been characterized by continuous efforts to mitigate these noise issues. Early developments focused primarily on hardware improvements, while recent trends emphasize sophisticated signal processing algorithms and computational approaches. This shift reflects the growing recognition that optimal noise reduction requires a multifaceted strategy combining hardware optimization, careful experimental design, and advanced data processing techniques.

Current technological trajectories point toward integrated systems that incorporate real-time noise identification and adaptive correction mechanisms. Machine learning and artificial intelligence are increasingly being explored as powerful tools for distinguishing signal from noise in complex spectral datasets. Additionally, there is growing interest in developing standardized protocols for noise assessment and reduction across different FTIR applications.

The primary technical goals for noise reduction in FTIR spectroscopy are threefold. The first is to enhance the signal-to-noise ratio (SNR) without compromising spectral resolution or introducing artifacts. The second is to develop robust methods for identifying and characterizing different noise types, so that reduction strategies can be targeted. The third is to establish automated, adaptive noise reduction workflows that accommodate varying sample types and experimental conditions.

Achieving these goals would significantly expand FTIR capabilities in challenging applications such as trace analysis, in-situ monitoring, and high-throughput screening. Furthermore, improved noise reduction would enhance the reliability of spectral databases and facilitate more accurate chemometric modeling, ultimately strengthening FTIR's position as a cornerstone analytical technique in fields ranging from pharmaceutical development to environmental monitoring and materials science.

Market Demand for High-Quality Spectral Analysis

The global market for high-quality spectral analysis has experienced significant growth over the past decade, driven primarily by increasing demands in pharmaceutical research, environmental monitoring, and materials science. FTIR (Fourier Transform Infrared) spectroscopy, as a cornerstone analytical technique, has seen its application expand across diverse industries where precise molecular identification and quantification are critical.

In the pharmaceutical sector, the demand for noise-reduced FTIR analysis has grown at a remarkable pace as regulatory requirements for drug purity and composition become more stringent. Pharmaceutical companies require highly accurate spectral data to ensure compliance with FDA and EMA regulations, creating a substantial market for advanced noise reduction solutions in FTIR processing.

The chemical manufacturing industry represents another significant market segment, where real-time process monitoring using FTIR spectroscopy has become essential for quality control. Companies in this sector are increasingly willing to invest in premium solutions that can deliver cleaner spectral data, as even minor improvements in signal-to-noise ratios can translate to substantial cost savings by reducing production errors and material waste.

Academic and research institutions constitute a growing market for high-quality spectral analysis tools, particularly as interdisciplinary research involving complex biological samples becomes more prevalent. These institutions often work with challenging samples that produce inherently noisy spectra, creating demand for sophisticated noise reduction algorithms and hardware solutions.

Environmental monitoring applications have emerged as a rapidly expanding market segment, with governmental agencies and private organizations deploying FTIR systems for air quality monitoring, water analysis, and soil contamination studies. The ability to detect trace contaminants reliably depends critically on effective noise reduction techniques.

Market research indicates that end-users are increasingly prioritizing software solutions that can be integrated with existing FTIR hardware, allowing for cost-effective upgrades rather than complete system replacements. This trend has created opportunities for specialized software developers focusing on advanced signal processing algorithms specifically designed for spectroscopic applications.

The geographical distribution of market demand shows particular strength in North America and Europe, where established pharmaceutical and chemical industries drive adoption. However, the Asia-Pacific region is experiencing the fastest growth rate as manufacturing capabilities expand and regulatory frameworks mature, creating new opportunities for providers of high-quality spectral analysis solutions.

Current Challenges in FTIR Noise Reduction

Despite significant advancements in Fourier Transform Infrared (FTIR) spectroscopy technology, noise reduction remains one of the most persistent challenges in obtaining high-quality spectral data. Current FTIR systems contend with multiple noise sources that affect data quality and interpretation accuracy. These sources fall into instrumental, environmental, and sample-related categories, each presenting distinct challenges to researchers and technicians.

Instrumental noise sources include detector noise (particularly thermal noise in MCT detectors), electronic interference from internal components, and optical path fluctuations. Modern FTIR systems have improved significantly in these areas, but fundamental physical limitations remain, especially when signal levels are low or when high spectral resolution is required.
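
For random, uncorrelated detector noise, the usual first remedy is co-adding repeated scans. In the idealized white-noise case, averaging N scans lowers the noise by a factor of √N, so SNR grows as √N. The sketch below checks this on synthetic data. The noise model (Gaussian, independent between scans and between points) is an assumption; drift and other correlated noise will not average away this quickly.

```python
import numpy as np

def coadd(scans: np.ndarray) -> np.ndarray:
    """Co-add repeated scans (one scan per row) by point-wise averaging."""
    return scans.mean(axis=0)

rng = np.random.default_rng(0)
n_points = 2000
# Idealized, assumed noise model: a band-free region with unit-variance white noise in every scan.
noise_after = {}
for n_scans in (1, 16, 64):
    scans = rng.normal(0.0, 1.0, size=(n_scans, n_points))
    noise_after[n_scans] = float(np.std(coadd(scans)))
    print(f"{n_scans:3d} scans: residual noise {noise_after[n_scans]:.3f} "
          f"(ideal 1/sqrt(N) = {1 / np.sqrt(n_scans):.3f})")
```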

Environmental factors constitute another major challenge, with atmospheric water vapor and CO2 absorption being particularly problematic. These atmospheric interferences create characteristic absorption bands that can mask important sample features. Temperature and humidity fluctuations in the laboratory environment can also introduce baseline instabilities and spectral distortions that are difficult to correct in post-processing.
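
One common way to compensate for residual water vapor is to subtract a scaled vapor reference spectrum, with the scale factor found by least squares. The minimal sketch below fits that factor on first derivatives. This way the narrow rotational lines dominate the fit, while broad sample bands and smooth baselines contribute little. The reference spectrum, line positions, and band shape are synthetic assumptions. In practice the reference would be a measured vapor spectrum recorded at the same resolution and on the same wavenumber axis as the sample, and the same idea can be applied to CO2.

```python
import numpy as np

def lorentzian(x, x0, hwhm, amp):
    return amp / (1.0 + ((x - x0) / hwhm) ** 2)

def fit_vapor_scale(sample, reference, x):
    """Least-squares scale k for sample ~ k * reference, fitted on first derivatives
    so narrow vapor lines dominate and broad bands/baselines carry little weight."""
    d_sample = np.gradient(sample, x)
    d_ref = np.gradient(reference, x)
    return float(np.dot(d_ref, d_sample) / np.dot(d_ref, d_ref))

rng = np.random.default_rng(1)
x = np.linspace(1300.0, 2000.0, 3501)                      # wavenumber axis, cm^-1
# Hypothetical vapor reference: 40 narrow lines at random positions and strengths.
reference = sum(lorentzian(x, c, 0.5, a)
                for c, a in zip(rng.uniform(1350, 1950, 40), rng.uniform(0.2, 1.0, 40)))
clean = 0.5 * np.exp(-0.5 * ((x - 1650.0) / 25.0) ** 2)    # broad sample band
sample = clean + 0.3 * reference + rng.normal(0.0, 0.002, x.size)

k_est = fit_vapor_scale(sample, reference, x)
corrected = sample - k_est * reference                      # vapor-compensated spectrum
print(f"fitted vapor scale: {k_est:.4f}")
```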

Sample-related challenges include scattering effects in heterogeneous samples, varying sample thickness, and uneven distribution of analytes. These factors can introduce non-linear baseline shifts and spectral artifacts that conventional preprocessing algorithms struggle to address effectively. Additionally, low concentration analytes often produce weak absorption bands that are easily overwhelmed by background noise.

Current digital signal processing approaches have limitations as well. Savitzky-Golay filtering and wavelet transforms are widely used to suppress noise, and Fourier self-deconvolution is used to narrow overlapping bands. All of these involve trade-offs between noise and spectral resolution: smoothing broadens and flattens bands, whereas deconvolution sharpens bands but amplifies noise. Aggressive filtering can remove noise but may also distort or eliminate subtle spectral features that contain valuable chemical information.
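
The resolution/noise trade-off of Savitzky-Golay smoothing can be seen directly with SciPy's `scipy.signal.savgol_filter`. The sketch below smooths a synthetic spectrum containing one narrow and one broad band, using a short window and a long one. The band widths, noise level, and window lengths are arbitrary illustrative assumptions.

```python
import numpy as np
from scipy.signal import savgol_filter

i = np.arange(2000)                                        # point index along the wavenumber axis
clean = (np.exp(-0.5 * ((i - 200) / 3.0) ** 2)             # narrow band, sigma = 3 points
         + 0.8 * np.exp(-0.5 * ((i - 1700) / 30.0) ** 2))  # broad band, sigma = 30 points
rng = np.random.default_rng(3)
noisy = clean + rng.normal(0.0, 0.05, i.size)
flat = slice(400, 1500)                                    # band-free region for measuring noise

results = {}
for window in (7, 41):
    smoothed = savgol_filter(noisy, window_length=window, polyorder=2)
    residual_noise = float(np.std(smoothed[flat] - clean[flat]))
    # Distortion of the narrow band, measured on the noise-free spectrum.
    narrow_height = float(savgol_filter(clean, window_length=window, polyorder=2)[200])
    results[window] = (residual_noise, narrow_height)
    print(f"window {window:2d}: residual noise {residual_noise:.4f}, narrow-band height {narrow_height:.2f}")
```

The longer window suppresses more noise but flattens the narrow band far more than the broad one. For that reason, the window length is usually chosen relative to the narrowest band of interest.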

Machine learning approaches for noise reduction are emerging but face challenges in generalizability across different sample types and experimental conditions. Training data requirements and computational complexity can limit their practical implementation in routine analytical workflows.

Reproducibility issues further complicate FTIR analysis, as noise patterns can vary between measurements, instruments, and laboratories. This variability makes it difficult to establish standardized noise reduction protocols that work consistently across different experimental setups.

The development of effective noise reduction strategies is further constrained by the need to maintain spectral integrity while removing noise. Distinguishing between noise and actual spectral features remains a fundamental challenge, particularly in complex biological samples or in the analysis of trace components in environmental matrices.

Existing Noise Reduction Algorithms and Methods

01 Noise reduction techniques in FTIR spectroscopy

Various methods can be employed to reduce noise in FTIR spectral processing, including digital filtering algorithms, signal averaging, and baseline correction techniques. These approaches help improve the signal-to-noise ratio and enhance the quality of spectral data for more accurate analysis. Advanced mathematical algorithms can be applied to filter out random noise while preserving the essential spectral features.

- Noise reduction algorithms for FTIR spectra: Various algorithms can be applied to reduce noise in FTIR spectral data. These include Fourier transform techniques, wavelet transforms, and digital filtering methods that help separate signal from noise. Advanced mathematical processing can identify and remove random fluctuations while preserving the integrity of spectral features. These techniques improve the signal-to-noise ratio and enhance the quality of spectral data for more accurate analysis.

- Hardware solutions for minimizing FTIR spectral noise: Hardware-based approaches can significantly reduce noise in FTIR spectroscopy. These include improved detector designs, temperature control systems, and optical component enhancements. Specialized interferometers with higher stability reduce mechanical vibrations that contribute to noise. Some systems incorporate reference channels or dual-beam configurations to compensate for environmental fluctuations in real-time, resulting in cleaner spectral data acquisition before computational processing.

- Machine learning approaches for FTIR spectra denoising: Machine learning and artificial intelligence techniques are increasingly applied to FTIR spectral processing. Neural networks can be trained to distinguish between noise patterns and genuine spectral features. Deep learning models trained on large spectral datasets can identify and remove noise components while preserving essential chemical information. These approaches can be particularly useful for complex samples with overlapping spectral features or under variable noise conditions.

- Baseline correction and spectral preprocessing methods: Effective baseline correction is crucial for removing background noise in FTIR spectra. Various preprocessing techniques include smoothing functions, derivative spectroscopy, and polynomial fitting algorithms to eliminate baseline drift and systematic noise. Normalization methods help standardize spectra for comparative analysis. These preprocessing steps are often applied sequentially in optimized workflows to enhance spectral quality before further analysis or interpretation.

- Real-time adaptive noise filtering for FTIR analysis: Real-time adaptive filtering techniques dynamically adjust to changing noise conditions during FTIR data acquisition. These systems continuously monitor signal quality and modify filtering parameters accordingly. Adaptive algorithms can identify and compensate for specific noise sources such as environmental vibrations, temperature fluctuations, or electromagnetic interference. This approach is particularly valuable for in-process monitoring applications where conditions may change during measurement.
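
As one concrete example of the algorithmic approaches listed above, a set of related spectra can be denoised by truncated principal component analysis (PCA). The mean-centered spectra are projected onto their leading components, and the remaining components, which are assumed to be mostly noise, are discarded. The sketch below uses synthetic two-component mixtures. The number of retained components is an assumption that must be chosen carefully on real data, because weak but genuine spectral variation can be discarded along with the noise.

```python
import numpy as np

def pca_denoise(spectra: np.ndarray, n_components: int) -> np.ndarray:
    """Reconstruct spectra (one per row) from the mean plus the leading principal components."""
    mean = spectra.mean(axis=0)
    u, s, vt = np.linalg.svd(spectra - mean, full_matrices=False)
    return mean + (u[:, :n_components] * s[:n_components]) @ vt[:n_components]

rng = np.random.default_rng(4)
axis = np.linspace(0.0, 1.0, 500)
# Two hypothetical pure-component spectra (Gaussian bands).
pure = np.vstack([np.exp(-0.5 * ((axis - 0.3) / 0.03) ** 2),
                  np.exp(-0.5 * ((axis - 0.6) / 0.05) ** 2)])
conc = rng.uniform(0.2, 1.0, size=(50, 2))                # 50 mixtures
clean = conc @ pure
noisy = clean + rng.normal(0.0, 0.02, clean.shape)
denoised = pca_denoise(noisy, n_components=2)

rmse_before = float(np.sqrt(np.mean((noisy - clean) ** 2)))
rmse_after = float(np.sqrt(np.mean((denoised - clean) ** 2)))
print(f"RMSE vs clean: {rmse_before:.4f} -> {rmse_after:.4f}")
```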

02 Fourier transform algorithms for spectral processing

Specialized Fourier transform algorithms convert raw interferogram data into meaningful FTIR spectra. The processing chain typically includes apodization, zero-filling, and phase correction, which help minimize artifacts and noise in the resulting spectra. Apodization suppresses the sidelobes (ringing) caused by truncating the interferogram at a finite path difference, at the cost of some resolution. Zero-filling interpolates the spectrum onto a finer grid but does not add true resolution. Well-optimized computational methods also reduce processing-induced errors in the final data.

03 Hardware solutions for noise minimization

Hardware-based approaches to minimize noise in FTIR systems include improved detector designs, temperature stabilization, vibration isolation, and optical path purging. These physical modifications to the FTIR instrumentation help reduce environmental and instrumental noise sources before they affect the spectral data, resulting in cleaner raw signals that require less post-processing.

04 Machine learning and AI for spectral denoising

Advanced machine learning and artificial intelligence techniques are increasingly being applied to FTIR spectra processing to identify and remove noise patterns. These approaches include neural networks, principal component analysis, and pattern recognition algorithms that can distinguish between meaningful spectral features and various types of noise, enabling automated and more effective noise reduction.

05 Chemometric methods for spectral enhancement

Chemometric techniques such as multivariate curve resolution, partial least squares, and derivative spectroscopy are employed to enhance FTIR spectral quality by separating overlapping signals from noise. These statistical approaches help in extracting meaningful chemical information from noisy spectra and improve the reliability of quantitative and qualitative analyses in complex samples.
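
The interferogram-to-spectrum steps described under item 02 (apodization and zero-filling) can be sketched with NumPy's FFT routines. This sketch assumes an ideal, perfectly symmetric double-sided interferogram with zero path difference (ZPD) at the center. For that reason, phase correction (for example the Mertz method commonly applied to real data) is deliberately omitted. The sampling interval, line positions, and the choice of a Happ-Genzel window are illustrative assumptions.

```python
import numpy as np

def interferogram_to_spectrum(igram, dx, apodize=True, zero_fill=4):
    """Transform a symmetric, double-sided interferogram (even length, ZPD at index len//2)
    into a real spectrum. Phase correction is intentionally omitted."""
    n = igram.size
    opd = (np.arange(n) - n // 2) * dx                   # optical path difference, cm
    if apodize:
        half_span = (n // 2) * dx
        # Happ-Genzel (Hamming-type) apodization: 0.54 + 0.46 cos(pi * x / L)
        igram = igram * (0.54 + 0.46 * np.cos(np.pi * opd / half_span))
    pad = (zero_fill - 1) * n // 2                       # zeros on each side keep ZPD centered
    padded = np.pad(igram, pad)
    # Move ZPD to index 0 so the transform of the (near-)even interferogram is (near-)real.
    spectrum = np.fft.rfft(np.fft.ifftshift(padded)).real
    wavenumber = np.fft.rfftfreq(padded.size, d=dx)      # cm^-1
    return wavenumber, spectrum

dx = 1.0 / 8000.0              # assumed sampling interval in cm (folding limit 4000 cm^-1)
n = 1024
x = (np.arange(n) - n // 2) * dx
igram = np.cos(2 * np.pi * 1234.5 * x) + 0.5 * np.cos(2 * np.pi * 2345.6 * x)

peaks, sidelobe_ratio = {}, {}
for apod in (False, True):
    nu, spec = interferogram_to_spectrum(igram, dx, apodize=apod)
    peaks[apod] = float(nu[np.argmax(spec)])
    sidelobe_ratio[apod] = float(spec.min() / spec.max())
    print(f"apodized={apod}: peak at {peaks[apod]:.1f} cm^-1, min/max = {sidelobe_ratio[apod]:.3f}")
```

Without apodization, the abrupt truncation produces sinc sidelobes that dip below zero by roughly a fifth of the peak height. The Happ-Genzel window suppresses them at the cost of a wider band. Zero-filling only makes those line shapes visible on a finer grid.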

Leading Companies and Research Institutions in FTIR Technology

The FTIR spectral noise reduction technology landscape is mature, with established players alongside emerging innovators. The market is growing steadily, driven by increasing demand for precise spectroscopic analysis in the pharmaceutical, environmental monitoring, and materials science sectors. Key technology leaders include Intel Corp. and Texas Instruments, which leverage their semiconductor expertise to develop advanced signal processing solutions. Specialized instrumentation companies such as Yokogawa Electric, Hitachi High-Tech Science, and Spectral Sciences are advancing algorithm-based noise reduction techniques. Academic institutions such as the University of Southern California and the Wisconsin Alumni Research Foundation contribute fundamental research innovations. Ongoing refinements focus on real-time processing capabilities and on integration with AI for automated noise pattern recognition.

Texas Instruments Incorporated

Technical Solution: Texas Instruments has developed a hardware-centric approach to FTIR noise reduction through their specialized digital signal processing (DSP) chips and integrated circuit solutions. Their technology focuses on real-time signal processing capabilities critical for industrial process monitoring and portable FTIR applications. TI's solution begins with high-performance analog-to-digital converters featuring exceptional signal-to-noise ratios and dynamic range, specifically optimized for spectroscopic applications[8]. These feed into dedicated DSP processors implementing their proprietary "SpectralClean" algorithms, which perform multiple noise reduction functions in parallel with minimal latency. The processing pipeline includes adaptive filtering techniques that automatically adjust to changing noise profiles, optimized FFT implementations that minimize computational artifacts, and specialized filtering algorithms that preserve critical spectral features while aggressively removing noise. For portable applications, TI has developed ultra-low-power implementations that maintain high performance while significantly extending battery life. Their hardware architecture also includes dedicated co-processors for specific noise reduction tasks such as baseline correction, atmospheric compensation, and detector response normalization, enabling comprehensive noise reduction without compromising processing speed[9].

Strengths: Exceptional real-time processing capabilities; extremely low power consumption ideal for portable instruments; hardware acceleration providing superior performance for specific noise reduction tasks. Weaknesses: Less flexible than purely software-based approaches; requires specialized hardware integration; optimization primarily focused on specific application scenarios rather than general-purpose use.

Spectral Sciences, Inc.

Technical Solution: Spectral Sciences has developed advanced algorithms for FTIR spectra noise reduction utilizing wavelet transform techniques combined with machine learning approaches. Their solution employs multi-resolution analysis to decompose spectral signals into different frequency components, allowing selective filtering of noise while preserving important spectral features[1]. The company's proprietary adaptive thresholding algorithms automatically adjust filtering parameters based on signal characteristics, enabling optimal noise reduction across varying sample types and environmental conditions. Additionally, they've implemented a comprehensive pre-processing pipeline that includes baseline correction, atmospheric compensation, and detector response normalization to address multiple noise sources simultaneously[3]. Their software suite integrates these techniques with user-friendly interfaces that allow spectroscopists to visualize and fine-tune noise reduction parameters in real-time, making it accessible for both research and industrial applications.

Strengths: Superior preservation of spectral features while achieving high noise reduction ratios; adaptive algorithms that require minimal user intervention; comprehensive approach addressing multiple noise sources simultaneously. Weaknesses: Computationally intensive algorithms may require significant processing power; complex implementation may necessitate specialized training; potentially higher cost compared to simpler solutions.

Key Innovations in Spectral Denoising Approaches

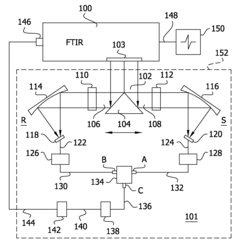

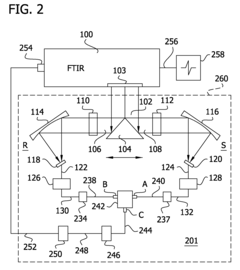

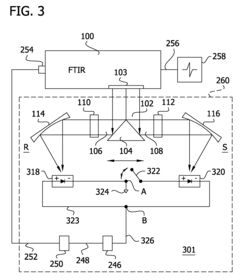

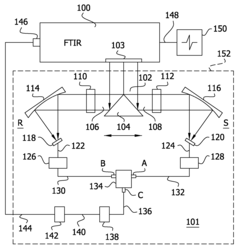

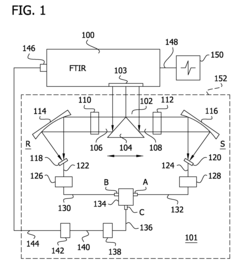

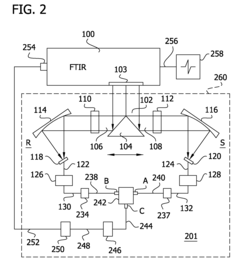

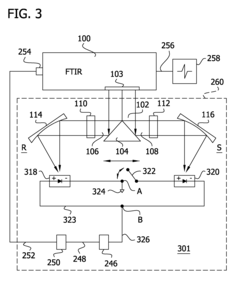

External/internal optical adapter for FTIR spectrophotometer

Patent · Active · US20110079718A1

Innovation

- An optical adapter is introduced that splits the light source into reference and sample beams, allowing for the detection of difference signals and the minimization of noise by positioning the beamsplitter to reduce the center burst of the interferogram, thereby enhancing the signal-to-noise ratio by 50 to 100 times.

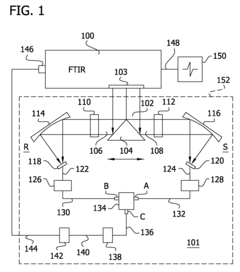

External/internal optical adapter with biased photodiodes for FTIR spectrophotometer

Patent · Active · US20110079719A1

Innovation

- An optical adapter moves a beamsplitter to minimize noise. Using biased photodiodes and a processor that determines spectra from difference signals, it reduces baseline noise by up to 100-fold and enhances the signal-to-noise ratio.

Hardware Solutions for FTIR Noise Minimization

Hardware solutions for FTIR noise minimization represent critical advancements in improving spectral quality at the instrument level. Modern FTIR spectrometers incorporate sophisticated optical designs that significantly reduce noise sources before digital processing occurs. High-precision interferometers with advanced stabilization mechanisms minimize mechanical vibrations that would otherwise introduce random noise patterns in collected spectra.

Detector technology has evolved substantially, with modern systems employing mercury cadmium telluride (MCT) and deuterated triglycine sulfate (DTGS) detectors that offer superior signal-to-noise ratios compared to earlier generations. MCT detectors, typically cooled with liquid nitrogen, provide high sensitivity and fast response for demanding applications such as low-signal or rapid-scan measurements. DTGS detectors operate without cryogenic cooling and offer reliable performance for routine analyses.

Environmental control systems within FTIR instruments have become increasingly sophisticated, with sealed and desiccated optical paths that minimize water vapor and CO2 interference. Temperature-stabilized chambers house critical optical components, preventing thermal drift that can introduce baseline instabilities and spectral artifacts during extended measurement periods.

Purge gas systems represent another hardware solution, in which dry nitrogen or purified air flows continuously through the sample chamber and optical path. This approach greatly reduces atmospheric interference from water vapor and carbon dioxide, which absorb strongly in parts of the mid-IR region.

Beam conditioning hardware, including aperture controls and optical filters, allows optimization of the infrared beam characteristics for specific sample types. These components help balance signal strength against noise, particularly important when analyzing challenging samples with low transmittance or high scattering properties.

Advanced sampling accessories have also contributed significantly to noise reduction. Attenuated Total Reflectance (ATR) modules with temperature control capabilities ensure stable sample-crystal interfaces, reducing noise from thermal fluctuations. Similarly, diffuse reflectance accessories incorporate improved optical geometries that maximize signal collection while minimizing stray light interference.

Integration of hardware solutions with automated calibration systems ensures consistent performance over time. Regular background measurements against reference materials compensate for instrument drift, while internal laser references maintain wavelength accuracy. These hardware-based calibration mechanisms provide the foundation upon which subsequent digital processing techniques can build, ensuring that noise reduction begins at the physical measurement level rather than relying solely on post-acquisition corrections.
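
The background step itself is a simple ratio. The sample single-beam spectrum is divided by a background single-beam spectrum and converted to absorbance, A = -log10(I_sample / I_background). The sketch below, on synthetic single-beam spectra, shows why the background must be refreshed. It assumes the source output drifts by a uniform 5% between the background and sample measurements; this is an illustrative assumption, since real drift is usually wavelength-dependent. Under that assumption, a stale background leaves a constant absorbance offset of -log10(1.05), about -0.021.

```python
import numpy as np

def to_absorbance(sample_sb, background_sb, floor=1e-12):
    """Convert single-beam spectra to absorbance, A = -log10(I_sample / I_background)."""
    transmittance = np.clip(sample_sb / background_sb, floor, None)   # guard against log of zero
    return -np.log10(transmittance)

wavenumber = np.linspace(400.0, 4000.0, 1801)
# Hypothetical smooth source/detector envelope for the single-beam background.
background = np.exp(-0.5 * ((wavenumber - 1800.0) / 900.0) ** 2)
true_abs = 0.4 * np.exp(-0.5 * ((wavenumber - 1700.0) / 10.0) ** 2)

sample_now = background * 10.0 ** (-true_abs)              # same conditions as the background
sample_drifted = 1.05 * background * 10.0 ** (-true_abs)   # source 5% brighter at sample time

a_fresh = to_absorbance(sample_now, background)
a_stale = to_absorbance(sample_drifted, background)
print(f"offset from stale background: {np.mean(a_stale - true_abs):.4f}")
```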

Detector technology has evolved substantially, with modern systems employing mercury cadmium telluride (MCT) and deuterated triglycine sulfate (DTGS) detectors that offer superior signal-to-noise ratios compared to earlier generations. MCT detectors, requiring liquid nitrogen cooling, provide exceptional sensitivity for applications demanding high-resolution spectra, while DTGS detectors offer reliable performance for routine analyses without requiring cryogenic cooling.

Environmental control systems within FTIR instruments have become increasingly sophisticated, with sealed and desiccated optical paths that minimize water vapor and CO2 interference. Temperature-stabilized chambers house critical optical components, preventing thermal drift that can introduce baseline instabilities and spectral artifacts during extended measurement periods.

Purge gas systems represent another hardware solution, where dry nitrogen or purified air continuously flows through the sample chamber and optical path. This approach effectively eliminates atmospheric interference from moisture and carbon dioxide, which are particularly problematic in mid-IR regions where these molecules strongly absorb.

Beam conditioning hardware, including aperture controls and optical filters, allows optimization of the infrared beam characteristics for specific sample types. These components help balance signal strength against noise, particularly important when analyzing challenging samples with low transmittance or high scattering properties.

Advanced sampling accessories have also contributed significantly to noise reduction. Attenuated Total Reflectance (ATR) modules with temperature control capabilities ensure stable sample-crystal interfaces, reducing noise from thermal fluctuations. Similarly, diffuse reflectance accessories incorporate improved optical geometries that maximize signal collection while minimizing stray light interference.

Validation Metrics for Spectral Quality Assessment

Validation metrics for spectral quality assessment are essential tools for evaluating the reliability and accuracy of FTIR spectral data. They provide quantitative measures for deciding whether spectra meet acceptable quality standards before further analysis or interpretation. Signal-to-noise ratio (SNR) is the most fundamental metric, typically calculated by dividing the maximum signal intensity (or the height of a chosen reference band) by the standard deviation of the noise in a spectral region without analyte peaks; some protocols use peak-to-peak noise instead. Higher SNR values indicate cleaner spectra that support more reliable subsequent analysis.
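The SNR definition translates directly into a few lines of NumPy. This is a minimal sketch, assuming the spectrum and its wavenumber axis are arrays and that the caller knows an analyte-free window. The function name, the linear detrending of the noise window, and the example window of 2200 to 2000 cm-1 are assumptions for illustration, not a standard procedure.

```python
import numpy as np


def snr(wavenumbers, spectrum, noise_region, signal_region=None):
    """Signal-to-noise ratio: maximum signal divided by the noise standard deviation.

    noise_region: (a, b) wavenumber limits of a region without analyte peaks.
    signal_region: optional (a, b) limits in which to take the maximum signal;
                   by default the whole spectrum is searched.
    """
    wn = np.asarray(wavenumbers, dtype=float)
    y = np.asarray(spectrum, dtype=float)
    lo, hi = sorted(noise_region)
    in_noise = (wn >= lo) & (wn <= hi)
    if in_noise.sum() < 3:
        raise ValueError("noise region must contain at least three points")
    # Subtract a straight-line fit so a sloping baseline is not counted as noise
    slope, intercept = np.polyfit(wn[in_noise], y[in_noise], 1)
    residual = y[in_noise] - (slope * wn[in_noise] + intercept)
    noise_sd = residual.std(ddof=1)
    if signal_region is None:
        signal = y.max()
    else:
        s_lo, s_hi = sorted(signal_region)
        signal = y[(wn >= s_lo) & (wn <= s_hi)].max()
    return float(signal / noise_sd)


# Usage: synthetic band of height 1.0 at 1700 cm-1 plus noise with standard deviation 0.01
wn = np.linspace(4000, 400, 3601)
rng = np.random.default_rng(0)
spectrum = np.exp(-0.5 * ((wn - 1700) / 20) ** 2) + rng.normal(0, 0.01, wn.size)
print(snr(wn, spectrum, noise_region=(2200, 2000)))  # roughly 100 for this synthetic data
```

Detrending the noise window is a design choice: without it, a gently sloping baseline in the "empty" region would be misread as noise and depress the SNR.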

Root Mean Square Error (RMSE) serves as another critical validation metric, measuring how far processed spectra deviate from reference standards as the square root of the mean squared point-by-point difference. Lower RMSE values suggest higher spectral quality and a more accurate representation of the sample's chemical composition. For time-series measurements or repeated scans, the coefficient of variation (CV), the standard deviation divided by the mean, provides insight into measurement precision and reproducibility across acquisitions.
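Both RMSE and CV reduce to short array operations. A sketch, assuming spectra share one wavenumber grid and replicate scans are stacked as rows of a 2-D array; the function names are hypothetical.

```python
import numpy as np


def rmse(processed, reference):
    """Root mean square error between a processed spectrum and a reference spectrum."""
    p = np.asarray(processed, dtype=float)
    r = np.asarray(reference, dtype=float)
    if p.shape != r.shape:
        raise ValueError("spectra must be on the same wavenumber grid")
    return float(np.sqrt(np.mean((p - r) ** 2)))


def coefficient_of_variation(replicates):
    """Per-point CV in percent across repeated scans.

    replicates: 2-D array with one row per scan and one column per wavenumber.
    """
    scans = np.asarray(replicates, dtype=float)
    if scans.ndim != 2 or scans.shape[0] < 2:
        raise ValueError("need a 2-D array with at least two scans")
    mean = scans.mean(axis=0)
    sd = scans.std(axis=0, ddof=1)
    # CV is undefined where the mean is zero; report NaN there
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(mean != 0, 100.0 * sd / np.abs(mean), np.nan)


# Usage
reference = np.array([0.10, 0.50, 0.30])
processed = np.array([0.12, 0.48, 0.30])
print(rmse(processed, reference))
scans = np.array([[0.50, 0.30], [0.52, 0.30], [0.48, 0.30]])
print(coefficient_of_variation(scans))
```

Computing the CV per wavenumber shows where along the spectrum repeatability degrades; for a single analytical band, applying the same formula to the band height from each scan gives one summary number.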

Peak resolution metrics quantify the ability to distinguish between closely spaced spectral features. These include Full Width at Half Maximum (FWHM) measurements and peak separation indices. Better resolution indicates higher spectral quality and enables more accurate identification of chemical components in complex mixtures. Additionally, baseline stability metrics assess the flatness and consistency of the spectral baseline, with metrics like baseline slope and curvature providing quantitative measures of baseline quality.
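FWHM and baseline shape can both be estimated numerically. The sketch below is one simple approach rather than a standard algorithm: it assumes a single dominant peak on a near-zero baseline (for FWHM) and a caller-supplied boolean mask selecting peak-free points (for the baseline fit). The function names are hypothetical, and "curvature" is reported here as the second derivative of a fitted quadratic.

```python
import numpy as np


def fwhm(x, y):
    """Full width at half maximum of the dominant peak, by linear interpolation.

    Assumes x is monotonic and the peak sits on a baseline close to zero.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    i_max = int(np.argmax(y))
    half = y[i_max] / 2.0
    # Walk left from the maximum until the signal drops below half height
    i = i_max
    while i > 0 and y[i] >= half:
        i -= 1
    if y[i] >= half:
        raise ValueError("peak does not fall to half height on the left")
    # Interpolate the crossing between points i and i + 1 (y increasing there)
    x_left = np.interp(half, [y[i], y[i + 1]], [x[i], x[i + 1]])
    # Walk right from the maximum likewise
    j = i_max
    while j < y.size - 1 and y[j] >= half:
        j += 1
    if y[j] >= half:
        raise ValueError("peak does not fall to half height on the right")
    x_right = np.interp(half, [y[j], y[j - 1]], [x[j], x[j - 1]])
    return float(abs(x_right - x_left))


def baseline_slope_curvature(x, y, baseline_mask):
    """Fit a quadratic to peak-free points; return (slope at centre, second derivative)."""
    xb = np.asarray(x, dtype=float)[baseline_mask]
    yb = np.asarray(y, dtype=float)[baseline_mask]
    # Centre the axis so the fit is well conditioned and a1 is the slope at the centre
    xc = xb - xb.mean()
    a2, a1, _ = np.polyfit(xc, yb, 2)
    return float(a1), float(2.0 * a2)


# Usage: Gaussian band with standard deviation 5 cm-1 centred at 1700 cm-1
x = np.linspace(1600, 1800, 2001)
y = np.exp(-0.5 * ((x - 1700) / 5) ** 2)
print(fwhm(x, y))  # close to 2 * sqrt(2 ln 2) * 5, about 11.77
peak_free = (x < 1650) | (x > 1750)
print(baseline_slope_curvature(x, y, peak_free))  # both near zero for this flat baseline
```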

Spectral artifact detection algorithms form another category of validation metrics, automatically identifying common artifacts such as water vapor interference, CO2 bands, and fringing effects. These algorithms generate artifact scores that can be used to flag problematic spectra for additional processing or re-measurement. For quantitative applications, prediction error metrics such as the Standard Error of Prediction (SEP) and the Ratio of Performance to Deviation (RPD) evaluate how noise affects the accuracy of concentration predictions.
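For a calibration model checked against an independent validation set, SEP and RPD follow from the prediction residuals. The sketch assumes RPD is the chemometric ratio of performance to deviation (standard deviation of the reference values divided by SEP) and uses the bias-corrected form of SEP; both conventions vary between sources, and the function names are hypothetical.

```python
import numpy as np


def sep(predicted, reference):
    """Bias-corrected standard error of prediction for a validation set."""
    errors = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    if errors.size < 2:
        raise ValueError("need at least two validation samples")
    # Standard deviation of the residuals about their mean (the bias), n - 1 denominator
    return float(errors.std(ddof=1))


def rpd(predicted, reference):
    """Ratio of performance to deviation: SD of the reference values divided by SEP."""
    ref = np.asarray(reference, dtype=float)
    return float(ref.std(ddof=1) / sep(predicted, ref))


# Usage: known concentrations versus model predictions from the spectra
reference = [1.0, 2.0, 3.0, 4.0, 5.0]
predicted = [1.1, 1.9, 3.2, 3.9, 5.1]
print(sep(predicted, reference), rpd(predicted, reference))
```

Because spectral noise propagates into the predictions, SEP typically rises and RPD falls as spectral quality degrades, which is what makes them useful as noise-sensitive validation metrics.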

Modern FTIR systems increasingly incorporate real-time quality assessment protocols that apply these validation metrics during data acquisition. These systems can automatically flag spectra that fail to meet predefined quality thresholds, enabling immediate corrective action. Machine learning approaches have also emerged for spectral quality assessment, using trained models to evaluate multiple quality parameters simultaneously and provide comprehensive quality scores.
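A threshold gate of this kind can be sketched as a function applied to each spectrum as it is acquired. Everything specific here is a hypothetical placeholder: the function names, the analyte-free noise window (2200 to 2000 cm-1), the window around the CO2 stretching band near 2350 cm-1, the crude peak-to-peak artifact score, and both thresholds.

```python
import numpy as np


def _window(wn, y, limits):
    """Return the values of y whose wavenumbers fall within the given limits."""
    lo, hi = sorted(limits)
    mask = (wn >= lo) & (wn <= hi)
    if mask.sum() < 2:
        raise ValueError(f"too few points between {lo} and {hi} cm-1")
    return y[mask]


def quality_flags(wn, spectrum, noise_region=(2200.0, 2000.0),
                  co2_region=(2400.0, 2280.0), min_snr=100.0, max_co2_fraction=0.05):
    """Return the reasons a spectrum fails the gate; an empty list means it passes.

    All default regions and thresholds are hypothetical placeholders.
    """
    wn = np.asarray(wn, dtype=float)
    y = np.asarray(spectrum, dtype=float)
    signal = y.max()
    if signal <= 0:
        raise ValueError("expected a spectrum with a positive maximum (e.g. absorbance)")
    flags = []
    # SNR: maximum signal over the noise SD in an analyte-free window
    noise_sd = _window(wn, y, noise_region).std(ddof=1)
    snr = signal / noise_sd if noise_sd > 0 else np.inf
    if snr < min_snr:
        flags.append("low SNR")
    # Crude CO2 artifact score: peak-to-peak absorbance around the 2350 cm-1 CO2 band,
    # expressed as a fraction of the strongest signal in the spectrum
    co2 = _window(wn, y, co2_region)
    if (co2.max() - co2.min()) / signal > max_co2_fraction:
        flags.append("CO2 interference")
    return flags


# Usage: inspect each spectrum as it arrives and act on any flags
wn = np.linspace(4000, 400, 3601)
rng = np.random.default_rng(1)
band = np.exp(-0.5 * ((wn - 1700) / 20) ** 2)
for label, noise_level in [("good", 0.001), ("noisy", 0.05)]:
    flags = quality_flags(wn, band + rng.normal(0, noise_level, wn.size))
    print(label, flags or "pass")
```

Random noise also inflates the peak-to-peak CO2 score, so the noisy example may trip both flags; a more robust check would likely fit or correlate the window against a reference CO2 band shape.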

Standardization of these validation metrics across laboratories and industries remains an ongoing challenge. Organizations like ASTM International and the National Institute of Standards and Technology (NIST) continue to develop reference materials and protocols to ensure consistent application of quality metrics across different instruments and experimental setups.
