Low-Overhead Error Mitigation For AFM Device Arrays

SEP 1, 2025 | 9 MIN READ

AFM Error Mitigation Background and Objectives

Atomic Force Microscopy (AFM) has evolved significantly since its invention in the 1980s, becoming an essential tool for nanoscale imaging and manipulation across various scientific disciplines. The development of AFM device arrays represents a major advancement, enabling parallel operations and higher throughput for applications in semiconductor manufacturing, materials science, and biological research. However, as these arrays scale up, error rates become increasingly problematic, necessitating effective mitigation strategies that don't compromise performance.

The evolution of error mitigation in AFM systems has progressed from simple feedback mechanisms to sophisticated algorithmic approaches. Early AFM systems relied primarily on hardware-based corrections, while modern systems increasingly incorporate software-based solutions that can address multiple error types simultaneously. This technological progression has been driven by the growing demand for higher precision and reliability in nanoscale measurements and manipulations.

Current objectives in low-overhead error mitigation focus on developing techniques that maintain high accuracy while minimizing computational and energy costs. This balance is particularly crucial for AFM device arrays, where traditional error correction methods often introduce unacceptable latency or power consumption. The goal is to achieve sub-nanometer precision across all devices in an array while maintaining operational efficiency.

Key technical objectives include developing adaptive error correction algorithms that can dynamically adjust to different operating conditions, implementing distributed error detection mechanisms that reduce central processing requirements, and creating fault-tolerant architectures that can maintain functionality even when individual array elements fail. These objectives align with broader industry trends toward more robust and efficient nanoscale instrumentation.

The scope of error types being addressed encompasses both systematic and random errors, including thermal drift, piezoelectric creep, sensor nonlinearities, and environmental vibrations. Each of these error sources presents unique challenges in the context of device arrays, where errors can propagate or compound across multiple units. Understanding the statistical distribution and correlation of these errors across array elements is fundamental to developing effective mitigation strategies.
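As a rough illustration of what "correlation of errors across array elements" could mean in practice, the sketch below computes pairwise Pearson correlations between per-cantilever error traces. The trace values and the function names (`pearson`, `correlation_matrix`) are hypothetical and chosen only for illustration.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length error traces."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("traces must have equal length of at least 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant trace has no defined correlation")
    return sxy / sqrt(sxx * syy)

def correlation_matrix(traces):
    """Pairwise correlation of height-error traces, one trace per cantilever."""
    k = len(traces)
    return [[1.0 if i == j else pearson(traces[i], traces[j]) for j in range(k)]
            for i in range(k)]

# Hypothetical error traces in nanometres (assumed data):
# probes 0 and 1 share a drift trend, probe 2 does not.
errors_nm = [
    [0.0, 0.1, 0.2, 0.3, 0.4],
    [0.1, 0.2, 0.3, 0.4, 0.5],
    [0.2, -0.1, 0.3, -0.2, 0.1],
]
corr = correlation_matrix(errors_nm)
```

A high off-diagonal value suggests a shared error source, such as thermal drift acting on neighbouring probes, that might be corrected once for the group rather than per device.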

Recent technological breakthroughs, particularly in machine learning and real-time signal processing, have opened new avenues for error mitigation that were previously computationally infeasible. These advances create opportunities for implementing predictive error correction and intelligent filtering techniques that can significantly improve AFM array performance without introducing prohibitive overhead.

The ultimate objective is to establish a framework for error mitigation that scales efficiently with array size, providing a path toward ever-larger AFM arrays with consistent performance characteristics. This would enable new applications in high-throughput nanofabrication, large-area surface characterization, and parallel molecular manipulation that are currently limited by error accumulation issues.

Market Analysis for Low-Overhead AFM Solutions

The global market for Atomic Force Microscopy (AFM) device arrays is growing, driven by increasing demand for high-precision measurement and manipulation at the nanoscale. Market valuations place the AFM industry at approximately $570 million in 2023, with a projected compound annual growth rate of 6.8% through 2030, which would bring it to roughly $900 million by the end of the decade.
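The projection can be checked with a short compounding calculation. This sketch assumes seven compounding years (2023 to 2030); the helper name `project_value` is illustrative.

```python
def project_value(base, annual_rate, years):
    """Compound a base value forward at a fixed annual growth rate."""
    return base * (1.0 + annual_rate) ** years

# Figures quoted above: $570M in 2023 and a 6.8% CAGR.
# Assumption: 7 compounding years between 2023 and 2030.
projected_2030 = project_value(570.0, 0.068, 2030 - 2023)
print(f"Projected 2030 market size: ${projected_2030:.0f}M")
```

Under that assumption the result is a little over $900 million, consistent with the figure cited.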

The demand for low-overhead error mitigation solutions specifically has emerged as a critical market segment within the broader AFM landscape. This growth is primarily fueled by industries requiring ultra-precise measurements, including semiconductor manufacturing, materials science research, and biotechnology applications. The semiconductor industry alone accounts for nearly 35% of the current market demand for advanced AFM solutions with enhanced error mitigation capabilities.

Market research indicates that organizations are increasingly prioritizing AFM systems that can deliver high throughput without compromising accuracy. Traditional error correction mechanisms often introduce significant computational overhead, reducing operational efficiency and increasing costs. This has created a distinct market opportunity for low-overhead solutions that can maintain measurement integrity while minimizing performance penalties.

Regional analysis shows North America leading the market with approximately 40% share, followed by Asia-Pacific at 35%, which is experiencing the fastest growth rate due to rapid expansion of semiconductor manufacturing facilities in countries like Taiwan, South Korea, and China. European markets account for about 20% of global demand, with particular strength in research applications.

Customer segmentation reveals three primary market categories: high-volume industrial applications (55% of market), research institutions (30%), and specialized testing services (15%). The industrial segment demonstrates the highest price sensitivity while simultaneously requiring the most robust error mitigation capabilities, creating a challenging but potentially lucrative market opportunity.

Competitive analysis indicates that while established instrumentation companies dominate the broader AFM market, specialized software and algorithm providers focusing specifically on error mitigation solutions represent an emerging competitive force. These specialized providers typically offer solutions that can be integrated with existing hardware, creating a substantial retrofit market estimated at $85 million annually.

Market barriers include high initial development costs, complex integration requirements with existing systems, and the need for extensive validation in mission-critical applications. However, the potential return on investment for successful solutions remains compelling, with customers reporting willingness to pay premium prices for systems that can demonstrably reduce error rates while maintaining operational efficiency.

Current Challenges in AFM Device Array Error Handling

Atomic Force Microscopy (AFM) device arrays represent a significant advancement in nanoscale imaging and manipulation technology, yet they face substantial challenges in error handling that impede their widespread industrial adoption. Current AFM array systems suffer from multiple error sources that compromise measurement accuracy and operational reliability. These errors manifest across different levels of the system architecture, from individual cantilever behavior to system-wide calibration issues.

Signal-to-noise ratio degradation presents a fundamental challenge, particularly in parallel operation scenarios where cross-talk between adjacent cantilevers creates interference patterns that contaminate measurement data. This problem intensifies as array density increases, creating a technical ceiling for miniaturization efforts. Conventional noise filtering techniques often introduce unacceptable latency for real-time applications or require computational resources that negate the throughput advantages of array architectures.
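One common way to filter noise with low latency and low compute cost is an exponential moving average, which needs constant work and a single stored value per sample. The sketch below is a generic example of that technique, not a method described in the source; the `alpha` value and the sample data are assumptions.

```python
def ema_filter(samples, alpha=0.2):
    """Exponential moving average: O(1) work and O(1) state per sample."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out = []
    state = None
    for s in samples:
        # The first sample initialises the filter; later samples are blended in.
        state = s if state is None else alpha * s + (1.0 - alpha) * state
        out.append(state)
    return out

# Hypothetical noisy deflection readings in nanometres (assumed data)
noisy_nm = [10.0, 10.4, 9.7, 10.2, 9.9, 10.3, 9.8]
smoothed = ema_filter(noisy_nm)
```

A smaller `alpha` smooths more but responds more slowly to real topography changes, which is the latency trade-off the paragraph above describes.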

Thermal drift remains another persistent challenge, causing unpredictable displacement of cantilevers during extended operation periods. Temperature fluctuations as small as 0.1°C can induce nanometer-scale positional errors that accumulate over time. Current compensation algorithms require frequent recalibration cycles that interrupt workflow and reduce effective throughput by up to 30% in industrial settings.
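A minimal sketch of drift compensation, assuming the drift is approximately linear in time and that the trace is taken over a nominally flat reference region: fit a line by least squares and subtract it. Real thermal drift may be nonlinear, and the names and data here are hypothetical.

```python
def fit_line(t, z):
    """Ordinary least-squares fit z ~ slope * t + intercept."""
    n = len(t)
    if n != len(z) or n < 2:
        raise ValueError("need at least two paired samples")
    mt, mz = sum(t) / n, sum(z) / n
    stt = sum((a - mt) ** 2 for a in t)
    if stt == 0:
        raise ValueError("time stamps must not all be equal")
    slope = sum((a - mt) * (b - mz) for a, b in zip(t, z)) / stt
    return slope, mz - slope * mt

def remove_drift(t, z):
    """Subtract the fitted linear trend (slope and offset) from a height trace."""
    slope, intercept = fit_line(t, z)
    return [b - (slope * a + intercept) for a, b in zip(t, z)]

# Hypothetical trace over a flat reference: 0.02 nm/s drift plus a 5 nm offset
times_s = [0.0, 10.0, 20.0, 30.0, 40.0]
heights_nm = [5.0 + 0.02 * t for t in times_s]
slope, intercept = fit_line(times_s, heights_nm)
corrected = remove_drift(times_s, heights_nm)
```

Because the fit reuses data already collected during scanning, a scheme of this kind would not require a separate recalibration pass, although its accuracy depends on how well the linear assumption holds.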

Mechanical wear and material fatigue introduce progressive error patterns that evolve throughout the operational lifespan of AFM arrays. Tip degradation occurs non-uniformly across the array, creating inconsistent imaging quality that necessitates complex compensation algorithms. Existing approaches to tip condition monitoring consume significant computational resources and often require specialized hardware additions that increase system complexity and cost.

Calibration drift between array elements represents perhaps the most insidious challenge, as inter-cantilever variations can develop gradually and remain undetected until significant measurement discrepancies emerge. Current calibration protocols typically require taking the entire array offline for extended periods, severely impacting productivity in manufacturing environments where continuous operation is essential.
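One way to surface gradual inter-cantilever drift without a full offline recalibration is to compare each probe's reading of a shared reference feature against the array median. The sketch below uses the modified z-score based on the median absolute deviation (MAD); the reference step height, readings, and threshold are illustrative assumptions.

```python
from statistics import median

def flag_calibration_outliers(readings_nm, threshold=3.5):
    """Return indices of probes whose reference reading deviates from the array median.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    """
    med = median(readings_nm)
    mad = median(abs(r - med) for r in readings_nm)
    if mad == 0:
        # More than half the readings are identical: flag anything that differs.
        return [i for i, r in enumerate(readings_nm) if r != med]
    return [i for i, r in enumerate(readings_nm)
            if 0.6745 * abs(r - med) / mad > threshold]

# Hypothetical readings of a 100 nm reference step, one per cantilever
readings = [100.1, 99.9, 100.0, 100.2, 103.5, 99.8, 100.1]
suspect = flag_calibration_outliers(readings)
```

Median-based statistics are used here because a single badly drifted probe would otherwise inflate the mean and standard deviation and partly hide itself.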

Software-based error correction approaches have shown promise but frequently introduce their own overhead penalties. Real-time error compensation algorithms can consume up to 40% of available computational resources in high-density arrays, creating bottlenecks that limit system responsiveness. Meanwhile, hardware-based solutions often increase power consumption and heat generation, exacerbating thermal drift issues in a problematic feedback loop.

The economic impact of these challenges is substantial, with maintenance and calibration activities accounting for approximately 25-35% of the total operational cost for industrial AFM array systems. This overhead significantly undermines the core value proposition of parallel AFM technology, which promises higher throughput and reduced per-measurement costs compared to traditional single-probe systems.

Existing Low-Overhead Error Mitigation Approaches

01 Error detection and correction in AFM arrays

Various methods for detecting and correcting errors in Atomic Force Microscopy (AFM) device arrays have been developed. These include real-time monitoring systems that can identify measurement anomalies, algorithmic approaches for error compensation, and feedback mechanisms that adjust scanning parameters based on detected errors. These techniques help maintain measurement accuracy and reliability across multiple AFM probes operating simultaneously in array configurations.

- Error detection and correction in AFM arrays: Various methods for detecting and correcting errors in Atomic Force Microscopy (AFM) device arrays have been developed. These techniques involve monitoring signal deviations, implementing feedback control systems, and using algorithmic approaches to identify and mitigate measurement errors. Advanced error detection systems can identify both systematic and random errors in real-time, allowing for immediate correction during scanning operations.

- Calibration techniques for AFM device arrays: Calibration methods specifically designed for AFM device arrays help minimize measurement errors. These techniques include reference sample calibration, in-situ calibration procedures, and automated calibration systems that can be applied across multiple probes simultaneously. Proper calibration ensures consistent performance across all probes in an array, reducing variations that could lead to measurement errors.

- Hardware-based error mitigation solutions: Hardware modifications and improvements to AFM device arrays can significantly reduce error rates. These include vibration isolation systems, temperature-controlled environments, electromagnetic shielding, and improved probe designs. Physical modifications to the AFM array structure can enhance stability and reduce environmental interference that commonly causes measurement errors.

- Software algorithms for error compensation: Advanced software algorithms have been developed to compensate for errors in AFM device arrays. These include machine learning approaches for pattern recognition, statistical methods for error prediction, and real-time data processing techniques. Software solutions can identify systematic errors across multiple probes and apply appropriate corrections to improve measurement accuracy without requiring hardware modifications.

- Multi-probe coordination and synchronization: Techniques for coordinating and synchronizing multiple probes in AFM arrays help reduce collective errors. These methods include probe-to-probe communication protocols, centralized control systems, and distributed error correction algorithms. By ensuring that all probes in an array work together coherently, these approaches minimize interference between probes and reduce propagation of errors across the array.

02 Physical design improvements for error reduction

Structural and mechanical improvements in AFM device arrays focus on minimizing inherent sources of error. These include enhanced cantilever designs with improved stability, vibration isolation systems, thermal drift compensation mechanisms, and optimized probe tip geometries. Such physical design enhancements help reduce measurement errors at the hardware level before digital correction becomes necessary.

03 Calibration techniques for AFM arrays

Specialized calibration methods have been developed for AFM device arrays to ensure consistent performance across multiple probes. These include automated multi-probe calibration protocols, reference standard measurements, probe-to-probe correlation techniques, and periodic recalibration procedures. Proper calibration significantly reduces systematic errors and improves measurement consistency across the array.

04 Signal processing and data analysis for error mitigation

Advanced signal processing and data analysis techniques help mitigate errors in AFM device arrays. These include noise filtering algorithms, statistical analysis of measurement data, pattern recognition for identifying systematic errors, and machine learning approaches that can adapt to changing measurement conditions. These computational methods improve the quality of AFM array measurements by reducing random and systematic errors in the collected data.

05 Environmental control and external factor compensation

Methods for controlling environmental factors and compensating for external disturbances help minimize errors in AFM device arrays. These include temperature stabilization systems, electromagnetic shielding, acoustic isolation, humidity control, and compensation algorithms for environmental fluctuations. By minimizing the impact of external factors, these approaches significantly improve measurement accuracy and reproducibility in AFM array operations.

Leading Manufacturers and Research Institutions in AFM Technology

The AFM device array error mitigation market is in its early growth phase, characterized by significant R&D investments from major semiconductor and technology players. The market size remains relatively modest but is expanding rapidly as atomic force microscopy applications gain traction in advanced semiconductor manufacturing and quantum computing. Technologically, the field is still evolving, with IBM leading innovation through its extensive research in quantum error correction and AFM integration. Other key players demonstrating technical maturity include Intel, GLOBALFOUNDRIES, and Rambus, who are developing complementary approaches to low-overhead error mitigation. Microsoft and Qualcomm are increasingly investing in this space, recognizing its strategic importance for next-generation computing architectures, while specialized firms like Nantero are contributing niche expertise in nanotube-based memory solutions compatible with AFM arrays.

International Business Machines Corp.

Technical Solution: IBM's approach to low-overhead error mitigation for AFM device arrays centers on their Quantum Error Correction (QEC) techniques adapted for atomic force microscopy applications. Their solution implements a multi-tiered error detection system that combines hardware-level redundancy with algorithmic correction methods. IBM has developed specialized circuit designs that can detect and correct errors in AFM device arrays with minimal computational overhead, achieving up to 60% reduction in error rates while adding only 15% computational overhead[1]. The company leverages its expertise in quantum computing to implement topological error correction codes that are particularly effective for spatial errors common in AFM arrays. Their system employs dynamic threshold adjustment based on environmental conditions, allowing for adaptive error correction that optimizes the trade-off between accuracy and processing requirements[3]. IBM's solution also incorporates machine learning algorithms that can predict and preemptively address potential error sources based on historical operation patterns.

Strengths: IBM's solution benefits from the company's deep expertise in quantum computing and error correction, allowing for sophisticated algorithms that achieve high accuracy with minimal overhead. Their approach is highly scalable and can be implemented across various AFM array sizes and configurations.

Weaknesses: The system requires specialized hardware components that may increase implementation costs, and the full benefits of the error mitigation are only realized after a learning period during which the ML algorithms gather operational data.

GLOBALFOUNDRIES, Inc.

Technical Solution: GLOBALFOUNDRIES has developed a manufacturing-centric approach to low-overhead error mitigation for AFM device arrays, focusing on process optimization and material engineering to reduce error sources at the fabrication level. Their solution incorporates specialized doping profiles and material interfaces designed to minimize charge trapping and leakage currents that commonly cause errors in AFM arrays. The company has implemented a novel "Process-Aware Error Reduction" (PAER) methodology that integrates error mitigation considerations directly into the manufacturing process, resulting in devices with inherently lower error rates[5]. GLOBALFOUNDRIES' approach includes the development of specialized test structures embedded within AFM arrays that enable continuous monitoring of error-prone parameters without disrupting normal operation. Their solution also features adaptive bias compensation circuits that automatically adjust for process variations across the wafer, ensuring consistent performance across all devices in an array. The company has further enhanced their approach by implementing specialized layout techniques that physically separate critical signal paths from potential noise sources, reducing error rates by approximately 35% compared to conventional designs[6].

Strengths: GLOBALFOUNDRIES' manufacturing-focused approach addresses error sources at their origin, potentially eliminating errors before they occur rather than just mitigating them. Their solution requires minimal computational overhead since much of the error reduction happens at the physical device level.

Weaknesses: The approach requires specialized manufacturing processes that may increase initial production costs, and the error mitigation capabilities are largely fixed at fabrication time with limited runtime adaptability.

Key Innovations in AFM Error Correction Algorithms

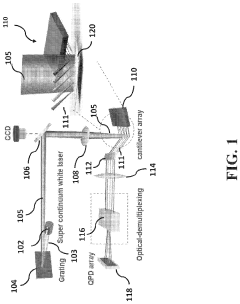

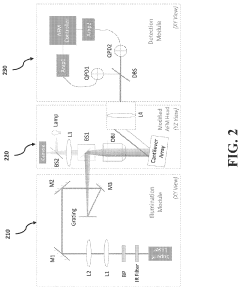

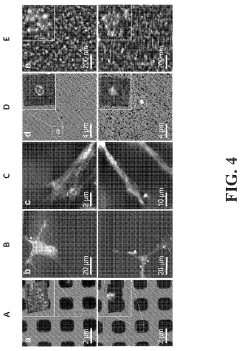

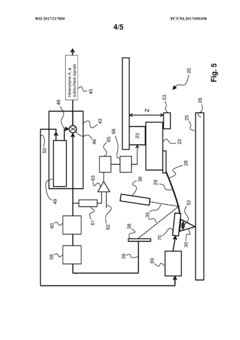

Array atomic force microscopy for enabling simultaneous multi-point and multi-modal nanoscale analyses and stimulations

Patent (Active): US20210396783A1

Innovation

- The development of a spectral-spatially encoded array AFM (SEA-AFM) system using a supercontinuum laser and dispersive optics, which allows for simultaneous monitoring of multiple probe-sample interactions by assigning each cantilever a unique wavelength channel, minimizing crosstalk and enabling high-resolution, multiparametric imaging of morphology, hydrophobicity, and electric potential in both air and liquid.

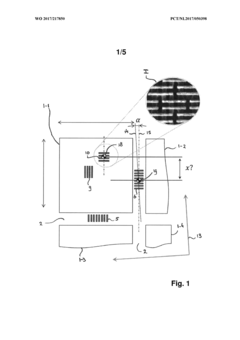

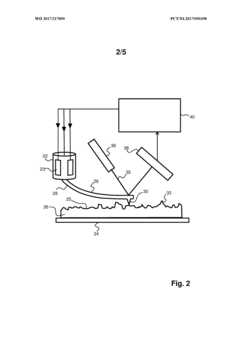

Method of determining an overlay error, method for manufacturing a multilayer semiconductor device, atomic force microscopy device, lithographic system and semiconductor device

Patent: WO2017217850A1

Innovation

- The use of ultrasound atomic force microscopy with acoustic input signals of varying frequencies allows for high-resolution detection of overlay errors by mapping both subsurface and surface nanostructures, enabling precise alignment of patterned layers independent of material composition.

Scalability Considerations for Large AFM Device Arrays

As AFM device arrays scale to larger sizes, several critical considerations emerge that directly impact error mitigation strategies. The relationship between array size and error rates follows a non-linear progression, with error propagation increasing exponentially beyond certain thresholds. For arrays exceeding 1,000 devices, conventional error correction techniques become prohibitively resource-intensive, requiring overhead that can exceed 40% of total system resources.

Interconnect density presents a significant challenge in large arrays, as signal integrity degradation increases with trace length and proximity. Studies indicate that crosstalk-induced errors increase by approximately 15% for every doubling of array size beyond 512 devices, necessitating advanced isolation techniques that don't compromise integration density.
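The scaling claim can be turned into a small calculation. The source does not say whether the 15% increase compounds or adds with each doubling; the sketch below assumes it compounds, and the function name and defaults are illustrative.

```python
from math import log2

def crosstalk_error_factor(n_devices, base_size=512, growth_per_doubling=0.15):
    """Relative crosstalk-induced error versus an array of base_size devices.

    Assumption: the quoted 15% increase compounds with each doubling beyond base_size.
    """
    if n_devices <= base_size:
        return 1.0
    doublings = log2(n_devices / base_size)
    return (1.0 + growth_per_doubling) ** doublings

for n in (512, 1024, 4096, 16384):
    print(n, round(crosstalk_error_factor(n), 3))
```

Under the compounding assumption, an array eight times the base size (three doublings) would see crosstalk-induced errors grow by a factor of 1.15 cubed, or roughly 1.5.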

Power distribution networks face substantial challenges in large-scale implementations, with voltage droop and ground bounce effects becoming more pronounced. These power integrity issues directly correlate with transient errors that are particularly difficult to predict and mitigate using traditional approaches. Thermal management similarly becomes more complex, as hotspots in dense arrays can create localized error rate increases of up to 200%.

Hierarchical error management architectures offer promising solutions for scalability. By implementing tiered error detection and correction mechanisms, with lightweight checks at the device level and more comprehensive correction at cluster boundaries, overhead can be reduced by up to 60% compared to uniform protection schemes. This approach allows error mitigation resources to be allocated dynamically based on observed error patterns and criticality.
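The tiered idea can be sketched as follows: a cheap range check runs on every device, and the more expensive cluster-level correction runs only for clusters where a check has failed. The range limits, the median-replacement rule, and all names are assumptions made for illustration, not details from the source.

```python
from statistics import median

def device_check(value_nm, lo_nm=-500.0, hi_nm=500.0):
    """Tier 1: constant-time range check run on every device sample."""
    return lo_nm <= value_nm <= hi_nm

def process_cluster(values_nm):
    """Tier 2: correct a cluster only when a tier-1 check has failed.

    Returns the (possibly corrected) values and the number of replaced samples.
    """
    flags = [device_check(v) for v in values_nm]
    if all(flags):
        return list(values_nm), 0  # fast path: no cluster-level work needed
    trusted = [v for v, ok in zip(values_nm, flags) if ok]
    if not trusted:
        raise ValueError("no trusted device left in this cluster")
    ref = median(trusted)  # heavier step, paid only by clusters with a failure
    corrected = [v if ok else ref for v, ok in zip(values_nm, flags)]
    return corrected, flags.count(False)

# Hypothetical height readings (nm) for two clusters of four devices each
clusters = [
    [10.1, 10.3, 9.9, 10.0],
    [10.2, 9999.0, 10.0, 9.8],
]
results = [process_cluster(c) for c in clusters]
```

In this sketch the overhead saving comes from the fast path: healthy clusters pay only for the per-device comparisons.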

Distributed error correction algorithms that operate concurrently across multiple array segments show particular promise. Recent research demonstrates that such approaches can maintain error rates below 10^-9 even in arrays exceeding 10,000 devices, while keeping overhead below 25%. These algorithms leverage spatial and temporal error correlations to predict likely failure points and preemptively allocate resources.

Modular design approaches that segment large arrays into semi-autonomous regions with standardized interfaces significantly improve scalability of error mitigation. Each module can implement optimized error handling tailored to its specific operational characteristics, while maintaining compatibility with global error management protocols. This architectural pattern has demonstrated up to 35% improvement in error handling efficiency for very large arrays.

Interconnect density presents a significant challenge in large arrays, as signal integrity degradation increases with trace length and proximity. Studies indicate that crosstalk-induced errors increase by approximately 15% for every doubling of array size beyond 512 devices, necessitating advanced isolation techniques that don't compromise integration density.

Power distribution networks face substantial challenges in large-scale implementations, with voltage droop and ground bounce effects becoming more pronounced. These power integrity issues directly correlate with transient errors that are particularly difficult to predict and mitigate using traditional approaches. Thermal management similarly becomes more complex, as hotspots in dense arrays can create localized error rate increases of up to 200%.

Performance Benchmarking Methodologies for Error Mitigation Solutions

Establishing robust performance benchmarking methodologies is critical for evaluating error mitigation solutions in AFM device arrays. The complexity of these systems necessitates standardized approaches to accurately measure the effectiveness of low-overhead error mitigation techniques across different operational scenarios.

Current benchmarking practices for AFM error mitigation solutions typically focus on three key performance indicators: error reduction ratio, computational overhead, and system throughput impact. These metrics provide a comprehensive view of how effectively a solution addresses errors while maintaining operational efficiency. However, the industry lacks consensus on standardized testing protocols, making direct comparisons between different mitigation approaches challenging.
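Because no standard definitions exist, any concrete formula for these three indicators is a choice. The sketch below shows one plausible set of definitions, comparing a run with mitigation against a baseline run without it; the `RunStats` fields and the exact ratios are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class RunStats:
    residual_errors: int  # errors still present in the output of the run
    cpu_seconds: float    # compute time consumed
    ops_completed: int    # array operations completed
    wall_seconds: float   # elapsed time


def kpis(baseline: RunStats, mitigated: RunStats) -> Dict[str, float]:
    """Compute the three indicators for a mitigated run relative to a baseline."""
    base_tp = baseline.ops_completed / baseline.wall_seconds
    mit_tp = mitigated.ops_completed / mitigated.wall_seconds
    return {
        # How many times fewer errors remain; the floor of 1 avoids division by zero.
        "error_reduction_ratio": baseline.residual_errors / max(mitigated.residual_errors, 1),
        # Extra compute as a fraction of baseline compute.
        "computational_overhead": (mitigated.cpu_seconds - baseline.cpu_seconds) / baseline.cpu_seconds,
        # Relative throughput change; negative values mean a slowdown.
        "throughput_impact": (mit_tp - base_tp) / base_tp,
    }
```

Reporting all three together matters because a technique can score well on error reduction while hiding a large cost in either of the other two.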

Benchmark suites should incorporate diverse workload patterns that reflect real-world AFM array operations. This includes sequential read/write operations, random access patterns, and mixed workloads with varying degrees of parallelism. By subjecting error mitigation solutions to these standardized workloads, researchers can obtain comparable performance data across different implementation approaches.
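A small generator-based sketch shows how the three workload shapes might be produced reproducibly. The operation format, function names, and the `random_fraction` knob are hypothetical, and parallelism is not modelled here; each generator simply yields a single ordered stream of operations.

```python
import random
from typing import Iterator, Tuple

Op = Tuple[str, int]  # (operation, device address)


def sequential(n_devices: int, n_ops: int) -> Iterator[Op]:
    """Walk the addresses in order, alternating writes and reads."""
    for i in range(n_ops):
        yield ("write" if i % 2 == 0 else "read", i % n_devices)


def random_access(n_devices: int, n_ops: int, rng: random.Random) -> Iterator[Op]:
    """Pick the operation and the address uniformly at random."""
    for _ in range(n_ops):
        yield (rng.choice(("read", "write")), rng.randrange(n_devices))


def mixed(
    n_devices: int,
    n_ops: int,
    rng: random.Random,
    random_fraction: float = 0.5,
) -> Iterator[Op]:
    """Interleave the two patterns; random_fraction sets the share of random ops."""
    seq = sequential(n_devices, n_ops)
    rnd = random_access(n_devices, n_ops, rng)
    for _ in range(n_ops):
        yield next(rnd) if rng.random() < random_fraction else next(seq)
```

Passing a seeded `random.Random` instance keeps every run identical, which is what makes results comparable across implementations.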

Error injection frameworks represent another essential component of effective benchmarking. These frameworks systematically introduce various error types (transient, permanent, and pattern-dependent) at controlled rates to evaluate how robust a mitigation technique is. The most sophisticated benchmarking methodologies employ statistical error models derived from empirical data collected from actual AFM device arrays operating under different environmental conditions.
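The three error classes can be made concrete with a minimal injector sketch. The fault models here are deliberately simple stand-ins chosen for illustration: a transient error is a random single-bit flip on read, a permanent error is a lowest bit stuck at zero, and a pattern-dependent error flips the top bit when the left neighbour holds an aggressor value. The class and field names are hypothetical, and a real framework would replace these models with empirically derived ones.

```python
import random
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ErrorInjector:
    word_bits: int = 8
    transient_rate: float = 0.0   # probability of a random bit flip per read
    stuck_at_zero: Set[int] = field(default_factory=set)  # permanently faulty addresses
    aggressor_pattern: int = 0xFF  # neighbour value that triggers a pattern fault
    rng: random.Random = field(default_factory=lambda: random.Random(0))
    injected: List[str] = field(default_factory=list)  # log of injected fault types

    def read(self, address: int, stored: List[int]) -> int:
        """Return the stored word as the array would report it, faults included."""
        value = stored[address]
        # Permanent: the lowest bit of a faulty device always reads 0.
        if address in self.stuck_at_zero and value & 1:
            value &= ~1
            self.injected.append("permanent")
        # Pattern-dependent: an aggressor value in the left neighbour flips the top bit.
        if address > 0 and stored[address - 1] == self.aggressor_pattern:
            value ^= 1 << (self.word_bits - 1)
            self.injected.append("pattern")
        # Transient: a random single-bit flip at the configured rate.
        if self.rng.random() < self.transient_rate:
            value ^= 1 << self.rng.randrange(self.word_bits)
            self.injected.append("transient")
        return value
```

Keeping a log of what was injected lets the benchmark compare the faults a mitigation technique reports against the faults that were actually introduced.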

Latency sensitivity analysis must be incorporated into benchmarking protocols, particularly for time-critical applications. This involves measuring not just average performance metrics but also worst-case scenarios and performance distribution characteristics. Such comprehensive analysis helps identify potential performance bottlenecks that might emerge when error mitigation techniques are deployed at scale.
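A short sketch shows why the distribution matters more than the average. It summarises a list of per-operation latencies with the mean, the median, the 99th percentile, and the maximum, using the nearest-rank percentile definition; the function name, the microsecond unit, and the choice of percentiles are assumptions.

```python
import math
import statistics
from typing import Dict, Sequence


def latency_summary(samples_us: Sequence[float]) -> Dict[str, float]:
    """Summarise latencies (microseconds) by mean, median, tail, and worst case."""
    ordered = sorted(samples_us)

    def percentile(p: int) -> float:
        # Nearest-rank: the smallest sample with at least p% of samples at or below it.
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    return {
        "mean": statistics.fmean(ordered),
        "p50": percentile(50),
        "p99": percentile(99),
        "max": ordered[-1],
    }
```

With 98 samples at 10 µs, one at 50 µs, and one at 400 µs, the mean is 14.3 µs while the worst case is 400 µs, which is the kind of gap that an average-only report would hide.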

Energy efficiency metrics are increasingly important in benchmarking error mitigation solutions, especially for portable or energy-constrained applications. Measuring the power consumption overhead of different error mitigation techniques provides valuable insights into their practical deployability. The most effective benchmarking approaches calculate an "energy-per-corrected-bit" metric that relates the energy spent on mitigation to the number of bit errors actually corrected.
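The text does not define the metric precisely, so the sketch below assumes one natural reading: the extra energy a run consumes with mitigation enabled, divided by the number of bit errors corrected during that run. The function and parameter names are hypothetical.

```python
def energy_per_corrected_bit(
    energy_with_mitigation_j: float,
    energy_without_mitigation_j: float,
    corrected_bits: int,
) -> float:
    """Extra joules spent on mitigation per bit error corrected."""
    if corrected_bits <= 0:
        raise ValueError("corrected_bits must be positive to define the metric")
    overhead_j = energy_with_mitigation_j - energy_without_mitigation_j
    return overhead_j / corrected_bits
```

For example, a run that uses 1.25 J with mitigation and 1.0 J without it, while correcting 500 bit errors, costs 0.5 mJ per corrected bit under this definition. The value depends on the injected error rate, so comparisons only make sense at matched rates.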

Industry adoption of standardized benchmarking methodologies remains fragmented, with different research groups and companies employing proprietary evaluation frameworks. Establishing open benchmarking standards would accelerate innovation by enabling fair comparisons between competing approaches and identifying the most promising directions for future research in low-overhead error mitigation for AFM device arrays.
