AI-driven anomaly detection for early identification of contamination and culture failure in OoC labs

SEP 2, 2025 · 9 MIN READ

Organ-on-Chip Contamination Detection Background and Objectives

Organ-on-Chip (OoC) technology represents a revolutionary approach in biomedical research, offering miniaturized physiological models that replicate the functions of human organs. Since its emergence in the early 2000s, this technology has evolved from simple microfluidic systems to complex multi-organ platforms capable of mimicking intricate biological processes. The trajectory of OoC development has been characterized by increasing sophistication in tissue engineering, microfluidics, and sensor integration, enabling more accurate simulation of human physiological responses.

Despite significant advancements, contamination and culture failure remain persistent challenges in OoC laboratories, often leading to experimental inconsistencies, data corruption, and resource wastage. Traditional detection methods typically rely on visual inspection or endpoint analysis, which frequently identify issues only after substantial damage has occurred. This reactive approach significantly hampers research efficiency and reliability.

The integration of artificial intelligence into OoC monitoring systems presents a promising frontier for addressing these limitations. AI-driven anomaly detection offers the potential for real-time, automated identification of contamination and culture failure indicators before they manifest as visible problems. This proactive approach aligns with the broader trend toward intelligent laboratory systems and precision medicine.

Our technical objectives encompass developing robust AI algorithms capable of analyzing multimodal data streams from OoC platforms, including microscopy images, metabolic parameters, electrical signals, and environmental conditions. These algorithms must demonstrate high sensitivity to subtle deviations from normal culture conditions while maintaining specificity to avoid false alarms that could disrupt valuable experiments.
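The sensitivity and specificity requirement above can be made concrete with a small calculation. The following is a minimal sketch, assuming a labelled validation set of chips; the counts are hypothetical and chosen only for illustration.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts.

    tp: contaminated cultures correctly flagged
    fn: contaminated cultures missed
    tn: healthy cultures correctly left alone
    fp: healthy cultures flagged by mistake (false alarms)
    """
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


# Hypothetical validation run: 20 contaminated chips and 180 healthy chips.
sens, spec = sensitivity_specificity(tp=18, fn=2, tn=171, fp=9)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Because healthy cultures usually far outnumber contaminated ones, even a small drop in specificity can produce more false alarms than true detections, which is why both figures need to be tracked together.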

Furthermore, we aim to establish standardized protocols for data collection and preprocessing that accommodate the heterogeneity of OoC systems across different organ models and experimental designs. This standardization is essential for creating generalizable AI solutions that can be implemented across diverse laboratory settings.

The long-term vision extends beyond mere contamination detection to comprehensive predictive maintenance of OoC systems, where AI not only identifies potential failures but also recommends corrective actions and optimizes culture conditions to enhance experimental outcomes. This capability would represent a significant leap forward in laboratory automation and could substantially accelerate drug development pipelines by reducing experimental failures and improving reproducibility.

As OoC technology continues to gain traction in pharmaceutical research, toxicology testing, and personalized medicine, the development of reliable contamination detection systems becomes increasingly critical to realizing the full potential of these innovative platforms in revolutionizing biomedical research and drug development processes.

Market Analysis for AI-Enhanced OoC Quality Control Systems

The global market for AI-enhanced Organ-on-Chip (OoC) quality control systems is experiencing rapid growth, driven by increasing demand for more reliable drug development processes and reduced animal testing. Current market valuations indicate that the OoC market reached approximately 60 million USD in 2022 and is projected to grow at a CAGR of 39.2% through 2030, with the AI-enhanced quality control segment emerging as one of the fastest-growing components.
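To show what the quoted growth rate implies, the compound-growth arithmetic can be sketched as below. The function name is illustrative, and the result is only the mathematical consequence of the two figures quoted above (60 million USD in 2022, 39.2% CAGR), not a sourced forecast.

```python
def project_market(base_value: float, cagr: float, years: int) -> float:
    """Compound a base value forward: base * (1 + cagr) ** years."""
    return base_value * (1.0 + cagr) ** years


# Base: 60 million USD in 2022, compounded at 39.2% per year until 2030.
projected_2030 = project_market(60.0, 0.392, 2030 - 2022)
print(f"Implied 2030 market size: {projected_2030:.0f} million USD")
```

Under these inputs the implied 2030 figure is on the order of 0.85 billion USD, which illustrates how sensitive long-range projections are to the assumed growth rate.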

Pharmaceutical and biotechnology companies represent the largest market segment, accounting for nearly 65% of the current demand. These organizations are increasingly adopting AI-driven anomaly detection systems to minimize costly experimental failures and accelerate drug development timelines. Research institutions constitute the second-largest market segment at 25%, while contract research organizations make up the remaining 10%.

Geographically, North America dominates the market with approximately 45% share, followed by Europe (30%) and Asia-Pacific (20%). The Asia-Pacific region, particularly China and South Korea, is expected to witness the highest growth rate due to increasing investments in biotechnology research infrastructure and favorable regulatory environments for OoC technology adoption.

Key market drivers include the rising costs of drug development failures, with late-stage failures costing pharmaceutical companies billions annually. AI-enhanced quality control systems that detect contamination or culture failure early in the process offer significant cost-saving potential, with some early adopters reporting reductions in experimental failure rates of up to 35%.

Regulatory pressures are also accelerating market growth, as agencies like the FDA and EMA increasingly recognize OoC data in regulatory submissions. The FDA Modernization Act 2.0, which allows alternatives to animal testing to be used in support of drug applications, has created a favorable environment for OoC technology adoption.

Market challenges include high initial implementation costs, with comprehensive AI-OoC quality control systems typically requiring investments of between 100,000 and 500,000 USD depending on scale and complexity. Additionally, integration challenges with existing laboratory workflows and data management systems remain significant barriers to widespread adoption.

Customer surveys indicate that return on investment is the primary concern for potential adopters, with 78% of respondents citing cost-benefit analysis as their top consideration. Technical reliability (65%) and ease of integration with existing systems (58%) follow as critical decision factors.

Current Challenges in OoC Contamination Detection Technologies

Organ-on-Chip (OoC) laboratories face significant challenges in detecting contamination and culture failure using current technologies. Traditional contamination detection methods in OoC systems rely heavily on manual inspection and periodic sampling, which are time-consuming, labor-intensive, and often detect issues only after significant damage has occurred. These approaches lack the sensitivity required for early detection of subtle changes that precede full contamination events.

Microscopic examination, while valuable, requires trained personnel and cannot be performed continuously, creating detection gaps where contamination can proliferate unnoticed. Additionally, conventional image analysis software lacks the sophistication to distinguish between normal cellular variations and early signs of contamination, resulting in high false positive and negative rates.

Biochemical assays used for contamination detection typically require sample extraction, which disrupts the continuous operation of OoC systems and may compromise experimental integrity. These assays also consume valuable cell culture material and reagents, adding to operational costs and reducing experimental throughput. Furthermore, most biochemical detection methods have significant latency between sampling and results, delaying critical interventions.

Current sensor technologies integrated into OoC platforms suffer from limited specificity and sensitivity. pH sensors, oxygen sensors, and temperature monitors can indicate gross deviations but often fail to detect subtle metabolic shifts that precede contamination events. The miniaturized nature of OoC systems also presents unique challenges for sensor integration without disrupting microfluidic flows or cellular microenvironments.
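One way to see why fixed-threshold alarms miss subtle shifts is to compare them with a cumulative-sum (CUSUM) detector, which accumulates small but persistent deviations. The sketch below is illustrative only: the pH trace, target, slack, and threshold values are hypothetical and would need tuning for a real platform.

```python
def cusum_alarm_index(readings, target, slack, threshold):
    """One-sided (downward) CUSUM.

    Returns the index of the first reading at which the accumulated
    downward deviation exceeds `threshold`, or None if it never does.
    """
    s = 0.0
    for i, x in enumerate(readings):
        # Accumulate only drops below target that exceed the slack allowance;
        # the sum resets toward zero whenever readings return to normal.
        s = max(0.0, s + (target - x) - slack)
        if s > threshold:
            return i
    return None


# Hypothetical pH trace: a slow acidification that never crosses a gross
# alarm limit such as pH < 7.30, yet drifts steadily away from 7.40.
ph = [7.40, 7.41, 7.39, 7.40, 7.37, 7.36, 7.35, 7.34, 7.33, 7.32]
idx = cusum_alarm_index(ph, target=7.40, slack=0.01, threshold=0.10)
print("first alarm at sample", idx)
```

In this trace the detector raises an alarm at sample 7, while every individual reading still sits above the gross limit of 7.30.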

Data management presents another significant hurdle, as most OoC labs lack integrated systems for continuous data collection and real-time analysis. The volume and variety of data generated (images, sensor readings, flow rates) are typically stored in disparate systems without coherent integration, hampering comprehensive analysis and pattern recognition that could reveal early contamination signatures.

Standardization remains problematic across the field, with different laboratories employing varied protocols for contamination detection and reporting. This lack of standardization impedes comparative analysis and the development of universal detection algorithms. The absence of shared databases of contamination events further limits the training data available for developing robust AI detection systems.

Cost considerations also constrain implementation of advanced detection technologies in many research settings. High-resolution imaging systems, specialized sensors, and computing infrastructure required for real-time analysis represent significant investments that many laboratories cannot justify, particularly for early-stage research applications.

Existing AI Solutions for Early Contamination Identification

01 Machine learning algorithms for anomaly detection

Advanced machine learning algorithms can be employed for detecting anomalies in various systems. These algorithms analyze patterns in data to identify deviations that may indicate potential issues. By leveraging techniques such as supervised and unsupervised learning, these systems can establish baseline behaviors and flag activities that deviate significantly from expected patterns, enabling early identification of anomalies before they escalate into critical problems.

- AI-based anomaly detection systems for early identification: Artificial intelligence algorithms can be employed to detect anomalies in various systems by analyzing patterns and identifying deviations from normal behavior. These AI-driven systems can process large volumes of data in real-time, enabling early identification of potential issues before they escalate into serious problems. The technology utilizes machine learning models that continuously improve their detection capabilities through training on historical data.

- Predictive analytics for anomaly forecasting: Predictive analytics combines statistical algorithms and machine learning techniques to analyze current and historical data to make predictions about future events. In the context of anomaly detection, these systems can forecast potential anomalies before they occur by identifying subtle patterns that precede abnormal events. This approach enables organizations to implement preventive measures rather than reactive responses to anomalies.

- Real-time monitoring systems with AI integration: Real-time monitoring systems enhanced with artificial intelligence capabilities can continuously observe system behaviors and immediately flag deviations. These systems process streaming data from multiple sources and apply advanced algorithms to detect anomalies as they emerge. The integration of AI enables these systems to adapt to changing conditions and reduce false positives by understanding contextual information.

- Multi-dimensional anomaly detection frameworks: Multi-dimensional frameworks analyze data across various parameters simultaneously to identify complex anomalies that might not be apparent in single-dimension analysis. These systems can correlate seemingly unrelated variables to detect subtle patterns indicating potential issues. By examining multiple dimensions of data, these frameworks provide a more comprehensive understanding of system behavior and can identify anomalies with greater accuracy.

- Automated response mechanisms for detected anomalies: Automated response systems can be triggered once an anomaly is detected, implementing predefined actions to mitigate potential damage. These systems can isolate affected components, initiate backup procedures, or alert relevant personnel based on the nature and severity of the detected anomaly. The automation reduces response time and minimizes human error in critical situations, allowing for more effective management of anomalies.
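The "establish a baseline, then flag deviations" pattern described in this section can be sketched with a robust z-score based on the median and the median absolute deviation (MAD). This is a simple statistical stand-in for the machine learning models mentioned above, not a description of any vendor's method; the lactate values and the cutoff of 3.5 are assumptions for illustration.

```python
import statistics


def fit_robust_baseline(values):
    """Summarise normal behaviour as (median, MAD)."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    return median, mad


def robust_z(value, median, mad):
    # 1.4826 rescales the MAD so it is comparable to a standard deviation
    # when the baseline data are roughly normally distributed.
    return (value - median) / (1.4826 * mad) if mad else 0.0


def flag_anomalies(baseline, new_values, cutoff=3.5):
    """Return the new values whose robust z-score exceeds the cutoff."""
    median, mad = fit_robust_baseline(baseline)
    return [v for v in new_values if abs(robust_z(v, median, mad)) > cutoff]


# Hypothetical lactate readings (mmol/L) from cultures known to be healthy.
baseline_lactate = [1.9, 2.0, 2.1, 2.0, 1.8, 2.2, 2.0, 2.1, 1.9, 2.0]
flagged = flag_anomalies(baseline_lactate, [2.1, 2.9, 1.9])
print(flagged)
```

Median and MAD are used instead of mean and standard deviation so that a few unnoticed bad readings in the baseline do not widen the normal range.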

02 Real-time monitoring and alert systems

Real-time monitoring systems continuously analyze data streams to detect anomalies as they occur. These systems implement AI-driven algorithms that process incoming data and compare it against established baselines or historical patterns. When deviations are detected, automated alert mechanisms notify relevant personnel, allowing for immediate response to potential issues. This approach significantly reduces the time between anomaly occurrence and detection, minimizing potential damage or disruption.

03 Predictive analytics for early anomaly identification

Predictive analytics combines historical data analysis with AI algorithms to forecast potential anomalies before they manifest. By identifying patterns that historically preceded anomalous events, these systems can provide early warnings about developing issues. This approach enables proactive intervention rather than reactive response, allowing organizations to address potential problems during their formative stages when remediation is typically less costly and disruptive.

04 Multi-dimensional data analysis for complex anomaly detection

Complex systems often require multi-dimensional data analysis to effectively identify anomalies. AI-driven solutions can simultaneously analyze multiple parameters and their interrelationships to detect subtle anomalies that might be missed by simpler approaches. These systems can identify correlations between seemingly unrelated variables and recognize compound anomalies that manifest across different aspects of a system, providing a more comprehensive approach to early identification of potential issues.

05 Adaptive learning systems for evolving anomaly detection

Adaptive learning systems continuously refine their anomaly detection capabilities based on new data and feedback. These AI-driven systems evolve over time, improving their accuracy and reducing false positives as they process more information. By incorporating feedback loops and continuous learning mechanisms, these systems can adapt to changing conditions and emerging patterns, ensuring that anomaly detection remains effective even as the underlying systems and potential threats evolve.
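A streaming detector with an adapting baseline can be sketched with exponentially weighted estimates of mean and variance. This is a minimal illustration of the real-time and adaptive ideas above, not a production design; the class name, the parameters `alpha`, `cutoff`, and `warmup`, and the oxygen readings are all assumptions.

```python
import math


class AdaptiveDetector:
    """Streaming detector whose baseline follows slow, legitimate drift."""

    def __init__(self, alpha=0.1, cutoff=4.0, warmup=5):
        self.alpha = alpha      # weight given to each new reading
        self.cutoff = cutoff    # alarm when |deviation| > cutoff * std
        self.warmup = warmup    # readings to observe before alarming
        self.mean = None
        self.var = 0.0
        self.seen = 0

    def update(self, x):
        """Return True if x looks anomalous; otherwise fold x into the baseline."""
        self.seen += 1
        if self.mean is None:
            self.mean = x
            return False
        deviation = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (
            self.seen > self.warmup
            and std > 0
            and abs(deviation) > self.cutoff * std
        )
        if not is_anomaly:
            # Only readings judged normal update the baseline, so a
            # contamination event does not get absorbed as "normal".
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_anomaly


# Hypothetical dissolved-oxygen readings (%), ending in an abrupt drop.
detector = AdaptiveDetector()
stream = [20.0, 20.1, 19.9, 20.0, 20.1, 19.9, 20.0, 20.1, 17.0]
flags = [detector.update(x) for x in stream]
print(flags)
```

Skipping the baseline update on flagged readings is one simple form of feedback; in practice an operator's confirmation or rejection of each alert could decide whether the reading is folded in.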

Leading Organizations in AI-OoC Contamination Detection Integration

The AI-driven anomaly detection market for organ-on-chip (OoC) laboratories is in its early growth phase, characterized by increasing adoption as contamination detection becomes critical for research integrity. The market is expanding rapidly with the global OoC market projected to reach $220 million by 2025. Technology maturity varies across players, with established companies like Siemens Healthcare Diagnostics and Canon developing sophisticated AI detection systems, while research institutions such as University of Oslo and German Cancer Research Center focus on algorithm development. Emerging players like Magnolia Medical Technologies are specializing in contamination detection solutions, while technology giants including NTT and Ping An Technology are leveraging their AI expertise to enter this niche market with advanced predictive analytics capabilities.

German Cancer Research Center (Deutsches Krebsforschungszentrum, DKFZ)

Technical Solution: The German Cancer Research Center has pioneered an AI-driven anomaly detection framework specifically designed for cancer-on-chip models. Their approach combines unsupervised learning techniques with domain-specific knowledge to identify early signs of culture contamination and failure in complex tumor microenvironment models. The system employs autoencoders trained on large datasets of healthy culture images and sensor readings to establish normal baseline patterns. Any deviation from these learned representations triggers alerts for potential anomalies. What distinguishes DKFZ's solution is its integration of biological context through knowledge graphs that connect observed anomalies with potential biological causes, helping researchers not just detect but understand the nature of contamination or failure events. The platform incorporates federated learning capabilities that allow multiple research institutions to contribute to model improvement while maintaining data privacy, creating an evolving system that continuously improves its detection capabilities as it processes more experimental data across the research network.

Strengths: The biological knowledge integration provides context-aware anomaly detection that helps researchers understand the root causes of detected issues. The federated learning approach enables continuous improvement without compromising sensitive research data. Weaknesses: The system's reliance on extensive knowledge graphs requires significant domain expertise to maintain and update as new biological insights emerge.
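The reconstruction-error idea behind autoencoder-based detection can be illustrated without a neural network. The sketch below swaps in a one-component linear projection (PCA fitted by power iteration) as a stand-in for a trained autoencoder; it is not DKFZ's implementation, and the two correlated features and their values are hypothetical.

```python
import math


def fit_principal_axis(rows, iters=200):
    """Learn the mean and dominant direction of healthy feature vectors."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    cov = [[sum(c[a] * c[b] for c in centered) / n for b in range(d)]
           for a in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        # Power iteration: repeatedly apply the covariance matrix and renormalise.
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    return mean, v


def reconstruction_error(x, mean, v):
    """Distance between x and its projection onto the learned axis."""
    c = [xj - mj for xj, mj in zip(x, mean)]
    score = sum(cj * vj for cj, vj in zip(c, v))   # "encode" to one number
    recon = [score * vj for vj in v]               # "decode" back to features
    return math.sqrt(sum((cj - rj) ** 2 for cj, rj in zip(c, recon)))


# Hypothetical healthy cultures: two sensor features that rise together.
healthy = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.0), (5.0, 10.1)]
mean, direction = fit_principal_axis(healthy)

err_normal = reconstruction_error((6.0, 12.0), mean, direction)
err_odd = reconstruction_error((3.0, 12.0), mean, direction)
print(f"normal-looking sample: {err_normal:.2f}, broken correlation: {err_odd:.2f}")
```

A sample that preserves the learned relationship reconstructs well even when its values are outside the training range, while a sample that breaks the relationship produces a large error. The same principle applies to autoencoders trained on images and sensor readings.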

Siemens Healthcare Diagnostics, Inc.

Technical Solution: Siemens Healthcare Diagnostics has developed an advanced AI-driven anomaly detection system specifically for OoC environments that integrates multimodal sensing technologies with deep learning algorithms. Their solution employs a combination of computer vision and biosensor data analysis to continuously monitor cell cultures in microfluidic devices. The system utilizes convolutional neural networks (CNNs) for image-based anomaly detection, analyzing microscopic images to identify morphological changes in cells that might indicate contamination or culture failure. This is complemented by recurrent neural networks (RNNs) that process time-series data from integrated biosensors monitoring parameters such as pH, oxygen levels, temperature, and metabolite concentrations. The platform features transfer learning capabilities that allow it to adapt to different cell types and experimental conditions with minimal retraining, significantly reducing the time required for implementation in new laboratory settings.

Strengths: Comprehensive integration of multiple data streams enables early detection of subtle anomalies before they become visible to human operators. The system's transfer learning capabilities allow for rapid deployment across different OoC applications with minimal customization. Weaknesses: The solution requires significant computational resources and specialized hardware integration with existing OoC platforms, potentially limiting accessibility for smaller research labs.

Critical Technologies for Real-Time OoC Monitoring

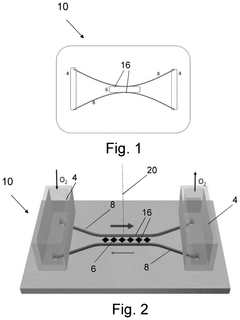

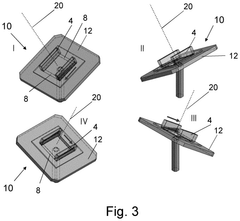

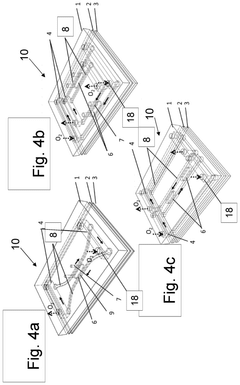

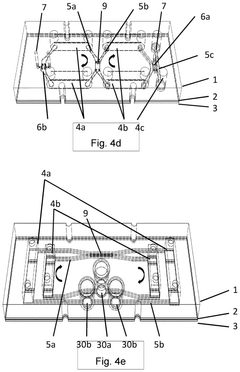

Cell culture device and movement system

Patent (pending): US20240368517A1

Innovation

- A cell culture device with a movement system that generates a one-way gravity-driven flow loop using tilted orientation, eliminating the need for pumps and allowing for selective transport of cell media and cell growth, simulating physiological flows and pressures through semipermeable barriers and controlled tilting angles.
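The driving pressure available from tilting can be estimated from basic hydrostatics, Δp = ρ·g·L·sin(θ). The sketch below is a back-of-the-envelope illustration rather than anything taken from the patent: the channel length, tilt angle, and the assumption that culture medium has roughly the density of water are all hypothetical.

```python
import math


def tilt_pressure_pa(channel_length_m, tilt_deg, density_kg_m3=1000.0, g=9.81):
    """Hydrostatic pressure difference (Pa) between the ends of a tilted channel."""
    # Height difference between the two ends of the channel.
    height_m = channel_length_m * math.sin(math.radians(tilt_deg))
    return density_kg_m3 * g * height_m


# Hypothetical 20 mm channel tilted by 30 degrees, medium treated as water.
dp = tilt_pressure_pa(0.02, 30)
print(f"driving pressure: {dp:.1f} Pa")
```

Because the pressure scales with sin(θ), changing the tilt angle gives direct control over the driving pressure without a pump.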

Regulatory Compliance for AI-Enabled OoC Quality Assurance

The integration of AI-driven anomaly detection systems in Organ-on-Chip (OoC) laboratories necessitates careful navigation of complex regulatory frameworks. These advanced quality assurance systems must comply with multiple overlapping regulations that govern both medical devices and artificial intelligence applications in healthcare settings.

FDA regulations, particularly 21 CFR Part 11 concerning electronic records and signatures, are directly applicable to AI-enabled OoC quality assurance systems. These regulations mandate comprehensive validation of software systems, audit trails for all data modifications, and secure user authentication protocols to ensure data integrity throughout the anomaly detection process.

In the European context, the Medical Device Regulation (MDR) and In Vitro Diagnostic Regulation (IVDR) classify AI-enabled OoC systems based on their risk profiles. Most contamination detection systems would likely fall under Class IIa or IIb, requiring thorough technical documentation and conformity assessment procedures before market approval.

The General Data Protection Regulation (GDPR) presents additional compliance challenges when AI systems process personal data, even in laboratory settings. This necessitates implementation of privacy-by-design principles, data minimization strategies, and robust data protection impact assessments for AI-driven anomaly detection systems.

Emerging AI-specific regulations, such as the EU AI Act, introduce new compliance requirements for high-risk AI applications in healthcare. These include mandatory risk management systems, human oversight mechanisms, and transparency requirements regarding the AI's decision-making processes when flagging potential contamination or culture failures.

International standards like ISO 13485 for medical device quality management systems and IEC 62304 for medical device software lifecycle processes provide essential frameworks for ensuring AI-enabled OoC quality assurance systems meet global compliance requirements. These standards emphasize risk management, verification, and validation throughout the development process.

Regulatory bodies increasingly require explainability in AI systems used for critical applications like contamination detection. This necessitates the development of interpretable AI models that can provide clear rationales for flagging anomalies, rather than functioning as "black boxes" that merely provide binary outputs without explanation.
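A simple form of such a rationale is to report which measurements drove an alert and by how much. The sketch below is illustrative only: it uses per-feature z-scores against a baseline, and the feature names, baseline statistics, and cutoff are assumptions rather than requirements from any regulation.

```python
def explain_flag(reading, baseline_mean, baseline_std, cutoff=3.0):
    """Return (is_anomaly, reasons).

    `reasons` lists (feature, z_score) pairs for every feature beyond the
    cutoff, largest deviation first, so an operator can see why the
    culture was flagged instead of receiving a bare yes/no.
    """
    z = {
        name: (reading[name] - baseline_mean[name]) / baseline_std[name]
        for name in reading
    }
    reasons = sorted(
        ((name, score) for name, score in z.items() if abs(score) > cutoff),
        key=lambda item: -abs(item[1]),
    )
    return bool(reasons), reasons


# Hypothetical baseline statistics and a suspicious reading.
baseline_mean = {"pH": 7.4, "O2_pct": 20.0, "temp_C": 37.0}
baseline_std = {"pH": 0.05, "O2_pct": 0.5, "temp_C": 0.2}
reading = {"pH": 7.1, "O2_pct": 17.5, "temp_C": 37.1}

flagged, reasons = explain_flag(reading, baseline_mean, baseline_std)
for name, score in reasons:
    print(f"{name}: {score:+.1f} standard deviations from baseline")
```

For deep models the same role is usually played by attribution methods, but the output contract is similar: an alert accompanied by ranked, human-readable contributing factors.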

Compliance strategies must include comprehensive documentation of training data sources, model validation procedures, and performance metrics specific to contamination detection. Regular audits and continuous monitoring systems are essential to maintain regulatory compliance as both AI technologies and regulatory frameworks continue to evolve in this rapidly developing field.

Data Security and Privacy in Laboratory Monitoring Systems

The implementation of AI-driven anomaly detection systems in Organ-on-Chip (OoC) laboratories necessitates robust data security and privacy frameworks. These systems continuously monitor sensitive biological data, patient information, and proprietary research protocols, creating significant security vulnerabilities if not properly protected. Laboratory monitoring systems must comply with stringent regulations such as HIPAA, GDPR, and industry-specific standards while maintaining data integrity.

Encryption is the first line of defense in these systems. End-to-end encryption keeps data protected in transit between sensors, central monitoring systems, and storage facilities. The Advanced Encryption Standard with 256-bit keys (AES-256) and secure key management protocols are essential for protecting both real-time monitoring data and historical records from unauthorized access or interception.
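One part of key management can be sketched with the Python standard library alone: deriving a separate 256-bit key for each sensor from a single master secret using HKDF (RFC 5869), so that one compromised device key does not expose the others. The salt string and sensor identifiers are hypothetical. The encryption step itself is not shown here and would need a vetted AES implementation.

```python
import hashlib
import hmac

HASH_LEN = 32  # SHA-256 output size in bytes

def hkdf_sha256(master_key: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with HMAC-SHA256 (RFC 5869): extract, then expand."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested key material is too long for HKDF")
    # Extract: condense the master key into a pseudorandom key.
    prk = hmac.new(salt, master_key, hashlib.sha256).digest()
    # Expand: chain HMAC blocks until enough output bytes exist.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def sensor_key(master_key: bytes, sensor_id: str) -> bytes:
    """Derive a 32-byte (256-bit) key bound to one sensor identifier."""
    # The salt below is a hypothetical, application-chosen constant.
    return hkdf_sha256(master_key, salt=b"ooc-monitoring-v1", info=sensor_id.encode())
```

In practice the master secret would live in a hardware security module or managed key service rather than in application code.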

Access control mechanisms must be implemented with granular permission structures that limit data visibility based on role-specific requirements. Multi-factor authentication, biometric verification, and regular credential rotation policies significantly reduce the risk of unauthorized access. These measures are particularly critical in collaborative research environments where multiple institutions may require varying levels of access to the monitoring system.
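A granular permission structure can be as simple as a mapping from roles to explicitly granted permissions, with everything else denied by default. The role and permission names below are hypothetical and only illustrate the idea.

```python
# Hypothetical roles and permissions for a shared monitoring system.
ROLE_PERMISSIONS = {
    "technician": {"read:alerts"},
    "researcher": {"read:alerts", "read:sensor_data"},
    "external_collaborator": {"read:alerts_summary"},
    "admin": {"read:alerts", "read:sensor_data", "manage:users", "read:audit_log"},
}

def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and ungranted permissions return False."""
    return permission in ROLE_PERMISSIONS.get(role, set())

def visible_fields(role: str, record: dict) -> dict:
    """Return only the parts of a record that the role may see."""
    if is_allowed(role, "read:sensor_data"):
        return dict(record)
    if is_allowed(role, "read:alerts"):
        return {k: record[k] for k in ("chip_id", "alert") if k in record}
    return {}
```

A technician calling `visible_fields` on a full record would receive the chip identifier and alert status but not the raw sensor values.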

Data anonymization and pseudonymization techniques should be employed whenever possible, especially when AI systems require large datasets for training and validation. Techniques such as differential privacy can allow meaningful pattern analysis while protecting individual sample identities and proprietary information. This balance is crucial for maintaining research integrity while safeguarding intellectual property.
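Both ideas can be illustrated briefly. The sketch below pseudonymizes sample identifiers with a keyed hash (HMAC-SHA256) and releases a count with Laplace noise, the basic mechanism behind differential privacy. The function names, the key, and the epsilon value are assumptions chosen for illustration.

```python
import hashlib
import hmac
import random

def pseudonymize(sample_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    The same ID and key always give the same pseudonym, so records can
    still be linked, but the mapping cannot be rebuilt without the key.
    """
    return hmac.new(secret_key, sample_id.encode(), hashlib.sha256).hexdigest()[:16]

def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise (a count query has sensitivity 1).

    A Laplace(0, b) sample equals the difference of two independent
    exponential samples, each with mean b.
    """
    scale = 1.0 / epsilon
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

# Hypothetical usage: how many chips showed a contamination flag this week.
rng = random.Random(0)
noisy = dp_count(100, epsilon=1.0, rng=rng)
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy.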

Audit trails and comprehensive logging systems must document all interactions with the monitoring data, creating accountability and enabling forensic analysis in case of security incidents. These systems should be tamper-evident and include mechanisms to detect unusual access patterns that might indicate security breaches or insider threats.
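One common way to make a log tamper-evident is a hash chain, in which each entry stores the hash of the previous one, so altering any earlier entry invalidates every later hash. The following is a minimal sketch under that assumption; the field names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log: list, actor: str, action: str, timestamp: str) -> None:
    """Append an entry that commits to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "timestamp": timestamp, "prev": prev}
    log.append({**body, "hash": _entry_hash(body)})

def verify_log(log: list) -> bool:
    """Recompute every hash and check each link to the previous entry."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: entry[k] for k in ("actor", "action", "timestamp", "prev")}
        if entry["prev"] != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, "user_a", "viewed chip-12 images", "2025-09-02T10:00:00Z")
append_entry(audit_log, "user_b", "exported sensor data", "2025-09-02T10:05:00Z")
```

A hash chain detects modification but does not prevent it. The most recent hash still needs to be stored somewhere an attacker cannot rewrite.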

Cloud security considerations are paramount as many laboratory monitoring systems leverage cloud infrastructure for data storage and AI processing. Secure API implementations, regular vulnerability assessments, and compliance with cloud security frameworks (such as CSA STAR) help mitigate risks associated with third-party service providers. Data residency requirements must also be addressed, particularly for international research collaborations.

Incident response protocols specific to laboratory environments should be established, including procedures for containing potential contamination events while preserving digital evidence. Regular security training for laboratory personnel must emphasize both physical and digital security practices, creating a holistic security culture that recognizes the interconnected nature of modern laboratory systems.
