Data Fusion Approaches Combining Multiple Sensor Modalities

AUG 28, 2025 · 9 MIN READ

Multi-Sensor Data Fusion Background and Objectives

Multi-sensor data fusion represents a technological paradigm that has evolved significantly over the past few decades, driven by advancements in sensor technologies, computational capabilities, and algorithmic innovations. The concept originated in military applications during the Cold War era, where combining data from multiple radar and sonar systems became crucial for accurate target tracking and identification. Since then, the field has expanded dramatically across various domains including autonomous vehicles, healthcare monitoring, environmental sensing, and industrial automation.

The evolution of this technology has been characterized by three distinct waves. The first wave (1980s-1990s) focused primarily on statistical methods such as Kalman filtering and Bayesian inference. The second wave (2000s-2010s) saw the integration of machine learning techniques, particularly support vector machines and neural networks. The current third wave is defined by deep learning approaches that can automatically extract features and patterns from multi-modal sensor data.

The fundamental objective of multi-sensor data fusion is to combine information from diverse sensor modalities to achieve more accurate, reliable, and comprehensive understanding of observed phenomena than would be possible using any single sensor type. This integration aims to overcome the limitations inherent to individual sensor technologies, such as limited range, resolution, or susceptibility to environmental interference.
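The claim that a fused estimate can beat any single sensor can be illustrated with inverse-variance weighting, one of the simplest statistical fusion rules. The sketch below assumes unbiased sensors with independent errors; the function name and the radar and LiDAR readings are hypothetical values chosen for illustration.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1/variance, so more precise sensors
    contribute more. Assumes unbiased sensors with independent errors.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical range readings in metres: (value, variance).
radar = (10.2, 0.04)
lidar = (10.0, 0.01)

value, variance = fuse_inverse_variance([radar, lidar])
print(f"fused range = {value:.3f} m, variance = {variance:.4f}")
```

Under these assumptions the fused variance is always smaller than the smallest input variance, which is the formal sense in which fusion outperforms the best individual sensor. If the sensors share correlated errors or carry biases, this simple rule becomes overconfident.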

Key technical goals in this domain include developing robust algorithms capable of handling heterogeneous data types, addressing temporal and spatial alignment challenges, managing varying levels of uncertainty across different sensor inputs, and optimizing computational efficiency for real-time applications. Additionally, there is growing emphasis on creating adaptive fusion frameworks that can dynamically adjust their processing strategies based on contextual factors and changing environmental conditions.

From a strategic perspective, multi-sensor data fusion seeks to enable more intelligent autonomous systems capable of complex decision-making in uncertain environments. This includes enhancing situational awareness in critical applications, improving detection and classification accuracy, extending operational capabilities across diverse environmental conditions, and reducing false alarm rates in monitoring systems.

The convergence of Internet of Things (IoT) technologies with multi-sensor fusion approaches has further accelerated innovation in this field, creating opportunities for distributed sensing networks that can collectively perceive and interpret complex environments. As sensor miniaturization and energy efficiency continue to improve, the deployment scenarios for multi-modal fusion systems are expanding rapidly, driving research toward more sophisticated integration methodologies.

Market Analysis for Multi-Modal Sensing Solutions

The multi-modal sensor fusion market is experiencing robust growth, driven by increasing demand for comprehensive sensing solutions across various industries. The global market for sensor fusion technologies was valued at approximately $6.5 billion in 2022 and is projected to reach $25.1 billion by 2028, representing a compound annual growth rate (CAGR) of 21.3% during the forecast period.
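As a quick sanity check on these figures, the compound annual growth rate can be recomputed from the start and end values. The sketch below uses only the numbers quoted above; the helper name `cagr` is an illustrative choice, and the length of the forecast period is an assumption because the text does not state it explicitly.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


start, end = 6.5, 25.1  # USD billions, as quoted in the text

six_year = cagr(start, end, 2028 - 2022)  # assumes growth counted from 2022
seven_year = cagr(start, end, 7)          # assumes a seven-year forecast window

print(f"6-year CAGR: {six_year:.1%}")
print(f"7-year CAGR: {seven_year:.1%}")
```

Counted from 2022 to 2028, the quoted endpoints imply a rate of roughly 25%, while the stated 21.3% matches a seven-year window. The forecast period behind the quoted rate may therefore differ from the 2022 base year, so the figures are best read with that caveat.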

Automotive applications currently dominate the market landscape, accounting for nearly 35% of the total market share. Advanced driver-assistance systems (ADAS) and autonomous vehicles heavily rely on multi-modal sensing solutions that combine data from cameras, radar, LiDAR, ultrasonic sensors, and infrared sensors to create a comprehensive environmental perception system. Major automotive manufacturers and technology companies are investing heavily in this domain to enhance vehicle safety and autonomy.

Consumer electronics represents the second-largest application segment, with smartphones, wearables, and smart home devices increasingly incorporating multiple sensor modalities. The integration of visual, audio, and haptic sensors in these devices is creating more intuitive and context-aware user experiences. Market analysts predict this segment will grow at a CAGR of 24.7% through 2028, outpacing the overall market growth rate.

Industrial automation and robotics constitute another significant market segment, valued at approximately $1.2 billion in 2022. Manufacturing facilities are adopting multi-modal sensing solutions to improve production efficiency, quality control, and workplace safety. The combination of machine vision systems with force/torque sensors and acoustic monitoring enables more sophisticated robotic applications and predictive maintenance capabilities.

Healthcare applications represent the fastest-growing segment, with a projected CAGR of 27.8% through 2028. Multi-modal sensing solutions are revolutionizing patient monitoring, diagnostic imaging, and surgical procedures. The integration of optical, thermal, and biochemical sensors is enabling more accurate disease detection and personalized treatment approaches.

Regionally, North America leads the market with approximately 38% share, followed by Europe (29%) and Asia-Pacific (24%). However, the Asia-Pacific region is expected to witness the highest growth rate due to rapid industrialization, increasing automotive production, and government initiatives supporting technological advancement in countries like China, Japan, and South Korea.

Key market challenges include high implementation costs, technical complexity in data integration, and concerns regarding data privacy and security. Despite these challenges, the growing demand for more accurate and reliable sensing solutions across industries continues to drive market expansion and technological innovation.

Current Challenges in Multi-Sensor Data Fusion

Despite significant advancements in multi-sensor data fusion technologies, several critical challenges continue to impede optimal implementation across various domains. Heterogeneity among sensor data represents a fundamental obstacle, as different sensors produce data with varying formats, resolutions, sampling rates, and measurement units. This inconsistency complicates the integration process and often requires sophisticated preprocessing techniques to normalize data before fusion can occur.

Temporal and spatial alignment issues present another significant hurdle. Sensors rarely operate in perfect synchronization, leading to timing discrepancies that can severely impact fusion accuracy, particularly in dynamic environments where conditions change rapidly. Similarly, spatial registration problems arise when sensors observe the same phenomenon from different perspectives or positions, necessitating complex transformation algorithms to align observations properly.
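Both alignment problems can be sketched in a few lines. The example below resamples one sensor stream onto another sensor's timestamps by linear interpolation, then maps a 2D point from a sensor's frame into a common frame with a rigid transform. All names, mounting parameters, and sample values are hypothetical, and production systems typically also need clock-offset estimation and full 3D extrinsic calibration.

```python
import math
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled signal at time t, clamping at both ends.

    Assumes timestamps are sorted in increasing order.
    """
    if not timestamps or len(timestamps) != len(values):
        raise ValueError("timestamps and values must be non-empty and equal length")
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align_to(reference_times, timestamps, values):
    """Resample one sensor stream onto another sensor's timestamps."""
    return [interpolate_at(timestamps, values, t) for t in reference_times]


def to_common_frame(point, sensor_yaw, sensor_offset):
    """Rotate and translate a 2D point from a sensor frame into a common frame."""
    x, y = point
    c, s = math.cos(sensor_yaw), math.sin(sensor_yaw)
    return (c * x - s * y + sensor_offset[0], s * x + c * y + sensor_offset[1])


# Hypothetical radar ranges sampled every 50 ms, and two camera frame times.
radar_times = [0.00, 0.05, 0.10]
radar_ranges = [10.0, 10.5, 11.0]
camera_times = [0.02, 0.07]

print(align_to(camera_times, radar_times, radar_ranges))
print(to_common_frame((1.0, 0.0), math.pi / 2, (2.0, 0.0)))
```

Linear interpolation is a reasonable default when the observed quantity changes slowly relative to the sampling interval. For fast dynamics, a motion model is usually a better basis for predicting each measurement to a common time.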

Uncertainty management remains persistently challenging in multi-modal fusion systems. Each sensor type introduces unique error characteristics and reliability issues that must be quantified and accounted for during the fusion process. Traditional probabilistic methods often struggle with the complex uncertainty propagation that occurs when combining heterogeneous data sources, particularly when dealing with non-Gaussian noise distributions or systematic biases.
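To make uncertainty propagation concrete, the sketch below runs one predict step and two measurement updates of a scalar Kalman filter, with each sensor given its own noise variance. The state model (a slowly drifting constant) and all numeric values are hypothetical. The filter assumes zero-mean Gaussian noise, which is precisely the assumption that breaks down in the non-Gaussian or biased cases mentioned above.

```python
def predict(x, p, q):
    """Propagate the state estimate forward; uncertainty grows by process noise q."""
    return x, p + q


def update(x, p, z, r):
    """Fold in measurement z with noise variance r; uncertainty shrinks."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


# Hypothetical prior and measurements: (value, sensor noise variance).
x, p = 0.0, 1.0
x, p = predict(x, p, q=0.1)
for z, r in [(1.0, 0.5), (0.8, 0.1)]:
    x, p = update(x, p, z, r)

print(f"estimate = {x:.4f}, variance = {p:.4f}")
```

The gain automatically favors the lower-noise sensor, so the second measurement pulls the estimate harder than the first. A systematic bias in either sensor would pass straight through to the estimate, because nothing in this formulation models it.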

Computational complexity poses significant constraints, especially for real-time applications. As the number of sensors and the dimensionality of their data increase, computational requirements can grow steeply, and combinatorially for tasks such as associating measurements with tracked objects. This challenge becomes particularly acute in resource-constrained environments such as mobile platforms, IoT devices, or autonomous vehicles, where processing power and energy budgets are limited.

Scalability issues emerge when fusion systems need to incorporate additional sensors or adapt to changing operational conditions. Many current fusion architectures lack the flexibility to seamlessly integrate new sensor modalities without substantial reconfiguration or retraining, limiting their practical deployment in evolving environments.

Data quality assessment represents another persistent challenge, as fusion systems must continuously evaluate the reliability and relevance of incoming sensor data. Detecting and managing faulty sensors, corrupted data, or adversarial inputs remains difficult, particularly in safety-critical applications where system failures could have severe consequences.
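One simple way to screen for faulty sensors among redundant readings is a robust outlier test based on the median absolute deviation (MAD). The sketch below uses the modified z-score with the commonly cited 3.5 cutoff; the sensor names and readings are hypothetical. A consistency check of this kind catches gross faults, but it is not a defense against coordinated adversarial inputs.

```python
from statistics import median


def flag_outliers(readings, threshold=3.5):
    """Return names of sensors whose reading deviates strongly from the group.

    Uses the modified z-score, 0.6745 * |x - median| / MAD, which stays
    stable even when one reading is wildly wrong.
    """
    values = list(readings.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half the sensors agree exactly; flag any that differ.
        return [name for name, v in readings.items() if v != med]
    return [
        name
        for name, v in readings.items()
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical redundant temperature readings in degrees Celsius.
readings = {"temp_a": 21.1, "temp_b": 20.9, "temp_c": 21.0, "temp_d": 35.0}
print(flag_outliers(readings))
```

Flagged sensors can then be excluded or down-weighted before fusion. The median-based statistic is preferred here over mean and standard deviation because a single bad reading would inflate both and could mask itself.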

Privacy and security concerns have gained prominence as fusion systems increasingly incorporate sensitive data sources. Protecting personal information while maintaining fusion performance presents technical and ethical challenges, especially in applications like healthcare monitoring or public surveillance where privacy expectations are high.

Interpretability and explainability deficiencies limit the adoption of advanced fusion techniques in regulated industries. As fusion algorithms become more sophisticated, particularly those leveraging deep learning approaches, understanding and validating their decision-making processes becomes increasingly difficult for human operators and regulatory bodies.

Contemporary Multi-Modal Fusion Architectures

01 Multi-sensor data fusion techniques

Multi-sensor data fusion involves combining data from multiple sensors to achieve more accurate and reliable information. This approach enhances the quality of data interpretation by integrating complementary information from different sources. The integration effectiveness is improved through algorithms that can handle heterogeneous data types and resolve conflicts between different sensor inputs, resulting in more robust decision-making systems.

- Multi-sensor data fusion techniques: Multi-sensor data fusion involves combining data from multiple sensors to achieve more accurate and reliable information. This approach enhances the quality of integrated data by reducing uncertainty and increasing robustness. The techniques include statistical methods, artificial intelligence algorithms, and hierarchical processing frameworks that enable effective integration of heterogeneous sensor data for improved decision-making and system performance.

- Medical imaging data integration systems: Systems for integrating various medical imaging data sources to provide comprehensive patient information. These approaches combine different imaging modalities such as MRI, CT scans, and ultrasound to create unified diagnostic views. The integration effectiveness is enhanced through specialized algorithms that align and normalize data from different sources, enabling healthcare professionals to make more informed clinical decisions based on complete patient information.

- Distributed data fusion architectures: Architectures designed for distributed data fusion across networks, enabling efficient integration of information from geographically dispersed sources. These systems implement parallel processing frameworks and communication protocols that optimize data transfer while maintaining data integrity. The effectiveness of integration is measured through reduced latency, improved scalability, and enhanced resilience against network failures or data inconsistencies.

- AI-powered data fusion frameworks: Advanced frameworks leveraging artificial intelligence and machine learning algorithms to automate and optimize the data fusion process. These systems can identify patterns, correlations, and anomalies across diverse datasets, enabling more intelligent integration. The AI components adapt to changing data characteristics and can handle unstructured data, improving the overall effectiveness of integration through continuous learning and refinement of fusion parameters.

- Real-time data fusion methodologies: Methodologies specifically designed for real-time integration of streaming data from multiple sources. These approaches focus on minimizing processing delays while maintaining accuracy through optimized algorithms and efficient memory management. The effectiveness of real-time fusion is achieved through adaptive filtering techniques, parallel processing architectures, and prioritization mechanisms that ensure critical information is integrated first for time-sensitive applications.

02 Medical imaging data fusion systems

Data fusion approaches in medical imaging combine information from different imaging modalities such as MRI, CT scans, and ultrasound to provide comprehensive diagnostic information. These systems integrate anatomical and functional data to improve visualization, diagnosis accuracy, and treatment planning. The effectiveness of integration is measured by enhanced clinical decision-making capabilities and improved patient outcomes through more precise interventions.

03 Database integration and information management

This approach focuses on integrating data from disparate databases and information systems to create unified knowledge repositories. The effectiveness of integration is enhanced through standardized data formats, middleware solutions, and semantic mapping techniques. These methods enable organizations to consolidate information across different platforms, improving data accessibility, consistency, and analytical capabilities while reducing redundancy and maintenance costs.

04 AI and machine learning for data fusion

Artificial intelligence and machine learning algorithms are employed to enhance data fusion processes by automatically identifying patterns, correlations, and insights across diverse datasets. These approaches improve integration effectiveness through adaptive learning mechanisms that can handle large volumes of heterogeneous data. The techniques include neural networks, deep learning, and ensemble methods that optimize the extraction of meaningful information from combined data sources.

05 Security and privacy in data fusion systems

This approach addresses the challenges of maintaining data security and privacy while integrating information from multiple sources. Integration effectiveness is measured by the system's ability to protect sensitive information during the fusion process while still enabling valuable insights. Techniques include encryption methods, access control mechanisms, and privacy-preserving algorithms that allow data to be combined and analyzed without compromising confidentiality.

Leading Organizations in Multi-Sensor Integration

Data fusion approaches combining multiple sensor modalities are evolving rapidly in a growing market characterized by increasing technological maturity. The industry is transitioning from early development to commercial application phases, with the market expected to expand significantly due to rising demand in autonomous vehicles, defense, and IoT applications. Leading players like Lockheed Martin, Thales, and IBM are advancing sophisticated fusion algorithms, while specialized firms such as Intelligent Fusion Technology and Digital Global Systems focus on niche applications. Academic institutions (CNRS, Tufts) collaborate with industry to bridge research gaps. Automotive companies (Toyota, BMW) are integrating multi-sensor fusion for autonomous driving, while tech giants (Huawei, NEC) develop cloud-based fusion platforms, indicating a competitive landscape with diverse specialization strategies.

Lockheed Martin Corp.

Technical Solution: Lockheed Martin has developed advanced multi-modal sensor fusion frameworks for defense and aerospace applications. Their approach integrates radar, infrared, electro-optical, and LiDAR sensors through a hierarchical fusion architecture that processes data at three levels: signal, feature, and decision. The company employs proprietary algorithms for temporal alignment and spatial registration of heterogeneous sensor data, enabling real-time target tracking and identification in complex environments. Their system utilizes Bayesian methods and machine learning techniques to handle uncertainty and improve detection reliability. Lockheed Martin's sensor fusion technology incorporates adaptive weighting mechanisms that dynamically adjust the contribution of each sensor modality based on environmental conditions and mission requirements[1]. This allows for robust performance across varying operational scenarios, from aerial surveillance to maritime domain awareness.

Strengths: Superior integration of multiple military-grade sensors with high reliability in adverse conditions; extensive field testing in mission-critical applications; advanced uncertainty handling. Weaknesses: High implementation costs; significant computational requirements; proprietary systems with limited interoperability with third-party technologies.
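The general idea of adaptive weighting described above can be sketched generically. The example below is not Lockheed Martin's proprietary method: it simply normalizes per-sensor quality scores, looked up by environmental condition, into fusion weights. The quality table, sensor names, and readings are all hypothetical.

```python
def adaptive_weights(quality):
    """Normalize non-negative per-sensor quality scores into fusion weights."""
    total = sum(quality.values())
    if total <= 0:
        raise ValueError("at least one sensor must have positive quality")
    return {name: score / total for name, score in quality.items()}


def fuse(readings, quality):
    """Weighted average of sensor readings using condition-dependent weights."""
    weights = adaptive_weights(quality)
    return sum(weights[name] * value for name, value in readings.items())


# Hypothetical quality scores per environmental condition (illustrative only).
QUALITY_BY_CONDITION = {
    "clear": {"radar": 0.6, "infrared": 0.8, "electro_optical": 1.0},
    "fog": {"radar": 0.9, "infrared": 0.5, "electro_optical": 0.1},
}

# Hypothetical range estimates to the same target, in metres.
readings = {"radar": 1000.0, "infrared": 1010.0, "electro_optical": 1040.0}

for condition, quality in QUALITY_BY_CONDITION.items():
    print(condition, round(fuse(readings, quality), 1))
```

In fog the fused estimate moves toward the radar reading because the optical sensor's weight collapses. In practice the quality scores would themselves be estimated online, for example from recent innovation statistics, rather than read from a fixed table.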

Intelligent Fusion Technology, Inc.

Technical Solution: Intelligent Fusion Technology specializes in multi-sensor data fusion solutions with their flagship MIDAS (Multi-sensor Integration and Data Analysis System) platform. Their approach focuses on heterogeneous sensor integration using a modular architecture that supports plug-and-play capabilities for various sensor types. The company employs advanced statistical methods including Kalman filtering, particle filters, and deep learning techniques to process and fuse data from disparate sources such as RF sensors, imaging systems, and acoustic arrays. Their technology implements both centralized and distributed fusion architectures depending on application requirements, with particular emphasis on handling asynchronous data streams and varying data quality[2]. IFT's solutions incorporate context-aware fusion algorithms that adapt to changing environmental conditions and mission parameters, enabling more intelligent decision-making in complex scenarios. The company has developed specialized techniques for managing bandwidth constraints in distributed sensor networks while maintaining fusion accuracy.

Strengths: Highly adaptable modular architecture; specialized expertise in asynchronous data handling; strong performance in bandwidth-constrained environments; extensive experience with defense and intelligence applications. Weaknesses: Limited commercial deployment outside government/defense sectors; higher complexity in configuration for non-technical users.
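Handling asynchronous streams usually starts with putting measurements from different sensors into a single time order before they reach the fusion filter. The sketch below shows one generic way to do that with the standard library. It is not a description of the MIDAS platform, and the message layout `(timestamp, sensor, value)` is an assumption made for illustration.

```python
import heapq


def merge_streams(*streams):
    """Merge per-sensor streams into one time-ordered stream.

    Each stream must already be sorted by timestamp; messages are
    (timestamp, sensor_name, value) tuples. Merging is lazy, so it
    also works on long-running iterators.
    """
    return heapq.merge(*streams, key=lambda message: message[0])


# Hypothetical messages from two sensors running at different rates.
rf = [(0.00, "rf", 1.0), (0.10, "rf", 1.2)]
acoustic = [(0.03, "acoustic", 0.4), (0.07, "acoustic", 0.5)]

for timestamp, sensor, value in merge_streams(rf, acoustic):
    print(f"{timestamp:.2f}s {sensor}: {value}")
```

This only covers streams that are individually in order. Measurements that arrive late over a network need an additional reordering buffer or an out-of-sequence update step in the filter.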

Key Algorithms and Frameworks Analysis

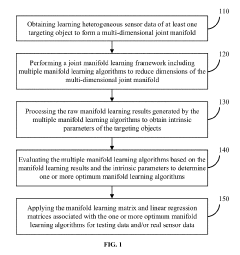

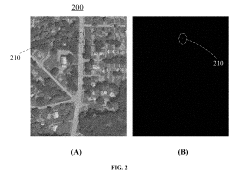

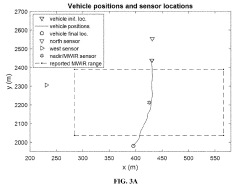

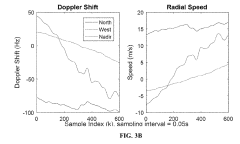

Methods, systems and media for joint manifold learning based heterogenous sensor data fusion

Patent · Active · US20190228272A1

Innovation

- The method employs joint manifold learning to process data from multiple sensors, applying various manifold learning algorithms to reduce dimensions and extract intrinsic parameters, selecting the optimal algorithms for fusion, thereby preserving feature information and enhancing tracking accuracy.

Data fusion method and device based on multiple sensors and storage medium

Patent · Active · CN112434682A

Innovation

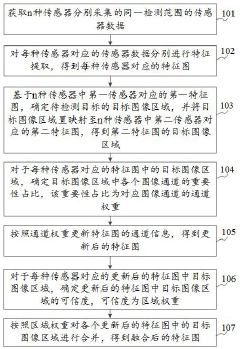

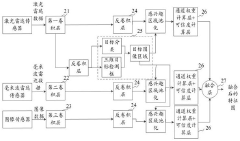

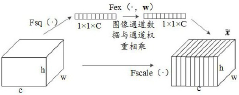

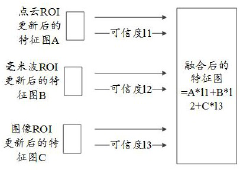

- By obtaining data from the same detection range collected by multiple sensors, feature extraction and mapping are performed to determine the importance proportion and regional credibility of image channels, and the attention mechanism and credibility mechanism are used to update the feature map and perform fusion.

Standardization Efforts in Data Fusion Protocols

The standardization of data fusion protocols represents a critical foundation for the advancement of multi-modal sensor integration technologies. Currently, several international organizations are leading efforts to establish common frameworks and protocols that enable interoperability between diverse sensor systems. The IEEE has developed the IEEE 1451 family of standards specifically addressing smart transducer interfaces, which provides a foundation for sensor data fusion across different modalities. Similarly, the International Organization for Standardization (ISO) has introduced ISO/IEC 21823 for Internet of Things (IoT) interoperability, which includes specific provisions for multi-modal sensor data integration.

Industry consortia have also made significant contributions to standardization efforts. The Open Geospatial Consortium (OGC) has established the Sensor Web Enablement (SWE) framework, which defines standards for discovering, accessing, and integrating sensor data across multiple platforms. Additionally, the Industrial Internet Consortium (IIC) has developed reference architectures that incorporate standardized approaches to multi-modal sensor fusion for industrial applications.

Recent developments in standardization have focused on addressing the unique challenges of real-time data fusion. The Object Management Group (OMG) has introduced the Data Distribution Service (DDS) standard, which provides a scalable middleware architecture for real-time systems that integrate multiple sensor modalities. This standard has gained significant traction in aerospace, defense, and autonomous vehicle applications where reliable fusion of heterogeneous sensor data is mission-critical.

Emerging standardization efforts are increasingly addressing the semantic interoperability aspects of data fusion. The W3C's Semantic Sensor Network (SSN) ontology provides a standardized vocabulary for describing sensors and their observations, facilitating more sophisticated fusion algorithms that can understand the context and meaning of data from different modalities. This semantic layer is proving essential for advanced fusion applications in healthcare, environmental monitoring, and smart city deployments.
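A shared vocabulary makes it possible to decide mechanically which observations describe the same thing and are therefore candidates for fusion. The sketch below builds JSON-LD-style observation records using terms from SOSA, the core vocabulary of the SSN ontology, and groups them by feature of interest and observed property. The `ex:` identifiers and the helper functions are hypothetical, and the records are illustrative rather than validated against the ontology.

```python
from collections import defaultdict

SOSA_CONTEXT = {"sosa": "http://www.w3.org/ns/sosa/"}


def make_observation(sensor, observed_property, feature, result, result_time):
    """Build a JSON-LD-style record describing one sensor observation."""
    return {
        "@context": SOSA_CONTEXT,
        "@type": "sosa:Observation",
        "sosa:madeBySensor": {"@id": sensor},
        "sosa:observedProperty": {"@id": observed_property},
        "sosa:hasFeatureOfInterest": {"@id": feature},
        "sosa:hasSimpleResult": result,
        "sosa:resultTime": result_time,
    }


def group_fusable(observations):
    """Group sensors by (feature of interest, observed property)."""
    groups = defaultdict(list)
    for obs in observations:
        key = (
            obs["sosa:hasFeatureOfInterest"]["@id"],
            obs["sosa:observedProperty"]["@id"],
        )
        groups[key].append(obs["sosa:madeBySensor"]["@id"])
    return dict(groups)


# Hypothetical identifiers for a smart-building deployment.
observations = [
    make_observation("ex:thermistor1", "ex:airTemperature", "ex:room42", 21.3, "2025-08-28T10:00:00Z"),
    make_observation("ex:irCamera7", "ex:airTemperature", "ex:room42", 21.9, "2025-08-28T10:00:01Z"),
    make_observation("ex:mic3", "ex:soundLevel", "ex:room42", 48.0, "2025-08-28T10:00:01Z"),
]
print(group_fusable(observations))
```

Here the thermistor and the infrared camera end up in the same group because both report air temperature for the same room, while the microphone is kept apart. Real deployments would typically use an RDF library and SPARQL queries rather than plain dictionaries.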

Despite these advances, significant challenges remain in standardization efforts. The rapid evolution of sensor technologies often outpaces standardization processes, creating gaps in coverage for newer modalities. Furthermore, cross-domain standardization remains problematic, with domain-specific standards sometimes creating silos that impede broader integration efforts. Industry adoption of existing standards also varies considerably, with proprietary solutions still dominating in many commercial applications.

Real-Time Processing Constraints and Solutions

Real-time processing is one of the most significant challenges in multi-modal sensor fusion systems. Integrating data from diverse sensor modalities such as cameras, LiDAR, radar, and ultrasonic sensors generates large data volumes that must be processed with minimal latency to enable timely decision-making. Autonomous vehicles and advanced robotics systems typically require processing latencies below 100 ms to operate safely in dynamic environments.

The computational complexity of fusion algorithms presents a fundamental constraint. Traditional centralized fusion architectures often create bottlenecks when processing high-bandwidth sensor data streams simultaneously. This challenge is particularly evident in applications requiring high update rates, such as autonomous driving at highway speeds where environmental conditions change rapidly.

Hardware acceleration solutions have emerged as critical enablers for real-time multi-modal fusion. Field-Programmable Gate Arrays (FPGAs) offer reconfigurable computing capabilities that can be optimized for specific fusion algorithms, achieving processing speeds 10-50x faster than general-purpose processors for certain operations. Graphics Processing Units (GPUs) excel at parallel processing tasks common in sensor fusion, while Application-Specific Integrated Circuits (ASICs) provide maximum performance efficiency for standardized fusion operations.

Edge computing architectures distribute processing loads across multiple nodes, reducing central processing requirements. This approach enables preliminary fusion operations to occur closer to the sensors themselves, minimizing data transfer latencies. Implementations using edge computing have demonstrated latency reductions of up to 60% compared to cloud-dependent architectures.
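
One way to picture preliminary fusion near the sensors is for each edge node to reduce its raw readings to a compact summary that a central node can merge without seeing the raw samples. The following is a minimal sketch under that assumption. The names `Summary`, `summarize`, and `merge` are hypothetical and do not come from any particular edge framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Summary:
    """Compact per-node summary sent upstream instead of raw samples."""
    count: int
    mean: float
    minimum: float


def summarize(readings):
    """Runs on the edge node: reduce raw range readings to a summary."""
    return Summary(len(readings), sum(readings) / len(readings), min(readings))


def merge(summaries):
    """Runs on the central node: combine summaries from several edge nodes."""
    total = sum(s.count for s in summaries)
    # Count-weighted mean reproduces the mean over all raw samples.
    mean = sum(s.mean * s.count for s in summaries) / total
    return Summary(total, mean, min(s.minimum for s in summaries))


# Hypothetical readings (metres) from two edge nodes.
node_a = summarize([2.0, 4.0])
node_b = summarize([1.0, 3.0, 5.0, 7.0])
fused = merge([node_a, node_b])
```

In this sketch only three numbers per node cross the network, regardless of how many raw samples each node collected. A real deployment would choose summaries suited to its fusion algorithm.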

Software optimization techniques complement hardware solutions through algorithmic improvements. Approximate computing methods sacrifice minimal accuracy for substantial performance gains, while incremental fusion algorithms update only the portions of fused data affected by new sensor inputs. Dynamic resource allocation frameworks adaptively assign computational resources based on current processing demands and environmental complexity.
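
As an illustration of incremental fusion, the sketch below keeps a fused estimate per grid cell and folds in each new measurement by touching only the cell it falls in. It uses inverse-variance weighting, which assumes independent measurement errors. This is one simple choice made for the example, not a method the article prescribes. The class name `IncrementalGridFusion` and the cell keys are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalGridFusion:
    """Per-cell fused estimate stored as running accumulators."""
    # cell -> (sum of weights, sum of weight * value)
    cells: dict = field(default_factory=dict)

    def update(self, cell, value, variance):
        """Fold in one measurement; only the affected cell is modified."""
        weight = 1.0 / variance
        sum_w, sum_wv = self.cells.get(cell, (0.0, 0.0))
        self.cells[cell] = (sum_w + weight, sum_wv + weight * value)

    def estimate(self, cell):
        """Return (fused value, fused variance) for one cell."""
        sum_w, sum_wv = self.cells[cell]
        return sum_wv / sum_w, 1.0 / sum_w


# Hypothetical measurements: two sensors observe cell (0, 0),
# then a third measurement arrives for cell (1, 1).
grid = IncrementalGridFusion()
grid.update((0, 0), value=10.0, variance=1.0)
grid.update((0, 0), value=12.0, variance=1.0)
before = grid.estimate((0, 0))
grid.update((1, 1), value=5.0, variance=4.0)
after = grid.estimate((0, 0))
```

Each update costs constant time, and the estimate for cell (0, 0) is unchanged by the later measurement in cell (1, 1), which is the property the incremental approach relies on.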

Temporal synchronization remains a persistent challenge, as different sensors operate at varying sampling rates and processing times. Time-stamping mechanisms and predictive algorithms help align data from different modalities, while buffering strategies manage the trade-off between fusion accuracy and processing latency. Advanced systems implement adaptive synchronization that adjusts buffer sizes based on environmental complexity and vehicle dynamics.
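
A simple form of timestamp-based alignment pairs each sample from one sensor with the nearest-in-time sample from another, and rejects pairs whose time gap exceeds a tolerance. The sketch below assumes sorted integer timestamps in milliseconds. The function name `align_nearest` and the sample values are hypothetical.

```python
from bisect import bisect_left


def align_nearest(ref_stamps, other_stamps, tolerance):
    """For each reference timestamp, return the index of the nearest
    timestamp in other_stamps, or None if the gap exceeds tolerance.
    Both lists must be sorted in ascending order."""
    matches = []
    for t in ref_stamps:
        i = bisect_left(other_stamps, t)
        # The nearest sample is either just before or at/after position i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_stamps)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_stamps[j] - t))
        within = abs(other_stamps[best] - t) <= tolerance
        matches.append(best if within else None)
    return matches


# Hypothetical timestamps in milliseconds: a faster camera stream
# and a slower LiDAR stream.
camera_ms = [0, 33, 66, 100, 133]
lidar_ms = [5, 105, 250]
pairs = align_nearest(lidar_ms, camera_ms, tolerance=10)
```

The tolerance plays the role of the buffering trade-off described above: a larger value pairs more samples but admits more temporal misalignment, while a smaller value drops samples that have no close counterpart.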

Emerging solutions include neuromorphic computing architectures that mimic biological neural systems, potentially offering order-of-magnitude improvements in energy efficiency for fusion operations. Quantum computing, while still experimental, shows promise for specific fusion algorithms involving complex probabilistic calculations that challenge conventional computing paradigms.
