Sensor Data Quality Assurance And Remote Diagnostics

AUG 28, 2025 · 9 MIN READ

Sensor Data QA Background and Objectives

Sensor data quality assurance has evolved significantly over the past two decades, transitioning from basic error detection mechanisms to sophisticated systems capable of real-time analysis and predictive maintenance. The evolution has been driven by the exponential growth in sensor deployment across industries, with the global sensor market expanding from approximately $80 billion in 2010 to over $240 billion by 2022, and projections indicating continued growth at a CAGR of 11.5% through 2028.

The proliferation of IoT devices, which reached 14.4 billion connected devices globally in 2022, has dramatically increased the volume and variety of sensor data being generated. This expansion has highlighted critical challenges in ensuring data quality, as studies indicate that up to 30% of sensor data may contain anomalies, errors, or inconsistencies that can compromise analytical outcomes and operational decisions.

Traditional quality assurance methods focused primarily on post-collection data cleaning and validation. However, the increasing reliance on real-time decision-making systems has necessitated a shift toward proactive quality assurance mechanisms that can identify and address issues at the point of data generation or transmission. This evolution represents a fundamental change in approach, moving from reactive to preventive quality management strategies.

Remote diagnostics capabilities have similarly transformed, progressing from basic remote monitoring to advanced predictive analytics that can anticipate sensor failures before they occur. This progression has been enabled by advancements in machine learning algorithms, edge computing capabilities, and communication protocols that support efficient data transmission even in bandwidth-constrained environments.

The primary objectives of modern sensor data quality assurance and remote diagnostics systems include: ensuring data accuracy and reliability across diverse operational conditions; minimizing data loss during transmission and storage; enabling real-time anomaly detection and correction; supporting predictive maintenance to reduce downtime; and facilitating comprehensive system health monitoring through remote access capabilities.

Industry standards for sensor data quality have also evolved, with organizations such as IEEE, ISO, and IEC developing frameworks that define quality parameters including accuracy, precision, resolution, linearity, and drift characteristics. These standards provide essential benchmarks for evaluating sensor performance and data reliability across different application domains.

The convergence of sensor technologies with artificial intelligence and cloud computing has created new opportunities for implementing sophisticated quality assurance mechanisms that can adapt to changing operational conditions and learn from historical patterns. This technological integration represents the current frontier in sensor data quality assurance, with significant implications for industries ranging from manufacturing and healthcare to transportation and environmental monitoring.

Market Demand Analysis for High-Quality Sensor Data

The global market for high-quality sensor data is experiencing unprecedented growth, driven by the proliferation of IoT devices across multiple industries. Current market valuations indicate that the sensor data quality assurance market reached approximately $5.2 billion in 2022, with projections suggesting a compound annual growth rate of 18.7% through 2028. This rapid expansion reflects the increasing recognition of data quality as a critical factor in operational efficiency and decision-making processes.

Industrial manufacturing represents the largest market segment, accounting for nearly 32% of the total demand. In this sector, high-quality sensor data directly impacts production efficiency, predictive maintenance capabilities, and overall equipment effectiveness. Manufacturing companies report that improved sensor data quality can reduce unplanned downtime by up to 25% and extend machine lifetime by 20-30%.

Healthcare and medical devices constitute the fastest-growing segment, with demand increasing at 22.3% annually. The critical nature of medical applications necessitates extremely high data reliability standards, with many regulatory bodies now mandating comprehensive data quality assurance protocols for medical devices and monitoring systems.

Consumer demand for reliable smart home and wearable technology has created another significant market segment. Research indicates that 78% of consumers consider device reliability as a primary factor in purchasing decisions, directly correlating with the quality of sensor data processing.

Automotive and transportation industries have also emerged as major markets, particularly with the advancement of autonomous vehicle technologies. These applications require sensor data with near-perfect accuracy and reliability, creating substantial demand for sophisticated quality assurance solutions.

Geographically, North America leads the market with a 38% share, followed by Europe (27%) and Asia-Pacific (24%). However, the Asia-Pacific region is experiencing the fastest growth rate at 24.1% annually, driven by rapid industrial automation in China, Japan, and South Korea.

Key market drivers include increasing regulatory requirements across industries, growing complexity of IoT ecosystems, rising costs associated with poor data quality, and the expanding implementation of AI and machine learning systems that depend on high-quality input data. Industry surveys reveal that organizations lose an average of 15-25% of their operational efficiency due to poor sensor data quality, creating a compelling business case for investment in quality assurance solutions.

Current Challenges in Sensor Data Quality Assurance

Despite significant advancements in sensor technology, the field of sensor data quality assurance faces numerous persistent challenges that impede reliable data collection and analysis. One of the primary obstacles is sensor drift and calibration degradation over time, which leads to gradual accuracy reduction without clear indication of failure. This phenomenon is particularly problematic in long-term deployments where regular physical access for maintenance is limited or cost-prohibitive.
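Gradual drift of this kind can be made visible by tracking the residual between a sensor and some trusted comparison signal over time. The following is a minimal sketch, assuming such a reference exists (for example a co-located redundant sensor or a periodic spot check); the function names `estimate_drift` and `needs_recalibration`, the 30-day horizon, and the tolerance are illustrative assumptions, not part of any cited system.

```python
from statistics import mean


def estimate_drift(timestamps, sensor, reference):
    """Least-squares slope of (sensor - reference) against time.

    The result is in measurement units per time unit. The reference
    series is an assumption: a trusted signal sampled at the same times.
    """
    if not (len(timestamps) == len(sensor) == len(reference)):
        raise ValueError("all series must have the same length")
    if len(timestamps) < 2:
        raise ValueError("need at least two samples")

    residuals = [s - r for s, r in zip(sensor, reference)]
    t_bar = mean(timestamps)
    e_bar = mean(residuals)
    sxx = sum((t - t_bar) ** 2 for t in timestamps)
    if sxx == 0:
        raise ValueError("timestamps must not all be identical")
    sxy = sum((t - t_bar) * (e - e_bar) for t, e in zip(timestamps, residuals))
    return sxy / sxx


def needs_recalibration(slope, horizon, tolerance):
    """True if the projected drift over `horizon` exceeds `tolerance`."""
    return abs(slope) * horizon > tolerance


# Hypothetical data: a sensor gaining 0.05 units per day against a flat reference.
days = list(range(10))
reference = [20.0] * 10
sensor = [20.0 + 0.05 * d for d in days]

slope = estimate_drift(days, sensor, reference)
flag = needs_recalibration(slope, horizon=30, tolerance=1.0)
```

Fitting a slope rather than comparing single readings keeps the check robust to ordinary measurement noise, at the cost of needing several samples before drift becomes detectable.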

Environmental factors present another significant challenge, as temperature fluctuations, humidity, vibration, and electromagnetic interference can substantially impact sensor readings. These variables often create complex error patterns that are difficult to isolate from actual measurements, especially in harsh industrial environments or outdoor installations where conditions are constantly changing.

Data transmission reliability remains problematic, with packet loss, communication latency, and bandwidth limitations affecting real-time monitoring capabilities. In remote or resource-constrained environments, these issues are exacerbated by power constraints and network instability, leading to incomplete datasets that compromise analytical integrity.
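Incomplete datasets caused by packet loss can be quantified before analysis. The sketch below assumes each reading carries a timestamp and that the nominal sampling interval is known; `find_gaps`, `completeness`, and the 50% tolerance are hypothetical names and defaults chosen for illustration.

```python
def find_gaps(timestamps, expected_interval, tolerance=0.5):
    """Return (start, end, missing_count) for each gap in a sorted series.

    A gap is any spacing larger than expected_interval * (1 + tolerance).
    """
    gaps = []
    for prev, curr in zip(timestamps, timestamps[1:]):
        delta = curr - prev
        if delta > expected_interval * (1 + tolerance):
            missing = round(delta / expected_interval) - 1
            gaps.append((prev, curr, missing))
    return gaps


def completeness(timestamps, expected_interval):
    """Fraction of expected samples actually received over the observed span."""
    if not timestamps:
        return 0.0
    if len(timestamps) < 2:
        return 1.0
    span = timestamps[-1] - timestamps[0]
    expected = round(span / expected_interval) + 1
    return min(1.0, len(timestamps) / expected)


# Hypothetical series sampled every 10 s with two readings lost between 20 and 50.
received = [0, 10, 20, 50, 60]
gaps = find_gaps(received, expected_interval=10)
ratio = completeness(received, expected_interval=10)
```

A completeness ratio of this kind can serve as a simple gate: downstream analytics run only when enough of the expected samples arrived.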

Cross-sensor correlation and data fusion present technical hurdles when attempting to integrate readings from heterogeneous sensor networks. Variations in sampling rates, measurement units, and precision levels complicate the development of unified quality assurance frameworks, particularly in systems that combine legacy equipment with newer sensor technologies.
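One common way to reconcile differing units and sampling rates is to convert every stream to a shared unit and interpolate it onto a shared time grid before comparison. This is a minimal sketch under assumptions: a hypothetical legacy sensor reporting Fahrenheit once per minute, a 30-second target grid, and linear interpolation as the resampling method (other methods may suit other signals better).

```python
from bisect import bisect_left


def fahrenheit_to_celsius(value_f):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (value_f - 32.0) * 5.0 / 9.0


def resample(timestamps, values, grid):
    """Linearly interpolate a series onto `grid`.

    `timestamps` must be sorted and strictly increasing. Grid points
    outside the observed span yield None rather than an extrapolation.
    """
    out = []
    for t in grid:
        if t < timestamps[0] or t > timestamps[-1]:
            out.append(None)
            continue
        i = bisect_left(timestamps, t)
        if timestamps[i] == t:
            out.append(values[i])
            continue
        t0, t1 = timestamps[i - 1], timestamps[i]
        v0, v1 = values[i - 1], values[i]
        out.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return out


# Hypothetical legacy sensor: Fahrenheit, one reading per 60 s.
legacy_t = [0, 60, 120]
legacy_c = [fahrenheit_to_celsius(v) for v in [68.0, 69.8, 71.6]]

# Common 30 s grid shared with a newer sensor.
grid = [0, 30, 60, 90, 120, 150]
aligned = resample(legacy_t, legacy_c, grid)
```

Returning None outside the observed span keeps interpolated values distinguishable from missing data, which matters when the aligned streams feed a later quality check.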

Scalability challenges emerge as sensor networks grow, with quality assurance processes that work effectively for dozens of sensors often failing when applied to thousands or millions of data points. This scaling issue affects both computational resources required for processing and the statistical methods used to identify anomalies.

Security vulnerabilities introduce additional complications, as compromised sensors may report falsified data that appears valid but undermines system integrity. Distinguishing between natural sensor anomalies and malicious data manipulation requires sophisticated detection algorithms that are still evolving.
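A partial safeguard is to authenticate each reading so that data altered in transit, or injected by a party without the device key, is rejected. The sketch below uses HMAC-SHA256 from the Python standard library; the reading fields and the shared key are hypothetical. It does not address a compromised sensor that still holds a valid key, which is the harder case described above.

```python
import hashlib
import hmac
import json


def sign_reading(reading, key):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    return hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_reading(reading, tag, key):
    """Constant-time check that `tag` matches the reading."""
    return hmac.compare_digest(sign_reading(reading, key), tag)


# Hypothetical device key and reading; the sequence number lets a
# receiver reject replayed messages if it tracks the last value seen.
device_key = b"example-key-provisioned-at-manufacture"
reading = {"sensor_id": "temp-01", "seq": 42, "value": 21.5}

tag = sign_reading(reading, device_key)
ok = verify_reading(reading, tag, device_key)

tampered = dict(reading, value=99.9)
rejected = not verify_reading(tampered, tag, device_key)
```

Serializing with sorted keys gives sender and receiver the same byte sequence regardless of dictionary ordering, which is what makes the tag reproducible.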

Resource constraints further limit quality assurance capabilities, particularly in edge computing scenarios where processing power, memory, and energy availability restrict the complexity of on-device validation algorithms. This forces trade-offs between immediate data validation and comprehensive quality analysis.
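One way to keep on-device validation cheap is to maintain running statistics in constant memory instead of buffering a window of samples. The sketch below uses Welford's online algorithm for mean and variance with a simple z-score test; the class name `RunningStats`, the threshold of 3, and the warm-up of 10 samples are illustrative assumptions.

```python
import math


class RunningStats:
    """Constant-memory running mean and variance (Welford's algorithm)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        """Sample standard deviation; 0.0 until two samples are seen."""
        if self.n < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.n - 1))

    def is_anomalous(self, x, threshold=3.0, min_samples=10):
        """Flag x if it lies more than `threshold` deviations from the mean."""
        if self.n < min_samples or self.std == 0.0:
            return False
        return abs(x - self.mean) / self.std > threshold


# Hypothetical stream: stable readings around 20.1, then a spike.
stats = RunningStats()
for value in [20.0, 20.2] * 10:
    stats.update(value)

spike_flagged = stats.is_anomalous(25.0)
normal_flagged = stats.is_anomalous(20.15)
```

The state is three numbers per sensor, so the check fits on very small devices; the trade-off is that a plain running mean adapts slowly if the underlying signal legitimately changes level.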

Finally, the lack of standardized quality metrics across industries creates inconsistency in how sensor data quality is defined and measured. Without universal benchmarks, organizations struggle to establish appropriate quality thresholds and validation procedures, leading to subjective assessments that vary widely between implementations and use cases.

Existing Remote Diagnostic Solutions and Frameworks

01 Data quality assessment and validation techniques

Various methods and systems for assessing and validating sensor data quality to ensure reliability. These techniques include statistical analysis, comparison with reference data, and automated validation algorithms that can detect anomalies, outliers, and inconsistencies in sensor readings. Quality assessment frameworks help in identifying and filtering out erroneous data before it's used in critical applications.

- Data quality assessment and validation techniques: Various methods and systems for assessing and validating sensor data quality are implemented to ensure reliability. These techniques include statistical analysis, comparison with reference data, and automated validation algorithms that can detect anomalies, outliers, and inconsistencies in sensor readings. Quality assessment frameworks help in identifying and filtering out erroneous data before it's used in critical applications, thereby improving overall system performance and decision-making processes.

- Sensor calibration and accuracy improvement: Techniques for enhancing sensor data quality through calibration processes and accuracy improvement methods. These include dynamic calibration algorithms, self-calibrating sensor systems, and compensation techniques that adjust for environmental factors affecting sensor readings. Advanced calibration approaches use machine learning to continuously refine sensor outputs based on operational conditions, reducing drift and systematic errors over time.

- Real-time monitoring and error detection systems: Systems designed for continuous monitoring of sensor data quality in real-time applications, with capabilities to detect errors, malfunctions, or degradation as they occur. These systems implement fault detection algorithms, health monitoring of sensor networks, and automated alert mechanisms when data quality falls below defined thresholds. Real-time quality control enables immediate corrective actions, minimizing the impact of sensor failures on operational systems.

- Data fusion and multi-sensor integration: Methods for combining data from multiple sensors to improve overall data quality and reliability. These approaches use sensor fusion algorithms, complementary sensor arrangements, and redundancy techniques to cross-validate measurements and compensate for individual sensor limitations. By integrating data from diverse sensor types, these systems can achieve higher accuracy, broader coverage, and greater resilience to single-point failures than individual sensors alone.

- Machine learning for sensor data quality enhancement: Application of artificial intelligence and machine learning techniques to improve sensor data quality through intelligent processing. These methods include predictive analytics for identifying potential quality issues, anomaly detection algorithms that learn normal patterns to flag deviations, and adaptive filtering techniques that automatically adjust to changing conditions. Machine learning approaches can recognize complex patterns in sensor behavior that traditional rule-based systems might miss, enabling more sophisticated quality control.
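As a concrete illustration of the statistical validation techniques listed above, the sketch below combines a physical range check with a robust outlier test based on the median absolute deviation (MAD). The constant 0.6745 and the 3.5 cut-off follow the commonly used modified z-score convention; the function name, the range limits, and the sample data are hypothetical.

```python
from statistics import median


def validate(readings, lo, hi, mad_threshold=3.5):
    """Return (index, value, reason) for every rejected reading.

    Checks, in order: missing values, values outside [lo, hi], and
    in-range values whose modified z-score exceeds `mad_threshold`.
    """
    issues = []
    in_range = []
    for i, x in enumerate(readings):
        if x is None or x != x:  # x != x is True only for NaN
            issues.append((i, x, "missing"))
        elif not lo <= x <= hi:
            issues.append((i, x, "out_of_range"))
        else:
            in_range.append((i, x))

    if len(in_range) >= 3:
        med = median(x for _, x in in_range)
        mad = median(abs(x - med) for _, x in in_range)
        if mad > 0:
            for i, x in in_range:
                score = 0.6745 * abs(x - med) / mad
                if score > mad_threshold:
                    issues.append((i, x, "outlier"))

    return sorted(issues, key=lambda item: item[0])


# Hypothetical temperature readings with a dropout, a fault value, and a spike.
readings = [21.0, 21.2, 20.9, None, 21.1, 150.0, 27.0, 21.0]
issues = validate(readings, lo=-40.0, hi=85.0)
```

Using the median rather than the mean keeps the outlier test from being distorted by the very values it is trying to detect.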

02 Sensor calibration and accuracy improvement

Methods for improving sensor data quality through calibration processes and accuracy enhancement techniques. These include regular calibration protocols, drift compensation algorithms, and adaptive calibration methods that adjust to environmental changes. Advanced calibration techniques help maintain sensor accuracy over time and across varying operational conditions, ensuring high-quality data output.

03 Real-time data processing and filtering

Systems for processing and filtering sensor data in real-time to improve quality. These include noise reduction algorithms, signal processing techniques, and intelligent filtering methods that can separate meaningful data from interference. Real-time processing enables immediate quality control and allows systems to respond promptly to data quality issues before they affect downstream applications.

04 Sensor fusion and multi-sensor data integration

Techniques for combining data from multiple sensors to enhance overall data quality and reliability. Sensor fusion algorithms integrate complementary data sources, compensate for individual sensor weaknesses, and provide more comprehensive and accurate measurements. These approaches help overcome limitations of single sensors and improve resilience against sensor failures or inaccuracies.

05 Machine learning for sensor data quality enhancement

Application of machine learning and artificial intelligence techniques to improve sensor data quality. These include predictive models for data correction, anomaly detection algorithms, and self-learning systems that can adapt to changing conditions. Machine learning approaches can identify complex patterns in sensor behavior, predict failures, and automatically adjust for data quality issues without human intervention.
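To illustrate the sensor fusion approach described under 04 above, the sketch below combines redundant measurements of the same quantity by inverse-variance weighting, one of the simplest fusion rules. It assumes the sensors are unbiased, their errors are independent, and each variance is known (for instance from a datasheet); the function name and sample values are hypothetical.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion.

    `measurements` is a list of (value, variance) pairs. Returns
    (fused_value, fused_variance). More precise sensors get more weight.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in measurements):
        raise ValueError("variances must be positive")

    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical pair: a precise sensor (variance 1.0) and a noisier one (variance 4.0).
fused_value, fused_variance = fuse([(20.0, 1.0), (22.0, 4.0)])
```

Under these assumptions the fused variance is never larger than that of the best single sensor, which is the sense in which fusion compensates for individual sensor weaknesses.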

Key Industry Players in Sensor QA and Diagnostics

The sensor data quality assurance and remote diagnostics market is currently in a growth phase, with increasing adoption across healthcare, industrial, and automotive sectors. The global market size is estimated to reach $15-20 billion by 2025, driven by IoT proliferation and demand for predictive maintenance solutions. Technology maturity varies by application, with companies like Philips, Hitachi, and Mitsubishi Electric leading in healthcare diagnostics through advanced AI-powered monitoring systems. In the industrial sector, ABB Group, YASKAWA, and Caterpillar have developed sophisticated sensor networks for equipment monitoring, while automotive applications are advanced by companies like T-Mobile and Fujitsu. Emerging players like Eko Health are disrupting traditional approaches with innovative mobile diagnostic platforms, indicating a competitive landscape that balances established industrial giants with agile technology innovators.

Koninklijke Philips NV

Technical Solution: Philips has developed an advanced sensor data quality assurance system that integrates real-time monitoring with AI-driven analytics for healthcare applications. Their solution employs a multi-layered approach combining hardware sensors with sophisticated software algorithms to ensure data integrity. The system features automatic calibration mechanisms that continuously adjust sensor parameters based on environmental conditions and usage patterns. Philips' remote diagnostics platform enables healthcare providers to monitor medical devices across distributed locations, with capabilities for predictive maintenance that can identify potential failures before they occur. The system employs end-to-end encryption for secure data transmission and includes comprehensive audit trails for regulatory compliance. Their cloud-based analytics engine processes sensor data to identify anomalies and quality issues, while machine learning algorithms continuously improve detection accuracy over time[1][3].

Strengths: Extensive healthcare domain expertise allows for highly specialized sensor solutions tailored to medical applications. Their integrated ecosystem approach provides seamless connectivity between devices and diagnostic systems. Weaknesses: Solutions may be costly compared to competitors, and their proprietary systems can limit interoperability with third-party devices.

Cardiac Pacemakers, Inc.

Technical Solution: Cardiac Pacemakers has pioneered remote monitoring technology specifically for implantable cardiac devices. Their sensor data quality assurance system employs proprietary algorithms to filter signal noise and ensure accurate cardiac rhythm detection. The platform features continuous self-diagnostic capabilities that verify sensor functionality and data integrity through multiple redundant checks. Their remote diagnostics solution enables clinicians to access real-time patient data through a secure cloud platform, with automated alerts for critical events or data anomalies. The system incorporates machine learning to establish personalized baselines for each patient, improving the accuracy of anomaly detection. Their technology includes sophisticated power management features that optimize battery life while maintaining high-frequency data sampling when needed. The platform also provides comprehensive data visualization tools that help clinicians quickly identify trends and potential issues in cardiac device performance[2][5].

Strengths: Highly specialized in cardiac monitoring with deep expertise in implantable device technology and critical data reliability requirements. Their systems feature exceptional battery optimization crucial for implantable devices. Weaknesses: Narrow focus on cardiac applications limits broader market applications, and their solutions may have limited integration capabilities with general hospital information systems.

Core Technologies for Sensor Data Validation

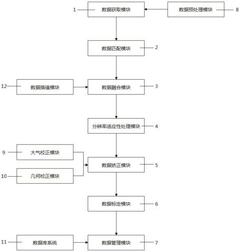

Remote sensing data quality monitoring system with extensible functions and performance

Patent: CN118779307A (Pending)

Innovation

- A remote sensing data quality monitoring system with scalable functions and performance. It unifies the scale and resolution of satellite data and UAV data through modules for data matching, resolution-adaptive processing, data correction, and data calibration, which eliminates spatial resolution differences, generates integrated images, and improves data accuracy and precision.

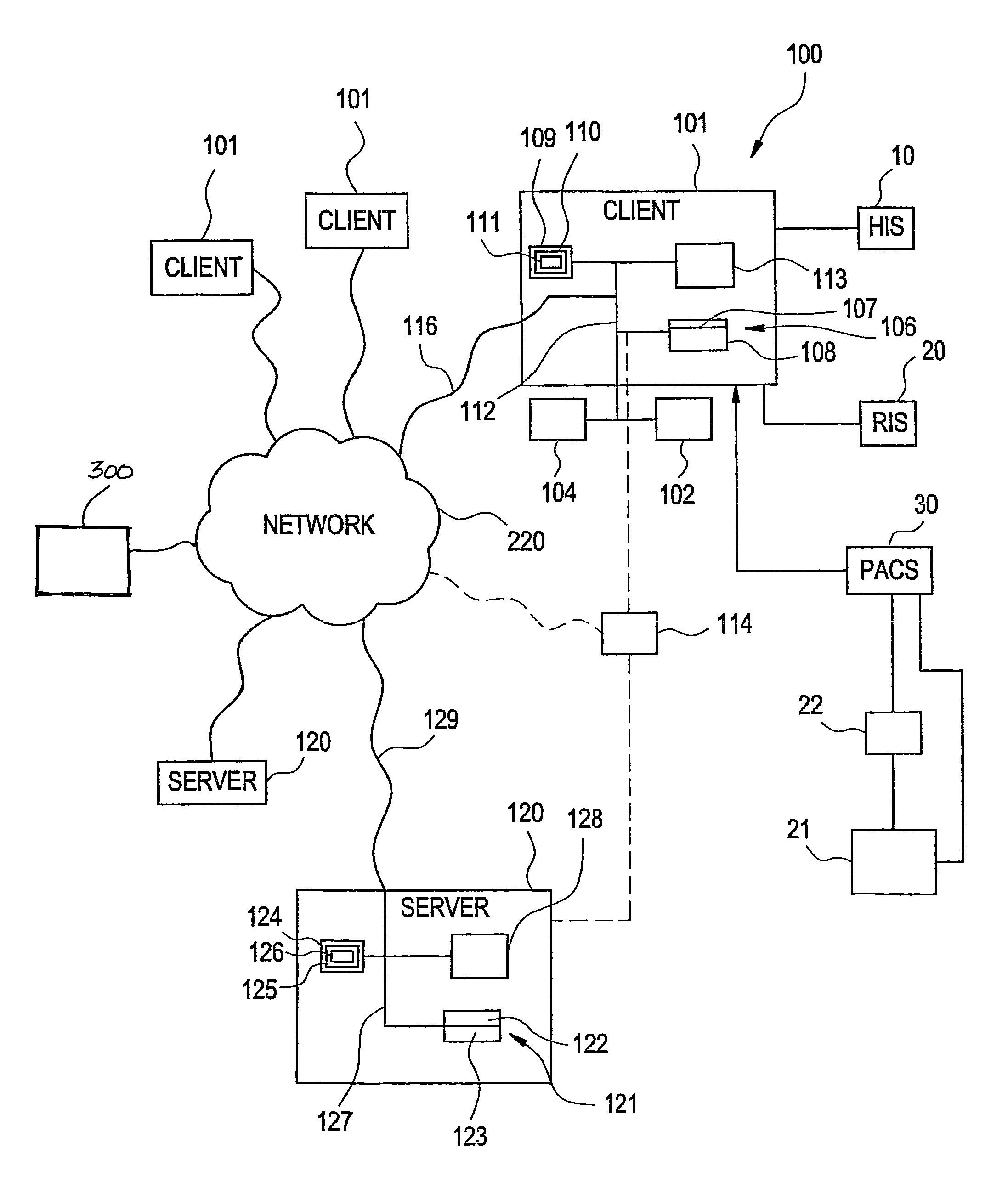

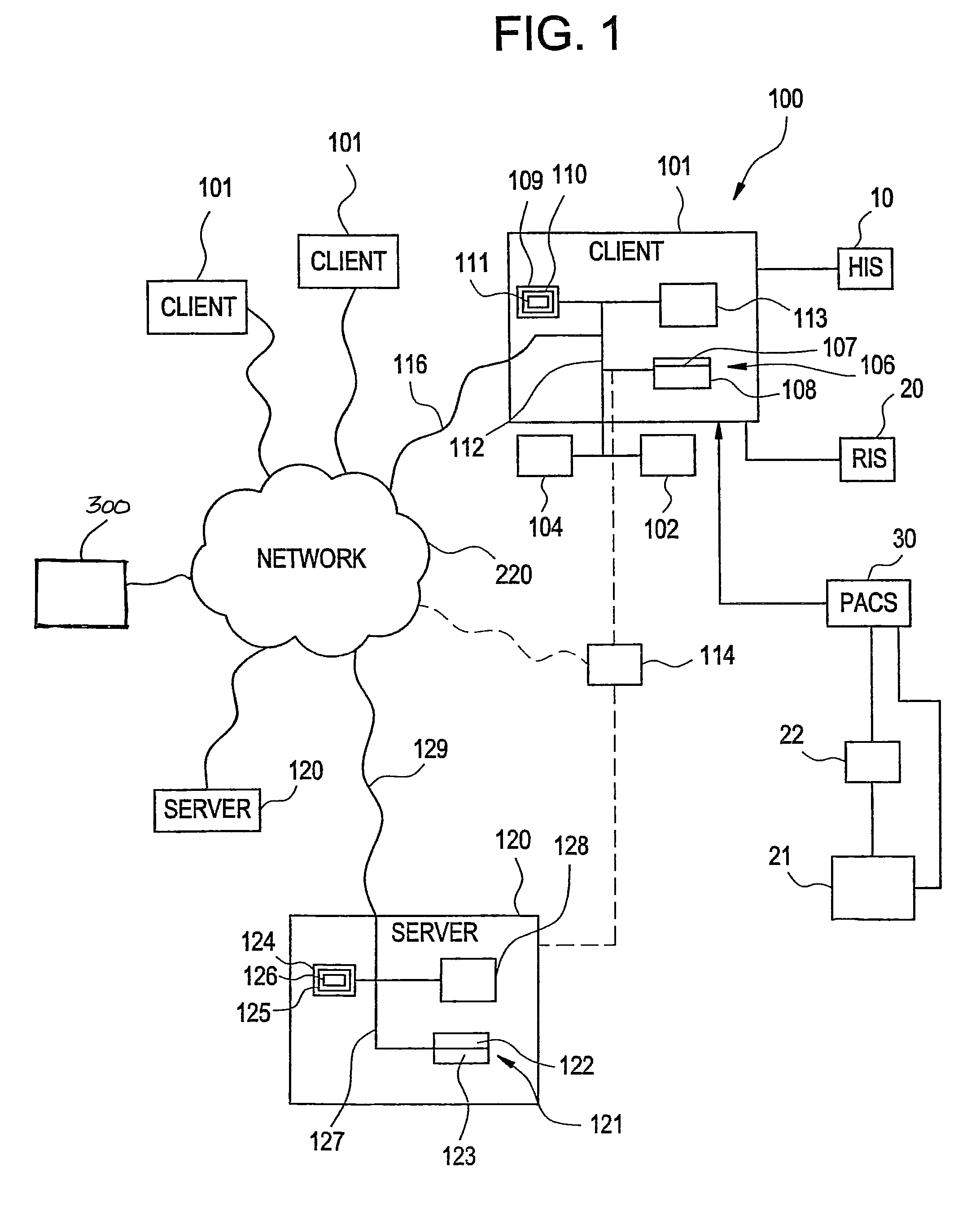

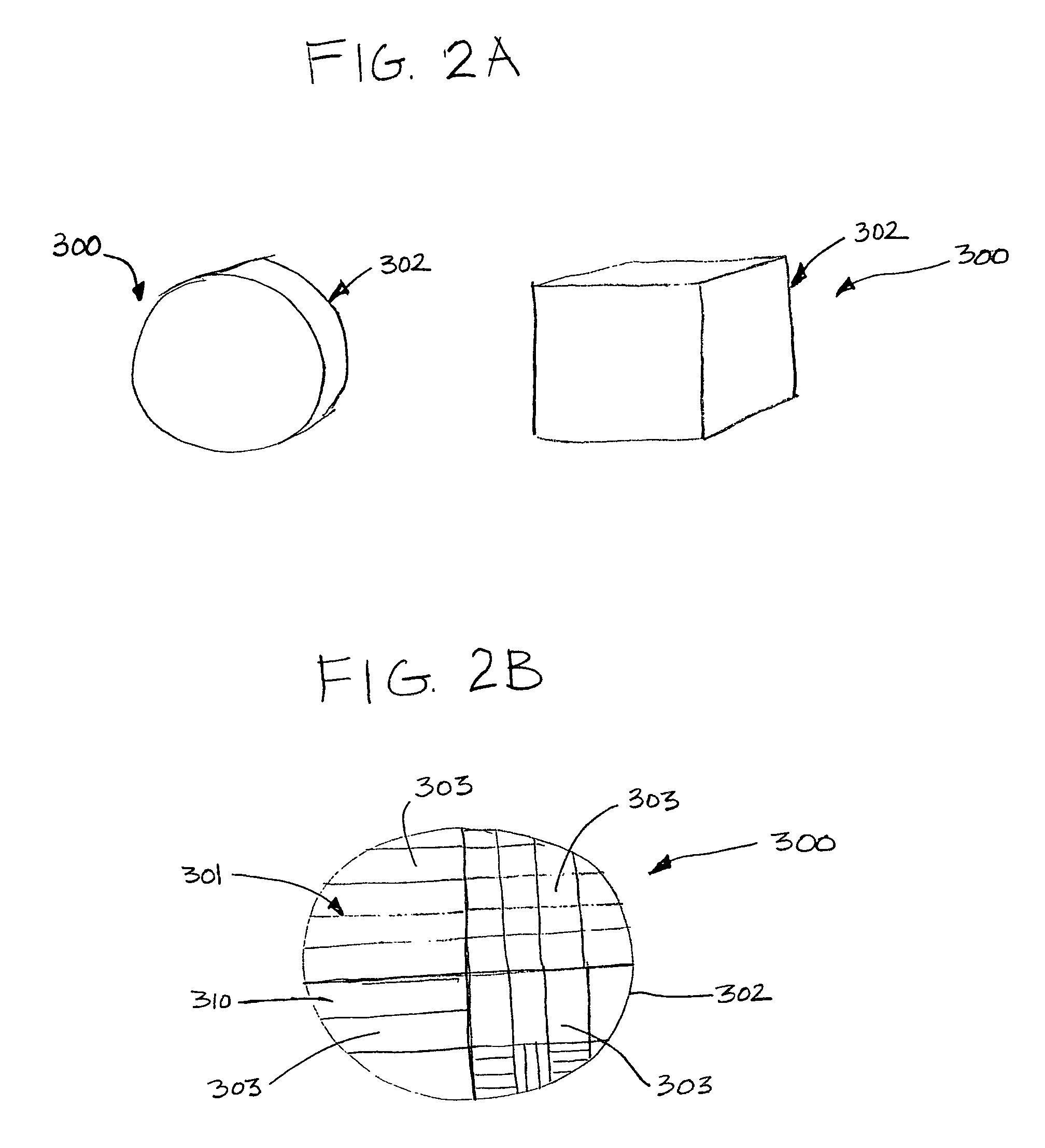

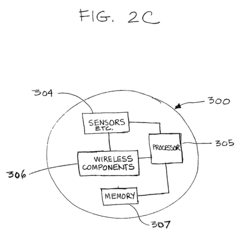

Multi-functional medical imaging quality assurance sensor

Patent: US8333508B2 (Inactive)

Innovation

- A combined QA/QC technology using a sensor to automate and objectify QA analysis, establishing objective quality metrics that are recorded in a database for tracking and analysis, providing automated decision support and meta-analysis for improving image quality and patient safety, and creating evidence-based guidelines.

Data Security and Privacy Considerations

Data security and privacy are central concerns in sensor data quality assurance and remote diagnostics. As sensor networks expand across industrial environments, healthcare systems, and consumer applications, the volume of potentially sensitive data being collected has grown sharply, creating significant security challenges.

The protection of sensor data requires a multi-layered approach addressing both data in transit and at rest. TLS (the successor to the now-deprecated SSL) for data transmission and AES-256 for storage have become industry standards, yet implementation across diverse sensor platforms remains inconsistent. Organizations must establish end-to-end encryption policies that account for the often limited computational capabilities of edge devices without compromising security integrity.

Access control mechanisms represent another critical component of sensor data protection. Implementing robust authentication protocols, including multi-factor authentication for administrative access and role-based access controls, helps prevent unauthorized data exposure. The principle of least privilege should guide all access management strategies, particularly for remote diagnostic operations where system vulnerabilities may be exposed.

Privacy considerations extend beyond technical safeguards to regulatory compliance. Frameworks such as GDPR in Europe, CCPA in California, and industry-specific regulations like HIPAA for healthcare sensors impose strict requirements on data handling practices. Organizations must implement privacy-by-design principles, including data minimization, purpose limitation, and transparent processing policies.

The challenge of data anonymization deserves special attention in sensor networks. While complete anonymization may compromise diagnostic capabilities, techniques such as differential privacy and pseudonymization offer balanced approaches that preserve analytical utility while protecting individual privacy. These methods become especially important when sensor data is aggregated for trend analysis or machine learning applications.
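The differential privacy idea mentioned above can be illustrated with the Laplace mechanism applied to an aggregated mean. This is a teaching sketch only, under stated assumptions: readings are clamped to known bounds, the number of readings is public, and noise is drawn with Python's `random` module, which is not suitable for real privacy guarantees. The function name and the heart-rate example are hypothetical; a production system would rely on a vetted differential privacy library.

```python
import random


def private_mean(values, lo, hi, epsilon, rng=None):
    """Mean of `values` with Laplace noise calibrated to `epsilon`.

    Values are clamped to [lo, hi], so changing one reading moves the
    mean by at most (hi - lo) / n. That bound is the sensitivity.
    """
    if not values:
        raise ValueError("need at least one value")
    if epsilon <= 0 or hi <= lo:
        raise ValueError("epsilon must be positive and hi must exceed lo")

    rng = rng or random.Random()
    clamped = [min(max(v, lo), hi) for v in values]
    true_mean = sum(clamped) / len(clamped)

    sensitivity = (hi - lo) / len(clamped)
    scale = sensitivity / epsilon
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace distribution with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_mean + noise


# Hypothetical heart-rate readings (beats per minute) from several wearables.
rates = [62, 71, 80, 75, 68, 90, 77, 64]
released = private_mean(rates, lo=30, hi=220, epsilon=1.0, rng=random.Random(7))
```

Smaller values of `epsilon` add more noise and give stronger privacy; larger groups reduce the noise needed, which is why the technique fits aggregated trend analysis better than per-device diagnostics.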

Remote diagnostic operations introduce additional security vectors that must be addressed. Secure remote access protocols, including VPN technologies and jump servers, should be mandatory for all diagnostic sessions. Furthermore, comprehensive logging and monitoring systems must track all remote interactions with sensor data, enabling rapid detection of potential security breaches or privacy violations.

As sensor networks increasingly incorporate edge computing capabilities, security architectures must evolve to protect distributed processing environments. This includes implementing secure boot processes, regular firmware updates, and hardware-based security features such as trusted platform modules (TPMs) to establish root-of-trust mechanisms at the device level.

Standardization Efforts in Sensor Data Quality

The standardization of sensor data quality has become a critical focus area in the IoT and industrial automation sectors. Organizations such as IEEE, ISO, and IEC have developed comprehensive frameworks that establish uniform metrics, protocols, and methodologies for evaluating sensor data quality. The IEEE 2700-2017 standard specifically addresses sensor performance parameters, providing a common language for manufacturers and users to describe and compare sensor capabilities across different applications and environments.

ISO/IEC JTC 1/SC 41 has made significant contributions through its work on IoT reference architectures, which include provisions for data quality assurance in sensor networks. These standards define key quality attributes such as accuracy, precision, reliability, and timeliness, enabling consistent evaluation across diverse sensor deployments. Additionally, the W3C's Semantic Sensor Network (SSN) Ontology offers a standardized vocabulary for describing sensors and their measurements, facilitating interoperability in heterogeneous systems.
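To make these attributes concrete, the sketch below computes simple indicators for three of them. The formulas are common working definitions chosen for illustration, not definitions taken from the standards themselves, and the function names are hypothetical.

```python
from statistics import mean, stdev


def accuracy_error(readings, reference: float) -> float:
    """Mean absolute error against a trusted reference value (lower is better)."""
    return mean([abs(r - reference) for r in readings])


def precision_spread(readings) -> float:
    """Sample standard deviation of repeated readings (lower is better)."""
    return stdev(readings)  # requires at least two readings


def timeliness_ratio(latencies_s, max_latency_s: float) -> float:
    """Fraction of readings delivered within the allowed latency."""
    on_time = sum(1 for latency in latencies_s if latency <= max_latency_s)
    return on_time / len(latencies_s)
```

For example, four readings of `[20.1, 19.9, 20.0, 20.2]` against a reference of `20.0` give a mean absolute error of about 0.1, and latencies of `[0.2, 0.5, 1.4, 0.3]` seconds with a 1.0 second limit give a timeliness ratio of 0.75.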

Industry-specific standardization efforts have emerged to address unique sectoral requirements. In healthcare, the HL7 FHIR standard defines resources such as Observation and Device for exchanging device measurements together with their context, which supports quality assessment of medical sensor data. In automotive applications, the AUTOSAR standard includes specifications relevant to sensor data validation and verification. These domain-specific standards complement broader frameworks while addressing particular challenges in their respective fields.

Open-source initiatives have accelerated standardization adoption through reference implementations and testing tools. The Linux Foundation's EdgeX Foundry project provides open, vendor-neutral APIs for collecting and processing device data at the edge, on which quality validation can be built, while IoT projects at the Apache Software Foundation offer frameworks in which quality assurance mechanisms aligned with international standards can be implemented. These initiatives reduce implementation barriers and promote wider adoption of standardized approaches.

Regulatory bodies have increasingly incorporated sensor data quality standards into compliance frameworks. The FDA's guidance on medical device software includes requirements relevant to sensor data quality assurance, while the European Union's GDPR, through its accuracy principle in Article 5(1)(d), has implications for the quality management of personal data collected through sensors. This regulatory integration underscores the growing recognition of data quality as a critical component of system reliability and safety.

Emerging trends in standardization include the development of machine learning-specific quality metrics for sensor data preprocessing and validation. Standards bodies are working to establish benchmarks for evaluating AI-enhanced sensor systems, addressing challenges in data drift detection and automated quality control. These efforts reflect the evolving nature of sensor technologies and their increasingly sophisticated applications in autonomous systems.
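Data drift detection, mentioned above, can be illustrated with a deliberately simple check that compares the mean of a recent window against a baseline. This sketch assumes roughly stationary, independent readings and uses a hypothetical threshold of three standard errors; it is one basic heuristic, not a benchmark defined by any standards body.

```python
import math
from statistics import mean, stdev


def mean_shift_score(baseline, recent) -> float:
    """Distance between window means, in units of the recent window's standard error."""
    sigma = stdev(baseline)  # requires at least two baseline readings
    if sigma == 0:
        raise ValueError("baseline has zero variance; score is undefined")
    standard_error = sigma / math.sqrt(len(recent))
    return abs(mean(recent) - mean(baseline)) / standard_error


def has_drifted(baseline, recent, threshold: float = 3.0) -> bool:
    """Flag drift when the recent mean is more than `threshold` standard errors away."""
    return mean_shift_score(baseline, recent) > threshold
```

A check like this only detects shifts in the mean. Changes in variance or distribution shape would need other tests, which is part of why common benchmarks for drift detection are still being developed.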
