Calibration Methods And Drift Compensation Techniques

AUG 28, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Calibration Evolution and Objectives

Calibration methods have evolved significantly over the past decades, transforming from basic manual adjustments to sophisticated automated systems that incorporate artificial intelligence and machine learning. The earliest calibration techniques emerged in the 1950s with the advent of electronic measurement instruments, requiring periodic manual adjustments to maintain accuracy. These rudimentary methods were labor-intensive and prone to human error, limiting their effectiveness in precision applications.

The 1970s and 1980s marked a significant transition with the introduction of digital calibration techniques. This period saw the development of microprocessor-based calibration systems that could store calibration parameters and perform automatic adjustments. These advancements reduced human intervention and improved measurement consistency, though drift compensation remained a challenge.

By the 1990s, self-calibration mechanisms began to appear in high-precision instruments. These systems could detect deviations from standard values and make necessary adjustments without external intervention. This era also witnessed the emergence of mathematical models for predicting and compensating for drift, particularly in temperature-sensitive applications.

The early 2000s brought network-enabled calibration systems that could be monitored and adjusted remotely. This development coincided with the rise of integrated sensor networks and IoT applications, where maintaining calibration across distributed systems became increasingly important. During this period, adaptive calibration algorithms that could respond to changing environmental conditions gained prominence.

Current calibration technology has evolved to incorporate real-time drift compensation techniques that continuously monitor and adjust for variations in sensor performance. Machine learning algorithms now analyze historical calibration data to predict drift patterns and preemptively adjust parameters before measurement quality deteriorates. These systems can identify complex, non-linear relationships between environmental factors and measurement drift.

The primary objectives of modern calibration methods include achieving higher accuracy across wider operating ranges, reducing calibration frequency while maintaining precision, and developing universal calibration approaches applicable across different sensor types. Additionally, there is a growing focus on developing calibration techniques that can function effectively in harsh or extreme environments where traditional methods fail.

Another key goal is the development of traceable calibration methods that maintain an unbroken chain to international standards, ensuring global consistency in measurements. As sensors become more integrated into critical systems, calibration methods must also address security concerns, preventing unauthorized manipulation of calibration parameters that could compromise system integrity or safety.
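One common way to address the tampering concern is to authenticate calibration parameters with a keyed hash before a device accepts them. The sketch below uses Python's standard `hmac` module (HMAC-SHA256) over a canonical JSON encoding. The parameter names (`sensor_id`, `gain`, `offset`) and the shared provisioning key are hypothetical. A real deployment would also need key management and replay protection, which are not shown.

```python
import hashlib
import hmac
import json


def _canonical_bytes(params: dict) -> bytes:
    # Sorted keys and fixed separators so identical parameters always serialize identically.
    return json.dumps(params, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_calibration(params: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the calibration parameters."""
    return hmac.new(key, _canonical_bytes(params), hashlib.sha256).hexdigest()


def verify_calibration(params: dict, tag: str, key: bytes) -> bool:
    """Accept parameters only if the tag matches (constant-time comparison)."""
    return hmac.compare_digest(sign_calibration(params, key), tag)


if __name__ == "__main__":
    key = b"hypothetical-provisioning-key"  # hypothetical shared secret
    params = {"sensor_id": "TS-01", "gain": 1.0021, "offset": -0.0134}  # hypothetical values
    tag = sign_calibration(params, key)
    print(verify_calibration(params, tag, key))                    # True
    print(verify_calibration(dict(params, offset=0.5), tag, key))  # False
```

The device refuses any parameter set whose tag does not verify, so an attacker without the key cannot quietly shift `offset` or `gain`.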

Market Demand for Precision Calibration Solutions

The global market for precision calibration solutions has witnessed substantial growth in recent years, driven primarily by the increasing demand for high-accuracy measurements across various industries. The calibration methods and drift compensation techniques market is projected to reach $9.8 billion by 2027, growing at a CAGR of 5.7% from 2022. This growth trajectory underscores the critical importance of these technologies in modern industrial applications.

Manufacturing sectors, particularly aerospace, automotive, and electronics, represent the largest market segments for precision calibration solutions. These industries require extremely accurate measurements to ensure product quality, safety, and compliance with increasingly stringent regulatory standards. The semiconductor industry alone accounts for approximately 23% of the total market demand, as nanometer-scale manufacturing processes necessitate unprecedented levels of calibration precision.

Healthcare and medical device manufacturing have emerged as rapidly expanding markets for advanced calibration technologies. With the rise of personalized medicine and point-of-care diagnostics, the need for precisely calibrated instruments has intensified. Market research indicates that healthcare-related calibration solution demand is growing at 7.2% annually, outpacing the overall market average.

The Industrial Internet of Things (IIoT) and Industry 4.0 initiatives are creating new market opportunities for automated and intelligent calibration systems. Organizations implementing smart factory concepts require calibration solutions that can integrate with their digital infrastructure, providing real-time monitoring and adjustment capabilities. This segment is expected to grow by 9.3% annually through 2027.

Geographically, North America and Europe currently dominate the market for precision calibration solutions, collectively accounting for approximately 58% of global demand. However, the Asia-Pacific region is experiencing the fastest growth rate at 8.1% annually, driven by rapid industrialization in countries like China, India, and South Korea.

Environmental monitoring and renewable energy sectors represent emerging markets with significant growth potential. As climate change concerns intensify, the demand for precisely calibrated environmental sensors and monitoring equipment continues to rise. Similarly, the renewable energy sector requires highly accurate calibration for efficiency optimization in solar panels, wind turbines, and energy storage systems.

Customer preferences are increasingly shifting toward comprehensive calibration solutions that address both initial calibration and long-term drift compensation. End-users are willing to pay premium prices for systems that can maintain accuracy over extended periods, reducing recalibration frequency and associated downtime costs. This trend is particularly pronounced in continuous process industries where production interruptions for recalibration can cost thousands of dollars per hour.

Current Challenges in Calibration and Drift Compensation

Despite significant advancements in calibration methods and drift compensation techniques, several persistent challenges continue to impede optimal sensor performance across various applications. Environmental factors remain a primary concern, as temperature fluctuations, humidity changes, and pressure variations can significantly alter sensor responses, requiring complex compensation algorithms that must adapt to multiple variables simultaneously.

Time-dependent drift presents another substantial challenge, manifesting as gradual shifts in sensor output that occur even under stable conditions. This phenomenon becomes particularly problematic in long-term monitoring applications where recalibration opportunities are limited, such as in remote environmental sensing or implantable medical devices. Current mathematical models struggle to accurately predict non-linear drift patterns that evolve over extended timeframes.
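A simple baseline for time-dependent drift is to fit a straight line to the errors observed at periodic reference checks and subtract it from later readings. This minimal sketch assumes drift is approximately linear over the interval of interest. Because real drift is often non-linear, the fit should be re-estimated at each check rather than extrapolated indefinitely. The function names are illustrative.

```python
from typing import Sequence, Tuple


def fit_linear_drift(times: Sequence[float], errors: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of error(t) = a + b * t from reference-check results.

    errors[i] is (instrument reading - reference value) at times[i].
    Returns (a, b): offset at t = 0 and drift rate per time unit.
    """
    n = len(times)
    if n < 2 or n != len(errors):
        raise ValueError("need at least two paired reference checks")
    mean_t = sum(times) / n
    mean_e = sum(errors) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("reference checks must occur at distinct times")
    sxy = sum((t - mean_t) * (e - mean_e) for t, e in zip(times, errors))
    b = sxy / sxx
    a = mean_e - b * mean_t
    return a, b


def correct_reading(raw: float, t: float, a: float, b: float) -> float:
    """Subtract the drift predicted by the linear model at time t."""
    return raw - (a + b * t)
```

For example, reference checks at days 0, 30, 60 and 90 showing errors of 0.00, 0.15, 0.30 and 0.45 units give a drift rate of about 0.005 units per day.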

Cross-sensitivity issues further complicate calibration efforts, as many sensors respond not only to their target analyte but also to interfering substances. For instance, electrochemical gas sensors designed to detect specific gases often exhibit responses to other gases with similar electrochemical properties, necessitating sophisticated discrimination algorithms that are difficult to maintain over time.
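Suppose two sensors each respond partly to the other's target gas, and the responses are approximately linear and additive. In that case the true concentrations can be recovered by inverting a 2×2 sensitivity matrix determined during calibration. The sketch below assumes that linear model. The sensitivity values in the example are hypothetical and not taken from any specific sensor.

```python
from typing import Sequence, Tuple


def solve_cross_sensitivity(readings: Sequence[float],
                            sensitivity: Sequence[Sequence[float]],
                            zero: Sequence[float] = (0.0, 0.0)) -> Tuple[float, float]:
    """Recover two concentrations from two cross-sensitive sensor readings.

    Assumed model: reading_i = zero_i + S[i][0] * conc_0 + S[i][1] * conc_1
    """
    (s11, s12), (s21, s22) = sensitivity
    r1 = readings[0] - zero[0]
    r2 = readings[1] - zero[1]
    det = s11 * s22 - s12 * s21
    if abs(det) < 1e-12:
        raise ValueError("sensitivity matrix is singular; gases cannot be separated")
    # Cramer's rule for the 2x2 linear system S @ conc = r.
    c1 = (s22 * r1 - s12 * r2) / det
    c2 = (s11 * r2 - s21 * r1) / det
    return c1, c2
```

With S = [[1.0, 0.2], [0.05, 1.0]] and readings (52.0, 12.5), the function returns approximately (50.0, 10.0). A near-singular matrix means the two sensors cannot distinguish the gases. This is one reason discrimination degrades as the sensitivities themselves drift away from their calibrated values.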

The trade-off between calibration frequency and operational efficiency represents a significant economic challenge. While frequent calibration ensures accuracy, it increases downtime and operational costs. Conversely, extended intervals between calibrations improve efficiency but risk compromised data quality. Current automated calibration systems have not fully resolved this dilemma, particularly in industrial settings where production continuity is critical.
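If drift is assumed to accumulate linearly, the trade-off can be made concrete. The longest acceptable interval equals the portion of the tolerance you are willing to consume (a guard band) divided by the drift rate. The 80% default guard fraction below is an illustrative assumption, not a standard value.

```python
import math


def max_calibration_interval(tolerance: float, drift_rate: float,
                             guard_fraction: float = 0.8) -> float:
    """Longest interval before linearly accumulating drift consumes
    guard_fraction of the tolerance.

    tolerance: allowed error magnitude (same units as the measurement)
    drift_rate: drift per unit time (e.g. units per day); sign is ignored
    guard_fraction: share of the tolerance budget allotted to drift (assumed 0.8)
    Returns the interval in the time unit of drift_rate.
    """
    if tolerance <= 0:
        raise ValueError("tolerance must be positive")
    if not 0 < guard_fraction <= 1:
        raise ValueError("guard_fraction must be in (0, 1]")
    if drift_rate == 0:
        return math.inf
    return guard_fraction * tolerance / abs(drift_rate)
```

With an 80% guard band, a sensor with a ±0.5 unit tolerance drifting 0.01 units per day would need recalibration roughly every 40 days.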

Miniaturization trends in sensor technology have introduced additional calibration complexities. As sensors become smaller and integrated into compact devices, physical access for traditional calibration procedures becomes restricted. This limitation has spurred research into embedded self-calibration mechanisms, though these often suffer from resource constraints and limited reference standards.
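A common embedded self-calibration pattern is a two-point correction against internal references. The converter measures a shorted (zero) input and an on-chip reference of known value, then derives gain and offset from those two readings. The sketch assumes an ideal, stable reference and a linear transfer function. The reference voltage and count values in the example are hypothetical.

```python
from typing import Tuple


def self_calibrate(raw_zero: float, raw_ref: float, ref_value: float) -> Tuple[float, float]:
    """Derive gain and offset from two internal reference measurements.

    raw_zero: raw reading with the input internally shorted (true value assumed 0)
    raw_ref: raw reading with the internal reference connected
    ref_value: known value of the internal reference (e.g. volts)
    Returns (gain, offset) such that value = gain * (raw - offset).
    """
    span = raw_ref - raw_zero
    if span == 0:
        raise ValueError("zero and reference readings must differ")
    return ref_value / span, raw_zero


def apply_calibration(raw: float, gain: float, offset: float) -> float:
    """Convert a raw reading using the self-calibrated gain and offset."""
    return gain * (raw - offset)
```

For example, suppose the zero reading is 12 counts and the 1.2 V reference reads 2060 counts. A raw value of 1036 counts then maps to 0.6 V. The accuracy of the result is limited by the stability of the internal reference, which is the "limited reference standards" constraint mentioned above.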

Data-driven calibration approaches using machine learning show promise but face challenges related to training data quality and model generalization. These methods typically require extensive datasets covering all potential operating conditions, a requirement that proves impractical for many applications. Additionally, the black-box nature of some advanced algorithms raises concerns regarding traceability and validation in regulated industries.

Standardization remains inadequate across different sensor types and applications, with varying protocols and metrics for evaluating calibration performance. This lack of uniformity complicates comparative assessments and technology transfer, particularly as sensors increasingly operate within interconnected systems requiring consistent data quality across multiple measurement points.

State-of-the-Art Drift Compensation Approaches

01 Real-time calibration and drift compensation in communication systems

Communication systems often require real-time calibration and drift compensation techniques to maintain signal integrity. These methods involve continuous monitoring of signal parameters and applying adaptive corrections to compensate for environmental changes, component aging, and other factors that cause drift. Advanced algorithms can detect deviations from expected performance and automatically adjust system parameters to maintain optimal operation, ensuring reliable data transmission even under varying conditions.

- Sensor Calibration Techniques for Drift Compensation: Various sensor calibration techniques are employed to compensate for drift in measurement systems. These methods involve periodic recalibration of sensors against known reference standards to maintain accuracy over time. Advanced algorithms can detect when drift occurs and automatically adjust sensor readings. Some systems implement continuous calibration processes that run in the background during normal operation, reducing the need for system downtime.

- Digital Signal Processing Methods for Drift Correction: Digital signal processing techniques are used to identify and correct drift in electronic systems. These methods employ mathematical algorithms to analyze signal patterns and distinguish between actual data and drift-induced errors. Adaptive filtering, Fourier transforms, and wavelet analysis can be applied to isolate drift components from the signal. Real-time processing allows for immediate compensation of drift effects, maintaining system accuracy under varying environmental conditions.

- Temperature Compensation in Calibration Systems: Temperature variations often cause significant drift in electronic and mechanical systems. Specialized temperature compensation techniques involve the use of temperature sensors to monitor ambient conditions and apply corresponding corrections to measurements. Some systems use lookup tables or mathematical models that describe the relationship between temperature and drift. Advanced implementations may include multiple temperature sensors placed at strategic locations to account for thermal gradients across the system.

- Machine Learning Approaches for Drift Prediction and Compensation: Machine learning algorithms are increasingly used to predict and compensate for drift in complex systems. These approaches analyze historical data to identify patterns in drift behavior and develop predictive models. Neural networks and other AI techniques can adapt to changing conditions and improve compensation accuracy over time. Some implementations combine multiple machine learning methods to handle different aspects of drift, such as long-term trends versus short-term fluctuations.

- Wireless and Remote Calibration Systems: Modern calibration systems often incorporate wireless technology to enable remote monitoring and adjustment for drift compensation. These systems allow for calibration without physical access to the equipment, reducing maintenance costs and downtime. Cloud-based platforms can store calibration data and automatically apply corrections across distributed sensor networks. Some implementations use secure communication protocols to ensure the integrity of calibration data and prevent unauthorized adjustments.
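The lookup-table approach to temperature compensation can be sketched as linear interpolation between characterized temperature points. The table values below are hypothetical characterization data. Readings outside the characterized range are clamped to the nearest endpoint rather than extrapolated, which is one conservative design choice among several.

```python
import bisect
from typing import Sequence

# Hypothetical characterization data: sensor offset error observed at each temperature.
TEMPS_C = [-20.0, 0.0, 25.0, 50.0, 85.0]
OFFSETS = [0.12, 0.05, 0.0, -0.04, -0.11]


def offset_at(temp_c: float, temps: Sequence[float] = TEMPS_C,
              offsets: Sequence[float] = OFFSETS) -> float:
    """Linearly interpolate the offset error at temp_c; clamp outside the table."""
    if len(temps) != len(offsets) or len(temps) < 2:
        raise ValueError("table needs at least two matching entries")
    if temp_c <= temps[0]:
        return offsets[0]
    if temp_c >= temps[-1]:
        return offsets[-1]
    i = bisect.bisect_right(temps, temp_c)  # temps[i - 1] <= temp_c < temps[i]
    t0, t1 = temps[i - 1], temps[i]
    o0, o1 = offsets[i - 1], offsets[i]
    return o0 + (o1 - o0) * (temp_c - t0) / (t1 - t0)


def compensate(raw: float, temp_c: float) -> float:
    """Remove the temperature-dependent offset from a raw reading."""
    return raw - offset_at(temp_c)
```

At 37.5 °C, halfway between the 25 °C and 50 °C entries, the interpolated offset is -0.02. Adding more table points where the error curve bends most is usually cheaper than moving to a higher-order model.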

02 Sensor calibration methods for environmental monitoring

Environmental monitoring systems employ various calibration methods to ensure accurate measurements despite sensor drift over time. These techniques include reference measurement comparison, multi-point calibration procedures, and statistical correction models. By implementing regular calibration routines and drift compensation algorithms, these systems can maintain measurement accuracy across changing environmental conditions, temperature variations, and extended deployment periods.

03 Frequency and phase drift compensation in electronic circuits

Electronic circuits, particularly oscillators and timing systems, require sophisticated drift compensation techniques to maintain frequency and phase stability. These methods include temperature compensation circuits, feedback control systems, and digital correction algorithms. By continuously monitoring performance parameters and applying appropriate corrections, these systems can achieve high precision timing despite component aging, temperature variations, and other factors that would otherwise cause frequency drift.

04 Machine learning approaches for adaptive calibration

Modern calibration systems increasingly incorporate machine learning algorithms to provide adaptive drift compensation. These approaches use historical data to predict drift patterns and automatically adjust calibration parameters. Neural networks, regression models, and other AI techniques can identify complex relationships between environmental factors and measurement errors, enabling more effective compensation strategies that improve over time through continuous learning from operational data.

05 Automotive sensor calibration and drift management

Automotive systems employ specialized calibration methods to manage sensor drift in critical applications like engine control, emissions monitoring, and advanced driver assistance systems. These techniques include on-board diagnostic routines, reference signal comparison, and multi-sensor fusion approaches. By implementing robust drift compensation algorithms, vehicle systems can maintain accurate measurements throughout the vehicle's operational life despite exposure to extreme temperatures, vibration, and aging effects.
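Reference signal comparison in a vehicle can be illustrated with a yaw-rate gyro. Its zero-rate bias can be re-estimated whenever the wheel-speed sensors indicate standstill, because the true yaw rate is then assumed to be zero. This is a simplified sketch, not a production ADAS algorithm. The class name, the smoothing factor `alpha`, and the standstill threshold are assumptions.

```python
class YawRateBiasEstimator:
    """Re-estimates a yaw-rate gyro's zero-rate bias during standstill.

    Assumption: when the wheel-speed reference reads (near) zero, the true
    yaw rate is zero, so the raw gyro output equals its bias.
    """

    def __init__(self, alpha: float = 0.05, standstill_speed: float = 0.1) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha                        # smoothing factor for the bias update
        self.standstill_speed = standstill_speed  # wheel-speed threshold, e.g. m/s
        self.bias = 0.0

    def update(self, yaw_rate_raw: float, wheel_speed: float) -> float:
        """Return the bias-corrected yaw rate, updating the bias only at standstill."""
        if abs(wheel_speed) < self.standstill_speed:
            # Exponential moving average pulls the estimate toward the standstill reading.
            self.bias += self.alpha * (yaw_rate_raw - self.bias)
        return yaw_rate_raw - self.bias
```

Updating only at standstill keeps real vehicle motion from being absorbed into the bias. A smaller `alpha` rejects noise better but tracks slow thermal drift more sluggishly.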

Leading Calibration Technology Providers

The calibration methods and drift compensation techniques market is currently in a growth phase, with increasing demand driven by the need for precision in industrial automation, automotive, and electronics sectors. The market size is expanding due to the integration of these technologies in IoT devices and smart manufacturing systems. Technologically, the field shows varying maturity levels, with established players like Robert Bosch GmbH, Infineon Technologies, and Honda Motor leading with comprehensive solutions. Applied Materials and MediaTek are advancing semiconductor-specific calibration techniques, while newer entrants like trinamiX and ROCSYS are developing specialized applications in biometrics and EV charging. Academic institutions such as KAIST and Southeast University are contributing significant research to address emerging challenges in sensor drift compensation and calibration automation.

Robert Bosch GmbH

Technical Solution: Bosch has developed advanced sensor calibration methods that combine hardware and software solutions to address drift compensation in automotive and industrial applications. Their approach utilizes multi-sensor fusion techniques with integrated temperature compensation algorithms that continuously monitor and adjust for environmental variations. The company's MEMS sensor calibration technology employs machine learning algorithms to identify drift patterns over time and automatically applies correction factors. Bosch's proprietary Dynamic Calibration System (DCS) implements a closed-loop feedback mechanism that periodically performs self-calibration routines during system operation, eliminating the need for manual recalibration. This system can detect and compensate for both short-term fluctuations and long-term aging effects in sensors, maintaining measurement accuracy throughout the device lifecycle[1][3].

Strengths: Comprehensive multi-sensor fusion approach provides redundancy and improved accuracy; adaptive algorithms learn from historical data to predict and compensate for drift patterns; seamless integration with existing automotive and industrial systems. Weaknesses: Higher computational requirements for real-time processing; complex implementation requiring specialized expertise; potentially higher cost compared to simpler calibration methods.

KUKA Deutschland GmbH

Technical Solution: KUKA has pioneered calibration methods specifically designed for industrial robotics that address both geometric and non-geometric error sources. Their approach combines physical modeling with empirical compensation techniques to achieve high precision in robot movements. KUKA's calibration system utilizes laser tracking technology to measure actual robot positions and compares them with commanded positions, creating comprehensive error maps. These maps are then used to implement real-time compensation algorithms that adjust robot movements to account for identified errors. For drift compensation, KUKA employs temperature sensors throughout the robot structure to monitor thermal expansion effects and applies dynamic compensation models that adjust parameters based on operating conditions. Their latest systems incorporate AI-based predictive maintenance that can detect calibration drift before it impacts production quality[2][5].

Strengths: Highly specialized for industrial robotics applications; comprehensive approach addressing both geometric and non-geometric errors; integration with production monitoring systems for continuous quality assurance. Weaknesses: Requires specialized measurement equipment for initial calibration; system complexity increases with robot size and degrees of freedom; calibration process can be time-consuming for high-precision applications.
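The thermal expansion effect that KUKA's temperature sensors monitor can be estimated to first order with the linear expansion relation ΔL = α·L₀·ΔT. The sketch below computes that error for a single structural member. The expansion coefficient (11.5 × 10⁻⁶ per kelvin, a typical order of magnitude for steel) and the 20 °C reference temperature are assumptions for illustration. A real robot model would combine such terms across all links and joints.

```python
STEEL_ALPHA = 11.5e-6  # assumed typical linear expansion coefficient for steel, per kelvin


def thermal_elongation(length_m: float, alpha_per_k: float,
                       temp_c: float, ref_temp_c: float = 20.0) -> float:
    """First-order length change dL = alpha * L0 * (T - T_ref), in metres."""
    return alpha_per_k * length_m * (temp_c - ref_temp_c)


def compensated_length(length_m: float, alpha_per_k: float,
                       temp_c: float, ref_temp_c: float = 20.0) -> float:
    """Length a kinematic model should use at temp_c instead of the nominal value."""
    return length_m + thermal_elongation(length_m, alpha_per_k, temp_c, ref_temp_c)
```

For a 1.0 m steel member warming from 20 °C to 30 °C, this gives about 0.115 mm of elongation, which is not negligible for high-precision robot tasks.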

Key Innovations in Sensor Calibration Techniques

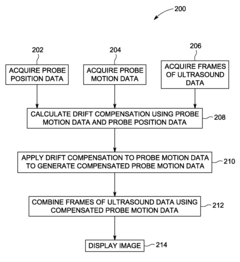

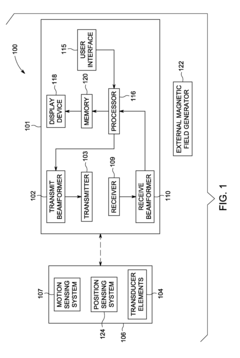

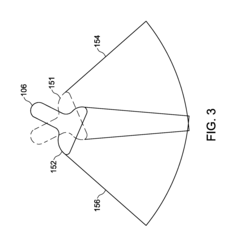

Ultrasound imaging system and method for drift compensation

Patent · Inactive · US9504445B2

Innovation

- A method and system that acquire probe motion and position data, allowing a processor to calculate and apply drift compensation, enabling accurate generation of composite ultrasound data by combining frames based on compensated motion data.

Method for compensating a spectrum drift in a spectrometer

Patent · Active · US20180128679A1

Innovation

- A method that generates and uses a catalogue of correction lines to calculate a correction function for peak positions, allowing for real-time drift correction during measurements without additional hardware or separate calibrations, using existing data from base calibration and current sample measurements.
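The sketch below illustrates the general idea of a correction function for peak positions. It is not the patented method, whose details are not given here. It fits a linear map from measured to nominal positions of known reference lines and applies that map to sample peaks. The linear form, the function names, and the example line positions are assumptions.

```python
from typing import List, Sequence, Tuple


def fit_correction(measured: Sequence[float], nominal: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of nominal ≈ a + b * measured over reference lines."""
    n = len(measured)
    if n < 2 or n != len(nominal):
        raise ValueError("need at least two paired reference lines")
    mx = sum(measured) / n
    my = sum(nominal) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    if sxx == 0:
        raise ValueError("reference lines must be at distinct positions")
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, nominal))
    b = sxy / sxx
    return my - b * mx, b


def correct_peaks(peaks: Sequence[float], a: float, b: float) -> List[float]:
    """Map measured sample peak positions onto the corrected axis."""
    return [a + b * p for p in peaks]
```

Suppose reference lines nominally at 200, 400 and 600 are measured at 200.7, 400.9 and 601.1. A sample peak measured at 300.8 is then corrected to approximately 300.0.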

Cross-Industry Calibration Standards

Cross-industry calibration standards represent a critical framework for ensuring measurement consistency and reliability across diverse industrial sectors. The establishment of unified calibration protocols has evolved significantly over the past decades, with organizations like ISO, NIST, and BIPM leading standardization efforts. These standards provide a common language for calibration procedures, enabling industries ranging from aerospace to healthcare to maintain comparable measurement accuracy.

The implementation of cross-industry standards addresses the fundamental challenge of measurement drift compensation through standardized methodologies. For instance, ISO/IEC 17025 specifies general requirements for the competence of testing and calibration laboratories, so that calibration procedures meet consistent quality requirements regardless of industry application. This standardization facilitates technology transfer between sectors and reduces redundant development of similar calibration techniques.

Key cross-industry calibration standards incorporate specific drift compensation techniques that can be adapted across multiple domains. The IEEE 1451 family of standards, for example, defines smart transducer interfaces, including Transducer Electronic Data Sheets (TEDS) that can store calibration data, which supports drift compensation in both manufacturing and environmental monitoring. Similarly, the ASTM E2655 standard outlines practices for establishing measurement traceability that incorporate drift management protocols.

Recent advancements in cross-industry standards have focused on dynamic calibration approaches that address temporal drift challenges. These standards increasingly incorporate statistical methods for uncertainty quantification and machine learning techniques for predictive drift compensation. The IEC 61298 series specifically addresses performance requirements for industrial process measurement and control equipment, with dedicated sections on managing measurement drift across diverse operating conditions.
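Statistical uncertainty quantification in calibration commonly follows the GUM (JCGM 100) approach. Uncorrelated standard uncertainties, each scaled by its sensitivity coefficient, combine in root-sum-square. An expanded uncertainty is then obtained with a coverage factor k, where k = 2 is commonly used for roughly 95% coverage under a normal distribution. The sketch below shows that arithmetic, including how to convert a drift allowance treated as a rectangular distribution. The budget entries are hypothetical.

```python
import math
from typing import Iterable, Tuple


def combined_standard_uncertainty(components: Iterable[Tuple[float, float]]) -> float:
    """Root-sum-square of c_i * u_i for uncorrelated (sensitivity c_i, uncertainty u_i) pairs."""
    return math.sqrt(sum((c * u) ** 2 for c, u in components))


def rectangular_standard_uncertainty(half_width: float) -> float:
    """Standard uncertainty of a rectangular distribution of half-width a: a / sqrt(3)."""
    return half_width / math.sqrt(3)


def expanded_uncertainty(u_c: float, k: float = 2.0) -> float:
    """Expanded uncertainty U = k * u_c."""
    return k * u_c
```

As an example, take three contributions: a reference standard at 0.02 units, a ±0.05 unit drift allowance (0.05/√3 ≈ 0.029 units), and 0.01 units of repeatability. They combine to about 0.036 units, or about 0.073 units expanded with k = 2.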

Digital calibration certificates (DCCs) have emerged as a standardized format for documenting calibration results across industries, facilitating automated verification and drift monitoring. This digital transformation of calibration documentation enables more efficient tracking of instrument performance over time and supports proactive maintenance scheduling based on observed drift patterns.

The economic impact of cross-industry calibration standards is substantial, with studies indicating that standardized calibration practices reduce measurement-related costs by 15-30% through improved interoperability and reduced recalibration frequency. Furthermore, these standards enable regulatory compliance across multiple jurisdictions, creating a more streamlined path to global market access for measurement-dependent products and services.

The implementation of cross-industry standards addresses the fundamental challenge of measurement drift compensation through standardized methodologies. For instance, the ISO/IEC 17025 standard offers comprehensive guidelines for calibration laboratories, ensuring that calibration procedures meet universal quality requirements regardless of industry application. This standardization facilitates technology transfer between sectors and reduces redundant development of similar calibration techniques.

Key cross-industry calibration standards incorporate specific drift compensation techniques that can be adapted across multiple domains. The IEEE-1451 family of standards, for example, provides frameworks for smart transducer interfaces that include built-in drift compensation algorithms applicable in both manufacturing and environmental monitoring. Similarly, the ASTM E2655 standard outlines practices for establishing measurement traceability that incorporate drift management protocols.

Recent advancements in cross-industry standards have focused on dynamic calibration approaches that address temporal drift challenges. These standards increasingly incorporate statistical methods for uncertainty quantification and machine learning techniques for predictive drift compensation. The IEC 61298 series specifically addresses performance requirements for industrial process measurement and control equipment, with dedicated sections on managing measurement drift across diverse operating conditions.

Digital calibration certificates (DCCs) have emerged as a standardized format for documenting calibration results across industries, facilitating automated verification and drift monitoring. This digital transformation of calibration documentation enables more efficient tracking of instrument performance over time and supports proactive maintenance scheduling based on observed drift patterns.

Environmental Factors Affecting Calibration Stability

Environmental factors strongly influence the stability and reliability of calibration across industries. Temperature fluctuations are among the most significant, because they cause thermal expansion or contraction of sensor components and so produce measurement drift. Research indicates that certain precision instruments may drift by up to 0.05% of full scale for every 1°C change in ambient temperature, which calls for either temperature compensation algorithms or controlled environments.
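
At 0.05% of full scale per °C, a 10°C excursion produces 0.5% of full scale, or 5 units on a 1000-unit range. The sketch below removes such a drift under the assumptions that the temperature effect is linear, that its coefficient and sign have been characterized for the specific instrument, and that calibration was performed at a reference temperature of 20°C; all three are illustrative choices, not values from a particular instrument.

```python
def temperature_compensate(raw, temp_c, full_scale,
                           coeff_fs_per_c=0.0005, ref_temp_c=20.0):
    """Remove a linear temperature drift expressed as a fraction of full
    scale per degree Celsius.

    coeff_fs_per_c = 0.0005 corresponds to 0.05 % FS/degC. The sign and
    magnitude must be characterized for each instrument (assumed here).
    """
    drift = coeff_fs_per_c * full_scale * (temp_c - ref_temp_c)
    return raw - drift
```

For a 1000-unit instrument reading 500 at 30°C, the estimated drift is 5 units and the compensated reading is 495.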

Humidity variations also affect calibration stability, particularly in capacitive and resistive sensing technologies. In high-humidity environments, condensation on sensitive components can create parasitic electrical pathways and degrade signal integrity. Studies by national metrology institutes report that, without proper compensation, a 20% change in relative humidity can induce measurement errors exceeding 2% in certain electrochemical sensors.

Barometric pressure changes affect calibration stability in pneumatic systems, gas analyzers, and altitude-sensitive equipment. These variations are particularly problematic in aerospace applications and weather monitoring stations, where pressure fluctuations directly influence measurement accuracy. Modern compensation techniques include real-time pressure monitoring with dynamic calibration adjustments.
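
For gas analyzers whose response scales with the number density of the target gas, a pressure and temperature correction follows from the ideal gas law (number density proportional to P/T). The sketch below assumes that proportional response and calibration conditions of 101.325 kPa and 293.15 K; real analyzers may need empirically characterized, non-ideal correction terms instead.

```python
def normalize_to_calibration_conditions(raw, pressure_kpa, temp_k,
                                        cal_pressure_kpa=101.325,
                                        cal_temp_k=293.15):
    """Scale a density-dependent reading back to calibration conditions.

    Assumes the sensor response is proportional to gas number density,
    which for an ideal gas scales as P / T. Lower pressure or higher
    temperature means fewer molecules in the measurement path, so the raw
    reading is scaled up accordingly.
    """
    if pressure_kpa <= 0 or temp_k <= 0:
        raise ValueError("pressure and absolute temperature must be positive")
    return raw * (cal_pressure_kpa / pressure_kpa) * (temp_k / cal_temp_k)
```

At 80 kPa (roughly the pressure at about 2000 m elevation) and the calibration temperature, a raw reading of 400 is corrected to about 506.6.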

Electromagnetic interference (EMI) represents another significant environmental factor, with industrial environments often containing multiple sources of electrical noise that can corrupt sensor signals. Shielding techniques, differential signaling, and digital filtering have emerged as standard practices to mitigate EMI effects, though complete elimination remains challenging in complex industrial settings.
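
Shielding and differential signaling are hardware measures, but the digital filtering step can be sketched in a few lines. The example below shows two common filters, chosen here as illustrations rather than as a recommended design: a sliding median, which rejects short impulsive spikes such as switching transients, and an exponential moving average (a first-order low-pass filter) for broadband noise. Window length and smoothing factor are assumed values that would have to be tuned to the signal bandwidth.

```python
from statistics import median


def median_filter(samples, window=5):
    """Sliding median; suppresses short impulsive spikes.

    Near the edges the window is truncated, so fewer samples are used.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        out.append(median(samples[lo:hi]))
    return out


def exponential_moving_average(samples, alpha=0.2):
    """First-order IIR low-pass: y[n] = alpha * x[n] + (1 - alpha) * y[n-1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    out = []
    y = None
    for x in samples:
        y = x if y is None else alpha * x + (1 - alpha) * y
        out.append(y)
    return out
```

A median filter removes an isolated spike entirely, whereas averaging filters only spread it out, which is why the two are often combined: median first, then low-pass.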

Vibration and mechanical shock can physically alter the positioning of calibrated components, particularly in precision mechanical systems and optical alignments. Research from automotive testing laboratories indicates that continuous vibration exposure can accelerate calibration drift by up to 300% compared to static conditions, highlighting the need for vibration-resistant designs and mounting solutions.

Chemical exposure presents unique challenges to calibration stability, especially in process industries where sensors may encounter corrosive substances. Material degradation from chemical interactions can permanently alter sensor characteristics, which calls for specialized protective coatings and for recalibration schedules driven by cumulative exposure rather than fixed time intervals.
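
Exposure-based scheduling can be sketched as a dose budget: accumulate concentration multiplied by time and flag recalibration once the budget is used up. The `ExposureTracker` class and its linear dose model are hypothetical; real degradation may depend nonlinearly on concentration, temperature, and the specific chemical, and the budget itself would have to be established empirically for each sensor type.

```python
from dataclasses import dataclass


@dataclass
class ExposureTracker:
    """Hypothetical tracker that flags recalibration after a dose budget.

    Assumes degradation is proportional to concentration x time (a linear
    dose model); the budget is an assumed, empirically derived value.
    """

    dose_budget: float  # e.g. ppm*h allowed between calibrations
    accumulated: float = 0.0

    def record(self, concentration: float, hours: float) -> None:
        if concentration < 0 or hours < 0:
            raise ValueError("concentration and duration must be non-negative")
        self.accumulated += concentration * hours

    def recalibration_due(self) -> bool:
        return self.accumulated >= self.dose_budget

    def reset_after_calibration(self) -> None:
        self.accumulated = 0.0
```

With a budget of 1000 ppm·h, ten hours at 50 ppm uses half the budget, and a further five hours at 100 ppm triggers recalibration, regardless of how much calendar time has passed.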

Light exposure affects photoelectric sensors and optical measurement systems, with ambient light variations introducing measurement errors. Modern systems incorporate light shields, modulated light sources, and background compensation algorithms to maintain calibration stability across varying lighting conditions.
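
With a modulated light source, the detector can be read alternately with the source on and off; ambient light appears in both readings and cancels in the difference. The sketch below shows this on/off differencing, a simple form of synchronous detection with square-wave modulation, assuming the samples are interleaved and the ambient level changes slowly compared with the modulation period.

```python
def background_corrected_signal(on_samples, off_samples):
    """Average the (source on - source off) differences.

    Ambient light is present in both readings and cancels, provided it
    varies slowly relative to the on/off switching rate (assumed).
    """
    if len(on_samples) != len(off_samples) or not on_samples:
        raise ValueError("need equal, non-empty lists of on/off samples")
    diffs = [on - off for on, off in zip(on_samples, off_samples)]
    return sum(diffs) / len(diffs)
```

If ambient light rises from 100 to 140 units while the source contributes a constant 5 units, the corrected signal remains 5.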
