Device Reliability Characterization In Memristor-Based In-Memory Computing

SEP 2, 2025 · 10 MIN READ

Memristor IMC Reliability Background and Objectives

Memristor-based In-Memory Computing (IMC) is a computing paradigm that addresses the von Neumann bottleneck by performing computation in the same physical location where data is stored. Its foundations trace back to Leon Chua's theoretical proposal of the memristor in 1971 and to HP Labs' 2008 demonstration of a physical device exhibiting memristive behavior, a significant milestone in non-volatile memory development.

The technological trajectory of memristors has been characterized by continuous improvements in material science, fabrication techniques, and circuit design. Initially developed as simple two-terminal devices exhibiting resistive switching, memristors have evolved into components capable of performing computational tasks such as matrix-vector multiplication directly within memory arrays, reducing the need to transfer data between separate processing and storage units.

Current research trends indicate a growing interest in leveraging memristors for artificial intelligence applications, particularly in neural network implementations where the inherent analog nature of memristors aligns well with the computational requirements of synaptic weight storage and processing. This convergence of memory and computing functionalities promises significant improvements in energy efficiency, processing speed, and system footprint.

However, device reliability remains a critical challenge that impedes the widespread adoption of memristor-based IMC systems. Reliability issues manifest in various forms, including resistance state drift, endurance limitations, retention degradation, and variability across devices. These challenges are particularly pronounced in large-scale arrays where uniform performance across thousands or millions of devices is essential for computational accuracy.
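
The link between device variability and computational accuracy can be seen in an idealized crossbar, where each column current is the dot product of the input voltages with that column's conductances (Ohm's law per device, Kirchhoff's current law per column). The sketch below is a minimal illustration under stated assumptions: ideal wires, no sneak paths, and a multiplicative lognormal model of device-to-device variation. The function names and sigma values are illustrative, not measured data.

```python
import math
import random


def crossbar_mvm(voltages, conductances):
    """Ideal crossbar read: column current I_j = sum_i V_i * G_ij.

    conductances[i][j] is the device at row i, column j (siemens);
    voltages[i] is the read voltage applied to row i (volts).
    """
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def perturb(conductances, sigma, rng):
    """Assumed lognormal device-to-device variation: G' = G * exp(N(0, sigma))."""
    return [[g * math.exp(rng.gauss(0.0, sigma)) for g in row]
            for row in conductances]


def relative_error(ideal, actual):
    """Relative L2 error of the column currents."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(ideal, actual)))
    den = math.sqrt(sum(a * a for a in ideal))
    return num / den


if __name__ == "__main__":
    rng = random.Random(0)
    g = [[rng.uniform(1e-6, 1e-4) for _ in range(8)] for _ in range(64)]
    v = [rng.uniform(0.0, 0.2) for _ in range(64)]
    ideal = crossbar_mvm(v, g)
    for sigma in (0.0, 0.05, 0.2):
        err = relative_error(ideal, crossbar_mvm(v, perturb(g, sigma, rng)))
        print(f"sigma={sigma:.2f}  relative output error={err:.3%}")
```

Every column sums contributions from many devices, so per-device errors accumulate in the output. This is why array-scale uniformity matters more for computation than for plain storage.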

The primary objective of reliability characterization in memristor-based IMC is to develop comprehensive understanding of failure mechanisms and their impact on computational accuracy and system longevity. This involves establishing standardized testing methodologies, identifying key reliability metrics, and creating predictive models that can accurately forecast device behavior under various operating conditions and over extended periods.

Additionally, this research aims to establish correlations between material properties, fabrication processes, and reliability parameters to guide the development of more robust memristive devices. By systematically investigating how different stress factors—such as temperature, voltage, and cycling—affect device performance, researchers seek to define operational boundaries that ensure reliable computation while maximizing device utilization.

Ultimately, the goal is to enable the transition of memristor-based IMC from laboratory demonstrations to commercial applications by addressing reliability concerns that currently limit their practical implementation in mission-critical systems and consumer electronics.

Market Analysis for Memristor-Based Computing Solutions

The memristor-based in-memory computing market is experiencing significant growth, driven by increasing demand for energy-efficient computing solutions capable of handling AI and big data workloads. Market projections put the global neuromorphic computing market, which includes memristor technologies, at approximately $8.3 billion by 2025, reflecting a compound annual growth rate (CAGR) of 49.2% from 2020.
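
The two growth figures quoted above are linked by the compound-growth relation V2025 = V2020 * (1 + r)^5. Inverting that relation is a quick consistency check. With V2025 = $8.3 billion and r = 0.492, the implied 2020 market is roughly $1.1 billion. The sketch only performs this arithmetic and adds no market data of its own.

```python
def implied_base_value(final_value, cagr, years):
    """Invert final = base * (1 + cagr) ** years to recover the starting value."""
    return final_value / (1.0 + cagr) ** years


def project(base_value, cagr, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years


# Figures as quoted in the text: $8.3B in 2025 at a 49.2% CAGR from 2020.
base_2020 = implied_base_value(8.3, 0.492, 5)
print(f"Implied 2020 market size: ${base_2020:.2f}B")
```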

The primary market segments for memristor-based computing solutions include edge computing devices, data centers, autonomous vehicles, and IoT applications. Edge computing represents the fastest-growing segment due to the increasing need for real-time data processing capabilities in smart devices without reliance on cloud infrastructure. Data centers constitute the largest market segment by revenue, as operators seek solutions to reduce power consumption while handling exponentially growing data volumes.

Industry analysis reveals that North America currently dominates the market with approximately 42% share, followed by Asia-Pacific at 31% and Europe at 22%. The Asia-Pacific region, particularly China and South Korea, is expected to demonstrate the highest growth rate over the next five years due to substantial investments in semiconductor research and manufacturing infrastructure.

Customer demand is primarily driven by three factors: power efficiency, processing speed, and integration capabilities. Enterprise surveys indicate that 78% of potential adopters cite energy efficiency as their primary concern when considering memristor-based solutions, while 65% prioritize computational performance improvements over conventional architectures.

Market barriers include high initial implementation costs, reliability concerns, and integration challenges with existing computing infrastructure. The average cost premium for memristor-based solutions currently stands at 2.3 times that of conventional computing hardware, though this gap is expected to narrow as manufacturing scales up and yields improve.

Competitive analysis shows that the market remains fragmented, with both established semiconductor companies and specialized startups competing for market share. The top five companies currently control approximately 57% of the market, with the remainder distributed among smaller specialized players focusing on niche applications or specific technological approaches.

Market forecasts suggest that reliability improvements in memristor devices could accelerate market adoption by 18-24 months compared to current projections. Specifically, enhancing device endurance from current levels (typically 10^6-10^8 cycles) to 10^10 cycles would address a critical barrier identified by 83% of potential enterprise customers in recent industry surveys.

Current Challenges in Memristor Device Reliability

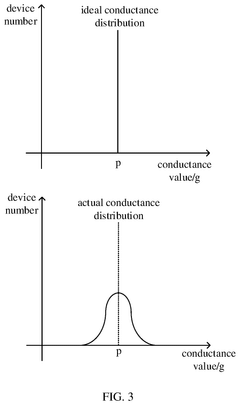

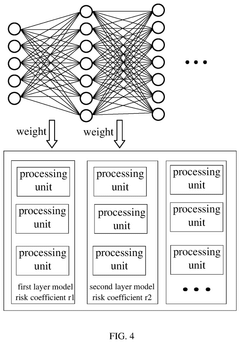

Despite significant advancements in memristor technology, several critical reliability challenges persist that impede the widespread adoption of memristor-based in-memory computing systems. The foremost challenge is device variability, which manifests as inconsistent switching behavior across different devices and even within the same device over time. This variability stems from stochastic filament formation processes, manufacturing inconsistencies, and material defects, resulting in unpredictable resistance states that compromise computational accuracy.
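
A basic step in characterizing this variability is to separate device-to-device (D2D) variability from cycle-to-cycle (C2C) variability. The minimal sketch below assumes a matrix of resistance readings taken from several devices over repeated switching cycles. It uses the coefficient of variation (sigma/mu) as the metric, which is one common convention rather than a standardized definition. The readings in the usage example are made up for illustration.

```python
import statistics


def coefficient_of_variation(values):
    """sigma / mu, a dimensionless variability metric."""
    return statistics.pstdev(values) / statistics.fmean(values)


def variability_metrics(resistance):
    """resistance[d][c]: resistance of device d read after switching cycle c.

    Returns (c2c_cv, d2d_cv):
      - C2C: mean over devices of each device's CV across its own cycles
      - D2D: CV of the per-device mean resistances
    """
    c2c = statistics.fmean([coefficient_of_variation(row) for row in resistance])
    d2d = coefficient_of_variation([statistics.fmean(row) for row in resistance])
    return c2c, d2d


if __name__ == "__main__":
    # Hypothetical HRS readings (ohms): 3 devices x 4 cycles.
    readings = [
        [98e3, 105e3, 101e3, 96e3],
        [120e3, 118e3, 125e3, 117e3],
        [85e3, 90e3, 88e3, 83e3],
    ]
    c2c, d2d = variability_metrics(readings)
    print(f"C2C CV = {c2c:.3f}, D2D CV = {d2d:.3f}")
```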

Endurance limitations represent another significant hurdle. Current memristor devices typically support only 10^6 to 10^9 write cycles before their performance degrades. This falls short of the requirements of write-intensive applications, in which individual cells may need to be reprogrammed more often than this over the system's service life. The physical mechanisms behind this degradation include electrode material migration, oxygen vacancy redistribution, and structural changes in the switching layer that progressively alter device characteristics.
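
To put the 10^6 to 10^9 cycle range in context, endurance can be converted into service life for a given per-cell write rate. The minimal sketch below assumes a constant write rate and no wear leveling; the write rates are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lifetime_years(endurance_cycles, writes_per_second):
    """Years until one cell exhausts its rated endurance at a constant write rate."""
    return endurance_cycles / writes_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for endurance in (1e6, 1e9, 1e10):
        for rate in (1.0, 1000.0):  # hypothetical per-cell write rates (writes/s)
            years = lifetime_years(endurance, rate)
            print(f"{endurance:.0e} cycles @ {rate:g} writes/s -> {years:.3g} years")
```

At one write per second, for example, a 10^6-cycle device is exhausted in under two weeks (10^6 s is about 11.6 days), whereas a 10^9-cycle device lasts about three decades.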

Retention stability poses additional concerns, particularly in environments with temperature fluctuations. Many memristors exhibit resistance drift over time, with stored values gradually shifting from their programmed states. This phenomenon becomes more pronounced at elevated temperatures, creating reliability issues for long-term data storage and computation integrity in real-world applications where thermal conditions vary.

The read disturbance effect presents a subtle but critical challenge: repeated read operations can unintentionally shift the resistance state of a memristor. Because reads are therefore not perfectly non-destructive, the design of reliable memory arrays becomes more complicated and requires error correction mechanisms that increase system overhead and power consumption.

Sneak path currents in crossbar architectures constitute a significant reliability concern. Current flowing through unselected devices distorts read results and, during write operations, can cause unintended state changes in adjacent devices. These parasitic currents can lead to erroneous computations and accelerated device degradation, particularly as array densities increase and operating voltages decrease to improve energy efficiency.
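
A widely used worst-case approximation shows how sneak currents erode the sensing window. It models a passive (selector-less) crossbar read with the unselected lines left floating. Every unselected cell is assumed to be in the low-resistance state, wire resistance is ignored, and the sneak network is reduced to three groups of parallel cells connected in series. The minimal sketch below compares the sensed current with the selected cell in its low-resistance state versus its high-resistance state. The resistance values in the usage example are hypothetical.

```python
def sneak_resistance(n_rows, n_cols, r_on):
    """Worst-case sneak-network resistance for a floating-line passive crossbar.

    Assumes all unselected cells are in LRS (r_on) and ignores wire resistance.
    Three parallel groups in series: (n_cols-1) cells on the selected row,
    (n_rows-1)*(n_cols-1) cells in the unselected block, and (n_rows-1) cells
    on the selected column.
    """
    if n_rows < 2 or n_cols < 2:
        raise ValueError("sneak paths need at least a 2x2 array")
    a, b = n_cols - 1, n_rows - 1
    return r_on / a + r_on / (a * b) + r_on / b


def on_off_current_ratio(n_rows, n_cols, r_on, r_off, v_read=0.2):
    """Sensed-current ratio (selected cell in LRS vs HRS) including sneak current."""
    i_sneak = v_read / sneak_resistance(n_rows, n_cols, r_on)
    i_lrs = v_read / r_on + i_sneak
    i_hrs = v_read / r_off + i_sneak
    return i_lrs / i_hrs


if __name__ == "__main__":
    R_ON, R_OFF = 10e3, 100e3  # hypothetical LRS/HRS resistances (ohms)
    for n in (2, 8, 64, 512):
        ratio = on_off_current_ratio(n, n, R_ON, R_OFF)
        print(f"{n}x{n} array: LRS/HRS current ratio = {ratio:.3f}")
```

Without sneak paths the ratio would equal R_OFF/R_ON. As the array grows, the sneak current dominates both readings and the ratio collapses toward 1. This is the motivation for selector devices and for biasing schemes that do not leave lines floating.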

Environmental sensitivity further complicates reliability characterization, with memristor performance being highly susceptible to temperature variations, humidity, and electromagnetic interference. This sensitivity necessitates robust packaging solutions and environmental controls that add complexity and cost to practical implementations.

Standardized testing methodologies for memristor reliability remain underdeveloped, with inconsistent protocols across research groups making direct performance comparisons challenging. The absence of industry-wide standards for characterizing device reliability metrics impedes meaningful benchmarking and slows technological maturation toward commercial viability.

Existing Reliability Characterization Methodologies

01 Reliability enhancement through error correction mechanisms

Memristor-based in-memory computing devices can incorporate error correction mechanisms to improve reliability. These mechanisms detect and correct errors that may occur during computation or data storage operations. Various error correction codes and techniques can be implemented to address issues such as resistance drift, device variability, and other factors that might affect the accuracy of computations performed within memristor arrays.

- Reliability enhancement through error correction mechanisms: Techniques such as parity checking, error-correcting codes, and redundancy schemes can be implemented to ensure data integrity and computational accuracy in memristor arrays, thereby enhancing the overall reliability of these devices.

- Thermal stability and aging management: Managing thermal effects and aging processes is crucial for the reliability of memristor-based computing devices. Techniques include temperature compensation circuits, adaptive programming schemes that adjust parameters based on device age, and thermal management systems that prevent overheating. These approaches help maintain consistent memristor characteristics over time and under varying operating conditions, ensuring long-term reliability and performance stability.

- Variability compensation and calibration techniques: Memristor devices exhibit inherent variability in their electrical characteristics, which can affect computing reliability. Advanced calibration techniques and compensation algorithms can be implemented to address this variability. These include adaptive programming schemes, reference-based calibration, and feedback mechanisms that adjust operational parameters based on real-time measurements, ensuring consistent performance across different memristor cells within an array.

- Fault-tolerant architecture design: Designing fault-tolerant architectures is essential for reliable memristor-based in-memory computing. These architectures incorporate redundant elements, bypass mechanisms for faulty components, and modular designs that isolate failures. Some implementations include hierarchical memory structures, distributed computing elements, and reconfigurable interconnects that can adapt to device failures, ensuring system functionality even when individual memristor elements fail.

- Programming and read scheme optimization: Optimizing programming and read schemes significantly improves the reliability of memristor-based computing devices. Advanced techniques include multi-level verification during programming, adaptive read voltage selection, pulse-width modulation, and current-limiting approaches. These methods minimize disturbances to adjacent cells, reduce read noise, and ensure accurate state transitions, thereby enhancing the overall reliability and precision of memristor-based computational operations.
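
The multi-level verification described in the last item is often realized as a program-and-verify loop. The loop reads the cell, compares the reading with the target, applies a corrective pulse, and repeats until the reading falls inside a tolerance band. The minimal sketch below illustrates this. Here `read` and `apply_pulse` are hypothetical hooks onto test hardware, and `ToyMemristor` is a made-up device model with fixed-size, optionally noisy conductance steps, used only to exercise the loop.

```python
import random


def program_and_verify(read, apply_pulse, target, tolerance, max_pulses=100):
    """Closed-loop write. Returns pulses used, or None if the cell never converged.

    read() returns the present conductance; apply_pulse(+1) applies a SET pulse
    and apply_pulse(-1) a RESET pulse (hypothetical hardware hooks).
    """
    pulses = 0
    while True:
        g = read()
        if abs(g - target) <= tolerance:
            return pulses
        if pulses >= max_pulses:
            return None
        apply_pulse(1 if g < target else -1)
        pulses += 1


class ToyMemristor:
    """Hypothetical device: each pulse moves conductance by a noisy step, clipped to [g_min, g_max]."""

    def __init__(self, g=10.0, step=1.0, noise=0.3, g_min=1.0, g_max=100.0, seed=0):
        self.g, self.step, self.noise = g, step, noise
        self.g_min, self.g_max = g_min, g_max
        self.rng = random.Random(seed)

    def read(self):
        return self.g

    def apply_pulse(self, direction):
        delta = direction * self.step * (1.0 + self.rng.gauss(0.0, self.noise))
        self.g = min(self.g_max, max(self.g_min, self.g + delta))


if __name__ == "__main__":
    cell = ToyMemristor(noise=0.3, seed=1)  # conductance in arbitrary units
    used = program_and_verify(cell.read, cell.apply_pulse, target=42.0, tolerance=0.5)
    print(f"pulses used: {used}, final conductance: {cell.read():.2f}")
```

The tolerance band trades programming time against precision. A narrower band stores weights more accurately but needs more pulses, and each pulse consumes endurance.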

02 Thermal stability and aging management techniques

Managing thermal effects and aging in memristor devices is crucial for maintaining reliability in in-memory computing applications. Techniques include temperature compensation algorithms, adaptive programming schemes, and specialized circuit designs that mitigate the impact of temperature variations and device aging. These approaches help maintain consistent resistance states over time, ensuring computational accuracy and extending device lifetime.

03 Redundancy and fault tolerance architectures

Implementing redundancy and fault tolerance in memristor-based computing architectures significantly improves reliability. These designs incorporate spare elements, parallel processing paths, and fault detection circuits that can bypass or compensate for failing memristor cells. Such architectures enable the system to continue functioning correctly even when individual memristive elements experience failures or degradation.

04 Adaptive programming and read schemes

Adaptive programming and read schemes dynamically adjust operational parameters based on device characteristics and environmental conditions. These techniques include variable pulse width/amplitude programming, closed-loop verification, and reference-based reading methods. By adapting to the specific behavior of individual memristor devices, these approaches ensure reliable data storage and computation despite device-to-device variations and temporal instabilities.

05 Reliability-aware circuit design and integration

Specialized circuit designs that account for memristor reliability challenges are essential for robust in-memory computing systems. These designs include sensing circuits with improved noise margins, interface circuits that handle the analog nature of memristive devices, and peripheral circuits that manage variability. Additionally, reliability-aware integration approaches consider system-level reliability factors including power distribution, signal integrity, and thermal management to ensure consistent operation.
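
At the array level, the redundancy and fault-tolerance approaches described in this section often reduce to steering logical columns away from physical columns that test as faulty. The minimal sketch of column sparing below assumes a fault map produced by a prior test pass and spare columns placed at the end of the array. Real designs may spare at row, tile, or device granularity, and the function name is hypothetical.

```python
def remap_columns(fault_map, n_logical, n_spare):
    """Map logical weight columns onto healthy physical columns.

    fault_map[c] is True if physical column c contains a stuck or
    out-of-spec device. The first n_logical healthy columns are used.
    Returns a list: logical column index -> physical column index.
    """
    n_physical = n_logical + n_spare
    if len(fault_map) != n_physical:
        raise ValueError("fault_map must cover every physical column")
    healthy = [c for c in range(n_physical) if not fault_map[c]]
    if len(healthy) < n_logical:
        n_faulty = n_physical - len(healthy)
        raise RuntimeError(f"{n_faulty} faulty columns exceed {n_spare} spares")
    return healthy[:n_logical]


if __name__ == "__main__":
    faults = [False, False, True, False, False, False]  # column 2 failed test
    print(remap_columns(faults, n_logical=4, n_spare=2))  # -> [0, 1, 3, 4]
```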

Leading Companies and Research Institutions in Memristor IMC

The memristor-based in-memory computing reliability characterization field is currently in its growth phase, with an estimated market size of $2-3 billion and projected annual growth of 25-30%. The competitive landscape features established technology leaders like HP Enterprise and IBM driving fundamental research, while semiconductor manufacturers including Micron Technology and SK Hynix focus on commercial implementation. Academic institutions such as Tsinghua University, KAIST, and Huazhong University of Science & Technology are contributing significant research advancements. The technology is approaching maturity with functional prototypes, but challenges in standardization and long-term reliability testing remain. Industry-academic collaborations are accelerating development, with HP Enterprise maintaining leadership through its pioneering memristor patents.

Hewlett Packard Enterprise Development LP

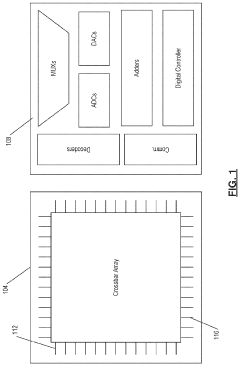

Technical Solution: Hewlett Packard Enterprise has pioneered memristor technology for in-memory computing with their crossbar architecture that enables high-density integration. Their approach focuses on characterizing device reliability through comprehensive testing methodologies including endurance cycling, retention testing, and variability analysis. HPE has developed proprietary techniques for real-time monitoring of resistance drift and implemented adaptive programming schemes that compensate for device-to-device variations[1]. Their memristor-based architecture incorporates error correction mechanisms and redundancy strategies to mitigate the impact of device failures. HPE has also introduced temperature-aware reliability models that account for thermal effects on memristor switching behavior and long-term stability[3]. Their characterization framework includes accelerated aging tests to predict device lifetime under various operating conditions.

Strengths: Industry-leading expertise in memristor fabrication and integration; comprehensive reliability testing infrastructure; advanced error correction techniques. Weaknesses: Their proprietary nature limits broader adoption; solutions may be optimized for specific applications rather than general-purpose computing.

Critical Patents and Research on Memristor Reliability

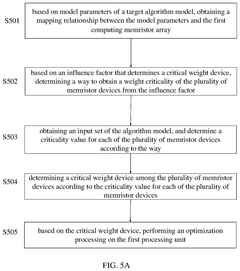

Computing apparatus and robustness processing method therefor

Patent (Pending): US20250095728A1

Innovation

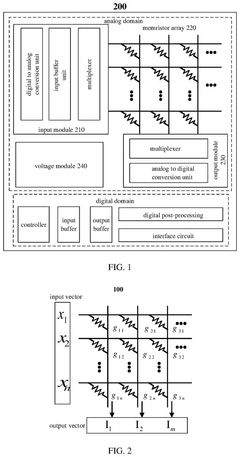

- A robustness processing method that determines the criticality of memristor devices within a computing apparatus based on model parameters and influence factors, optimizing only the critical devices through methods like averaging and re-refreshing strategies to enhance system reliability without requiring widespread hardware changes.

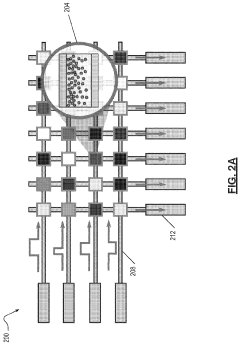

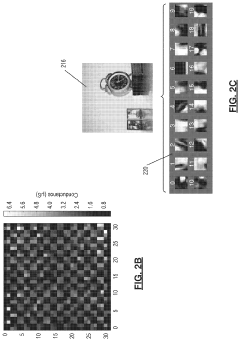

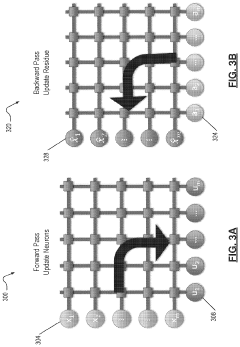

Techniques for computing dot products with memory devices

Patent (Active): US20200067512A1

Innovation

- A memristor crossbar network is used to perform sparse coding by implementing a locally competitive algorithm, where the network iteratively applies forward and backward passes to achieve lateral neuron inhibition without physical inhibitory synaptic connections, allowing for efficient data representation and pattern recognition.
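
The forward/backward formulation works because the classic locally competitive algorithm (LCA) update, u' = Phi^T x - u - (Phi^T Phi - I) a, can be rewritten as u' = Phi^T (x - Phi a) + a - u. The backward pass reconstructs Phi a, and the forward pass projects the residual back through Phi^T, so no explicit inhibitory weights are needed. The sketch below is a minimal software illustration of that idea in pure Python. It assumes a signed soft threshold and simple Euler integration, and it is not the patent's implementation. On hardware, the two matrix-vector products would be a transposed read and a forward read of the same crossbar.

```python
def soft_threshold(u, lam):
    """Signed soft threshold T_lambda(u) used to turn potentials into activations."""
    if u > lam:
        return u - lam
    if u < -lam:
        return u + lam
    return 0.0


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def lca(phi, x, lam=0.1, dt_over_tau=0.1, steps=300):
    """LCA with forward/backward passes instead of lateral inhibition.

    phi: dictionary as len(x) rows x n_atoms columns.
    """
    phi_t = transpose(phi)
    n_atoms = len(phi[0])
    u = [0.0] * n_atoms
    a = [0.0] * n_atoms
    for _ in range(steps):
        recon = matvec(phi, a)                               # backward pass: Phi a
        residual = [xi - ri for xi, ri in zip(x, recon)]
        drive = matvec(phi_t, residual)                      # forward pass: Phi^T r
        u = [ui + dt_over_tau * (di + ai - ui) for ui, di, ai in zip(u, drive, a)]
        a = [soft_threshold(ui, lam) for ui in u]
    return a


if __name__ == "__main__":
    # Overcomplete 2-D dictionary: two axis atoms plus the unit atom (0.6, 0.8).
    phi = [[1.0, 0.0, 0.6],
           [0.0, 1.0, 0.8]]
    x = [1.2, 1.6]  # equals 2 * (0.6, 0.8)
    print(lca(phi, x))
```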

Standardization Efforts for Memristor Reliability Testing

The standardization of reliability testing protocols for memristor devices represents a critical frontier in advancing memristor-based in-memory computing technologies toward commercial viability. Currently, several international organizations are spearheading efforts to establish uniform testing methodologies and reliability metrics specifically tailored for memristive systems.

The IEEE Working Group on Non-Volatile Memory Devices has been particularly active, developing the P1817 standard that addresses reliability characterization methods for emerging resistive memory technologies. This standard aims to establish consistent protocols for evaluating endurance, retention, and variability across different memristor technologies, enabling meaningful cross-platform comparisons.

JEDEC, traditionally focused on semiconductor memory standardization, has expanded its scope to include working groups dedicated to memristor reliability testing. Their JC-42.4 Committee has proposed standardized test conditions for environmental factors that significantly impact memristor performance, including temperature cycling, humidity exposure, and electromagnetic interference tolerance.

The International Electrotechnical Commission (IEC) has contributed through its Technical Committee 113, which focuses on nanotechnology standardization. Their efforts include developing standard terminology and measurement methodologies specifically for memristive systems used in computational applications, addressing the unique reliability challenges of in-memory computing architectures.

Industry consortia have also emerged as important drivers of standardization. The Memristor Research Alliance, comprising leading semiconductor manufacturers and research institutions, has published several white papers outlining recommended practices for reliability assessment in memristor-based computing systems. Their proposed testing framework incorporates both device-level and system-level reliability metrics.

Academia-industry partnerships have yielded significant contributions to standardization efforts. The Memristor Reliability Consortium, established in 2018, has developed an open-source reliability testing platform that implements standardized testing protocols, facilitating reproducible research and accelerating consensus on reliability benchmarks.

Despite these advances, significant challenges remain in standardization efforts. The diversity of memristor materials, architectures, and fabrication processes complicates the establishment of universal testing protocols. Additionally, the unique requirements of in-memory computing applications—where memristors serve dual storage and processing functions—necessitate specialized reliability metrics beyond those used for conventional memory technologies.

Looking forward, emerging standardization initiatives are increasingly focusing on application-specific reliability requirements, recognizing that memristor reliability specifications may vary significantly between edge computing, neuromorphic systems, and data center applications. This trend toward application-tailored standardization promises to accelerate the commercial adoption of memristor-based in-memory computing solutions across diverse market segments.

Energy Efficiency and Thermal Management Considerations

Energy efficiency is a critical consideration in memristor-based in-memory computing (IMC) systems, directly affecting both operational costs and device reliability. Memristors offer inherent energy advantages over conventional computing architectures, with potential savings of 10-100x from largely avoiding the energy-intensive data movement between memory and processing units. However, these benefits can only be fully realized through careful thermal management and energy optimization strategies.

The power density in memristor crossbar arrays presents significant thermal challenges that directly affect device reliability. When arrays operate at high frequencies or under dense computational workloads, localized heating can develop within the crossbar structure, potentially accelerating device degradation mechanisms such as ion migration and oxide breakdown. Reported measurements indicate that temperature increases of just 15-20°C can reduce memristor lifetime by 30-50%, highlighting the critical relationship between thermal management and long-term reliability.
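
A common way to reason about this kind of temperature sensitivity is the Arrhenius acceleration model used in semiconductor reliability work. The sketch below assumes that a single thermally activated wear mechanism dominates, which the text above does not state; the activation energies used are hypothetical placeholders, not measured values for any memristor material.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant, eV/K


def arrhenius_acceleration_factor(t_ref_c: float, t_op_c: float, ea_ev: float) -> float:
    """Degradation-rate ratio between t_op_c and t_ref_c under an Arrhenius model.

    ea_ev is an ASSUMED activation energy; real values depend on the
    switching material and the dominant wear mechanism.
    """
    t_ref_k = t_ref_c + 273.15
    t_op_k = t_op_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_ref_k - 1.0 / t_op_k))


def relative_lifetime(t_ref_c: float, t_op_c: float, ea_ev: float) -> float:
    """Lifetime at t_op_c expressed as a fraction of the lifetime at t_ref_c."""
    return 1.0 / arrhenius_acceleration_factor(t_ref_c, t_op_c, ea_ev)


if __name__ == "__main__":
    # Illustrative (hypothetical) activation energies, not measured values.
    for ea in (0.2, 0.3):
        frac = relative_lifetime(25.0, 43.0, ea)
        print(f"Ea = {ea} eV: lifetime after an 18 C rise is {frac:.0%} of baseline")
```

With these assumed values, an 18°C rise from 25°C leaves roughly 64% (Ea = 0.2 eV) and 51% (Ea = 0.3 eV) of the baseline lifetime; a larger activation energy makes lifetime more sensitive to the same temperature rise.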

Thermal gradients across memristor arrays further complicate reliability characterization, as devices at different physical locations may experience varying degradation rates. This non-uniform aging process introduces unpredictable computational errors that are difficult to model and mitigate. Research has demonstrated that implementing intelligent workload distribution algorithms can reduce thermal hotspots by up to 40%, significantly extending device lifetime while maintaining computational accuracy.
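
The specific workload distribution algorithms referenced above are not described here. As a hedged illustration of the general idea, the sketch below uses a generic greedy heat-balancing heuristic (largest task first, placed on the tile with the lowest accumulated heat) and compares it with a naive sequential fill. It assumes that per-tile accumulated heat is a reasonable proxy for hotspot temperature and ignores lateral heat coupling between tiles; the task heat values are hypothetical.

```python
import heapq
from typing import List


def thermal_aware_assign(task_heat: List[float], num_tiles: int) -> List[float]:
    """Greedy heat balancing: place each task (largest first) on the crossbar
    tile with the lowest accumulated heat. Returns the per-tile heat load."""
    heap = [(0.0, tile) for tile in range(num_tiles)]  # (accumulated heat, tile id)
    heapq.heapify(heap)
    loads = [0.0] * num_tiles
    for heat in sorted(task_heat, reverse=True):
        load, tile = heapq.heappop(heap)  # currently "coolest" tile
        loads[tile] = load + heat
        heapq.heappush(heap, (loads[tile], tile))
    return loads


def sequential_assign(task_heat: List[float], num_tiles: int) -> List[float]:
    """Baseline: fill tiles in contiguous blocks, in arrival order."""
    loads = [0.0] * num_tiles
    per_tile = -(-len(task_heat) // num_tiles)  # ceiling division
    for i, heat in enumerate(task_heat):
        loads[i // per_tile] += heat
    return loads


if __name__ == "__main__":
    tasks = [4.0, 4.0, 4.0, 4.0, 1.0, 1.0, 1.0, 1.0]  # hypothetical heat per task
    print("sequential peak:", max(sequential_assign(tasks, 4)))
    print("thermal-aware peak:", max(thermal_aware_assign(tasks, 4)))
```

For this toy workload, the sequential baseline concentrates 8.0 heat units on two tiles while the greedy assignment spreads the same total evenly at 5.0 per tile; the size of any real reduction depends on the workload and the array's thermal behavior.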

Dynamic voltage and frequency scaling (DVFS) techniques have emerged as effective approaches for balancing performance and reliability in memristor-based systems. By adaptively adjusting operational parameters based on workload requirements and thermal conditions, DVFS can reduce energy consumption by 25-35% while minimizing reliability-degrading thermal stress. Recent studies have shown that reliability-aware DVFS implementations can extend memristor array lifetime by up to 2.5x compared to fixed-parameter operation.
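
One way a reliability-aware DVFS policy could be structured is sketched below. It is a minimal illustration, not the implementations cited above, and it rests on several assumptions: a discrete set of hypothetical voltage/frequency operating points, dynamic power modeled as P = C_eff * V^2 * f, and a lumped steady-state thermal model T = T_ambient + R_th * P. The policy rejects points that would exceed a temperature limit, then picks the lowest-voltage point that still meets the required throughput.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class OperatingPoint:
    voltage_v: float
    freq_hz: float


def predicted_temp_c(op: OperatingPoint, ambient_c: float,
                     c_eff_f: float, r_th_c_per_w: float) -> float:
    """Steady-state estimate: T = T_ambient + R_th * P, with P = C_eff * V^2 * f."""
    power_w = c_eff_f * op.voltage_v ** 2 * op.freq_hz
    return ambient_c + r_th_c_per_w * power_w


def select_operating_point(points: Sequence[OperatingPoint], required_freq_hz: float,
                           ambient_c: float, t_limit_c: float,
                           c_eff_f: float, r_th_c_per_w: float) -> Optional[OperatingPoint]:
    # 1. Discard points whose predicted temperature exceeds the reliability limit.
    safe = [p for p in points
            if predicted_temp_c(p, ambient_c, c_eff_f, r_th_c_per_w) <= t_limit_c]
    if not safe:
        return None  # nothing is thermally safe: caller should throttle or pause work
    # 2. Among safe points that meet demand, pick the lowest voltage
    #    (dynamic energy per operation scales with C_eff * V^2).
    meeting = [p for p in safe if p.freq_hz >= required_freq_hz]
    if meeting:
        return min(meeting, key=lambda p: (p.voltage_v, p.freq_hz))
    # 3. Demand cannot be met safely: run at the fastest thermally safe point.
    return max(safe, key=lambda p: p.freq_hz)
```

For example, with hypothetical points of 0.6 V/100 MHz, 0.8 V/200 MHz, and 1.0 V/400 MHz, C_eff = 1 nF, R_th = 20°C/W, a 25°C ambient, and a 30°C limit, the 400 MHz point is predicted to reach 33°C and is excluded, so a 150 MHz demand is served at 0.8 V/200 MHz.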

The relationship between programming current and reliability presents another important energy-reliability tradeoff. While higher programming currents can improve switching speed and state stability, they also generate more heat and accelerate wear mechanisms. Experimental data suggest that each memristor technology has an optimal programming-current range, which typically offers a 3-5x reliability improvement over maximum-current operation while maintaining acceptable performance.
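
The tradeoff can be framed as a constrained selection: since wear grows with current, choose the lowest compliance current that still meets the speed and state-stability requirements. The sketch below is a toy model only; the power-law forms for endurance and switching time, their reference constants, and the minimum stable current are all hypothetical assumptions, not fitted to any device.

```python
from typing import Optional, Sequence


def endurance_cycles(i_ua: float, n_ref: float = 1e6,
                     i_ref_ua: float = 100.0, m: float = 2.0) -> float:
    """HYPOTHETICAL power-law wear model: endurance falls as current rises."""
    return n_ref * (i_ref_ua / i_ua) ** m


def switching_time_ns(i_ua: float, t_ref_ns: float = 50.0,
                      i_ref_ua: float = 100.0, n: float = 1.0) -> float:
    """HYPOTHETICAL model: higher programming current switches faster."""
    return t_ref_ns * (i_ref_ua / i_ua) ** n


def choose_programming_current(candidates_ua: Sequence[float], max_switch_ns: float,
                               min_stable_ua: float) -> Optional[float]:
    """Lowest candidate current meeting both the speed and stability constraints."""
    feasible = [i for i in candidates_ua
                if i >= min_stable_ua and switching_time_ns(i) <= max_switch_ns]
    return min(feasible) if feasible else None
```

Under these assumed parameters, candidates of 50-300 µA with a 40 ns switching budget and an 80 µA stability floor yield 150 µA, and the toy model predicts endurance_cycles(150) / endurance_cycles(300) = 4 relative to the maximum candidate current; the real ratio depends entirely on measured device behavior.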

Cooling solutions specifically designed for memristor arrays represent another critical research direction. The three-dimensional nature of stacked memristor architectures creates thermal dissipation challenges that differ from those of conventional computing systems. Advanced microfluidic cooling techniques have demonstrated the ability to maintain temperature variations below 5°C across memristor arrays, significantly improving reliability uniformity and extending overall system lifetime.
