How In-Memory Computing Enhances Autonomous Vehicle Perception Stacks

SEP 2, 2025 | 9 MIN READ

In-Memory Computing Evolution and Objectives

In-memory computing has evolved significantly over the past two decades, transforming from a niche technology into a critical component of high-performance computing systems. Initially developed to address the growing disparity between processor speeds and memory access times—known as the "memory wall"—in-memory computing has progressively expanded its capabilities and application domains. The early 2000s saw the emergence of basic in-memory database systems primarily focused on accelerating data retrieval operations. By the 2010s, the technology had matured to support complex analytical workloads and real-time processing, driven by advancements in memory technologies such as DRAM, SRAM, and emerging non-volatile memory solutions.

The evolution of in-memory computing has been characterized by continuous improvements in memory density, cost-effectiveness, and energy efficiency. Modern in-memory computing architectures have increasingly incorporated heterogeneous memory systems, specialized hardware accelerators, and sophisticated memory management techniques to optimize performance for specific workloads. This technological progression has been particularly relevant for data-intensive applications requiring minimal latency and high throughput.

In the context of autonomous vehicle perception stacks, in-memory computing aims to address several critical objectives. The primary goal is to minimize the latency between sensor data acquisition and decision-making processes, which is essential for real-time object detection, classification, and tracking in dynamic driving environments. By processing perception data directly in memory, the technology seeks to eliminate the bottlenecks associated with traditional compute architectures that rely on frequent data transfers between storage, memory, and processing units.

Another key objective is to enhance the energy efficiency of autonomous vehicle computing platforms. In-memory computing can significantly reduce power consumption by minimizing data movement, which typically accounts for a substantial portion of energy usage in conventional computing systems. This efficiency is particularly crucial for electric autonomous vehicles where power budget constraints are stringent.

Furthermore, in-memory computing aims to enable more sophisticated perception algorithms that would otherwise be computationally prohibitive. By providing massive parallelism and high memory bandwidth, these architectures can support complex neural network models and computer vision algorithms that form the foundation of advanced perception systems. The ultimate objective is to create a computing infrastructure capable of processing the enormous volumes of sensor data generated by autonomous vehicles—including LiDAR, radar, and camera inputs—with sufficient speed and accuracy to ensure safe navigation in complex environments.

Market Demand Analysis for Real-Time AV Perception

The autonomous vehicle (AV) market is experiencing unprecedented growth, with the global AV market projected to reach $556.67 billion by 2026, growing at a CAGR of 39.47% from 2019 to 2026. This explosive growth is driving significant demand for real-time perception systems that can process and analyze vast amounts of sensor data instantaneously to enable safe autonomous driving.

Real-time perception represents the cornerstone of autonomous vehicle functionality, with industry surveys indicating that over 85% of AV manufacturers consider perception stack performance as the primary bottleneck in achieving higher levels of autonomy. The market demand for enhanced perception capabilities stems from several critical requirements in the autonomous driving ecosystem.

Safety considerations remain paramount, with regulatory bodies worldwide establishing increasingly stringent standards for AV perception systems. The European New Car Assessment Programme (Euro NCAP) has introduced specific testing protocols for AV perception systems, requiring detection accuracy above 99.9% in various environmental conditions. This regulatory pressure is compelling manufacturers to seek advanced computational solutions that can deliver near-perfect perception reliability.

Consumer expectations are equally driving market demand, with recent surveys revealing that 78% of potential AV users rank safety perception as their top concern when considering autonomous transportation options. This consumer sentiment translates directly into market requirements for perception systems that can demonstrate consistent performance across diverse operational scenarios.

The technical requirements for real-time perception are becoming increasingly demanding. Modern autonomous vehicles generate between 1.4 TB and 19 TB of data per hour from sensors including LiDAR, radar, cameras, and ultrasonic units. Processing this volume requires computational solutions that deliver sub-10 ms latency for critical perception tasks while remaining energy efficient.
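To put these volumes in perspective, the hourly figures can be converted into sustained data rates. The sketch below is a back-of-the-envelope calculation that assumes decimal terabytes (10^12 bytes) and an evenly spread data stream, neither of which the figures above specify; the function names are illustrative.

```python
# Convert hourly sensor data volumes into sustained rates.
TB = 10**12  # assumption: decimal terabytes, not TiB


def bytes_per_second(tb_per_hour: float) -> float:
    """Average byte rate for a given hourly volume."""
    return tb_per_hour * TB / 3600.0


def bytes_per_window(tb_per_hour: float, window_ms: float) -> float:
    """Average number of bytes arriving within one latency window."""
    return bytes_per_second(tb_per_hour) * window_ms / 1000.0


for volume_tb in (1.4, 19.0):
    rate = bytes_per_second(volume_tb)
    window = bytes_per_window(volume_tb, 10.0)
    print(f"{volume_tb} TB/h = {rate / 1e9:.2f} GB/s, "
          f"{window / 1e6:.1f} MB per 10 ms window")
```

Under those assumptions the range works out to roughly 0.39 GB/s to 5.28 GB/s, or about 3.9 MB to 52.8 MB arriving within each 10 ms window.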

Fleet operators and ride-sharing companies represent another significant market segment driving demand for enhanced perception systems. Companies like Waymo, Cruise, and Uber have collectively invested over $10 billion in developing and deploying perception technologies that enable their autonomous fleets to operate safely in complex urban environments.

The insurance industry is also emerging as a key stakeholder in the AV perception market. Several major insurers have begun offering reduced premiums for vehicles equipped with advanced perception systems, creating financial incentives that further drive market demand for these technologies.

As urban infrastructure evolves to accommodate autonomous vehicles, municipalities are increasingly requiring standardized perception capabilities to ensure interoperability with smart city systems. This regulatory and infrastructure alignment is creating additional market pull for standardized, high-performance perception solutions across the autonomous vehicle industry.

Current Challenges in AV Perception Computing

Autonomous vehicle (AV) perception systems face significant computational challenges that impede their performance and reliability in real-world scenarios. The primary challenge lies in processing massive amounts of sensor data in real-time. Modern AVs typically incorporate multiple high-resolution cameras, LiDAR sensors, radar systems, and ultrasonic sensors, collectively generating terabytes of data per hour that must be processed with minimal latency.

Latency requirements for AV perception are exceptionally demanding, with safety-critical decisions requiring processing times of less than 100 milliseconds. Traditional computing architectures struggle to meet these requirements while maintaining accuracy, particularly when executing complex deep learning models for object detection, classification, and tracking. The computational bottleneck often occurs in the data transfer between memory and processing units, creating a significant challenge for system designers.
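A latency budget is easier to judge as distance traveled before the vehicle can react. The following sketch assumes a highway speed of 30 m/s (108 km/h), a value chosen only for illustration, and ignores actuation delay.

```python
def distance_during_latency_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance covered while a perception result is still being computed."""
    return speed_m_per_s * latency_ms / 1000.0


HIGHWAY_SPEED_M_PER_S = 30.0  # assumption: 108 km/h

for latency_ms in (100.0, 10.0):
    distance = distance_during_latency_m(HIGHWAY_SPEED_M_PER_S, latency_ms)
    print(f"{latency_ms:.0f} ms at 30 m/s -> {distance:.2f} m traveled")
```

At the assumed speed, a 100 ms budget corresponds to about 3 m of travel and a 10 ms budget to about 0.3 m.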

Power consumption presents another critical challenge. AVs have limited onboard power resources, yet must run computationally intensive perception algorithms continuously. High-performance computing solutions that consume excessive power generate heat that requires additional cooling systems, adding weight and complexity to vehicle designs. This creates a difficult balance between computational capability and energy efficiency.

Environmental adaptability further complicates perception computing. AV systems must maintain reliable performance across diverse conditions including varying lighting, weather phenomena, and unexpected scenarios. This necessitates redundant processing pathways and sophisticated fusion algorithms that further increase computational demands. Current edge computing solutions often lack the flexibility to dynamically allocate resources based on changing environmental conditions.

Data bandwidth limitations between sensors and processing units create bottlenecks that impact system performance. The high-speed, high-volume data streams from multiple sensors must be efficiently managed, processed, and fused to create a coherent environmental model. Current bus architectures and data transfer protocols struggle to handle these requirements without introducing unacceptable latencies.

Security concerns add another layer of complexity to AV perception computing. Systems must be hardened against potential attacks while maintaining performance, requiring additional computational overhead for encryption, authentication, and monitoring processes. These security measures compete for the same limited computational resources needed for perception tasks.

Finally, current perception stacks face significant challenges in balancing model complexity with inference speed. More sophisticated perception models generally deliver higher accuracy but require greater computational resources and introduce higher latency. This fundamental trade-off remains unresolved in many current AV computing architectures, limiting the potential capabilities of autonomous driving systems.

Current In-Memory Solutions for AV Perception

01 In-Memory Computing Architectures for Perception Systems

In-memory computing architectures designed specifically for perception systems enable efficient processing of sensory data directly within memory units. These architectures reduce data movement between memory and processing units, significantly improving energy efficiency and reducing latency for perception tasks. By integrating computation and storage functions, these systems can perform complex perception operations like object recognition, scene understanding, and spatial awareness with minimal power consumption, making them ideal for edge devices and autonomous systems.

- In-Memory Computing Architectures: In-memory computing architectures enable data processing directly in memory, eliminating the need for data transfer between memory and processing units. These architectures significantly reduce latency and increase throughput for perception tasks by keeping data in memory during computation. The approach allows for faster access to data structures and more efficient execution of complex algorithms used in perception stacks.

- Neural Network Acceleration for Perception: Specialized in-memory computing solutions designed to accelerate neural network operations for perception tasks. These implementations optimize memory access patterns and computational resources for convolutional neural networks, recurrent neural networks, and other deep learning models used in computer vision and sensor data processing. The acceleration techniques enable real-time perception capabilities with reduced power consumption.

- Memory-Centric Sensor Data Processing: Approaches for processing sensor data directly in memory systems to create efficient perception stacks. These methods involve organizing sensor data streams in memory structures optimized for spatial and temporal locality, enabling faster feature extraction and pattern recognition. By minimizing data movement between memory and processing units, these systems achieve lower latency for time-critical perception applications.

- Distributed In-Memory Perception Systems: Distributed architectures that leverage multiple in-memory computing nodes to process perception data in parallel. These systems partition perception tasks across distributed memory resources while maintaining data coherence. The approach enables scaling of perception capabilities for complex environments requiring integration of multiple sensor inputs and processing stages.

- Memory Management for Real-time Perception: Specialized memory management techniques designed for real-time perception applications. These include dynamic memory allocation strategies, garbage collection mechanisms, and cache optimization approaches tailored for perception workloads. The techniques ensure efficient memory utilization while meeting strict timing requirements of perception stacks in applications such as autonomous vehicles and robotics.
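One memory-management strategy for time-critical paths is to avoid allocation on the hot path altogether by reusing preallocated buffers. The sketch below shows a fixed-capacity ring of frame buffers; it is one possible approach rather than the method of any system named here, and `FrameRing` and its methods are hypothetical names.

```python
from typing import Optional, Tuple


class FrameRing:
    """Fixed-capacity ring of preallocated frame buffers (hypothetical helper)."""

    def __init__(self, capacity: int, frame_bytes: int) -> None:
        # All storage is allocated once, up front.
        self._slots = [bytearray(frame_bytes) for _ in range(capacity)]
        self._stamps = [None] * capacity
        self._next = 0

    def write(self, timestamp_ms: int, payload: bytes) -> None:
        """Copy a frame into the oldest slot, overwriting its previous contents."""
        slot = self._slots[self._next]
        if len(payload) > len(slot):
            raise ValueError("payload larger than preallocated frame size")
        slot[:len(payload)] = payload  # in-place copy, no new buffer is created
        self._stamps[self._next] = timestamp_ms
        self._next = (self._next + 1) % len(self._slots)

    def latest(self) -> Tuple[Optional[int], bytearray]:
        """Return (timestamp, buffer) of the newest frame; timestamp is None if empty."""
        index = (self._next - 1) % len(self._slots)
        return self._stamps[index], self._slots[index]


ring = FrameRing(capacity=2, frame_bytes=4)
for stamp, data in ((1, b"aaaa"), (2, b"bbbb"), (3, b"cccc")):
    ring.write(stamp, data)
print(ring.latest())
```

Because the ring overwrites the oldest slot, memory use stays constant regardless of how long the sensor stream runs, at the cost of dropping frames that consumers have not read in time.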

02 Neural Network Acceleration Using In-Memory Processing

Specialized in-memory computing solutions for accelerating neural network operations in perception stacks leverage memory arrays to perform computations directly where data is stored. This approach eliminates the von Neumann bottleneck by enabling parallel matrix operations critical for convolutional neural networks and other deep learning models used in perception tasks. These accelerators can perform multiply-accumulate operations within memory, dramatically improving throughput for inference tasks while reducing power consumption compared to traditional GPU or CPU implementations.

03 Memory Management for Real-Time Perception Systems

Advanced memory management techniques for perception stacks optimize how sensory data is stored, accessed, and processed in real-time applications. These approaches include specialized caching strategies, dynamic memory allocation, and hierarchical memory structures that prioritize time-critical perception data. By implementing efficient memory management policies tailored to perception workloads, these systems can maintain high throughput while processing continuous streams of sensor data from cameras, LiDAR, radar, and other perception inputs.

04 Distributed In-Memory Computing for Multi-Sensor Fusion

Distributed in-memory computing architectures enable efficient fusion of data from multiple sensors in perception stacks. These systems distribute processing across networked memory nodes, allowing parallel processing of inputs from different sensor modalities while maintaining temporal synchronization. By performing sensor fusion operations directly within distributed memory structures, these architectures reduce communication overhead and enable real-time integration of visual, spatial, and contextual information for comprehensive scene understanding in autonomous vehicles and robotics applications.

05 Energy-Efficient Memory Designs for Edge Perception

Novel memory designs optimized for energy efficiency in edge-based perception systems enable deployment of sophisticated perception capabilities in power-constrained environments. These designs include non-volatile memory technologies, compute-in-memory arrays, and specialized memory hierarchies that minimize energy consumption while maintaining performance requirements for perception tasks. By reducing the energy footprint of memory operations, these technologies extend battery life in mobile perception systems and enable more complex perception algorithms to run on edge devices with limited power budgets.
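The multiply-accumulate operations that compute-in-memory arrays perform can be illustrated with an idealized crossbar model: weights are stored as cell conductances, inputs are applied as row voltages, and each column sums the resulting currents. The sketch below is a software simulation under simplifying assumptions (signed conductances, no noise or wire resistance, a hypothetical 16-level cell); it does not describe any specific product.

```python
from typing import List

Matrix = List[List[float]]


def quantize(weights: Matrix, levels: int = 16, w_max: float = 1.0) -> Matrix:
    """Snap each weight to the nearest of `levels` evenly spaced cell values."""
    step = 2.0 * w_max / (levels - 1)
    quantized = []
    for row in weights:
        clipped = [max(-w_max, min(w_max, w)) for w in row]
        quantized.append([round((w + w_max) / step) * step - w_max for w in clipped])
    return quantized


def crossbar_mac(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Column current = sum over rows of conductance * row voltage.

    Signed conductances are an idealization; physical arrays commonly use
    pairs of cells to represent positive and negative weights.
    """
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage is required per row")
    return [sum(conductances[r][c] * voltages[r] for r in range(n_rows))
            for c in range(n_cols)]


weights = [[0.9, -0.8], [0.1, 0.7]]
cells = quantize(weights, levels=3)   # coarse 3-level cells: -1, 0, +1
print(crossbar_mac(cells, [2.0, 3.0]))
```

All columns are evaluated from the same applied voltages, which is where the parallelism of the approach comes from; the quantization step shows the accuracy cost of storing weights in cells with a limited number of levels.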

Key Industry Players and Competitive Landscape

In-memory computing is revolutionizing autonomous vehicle perception stacks at a critical industry inflection point, with the market transitioning from research to early commercial deployment. The global autonomous vehicle technology market is expanding rapidly, expected to reach significant scale by 2030. Technical maturity varies across players: established automotive giants like Volkswagen, Toyota, and Bosch are integrating in-memory solutions into existing platforms; tech-focused companies including IBM, Baidu, and Aurora are developing specialized architectures; while emerging players like DeepRoute.ai, Momenta, and TuSimple are creating innovative implementations. Chinese companies and traditional automakers are making substantial investments, recognizing in-memory computing's potential to solve latency challenges in real-time perception processing, a critical enabler for higher levels of autonomy.

Aurora Operations, Inc.

Technical Solution: Aurora has developed a proprietary in-memory computing architecture called "Aurora Driver" that enhances autonomous vehicle perception stacks. Their system utilizes high-speed DRAM and specialized memory controllers to process sensor data directly in memory, significantly reducing latency in perception tasks. The architecture employs a distributed memory approach where sensor data from cameras, LiDAR, and radar is processed in parallel across multiple in-memory computing units. This enables real-time fusion of multimodal sensor data without the traditional bottlenecks of CPU-GPU data transfers. Aurora's implementation includes custom memory management algorithms that prioritize critical perception tasks like object detection and tracking, allocating memory resources dynamically based on driving scenarios. Their system executes the complete perception stack in 10 ms or less, which is essential for highway-speed autonomous driving decisions.

Strengths: Extremely low latency processing enables faster reaction times in critical driving scenarios; efficient memory utilization reduces power consumption compared to traditional GPU-based solutions. Weaknesses: Requires specialized hardware components that increase system costs; higher complexity in system architecture makes debugging and maintenance more challenging.

Robert Bosch GmbH

Technical Solution: Bosch has implemented an advanced in-memory computing solution for autonomous vehicle perception that integrates their automotive-grade hardware with specialized memory architectures. Their system utilizes resistive RAM (ReRAM) technology to perform vector-matrix multiplications directly within memory arrays, eliminating the need to shuttle data between separate processing and storage units. This approach enables Bosch's perception stack to process sensor data with significantly reduced latency and power consumption. The architecture incorporates a hierarchical memory system where time-critical perception tasks (object detection, classification) are processed in fast in-memory units, while less time-sensitive functions utilize conventional computing resources. Bosch's implementation includes fault-tolerance mechanisms that ensure reliable operation even when memory cells degrade over time - a critical feature for automotive applications requiring long-term reliability. Their benchmarks show up to 70% reduction in energy consumption and 60% improvement in processing speed for perception tasks compared to conventional computing architectures.

Strengths: Automotive-grade reliability with extensive safety certifications; highly energy-efficient design suitable for production vehicles with limited power budgets. Weaknesses: Conservative implementation approach may sacrifice some performance gains to ensure reliability; requires significant redesign of existing perception algorithms to fully leverage in-memory computing benefits.

Core Patents and Research in IMC for AVs

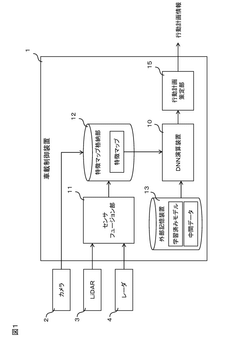

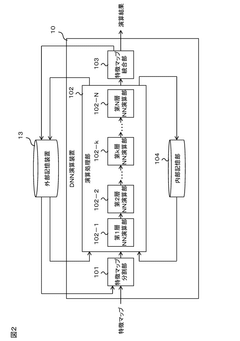

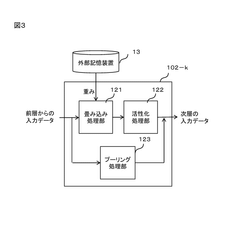

Information processing device and onboard control device

Patent WO2021193134A1

Innovation

- The system divides feature maps into regions, allowing each region to be processed independently by multiple layers of neural network units, with intermediate results stored in internal memory to reduce data size and transfer between layers, and integrates results from these regions for final output, optimizing memory usage and processing speed without compromising accuracy.
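The region-wise scheme described in this patent summary can be illustrated with a small sketch. It assumes purely pointwise layers (per-element affine plus ReLU) so that regions need no overlapping border data; real convolution layers would need such overlap, and the patent's actual mechanism is not reproduced here. All function names are hypothetical.

```python
from typing import Callable, List

Row = List[float]
Layer = Callable[[Row], Row]


def pointwise_layer(scale: float, bias: float) -> Layer:
    """Hypothetical layer: per-element affine transform followed by ReLU."""
    def apply(values: Row) -> Row:
        return [max(0.0, scale * v + bias) for v in values]
    return apply


def run_whole_map(feature_map: List[Row], layers: List[Layer]) -> List[Row]:
    """Baseline: every layer produces a full-size intermediate map."""
    current = feature_map
    for layer in layers:
        current = [layer(row) for row in current]
    return current


def run_by_region(feature_map: List[Row], layers: List[Layer],
                  rows_per_region: int) -> List[Row]:
    """Push one region through all layers before starting the next region."""
    output: List[Row] = []
    for start in range(0, len(feature_map), rows_per_region):
        local = feature_map[start:start + rows_per_region]
        for layer in layers:
            local = [layer(row) for row in local]  # intermediate stays region-sized
        output.extend(local)  # integrate this region into the final result
    return output


fmap = [[1.0, -2.0], [3.0, 4.0], [-5.0, 6.0]]
layers = [pointwise_layer(2.0, -1.0), pointwise_layer(0.5, 0.0)]
print(run_by_region(fmap, layers, rows_per_region=2) == run_whole_map(fmap, layers))
```

The point of the comparison is that both orderings yield the same output, while the region-wise version only ever holds a region-sized intermediate buffer, which is what allows it to fit in a small internal memory.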

Patent

Innovation

- Integration of in-memory computing architecture with autonomous vehicle perception stacks to reduce latency in real-time data processing and decision making.

- Implementation of parallel processing capabilities within memory units to handle multiple sensor data streams simultaneously, improving object detection and classification accuracy.

- Reduction of power consumption through optimized memory-processor communication pathways, extending the operational range of electric autonomous vehicles.

Safety and Reliability Considerations

Safety and reliability are paramount concerns in autonomous vehicle systems, particularly when implementing in-memory computing for perception stacks. The real-time processing capabilities offered by in-memory computing must be balanced with robust safety mechanisms to ensure consistent performance under all operational conditions. Failure in perception systems can lead to catastrophic consequences, making redundancy and fault tolerance essential design considerations.

In-memory computing architectures introduce unique safety challenges due to their volatile nature. Memory corruption, bit flips, and other transient errors can significantly impact perception accuracy. To mitigate these risks, error correction codes (ECC) and memory protection mechanisms must be implemented and tuned for the high-throughput requirements of perception algorithms. Triple modular redundancy, in which a critical computation is performed three times and the results are compared by majority vote, has shown promising results in maintaining system integrity.
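Triple modular redundancy can be sketched in a few lines. The example below votes bit by bit across three integer results, so a single corrupted replica is outvoted; the replica functions and the injected fault are hypothetical stand-ins for real perception computations.

```python
from typing import Callable, Sequence


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote: each output bit takes the value held by at least two inputs."""
    return (a & b) | (a & c) | (b & c)


def run_tmr(replicas: Sequence[Callable[[int], int]], x: int) -> int:
    """Run the same computation on three replicas and vote on the results."""
    if len(replicas) != 3:
        raise ValueError("triple modular redundancy needs exactly three replicas")
    a, b, c = (replica(x) for replica in replicas)
    return majority_vote(a, b, c)


def healthy(x: int) -> int:
    return x * 3


def faulty(x: int) -> int:
    return (x * 3) ^ 0b100  # simulated single-bit upset in the result


print(run_tmr([healthy, faulty, healthy], 7))  # the faulty replica is outvoted
```

With one faulty replica the voted result matches the healthy value of 21. The scheme masks a fault in any single replica but not simultaneous faults in the same bit of two replicas, and it triples the compute cost of the protected operation.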

Thermal management represents another critical reliability concern. The intensive computational workloads of perception stacks generate substantial heat, which can degrade memory performance and reliability over time. Advanced cooling solutions and thermal-aware task scheduling algorithms help maintain optimal operating temperatures and prevent thermal throttling that could compromise real-time perception capabilities.

Power fluctuations in automotive environments pose additional challenges for in-memory computing systems. Voltage drops during high-demand scenarios can lead to data corruption or system instability. Implementing power-aware computing techniques and robust power delivery networks ensures consistent operation across varying vehicle conditions. Some leading autonomous vehicle manufacturers have adopted isolated power subsystems with multiple redundancy layers specifically for perception computing components.

Functional safety standards, particularly ISO 26262, provide essential frameworks for evaluating in-memory computing implementations in autonomous vehicles. These standards require systematic hazard analysis and risk assessment (HARA) to identify potential failure modes and their consequences. Memory-specific Automotive Safety Integrity Level (ASIL) decomposition strategies have emerged to address the unique characteristics of in-memory perception systems.

Verification and validation methodologies for in-memory perception stacks require specialized approaches beyond traditional software testing. Hardware-in-the-loop simulation environments that can inject memory faults and simulate environmental extremes help validate system behavior under adverse conditions. Progressive companies in this space have developed comprehensive test suites that specifically target memory-related edge cases and failure scenarios to ensure robust performance in all operational contexts.
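Memory fault injection of the kind described above can be prototyped in software before hardware-in-the-loop testing. The sketch below assumes 8-bit signed weights held in a byte buffer and a single-bit-flip fault model, and measures how far each possible flip moves a dot-product result; the helper names are hypothetical.

```python
from typing import Dict, List, Tuple


def to_signed(byte: int) -> int:
    """Interpret an unsigned byte as a two's-complement int8."""
    return byte - 256 if byte >= 128 else byte


def dot(memory: bytearray, activations: List[int]) -> int:
    """Dot product of int8 weights stored in memory with integer activations."""
    return sum(to_signed(w) * a for w, a in zip(memory, activations))


def flip_bit(memory: bytearray, byte_index: int, bit: int) -> None:
    """Inject a single-bit upset into the weight buffer."""
    memory[byte_index] ^= 1 << bit


def fault_campaign(weights: List[int],
                   activations: List[int]) -> Tuple[int, Dict[Tuple[int, int], int]]:
    """Flip every bit of every weight once and record the output deviation."""
    golden = dot(bytearray(w & 0xFF for w in weights), activations)
    deviations = {}
    for index in range(len(weights)):
        for bit in range(8):
            memory = bytearray(w & 0xFF for w in weights)  # fresh copy per fault
            flip_bit(memory, index, bit)
            deviations[(index, bit)] = dot(memory, activations) - golden
    return golden, deviations


golden, deviations = fault_campaign([1, -2, 3], [4, 5, 6])
worst = max(deviations, key=lambda key: abs(deviations[key]))
print(golden, worst, deviations[worst])
```

Exhaustive single-bit campaigns like this one show which bits matter most (here, the sign bits of weights paired with large activations), which can guide where to spend ECC or redundancy.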

In-memory computing architectures introduce unique safety challenges due to their volatile nature. Memory corruption, bit flips, and other transient errors can significantly impact perception accuracy. To mitigate these risks, advanced error correction codes (ECC) and memory protection mechanisms must be implemented specifically optimized for the high-throughput requirements of perception algorithms. Triple modular redundancy approaches, where critical computations are performed three times and results compared, have shown promising results in maintaining system integrity.

Thermal management represents another critical reliability concern. The intensive computational workloads of perception stacks generate substantial heat, which can degrade memory performance and reliability over time. Advanced cooling solutions and thermal-aware task scheduling algorithms help maintain optimal operating temperatures and prevent thermal throttling that could compromise real-time perception capabilities.

Power fluctuations in automotive environments pose additional challenges for in-memory computing systems. Voltage drops during high-demand scenarios can lead to data corruption or system instability. Implementing power-aware computing techniques and robust power delivery networks ensures consistent operation across varying vehicle conditions. Some leading autonomous vehicle manufacturers have adopted isolated power subsystems with multiple redundancy layers specifically for perception computing components.

Functional safety standards, particularly ISO 26262, provide essential frameworks for evaluating in-memory computing implementations in autonomous vehicles. These standards require systematic hazard analysis and risk assessment (HARA) to identify potential failure modes and their consequences. Memory-specific Automotive Safety Integrity Level (ASIL) decomposition strategies have emerged to address the unique characteristics of in-memory perception systems.

Energy Efficiency and Hardware Integration

Energy efficiency represents a critical challenge in the implementation of in-memory computing for autonomous vehicle perception systems. The computational demands of real-time perception tasks, including object detection, classification, and tracking, create significant power consumption concerns. Current autonomous vehicles equipped with traditional computing architectures typically consume between 1 and 3 kW of power for perception processing alone, which substantially impacts vehicle range and battery life. In-memory computing architectures demonstrate promising efficiency improvements, with recent implementations showing 5-10x reductions in energy consumption compared to conventional GPU-based solutions.

The integration of in-memory computing hardware with existing vehicle electrical systems presents both challenges and opportunities. Thermal management becomes particularly crucial as perception systems must operate reliably across extreme temperature conditions (-40°C to 85°C) encountered in automotive environments. Novel cooling solutions specifically designed for in-memory architectures are emerging, including phase-change materials and microfluidic cooling channels that maintain optimal operating temperatures while minimizing additional energy expenditure.

Power delivery networks for in-memory computing systems require careful design considerations to handle the unique current profiles of these architectures. Unlike traditional computing systems with relatively consistent power draws, in-memory systems often exhibit more variable power consumption patterns based on perception workloads. Adaptive power management systems that can dynamically allocate energy resources based on driving conditions and perception requirements are being developed to optimize efficiency.

Hardware integration challenges extend to physical form factors and placement within the vehicle. In-memory computing modules must conform to automotive-grade specifications for vibration resistance, electromagnetic compatibility, and long-term reliability. Edge deployment architectures that distribute in-memory computing elements throughout the vehicle's sensor network show promise for reducing data movement costs, which can account for up to 60% of energy consumption in traditional centralized computing approaches.
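To see what the 60% figure implies, the following sketch applies an Amdahl-style estimate: if only the data-movement share of energy is reduced, the remaining share bounds the overall saving. The function name and the 10x reduction used in the example are illustrative assumptions, not measurements.

```python
def overall_energy_saving(movement_share: float, movement_reduction: float) -> float:
    """Factor by which total energy drops when only data-movement energy is reduced.

    movement_share: fraction of total energy spent moving data (0 to 1).
    movement_reduction: factor by which data-movement energy is cut (greater than 0).
    """
    if not 0.0 <= movement_share <= 1.0:
        raise ValueError("movement_share must be between 0 and 1")
    if movement_reduction <= 0:
        raise ValueError("movement_reduction must be positive")
    remaining = (1.0 - movement_share) + movement_share / movement_reduction
    return 1.0 / remaining


# With a 60% data-movement share, an assumed 10x cut in movement energy
# gives roughly a 2.2x overall saving.
print(round(overall_energy_saving(0.6, 10.0), 2))

# Even if data movement were eliminated entirely, the saving is capped at 1 / (1 - 0.6) = 2.5x.
print(round(overall_energy_saving(0.6, float("inf")), 2))
```

The cap shows why larger overall gains depend on also lowering the energy of the computation itself, not only on shortening data paths.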

The semiconductor technology underlying in-memory computing also impacts energy efficiency. While traditional CMOS-based implementations offer compatibility with existing manufacturing processes, emerging memory technologies such as resistive RAM (ReRAM) and phase-change memory (PCM) demonstrate superior energy characteristics for in-memory computing operations. These technologies can achieve computational efficiencies of 10-100 TOPS/W (tera-operations per second per watt), representing an order-of-magnitude improvement over conventional architectures when applied to perception tasks.
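The TOPS/W figures can be turned into more tangible quantities with a short calculation. The sketch below assumes a hypothetical perception workload of 200 TOPS, chosen only for illustration; the conversions themselves follow from the unit definition, since 1 TOPS/W equals 10^12 operations per joule, or 1 picojoule per operation.

```python
def power_watts(throughput_tops: float, efficiency_tops_per_watt: float) -> float:
    """Power needed to sustain a given throughput at a given efficiency."""
    return throughput_tops / efficiency_tops_per_watt


def picojoules_per_op(efficiency_tops_per_watt: float) -> float:
    """Energy per operation in picojoules (1 TOPS/W corresponds to 1 pJ per operation)."""
    return 1.0 / efficiency_tops_per_watt


workload_tops = 200.0  # hypothetical sustained perception workload

for efficiency in (10.0, 100.0):
    watts = power_watts(workload_tops, efficiency)
    energy = picojoules_per_op(efficiency)
    print(f"{efficiency:.0f} TOPS/W: {watts:.0f} W, {energy:.2f} pJ per operation")
```

These are compute-only figures at peak efficiency; sensor interfaces, data conversion, and host processing add to the total power budget.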
