How Device Variability Affects Accuracy In In-Memory Computing Operations

SEP 2, 2025 · 9 MIN READ

In-Memory Computing Evolution and Objectives

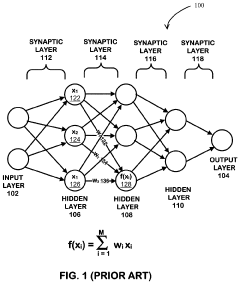

In-memory computing (IMC) represents a paradigm shift in computer architecture that has evolved significantly over the past decades. Initially conceptualized in the 1970s as content-addressable memory, this technology has transformed into sophisticated computational frameworks that integrate processing capabilities directly within memory structures. The fundamental objective of IMC is to overcome the von Neumann bottleneck—the performance limitation caused by the physical separation between processing units and memory storage.

The evolution of IMC can be traced through several key technological milestones. Early implementations focused primarily on simple logic operations within memory arrays. By the early 2000s, advancements in semiconductor technology enabled more complex computational capabilities within memory structures. The past decade has witnessed exponential growth in IMC research and development, driven by the increasing demands of data-intensive applications such as artificial intelligence, machine learning, and big data analytics.

Current IMC architectures span various memory technologies, including SRAM, DRAM, and emerging non-volatile memories such as ReRAM, PCM, and MRAM. Each technology presents unique characteristics that influence computational accuracy and efficiency. The primary technical objective of modern IMC systems is to maximize computational precision while minimizing energy consumption, a balance that becomes increasingly difficult to strike because of inherent device variability.

Device variability in IMC operations manifests through manufacturing process variations, temporal instabilities, and environmental sensitivities. These variations directly impact the accuracy of computational results, particularly in analog IMC systems where precise resistance or capacitance values are critical for mathematical operations. As IMC technologies advance toward higher integration densities and more complex operations, managing this variability becomes a central technical challenge.

The strategic objectives of IMC development include achieving computational efficiency that significantly exceeds conventional architectures, particularly for specific workloads like neural network inference and matrix operations. Industry benchmarks suggest that ideal IMC implementations could potentially deliver 10-100x improvements in energy efficiency and computational density compared to traditional computing approaches.

Looking forward, the technical roadmap for IMC focuses on developing robust algorithms and circuit designs that can compensate for device variability. This includes adaptive calibration techniques, error-correction mechanisms, and novel computational approaches that maintain accuracy despite underlying hardware inconsistencies. The ultimate goal is to create IMC systems that deliver reliable, high-precision results while maintaining the fundamental energy and performance advantages that make this architecture compelling for next-generation computing applications.

Market Analysis for In-Memory Computing Solutions

The global market for in-memory computing solutions is experiencing robust growth, driven by increasing demands for real-time data processing and analytics. Current market valuations place the in-memory computing sector at approximately $15 billion, with projections indicating a compound annual growth rate of 18-20% over the next five years. This acceleration is primarily fueled by the expanding adoption of artificial intelligence, machine learning applications, and the growing need for edge computing capabilities.

Key vertical markets demonstrating significant demand include financial services, where high-frequency trading and fraud detection require instantaneous processing; healthcare, with its increasing reliance on real-time patient monitoring and diagnostic systems; and telecommunications, where network optimization demands continuous data analysis. Manufacturing and retail sectors are also rapidly adopting in-memory computing solutions to enhance operational efficiency and customer experience through real-time inventory management and personalized marketing.

The market landscape reveals a distinct segmentation between hardware-focused and software-oriented solutions. Hardware solutions, including specialized memory architectures and neuromorphic computing chips, represent approximately 40% of the market. Software solutions, comprising in-memory databases, data grids, and analytics platforms, constitute the remaining 60%. This distribution reflects the dual approach to addressing computational bottlenecks in modern data processing systems.

Regional analysis indicates North America currently leads with 42% market share, followed by Europe at 28% and Asia-Pacific at 24%. However, the Asia-Pacific region is demonstrating the fastest growth rate at 22% annually, driven by substantial investments in technological infrastructure across China, South Korea, and Japan.

Customer demand patterns reveal increasing concerns regarding accuracy and reliability in in-memory computing operations. A recent industry survey indicated that 78% of enterprise customers consider computational accuracy as "critical" or "very important" when evaluating in-memory computing solutions. This concern directly relates to device variability issues, which can significantly impact computational precision in analog in-memory computing architectures.

Market research further indicates that solutions addressing device variability challenges could command premium pricing, with customers willing to pay 15-25% more for systems demonstrating superior accuracy and reliability metrics. This represents a significant market opportunity for technologies that can effectively mitigate variability-induced errors while maintaining the performance advantages of in-memory computing architectures.

Device Variability Challenges in IMC Technologies

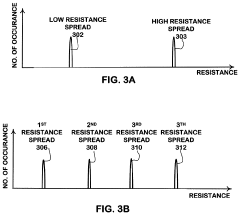

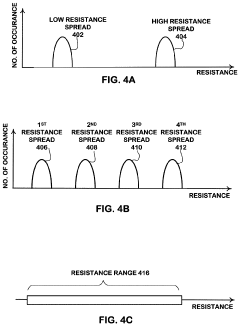

In-Memory Computing (IMC) technologies face significant challenges due to inherent device variability, which directly impacts computational accuracy and reliability. These variations manifest across multiple dimensions, including manufacturing process variations, operational temperature fluctuations, and aging effects that evolve over a device's lifetime.

Process variations during fabrication represent a primary source of variability, resulting in non-uniform device characteristics even within the same manufacturing batch. These variations affect critical parameters such as threshold voltage, resistance states in memristive devices, and capacitance values in capacitive computing elements. For instance, in resistive RAM (RRAM) based IMC systems, variations in filament formation can lead to inconsistent resistance levels, directly affecting the precision of matrix multiplication operations fundamental to neural network computations.
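To make the link between resistance spread and matrix multiplication accuracy concrete, the following is a minimal sketch rather than a model of any particular RRAM device. It assumes that device-to-device variation can be approximated as an independent multiplicative Gaussian error on each stored weight; the function names, array sizes, and `sigma` values are illustrative assumptions, not figures from this report.

```python
import random

def mvm(matrix, vector):
    """Ideal matrix-vector product (each row is one summed output line)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def perturb(matrix, sigma, rng):
    """Assumed model: multiplicative Gaussian variation on every stored weight."""
    return [[w * (1.0 + rng.gauss(0.0, sigma)) for w in row] for row in matrix]

def relative_error(ideal, actual):
    """Relative L2 error between the ideal and the perturbed output vector."""
    num = sum((a - b) ** 2 for a, b in zip(ideal, actual)) ** 0.5
    den = sum(a ** 2 for a in ideal) ** 0.5
    return num / den

def mean_error(sigma, rows=16, cols=64, trials=200, seed=0):
    """Average relative error over many randomly drawn arrays and inputs."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        weights = [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]
        inputs = [rng.uniform(0, 1) for _ in range(cols)]
        ideal = mvm(weights, inputs)
        noisy = mvm(perturb(weights, sigma, rng), inputs)
        total += relative_error(ideal, noisy)
    return total / trials
```

Calling `mean_error(s)` for a few values such as 0.05, 0.10, and 0.20 shows the output error growing with the assumed spread. Real devices may show state-dependent or non-Gaussian variation, which this sketch does not capture.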

Temperature-induced variability presents another significant challenge, as IMC devices often exhibit temperature-dependent behavior. Resistance drift in phase-change memory (PCM) elements and threshold voltage shifts in transistors can occur with temperature fluctuations, introducing computational errors that are difficult to predict and compensate for in real-time applications. This is particularly problematic in edge computing scenarios where environmental conditions may vary considerably.
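Resistance drift in PCM is commonly described with an empirical power law in time, R(t) = R0 · (t / t0)^ν. The sketch below uses that form to estimate how far a read current moves after programming. The drift exponent `nu = 0.05` and the reference time `t0 = 1.0` second are placeholder assumptions, and the temperature dependence discussed above is deliberately left out.

```python
def drifted_resistance(r0, t, t0=1.0, nu=0.05):
    """Empirical power-law drift: R(t) = R0 * (t / t0) ** nu, for t >= t0."""
    if t < t0:
        raise ValueError("the power-law model is only used here for t >= t0")
    return r0 * (t / t0) ** nu

def current_error(t, t0=1.0, nu=0.05):
    """Fractional change in read current at fixed voltage (I is proportional to 1/R)."""
    return (t / t0) ** (-nu) - 1.0
```

Under these assumed values, `current_error(1e4)` evaluates to roughly -0.37, meaning a cell read about three hours after programming would contribute about 37% less current than intended unless the drift is compensated.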

Device aging and wear mechanisms introduce time-dependent variability that progressively degrades computational accuracy. Hot Carrier Injection (HCI) and Bias Temperature Instability (BTI) cause gradual parameter shifts over time, while Random Telegraph Noise (RTN), which is a fluctuation rather than a wear-out mechanism, adds discrete jumps in current on top of those shifts. In analog IMC implementations, these effects translate directly into computational errors, and the aging-related component accumulates and worsens throughout the device lifetime.

Cycle-to-cycle variations represent another critical challenge, where the same device exhibits different characteristics across operational cycles. This is particularly evident in emerging memory technologies like RRAM and PCM, where the stochastic nature of switching mechanisms leads to probabilistic behavior rather than deterministic outcomes.

The combined effect of these variability sources creates a complex error landscape that significantly impacts the achievable computational precision in IMC systems. Conventional error correction techniques developed for digital systems often prove inadequate for addressing these analog domain variations, necessitating novel approaches that can accommodate the unique characteristics of IMC architectures.

The severity of these challenges increases with technology scaling, as smaller feature sizes typically exhibit greater relative variations. This creates a fundamental tension between the desire for higher integration density and the need for computational reliability, representing one of the most significant barriers to widespread IMC adoption in precision-critical applications.

Current Approaches to Mitigate Device Variability

01 Error correction techniques for in-memory computing

Various error correction techniques are employed to enhance the accuracy of in-memory computing systems. These include specialized algorithms that can detect and correct computational errors in real-time, redundancy mechanisms that provide fault tolerance, and adaptive error correction schemes that adjust based on the specific computational requirements. These techniques help maintain high accuracy levels even when dealing with analog computing elements that may be susceptible to noise and variability.

- Error correction techniques in in-memory computing: Various error correction techniques are employed to improve the accuracy of in-memory computing systems. These include implementing error detection and correction codes, redundancy mechanisms, and adaptive error compensation algorithms. By detecting and correcting errors that occur during computation, these techniques significantly enhance the reliability and accuracy of in-memory processing, particularly in applications where precision is critical.

- Precision optimization for analog in-memory computing: Analog in-memory computing faces unique challenges in maintaining computational accuracy. Techniques such as precision scaling, quantization methods, and calibration procedures are implemented to optimize the precision of analog computations. These approaches address issues related to device variations, noise, and non-linearity in analog memory cells, resulting in improved computational accuracy while maintaining the energy efficiency benefits of in-memory processing.

- Neural network training with in-memory computing: Specialized techniques for training neural networks using in-memory computing architectures focus on maintaining accuracy during the learning process. These include gradient approximation methods, weight update optimization, and precision-aware training algorithms. By addressing the unique constraints of in-memory computing hardware, these approaches enable accurate neural network training while leveraging the performance benefits of computing directly within memory structures.

- Hardware-algorithm co-design for accuracy improvement: Hardware-algorithm co-design approaches optimize both the physical implementation and computational methods to enhance in-memory computing accuracy. This includes developing specialized memory cell designs, circuit configurations, and algorithmic techniques that work synergistically. By considering both hardware limitations and algorithmic requirements simultaneously, these co-design strategies achieve higher computational accuracy while maintaining the efficiency advantages of in-memory processing.

- Runtime accuracy monitoring and adaptation: Dynamic systems for monitoring and adapting to accuracy fluctuations during in-memory computation operation ensure consistent performance. These include real-time error monitoring, dynamic precision adjustment, and adaptive computation techniques that respond to changing conditions. By continuously evaluating computational accuracy and making appropriate adjustments, these systems maintain high levels of precision even under varying operational conditions and workloads.
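One simple form of the redundancy mechanisms listed above is to store each weight on several devices and average their contributions. The sketch below assumes independent multiplicative Gaussian programming errors, in which case the spread of the averaged weight shrinks roughly as 1/sqrt(N). The function names and parameter values are illustrative and do not describe a specific vendor scheme.

```python
import random
import statistics

def read_weight(target, sigma, copies, rng):
    """Average `copies` devices that were all programmed toward `target`."""
    reads = [target * (1.0 + rng.gauss(0.0, sigma)) for _ in range(copies)]
    return sum(reads) / copies

def error_std(sigma, copies, trials=20000, seed=1):
    """Empirical standard deviation of the averaged weight around a target of 1.0."""
    rng = random.Random(seed)
    samples = [read_weight(1.0, sigma, copies, rng) for _ in range(trials)]
    return statistics.pstdev(samples)
```

Comparing `error_std(0.1, 1)`, `error_std(0.1, 4)`, and `error_std(0.1, 16)` illustrates the trade: each fourfold increase in devices per weight roughly halves the spread, at a fourfold cost in array area.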

02 Precision optimization in analog in-memory computing

Analog in-memory computing faces inherent precision challenges that can affect accuracy. Advanced methods for precision optimization include calibration techniques that compensate for device variations, quantization schemes that balance precision with computational efficiency, and specialized circuit designs that minimize noise interference. These approaches help maintain computational accuracy while leveraging the power and performance benefits of analog computing architectures.

03 Neural network training techniques for improved accuracy

Specialized training methodologies for neural networks implemented in in-memory computing architectures can significantly improve computational accuracy. These include noise-aware training algorithms that account for hardware imperfections, transfer learning approaches that leverage pre-trained models to enhance accuracy, and regularization techniques specifically designed for in-memory implementations. These methods help neural networks achieve higher inference accuracy despite the constraints of in-memory computing hardware.

04 Hardware-algorithm co-design for accuracy enhancement

Co-designing hardware and algorithms specifically for in-memory computing can lead to significant accuracy improvements. This approach involves developing computational models that account for the specific characteristics of memory devices, creating custom memory cell designs that prioritize computational precision, and implementing hybrid architectures that combine the strengths of different computing paradigms. Such co-design strategies help overcome the inherent accuracy limitations of in-memory computing systems.

05 Runtime accuracy monitoring and adaptation

Dynamic systems that monitor and adapt to accuracy fluctuations during operation can maintain high computational precision in in-memory computing environments. These include real-time monitoring circuits that detect accuracy degradation, feedback mechanisms that adjust computational parameters based on error rates, and self-calibrating systems that periodically recalibrate to maintain optimal performance. These adaptive approaches ensure sustained accuracy even as device characteristics change over time or operating conditions vary.
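A feedback loop of this kind can be sketched with a small set of reference cells whose intended values are known. The example below assumes that drift acts mostly as a common scale factor on all conductances, estimates that factor from the reference cells, and rescales the array outputs. The names `estimate_gain`, `compensate`, and `needs_recalibration`, along with the drift and noise values in `demo`, are hypothetical and chosen only for illustration.

```python
import random

def estimate_gain(reference_reads, reference_nominal):
    """Estimate a global conductance scale factor from cells with known targets."""
    return sum(reference_reads) / sum(reference_nominal)

def compensate(outputs, gain):
    """Undo the estimated global scale on the array outputs."""
    return [y / gain for y in outputs]

def needs_recalibration(gain, tolerance=0.02):
    """Flag the array when the estimated scale leaves the assumed tolerance band."""
    return abs(gain - 1.0) > tolerance

def demo(seed=2, drift=0.8, noise=0.01, n_ref=32, n_out=8, n_in=32):
    """Return (gain, worst error before compensation, worst error after)."""
    rng = random.Random(seed)

    def aged(g):
        # Assumed aging model: common scale factor plus small per-device noise.
        return g * drift * (1.0 + rng.gauss(0.0, noise))

    ref_nominal = [1.0] * n_ref
    ref_reads = [aged(g) for g in ref_nominal]
    weights = [[rng.uniform(0.1, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    x = [rng.uniform(0.0, 1.0) for _ in range(n_in)]
    ideal = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    raw = [sum(aged(w) * xi for w, xi in zip(row, x)) for row in weights]
    gain = estimate_gain(ref_reads, ref_nominal)
    fixed = compensate(raw, gain)

    def worst(ys):
        return max(abs(y - t) / t for y, t in zip(ys, ideal))

    return gain, worst(raw), worst(fixed)
```

A single global gain cannot correct device-specific drift or offsets, so a practical monitor would likely combine it with finer-grained calibration when `needs_recalibration` fires.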

Leading Companies and Research Institutions in IMC

Work on managing device variability in in-memory computing is in a growth phase, with the market expanding due to increasing demand for edge computing and AI applications. The technology is approaching maturity but still faces challenges in maintaining accuracy amid device-to-device variations. Leading players include Micron Technology and Samsung Electronics, which are developing advanced memory architectures with built-in error correction. IBM and Intel are focusing on algorithmic solutions to mitigate variability effects, while academic institutions like National Tsing-Hua University are researching the fundamental physics of variability. Emerging companies like YMTC and KIOXIA are introducing innovative approaches to address precision issues in analog computing operations, creating a competitive landscape balanced between established semiconductor giants and specialized memory technology providers.

Micron Technology, Inc.

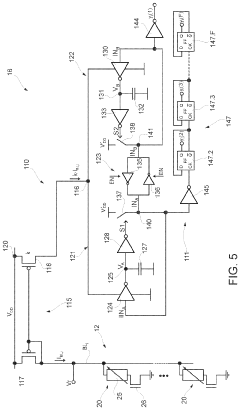

Technical Solution: Micron has developed advanced in-memory computing architectures that address device variability through adaptive calibration techniques. Their approach utilizes a combination of hardware and software solutions to mitigate the impact of device-to-device variations in resistive memory arrays. Micron's technology implements real-time monitoring systems that continuously measure device characteristics during operation and apply compensation algorithms to maintain computational accuracy. Their 3D XPoint technology incorporates specialized circuitry that can detect and adjust for resistance drift in memory cells, which is a primary source of computational errors in in-memory operations. Additionally, Micron has pioneered temperature-aware computing models that dynamically adjust operational parameters based on thermal conditions to maintain consistent performance across varying environmental conditions. The company has also developed innovative error correction codes specifically designed for in-memory computing that can detect and correct errors resulting from device variability without significant performance overhead.

Strengths: Micron's solutions offer excellent scalability across different memory technologies and provide robust performance under varying environmental conditions. Their adaptive calibration approach minimizes accuracy degradation over time. Weaknesses: The additional circuitry required for monitoring and calibration increases power consumption and chip area, potentially limiting application in ultra-low-power devices.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered a comprehensive approach to managing device variability in in-memory computing through their Processing-In-Memory (PIM) architecture. Their solution integrates specialized hardware units within memory arrays that perform real-time characterization and compensation for device variations. Samsung's HBM-PIM technology incorporates dedicated calibration circuits that periodically measure the electrical characteristics of memory cells and adjust computational parameters accordingly. The company has developed a multi-level sensing scheme that can accurately distinguish between resistance states even in the presence of significant device-to-device variations, enabling more reliable analog computing operations. Samsung's approach also includes innovative programming algorithms that adapt the write conditions for each memory cell based on its unique characteristics, reducing the initial variability before computation begins. Additionally, they have implemented machine learning-based prediction models that can anticipate drift in device parameters and proactively adjust computational operations to maintain accuracy over extended periods of operation.

Strengths: Samsung's solution offers industry-leading energy efficiency while maintaining high computational accuracy, and their integration with HBM technology provides exceptional bandwidth for data-intensive applications. Weaknesses: The complexity of their calibration systems requires significant design expertise and may present challenges for integration with third-party systems.

Key Patents and Research on Variability Compensation

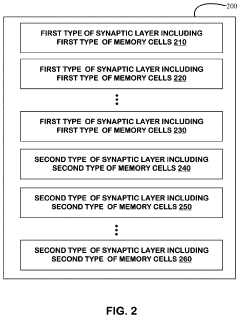

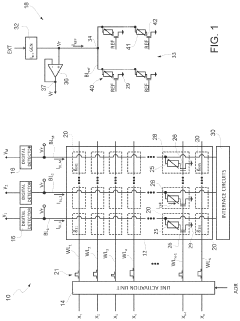

In-memory computing devices for neural networks

Patent (Active): US11138497B2

Innovation

- An integrated circuit with a neural network implementation in-memory computing device featuring multiple types of synaptic layers, where the first type of memory cells are configured for more accurate and stable data storage and operations compared to the second type, reducing weight fluctuations and improving inference accuracy.

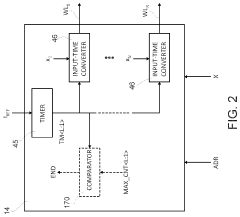

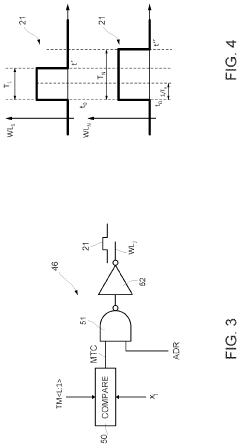

In-memory computation device having improved drift compensation

Patent (Pending): US20240212730A1

Innovation

- The device includes a word line activation circuit, a biasing circuit, and a digital detector, with memory cells configured to receive activation signals and bias voltage, generating a bit line current that is sampled to produce output signals, and a reference memory array to statistically represent the overall transconductance of the memory array, allowing for compensation of drifts in computational weights.

Reliability and Yield Considerations in IMC Manufacturing

In-Memory Computing (IMC) manufacturing faces significant challenges related to device variability that directly impact reliability and yield. The inherent variability in semiconductor fabrication processes creates inconsistencies in device characteristics, which becomes particularly problematic for IMC architectures where computational accuracy depends on precise analog operations. Process variations during manufacturing can lead to threshold voltage shifts, resistance variations, and other parameter fluctuations that compromise the reliability of IMC operations.

Manufacturing yield for IMC devices is typically lower than for conventional digital circuits because of these variability issues. As device dimensions shrink below 10 nm, the fraction of devices meeting strict performance specifications is reported to fall off steeply, approximately exponentially. This yield challenge translates directly into higher production costs and potentially limits mass-market adoption of IMC technologies.
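A back-of-the-envelope model shows why tight analog specifications are so costly for yield. The sketch assumes each device parameter deviates from its target with zero-mean Gaussian spread `sigma`, that a device passes if it falls within `tolerance`, and that an array passes only if every device does, with no redundancy or repair. It does not reproduce the sub-10 nm trend described above; it only illustrates how per-device pass probability compounds across an array.

```python
import math

def device_yield(tolerance, sigma):
    """P(|deviation| <= tolerance) for zero-mean Gaussian variation with std sigma."""
    return math.erf(tolerance / (sigma * math.sqrt(2.0)))

def array_yield(tolerance, sigma, n_devices):
    """Probability that all n independent devices are in spec (no repair assumed)."""
    return device_yield(tolerance, sigma) ** n_devices
```

With a tolerance of three standard deviations, `device_yield(3.0, 1.0)` is about 0.9973, yet `array_yield(3.0, 1.0, 1024)` is only a few percent. This is one reason the compensation and redundancy techniques discussed in this section matter economically.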

Reliability testing for IMC devices requires specialized methodologies beyond traditional digital testing frameworks. Manufacturers must implement comprehensive characterization techniques to identify and compensate for device-to-device variations. Advanced testing protocols often include temperature-dependent measurements, stress testing, and accelerated aging to predict long-term reliability under various operating conditions.

Compensation techniques have emerged as critical strategies for improving manufacturing yield. These include adaptive calibration circuits, redundancy schemes, and error correction mechanisms implemented at both hardware and software levels. Some manufacturers employ post-fabrication trimming techniques to adjust device parameters and bring outlier devices within acceptable performance ranges.
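As an illustration of software-level calibration, the sketch below fits a gain and an offset for one output column from a handful of known test inputs and then inverts that fit at inference time. It assumes the column's error is well approximated as affine (measured ≈ gain · ideal + offset); the function names are hypothetical, and real calibration flows may use richer models.

```python
def fit_gain_offset(ideal, measured):
    """Least-squares fit of measured ≈ gain * ideal + offset for one column."""
    n = len(ideal)
    mean_x = sum(ideal) / n
    mean_y = sum(measured) / n
    sxx = sum((x - mean_x) ** 2 for x in ideal)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(ideal, measured))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset

def correct(measured_value, gain, offset):
    """Map a raw column reading back to the ideal scale."""
    return (measured_value - offset) / gain
```

For example, fitting on `ideal = [0, 1, 2, 3]` and readings generated as `0.9 * x + 0.1` recovers a gain near 0.9 and an offset near 0.1. The calibration inputs need to span the operating range, since the fit is undefined if all ideal values are identical.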

The economic implications of reliability and yield challenges are substantial. Current industry data suggests that yield losses can account for 15-30% of manufacturing costs for advanced IMC devices. This economic pressure drives continuous innovation in design-for-manufacturability approaches specifically tailored to IMC architectures.

Future manufacturing technologies show promise for addressing these challenges. Emerging techniques such as atomic layer deposition for more uniform material properties and advanced lithography methods may reduce process variations. Additionally, the development of self-calibrating IMC architectures that can adapt to inherent device variations represents a paradigm shift in addressing reliability concerns without requiring perfect manufacturing precision.

Benchmarking and Standardization for IMC Performance

The standardization of benchmarking methodologies for In-Memory Computing (IMC) performance has become increasingly critical as device variability continues to impact computational accuracy. Current benchmarking practices vary widely across research institutions and industry players, making direct comparisons between different IMC implementations challenging and often misleading.

A comprehensive benchmarking framework must account for the inherent variability in IMC devices, particularly in analog implementations where manufacturing variations can significantly affect performance metrics. Standard test patterns that specifically probe the impact of device-to-device variations on computational accuracy are essential for meaningful evaluation. These patterns should include worst-case scenarios that stress the system's ability to maintain accuracy despite variability.
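A test pattern of this kind can be sketched in software. The sketch assumes a multiplicative Gaussian perturbation on every stored weight as a stand-in for device-to-device variation, and compares a dense input against a sparse one. The noise model, the function names, and the example matrix are illustrative assumptions rather than part of any published benchmark.

```python
import random


def ideal_mvm(weights, x):
    """Exact matrix-vector product, one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def perturbed_weights(weights, sigma_rel, rng):
    """Apply independent multiplicative Gaussian variation to each weight."""
    return [[w * (1.0 + rng.gauss(0.0, sigma_rel)) for w in row]
            for row in weights]


def relative_error(weights, x, sigma_rel, rng):
    """L2 error of the perturbed product relative to the ideal product."""
    ref = ideal_mvm(weights, x)
    out = ideal_mvm(perturbed_weights(weights, sigma_rel, rng), x)
    num = sum((a - b) ** 2 for a, b in zip(out, ref)) ** 0.5
    den = sum(b ** 2 for b in ref) ** 0.5
    return num / den


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    w = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    patterns = {"dense": [1.0, 1.0, 1.0], "one-hot": [0.0, 0.0, 1.0]}
    for name, x in patterns.items():
        print(name, relative_error(w, x, 0.05, rng))
```

Running many perturbed instances per pattern, rather than one, is what turns this into a variability benchmark instead of a single-point check.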

Performance metrics for IMC systems require standardization beyond traditional computing metrics. While energy efficiency and throughput remain important, accuracy-related metrics such as error tolerance, noise immunity, and drift compensation capability are particularly relevant for IMC systems affected by device variability. Standards work in adjacent areas, such as the IEEE P3109 working group on arithmetic formats for machine learning, addresses reduced-precision numerics, but metrics specific to IMC accuracy under device variability still lack an agreed standard.

Calibration procedures represent another critical aspect of standardization. Because device characteristics drift over time and shift with operating conditions, standardized calibration methodologies are needed for fair comparisons between different IMC architectures. These procedures must be reproducible and must account for environmental factors, such as temperature and humidity, that can exacerbate variability effects.
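A drift-aware calibration step can be illustrated with a short sketch. The text does not name a drift model; as an assumption, the sketch uses the power-law conductance decay often used to describe phase-change memory, G(t) = G0 * (t / t0)^(-nu), and a single global scale factor estimated from reference devices. The exponent value, the function names, and the uniform-exponent simplification are all illustrative assumptions.

```python
def drifted(g0, t, t0=1.0, nu=0.05):
    """Conductance after time t under an assumed power-law drift model."""
    return g0 * (t / t0) ** (-nu)


def compensation_factor(ref_programmed, ref_measured):
    """Global scale factor estimated from reference devices whose
    programmed values are known."""
    return sum(ref_programmed) / sum(ref_measured)


if __name__ == "__main__":
    t = 1000.0  # elapsed time in units of t0 (hypothetical)
    ref_programmed = [10.0, 20.0]
    ref_measured = [drifted(g, t) for g in ref_programmed]
    factor = compensation_factor(ref_programmed, ref_measured)
    # Apply the same factor to a data device that drifted identically.
    print(drifted(5.0, t), drifted(5.0, t) * factor)
```

With a uniform exponent the correction is exact; when the exponent varies from device to device, a global factor only removes the average drift, which is one reason a standardized procedure needs to specify when and how references are measured.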

Cross-platform benchmarking tools that can be applied consistently across different IMC implementations are emerging but remain limited. Open-source initiatives like IMC-Bench and AnalogML provide standardized test suites that specifically evaluate how well different architectures handle device variability. These tools incorporate statistical analysis methods to characterize performance distributions rather than single-point measurements.
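Reporting a distribution rather than a single number can be sketched as follows. The sketch assumes a list of accuracy values, one per simulated or measured device instance, and summarizes it with a mean, a spread, and low-end percentiles. The function names and the nearest-rank percentile definition are choices made for illustration and are not taken from any of the tools named above.

```python
import math
import statistics


def percentile(values, p):
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100.0 * len(ordered)))
    return ordered[rank - 1]


def summarize(accuracies):
    """Distribution summary across instances (needs at least two values)."""
    return {
        "mean": statistics.fmean(accuracies),
        "stdev": statistics.stdev(accuracies),
        "p5": percentile(accuracies, 5),
        "median": percentile(accuracies, 50),
        "worst": min(accuracies),
    }


if __name__ == "__main__":
    # Hypothetical per-instance accuracies, not measured data.
    print(summarize([0.90, 0.92, 0.95, 0.97, 0.99]))
```

Low-end percentiles and the worst case are usually more informative than the mean here, since a deployed chip is a single draw from the distribution.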

Industry adoption of standardized benchmarking practices faces challenges including proprietary concerns and the rapid evolution of IMC technologies. However, consortia such as the In-Memory Computing Alliance are working to establish consensus on key performance indicators and testing methodologies. Their roadmap includes the development of reference designs that can serve as standardized comparison points for evaluating new IMC implementations.

Academic-industry partnerships are proving valuable in developing vendor-neutral benchmarking standards that address the unique challenges of device variability in IMC operations. These collaborative efforts focus on creating benchmarks that remain relevant across multiple technology generations while providing meaningful insights into how different architectures handle the fundamental challenge of device variability.

