In-Memory Computing Approaches For Secure Cryptographic Accelerations

SEP 2, 2025 | 9 MIN READ

In-Memory Computing Evolution and Objectives

In-memory computing (IMC) represents a paradigm shift in computer architecture that addresses the von Neumann bottleneck by integrating computation capabilities directly within memory structures. This approach has evolved significantly over the past decades, from simple content-addressable memories to sophisticated processing-in-memory architectures capable of handling complex computational tasks.

The evolution of IMC began in the 1970s with associative memories but gained substantial momentum in the early 2000s with the emergence of resistive memory technologies. The development trajectory accelerated dramatically after 2010 when researchers demonstrated practical implementations using memristors, phase-change memory (PCM), and spin-transfer torque magnetic RAM (STT-MRAM) technologies. These non-volatile memory technologies provided the foundation for performing computational operations directly within memory arrays.

For cryptographic applications specifically, IMC evolution has followed a distinct path focused on security and performance optimization. Early implementations concentrated on basic Boolean operations for symmetric key algorithms, while recent advancements have enabled more complex operations required for asymmetric cryptography, including modular multiplication and exponentiation critical for RSA and elliptic curve cryptosystems.

The primary objectives of IMC for cryptographic acceleration encompass several dimensions. First, performance enhancement aims to overcome the computational bottlenecks in cryptographic operations by reducing data movement between memory and processing units. Current research indicates potential speedups of 10-100x for specific cryptographic workloads compared to conventional architectures.

Second, energy efficiency represents a critical objective, particularly for resource-constrained environments such as IoT devices and embedded systems. IMC approaches have demonstrated energy reductions of up to 90% for certain cryptographic operations by minimizing the energy-intensive data transfers between separate memory and processing units.

Third, security hardening constitutes a fundamental objective, as IMC architectures can potentially provide inherent resistance against side-channel attacks. By performing sensitive operations within memory arrays, these systems can reduce observable electromagnetic emissions and power fluctuations that often leak cryptographic secrets in conventional systems.

Finally, area efficiency and scalability objectives drive research toward compact implementations suitable for integration into existing semiconductor manufacturing processes. The goal is to develop IMC solutions that can be seamlessly incorporated into system-on-chip designs without significant increases in silicon area or manufacturing complexity.

Market Analysis for Secure Cryptographic Accelerators

The secure cryptographic accelerator market is experiencing robust growth, driven by escalating cybersecurity threats and the increasing adoption of encryption across multiple sectors. Current market valuations place this segment at approximately 2.3 billion USD in 2023, with projections indicating a compound annual growth rate of 12.7% through 2028, potentially reaching 4.2 billion USD by the end of the forecast period.
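The projection above can be sanity-checked with the standard compound-growth formula. The snippet below is a minimal sketch; the function name `project_market_size` is a hypothetical helper, and the only inputs are the figures quoted in the text.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return base_value * (1.0 + cagr) ** years


# Figures quoted in the text: 2.3 billion USD in 2023, 12.7% CAGR, through 2028.
projected_2028 = project_market_size(2.3, 0.127, 2028 - 2023)
print(f"{projected_2028:.2f} billion USD")  # prints "4.18 billion USD"
```

The result of about 4.18 billion USD is consistent with the rounded 4.2 billion USD figure quoted for 2028.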

Financial services represent the largest market vertical, accounting for roughly 31% of total market share. This dominance stems from stringent regulatory requirements for data protection and the critical need to secure financial transactions. Healthcare follows at 22%, driven by privacy regulations like HIPAA and the increasing digitization of patient records requiring robust encryption solutions.

Cloud service providers constitute another significant market segment, representing 18% of demand. As organizations migrate sensitive workloads to cloud environments, the need for hardware-based security accelerators that can provide both performance and protection has intensified. Government and defense sectors account for 15% of the market, primarily focused on high-assurance cryptographic solutions.

Regionally, North America leads with approximately 42% market share, followed by Europe (27%), Asia-Pacific (21%), and rest of world (10%). The Asia-Pacific region is expected to witness the fastest growth rate at 15.3% annually, propelled by rapid digital transformation initiatives and increasing cybersecurity investments in countries like China, India, and Singapore.

In-memory computing approaches for cryptographic acceleration represent an emerging subsegment with particularly strong growth potential. This technology addresses the critical "memory wall" challenge that traditional cryptographic implementations face, offering significant performance improvements while maintaining security assurances. Market analysis indicates this specific approach could grow at 18.2% annually, outpacing the broader cryptographic accelerator market.

Key market drivers include the proliferation of IoT devices requiring lightweight yet secure encryption, the growing adoption of post-quantum cryptographic algorithms demanding greater computational resources, and the expansion of 5G networks necessitating high-throughput security solutions at network edges.

Customer demand increasingly focuses on solutions that offer flexibility across multiple cryptographic standards while minimizing power consumption, a particular advantage of in-memory computing approaches. Enterprise surveys indicate that 76% of organizations rate cryptographic acceleration capabilities as "important" or "very important" in their security infrastructure planning for the next three years.

Current Challenges in Cryptographic Hardware Implementation

Despite significant advances in cryptographic hardware, several critical challenges still impede the adoption and efficiency of secure cryptographic acceleration, particularly for in-memory computing approaches.

The fundamental challenge remains the inherent trade-off between security, performance, and power consumption. As cryptographic algorithms become more complex to counter evolving threats, hardware implementations must balance computational intensity with energy efficiency constraints, especially for resource-constrained devices in IoT ecosystems. This balancing act becomes particularly challenging when implementing post-quantum cryptographic algorithms, which typically require significantly more computational resources than traditional approaches.

Side-channel attacks continue to pose a substantial threat to cryptographic hardware implementations. These non-invasive attacks exploit information leakage through power consumption patterns, electromagnetic emissions, timing variations, and acoustic signatures. Current countermeasures often introduce significant overhead, reducing the performance benefits of hardware acceleration. The integration of effective side-channel attack resistance directly into in-memory computing architectures remains an open challenge.
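The timing-variation leak mentioned above is easiest to see in software. The sketch below is an illustrative analogy rather than an in-memory computing implementation: `leaky_equal` and `constant_time_equal` are hypothetical names, and the second function simply wraps the Python standard library's `hmac.compare_digest`.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: run time depends on where the first mismatch occurs."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner for earlier mismatches, leaking position
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison designed so that timing does not reveal the mismatch position."""
    return hmac.compare_digest(a, b)


expected_tag = bytes.fromhex("a1b2c3d4")
print(leaky_equal(expected_tag, bytes.fromhex("a1b2c3d5")))          # False
print(constant_time_equal(expected_tag, bytes.fromhex("a1b2c3d4")))  # True
```

Both functions return the same answers; the difference lies only in how much an attacker can learn from measuring execution time. Hardware countermeasures aim for the same property, operation time and power draw that do not depend on secret data, which is part of the overhead the paragraph describes.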

The increasing demand for homomorphic encryption and secure multi-party computation introduces unprecedented computational complexity that strains even dedicated hardware accelerators. These privacy-preserving techniques require operations on encrypted data without decryption, demanding novel architectural approaches that current hardware struggles to support efficiently.

Flexibility and programmability present another significant hurdle. Cryptographic standards evolve rapidly in response to discovered vulnerabilities and emerging threats. Hardware implementations must therefore balance specialization for performance with adaptability to support algorithm updates and replacements. This challenge is particularly acute for in-memory computing approaches, which often optimize for specific computational patterns.

Manufacturing variability and reliability issues compound these challenges. Process variations in semiconductor fabrication can lead to inconsistent behavior across identical hardware units, potentially compromising security guarantees or requiring conservative design margins that reduce performance. Additionally, emerging memory technologies used in in-memory computing approaches may exhibit reliability issues such as limited endurance or retention, affecting long-term security properties.

Finally, verification and certification of cryptographic hardware implementations remain complex and time-consuming processes. Formal verification techniques struggle to scale to complex designs, while empirical testing cannot exhaustively cover all possible attack vectors. This verification gap creates uncertainty about security guarantees, particularly for novel in-memory computing architectures that deviate from traditional design paradigms.

Existing In-Memory Cryptographic Acceleration Solutions

01 Encryption and data protection in in-memory computing

Various encryption techniques and data protection mechanisms are implemented to secure data in in-memory computing environments. These include encryption of data at rest and in transit, secure key management, and protection against unauthorized access. By implementing robust encryption protocols, sensitive information stored in memory can be protected from potential breaches while maintaining the performance benefits of in-memory processing.

- Encryption and data protection in in-memory computing: Various encryption techniques and data protection mechanisms are implemented to secure data in in-memory computing environments. These include encryption of data at rest and in transit, secure key management, and protection against unauthorized access. By implementing robust encryption protocols, sensitive information stored in memory can be protected from potential breaches and attacks, ensuring data confidentiality and integrity in high-performance computing scenarios.

- Access control and authentication mechanisms: Access control and authentication mechanisms are crucial for securing in-memory computing systems. These include multi-factor authentication, role-based access control, and secure user identity management. By implementing robust authentication protocols and fine-grained access controls, organizations can ensure that only authorized users and processes can access sensitive data and computing resources in memory, preventing unauthorized access and potential security breaches.

- Secure memory architecture and isolation: Secure memory architecture designs incorporate isolation techniques to protect in-memory computing environments. These include memory segmentation, containerization, and hardware-based isolation mechanisms. By creating isolated memory spaces and implementing secure boundaries between different processes and applications, the risk of memory-based attacks such as buffer overflows and side-channel attacks can be significantly reduced, enhancing the overall security posture of in-memory computing systems.

- Threat detection and prevention for in-memory systems: Advanced threat detection and prevention mechanisms are implemented to protect in-memory computing environments from various security threats. These include real-time monitoring, anomaly detection, and intrusion prevention systems specifically designed for in-memory architectures. By continuously monitoring memory usage patterns and detecting suspicious activities, organizations can identify and mitigate potential security threats before they cause significant damage to in-memory computing systems.

- Secure data processing in distributed in-memory environments: Secure data processing techniques are implemented in distributed in-memory computing environments to ensure data security across multiple nodes. These include secure data partitioning, distributed encryption, and secure communication protocols between nodes. By implementing comprehensive security measures for distributed processing, organizations can maintain data confidentiality and integrity while leveraging the performance benefits of distributed in-memory computing architectures.

02 Access control and authentication mechanisms

Access control and authentication mechanisms are crucial for securing in-memory computing systems. These include multi-factor authentication, role-based access control, and secure token-based authentication systems. By implementing robust authentication protocols, organizations can ensure that only authorized users and processes can access sensitive data stored in memory, thereby preventing unauthorized data manipulation or extraction.

03 Memory isolation and segmentation techniques

Memory isolation and segmentation techniques are employed to create secure boundaries between different processes and applications sharing the same memory resources. These techniques include hardware-based memory protection, virtualization of memory spaces, and secure memory partitioning. By implementing effective memory isolation, potential vulnerabilities in one application cannot be exploited to access data from another application, enhancing the overall security of in-memory computing systems.

04 Threat detection and prevention in in-memory systems

Advanced threat detection and prevention mechanisms are implemented to identify and mitigate security threats in in-memory computing environments. These include real-time monitoring, anomaly detection, and behavioral analysis to identify potential security breaches. By continuously monitoring memory access patterns and system behavior, organizations can quickly detect and respond to security incidents, minimizing potential damage from attacks targeting in-memory data.

05 Secure architecture design for in-memory computing

Secure architecture designs are developed specifically for in-memory computing environments to address their unique security challenges. These include secure boot processes, trusted execution environments, and hardware-assisted security features. By implementing security considerations at the architectural level, organizations can build inherently secure in-memory computing systems that protect data integrity and confidentiality while maintaining the performance advantages of in-memory processing.

Leading Organizations in Secure Computing Architecture

In-memory computing for secure cryptographic acceleration is evolving rapidly, and the market is in a growth phase driven by rising cybersecurity demands as organizations prioritize data protection. Technological maturity varies across players. Intel, AMD, and NVIDIA lead with hardware-based solutions that integrate specialized cryptographic accelerators directly into memory architectures. Huawei, Micron, and Infineon demonstrate strong capabilities in memory-centric security implementations. Companies such as Cryptography Research and NXP offer specialized solutions, while academic institutions including Fudan University and North Carolina State University contribute significant research. The competitive landscape is therefore a mix of established semiconductor giants and specialized security firms developing increasingly sophisticated in-memory cryptographic processing technologies.

Intel Corp.

Technical Solution: Intel's approach centers on Software Guard Extensions (SGX), which protects computation on data held in memory rather than computing inside the memory array itself. SGX creates isolated execution environments called enclaves within protected regions of memory, shielding sensitive data and code from unauthorized access even if the rest of the system is compromised. Hardware cryptographic instructions can execute inside these enclaves. The Memory Encryption Engine (MEE) provides transparent memory encryption with integrity protection: enclave data is encrypted whenever it leaves the processor package, so plaintext is not exposed in system memory. Intel has also integrated AES-NI (Advanced Encryption Standard New Instructions) directly into its processors, which accelerates encryption/decryption operations by 3-10x compared to software implementations. QuickAssist Technology (QAT) further improves cryptographic performance by offloading operations to specialized hardware accelerators while maintaining memory security.

Strengths: Hardware-level security with low performance overhead; widespread ecosystem adoption; AES-NI instructions that avoid the cache-timing leaks of table-based software AES. Weaknesses: Requires specific Intel hardware; SGX enclaves have been the target of published microarchitectural side-channel attacks (for example, Foreshadow); potential vulnerability to physical attacks; integration complexity for developers.

Micron Technology, Inc.

Technical Solution: Micron has pioneered Compute-In-Memory (CIM) architecture specifically designed for cryptographic acceleration. Their approach integrates processing capabilities directly into DRAM memory arrays, eliminating the need to move sensitive data between memory and CPU during cryptographic operations. Micron's Authenta technology embeds security directly into memory devices, creating a hardware root of trust that enables secure boot and runtime integrity verification. Their implementation includes dedicated memory regions for cryptographic key storage with physical isolation from the main memory space. Micron has developed specialized memory cells that can perform basic logic operations (AND, OR, XOR) directly within memory arrays, which are fundamental to many cryptographic algorithms. This approach reduces energy consumption by up to 95% for certain cryptographic workloads compared to conventional CPU processing. Micron's technology also incorporates true random number generation capabilities within memory for secure key generation without exposing the process to external observation.

Strengths: Dramatic reduction in power consumption; elimination of data movement vulnerabilities; inherent protection against certain side-channel attacks. Weaknesses: Limited to specific cryptographic operations; requires specialized memory hardware; potential challenges in scaling to complex cryptographic algorithms.
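The in-array logic operations (AND, OR, XOR) described for Micron's approach can be illustrated with a toy software model. This is a sketch under stated assumptions, not Micron's design: the class `ToyBitwiseArray` and its methods are hypothetical, and each memory row is modeled as a Python integer.

```python
class ToyBitwiseArray:
    """Toy model of a memory array whose rows can be combined in place."""

    # Row-wide logic operations assumed to be available inside the array.
    OPS = {
        "and": lambda a, b: a & b,
        "or": lambda a, b: a | b,
        "xor": lambda a, b: a ^ b,
    }

    def __init__(self, n_rows: int, row_bits: int) -> None:
        self.mask = (1 << row_bits) - 1
        self.rows = [0] * n_rows

    def write(self, row: int, value: int) -> None:
        self.rows[row] = value & self.mask

    def read(self, row: int) -> int:
        return self.rows[row]

    def compute(self, op: str, src_a: int, src_b: int, dst: int) -> None:
        """Combine two rows and store the result in a third row.

        Neither operand is returned to the caller, mirroring the idea
        that data stays inside the array during the operation.
        """
        self.rows[dst] = self.OPS[op](self.rows[src_a], self.rows[src_b]) & self.mask


array = ToyBitwiseArray(n_rows=4, row_bits=8)
array.write(0, 0xB2)            # state byte
array.write(1, 0x5C)            # key byte
array.compute("xor", 0, 1, 2)   # key mixing, as in an AES AddRoundKey step
print(hex(array.read(2)))       # 0xee
```

XOR with a key row is the simplest cryptographic use of such an operation. More complex steps such as S-box lookups or modular arithmetic need additional logic, which matches the "limited to specific cryptographic operations" weakness listed above.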

Key Patents in Secure In-Memory Computing

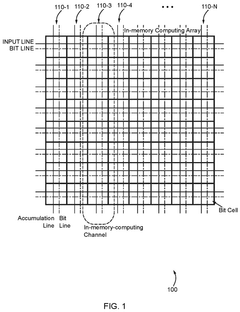

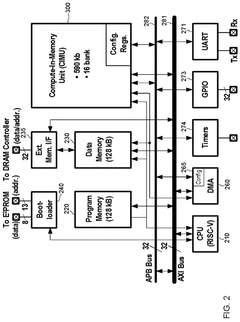

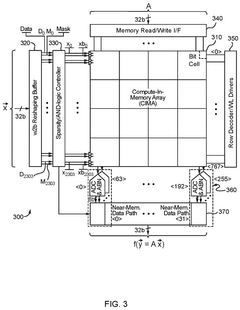

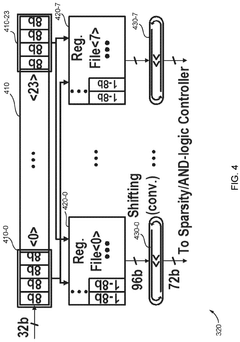

Configurable in memory computing engine, platform, bit cells and layouts therefore

Patent (Pending): US20240330178A1

Innovation

- An in-memory computing architecture that includes a reshaping buffer, a compute-in-memory array, analog-to-digital converter circuitry, and control circuitry to perform multi-bit computing operations using single-bit internal circuits, enabling bit-parallel/bit-serial operations and near-memory computing to efficiently process multi-bit matrix and vector elements.
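The idea of building multi-bit operations from single-bit circuits can be sketched in software. The example below is an illustrative model only, not the patented design: `bit_planes` and `imc_matvec` are hypothetical names, values are assumed to be unsigned, and the "ADC readout" is modeled as a simple count of matching 1-bits in a row.

```python
from typing import List


def bit_planes(values: List[int], n_bits: int) -> List[List[int]]:
    """Split unsigned integers into bit planes, least significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(n_bits)]


def imc_matvec(weights: List[List[int]], x: List[int],
               w_bits: int, x_bits: int) -> List[int]:
    """Matrix-vector product built only from 1-bit AND operations and counts."""
    x_planes = bit_planes(x, x_bits)          # inputs applied one bit at a time
    result = [0] * len(weights)
    for r, row in enumerate(weights):
        w_planes = bit_planes(row, w_bits)    # weight bits stored in parallel
        for wb, w_plane in enumerate(w_planes):
            for xb, x_plane in enumerate(x_planes):
                # Single-bit multiply (AND) per cell, then a count per row:
                # this stands in for the analog accumulation and ADC readout.
                count = sum(w & i for w, i in zip(w_plane, x_plane))
                # Digital shift-and-add restores the multi-bit weighting.
                result[r] += count << (wb + xb)
    return result


W = [[3, 1], [2, 5]]
x = [4, 7]
print(imc_matvec(W, x, w_bits=3, x_bits=3))  # [19, 43]
```

The output matches the ordinary product (3·4 + 1·7 = 19 and 2·4 + 5·7 = 43), showing that the bit-level decomposition is exact for unsigned operands. Real arrays add quantization error at the readout stage, which this sketch ignores.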

Cryptographic processing accelerator board

Patent (Inactive): EP1417566A2

Innovation

- A cryptographic processing accelerator card utilizing an original architecture with a modular exponential calculation stage, a modified Montgomery multiplier stage, and a dispatcher for multicore management, significantly reducing processing time and complexity by implementing an asynchronous statistical processing approach.
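For readers unfamiliar with the two stages named above, the following is a minimal sketch of textbook Montgomery multiplication driving square-and-multiply modular exponentiation. It is not the patent's modified multiplier or its dispatcher. The function names are hypothetical, the modulus is assumed to be odd, and the code is not constant-time. It needs Python 3.8 or later for `pow(n, -1, r)`.

```python
def montgomery_setup(n: int):
    """Precompute constants for an odd modulus n, with R = 2**k > n."""
    if n < 3 or n % 2 == 0:
        raise ValueError("Montgomery reduction needs an odd modulus >= 3")
    k = n.bit_length()
    r = 1 << k
    n_prime = (-pow(n, -1, r)) % r  # n * n_prime == -1 (mod R)
    return k, r, n_prime


def mont_mul(a: int, b: int, n: int, k: int, n_prime: int) -> int:
    """Return a * b * R^-1 mod n for 0 <= a, b < n, without dividing by n."""
    t = a * b
    mask = (1 << k) - 1
    m = ((t & mask) * n_prime) & mask  # makes t + m*n divisible by R
    u = (t + m * n) >> k               # exact division by R is a shift
    return u - n if u >= n else u


def mont_pow(base: int, exponent: int, n: int) -> int:
    """Compute base**exponent mod n using Montgomery multiplications."""
    k, r, n_prime = montgomery_setup(n)
    r2 = (r * r) % n
    x = mont_mul(base % n, r2, n, k, n_prime)  # base in Montgomery form
    acc = r % n                                # 1 in Montgomery form
    while exponent > 0:
        if exponent & 1:  # NOTE: data-dependent branch, a known timing leak
            acc = mont_mul(acc, x, n, k, n_prime)
        x = mont_mul(x, x, n, k, n_prime)
        exponent >>= 1
    return mont_mul(acc, 1, n, k, n_prime)     # convert back to normal form


print(mont_pow(4, 13, 497))  # 445
```

The value of the Montgomery form is that each reduction uses only multiplications, masks, and shifts, which map more naturally onto hardware than division by the modulus.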

Hardware-Software Co-Design Approaches

Hardware-software co-design represents a critical approach for optimizing in-memory computing (IMC) systems for cryptographic acceleration. This methodology bridges the gap between hardware capabilities and software requirements, creating synergistic solutions that maximize security, performance, and energy efficiency. Traditional approaches that treat hardware and software development as separate concerns often result in suboptimal implementations for cryptographic operations, which demand both high computational throughput and stringent security guarantees.

Effective co-design strategies for secure cryptographic IMC begin with comprehensive requirement analysis that considers both the cryptographic algorithm characteristics and the memory architecture constraints. This includes identifying memory access patterns of specific cryptographic operations, determining critical paths, and analyzing potential side-channel vulnerabilities at the hardware-software interface. Such analysis enables designers to make informed decisions about which operations should be implemented in hardware versus software.

Memory-centric cryptographic accelerators benefit significantly from specialized instruction set extensions that directly support in-memory operations. These custom instructions can enable atomic operations on cryptographic primitives while maintaining data within the memory array, thus minimizing data movement and associated security risks. For example, specialized instructions for AES operations can be designed to work directly with in-memory computing arrays, allowing encryption/decryption processes to occur without exposing intermediate values to the system bus.

Runtime reconfigurability represents another important aspect of hardware-software co-design for cryptographic IMC. Adaptive systems can dynamically adjust the hardware configuration based on software-defined security policies or workload characteristics. This approach enables systems to balance performance and security requirements in real-time, allocating appropriate resources to cryptographic operations based on their sensitivity and computational demands.

Security-aware compilers and toolchains form a crucial component in the co-design ecosystem. These tools must understand both the cryptographic algorithm requirements and the underlying IMC hardware capabilities to generate optimized code that preserves security properties. Advanced compiler techniques can automatically identify opportunities for leveraging in-memory computing features while ensuring that sensitive operations remain protected from potential side-channel attacks.

Cross-layer optimization techniques further enhance the effectiveness of hardware-software co-design for cryptographic acceleration. By considering multiple abstraction layers simultaneously, from circuit-level implementations to application software, designers can identify and eliminate inefficiencies that would be invisible when examining each layer in isolation. This holistic approach is particularly valuable for cryptographic applications, where performance bottlenecks or security vulnerabilities may emerge from interactions between different system components.

Effective co-design strategies for secure cryptographic IMC begin with comprehensive requirement analysis that considers both the cryptographic algorithm characteristics and the memory architecture constraints. This includes identifying memory access patterns of specific cryptographic operations, determining critical paths, and analyzing potential side-channel vulnerabilities at the hardware-software interface. Such analysis enables designers to make informed decisions about which operations should be implemented in hardware versus software.

Memory-centric cryptographic accelerators benefit significantly from specialized instruction set extensions that directly support in-memory operations. These custom instructions can enable atomic operations on cryptographic primitives while maintaining data within the memory array, thus minimizing data movement and associated security risks. For example, specialized instructions for AES operations can be designed to work directly with in-memory computing arrays, allowing encryption/decryption processes to occur without exposing intermediate values to the system bus.

Runtime reconfigurability represents another important aspect of hardware-software co-design for cryptographic IMC. Adaptive systems can dynamically adjust the hardware configuration based on software-defined security policies or workload characteristics. This approach enables systems to balance performance and security requirements in real-time, allocating appropriate resources to cryptographic operations based on their sensitivity and computational demands.

Security-aware compilers and toolchains form a crucial component in the co-design ecosystem. These tools must understand both the cryptographic algorithm requirements and the underlying IMC hardware capabilities to generate optimized code that preserves security properties. Advanced compiler techniques can automatically identify opportunities for leveraging in-memory computing features while ensuring that sensitive operations remain protected from potential side-channel attacks.

Energy Efficiency Considerations for Secure Computing

Energy efficiency has emerged as a critical consideration in the development of secure cryptographic accelerators using in-memory computing approaches. The implementation of cryptographic operations traditionally demands significant computational resources, resulting in substantial energy consumption. This energy overhead becomes particularly problematic in resource-constrained environments such as IoT devices, mobile platforms, and embedded systems where battery life and thermal management are paramount concerns.

In-memory computing architectures address these challenges by minimizing data movement between processing units and memory, which typically accounts for a substantial portion of energy consumption in conventional computing systems. By performing computations directly within memory arrays, these approaches can significantly reduce the energy cost of data transfers, potentially lowering total energy consumption by a factor of 10 to 100 compared to traditional von Neumann architectures.
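A first-order way to reason about this saving is to split total energy into a compute term and a data-movement term. The sketch below assumes that simple additive model; the function names are hypothetical, and the numbers in the usage example are placeholders in arbitrary units, not measurements from any device.

```python
def total_energy(num_ops, bytes_moved, energy_per_op, energy_per_byte_moved):
    """Additive model: compute energy plus data-movement energy."""
    return num_ops * energy_per_op + bytes_moved * energy_per_byte_moved


def savings_factor(conventional_energy, in_memory_energy):
    """How many times less energy the in-memory design uses."""
    return conventional_energy / in_memory_energy


if __name__ == "__main__":
    # Placeholder values in arbitrary units, chosen only to show the trend.
    conventional = total_energy(
        num_ops=1000, bytes_moved=16000,
        energy_per_op=1.0, energy_per_byte_moved=10.0,
    )
    # Assumption: in-array operations cost more each, but far less data moves.
    in_memory = total_energy(
        num_ops=1000, bytes_moved=160,
        energy_per_op=2.0, energy_per_byte_moved=10.0,
    )
    print(savings_factor(conventional, in_memory))
```

Under this model the saving is large only when movement dominates the conventional design's energy budget; a compute-bound workload would see a much smaller factor.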

Recent research has demonstrated several energy-efficient in-memory computing techniques for cryptographic operations. Resistive RAM (RRAM) and phase-change memory (PCM) based implementations have shown particular promise, with some designs achieving energy consumption as low as 2-5 pJ per bit operation for basic cryptographic functions. These technologies leverage the inherent parallelism of memory arrays to perform multiple operations simultaneously, further enhancing energy efficiency.

Power side-channel attacks represent a significant security concern for cryptographic systems, as they exploit correlations between power consumption patterns and processed data to extract sensitive information. In-memory computing approaches can mitigate these vulnerabilities through more uniform power consumption profiles. Techniques such as balanced logic designs and constant-time operations implemented directly within memory arrays have demonstrated up to 90% reduction in power signature variations, significantly improving resistance against power analysis attacks.
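One common form of balanced logic is dual-rail encoding, where each bit is stored together with its complement. The sketch below uses it as a stand-in, not as the specific technique behind the figures above. It shows that the Hamming weight of an encoded word, a widely used first-order model of data-dependent power, no longer depends on the value. The function names are illustrative.

```python
def dual_rail_encode(value, width=8):
    """Encode each bit b as the pair (b, not b); the result has 2*width bits."""
    encoded = 0
    for i in range(width):
        bit = (value >> i) & 1
        pair = 0b10 if bit else 0b01  # exactly one '1' per pair
        encoded |= pair << (2 * i)
    return encoded


def hamming_weight(x):
    """Number of set bits, a simple proxy for data-dependent power."""
    return bin(x).count("1")


if __name__ == "__main__":
    raw = {hamming_weight(v) for v in range(256)}
    balanced = {hamming_weight(dual_rail_encode(v)) for v in range(256)}
    print(sorted(raw))       # weights vary with the data
    print(sorted(balanced))  # a single weight for every value
```

The encoding doubles storage, and real arrays still leak through effects this model ignores, such as device mismatch and switching transitions.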

Dynamic voltage and frequency scaling (DVFS) techniques, when integrated with in-memory computing architectures, provide additional energy optimization opportunities. Adaptive systems can adjust operational parameters based on security requirements and computational demands, potentially reducing energy consumption by 30-40% during periods of lower security needs while maintaining full protection for critical operations.
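The scale of such savings can be estimated from the standard CMOS relation in which dynamic energy per operation grows with the square of the supply voltage. The sketch below assumes that relation and ignores leakage; the function names and the voltage values are illustrative assumptions, not parameters of a particular design.

```python
def dynamic_energy_ratio(v_scaled, v_nominal):
    """Energy per operation at v_scaled relative to v_nominal (E ~ C * V^2)."""
    if v_scaled <= 0 or v_nominal <= 0:
        raise ValueError("voltages must be positive")
    return (v_scaled / v_nominal) ** 2


def pick_voltage(is_sensitive, v_nominal, v_low):
    """Hypothetical policy: critical operations always run at nominal voltage."""
    return v_nominal if is_sensitive else v_low


if __name__ == "__main__":
    v_nominal, v_low = 1.0, 0.8  # placeholder supply voltages in volts
    for sensitive in (True, False):
        v = pick_voltage(sensitive, v_nominal, v_low)
        saving = 1.0 - dynamic_energy_ratio(v, v_nominal)
        print(sensitive, v, round(saving, 2))
```

With these placeholder voltages, scaling from 1.0 V to 0.8 V lowers dynamic energy per operation by about 36%, which falls within the 30-40% range quoted above.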

The integration of lightweight cryptographic algorithms specifically designed for in-memory computing represents another promising direction. These algorithms optimize for the unique characteristics of memory-centric computation, reducing energy requirements by 20-50% compared to traditional cryptographic implementations while maintaining security guarantees. Examples include modified versions of AES and lightweight block ciphers that leverage bit-parallel operations naturally suited to memory array structures.
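Bit-parallel operation can be illustrated with a bit-sliced layout, where row i of the array holds bit i of every block, so that one row-wide operation processes the same bit position of all blocks at once. The following is a minimal sketch, with Python integers standing in for memory rows; the helper names are hypothetical.

```python
def to_bitslices(words, width):
    """Transpose words into `width` rows; row i holds bit i of every word."""
    slices = [0] * width
    for lane, word in enumerate(words):
        for bit in range(width):
            if (word >> bit) & 1:
                slices[bit] |= 1 << lane
    return slices


def from_bitslices(slices, lanes):
    """Inverse of to_bitslices for `lanes` words."""
    words = [0] * lanes
    for bit, row in enumerate(slices):
        for lane in range(lanes):
            if (row >> lane) & 1:
                words[lane] |= 1 << bit
    return words


def xor_key_bitsliced(slices, key, lanes):
    """XOR the same key into every lane using one row-wide op per key bit."""
    all_lanes = (1 << lanes) - 1
    return [
        row ^ all_lanes if (key >> bit) & 1 else row
        for bit, row in enumerate(slices)
    ]


if __name__ == "__main__":
    blocks = [0x00, 0xFF, 0x3C, 0xA5]  # four 8-bit toy blocks
    rows = to_bitslices(blocks, width=8)
    mixed = from_bitslices(xor_key_bitsliced(rows, key=0x5A, lanes=4), lanes=4)
    print([hex(b) for b in mixed])
```

The number of row operations depends on the block width rather than on the number of blocks, which is why this layout suits wide memory arrays.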
