How to Optimize Chiplet Performance in Edge Computing Environments?

JUL 16, 2025 · 8 MIN READ

Chiplet Tech Evolution

Chiplet technology has undergone significant evolution since its inception, driven by the need for improved performance, power efficiency, and scalability in computing systems. The journey of chiplet technology can be traced back to the early 2010s when the concept of disaggregating large monolithic chips into smaller, more manageable components began to gain traction.

In the initial stages, chiplets were primarily used to address manufacturing challenges associated with large-scale integration. By breaking down complex designs into smaller, more easily manufacturable units, chiplets allowed for improved yield rates and reduced production costs. This approach also enabled the mixing of different process nodes within a single package, allowing for optimized performance and power consumption.
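The yield argument can be made concrete with a rough calculation. The sketch below assumes the simple Poisson yield model, Y = exp(-A * D0), and that chiplets are tested before assembly (known-good die). The defect density and die areas are hypothetical, and the model ignores packaging cost and the extra area taken by die-to-die interfaces.

```python
import math

def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of good dies under the simple Poisson model Y = exp(-A * D0)."""
    return math.exp(-area_mm2 * defects_per_mm2)

def silicon_per_good_unit(total_area_mm2: float, n_chiplets: int,
                          defects_per_mm2: float) -> float:
    """Average silicon area (mm^2) fabricated per working product.

    Assumes each chiplet is tested before assembly, so a defect scraps
    only one chiplet rather than the whole design.
    """
    die_area = total_area_mm2 / n_chiplets
    return total_area_mm2 / poisson_yield(die_area, defects_per_mm2)

D0 = 0.001  # hypothetical defect density: 0.1 defects per cm^2
monolithic = silicon_per_good_unit(600.0, 1, D0)
four_chiplets = silicon_per_good_unit(600.0, 4, D0)
print(f"monolithic: {monolithic:.0f} mm^2, four chiplets: {four_chiplets:.0f} mm^2")
```

Under these assumptions the four-chiplet split needs noticeably less fabricated silicon per working unit, which is the yield benefit described above.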

As the technology matured, the focus shifted towards enhancing system-level performance and flexibility. The introduction of advanced packaging technologies, such as 2.5D and 3D integration, played a crucial role in this evolution. These packaging techniques allowed for high-bandwidth, low-latency interconnects between chiplets, effectively mitigating the performance penalties associated with chip disaggregation.

The next phase of chiplet evolution saw the development of standardized interfaces and protocols. Initiatives like the Advanced Interface Bus (AIB) and Universal Chiplet Interconnect Express (UCIe) emerged, aiming to create a common language for chiplet-to-chiplet communication. These standards have been instrumental in fostering interoperability and promoting a more open ecosystem for chiplet-based designs.

In recent years, the focus has shifted towards optimizing chiplet performance for specific application domains, including edge computing. This has led to the development of specialized chiplets tailored for tasks such as AI inference, signal processing, and low-power operation. The integration of heterogeneous chiplets, each optimized for specific functions, has become a key strategy for achieving high performance and energy efficiency in edge computing environments.

The latest advancements in chiplet technology have centered around improving thermal management, reducing power consumption, and enhancing system-level integration. Innovations in cooling solutions, power delivery networks, and die-to-die interconnects have been crucial in pushing the boundaries of chiplet performance, particularly in constrained edge computing scenarios.

Looking ahead, the evolution of chiplet technology is expected to continue with a focus on even finer-grained disaggregation, improved energy efficiency, and enhanced integration capabilities. Emerging technologies such as photonic interconnects and advanced materials are poised to play a significant role in shaping the future of chiplet-based systems, particularly in addressing the unique challenges posed by edge computing environments.

Edge Computing Demand

Edge computing has emerged as a critical technology in the era of the Internet of Things (IoT) and 5G networks, driving significant demand for high-performance, low-latency computing at the network edge. This demand is fueled primarily by the rapid growth in connected devices and the increasing need to process and analyze data in real time, close to where it is generated.

The proliferation of IoT devices across various sectors, including industrial automation, smart cities, autonomous vehicles, and healthcare, has created a substantial market for edge computing solutions. These applications require rapid data processing and decision-making capabilities to ensure optimal performance and user experience. For instance, in autonomous vehicles, edge computing enables real-time sensor data analysis and decision-making, crucial for safe navigation and collision avoidance.

The global edge computing market is experiencing rapid growth, with projections indicating a compound annual growth rate (CAGR) of over 30% in the coming years. This growth is driven by the increasing adoption of IoT devices, the rollout of 5G networks, and the rising demand for low-latency applications. Industries such as manufacturing, healthcare, and retail are increasingly leveraging edge computing to improve operational efficiency, enhance customer experiences, and enable new business models.

The demand for edge computing is also being propelled by the limitations of cloud computing in certain scenarios. While cloud computing offers scalable and centralized processing power, it often falls short in applications requiring real-time processing or those operating in environments with limited connectivity. Edge computing addresses these challenges by bringing computation and data storage closer to the point of need, reducing latency and bandwidth usage.

As the demand for edge computing grows, there is an increasing need for more powerful and efficient computing solutions at the edge. This is where chiplet technology comes into play, offering a promising approach to optimize performance in edge computing environments. Chiplets allow for the integration of multiple specialized processing units on a single package, enabling customized and scalable solutions that can meet the diverse requirements of edge computing applications.

The adoption of chiplet technology in edge computing is driven by the need for higher performance, improved energy efficiency, and greater flexibility in system design. By leveraging chiplets, manufacturers can create edge computing devices that are tailored to specific application requirements, combining high-performance processing units with specialized accelerators for tasks such as AI inference, signal processing, or network packet processing.

Chiplet Challenges

Chiplets face several significant challenges in edge computing environments, primarily due to the unique requirements and constraints of these distributed systems. One of the main obstacles is power efficiency. Edge devices often operate on limited power sources, such as batteries or energy harvesting systems, necessitating chiplets that can deliver high performance while maintaining low power consumption. This balance is crucial for extending device longevity and reducing operational costs in large-scale deployments.

Thermal management presents another critical challenge. Edge devices frequently operate in diverse and sometimes harsh environments, lacking the controlled cooling systems found in data centers. Chiplets must be designed to dissipate heat effectively without relying on extensive cooling infrastructure, which can be particularly challenging when integrating high-performance components in compact form factors.

Interconnect bandwidth and latency between chiplets become more pronounced issues in edge computing scenarios. The distributed nature of edge networks demands rapid data processing and communication between chiplets, yet traditional interconnect technologies may introduce bottlenecks that hinder overall system performance. Optimizing these inter-chiplet connections is essential for maintaining low-latency responses in time-sensitive edge applications.
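To see how link latency and bandwidth each contribute, a first-order model treats a transfer as a fixed link latency plus serialization time. The function name and the numbers below are illustrative assumptions, not measurements of any particular interconnect.

```python
def transfer_time_us(payload_bytes: int, bandwidth_gbps: float,
                     link_latency_ns: float) -> float:
    """Time to move a payload across one die-to-die link, in microseconds."""
    # 1 Gbit/s equals 1 bit per nanosecond, so bits / Gbps gives nanoseconds.
    serialization_ns = payload_bytes * 8 / bandwidth_gbps
    return (link_latency_ns + serialization_ns) / 1000.0

# Hypothetical link: 256 Gbit/s with 10 ns of fixed latency.
cache_line = transfer_time_us(64, 256.0, 10.0)          # latency dominated
tensor_tile = transfer_time_us(1024 * 1024, 256.0, 10.0)  # bandwidth dominated
print(f"64 B: {cache_line:.3f} us, 1 MiB: {tensor_tile:.3f} us")
```

Small transfers are dominated by the fixed latency, while large transfers are dominated by bandwidth, so the right optimization depends on the traffic pattern of the edge workload.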

Security and reliability pose additional concerns for chiplet implementation in edge environments. Edge devices are often more vulnerable to physical tampering and cyber attacks due to their distributed locations. Ensuring robust security measures at the chiplet level, including hardware-based encryption and secure boot processes, is crucial for protecting sensitive data and maintaining system integrity.

Manufacturing and integration complexities also present challenges. The diverse requirements of edge computing applications may necessitate customized chiplet configurations, leading to increased design and production costs. Ensuring compatibility and seamless integration between chiplets from different vendors or with varying process nodes adds another layer of complexity to the manufacturing process.

Lastly, standardization of chiplet interfaces and protocols remains a significant hurdle. Although specifications such as AIB and UCIe exist, they are not yet universally adopted, and the resulting gaps can impede interoperability and limit the flexibility of chiplet-based designs in edge computing systems. Broad, industry-wide adoption of common standards for chiplet integration would greatly facilitate the use and optimization of this technology in edge environments.

Current Optimization

01 Chiplet interconnect optimization

Improving chiplet performance through advanced interconnect technologies, including high-bandwidth interfaces and novel packaging solutions. This approach enhances data transfer rates between chiplets, reducing latency and improving overall system efficiency.

- Chiplet interconnection and communication: Improving chiplet performance through advanced interconnection technologies and communication protocols. This includes high-bandwidth interfaces, low-latency connections, and efficient data transfer mechanisms between chiplets. These advancements enable better integration and coordination of multiple chiplets, leading to enhanced overall system performance.

- Power management and thermal optimization: Implementing sophisticated power management techniques and thermal optimization strategies for chiplets. This involves dynamic voltage and frequency scaling, intelligent power gating, and advanced cooling solutions. These approaches help maintain optimal performance while managing power consumption and heat dissipation in multi-chiplet designs.

- Chiplet architecture and design optimization: Developing innovative chiplet architectures and design methodologies to enhance performance. This includes optimizing chiplet floor plans, implementing efficient cache hierarchies, and utilizing advanced packaging technologies. These design strategies aim to maximize the performance potential of individual chiplets and their integration into larger systems.

- AI and machine learning acceleration in chiplets: Incorporating specialized AI and machine learning accelerators within chiplet designs. This approach involves integrating dedicated neural processing units, tensor cores, or other AI-optimized hardware into chiplets. These accelerators enhance the performance of AI workloads and enable more efficient processing of machine learning algorithms in chiplet-based systems.

- Heterogeneous integration and chiplet specialization: Leveraging heterogeneous integration techniques to combine chiplets with different functionalities and process technologies. This approach allows for the optimization of specific chiplets for particular tasks, such as memory, processing, or I/O operations. By integrating specialized chiplets, overall system performance can be significantly improved while maintaining flexibility in design and manufacturing.

02 Power management in chiplet designs

Implementing sophisticated power management techniques for chiplets, such as dynamic voltage and frequency scaling, and power gating. These methods optimize energy consumption while maintaining or improving performance, crucial for multi-chiplet systems.

03 Thermal management for chiplet performance

Developing advanced thermal management solutions specifically for chiplet architectures. This includes innovative cooling techniques and thermal-aware designs to maintain optimal operating temperatures, ensuring sustained high performance and reliability.

04 Chiplet-based AI and machine learning acceleration

Designing specialized chiplets for AI and machine learning workloads, incorporating dedicated processing units and optimized data flow architectures. This approach enhances performance in AI applications while maintaining flexibility in system design.

05 Heterogeneous integration in chiplet systems

Leveraging heterogeneous integration techniques to combine chiplets with different process nodes or functionalities. This strategy allows for optimized performance by utilizing the best-suited technology for each component within a single package.

Key Chiplet Players

The chiplet optimization landscape in edge computing is evolving rapidly, with the market in its growth phase and expected to expand significantly. The technology's maturity varies across companies, with industry leaders like Intel, IBM, and Qualcomm at the forefront. These firms are leveraging their expertise in semiconductor design and edge computing to develop advanced chiplet solutions. Emerging players such as Graphcore and Black Sesame Technologies are also making strides, focusing on AI-specific chiplet designs for edge applications. The competitive landscape is characterized by a mix of established tech giants and innovative startups, each striving to address the unique challenges of optimizing chiplet performance in resource-constrained edge environments.

Intel Corp.

Technical Solution: Intel's approach to optimizing chiplet performance in edge computing environments focuses on their Foveros 3D packaging technology and Embedded Multi-die Interconnect Bridge (EMIB). These technologies allow for the integration of multiple chiplets in a single package, optimizing performance and power efficiency. Intel's Ponte Vecchio GPU, designed for high-performance computing and AI applications, utilizes over 40 chiplets manufactured on different process nodes[1]. For edge computing, Intel has developed the Loihi 2 neuromorphic chip, which uses a 3D stacked architecture to improve energy efficiency and reduce latency in AI workloads at the edge[2]. Additionally, Intel's Agilex FPGA family incorporates chiplet-based design to provide customizable solutions for edge computing applications, offering up to 40 TFLOPS of single-precision floating-point performance[3].

Strengths: Advanced packaging technologies, diverse product portfolio for edge computing, and strong integration capabilities. Weaknesses: Higher power consumption compared to some ARM-based solutions, and potential complexity in system design.

QUALCOMM, Inc.

Technical Solution: Qualcomm's strategy for optimizing chiplet performance in edge computing environments centers around their Snapdragon platform and heterogeneous computing approach. Their chiplet-based designs integrate custom-designed CPU cores, GPUs, and AI accelerators on a single chip. The Snapdragon 8cx Gen 3 compute platform, targeted at edge devices, utilizes a 5nm process and delivers up to 85% faster CPU performance and 60% faster GPU performance compared to previous generations[4]. Qualcomm's AI Engine, integrated into their chiplets, provides up to 15 TOPS of AI performance for edge computing tasks[5]. Furthermore, Qualcomm has introduced the Cloud AI 100 accelerator, which uses chiplet technology to deliver up to 400 TOPS of AI performance while consuming only 75 watts, making it suitable for edge deployments[6].

Strengths: High energy efficiency, strong AI performance, and extensive experience in mobile and edge computing. Weaknesses: Less presence in high-performance computing segments compared to some competitors.

Core Chiplet Innovations

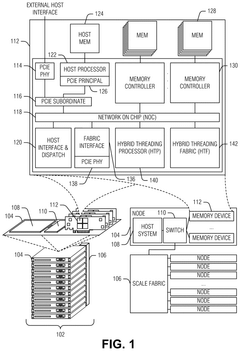

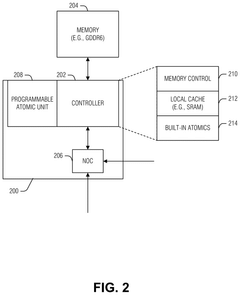

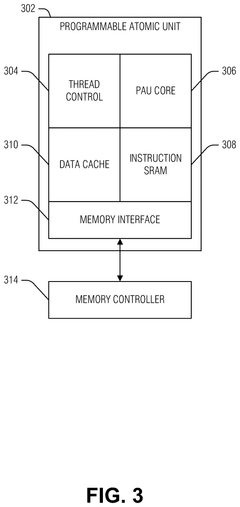

Parking threads in barrel processor for managing hazard clearing

Patent Pending: US20240419534A1

Innovation

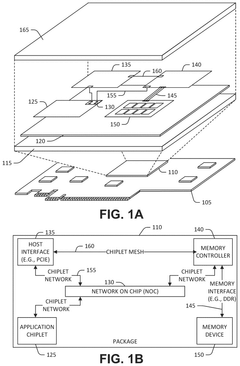

- The implementation of a compute-near-memory (CNM) system with hybrid threading processors and fabrics that allow on-demand thread rescheduling and efficient data access, utilizing chiplet-based architectures for scalable and high-bandwidth memory access, and custom compute fabrics to enhance performance and reduce latency.

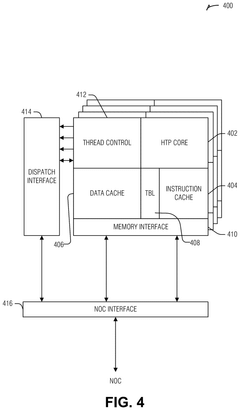

Edge interface placements to enable chiplet rotation into multi-chiplet cluster

Patent Pending: US20240332257A1

Innovation

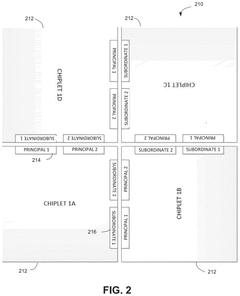

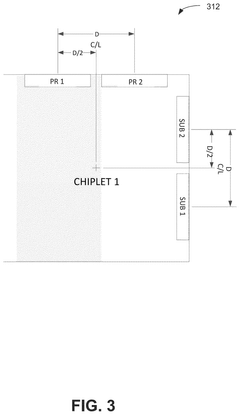

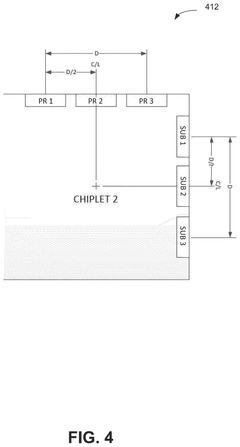

- The implementation of a chiplet architecture that uses a network-on-chip (NOC) with a chiplet protocol interface (CPI) to facilitate high-speed, flexible inter-chiplet communication, combined with a tightly packed matrix arrangement of chiplets and I/O micro-bumps for close-coupled interconnects, allowing for proper alignment and minimal footprint.

Thermal Management

Thermal management is a critical aspect of optimizing chiplet performance in edge computing environments. As edge devices become more powerful and compact, the challenge of dissipating heat effectively becomes increasingly significant. In chiplet-based architectures, where multiple dies are integrated into a single package, thermal considerations are paramount to ensure optimal performance and reliability.

One of the primary thermal management strategies for chiplets in edge computing is the implementation of advanced cooling solutions. These may include passive cooling techniques such as heat spreaders and heat sinks, as well as active cooling methods like forced air or liquid cooling systems. The choice of cooling solution depends on the specific requirements of the edge device, including its form factor, power consumption, and environmental conditions.

Another key approach to thermal management is the strategic placement of chiplets within the package. By carefully arranging the chiplets and considering their thermal characteristics, designers can optimize heat distribution and minimize hotspots. This may involve placing high-power chiplets closer to cooling interfaces or separating them to prevent thermal coupling.

Power management techniques also play a crucial role in thermal control. Dynamic voltage and frequency scaling (DVFS) can be employed to adjust the performance and power consumption of individual chiplets based on workload and thermal conditions. This allows for fine-grained control over heat generation, ensuring that the system operates within its thermal envelope while maximizing performance when possible.
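As a minimal sketch of temperature-driven DVFS, the governor below steps one chiplet down an operating-point table when it gets too hot and back up once it has cooled. The table, thresholds, and class name are hypothetical. A real governor would also weigh workload demand, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical operating points (frequency in MHz, core voltage in V), slowest first.
OPERATING_POINTS = [(600, 0.65), (1000, 0.75), (1400, 0.85), (1800, 0.95)]

@dataclass
class ThermalGovernor:
    throttle_c: float = 85.0  # step down at or above this temperature
    recover_c: float = 75.0   # step up only once below this temperature
    level: int = len(OPERATING_POINTS) - 1  # start at the fastest point

    def update(self, temp_c: float) -> Tuple[int, float]:
        """Return the (MHz, V) operating point to use for the next interval."""
        if temp_c >= self.throttle_c and self.level > 0:
            self.level -= 1
        elif temp_c < self.recover_c and self.level < len(OPERATING_POINTS) - 1:
            self.level += 1
        # Between recover_c and throttle_c the level is held (hysteresis).
        return OPERATING_POINTS[self.level]

governor = ThermalGovernor()
for reading in (90.0, 80.0, 70.0):
    print(reading, governor.update(reading))
```

The gap between the two thresholds is a deliberate design choice: it keeps the chiplet from oscillating between operating points when the temperature hovers near the limit.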

Thermal-aware task scheduling is another important consideration in edge computing environments. By intelligently distributing computational tasks across chiplets, taking into account their thermal profiles and current temperatures, the system can balance the workload to prevent localized overheating and maintain overall thermal stability.
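One simple way to express this idea is a greedy heuristic: place the most heat-intensive task first, always on the chiplet that is currently coolest. The sketch below assumes each task adds a fixed, known temperature rise, which is a strong simplification of real thermal behavior. All names and values are hypothetical.

```python
def assign_tasks(task_heat_c, chiplet_temps_c):
    """Greedy assignment: hottest task first, always onto the coolest chiplet.

    task_heat_c: estimated temperature rise (deg C) each task adds.
    chiplet_temps_c: current temperature of each chiplet.
    Returns (assignment, projected_temps); assignment[i] is the chiplet
    index chosen for task i.
    """
    temps = list(chiplet_temps_c)
    assignment = [None] * len(task_heat_c)
    order = sorted(range(len(task_heat_c)),
                   key=lambda i: task_heat_c[i], reverse=True)
    for i in order:
        coolest = min(range(len(temps)), key=temps.__getitem__)
        assignment[i] = coolest
        temps[coolest] += task_heat_c[i]
    return assignment, temps

assignment, projected = assign_tasks([8, 3, 5], [60, 50, 55])
print(assignment, projected)  # [1, 1, 2] [60, 61, 60]
```

In this example the three chiplets end up within one degree of each other, whereas placing every task on the initially coolest chiplet would have created a hotspot.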

Advanced packaging technologies, such as through-silicon vias (TSVs) and interposers, can also contribute to improved thermal management. These technologies enable more efficient heat dissipation paths and can incorporate thermal management features directly into the package design.

Monitoring and adaptive control systems are essential for effective thermal management in chiplet-based edge devices. Real-time temperature sensing and feedback mechanisms allow for dynamic adjustments to cooling systems, power states, and workload distribution, ensuring optimal performance while maintaining safe operating temperatures.

Power Efficiency

Power efficiency is a critical factor in optimizing chiplet performance for edge computing environments. As edge devices often operate on limited power resources, maximizing computational output while minimizing energy consumption is paramount. Chiplet-based designs offer significant advantages in this regard, allowing for more efficient power distribution and management across multiple smaller dies.

One key strategy for enhancing power efficiency in chiplet-based edge computing systems is the implementation of advanced power gating techniques. By selectively powering down inactive chiplets or portions of chiplets, overall power consumption can be substantially reduced without compromising performance when needed. This approach is particularly effective in edge computing scenarios where workloads may vary significantly over time.
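Power gating is not free, because switching a chiplet off and on again costs energy. A common rule of thumb is to gate only when the expected idle period exceeds the break-even time. The sketch below assumes leakage drops to zero while gated, and all names and figures are hypothetical.

```python
def break_even_idle_ms(leakage_mw: float, transition_energy_uj: float) -> float:
    """Idle time beyond which gating saves energy: E_transition / P_leakage."""
    # Microjoules divided by milliwatts gives milliseconds.
    return transition_energy_uj / leakage_mw

def should_gate(expected_idle_ms: float, leakage_mw: float,
                transition_energy_uj: float) -> bool:
    """Gate only if the leakage saved outweighs the power-down/wake-up cost."""
    return expected_idle_ms > break_even_idle_ms(leakage_mw, transition_energy_uj)

# Hypothetical chiplet: 50 mW leakage, 200 uJ to power down and wake up.
print(break_even_idle_ms(50.0, 200.0))  # 4.0
print(should_gate(10.0, 50.0, 200.0))   # True
print(should_gate(1.0, 50.0, 200.0))    # False
```

For bursty edge workloads, this means short gaps between requests are better served by a lighter idle state, while long quiet periods justify full gating.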

Dynamic voltage and frequency scaling (DVFS) is another crucial technique for optimizing power efficiency in chiplet designs. By dynamically adjusting the voltage and clock frequency of individual chiplets based on workload demands, power consumption can be fine-tuned to match computational requirements. This granular control allows for a more efficient balance between performance and power usage, especially in the diverse and often unpredictable workloads typical of edge computing environments.
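The leverage DVFS provides follows from the standard first-order expression for dynamic CMOS power, P = a * C * V^2 * f, where a is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below evaluates it for hypothetical values and ignores leakage power.

```python
def dynamic_power_w(switched_capacitance_nf: float, voltage_v: float,
                    freq_mhz: float, activity: float = 1.0) -> float:
    """Dynamic CMOS power P = a * C * V^2 * f, returned in watts."""
    capacitance_f = switched_capacitance_nf * 1e-9
    freq_hz = freq_mhz * 1e6
    return activity * capacitance_f * voltage_v ** 2 * freq_hz

# Hypothetical chiplet with 1 nF of effective switched capacitance.
nominal = dynamic_power_w(1.0, 1.0, 1000.0)  # 1.0 V at 1000 MHz
scaled = dynamic_power_w(1.0, 0.8, 800.0)    # 0.8 V at 800 MHz
print(f"nominal: {nominal:.3f} W, scaled: {scaled:.3f} W")
```

Because voltage enters squared, lowering voltage and frequency together by 20% cuts dynamic power by roughly half in this model, at the cost of 20% of peak throughput.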

Thermal management plays a vital role in maintaining power efficiency for chiplet-based edge devices. Efficient heat dissipation not only prevents performance degradation but also reduces the need for power-hungry cooling solutions. Advanced packaging technologies, such as 2.5D and 3D integration, can improve thermal characteristics when the layout spreads heat across the chiplet array, although stacking dies in 3D also raises power density and calls for careful thermal design. Additionally, incorporating on-die temperature sensors and intelligent thermal management algorithms can help maintain optimal operating conditions while minimizing power consumption.

Inter-chiplet communication is another area where power efficiency can be significantly improved. By optimizing the design of high-bandwidth, low-power interconnects between chiplets, data transfer energy costs can be reduced. Technologies such as silicon interposers and advanced through-silicon vias (TSVs) enable more efficient communication pathways, minimizing power loss during data exchange between chiplets.
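Interconnect efficiency is often quoted as energy per bit, which makes the power cost of a link easy to estimate: sustained bandwidth multiplied by energy per bit. The figures below are hypothetical and only illustrate how the two quantities combine.

```python
def link_power_mw(bandwidth_gbps: float, energy_pj_per_bit: float) -> float:
    """Average link power in milliwatts: (Gbit/s) * (pJ/bit) = mW."""
    return bandwidth_gbps * energy_pj_per_bit

# Hypothetical comparison at a sustained 512 Gbit/s.
short_reach = link_power_mw(512.0, 0.5)  # in-package die-to-die link
long_reach = link_power_mw(512.0, 5.0)   # link that leaves the package
print(short_reach, long_reach)  # 256.0 2560.0
```

With these assumed numbers, a tenfold difference in energy per bit turns into a difference of more than 2 W, which is significant for a battery-powered edge device.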

Lastly, the integration of specialized accelerators as dedicated chiplets can greatly enhance power efficiency for specific edge computing tasks. By offloading computationally intensive operations to purpose-built chiplets optimized for energy efficiency, overall system power consumption can be reduced while maintaining or even improving performance for key workloads.
