Data-integrity frameworks for QC systems: audit trails, LIMS and sample metadata standards

SEP 2, 2025 | 9 MIN READ

QC Data Integrity Evolution and Objectives

Quality control (QC) data integrity frameworks have evolved significantly over the past decades, transitioning from paper-based documentation systems to sophisticated digital solutions. The journey began in the 1970s with manual record-keeping, where data integrity relied heavily on human diligence and physical security measures. The 1980s saw the emergence of early computerized systems, though these lacked robust audit capabilities and standardized approaches to data management.

The 1990s marked a pivotal shift as Laboratory Information Management Systems (LIMS) matured into mainstream laboratory platforms, establishing foundational concepts for electronic data integrity. However, these systems often operated in isolation with limited interoperability. Regulatory focus then intensified: the FDA issued 21 CFR Part 11 in 1997, setting controls for electronic records and electronic signatures in FDA-regulated industries such as pharmaceuticals and biotechnology, and clarified its scope and application in 2003 guidance.

By the 2010s, integrated QC data integrity frameworks routinely incorporated comprehensive audit trails capturing who performed an action, what was changed, when it occurred, and why. This period also saw the development of more sophisticated LIMS platforms with enhanced security features, automated workflows, and improved data validation capabilities.

The current technological landscape features cloud-based LIMS solutions, real-time monitoring capabilities, and advanced analytics for detecting data anomalies. Modern frameworks emphasize the ALCOA+ principles: Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available.

Looking forward, the primary objectives for QC data integrity frameworks center on several key areas. First is achieving seamless integration across diverse laboratory systems and equipment, enabling comprehensive data flow while maintaining integrity throughout the entire analytical lifecycle. Second is the standardization of sample metadata to ensure consistency and comparability across different laboratories and methodologies.

Another critical objective is enhancing security measures to protect against increasingly sophisticated cyber threats while maintaining system accessibility. The industry also aims to develop more intelligent audit trail systems capable of risk-based monitoring and anomaly detection through artificial intelligence and machine learning algorithms.

Finally, there is a growing focus on creating adaptive frameworks that can evolve with changing regulatory requirements while supporting innovation. These frameworks must balance compliance needs with operational efficiency, ensuring that data integrity measures enhance rather than hinder scientific progress and quality control processes.

Market Demand Analysis for Robust QC Data Systems

The demand for robust quality control (QC) data systems has experienced significant growth across multiple industries, particularly in pharmaceuticals, biotechnology, food safety, and environmental monitoring. This surge is primarily driven by increasing regulatory requirements, the need for operational efficiency, and the growing complexity of product development processes.

Regulatory bodies worldwide, including the FDA, EMA, and WHO, have intensified their focus on data integrity in quality control processes. The FDA's 2018 data integrity guidance frames its expectations around ALCOA (Attributable, Legible, Contemporaneous, Original, Accurate), and the widely used ALCOA+ extension adds Complete, Consistent, Enduring, and Available; together these expectations create substantial market pressure for compliant systems. Organizations are increasingly seeking comprehensive solutions that can demonstrate adherence to these principles through robust audit trails and metadata management.

The global laboratory information management system (LIMS) market, a critical component of QC data integrity frameworks, was valued at approximately $1.7 billion in 2021 and is projected to reach $3.2 billion by 2028, growing at a CAGR of 9.2%. This growth reflects the urgent need for systems that can ensure data integrity while streamlining laboratory operations.
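The three market figures in this paragraph are linked by the compound growth formula, end = start × (1 + r)^years. The short Python check below uses only the stated values (2021 to 2028 is seven years); the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in USD billions; 2021 -> 2028 spans 7 years.
implied_rate = cagr(1.7, 3.2, 7)
projected_2028 = project(1.7, 0.092, 7)
print(f"Implied CAGR from the endpoints: {implied_rate:.1%}")
print(f"2028 value at a 9.2% CAGR: ${projected_2028:.2f}B")
```

With the rounded endpoints the implied rate comes out near 9.5%, and compounding $1.7 billion at 9.2% for seven years gives about $3.15 billion, so the quoted numbers agree only to within the rounding of the reported values.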

Healthcare and pharmaceutical sectors represent the largest market segments, accounting for over 35% of the total market share. These industries face particularly stringent regulatory requirements and complex data management challenges due to the critical nature of their products and the potential impact on public health.

Market research indicates that over 70% of quality professionals cite data integrity as their top concern in quality management systems. Organizations are increasingly seeking integrated solutions that combine audit trail capabilities, LIMS functionality, and standardized metadata management rather than disparate systems that create data silos and integrity risks.

The COVID-19 pandemic has accelerated market demand, as remote work requirements highlighted vulnerabilities in paper-based or partially digitized QC systems. This has created a significant market opportunity for cloud-based solutions that enable secure, remote access to quality data while maintaining compliance with regulatory standards.

Geographically, North America leads the market with approximately 40% share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is experiencing the fastest growth rate as manufacturing capabilities expand and regulatory frameworks mature in countries like China and India.

Customer requirements are evolving beyond basic compliance to include advanced analytics capabilities, integration with other enterprise systems, and support for emerging technologies like artificial intelligence and machine learning for predictive quality management.

Current Challenges in QC Data Integrity Frameworks

Quality control systems face significant data integrity challenges in today's complex regulatory environment. Regulatory bodies including FDA, EMA, and MHRA have intensified their focus on data integrity in pharmaceutical and life sciences sectors, resulting in increased warning letters and observations related to data management deficiencies. These regulatory pressures demand more robust frameworks for ensuring data reliability throughout the entire quality control process.

The integration of disparate systems presents a major challenge for QC data integrity. Many organizations operate with a patchwork of legacy systems, modern LIMS platforms, and specialized analytical instruments, creating data silos that complicate comprehensive audit trail maintenance. These integration gaps often result in manual data transfers that introduce error risks and compromise data integrity.

Audit trail functionality across QC systems frequently lacks standardization and completeness. While modern LIMS platforms typically offer robust audit capabilities, many organizations struggle with implementing consistent audit trail practices across their entire QC ecosystem. Particular challenges include capturing metadata changes, documenting review processes, and maintaining the context of data modifications throughout the sample lifecycle.
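Keeping the context of a change across the sample lifecycle means a reviewer must be able to replay every change to a field in order and see which changes still await review. The Python sketch below assumes a hypothetical `AuditEntry` record holding old and new values, user, timestamp, reason, and an optional reviewer; it is an illustration of the pattern, not any particular LIMS schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class AuditEntry:
    """Hypothetical audit record for one change to one field of one sample."""
    sample_id: str
    field_name: str
    old_value: Optional[str]
    new_value: str
    user: str
    timestamp: datetime
    reason: str
    reviewed_by: Optional[str] = None  # filled in once a second person reviews it


def field_history(entries: List[AuditEntry], sample_id: str, field_name: str) -> List[AuditEntry]:
    """All changes to one field of one sample, oldest first."""
    matches = [e for e in entries if e.sample_id == sample_id and e.field_name == field_name]
    return sorted(matches, key=lambda e: e.timestamp)


def unreviewed(entries: List[AuditEntry]) -> List[AuditEntry]:
    """Changes that still have no documented review."""
    return [e for e in entries if e.reviewed_by is None]


# Entries are deliberately out of order to show that the history is sorted by time.
log = [
    AuditEntry("S-001", "storage_temp_C", "4", "-20", "bob",
               datetime(2025, 1, 6, 14, 30), "transcription error corrected"),
    AuditEntry("S-001", "storage_temp_C", None, "4", "alice",
               datetime(2025, 1, 6, 9, 0), "initial entry", reviewed_by="carol"),
]

for e in field_history(log, "S-001", "storage_temp_C"):
    print(e.timestamp, e.user, e.old_value, "->", e.new_value, f"({e.reason})")
print("Awaiting review:", [e.user for e in unreviewed(log)])
```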

Data security vulnerabilities persist despite technological advancements. Shared login credentials, insufficient access controls, and inadequate user permission management create opportunities for unauthorized data manipulation. The balance between security requirements and operational efficiency remains difficult to achieve, especially in high-throughput QC environments where multiple analysts may need simultaneous system access.
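Shared credentials defeat attribution, so the usual control is one account per person with permissions granted through roles. The Python sketch below is a minimal in-memory illustration; the role names, the permission set, the rule that administrators hold no result-editing rights, and the self-approval check are all assumptions chosen for the example. A real LIMS would enforce such checks server-side against authenticated identities.

```python
from enum import Enum, auto


class Permission(Enum):
    ENTER_RESULT = auto()
    MODIFY_RESULT = auto()
    APPROVE_RESULT = auto()
    CONFIGURE_SYSTEM = auto()


# Hypothetical roles; administrators deliberately get no rights over result data.
ROLE_PERMISSIONS = {
    "analyst": {Permission.ENTER_RESULT, Permission.MODIFY_RESULT},
    "reviewer": {Permission.APPROVE_RESULT},
    "administrator": {Permission.CONFIGURE_SYSTEM},
}

# Every account belongs to one named person; there is no shared "lab" login.
USER_ROLES = {
    "alice": {"analyst"},
    "carol": {"reviewer"},
    "dave": {"administrator"},
}


def is_allowed(user: str, permission: Permission) -> bool:
    """True only if one of the user's roles grants the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def can_approve(user: str, result_entered_by: str) -> bool:
    """Assumed segregation-of-duties rule: nobody approves a result they entered."""
    return user != result_entered_by and is_allowed(user, Permission.APPROVE_RESULT)


print(is_allowed("alice", Permission.MODIFY_RESULT), can_approve("carol", "alice"))
```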

Metadata standardization represents another significant challenge. The lack of consistent metadata standards across instruments, systems, and organizational units leads to interpretation difficulties and compromises data searchability and traceability. This standardization gap becomes particularly problematic during technology transfers, mergers, or when working with contract testing organizations.
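A common way to close this gap is to map each source's local field names onto one canonical schema and normalize free-text values against a controlled vocabulary. The field names, vocabulary, and required-field set in this Python sketch are hypothetical; the point is the pattern, which rejects a record that lacks required metadata or uses an unrecognized term instead of passing it through silently.

```python
# Hypothetical mapping of each instrument's local field names onto one canonical schema.
FIELD_MAP = {
    "hplc_vendor_a": {"SampleName": "sample_id", "InjVol_uL": "injection_volume_ul",
                      "Matrix": "matrix"},
    "hplc_vendor_b": {"smp_id": "sample_id", "inj_vol": "injection_volume_ul",
                      "sample_type": "matrix"},
}

# Hypothetical controlled vocabulary: accepted local spellings -> preferred term.
MATRIX_TERMS = {
    "plasma": "plasma", "blood plasma": "plasma",
    "serum": "serum",
    "drug product": "drug_product", "dp": "drug_product",
}

REQUIRED_FIELDS = {"sample_id", "injection_volume_ul", "matrix"}


def to_canonical(source: str, record: dict) -> dict:
    """Translate one source record into the canonical schema or raise ValueError."""
    mapping = FIELD_MAP[source]
    # Fields without a mapping are ignored here; a stricter version could reject them.
    out = {mapping[key]: value for key, value in record.items() if key in mapping}
    missing = REQUIRED_FIELDS - set(out)
    if missing:
        raise ValueError(f"{source}: missing required fields {sorted(missing)}")
    term = str(out["matrix"]).strip().lower()
    if term not in MATRIX_TERMS:
        raise ValueError(f"{source}: {out['matrix']!r} is not in the controlled vocabulary")
    out["matrix"] = MATRIX_TERMS[term]
    out["injection_volume_ul"] = float(out["injection_volume_ul"])  # one numeric type
    return out


a = to_canonical("hplc_vendor_a",
                 {"SampleName": "S-001", "InjVol_uL": "10", "Matrix": "Blood Plasma"})
b = to_canonical("hplc_vendor_b",
                 {"smp_id": "S-001", "inj_vol": 10, "sample_type": "plasma"})
print(a == b)
```

Two differently labelled records for the same sample end up identical, which is what makes them searchable and comparable across instruments or partner laboratories.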

The human factor continues to challenge data integrity frameworks. Staff training on data integrity principles often fails to address the specific operational contexts of QC systems. Additionally, the complexity of modern LIMS and analytical systems can overwhelm users, leading to workarounds that undermine data integrity controls.

Validation and change management processes for QC systems frequently struggle to keep pace with software updates and regulatory expectations. Many organizations find themselves operating partially validated systems or delaying critical updates due to the resource-intensive nature of system validation, creating potential compliance gaps and security vulnerabilities.

Existing Data Integrity Solutions for QC Systems

01 Blockchain-based data integrity frameworks

Blockchain technology is used to build data integrity frameworks that make records tamper-evident and transparent. These frameworks use distributed ledger technology to link records cryptographically and replicate them across multiple nodes, so any node can verify that data has not been altered. Because no single party controls the ledger, unauthorized modifications are difficult to make without detection, which makes the approach particularly valuable for applications requiring high levels of data assurance and auditability.
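The tamper-evidence that blockchain-based frameworks rely on comes from chaining each record to the hash of the record before it. The Python sketch below shows only that linkage within a single process; it has no replication or consensus, so it illustrates the idea rather than a distributed ledger. The record layout and function names are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Add a record linked to the hash of the current last record."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": record_hash(prev, payload)})


def verify(chain: list) -> int:
    """Return the index of the first broken link, or -1 if the chain is intact."""
    prev = GENESIS
    for i, block in enumerate(chain):
        if block["prev"] != prev or block["hash"] != record_hash(prev, block["payload"]):
            return i
        prev = block["hash"]
    return -1


chain = []
append(chain, {"sample": "S-001", "result": 98.7, "user": "alice"})
append(chain, {"sample": "S-002", "result": 101.2, "user": "alice"})
print(verify(chain))                  # -1: intact
chain[0]["payload"]["result"] = 99.9  # silent edit of an earlier record
print(verify(chain))                  # 0: the edited record no longer matches its hash
```

Because each hash covers the previous one, editing or deleting any earlier record breaks the chain from that point on. Someone able to rewrite the entire chain could recompute every hash, which is why production ledgers replicate the chain across nodes or anchor the latest hash somewhere outside that person's control.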

02 Error detection and correction mechanisms

Data integrity frameworks incorporate various error detection and correction mechanisms to identify and rectify data corruption. These mechanisms include checksums, cyclic redundancy checks (CRCs), and parity bits that can detect when data has been altered during storage or transmission. Advanced frameworks implement forward error correction techniques that not only detect errors but also automatically correct them, ensuring data remains intact throughout its lifecycle.

03 Cryptographic verification systems

Cryptographic techniques form the foundation of many data integrity frameworks, providing mechanisms to verify that data has not been tampered with. These systems utilize digital signatures, hash functions, and encryption algorithms to create verifiable proofs of data integrity. By generating unique cryptographic identifiers for data sets, any unauthorized modifications can be readily detected, ensuring the authenticity and integrity of critical information across storage and transmission processes.

04 Database integrity and transaction management

Frameworks for ensuring data integrity in database systems focus on maintaining consistency during transactions and preventing corruption. These frameworks implement ACID (Atomicity, Consistency, Isolation, Durability) properties to ensure reliable transaction processing. They include mechanisms such as journaling, write-ahead logging, and two-phase commit protocols that protect against data inconsistencies resulting from system failures, concurrent access issues, or incomplete transactions.

05 Automated data integrity monitoring and recovery

Advanced data integrity frameworks incorporate continuous monitoring systems that automatically detect and respond to integrity violations. These frameworks employ agents that regularly verify data consistency, generate alerts when anomalies are detected, and initiate recovery procedures. They often include self-healing capabilities that can restore corrupted data from secure backups or redundant storage, minimizing downtime and data loss while maintaining operational continuity.
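The checksum-based monitoring and self-healing described in items 02 and 05 can be reduced to a small loop: record a baseline digest for each object, recompute it on a schedule, and restore from a copy that still matches. In this Python sketch, in-memory dictionaries stand in for primary and backup storage, and the function names are hypothetical. SHA-256 is used instead of a CRC because a CRC only guards against accidental corruption; the baseline manifest itself must also be protected, or anyone altering the data could simply update it.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 digest of an object's bytes, as hex."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(store: dict) -> dict:
    """Baseline of known-good digests, taken once the data has been verified."""
    return {name: digest(data) for name, data in store.items()}


def check_and_repair(store: dict, manifest: dict, backup: dict) -> dict:
    """Verify every object against the manifest and restore mismatches from backup.

    Returns {name: "ok" | "restored" | "unrecoverable"}; anything other than
    "ok" is what a monitoring agent would raise as an alert.
    """
    report = {}
    for name, expected in manifest.items():
        current = store.get(name)
        if current is not None and digest(current) == expected:
            report[name] = "ok"
            continue
        candidate = backup.get(name)
        if candidate is not None and digest(candidate) == expected:
            store[name] = candidate  # self-healing: put back the verified copy
            report[name] = "restored"
        else:
            report[name] = "unrecoverable"  # no copy matches the baseline
    return report


primary = {"run_001.raw": b"peak area 1523.4", "run_002.raw": b"peak area 987.1"}
backup = dict(primary)
manifest = build_manifest(primary)

primary["run_002.raw"] = b"peak area 998.1"  # simulated silent corruption
print(check_and_repair(primary, manifest, backup))
```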

Leading LIMS Vendors and Market Landscape

The market for QC data integrity frameworks is in a growth phase, driven by increasing regulatory requirements and the digital transformation of laboratory operations. The market is expanding as organizations prioritize data integrity in quality control processes, with an estimated market size reaching several billion dollars. Technologically, solutions are maturing rapidly, with established players such as IBM, Thermo Fisher Scientific, and Abbott Laboratories offering comprehensive LIMS solutions with robust audit trail capabilities. Emerging companies such as DiscernDX and Biomatrica are introducing new approaches to sample metadata standardization, while specialized providers such as Eurofins TestOil focus on industry-specific implementations. The convergence of traditional laboratory systems with cloud computing and AI technologies is accelerating market development and adoption across the pharmaceutical, healthcare, and industrial sectors.

International Business Machines Corp.

Technical Solution: IBM has developed Watson Health's Life Sciences solutions that incorporate advanced data integrity frameworks for quality control systems. Their approach combines blockchain technology with traditional LIMS to create immutable audit trails that prevent unauthorized data manipulation[3]. IBM's QC data integrity framework implements a multi-layered security architecture with role-based permissions, automated data validation rules, and comprehensive audit logging that captures all system interactions. Their metadata management system employs standardized ontologies and controlled vocabularies to ensure consistent sample annotation across different laboratory workflows and instruments[4]. IBM's solutions leverage AI capabilities to detect anomalous patterns in data that might indicate integrity issues, providing proactive monitoring of quality control processes. The platform supports integration with various laboratory equipment through standardized APIs and data exchange protocols.

Strengths: Advanced AI and blockchain integration for enhanced data security; enterprise-grade scalability; sophisticated analytics capabilities for quality monitoring. Weaknesses: Higher cost structure than specialized LIMS providers; complex implementation requiring significant customization; potential vendor lock-in with proprietary technologies.

Thermo Fisher Scientific (Bremen) GmbH

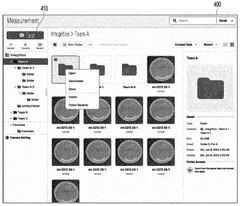

Technical Solution: Thermo Fisher Scientific has developed comprehensive Laboratory Information Management Systems (LIMS) with robust data integrity frameworks specifically designed for quality control environments. Their SampleManager LIMS platform incorporates complete audit trail functionality that tracks all data modifications, user actions, and system events in compliance with FDA 21 CFR Part 11 and EU Annex 11 regulations[1]. The system features electronic signatures, time-stamped audit trails, and role-based access controls to ensure data authenticity. Their platform integrates seamlessly with analytical instruments and enterprise systems, providing end-to-end sample tracking with standardized metadata schemas that support data interoperability across the laboratory ecosystem[2]. Thermo Fisher's LIMS solutions also include built-in validation tools and compliance management features to maintain data integrity throughout the sample lifecycle.

Strengths: Industry-leading integration capabilities with analytical instruments; comprehensive compliance features for regulated industries; scalable enterprise architecture. Weaknesses: Higher implementation costs compared to smaller vendors; complex deployment requiring significant IT resources; steeper learning curve for end users.

Key Innovations in Sample Metadata Standardization

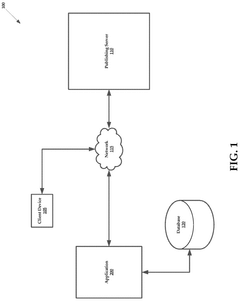

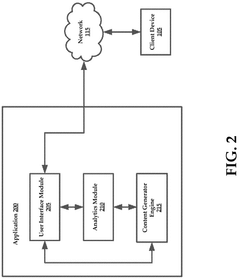

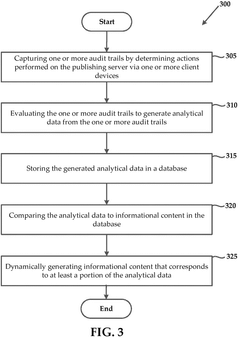

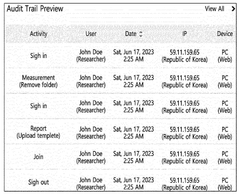

Systems and methods of generating analytical data based on captured audit trails

Patent (Active): US12222912B2

Innovation

- The system captures audit trails of actions performed on publishing servers by client devices, generates analytical data from these trails, and uses this data to dynamically modify informational content in real-time, ensuring it aligns with user preferences.

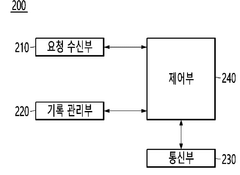

Method and system for supporting recording of user activities

Patent: WO2025136018A1

Innovation

- A method and system that receive requests to record user activities for audit trails, recording information including the type of activity, user details, and timestamp, while restricting changes to the recorded data.

Regulatory Compliance Requirements for QC Data Systems

Quality control (QC) systems in regulated industries must adhere to stringent regulatory frameworks that govern data integrity and management. The FDA's 21 CFR Part 11 establishes requirements for electronic records and signatures; among them, §11.10(e) requires secure, computer-generated, time-stamped audit trails that independently record the date and time of operator entries and actions that create, modify, or delete electronic records, without obscuring previously recorded information. These regulations require that QC data systems maintain complete, consistent, and accurate records throughout the data lifecycle.

In Europe, Annex 11 of the EU GMP Guide (EudraLex Volume 4) sets comparable expectations, emphasizing the validation of computerized systems and the implementation of appropriate controls for electronic data. FDA and EMA data integrity guidance both build on the ALCOA principles (Attributable, Legible, Contemporaneous, Original, Accurate), and the extended ALCOA+ set, which adds Complete, Consistent, Enduring, and Available, is widely treated as the foundation for data integrity compliance.

ISO/IEC 17025, specifically designed for testing and calibration laboratories, outlines requirements for competence, impartiality, and consistent operation. This standard necessitates robust data management systems that ensure traceability and reliability of test results, directly impacting QC data system design and implementation.

The pharmaceutical industry faces additional compliance requirements through PIC/S (Pharmaceutical Inspection Co-operation Scheme) guidelines, which provide detailed expectations for data governance and integrity. These guidelines emphasize risk-based approaches to data management and require organizations to implement appropriate technical and procedural controls.

Recent regulatory trends show increased scrutiny of metadata management within QC systems. Regulatory bodies now expect organizations to maintain comprehensive metadata standards that document sample origins, testing conditions, and analytical parameters. This shift reflects growing recognition that contextual information is essential for ensuring data integrity and facilitating meaningful data review.

Compliance with these regulations necessitates implementation of Laboratory Information Management Systems (LIMS) with robust audit trail capabilities. Modern LIMS must capture all data modifications, including the identity of individuals making changes, timestamps, and justifications for alterations. These systems must also prevent unauthorized access and ensure data cannot be deleted or manipulated without appropriate documentation.
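The requirements just described (who changed what, when, and why, with no undocumented deletion) map naturally onto an append-only design. The Python class below is a minimal, hypothetical sketch: values can be created, modified with a mandatory justification, or invalidated, but never erased, and every action appends a read-only entry with a UTC timestamp. A production system would additionally need authenticated identities, a trusted time source, and storage that users cannot edit directly.

```python
from datetime import datetime, timezone
from types import MappingProxyType


class AuditedRecordStore:
    """Hypothetical store where every change appends an audit entry and nothing is erased."""

    def __init__(self):
        self._values = {}
        self._trail = []  # append-only list of read-only audit entries

    def _log(self, user, action, key, old, new, reason):
        self._trail.append(MappingProxyType({
            "user": user, "action": action, "key": key,
            "old": old, "new": new, "reason": reason,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        }))

    def create(self, user, key, value):
        if key in self._values:
            raise KeyError(f"{key} already exists; use modify()")
        self._values[key] = value
        self._log(user, "create", key, None, value, "initial entry")

    def modify(self, user, key, value, reason):
        if not reason or not reason.strip():
            raise ValueError("a justification is required for every change")
        old = self._values[key]
        self._values[key] = value
        self._log(user, "modify", key, old, value, reason)

    def invalidate(self, user, key, reason):
        """Mark a value invalid instead of deleting it; the old value stays in the trail."""
        self.modify(user, key, "INVALIDATED", reason)

    def value(self, key):
        return self._values[key]

    @property
    def trail(self):
        return tuple(self._trail)  # reviewers get a read-only snapshot


store = AuditedRecordStore()
store.create("alice", "S-001/assay", 98.7)
store.modify("bob", "S-001/assay", 99.1, "integration corrected per SOP")
for entry in store.trail:
    print(entry["timestamp"], entry["user"], entry["action"], entry["old"], entry["new"])
```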

Regulatory bodies increasingly expect integration between QC data systems and broader quality management frameworks. This integration ensures that data integrity controls align with overall quality objectives and facilitates comprehensive compliance monitoring across the organization.

Risk Assessment Methodologies for Data Integrity

Risk assessment for data integrity in quality control systems requires a structured approach to identify, evaluate, and mitigate potential vulnerabilities. The ALCOA+ principles (Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available) serve as the foundation for comprehensive risk assessment methodologies. These principles guide organizations in evaluating their data management practices against established regulatory standards.

A tiered risk assessment approach is commonly employed, beginning with system-level evaluation that examines the overall architecture of QC data management systems. This includes assessing the integration points between audit trails, Laboratory Information Management Systems (LIMS), and metadata repositories. The interconnectivity between these components often represents critical vulnerability points where data integrity may be compromised.
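A system-level check of these integration points can be as simple as reconciling the three sources against each other. The sketch below assumes hypothetical exports: LIMS results as a `result_id -> sample_id` mapping, audit trail events as `(record_id, action)` pairs, and the set of sample IDs held in the metadata repository.

```python
def reconcile(lims_results: dict, audit_entries, metadata_sample_ids: set) -> dict:
    """
    lims_results: result_id -> sample_id, as exported from the LIMS
    audit_entries: iterable of (record_id, action) pairs from the audit trail
    metadata_sample_ids: sample IDs present in the metadata repository
    """
    created = {record_id for record_id, action in audit_entries if action == "create"}
    return {
        # Results with no creation event: data may have entered outside controlled paths.
        "no_create_entry": sorted(r for r in lims_results if r not in created),
        # Creation events with no surviving result: possible unrecorded deletion.
        "orphan_audit_entries": sorted(created - set(lims_results)),
        # Results whose sample lacks contextual metadata.
        "missing_metadata": sorted(
            r for r, sample in lims_results.items() if sample not in metadata_sample_ids
        ),
    }


lims = {"R1": "S1", "R2": "S2", "R3": "S3"}
audit = [("R1", "create"), ("R2", "create"), ("R2", "modify"), ("R9", "create")]
print(reconcile(lims, audit, {"S1", "S2"}))
```

Each finding is a lead for investigation rather than proof of a breach. For example, an orphan audit entry may reflect a legitimate, documented cancellation.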

Process-level risk assessment focuses on workflow analysis, examining how data flows through the stages of laboratory operations: sample receipt, preparation, analysis, and reporting. Each transition point presents its own risks to data integrity that must be systematically evaluated. Methodologies such as Failure Mode and Effects Analysis (FMEA), from engineering and manufacturing quality practice, and Hazard Analysis and Critical Control Points (HACCP), from food safety, have been adapted to address laboratory data integrity concerns.
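The transition points between these stages can be checked mechanically. The sketch below models a hypothetical linear workflow (received, prepared, analyzed, reported) and checks each recorded step for three things: a legal transition, an attributable user, and a timestamp that does not run backwards. The state names and the strictly linear flow are simplifying assumptions. Real laboratories may permit documented loops such as retests.

```python
from datetime import datetime

# Hypothetical linear workflow; real labs may allow documented loops (e.g. retests).
ALLOWED_NEXT = {
    None: {"received"},
    "received": {"prepared"},
    "prepared": {"analyzed"},
    "analyzed": {"reported"},
    "reported": set(),
}


def check_handoffs(events):
    """events: (state, user, timestamp) tuples in recorded order; returns a list of issues."""
    issues = []
    current, last_time = None, None
    for i, (state, user, timestamp) in enumerate(events):
        if state not in ALLOWED_NEXT.get(current, set()):
            issues.append(f"event {i}: illegal transition {current} -> {state}")
        if not user:
            issues.append(f"event {i}: step '{state}' is not attributable")
        if last_time is not None and timestamp < last_time:
            issues.append(f"event {i}: timestamp precedes the previous step")
        # Continue from the recorded state so later steps are still checked.
        current, last_time = state, timestamp
    return issues


events = [
    ("received", "clerk1", datetime(2025, 3, 3, 8, 0)),
    ("analyzed", "analyst2", datetime(2025, 3, 3, 9, 0)),  # preparation step skipped
]
print(check_handoffs(events))  # ['event 1: illegal transition received -> analyzed']
```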

Data-specific risk assessment methodologies examine the nature of the data itself, considering factors such as criticality, complexity, and regulatory impact. This approach typically involves mapping data elements to their corresponding metadata standards and evaluating alignment with community guidelines such as the FAIR (Findable, Accessible, Interoperable, Reusable) principles for scientific data management.
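A data inventory makes this mapping reviewable. In the sketch below, each data element carries a criticality rating and the metadata term it maps to, if any. The function flags two problems: unmapped elements, and high-criticality elements without audit trail coverage. The three-level criticality scale, the element names, and the metadata terms are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataElement:
    name: str
    criticality: str              # hypothetical scale: "high", "medium" or "low"
    metadata_term: Optional[str]  # mapped term in the metadata standard, if any
    audit_trail_enabled: bool


def data_element_findings(elements):
    """Flag unmapped elements and high-criticality elements lacking an audit trail."""
    findings = []
    for e in elements:
        if e.criticality not in ("high", "medium", "low"):
            raise ValueError(f"{e.name}: unknown criticality {e.criticality!r}")
        if e.metadata_term is None:
            findings.append((e.name, "not mapped to a metadata standard term"))
        if e.criticality == "high" and not e.audit_trail_enabled:
            findings.append((e.name, "high-criticality element without audit trail"))
    return findings


inventory = [
    DataElement("assay_result", "high", "assay.result_value", True),
    DataElement("column_lot", "medium", None, True),
    DataElement("integration_params", "high", "chrom.integration", False),
]
for finding in data_element_findings(inventory):
    print(finding)
```

Mapping every element to a defined vocabulary term supports the "Interoperable" part of FAIR. The criticality rating decides where stricter controls are warranted.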

Quantitative risk scoring systems have emerged as valuable tools for prioritizing remediation efforts. The most common is the FMEA risk priority number (RPN). Each failure mode is rated for severity of impact, likelihood of occurrence, and detectability, typically on scales of 1 to 10, where a higher detection rating means the failure is less likely to be caught. The RPN is the product of the three ratings. Ranking failure modes by RPN lets organizations direct resources toward the most critical data integrity vulnerabilities.
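The RPN calculation and ranking are straightforward to express in code. The failure modes and ratings below are illustrative values invented for the example, not results from any real assessment.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    severity: int    # 1 = negligible impact ... 10 = critical impact
    occurrence: int  # 1 = rare ... 10 = frequent
    detection: int   # 1 = almost certain to be caught ... 10 = unlikely to be caught

    def __post_init__(self):
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")

    @property
    def rpn(self) -> int:
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Highest RPN first: the order in which to plan remediation."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Illustrative failure modes and ratings, not data from any real assessment.
modes = [
    FailureMode("manual transcription of balance readings", 7, 6, 5),
    FailureMode("shared login on chromatography workstation", 9, 4, 8),
    FailureMode("audit trail review skipped before batch release", 8, 3, 3),
]
for m in prioritize(modes):
    print(m.rpn, m.description)
# 288 shared login on chromatography workstation
# 210 manual transcription of balance readings
# 72 audit trail review skipped before batch release
```

Because different rating combinations can produce the same RPN, many teams also review high-severity failure modes separately instead of relying on the product alone.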

Periodic reassessment is increasingly complemented by continuous monitoring that uses automated data integrity checks. These checks can flag anomalies in audit trail patterns, metadata consistency, and user behavior that may indicate integrity breaches. Machine learning techniques are increasingly applied to surface subtle patterns that rule-based detection might miss.
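As a baseline, the rule-based layer of such monitoring can be sketched in a few lines. The function below scans audit trail entries for three hypothetical red flags: any deletion, activity outside working hours, and repeated modifications of the same record by the same user within a short window. The entry format, thresholds, and working hours are assumptions for illustration. This is a rule-based check, not a machine learning model, though its outputs could serve as features for one.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_audit_anomalies(entries, window=timedelta(hours=1), max_edits=3, work_hours=(7, 19)):
    """entries: dicts with keys user, record_id, action, timestamp (datetime)."""
    flags = []
    edits = defaultdict(list)  # (user, record_id) -> recent modification times
    for e in sorted(entries, key=lambda e: e["timestamp"]):
        ts = e["timestamp"]
        if e["action"] == "delete":
            flags.append(("deletion", e["user"], e["record_id"], ts))
        if not work_hours[0] <= ts.hour < work_hours[1]:
            flags.append(("off_hours", e["user"], e["record_id"], ts))
        if e["action"] == "modify":
            key = (e["user"], e["record_id"])
            # Keep only modifications inside the sliding window, then add this one.
            recent = [t for t in edits[key] if ts - t <= window] + [ts]
            edits[key] = recent
            if len(recent) >= max_edits:
                flags.append(("repeated_edits", e["user"], e["record_id"], ts))
    return flags


base = datetime(2025, 3, 3, 10, 0)
entries = [
    {"user": "u1", "record_id": "R1", "action": "modify", "timestamp": base},
    {"user": "u1", "record_id": "R1", "action": "modify", "timestamp": base + timedelta(minutes=10)},
    {"user": "u1", "record_id": "R1", "action": "modify", "timestamp": base + timedelta(minutes=20)},
    {"user": "u2", "record_id": "R2", "action": "delete", "timestamp": datetime(2025, 3, 3, 22, 5)},
]
for flag in flag_audit_anomalies(entries):
    print(flag[0], flag[1], flag[2])
```

Flags of this kind are inputs to human audit trail review, not conclusions. Off-hours work, for instance, may be entirely legitimate on a shift schedule.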

Cross-functional risk assessment teams comprising quality assurance, IT security, laboratory operations, and regulatory compliance specialists provide comprehensive evaluation perspectives. This multidisciplinary approach ensures that technical, procedural, and regulatory considerations are adequately addressed in the risk assessment process.
