How to Avoid Calibration Errors in Hall Effect Sensors

SEP 22, 2025 | 10 MIN READ

Hall Effect Sensor Calibration Background and Objectives

Hall Effect sensors have evolved significantly since Edwin Hall discovered the underlying effect in 1879. Initially used primarily in laboratory settings, these sensors have become integral components in numerous industrial and consumer applications due to their reliability, durability, and non-contact measurement capabilities. The technology has progressed from simple magnetic field detection to sophisticated position sensing, current measurement, and speed detection across the automotive, industrial automation, consumer electronics, and medical device sectors.

The evolution of Hall Effect sensor technology has been marked by several key advancements, including integration with CMOS technology in the 1980s, the development of programmable sensors in the 1990s, and the more recent emergence of 3D Hall Effect sensors capable of measuring magnetic fields along three axes. These developments have significantly expanded the application scope while also raising the precision requirements for these devices.

Despite these advancements, calibration errors remain a persistent challenge in Hall Effect sensor implementation. These errors stem from various sources, including temperature drift, mechanical stress, aging effects, and manufacturing variations. As applications become more demanding in terms of accuracy and reliability, particularly in safety-critical systems such as automotive braking and steering, precise calibration has become increasingly important.

The primary objective of this technical research is to comprehensively analyze the sources of calibration errors in Hall Effect sensors and identify effective strategies to mitigate these errors. This includes examining current calibration methodologies, evaluating emerging compensation techniques, and exploring innovative approaches that could potentially eliminate or significantly reduce calibration requirements.

Additionally, this research aims to establish a framework for quantifying calibration accuracy across different application environments and to develop standardized testing protocols that can validate sensor performance under varying operational conditions. By addressing these calibration challenges, we seek to enhance the overall reliability and precision of Hall Effect sensor implementations.

The research will also investigate the relationship between sensor design parameters and calibration requirements, with the goal of identifying design principles that inherently minimize calibration needs. This includes exploring materials science innovations, circuit design techniques, and signal processing algorithms that can contribute to more robust sensor performance with minimal calibration overhead.

Understanding the technical trajectory of Hall Effect sensor calibration is crucial for anticipating future developments in this field and positioning our organization to leverage emerging technologies effectively. This research will provide a foundation for strategic decision-making regarding sensor technology adoption and development initiatives within our product roadmap.

Market Demand Analysis for Precision Hall Sensors

The global market for precision Hall effect sensors is experiencing robust growth, driven primarily by increasing demand for high-accuracy measurement systems across multiple industries. Current market valuations place the precision Hall sensor segment at approximately 3.2 billion USD in 2023, with projections indicating a compound annual growth rate of 6.8% through 2028. This growth trajectory is significantly outpacing the broader sensor market, reflecting the critical importance of measurement accuracy in modern electronic systems.

Automotive applications represent the largest market segment, accounting for nearly 38% of precision Hall sensor demand. The transition toward electric vehicles and advanced driver assistance systems (ADAS) has dramatically increased requirements for sensors that maintain calibration accuracy under varying temperature conditions and electromagnetic interference. Vehicle manufacturers are specifically seeking Hall sensors with total calibration error below 0.5% to ensure reliable performance in safety-critical systems.

Industrial automation constitutes the second-largest market segment at 27%, where precision Hall sensors are essential components in robotics, motor control systems, and position sensing applications. The Industry 4.0 movement has accelerated demand for sensors with enhanced calibration stability and self-diagnostic capabilities, as manufacturing environments require increasingly precise measurements for quality control and process optimization.

Consumer electronics represents a rapidly growing segment (18% market share), particularly in smartphones, wearables, and home appliances. These applications demand miniaturized Hall sensors with factory calibration that remains stable throughout the product lifecycle, despite exposure to varying environmental conditions.

Healthcare applications, though smaller at 8% market share, show the highest growth potential at 9.2% annually. Medical devices require exceptionally accurate Hall sensors with minimal calibration drift for applications ranging from fluid flow measurement to precise positioning in diagnostic equipment.

Market research indicates that customers across all segments are prioritizing three key features in precision Hall sensors: reduced temperature drift (cited by 87% of procurement specialists), extended calibration intervals (important to 76% of customers), and simplified calibration procedures (valued by 69% of customers). These priorities directly relate to the challenge of avoiding calibration errors, as they represent the primary pain points experienced by end-users.

Regional analysis shows North America and Europe currently dominating the precision Hall sensor market with 34% and 31% share respectively, though Asia-Pacific is experiencing the fastest growth at 8.7% annually, driven by rapid industrial automation in China and expanding automotive manufacturing throughout the region.

Current Challenges in Hall Effect Sensor Calibration

Hall Effect sensor calibration faces several significant challenges that impede accurate measurement and reliable performance. One of the primary obstacles is temperature drift, which causes sensor output to shift with ambient temperature. The resulting measurement errors can range from 0.1% to as high as 5% across the -40°C to 125°C range typical of automotive and extended-industrial parts, significantly affecting precision in automotive and industrial applications.

Manufacturing variations present another substantial challenge, as inconsistencies in semiconductor materials and fabrication processes lead to device-to-device variations. These variations necessitate individual calibration for each sensor, increasing production cost and complexity. Studies indicate that uncalibrated Hall sensors can exhibit offset variations of up to ±10 mV and sensitivity variations of ±2% to ±5%.
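The offset and sensitivity terms above can be made concrete with a basic two-point calibration (one zero-field reading plus one reading in a known reference field). The sketch below assumes a linear sensor with an analog voltage output, a zero-field measurement taken inside magnetic shielding, and a reference field of known strength. The function names and example values are hypothetical and do not come from any particular device.

```python
def two_point_calibration(v_zero, v_ref, b_ref):
    """Return (offset_v, sensitivity_v_per_mt) from two readings.

    v_zero: output voltage measured in zero field (e.g. inside a shield)
    v_ref:  output voltage measured in a known reference field b_ref (mT)
    Assumes the sensor response is linear between the two points.
    """
    if b_ref == 0:
        raise ValueError("reference field must be non-zero")
    offset = v_zero
    sensitivity = (v_ref - v_zero) / b_ref
    return offset, sensitivity


def field_from_voltage(v_out, offset, sensitivity):
    """Convert a raw output voltage to field (mT) using the stored calibration."""
    return (v_out - offset) / sensitivity
```

Take a part that reads 2.508 V at zero field and 2.608 V at 20 mT. The calibration gives an 8 mV offset and a 5 mV/mT sensitivity, so a later reading of 2.558 V maps to 10 mT. Two points correct offset and gain only. Non-linearity needs additional points.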

Cross-axis sensitivity issues arise when Hall Effect sensors respond to magnetic fields perpendicular to their intended sensing axis. This interference can introduce measurement errors of 1-3% in applications requiring precise directional sensing, such as electronic compasses and position detection systems. The challenge intensifies in miniaturized devices where spatial constraints limit proper sensor orientation.
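One common way to model cross-axis sensitivity in a three-axis sensor is a 3x3 sensitivity matrix M. In this model the raw output equals M·B plus an offset vector, and the off-diagonal terms of M represent the cross-axis coupling. Suppose M has been characterized, for instance by applying known fields along each axis in a Helmholtz coil. The true field can then be estimated as M^-1 (raw - offset). The following minimal pure-Python sketch relies on that linear-model assumption, and its names are illustrative.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def invert_3x3(m: Matrix) -> Matrix:
    """Invert a 3x3 matrix using the adjugate divided by the determinant."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("sensitivity matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def correct_field(raw: Vector, offset: Vector, m_inv: Matrix) -> Vector:
    """Estimate the true field vector as M^-1 (raw - offset).

    Column j of M is assumed to be the offset-free response to a unit
    field applied along axis j, so diagonal terms are the axis sensitivities
    and off-diagonal terms are the cross-axis coupling.
    """
    centered = [r - o for r, o in zip(raw, offset)]
    return [sum(m_inv[row][k] * centered[k] for k in range(3)) for row in range(3)]
```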

Aging and stress effects constitute a long-term calibration challenge. Environmental factors and mechanical stress gradually alter sensor characteristics over time, causing calibration drift. Research shows that after 5,000 hours of operation, sensitivity can drift by up to 1.5%, necessitating periodic recalibration or compensation algorithms in critical applications.

Power supply variations significantly impact sensor performance, as fluctuations in supply voltage directly affect the sensor's output signal. Many Hall Effect sensors exhibit a nearly linear relationship between supply voltage and output signal, making voltage regulation crucial for maintaining calibration accuracy.
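Ratiometric parts place the zero-field output at half the supply, and their sensitivity scales with the supply. For these parts, a simple correction is to measure the actual supply and rescale. The sketch below assumes exactly proportional scaling, a 5 V nominal supply, and a nominal sensitivity given in mV/mT. These are illustrative parameters, so check the datasheet of the actual device.

```python
def field_ratiometric(v_out, v_supply, sens_nominal_mv_per_mt, v_supply_nominal=5.0):
    """Estimate the field (mT) from a ratiometric analog Hall output.

    Assumes the zero-field output is v_supply / 2 and that sensitivity
    scales in direct proportion to the supply voltage.
    """
    # Rescale the nominal sensitivity (mV/mT at the nominal supply) to V/mT
    # at the supply actually measured.
    sens_v_per_mt = (sens_nominal_mv_per_mt / 1000.0) * (v_supply / v_supply_nominal)
    v_quiescent = v_supply / 2.0
    return (v_out - v_quiescent) / sens_v_per_mt
```

Consider a hypothetical 30 mV/mT part running at 4.8 V. After correction, an output of 2.688 V corresponds to 10 mT. Reading the same output against the nominal 2.5 V midpoint and 30 mV/mT would give roughly 6.3 mT instead. In practice, the same cancellation is often obtained by referencing the ADC to the sensor supply.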

Magnetic hysteresis is a particular problem for applications requiring bidirectional measurements. The semiconductor Hall plate itself is non-magnetic. However, integrated magnetic concentrators or the ferromagnetic cores used in many current sensors can retain remanent magnetization after exposure to strong fields. The output for a given field then differs depending on whether the field is increasing or decreasing.
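Hysteresis is usually quantified by sweeping the field up and then back down and comparing the outputs at the same field points. The sketch below computes that metric as a percentage of full scale. The function name is illustrative, and it assumes both sweeps are sampled at identical field values.

```python
def hysteresis_percent_fs(up_sweep, down_sweep, full_scale):
    """Largest up/down output difference as a percentage of full scale.

    up_sweep and down_sweep must hold outputs taken at the same field
    points, in the same order (reverse the down sweep first if needed).
    """
    if len(up_sweep) != len(down_sweep) or not up_sweep:
        raise ValueError("sweeps must be non-empty and sampled at the same points")
    worst = max(abs(u - d) for u, d in zip(up_sweep, down_sweep))
    return 100.0 * worst / full_scale
```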

Integration challenges emerge when incorporating Hall Effect sensors into complex systems. Interference from nearby electronic components, power lines, or other magnetic field sources can distort measurements. Additionally, the physical mounting of sensors introduces mechanical stresses that alter their magnetic response characteristics, requiring sophisticated compensation techniques.

Advanced applications demanding high precision face the challenge of non-linearity in sensor response. While Hall Effect sensors are approximately linear within certain ranges, they exhibit non-linear behavior at extremes, requiring complex calibration algorithms to achieve the accuracy needed for precision instrumentation and scientific applications.
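A common way to handle non-linearity is multi-point calibration: record the sensor output at several known field values and interpolate between them at run time. The sketch below uses piecewise-linear interpolation and extrapolates linearly beyond the end points. It assumes raw readings increase monotonically with field, and the helper names are hypothetical.

```python
from bisect import bisect_right


def make_piecewise_calibration(raw_points, true_points):
    """Build a raw -> true mapping from calibration pairs (raw ascending)."""
    if len(raw_points) != len(true_points) or len(raw_points) < 2:
        raise ValueError("need at least two matching calibration points")
    if any(b <= a for a, b in zip(raw_points, raw_points[1:])):
        raise ValueError("raw points must be strictly increasing")

    def correct(raw):
        # Find the segment containing raw; outside the table, reuse the
        # first or last segment (linear extrapolation).
        i = bisect_right(raw_points, raw) - 1
        i = max(0, min(i, len(raw_points) - 2))
        x0, x1 = raw_points[i], raw_points[i + 1]
        y0, y1 = true_points[i], true_points[i + 1]
        return y0 + (y1 - y0) * (raw - x0) / (x1 - x0)

    return correct
```

Suppose the hypothetical calibration points map a raw reading of 105 to a true 100 mT. In that case, a raw reading of 77.5 maps to 75 mT. Placing calibration points more densely near the range limits, where non-linearity is usually largest, generally improves the result.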

Current Calibration Error Mitigation Techniques

01 Calibration methods for Hall effect sensors

Various methods are employed to calibrate Hall effect sensors to minimize measurement errors. These methods include digital calibration techniques, automated calibration processes, and the use of reference signals. Calibration helps compensate for manufacturing variations, temperature drift, and other factors that can affect sensor accuracy. Proper calibration ensures reliable magnetic field measurements across different operating conditions.

- Calibration methods for Hall effect sensors: Calibration typically involves adjusting for offset errors, sensitivity variations, and temperature effects to ensure accurate magnetic field measurements. Digital, automated, and reference-based approaches are all used, and advanced algorithms can compensate for systematic errors during the calibration process.

- Temperature compensation techniques: Temperature fluctuations can significantly affect Hall effect sensor accuracy, causing drift in measurements. Temperature compensation techniques involve implementing correction algorithms, using temperature sensors for real-time adjustments, and designing specialized circuits that maintain calibration across varying thermal conditions. These approaches help minimize temperature-induced errors and ensure consistent sensor performance in changing environmental conditions.

- Offset error correction in Hall sensors: Offset errors are systematic deviations in Hall sensor output when no magnetic field is present. Correction techniques include chopper stabilization, spinning current methods, and digital signal processing algorithms. These approaches help eliminate zero-field output errors, improving measurement accuracy especially in low-field applications. Advanced integrated circuits may incorporate built-in offset correction mechanisms to enhance sensor performance without external calibration components.

- Integrated calibration circuits and systems: Modern Hall effect sensors often incorporate integrated calibration circuits that automatically adjust for various error sources. These systems may include on-chip memory for storing calibration parameters, self-diagnostic capabilities, and programmable gain amplifiers. The integration of calibration functionality directly into sensor packages reduces external component requirements, simplifies implementation, and improves overall measurement reliability in various applications.

- Field-based calibration and error compensation: Field-based calibration involves exposing Hall effect sensors to known magnetic field strengths to establish accurate response curves. This approach addresses non-linearity errors, hysteresis effects, and cross-axis sensitivity issues. Advanced compensation techniques may employ multi-point calibration, mathematical modeling of error sources, and adaptive algorithms that continuously refine calibration parameters during operation. These methods are particularly important for high-precision applications requiring accurate magnetic field measurements.
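The spinning-current principle listed under offset correction can be illustrated with an idealized model. In each phase the readout contacts are re-mapped, so the Hall voltage keeps its sign. The offset caused by plate asymmetry, however, alternates sign between orthogonal current directions, so averaging over paired phases cancels it. This is a simplified sketch. Real devices retain a small residual offset from non-idealities that the model ignores.

```python
def spinning_current_output(v_hall, v_offset, n_phases=4):
    """Average an idealized spinning-current sequence.

    Model: each phase reads v_hall plus an offset whose sign alternates
    between orthogonal current directions (phases 0, 2, ... vs 1, 3, ...).
    """
    if n_phases < 2 or n_phases % 2:
        raise ValueError("use an even number of phases so offsets pair up")
    readings = [v_hall + (v_offset if k % 2 == 0 else -v_offset) for k in range(n_phases)]
    return sum(readings) / n_phases
```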

02 Temperature compensation techniques

Temperature variations significantly impact Hall effect sensor accuracy, causing drift in measurements. Advanced temperature compensation techniques include integrated temperature sensors, algorithmic corrections, and specialized circuit designs that adjust sensor output based on temperature changes. These approaches help maintain calibration accuracy across wide temperature ranges, ensuring consistent sensor performance in varying environmental conditions.

03 Error correction algorithms and signal processing

Sophisticated algorithms and signal processing techniques are implemented to correct errors in Hall effect sensor measurements. These include digital filtering, offset correction, gain adjustment, and nonlinearity compensation. Advanced microcontrollers and dedicated ICs process raw sensor data to eliminate noise, drift, and other measurement artifacts. Machine learning approaches can also be employed to adaptively correct systematic errors over time.

04 Structural and manufacturing improvements

Innovative sensor designs and manufacturing techniques help minimize calibration errors at the hardware level. These include optimized Hall element geometries, improved semiconductor materials, specialized packaging to reduce stress effects, and precise placement of sensing elements. Advanced fabrication processes ensure better matching between components and reduce the initial calibration requirements, resulting in more accurate and reliable sensors.

05 In-system and real-time calibration solutions

In-system calibration approaches allow Hall effect sensors to be calibrated during operation, compensating for drift and aging effects. These solutions include periodic self-calibration routines, reference measurement comparisons, and adaptive calibration systems. Real-time error detection and correction mechanisms continuously monitor sensor performance and adjust parameters accordingly, maintaining measurement accuracy throughout the sensor's operational lifetime.
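A periodic self-calibration routine can be sketched as follows. The sketch assumes a hypothetical on-chip or board-level coil that adds a known reference field when energized. Subtracting the coil-off reading from the coil-on reading removes the external field, provided that field stays constant during the measurement. Exponential smoothing keeps a single noisy measurement from disturbing the gain estimate. The class and parameter names are illustrative, not a vendor API.

```python
class SelfCalibratingHall:
    """Track sensor gain in the field using a known reference excitation.

    Hypothetical setup: a coil that adds b_ref_mt when energized.
    """

    def __init__(self, sensitivity_v_per_mt, offset_v, b_ref_mt, alpha=0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.sensitivity = sensitivity_v_per_mt
        self.offset = offset_v
        self.b_ref = b_ref_mt
        self.alpha = alpha  # smoothing factor for gain updates

    def update_from_reference(self, v_coil_off, v_coil_on):
        """Re-estimate sensitivity from a coil-off / coil-on reading pair."""
        # The difference cancels the (assumed static) external field.
        measured = (v_coil_on - v_coil_off) / self.b_ref
        # Exponential smoothing: move part of the way toward the new estimate.
        self.sensitivity += self.alpha * (measured - self.sensitivity)
        return self.sensitivity

    def field(self, v_out):
        """Convert an output voltage to field (mT) with the current gain."""
        return (v_out - self.offset) / self.sensitivity
```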

Major Players in Hall Effect Sensor Manufacturing

The market for Hall Effect sensors with low calibration error is in a growth phase, with demand driven by automotive, industrial, and consumer electronics applications. The global market for Hall Effect sensors is projected to reach $2.5 billion by 2025, with a CAGR of approximately 8%. Technical maturity varies across players. Texas Instruments, Infineon Technologies, and Allegro MicroSystems lead innovation through advanced calibration algorithms and temperature compensation techniques. STMicroelectronics and ams-OSRAM are advancing integrated solutions, while Robert Bosch and Honeywell focus on application-specific implementations. TDK-Micronas, a long-established Hall sensor supplier, is developing specialized calibration methods for automotive applications. Overall, established semiconductor manufacturers are investing in software-hardware integration to minimize calibration errors in increasingly miniaturized, high-precision sensor designs.

Texas Instruments Incorporated

Technical Solution: Texas Instruments addresses Hall effect sensor calibration in its integrated DRV5x series with a multi-point calibration methodology covering both intrinsic and extrinsic error sources. At the core of the technology is a chopper-stabilized architecture that modulates the Hall voltage to a higher frequency, separating the signal from low-frequency noise and offset errors. TI performs factory calibration using automated test equipment that characterizes each sensor at multiple temperature points and stores correction coefficients in on-chip non-volatile memory. The sensors include integrated temperature sensing with real-time compensation that continuously adjusts sensitivity and offset for current operating conditions. The DRV5055 series provides a ratiometric output that scales with the supply voltage. As a result, supply variations cancel when the output is digitized by an ADC referenced to the same supply[2]. For applications requiring the highest precision, TI offers devices with digital interfaces (I²C/SPI) that enable system-level calibration after assembly, compensating for mechanical stress effects and package-induced errors. The latest generation includes built-in self-test capabilities that periodically verify calibration integrity during operation.

Strengths: Excellent temperature stability across wide operating ranges (-40°C to +150°C) with drift typically below 100ppm/°C. Integrated signal conditioning eliminates need for external components. Ratiometric outputs simplify system integration. Weaknesses: Higher power consumption compared to simpler Hall sensors. Some advanced calibration features require digital interface support and microcontroller resources for implementation.
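To put a temperature coefficient such as 100 ppm/°C in perspective, the worst-case change can be estimated from the largest temperature excursion away from a reference point. The sketch assumes a constant, linear coefficient referenced to 25°C. This is a simplification, and datasheets may specify drift differently.

```python
def worst_case_drift_percent(tc_ppm_per_c, t_min_c, t_max_c, t_ref_c=25.0):
    """Worst-case sensitivity change (%) relative to t_ref_c.

    Assumes a constant (linear) temperature coefficient in ppm/°C.
    """
    max_delta_t = max(abs(t_max_c - t_ref_c), abs(t_min_c - t_ref_c))
    return tc_ppm_per_c * max_delta_t / 1e4  # ppm -> %: divide by 10,000
```

Under these assumptions, 100 ppm/°C over -40°C to +150°C gives a worst case of about 1.25%, reached at the hot end 125°C above the reference.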

Infineon Technologies AG

Technical Solution: Infineon Technologies has developed a sophisticated multi-level calibration approach for their TLE49xx series Hall effect sensors. Their technology employs a combination of hardware and software techniques to minimize both initial offset errors and long-term drift. At the manufacturing level, Infineon implements wafer-level testing and calibration, where each die undergoes characterization across multiple temperature points using precision magnetic field sources. The resulting calibration data is stored in on-chip EEPROM, enabling dynamic compensation during operation. Their patented "Chopped Hall" technology switches the biasing of the Hall plate in orthogonal directions and averages the measurements, effectively canceling out offset errors and reducing 1/f noise[3]. For automotive-grade sensors, Infineon implements stress-compensation structures within the silicon die that minimize package-induced offset shifts. Their TLE4998 series features digital signal processing with 16-bit resolution and temperature compensation algorithms that continuously adjust sensitivity and offset parameters based on an integrated temperature sensor. For highest precision applications, Infineon offers programmable sensors with SENT or PWM interfaces that enable end-of-line calibration to account for mechanical mounting variations and system-level errors.

Strengths: Exceptional long-term stability with drift typically below 0.5% over lifetime. Automotive-grade qualification with AEC-Q100 compliance ensures reliability in harsh environments. Advanced stress compensation minimizes package-induced errors. Weaknesses: Premium pricing compared to standard Hall sensors. Some advanced programmable features require specialized programming equipment and expertise to fully utilize.

Key Patents and Research in Hall Sensor Calibration

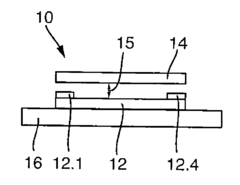

Hall sensor for canceling offset

Patent (inactive): US20120262163A1

Innovation

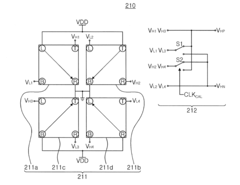

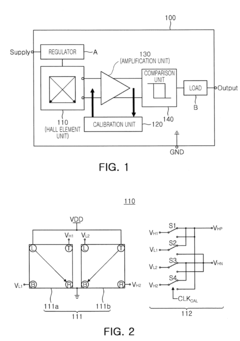

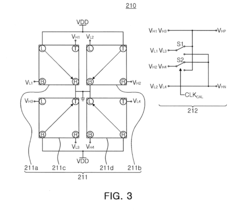

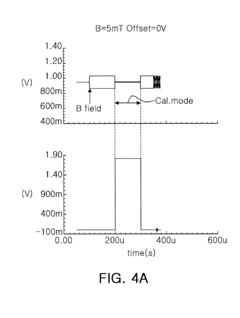

- A Hall sensor design that includes a Hall element unit with pairs of Hall elements having preset detection directions and switch configurations for different calibration and operation modes, along with a calibration unit using comparators, bit counters, and digital-to-analog converters to dynamically cancel offsets before they reach the amplifier.
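Combining a comparator, a counter, and a DAC is a general offset-trimming pattern. The sketch below illustrates that general idea, not the patented circuit. A comparator reports the sign of the residual offset, a counter steps a bipolar DAC code toward zero, and the loop stops when the comparator output flips. The function name, DAC model, and values are hypothetical.

```python
def trim_offset(offset_mv, lsb_mv, bits=8):
    """Counter-based offset trim with a bipolar DAC (illustrative model).

    The DAC outputs (code - mid) * lsb_mv; the comparator sees the sign of
    the residual offset_mv - dac_output. Returns (code, residual_mv).
    """
    mid = 1 << (bits - 1)
    max_code = (1 << bits) - 1

    def residual(code):
        return offset_mv - (code - mid) * lsb_mv

    code = mid  # start at mid-scale, i.e. zero correction
    # The initial comparator decision sets the counting direction.
    step = 1 if residual(code) > 0 else -1
    while 0 <= code + step <= max_code:
        nxt = code + step
        if (residual(nxt) > 0) != (residual(code) > 0):
            # Comparator output flipped: keep the code with the smaller residual.
            if abs(residual(nxt)) < abs(residual(code)):
                code = nxt
            break
        code = nxt
    return code, residual(code)
```

For a 3.7 mV offset and a 0.5 mV DAC step, the loop settles seven codes above mid-scale with a 0.2 mV residual, which is less than one LSB.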

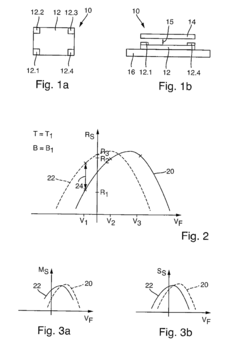

Calibration method for a hall effect sensor

Patent (active): US20180172780A1

Innovation

- A calibration method for Hall sensors with a Hall sensitive layer and a field plate, where resistance values are determined at different field plate voltages to create a calibration curve, allowing for real-time adjustment of the field plate voltage to maintain sensitivity and mobility, enabling continuous recalibration throughout the sensor's lifespan without additional aids.
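As a rough illustration of how such a calibration curve might be used, the sketch below interpolates the field-plate voltage that restores a target resistance from a set of measured (voltage, resistance) pairs. It assumes the curve is monotonic and treats holding the resistance at its factory value as the control goal. Both are simplifying assumptions for illustration, not details taken from the patent, and the function name is hypothetical.

```python
def field_plate_voltage_for_target(curve, r_target):
    """Interpolate the field-plate voltage that yields a target resistance.

    curve: (v_fp, resistance) pairs measured in operation, assumed
           monotonic in resistance (increasing or decreasing with v_fp).
    """
    pts = sorted(curve, key=lambda p: p[1])  # order by resistance
    for (v0, r0), (v1, r1) in zip(pts, pts[1:]):
        if r0 <= r_target <= r1:
            if r1 == r0:
                return v0
            return v0 + (v1 - v0) * (r_target - r0) / (r1 - r0)
    raise ValueError("target resistance outside the measured curve")
```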

Temperature Compensation Strategies for Hall Sensors

Temperature compensation represents a critical strategy in mitigating calibration errors in Hall effect sensors. These sensors exhibit significant sensitivity to temperature variations, which can lead to measurement drift and reduced accuracy. Effective temperature compensation techniques address this vulnerability through both hardware and software approaches, ensuring stable performance across diverse operating conditions.

Hardware-based compensation methods typically involve the integration of temperature sensors directly within the Hall sensor package. These additional sensors continuously monitor ambient temperature changes, providing real-time data that can be used to adjust Hall sensor readings. Advanced designs incorporate thermally balanced circuits that minimize temperature gradients across the sensing element, reducing thermal stress and associated measurement errors. Some manufacturers employ specialized materials with complementary temperature coefficients that naturally counteract temperature-induced drift.

Software-based compensation techniques utilize mathematical models to correct temperature-related errors. These algorithms typically rely on temperature characterization data collected during manufacturing calibration processes. Polynomial compensation functions map the relationship between temperature and sensor output, enabling precise corrections across the operating temperature range. More sophisticated approaches implement adaptive algorithms that continuously refine compensation parameters based on observed sensor behavior, effectively addressing aging effects and environmental variations.
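A minimal sketch of polynomial compensation follows. It assumes offset and sensitivity were characterized at three temperatures. A quadratic is fitted through each set of points using Newton divided differences, and the coefficients are stored for run-time correction. The three-point quadratic, the function names, and the units (V, V/mT, °C) are illustrative choices. Production systems may use more points and a least-squares fit.

```python
def fit_quadratic(ts, ys):
    """Return (a0, a1, a2) so that a0 + a1*t + a2*t**2 passes through 3 points."""
    if len(ts) != 3 or len(ys) != 3:
        raise ValueError("expected exactly three characterization points")
    (t0, t1, t2), (y0, y1, y2) = ts, ys
    f01 = (y1 - y0) / (t1 - t0)      # first divided differences
    f12 = (y2 - y1) / (t2 - t1)
    f012 = (f12 - f01) / (t2 - t0)   # second divided difference
    # Expand y0 + f01*(t - t0) + f012*(t - t0)*(t - t1) into monomial form.
    return (y0 - f01 * t0 + f012 * t0 * t1,
            f01 - f012 * (t0 + t1),
            f012)


def poly_eval(coeffs, t):
    """Evaluate a0 + a1*t + a2*t**2 using Horner's rule."""
    a0, a1, a2 = coeffs
    return a0 + t * (a1 + t * a2)


def compensate(v_out, temp_c, offset_coeffs, sens_coeffs):
    """Temperature-compensated field estimate (mT)."""
    offset = poly_eval(offset_coeffs, temp_c)
    sensitivity = poly_eval(sens_coeffs, temp_c)
    return (v_out - offset) / sensitivity
```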

Integrated compensation systems combine both hardware and software strategies for optimal performance. These systems often feature multi-point calibration across the entire operating temperature range, storing correction coefficients in non-volatile memory. During operation, real-time temperature measurements trigger appropriate compensation adjustments, maintaining measurement accuracy despite temperature fluctuations. Some advanced implementations incorporate digital signal processing techniques to filter temperature-induced noise and enhance signal quality.

Recent work on temperature compensation includes machine learning approaches that can capture complex, non-linear relationships between temperature and sensor output. These models are trained on historical sensor data to predict the temperature-induced component of the output so that it can be removed before it appears as measurement error. In addition, systems with multiple sensors can compare their readings to help distinguish genuine magnetic field changes from temperature-induced variations.

For practical implementation, engineers must carefully select compensation strategies based on application requirements, balancing complexity against performance needs. Cost-sensitive applications may benefit from simplified lookup table approaches, while precision-critical systems might justify more sophisticated real-time compensation algorithms. The effectiveness of any temperature compensation strategy ultimately depends on thorough characterization during development and appropriate calibration during manufacturing.
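For cost-sensitive designs, the lookup-table approach might look like the sketch below. Offset and gain-correction values are stored at a few temperature breakpoints and linearly interpolated, and values are held constant beyond the ends of the table. The table contents and names are hypothetical placeholders, not characterization data.

```python
from bisect import bisect_right

# Hypothetical table: (temperature °C, offset V, gain-correction factor)
TEMP_LUT = [
    (-40.0, 0.0120, 1.030),
    (0.0, 0.0085, 1.012),
    (25.0, 0.0080, 1.000),
    (85.0, 0.0060, 0.978),
    (125.0, 0.0040, 0.962),
]


def lut_correction(temp_c, table=TEMP_LUT):
    """Interpolate (offset, gain) at temp_c; hold end values outside the table."""
    temps = [row[0] for row in table]
    i = bisect_right(temps, temp_c) - 1
    i = max(0, min(i, len(table) - 2))
    (t0, o0, g0), (t1, o1, g1) = table[i], table[i + 1]
    frac = (temp_c - t0) / (t1 - t0)
    frac = max(0.0, min(1.0, frac))  # clamp: no extrapolation
    return o0 + frac * (o1 - o0), g0 + frac * (g1 - g0)


def compensate_lut(v_out, temp_c, nominal_sens_v_per_mt):
    """Field (mT) after removing the offset and applying the gain correction."""
    offset, gain = lut_correction(temp_c)
    return (v_out - offset) * gain / nominal_sens_v_per_mt
```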

Hardware-based compensation methods typically involve the integration of temperature sensors directly within the Hall sensor package. These additional sensors continuously monitor ambient temperature changes, providing real-time data that can be used to adjust Hall sensor readings. Advanced designs incorporate thermally balanced circuits that minimize temperature gradients across the sensing element, reducing thermal stress and associated measurement errors. Some manufacturers employ specialized materials with complementary temperature coefficients that naturally counteract temperature-induced drift.

Software-based compensation techniques use mathematical models to correct temperature-related errors. These algorithms typically rely on temperature characterization data collected during manufacturing calibration. Polynomial compensation functions model how the sensor's offset and sensitivity vary with temperature, enabling corrections across the operating temperature range. More sophisticated approaches implement adaptive algorithms that continuously refine compensation parameters based on observed sensor behavior, which can also address aging effects and environmental variations.
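A minimal sketch of polynomial compensation follows, assuming that both offset (in volts) and sensitivity (in V/mT) are modeled as second-order polynomials in temperature, fitted from characterization data with NumPy. The function names, the 25 °C reference point, the polynomial degree, and the sample data are illustrative assumptions, not a specific vendor's method.

```python
import numpy as np

T_REF_C = 25.0  # reference temperature for the polynomial expansion (assumption)


def fit_temperature_model(temps_c, offsets_v, sens_v_per_mt, degree=2):
    """Fit offset(T) and sensitivity(T) polynomials from characterization data.

    Polynomials are expressed in (T - T_REF_C) to keep the fit well conditioned.
    Returns coefficient arrays in numpy.polyfit order (highest power first).
    """
    dt = np.asarray(temps_c, dtype=float) - T_REF_C
    offset_coeffs = np.polyfit(dt, offsets_v, degree)
    sens_coeffs = np.polyfit(dt, sens_v_per_mt, degree)
    return offset_coeffs, sens_coeffs


def compensate(v_out, temp_c, offset_coeffs, sens_coeffs):
    """Convert a raw output voltage to field in mT using the fitted models."""
    dt = temp_c - T_REF_C
    offset_v = np.polyval(offset_coeffs, dt)
    sens = np.polyval(sens_coeffs, dt)
    return (v_out - offset_v) / sens


# Synthetic characterization data (made-up values for illustration only).
temps = [-40.0, 25.0, 85.0, 150.0]
offsets = [0.002 + 1e-5 * (t - 25.0) for t in temps]        # V
sens = [0.05 * (1.0 - 1e-3 * (t - 25.0)) for t in temps]   # V/mT
off_c, sens_c = fit_temperature_model(temps, offsets, sens)

# Simulate a 10 mT field read at 100 °C, then compensate the raw output.
v_raw = (0.002 + 1e-5 * 75.0) + 0.05 * (1.0 - 1e-3 * 75.0) * 10.0
b_mt = compensate(v_raw, 100.0, off_c, sens_c)
```

Fitting offset and sensitivity separately keeps the two error sources distinguishable, which also makes it easier for an adaptive scheme to update only the term that drifts.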

Integrated compensation systems combine hardware and software strategies. These systems often perform multi-point calibration across the entire operating temperature range and store the resulting correction coefficients in non-volatile memory. During operation, the measured temperature is used to select or interpolate the appropriate coefficients, maintaining measurement accuracy despite temperature fluctuations. Some advanced implementations also apply digital signal processing, such as low-pass filtering, to suppress noise and improve signal quality.
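The runtime side of multi-point calibration can be sketched as a small lookup table with linear interpolation between calibration temperatures, followed by a first-order low-pass filter as one simple example of the DSP stage. The table values, names, and the choice of an exponential moving average are hypothetical; real devices may store different coefficients or use other filters.

```python
import numpy as np

# Hypothetical calibration table, as it might be read back from non-volatile
# memory: zero-field offset and gain correction measured at each temperature.
CAL_TEMPS_C = np.array([-40.0, 25.0, 85.0, 150.0])
CAL_OFFSET_MT = np.array([-0.8, 0.0, 0.5, 1.2])   # offset to subtract, mT
CAL_GAIN = np.array([0.94, 1.00, 1.05, 1.11])     # gain correction factor


def correct_reading(raw_mt, temp_c):
    """Apply offset and gain corrections interpolated between calibration points.

    np.interp interpolates linearly and holds the end values outside the
    calibrated temperature range.
    """
    offset = np.interp(temp_c, CAL_TEMPS_C, CAL_OFFSET_MT)
    gain = np.interp(temp_c, CAL_TEMPS_C, CAL_GAIN)
    return float((raw_mt - offset) * gain)


class EmaFilter:
    """First-order IIR low-pass filter (exponential moving average)."""

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None

    def update(self, x):
        # The first sample initializes the filter; later samples are blended in.
        if self.state is None:
            self.state = x
        else:
            self.state += self.alpha * (x - self.state)
        return self.state
```

For example, a 10.0 mT raw reading at 55 °C falls midway between the 25 °C and 85 °C entries, so the sketch subtracts 0.25 mT and multiplies by 1.025. Holding end values outside the table is a conservative choice; extrapolating beyond the characterized range would be riskier.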

Recent innovations in temperature compensation include machine learning approaches that can identify complex, non-linear relationships between temperature and sensor output. These systems analyze historical sensor data to build predictive models of temperature effects, so that corrections can be applied before those effects appear as measurement errors. In addition, using multiple sensors provides environmental context: by comparing their readings, a system can distinguish genuine magnetic field changes from temperature-induced variations.
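One simple way multiple sensors can separate field changes from thermal drift is sketched below, assuming a matched reference Hall element that is shielded from the field but shares the active element's thermal environment. This reference-subtraction scheme is a stand-in chosen for clarity, not the specific technique of any product; function names and the tolerance are hypothetical, and only offset drift (not sensitivity drift) is handled.

```python
def remove_common_drift(active_v, reference_v, reference_zero_v):
    """Subtract offset drift shared by a field-exposed and a shielded element.

    active_v:         output of the element exposed to the field (V)
    reference_v:      output of a matched element shielded from the field (V)
    reference_zero_v: reference output recorded at calibration (V)
    Assumes both elements are matched and thermally coupled, so their offsets
    drift together; sensitivity drift is NOT corrected here.
    """
    return active_v - (reference_v - reference_zero_v)


def classify_change(d_active_v, d_reference_v, tol_v=1e-3):
    """Attribute a change between two samples to the field or to temperature."""
    field_part = d_active_v - d_reference_v  # change not explained by common drift
    if abs(field_part) > tol_v:
        return "field"
    if abs(d_reference_v) > tol_v:
        return "temperature"
    return "none"
```

If both elements shift by the same amount, the change is attributed to temperature; if only the exposed element moves, it is attributed to the field.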

For practical implementation, engineers must carefully select compensation strategies based on application requirements, balancing complexity against performance needs. Cost-sensitive applications may benefit from simplified lookup table approaches, while precision-critical systems might justify more sophisticated real-time compensation algorithms. The effectiveness of any temperature compensation strategy ultimately depends on thorough characterization during development and appropriate calibration during manufacturing.

Industry Standards and Testing Protocols

The standardization of Hall effect sensor calibration is shaped by several key industry standards that provide frameworks for ensuring measurement accuracy and reliability. IEC 60770 is a foundational standard for evaluating the performance of transmitters used in industrial process control systems, and its evaluation methods can be applied to Hall sensor calibration procedures. Complementing this, IEEE 1451 specifies smart transducer interfaces, including Transducer Electronic Data Sheets (TEDS) that can carry a sensor's calibration data.

For automotive applications, a significant market for Hall sensors, ISO 26262 establishes functional safety requirements that directly affect calibration processes: it requires verification and validation activities whose rigor scales with the Automotive Safety Integrity Level (ASIL) of the system. Similarly, AEC-Q100 defines stress test conditions for qualifying automotive-grade integrated circuits, including Hall effect sensors, with electrical testing before and after stress that reveals any shift in calibrated parameters.

Testing protocols for Hall effect sensors typically follow a multi-stage approach. Initial characterization establishes baseline performance across temperature (for example, -40°C to +150°C, the AEC-Q100 Grade 0 range), supply voltage variations, and magnetic field strengths. This is followed by calibration verification testing, which uses reference magnetometers traceable to national metrology institutes such as NIST or PTB to validate sensor outputs against known magnetic field values.
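A calibration verification step of this kind might be sketched as follows: readings from the device under test are compared point by point against values from a traceable reference magnetometer, a straight-line fit reports gain and offset for diagnosis, and the worst-case error is checked against a tolerance. The function name, test points, and tolerance are hypothetical.

```python
import numpy as np


def verify_calibration(ref_field_mt, sensor_mt, max_error_mt):
    """Compare sensor readings with reference-magnetometer values.

    Returns (gain, offset_mt, max_abs_error_mt, passed). Gain and offset come
    from a least-squares line of sensor reading versus reference field and are
    diagnostic; pass/fail uses the direct point-by-point error.
    """
    ref = np.asarray(ref_field_mt, dtype=float)
    meas = np.asarray(sensor_mt, dtype=float)
    gain, offset = np.polyfit(ref, meas, 1)  # slope, intercept
    max_abs_error = float(np.max(np.abs(meas - ref)))
    return float(gain), float(offset), max_abs_error, max_abs_error <= max_error_mt


# Hypothetical five-point sweep from -50 mT to +50 mT.
ref_points = [-50.0, -25.0, 0.0, 25.0, 50.0]
readings = [-50.2, -25.1, 0.05, 25.1, 50.3]
gain, offset, worst, ok = verify_calibration(ref_points, readings, max_error_mt=0.5)
```

Here the worst-case error is about 0.3 mT at +50 mT, so the part passes a 0.5 mT limit but would fail a 0.25 mT limit.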

Environmental stress testing represents another critical protocol, subjecting sensors to temperature cycling, humidity exposure, and mechanical shock to verify calibration stability under adverse conditions. The automotive industry particularly emphasizes these tests through standards like ISO 16750, which defines environmental conditions for electrical and electronic equipment in vehicles.
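Verifying calibration stability under stress usually comes down to comparing calibration parameters measured before and after the stress sequence. The sketch below assumes offset and sensitivity are the tracked parameters; the data class, function name, and shift limits are hypothetical and are not taken from ISO 16750 or any other standard.

```python
from dataclasses import dataclass


@dataclass
class CalParams:
    offset_mt: float    # zero-field offset, mT
    sensitivity: float  # output per mT, e.g. mV/mT


def stress_drift_check(before, after, max_offset_shift_mt, max_sens_shift_pct):
    """Return (offset_shift_mt, sens_shift_pct, passed) across a stress test."""
    offset_shift = abs(after.offset_mt - before.offset_mt)
    sens_shift_pct = 100.0 * abs(after.sensitivity - before.sensitivity) / abs(before.sensitivity)
    passed = offset_shift <= max_offset_shift_mt and sens_shift_pct <= max_sens_shift_pct
    return offset_shift, sens_shift_pct, passed


pre = CalParams(offset_mt=0.10, sensitivity=50.0)
post = CalParams(offset_mt=0.25, sensitivity=50.5)
result = stress_drift_check(pre, post, max_offset_shift_mt=0.2, max_sens_shift_pct=1.5)
```

In this example the offset moved by 0.15 mT and the sensitivity by 1.0%, both inside the assumed limits.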

Manufacturing quality control typically applies Statistical Process Control (SPC), with a Cpk target of at least 1.33 for critical calibration parameters. These protocols often include Measurement System Analysis (MSA) to quantify measurement uncertainty, requiring Gauge R&R (Repeatability and Reproducibility) values below 10% for calibration equipment.
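Both metrics are straightforward to compute. The sketch below uses the usual Cpk definition (distance from the mean to the nearer specification limit, divided by three standard deviations) and one common %GRR convention that compares a 6-sigma measurement spread with the tolerance width; %GRR can also be expressed relative to total variation. It assumes an approximately normal, stable process, and the sample values and limits are made up.

```python
import math
import statistics


def cpk(samples, lsl, usl):
    """Process capability index from samples and lower/upper spec limits."""
    mean = statistics.fmean(samples)
    sigma = statistics.stdev(samples)  # sample standard deviation
    return min(usl - mean, mean - lsl) / (3.0 * sigma)


def percent_grr_of_tolerance(sigma_repeatability, sigma_reproducibility, lsl, usl):
    """%GRR relative to tolerance, using a 6-sigma measurement spread."""
    sigma_grr = math.hypot(sigma_repeatability, sigma_reproducibility)
    return 100.0 * 6.0 * sigma_grr / (usl - lsl)


# Hypothetical sensitivity measurements (mV/mT) against a 9.0 to 11.0 spec.
sens_samples = [9.8, 10.0, 10.2, 10.0, 9.9, 10.1]
process_cpk = cpk(sens_samples, lsl=9.0, usl=11.0)
grr_pct = percent_grr_of_tolerance(0.03, 0.04, lsl=9.0, usl=11.0)
```

With these numbers Cpk is about 2.36, comfortably above 1.33, while the gauge's %GRR of 15% would exceed the 10% guideline and call for better calibration equipment.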

Industry practice has increasingly moved toward automated calibration systems with digital compensation, and general qualification standards apply to the resulting devices. JEDEC JESD47 provides guidelines for the stress-test-driven qualification of integrated circuits, including Hall effect sensor ICs, while IPC-9592 sets requirements, including qualification testing, for power conversion devices that often incorporate these sensors.
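Automated end-of-line calibration with digital compensation often reduces to measuring the output at two known fields and programming offset and gain trim registers. The sketch below assumes a hypothetical trim model, corrected = (1 + gain_code * gain_lsb) * (v - offset_code * offset_lsb), with signed 8-bit codes; real programmable Hall ICs define their own register formats, so every name and parameter here is illustrative.

```python
def _to_signed_code(value, lsb, bits):
    """Quantize a trim value to a signed register code; reject out-of-range trims."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    code = round(value / lsb)
    if not lo <= code <= hi:
        raise ValueError(f"trim {value} needs code {code}, outside [{lo}, {hi}]")
    return code


def two_point_trim(v1, v2, b1_mt, b2_mt, target_sens, offset_lsb_v, gain_lsb, bits=8):
    """Derive hypothetical offset and gain trim codes from outputs at two fields.

    v1, v2: sensor outputs (V) measured at reference fields b1_mt and b2_mt.
    target_sens: desired sensitivity after trimming (V/mT).
    """
    sens = (v2 - v1) / (b2_mt - b1_mt)  # measured sensitivity, V/mT
    offset_v = v1 - sens * b1_mt        # extrapolated output at zero field, V
    gain_factor = target_sens / sens    # multiplicative correction needed
    offset_code = _to_signed_code(offset_v, offset_lsb_v, bits)
    gain_code = _to_signed_code(gain_factor - 1.0, gain_lsb, bits)
    return offset_code, gain_code


# A part reading -0.47 V at -10 mT and 0.49 V at +10 mT, trimmed to 0.05 V/mT.
codes = two_point_trim(-0.47, 0.49, -10.0, 10.0, 0.05, offset_lsb_v=0.0005, gain_lsb=0.001)
```

Rejecting out-of-range codes, rather than silently clamping them, lets the test station flag parts whose raw error exceeds the trim range.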
