How to Minimize Temperature Effects on Hall Effect Sensor Accuracy

SEP 22, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Hall Sensor Temperature Compensation Background and Objectives

Hall Effect sensors are widely used in industrial and consumer applications. They rely on the Hall Effect, discovered by Edwin Hall in 1879: when a current-carrying conductor or semiconductor is placed in a magnetic field perpendicular to the current, a voltage develops across it, transverse to both the current and the field. Over the decades, Hall Effect sensors have evolved from simple magnetic field detectors into sophisticated integrated circuits capable of precise measurements in automotive systems, industrial equipment, consumer electronics, and medical devices.
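
For a thin plate with one dominant carrier type, the Hall voltage follows the textbook relation V_H = I·B / (n·q·t), where I is the bias current, B the flux density perpendicular to the plate, n the carrier density, q the elementary charge, and t the plate thickness. The sketch below simply evaluates that relation. It assumes a Hall scattering factor of 1, and the example values (1 mA, 10 mT, n = 10^22 m^-3, t = 2 µm) are assumptions chosen to resemble a lightly doped silicon Hall plate, not data for any specific product.

```python
# Idealized Hall voltage of a thin plate with one carrier type.
# Assumes a Hall scattering factor r_H = 1 and a uniform, perpendicular field.
Q_E = 1.602176634e-19  # elementary charge in coulombs


def hall_voltage(current_a: float, b_tesla: float,
                 carrier_density_m3: float, thickness_m: float) -> float:
    """Return V_H = I * B / (n * q * t) in volts."""
    return current_a * b_tesla / (carrier_density_m3 * Q_E * thickness_m)


# Assumed example: 1 mA bias, 10 mT field, n = 1e22 m^-3, 2 um active layer.
v_h = hall_voltage(1e-3, 10e-3, 1e22, 2e-6)
print(f"Hall voltage: {v_h * 1e3:.2f} mV")  # prints: Hall voltage: 3.12 mV
```

Because n sits in the denominator, any temperature dependence of the carrier density feeds straight into the output.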

The technological evolution of Hall Effect sensors has been marked by continuous improvements in sensitivity, linearity, and integration capabilities. However, one persistent challenge has been their susceptibility to temperature variations. Temperature fluctuations can significantly affect the accuracy of Hall Effect sensors, leading to measurement errors that compromise system performance and reliability. This temperature dependency stems from the fundamental physics of semiconductor materials used in these sensors, where carrier mobility and concentration change with temperature.
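
The two common biasing modes expose different parts of this physics. Under voltage bias, V_H ≈ G·μ_H·(W/L)·V_bias·B, so sensitivity follows the Hall mobility μ_H; under current bias, V_H = I·B/(n·q·t), so it follows the carrier density n. The sketch below assumes a lattice-scattering power law μ ∝ T^(-1.5) and a constant carrier density (full ionization, far below intrinsic onset). Both are textbook idealizations: real current-biased devices still drift through the Hall scattering factor, junction depletion, and package stress.

```python
# Idealized comparison of Hall sensitivity drift under voltage vs current bias.
# Assumptions (illustrative only): mobility follows mu(T) ~ T**(-alpha) with
# alpha = 1.5, and carrier density n is temperature-independent.
T0_K = 298.15  # reference temperature, 25 degC


def mobility_ratio(t_kelvin: float, alpha: float = 1.5) -> float:
    """mu(T) / mu(T0) for a power-law mobility model."""
    return (t_kelvin / T0_K) ** (-alpha)


def voltage_mode_sensitivity_ratio(t_kelvin: float, alpha: float = 1.5) -> float:
    # V_H ~ G * mu_H * (W/L) * V_bias * B: sensitivity scales with mobility.
    return mobility_ratio(t_kelvin, alpha)


def current_mode_sensitivity_ratio(t_kelvin: float) -> float:
    # V_H = I * B / (n * q * t): with n held constant this model shows no drift.
    return 1.0


for t_c in (-40.0, 25.0, 125.0):
    t_k = t_c + 273.15
    print(f"{t_c:6.1f} degC  voltage-biased: {voltage_mode_sensitivity_ratio(t_k):.3f}"
          f"  current-biased: {current_mode_sensitivity_ratio(t_k):.3f}")
```

Under these assumptions the voltage-biased sensitivity falls to roughly 65% of its 25 °C value at 125 °C, which is one reason many integrated sensors use current bias plus further compensation.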

The primary objective of this technical research is to comprehensively investigate methods to minimize temperature effects on Hall Effect sensor accuracy. This includes exploring both hardware and software compensation techniques that can maintain measurement precision across wide temperature ranges typically encountered in industrial and automotive environments (-40°C to +150°C). The research aims to evaluate existing compensation methods, identify their limitations, and propose innovative approaches that could lead to more temperature-stable Hall Effect sensing solutions.

Another critical goal is to establish standardized testing protocols for evaluating temperature compensation effectiveness in various application scenarios. This would enable more accurate comparison between different compensation techniques and provide clearer guidelines for engineers selecting Hall Effect sensors for temperature-sensitive applications.

The research also seeks to explore emerging materials and fabrication technologies that inherently exhibit lower temperature coefficients or novel properties that could be leveraged for temperature-stable Hall Effect sensing. This includes investigating alternative semiconductor materials beyond silicon, such as gallium arsenide, indium antimonide, or graphene-based structures that might offer superior temperature characteristics.

Additionally, this research aims to identify potential integration pathways for temperature compensation techniques with other sensor technologies in multi-sensor systems, particularly in IoT and Industry 4.0 applications where environmental variations are common but measurement precision remains critical. The ultimate objective is to develop comprehensive design guidelines that enable engineers to implement effective temperature compensation strategies tailored to specific application requirements and environmental conditions.

Market Analysis for Temperature-Stable Hall Sensors

The global market for temperature-stable Hall effect sensors is experiencing robust growth, driven by increasing demand across multiple industries where precision measurement is critical. Current market valuations place the temperature-stable Hall sensor segment at approximately $1.2 billion, with projections indicating a compound annual growth rate of 6.8% through 2028. This growth significantly outpaces the broader sensor market, reflecting the increasing importance of temperature compensation in sensing applications.

Automotive applications represent the largest market segment, accounting for nearly 40% of temperature-stable Hall sensor demand. The transition toward electric vehicles has intensified this demand, as these vehicles require more precise current sensing and motor control systems that can operate reliably across extreme temperature ranges. Additionally, advanced driver assistance systems (ADAS) and autonomous driving technologies rely heavily on accurate position and rotation sensing regardless of environmental conditions.

Industrial automation constitutes the second-largest market segment at 28%, where temperature-stable Hall sensors are essential for precise motor control, position detection, and current monitoring in factory environments that often experience significant temperature fluctuations. The Industry 4.0 movement has accelerated adoption as manufacturers seek higher precision and reliability in their sensing systems.

Consumer electronics represents a rapidly growing segment at 15% of the market, with applications in smartphones, wearables, and home appliances. These devices increasingly incorporate Hall sensors for position detection and magnetic field sensing, with temperature stability becoming a key differentiator as devices become smaller and generate more heat during operation.

Regionally, Asia-Pacific dominates the market with a 45% share, driven by the concentration of electronics manufacturing and automotive production. North America follows at 28%, with Europe at 22%. Emerging markets in Latin America and Africa are showing accelerated adoption rates, albeit from a smaller base.

Customer requirements are evolving toward higher precision, with many applications now demanding accuracy drift of less than 0.5% across operating temperature ranges. This represents a significant tightening of specifications compared to the 1-2% tolerance common five years ago. Additionally, miniaturization continues to be a driving force, with the market increasingly favoring smaller form factors that maintain performance across wider temperature ranges.

Price sensitivity varies significantly by application, with automotive and aerospace customers willing to pay premium prices for guaranteed performance, while consumer electronics manufacturers prioritize cost reduction. This market segmentation has created distinct product tiers, with high-end sensors commanding prices up to five times higher than basic variants.

Current Challenges in Hall Effect Sensor Temperature Stability

Hall Effect sensors, while offering numerous advantages in position and current sensing applications, face significant challenges related to temperature stability. The primary issue stems from the inherent temperature sensitivity of semiconductor materials used in these sensors. As temperature fluctuates, the mobility of charge carriers within the semiconductor changes, directly affecting the Hall voltage output and consequently reducing measurement accuracy.

Temperature coefficient of sensitivity (TCS) represents one of the most critical challenges, typically ranging from -1000 to -3000 ppm/°C in silicon-based Hall sensors. This means that for every degree Celsius of change, the sensor's sensitivity can drift by up to 0.3%. Across the 165°C span of the industrial temperature range (-40°C to +125°C), that accumulates to a peak-to-peak sensitivity change of up to about 50% (0.3%/°C × 165°C), so uncompensated measurement errors can exceed 40%.
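
The arithmetic behind these figures can be checked with a first-order model in which sensitivity drifts linearly with temperature. The 25 °C reference point is an assumption; the TCS values are the range quoted above.

```python
# First-order (linear) sensitivity drift: S(T) = S0 * (1 + TCS * (T - T0)).
# T0 = 25 degC is an assumed calibration reference.
def sensitivity_change_pct(tcs_ppm_per_c: float, t_c: float, t0_c: float = 25.0) -> float:
    """Signed change of sensitivity at t_c relative to t0_c, in percent."""
    return tcs_ppm_per_c * 1e-6 * (t_c - t0_c) * 100.0


def span_change_pct(tcs_ppm_per_c: float, t_min_c: float, t_max_c: float) -> float:
    """Peak-to-peak sensitivity change across [t_min_c, t_max_c], in percent."""
    return abs(tcs_ppm_per_c) * 1e-6 * (t_max_c - t_min_c) * 100.0


for tcs in (-1000.0, -3000.0):
    print(f"TCS {tcs:.0f} ppm/degC: "
          f"{sensitivity_change_pct(tcs, -40.0):+.1f}% at -40 degC, "
          f"{sensitivity_change_pct(tcs, 125.0):+.1f}% at +125 degC, "
          f"{span_change_pct(tcs, -40.0, 125.0):.1f}% peak-to-peak")
```

With TCS = -3000 ppm/°C the model gives about +19.5% at -40 °C and -30% at +125 °C, or 49.5% peak-to-peak.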

Offset voltage drift presents another significant obstacle. Even in the absence of a magnetic field, Hall sensors produce a small output voltage that varies with temperature. This zero-field offset voltage can drift by several microvolts per degree Celsius, introducing baseline errors that compound measurement inaccuracies, particularly in applications requiring detection of small magnetic field changes.
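
To see why offset drift matters most for small fields, refer it to an equivalent field error by dividing the offset shift by the sensitivity. The values used below (5 µV/°C drift, a raw plate sensitivity of 0.3 mV/mT, and a 100 °C excursion from the calibration temperature) are assumptions for illustration only.

```python
# Refer an offset-voltage drift to an equivalent magnetic-field error.
# Assumed example values: 5 uV/degC drift, 0.3 mV/mT raw Hall-plate
# sensitivity, 100 degC away from the calibration temperature.
def offset_field_error_mt(drift_uv_per_c: float, delta_t_c: float,
                          sensitivity_mv_per_mt: float) -> float:
    """Equivalent field error in mT caused by offset drift alone."""
    offset_shift_mv = drift_uv_per_c * delta_t_c / 1000.0  # uV -> mV
    return offset_shift_mv / sensitivity_mv_per_mt


err = offset_field_error_mt(5.0, 100.0, 0.3)
print(f"Equivalent field error: {err:.2f} mT")  # prints: Equivalent field error: 1.67 mT
```

An error of about 1.7 mT is negligible when measuring hundreds of millitesla but comparable to the signal itself when resolving changes of a few millitesla.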

Material limitations further exacerbate these challenges. Silicon remains the predominant material for Hall sensors due to manufacturing economics and integration capabilities, but its relatively low carrier mobility limits sensitivity, so offset and drift make up a larger fraction of the signal. Higher-mobility alternatives such as gallium arsenide or indium antimonide improve sensitivity, although narrow-bandgap indium antimonide shows a considerably stronger temperature dependence than silicon or gallium arsenide.

Package-induced stress effects create additional complications. As temperature changes, mismatched thermal expansion coefficients of the sensor die, packaging materials, and mounting surfaces generate mechanical stress. In silicon, this stress alters the Hall element's resistance and sensitivity through the piezoresistive and piezo-Hall effects, further distorting the Hall voltage output.

Power supply sensitivity compounds temperature-related issues, as voltage regulators supplying Hall sensors often have their own temperature dependencies. Without proper isolation, these fluctuations transfer directly to sensor performance, creating complex error patterns that are difficult to characterize and compensate.
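
One common way to decouple the reading from supply drift is a ratiometric readout, in which the sensor output and the ADC reference share the same supply. The sketch below assumes a hypothetical transfer function V_out = V_supply·(0.5 + K_RATIO·B) and an ideal 12-bit ADC; it compares a supply-referenced ADC with one using a fixed 5 V reference when the supply sags by 2%.

```python
# Ratiometric readout sketch. Assumptions (hypothetical): sensor output
# V_out = V_supply * (0.5 + K_RATIO * B), read by an ideal 12-bit ADC.
K_RATIO = 0.002     # fraction of supply per mT (10 mV/mT at a 5 V supply)
FULL_SCALE = 4095   # 12-bit ADC


def sensor_output(b_mt: float, v_supply: float) -> float:
    """Ratiometric sensor: zero-field output and gain both scale with supply."""
    return v_supply * (0.5 + K_RATIO * b_mt)


def adc_code(v_in: float, v_ref: float) -> int:
    """Ideal ADC conversion, clamped to the valid code range."""
    return max(0, min(FULL_SCALE, round(v_in / v_ref * FULL_SCALE)))


def field_from_code(code: int) -> float:
    """Invert the transfer function, assuming the code is relative to V_supply."""
    return (code / FULL_SCALE - 0.5) / K_RATIO


b_true = 50.0
for v_supply in (5.0, 4.9):  # 2 % supply sag, e.g. regulator temperature drift
    v_out = sensor_output(b_true, v_supply)
    ratiometric = field_from_code(adc_code(v_out, v_ref=v_supply))
    fixed_ref = field_from_code(adc_code(v_out, v_ref=5.0))
    print(f"Vs = {v_supply} V: ratiometric {ratiometric:.2f} mT, "
          f"fixed 5 V reference {fixed_ref:.2f} mT")
```

In this model the 2% sag leaves the ratiometric reading unchanged but shifts the fixed-reference reading by roughly 6 mT, mostly because the zero-field midpoint moves with the supply.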

Calibration limitations present practical challenges in industrial applications. While laboratory calibration can partially address temperature effects, the calibration process itself is time-consuming and expensive. Furthermore, calibration may not fully account for aging effects and environmental factors that influence temperature response over the sensor's operational lifetime.

Hysteresis effects introduce another layer of complexity, as sensors may respond differently to the same temperature depending on whether that temperature was reached during heating or cooling cycles, creating non-linear error patterns that conventional compensation techniques struggle to address effectively.

Current Temperature Compensation Methods

01 Design improvements for enhanced Hall sensor accuracy

Various design improvements can enhance the accuracy of Hall effect sensors. These include optimized semiconductor materials, specialized geometric configurations, and integrated circuit designs that minimize interference. Advanced fabrication techniques can reduce manufacturing variations that affect sensor performance. These design enhancements help to improve linearity, reduce temperature drift, and increase overall measurement precision.

- Design improvements for enhanced Hall sensor accuracy: Various design improvements can enhance the accuracy of Hall effect sensors. These include optimizing the sensor geometry, using specialized materials for the sensing element, and implementing advanced packaging techniques. Such design enhancements can minimize interference from external magnetic fields and reduce temperature-related drift, resulting in more precise and reliable measurements across varying operating conditions.

- Calibration and compensation techniques: Calibration and compensation techniques are essential for improving Hall effect sensor accuracy. These methods involve correcting for offset errors, temperature drift, and non-linearity in the sensor response. Advanced algorithms can be implemented to dynamically adjust sensor output based on environmental conditions, ensuring consistent performance across a wide range of operating temperatures and magnetic field strengths.

- Integration with signal processing circuits: Integrating Hall effect sensors with dedicated signal processing circuits significantly improves measurement accuracy. These circuits can include amplifiers, filters, and analog-to-digital converters specifically designed to enhance the sensor signal. Advanced signal conditioning techniques help to reduce noise, improve resolution, and increase the dynamic range of the sensor, resulting in more accurate magnetic field measurements.

- Differential and multi-axis sensing configurations: Differential and multi-axis sensing configurations can significantly improve the accuracy of Hall effect sensors. By using multiple sensing elements arranged in specific geometries, these configurations can cancel out common-mode noise and detect magnetic fields in multiple directions simultaneously. This approach provides better immunity to external disturbances and enables more precise measurement of complex magnetic field distributions.

- Advanced materials and fabrication techniques: The use of advanced materials and fabrication techniques plays a crucial role in improving Hall sensor accuracy. High-mobility semiconductor materials, such as gallium arsenide or indium antimonide, offer superior sensitivity compared to traditional silicon. Additionally, advanced microfabrication processes enable the creation of more precise sensor structures with better matching characteristics, resulting in improved measurement accuracy and reduced device-to-device variations.
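
The calibration-and-compensation approach in the list above can be made concrete with its simplest form: offset and sensitivity each drift linearly with temperature, and per-device coefficients from a factory calibration undo both. The class name, field names, and coefficient values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LinearTempCal:
    """Hypothetical per-device coefficients from a factory temperature calibration."""
    offset0_mv: float           # zero-field output at t0_c
    offset_tc_mv_per_c: float   # offset drift, mV per degC
    sens0_mv_per_mt: float      # sensitivity at t0_c
    sens_tc_per_c: float        # fractional sensitivity drift per degC (e.g. -0.002)
    t0_c: float = 25.0

    def field_mt(self, v_out_mv: float, t_c: float) -> float:
        """Remove the temperature-dependent offset, then divide by the corrected gain."""
        dt = t_c - self.t0_c
        offset = self.offset0_mv + self.offset_tc_mv_per_c * dt
        sensitivity = self.sens0_mv_per_mt * (1.0 + self.sens_tc_per_c * dt)
        return (v_out_mv - offset) / sensitivity


cal = LinearTempCal(offset0_mv=2.0, offset_tc_mv_per_c=0.01,
                    sens0_mv_per_mt=5.0, sens_tc_per_c=-0.002)
# Simulated reading at 100 degC for a true 20 mT field: offset 2.75 mV, gain 4.25 mV/mT.
v_out = 2.75 + 4.25 * 20.0
print(f"compensated:   {cal.field_mt(v_out, 100.0):.2f} mT")
print(f"uncompensated: {(v_out - cal.offset0_mv) / cal.sens0_mv_per_mt:.2f} mT")
```

Here the compensated reading recovers 20 mT while the uncompensated one reports about 17 mT. Real sensors usually need higher-order terms, which is why polynomial or table-based models are common.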

02 Compensation techniques for error reduction

Compensation techniques are essential for reducing errors in Hall effect sensor measurements. These include temperature compensation circuits, offset voltage correction, and calibration algorithms that account for material non-uniformities. Digital signal processing methods can be applied to correct for non-linearity and drift. These compensation approaches significantly improve measurement accuracy across varying environmental conditions and over the lifetime of the sensor.

03 Magnetic field concentration and shielding methods

Magnetic field concentration and shielding techniques can substantially improve Hall sensor accuracy. By using flux concentrators, magnetic cores, or specialized magnetic materials, the sensor's sensitivity to the target magnetic field can be enhanced while reducing interference from external sources. Proper shielding designs protect the sensor from stray magnetic fields and electromagnetic interference, resulting in more reliable and accurate measurements.

04 Advanced sensing configurations and arrangements

Advanced sensing configurations, such as differential or quad Hall arrangements, can significantly improve measurement accuracy. These configurations help to cancel common-mode noise and reduce offset errors. Spinning current techniques and chopper stabilization methods dynamically cancel most of the offset voltage. Multi-axis sensor arrangements provide comprehensive magnetic field measurement capabilities while maintaining high accuracy across all measurement directions.

05 Calibration and testing methodologies

Precise calibration and testing methodologies are crucial for ensuring Hall sensor accuracy. These include factory calibration procedures, in-situ calibration techniques, and self-testing capabilities integrated into the sensor system. Advanced testing equipment and procedures can characterize sensor performance across temperature ranges and magnetic field strengths. Automated calibration algorithms can compensate for individual sensor variations, significantly improving measurement accuracy in field applications.
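
The offset cancellation behind the spinning-current technique listed under advanced sensing configurations can be illustrated with an idealized model: the bias contacts are rotated between phases so that the Hall voltage keeps its sign while the offset flips sign, and averaging an even number of phases removes the offset. This is a simplification; real devices leave a residual offset from contact asymmetry, nonlinearity, and stress. The signal and drift values are assumptions.

```python
def spinning_current_average(v_hall: float, v_offset: float, phases: int = 4) -> float:
    """Idealized spinning-current readout.

    Model assumption: contacts are rotated between phases so the Hall voltage
    keeps its sign while the offset flips sign from one phase to the next.
    Averaging an even number of phases then cancels the offset exactly.
    """
    if phases <= 0 or phases % 2:
        raise ValueError("phases must be a positive even number")
    samples = [v_hall + (v_offset if k % 2 == 0 else -v_offset) for k in range(phases)]
    return sum(samples) / phases


v_hall = 0.5e-3  # assumed 0.5 mV Hall signal
for t_c in (-40.0, 25.0, 125.0):
    v_off = 2e-3 + 20e-6 * (t_c - 25.0)  # hypothetical offset with 20 uV/degC drift
    single = v_hall + v_off              # one fixed current direction
    spun = spinning_current_average(v_hall, v_off)
    print(f"{t_c:6.1f} degC: single-phase {single * 1e3:.3f} mV, "
          f"spinning {spun * 1e3:.3f} mV")
```

The single-phase reading tracks the drifting offset, while the averaged reading stays at the Hall signal at every temperature in this model.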

Leading Manufacturers and Research Institutions

The market for temperature-stable, high-accuracy Hall Effect sensors is in a growth phase, with increasing demand across the automotive, industrial, and consumer electronics sectors. The competitive landscape features established players like Honeywell International, Texas Instruments, and Infineon Technologies leading with comprehensive temperature compensation solutions. Specialized manufacturers such as Allegro MicroSystems, ams-OSRAM, and Melexis are advancing the field through innovative approaches. Research institutions including CNRS and Fraunhofer-Gesellschaft contribute fundamental research, while Asian players like Asahi Kasei Microdevices and Chengdu Xinjin Electronics are gaining market share with cost-effective solutions. The technology is maturing rapidly, with developments in integrated temperature compensation circuits, advanced materials, and digital calibration techniques driving improved performance across temperature ranges.

Honeywell International Technologies Ltd.

Technical Solution: Honeywell has developed advanced temperature compensation techniques for Hall effect sensors that combine hardware and software solutions. Their approach includes temperature sensors integrated directly on the Hall effect sensor chip that continuously monitor the die temperature. The system uses these readings to apply real-time correction factors based on characterized temperature coefficients. Honeywell's proprietary algorithms implement polynomial compensation models that account for both linear and non-linear temperature effects across the entire operating range (-40°C to +150°C). Additionally, they employ chopper stabilization to reduce temperature-induced offset drift by periodically reversing the sensing current and averaging the measurements, largely canceling thermal offset errors. Their latest generation sensors incorporate ratiometric designs, in which the output signal is proportional to the supply voltage; when the reading ADC uses the same supply as its reference, supply-voltage drift (including drift caused by temperature) cancels out of the measurement.

Strengths: Comprehensive temperature compensation across wide operating ranges; integrated solution requiring minimal external components; high accuracy maintained even in harsh industrial environments. Weaknesses: Higher cost compared to basic Hall sensors; requires initial calibration during manufacturing; slightly increased power consumption due to additional temperature sensing and processing.
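
Honeywell's algorithms are proprietary, so the following is only a generic illustration of a second-order polynomial compensation model: a quadratic for sensitivity and one for offset, each passed exactly through three calibration temperatures. The function names and calibration data are hypothetical.

```python
from typing import Callable, Sequence, Tuple


def quadratic_through(points: Sequence[Tuple[float, float]]) -> Callable[[float], float]:
    """Return the unique quadratic through three (x, y) points, in Lagrange form."""
    (x0, y0), (x1, y1), (x2, y2) = points

    def f(x: float) -> float:
        return (y0 * (x - x1) * (x - x2) / ((x0 - x1) * (x0 - x2))
                + y1 * (x - x0) * (x - x2) / ((x1 - x0) * (x1 - x2))
                + y2 * (x - x0) * (x - x1) / ((x2 - x0) * (x2 - x1)))
    return f


# Hypothetical three-temperature calibration data for one device.
sens_mv_per_mt = quadratic_through([(-40.0, 5.9), (25.0, 5.0), (150.0, 3.8)])
offset_mv = quadratic_through([(-40.0, 1.6), (25.0, 2.0), (150.0, 3.1)])


def compensated_field_mt(v_out_mv: float, t_c: float) -> float:
    """Subtract the modeled offset and divide by the modeled sensitivity at t_c."""
    return (v_out_mv - offset_mv(t_c)) / sens_mv_per_mt(t_c)


# Round trip at 80 degC for a 12 mT field generated with the same model.
print(f"{compensated_field_mt(offset_mv(80.0) + sens_mv_per_mt(80.0) * 12.0, 80.0):.3f} mT")
```

A quadratic captures curvature that a linear temperature-coefficient model misses. With more than three calibration points, a least-squares fit would replace exact interpolation.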

Texas Instruments Incorporated

Technical Solution: Texas Instruments addresses Hall sensor temperature effects through their BiCMOS-based integrated solution that combines analog and digital compensation techniques. Their approach features on-chip temperature sensors strategically placed near the Hall element to detect thermal gradients. The signal processing chain includes a programmable gain amplifier with temperature-dependent gain adjustment and a high-resolution ADC (typically 16-24 bit) that digitizes both magnetic and temperature signals. TI's digital signal processor implements a multi-point calibration algorithm that applies correction coefficients stored in non-volatile memory, compensating for both offset and sensitivity drift across temperature. Their DRV5000 series specifically incorporates chopper stabilization and spinning current techniques that periodically switch the direction of current flow through the Hall element, cancelling most of the temperature-dependent offset error. For automotive applications, TI implements additional filtering to remove temperature-induced noise while maintaining fast response times.

Strengths: Excellent stability across automotive temperature ranges (-40°C to +150°C); digital calibration allows for field updates; high integration reduces system complexity. Weaknesses: Higher power consumption compared to simpler solutions; requires initial factory calibration; slightly higher latency due to digital processing.
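
The multi-point calibration described for TI is not specified in detail, so the following is only a generic sketch of how coefficients stored in non-volatile memory might be applied: a small table of (temperature, offset, gain correction) rows, linearly interpolated at the measured temperature and clamped at the ends. The table contents, the 5 mV/mT nominal sensitivity, and all names are hypothetical.

```python
from bisect import bisect_right

# Hypothetical end-of-line calibration table: (temp degC, offset mV, gain factor).
# gain = S_nominal / S(T), i.e. the factor that restores nominal sensitivity.
CAL_TABLE = [
    (-40.0, 1.60, 0.85),
    (0.0, 1.85, 0.95),
    (25.0, 2.00, 1.00),
    (85.0, 2.45, 1.12),
    (150.0, 3.10, 1.30),
]
TEMPS = [row[0] for row in CAL_TABLE]


def interpolate_cal(t_c: float) -> tuple:
    """Piecewise-linear (offset, gain) at t_c, clamped to the table ends."""
    if t_c <= TEMPS[0]:
        return CAL_TABLE[0][1], CAL_TABLE[0][2]
    if t_c >= TEMPS[-1]:
        return CAL_TABLE[-1][1], CAL_TABLE[-1][2]
    i = bisect_right(TEMPS, t_c) - 1
    (t_lo, off_lo, g_lo), (t_hi, off_hi, g_hi) = CAL_TABLE[i], CAL_TABLE[i + 1]
    w = (t_c - t_lo) / (t_hi - t_lo)
    return off_lo + w * (off_hi - off_lo), g_lo + w * (g_hi - g_lo)


def corrected_field_mt(v_out_mv: float, t_c: float,
                       nominal_sens_mv_per_mt: float = 5.0) -> float:
    offset, gain = interpolate_cal(t_c)
    return (v_out_mv - offset) * gain / nominal_sens_mv_per_mt


print(f"{corrected_field_mt(2.225 + 5.0 / 1.06 * 20.0, 55.0):.2f} mT")
```

A table keeps the run-time cost to one search and two interpolations, at the price of storing more coefficients than a polynomial model.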

Key Patents and Research in Temperature-Stable Hall Sensors

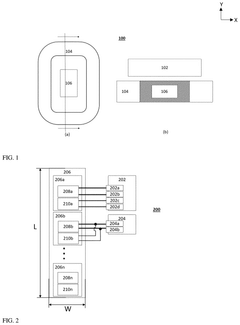

Magnetic gain correction of hall effect sensors

Patent pending: US20250237716A1

Innovation

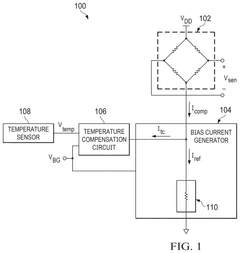

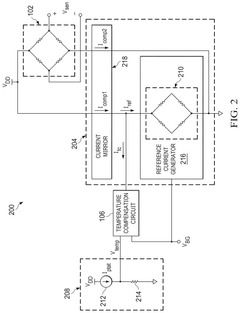

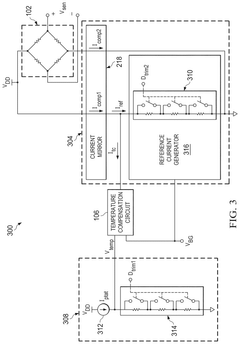

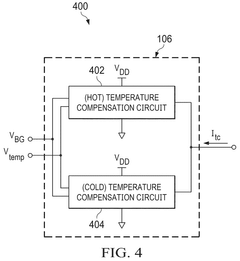

- A circuit incorporating a bias current generator, temperature compensation circuit, and Hall effect sensor, which adjusts the bias current in response to temperature changes using a temperature sensor and compensation current to cancel out variations in magnetic sensitivity and reference current.
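
The patent's circuit is not reproduced here; the sketch below only illustrates the general principle of temperature-dependent biasing. It chooses the bias current so that the product of current-related sensitivity S_I(T) and bias current stays constant. The linear drift coefficient and nominal sensitivity are assumptions.

```python
# Temperature-compensated biasing sketch: pick I_bias(T) so that
# S_I(T) * I_bias(T) stays constant. Assumed (hypothetical) linear drift of
# the current-related sensitivity S_I.
ALPHA_PER_C = 0.001          # assumed +0.1 %/degC drift of S_I
S_I0_MV_PER_MA_MT = 0.3      # assumed S_I at T0, i.e. 300 V/(A*T)
T0_C = 25.0


def current_sensitivity(t_c: float) -> float:
    """Current-related sensitivity S_I(T) in mV/(mA*mT), linear drift model."""
    return S_I0_MV_PER_MA_MT * (1.0 + ALPHA_PER_C * (t_c - T0_C))


def compensated_bias_ma(t_c: float, i0_ma: float = 1.0) -> float:
    """Bias current that cancels the modeled sensitivity drift."""
    return i0_ma * current_sensitivity(T0_C) / current_sensitivity(t_c)


def hall_output_mv(b_mt: float, t_c: float, i_bias_ma: float) -> float:
    return current_sensitivity(t_c) * i_bias_ma * b_mt


for t_c in (-40.0, 25.0, 125.0):
    fixed = hall_output_mv(10.0, t_c, 1.0)
    comp = hall_output_mv(10.0, t_c, compensated_bias_ma(t_c))
    print(f"{t_c:6.1f} degC: fixed bias {fixed:.4f} mV, compensated bias {comp:.4f} mV")
```

In hardware the same scaling is produced by a current generator steered by an on-chip temperature sensor rather than by software.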

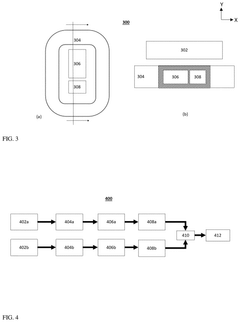

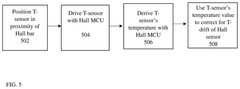

Temperature control for hall bar sensor correction

Patent pending: US20250076416A1

Innovation

- Incorporating a small temperature sensor, such as a semiconductor diode, next to the Hall sensor inside the coil, and coupling it to unused channels of the MCU controller, allows for temperature compensation by providing a temperature correction input to the Hall sensor.
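
A diode used this way is typically read through its forward voltage, which falls by roughly 2 mV/°C for silicon at a fixed bias current. The sketch below converts a spare ADC channel reading to a temperature with a linear model; the 650 mV reference voltage, the 12-bit 3.3 V ADC, and the sample reading are assumptions that would be calibrated or replaced in a real design.

```python
# Die-temperature estimate from a silicon diode's forward voltage at a fixed
# bias current: V_f(T) = V_F0 + K * (T - T0). K of about -2 mV/degC is typical
# for silicon; V_F0, K, and the ADC parameters here are assumed values.
V_F0_MV = 650.0
K_MV_PER_C = -2.0
T0_C = 25.0


def adc_to_mv(code: int, v_ref_mv: float = 3300.0, bits: int = 12) -> float:
    """Convert a reading from a spare MCU ADC channel to millivolts."""
    return code * v_ref_mv / ((1 << bits) - 1)


def diode_temperature_c(v_f_mv: float) -> float:
    """Invert the linear forward-voltage model to get temperature in degC."""
    return T0_C + (v_f_mv - V_F0_MV) / K_MV_PER_C


code = 682  # hypothetical ADC reading
v_f = adc_to_mv(code)
print(f"V_f = {v_f:.1f} mV -> T = {diode_temperature_c(v_f):.1f} degC")
```

The estimated temperature can then select or scale the correction applied to the Hall reading, for example through a linear temperature-coefficient model.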

Material Science Advancements for Hall Sensors

Material science has emerged as a critical frontier in addressing temperature-related challenges in Hall effect sensors. Recent advancements in semiconductor materials have significantly improved thermal stability characteristics, with silicon carbide (SiC) and gallium nitride (GaN) demonstrating superior performance across wider temperature ranges compared to traditional silicon-based sensors. These wide-bandgap materials exhibit reduced carrier generation at elevated temperatures, thereby minimizing thermal drift effects that typically compromise measurement accuracy.
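
The bandgap argument can be checked to order of magnitude with the intrinsic carrier density n_i ≈ sqrt(Nc·Nv)·(T/300)^1.5·exp(-Eg/(2kT)). The sketch uses a common prefactor of 10^19 cm^-3 for every material and ignores the temperature dependence of Eg, so only the exponential trend is meaningful; the 10^16 cm^-3 doping level is also an assumption.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K

# Room-temperature band gaps in eV; their temperature dependence is ignored.
BANDGAPS_EV = {"Si": 1.12, "4H-SiC": 3.26, "GaN": 3.4}


def intrinsic_density_cm3(eg_ev: float, t_k: float, prefactor_cm3: float = 1e19) -> float:
    """Order-of-magnitude n_i = sqrt(Nc*Nv) * (T/300)**1.5 * exp(-Eg / (2 k T)).

    prefactor_cm3 stands in for sqrt(Nc*Nv) at 300 K; real values differ by
    material, so only the exponential trend is meaningful here.
    """
    return prefactor_cm3 * (t_k / 300.0) ** 1.5 * math.exp(-eg_ev / (2.0 * K_B_EV * t_k))


doping_cm3 = 1e16  # assumed donor concentration of the Hall plate
for name, eg in BANDGAPS_EV.items():
    n_i = intrinsic_density_cm3(eg, 423.15)  # 150 degC
    print(f"{name:7s} n_i(150 degC) ~ {n_i:.1e} cm^-3, n_i/N_D ~ {n_i / doping_cm3:.1e}")
```

Even silicon stays extrinsic at 150 °C at this doping, but its intrinsic density is many orders of magnitude higher than in SiC or GaN, leaving less margin as temperatures rise further.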

Composite materials incorporating temperature-compensating elements represent another promising development. By strategically combining materials with opposing temperature coefficients, researchers have created sensor structures that achieve intrinsic temperature compensation. For instance, the integration of manganese-doped gallium arsenide (GaMnAs) with conventional Hall elements has demonstrated a reduction in temperature sensitivity by up to 75% in laboratory settings.
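
The principle of combining opposing temperature coefficients can be shown with a first-order model: two sensing contributions S1 and S2 with linear coefficients of opposite sign, summed with a weight w chosen so that the linear drift terms cancel. This is a generic illustration, not a model of the GaMnAs structures cited above, and all values are hypothetical.

```python
def nulling_weight(s1: float, alpha1: float, s2: float, alpha2: float) -> float:
    """Weight w for S = w*S1 + (1 - w)*S2 that cancels the first-order TC.

    Solves w*s1*alpha1 + (1 - w)*s2*alpha2 = 0; alpha1 and alpha2 must have
    opposite signs, otherwise no weight in [0, 1] exists.
    """
    if alpha1 * alpha2 >= 0:
        raise ValueError("temperature coefficients must have opposite signs")
    return s2 * alpha2 / (s2 * alpha2 - s1 * alpha1)


def combined_sensitivity(w, s1, alpha1, s2, alpha2, dt):
    """Weighted sum of two linearly drifting sensitivities at dt from T0."""
    return w * s1 * (1.0 + alpha1 * dt) + (1.0 - w) * s2 * (1.0 + alpha2 * dt)


params = dict(s1=1.0, alpha1=-0.003, s2=0.5, alpha2=0.001)  # hypothetical
w = nulling_weight(**params)
for dt in (-65.0, 0.0, 100.0):
    print(f"dT = {dt:+.0f} degC: S = {combined_sensitivity(w, dt=dt, **params):.4f}")
```

In this example the combined sensitivity is flat but only about 0.57 of the first element's, a typical cost of cancellation. Only the linear term is nulled, so curvature in real material coefficients would remain.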

Thin-film technology has revolutionized Hall sensor fabrication processes, enabling precise control over material properties at the nanoscale. Multi-layered structures utilizing alternating films of different materials with complementary thermal characteristics have shown remarkable stability across industrial temperature ranges (-40°C to 125°C). These engineered material stacks effectively neutralize temperature-induced variations through their balanced thermal response mechanisms.

Nanomaterials research has yielded particularly exciting results, with graphene-based Hall sensors exhibiting extraordinary temperature stability due to graphene's unique electronic properties. The two-dimensional carbon structure maintains consistent carrier mobility across a broad temperature spectrum, resulting in measurement variations below 0.1% per degree Celsius, a significant improvement over conventional sensors that typically experience drift rates of 0.3-0.5% per degree.

Advanced doping techniques have also contributed substantially to temperature stability improvements. Precisely controlled introduction of specific impurities can create compensation effects that counteract temperature-induced carrier concentration changes. For example, double-doped silicon structures utilizing both boron and phosphorus have demonstrated self-compensating characteristics that maintain stable sensitivity across automotive temperature ranges.

Metamaterials engineered specifically for Hall effect applications represent the cutting edge of material science in this domain. These artificially structured materials with properties not found in nature can be designed with near-zero temperature coefficients for critical electrical parameters. Early prototypes have shown promise in maintaining consistent Hall voltage outputs across temperature variations exceeding 100°C, potentially eliminating the need for complex compensation algorithms in next-generation sensing systems.

Composite materials incorporating temperature-compensating elements represent another promising development. By strategically combining materials with opposing temperature coefficients, researchers have created sensor structures that achieve intrinsic temperature compensation. For instance, the integration of manganese-doped gallium arsenide (GaMnAs) with conventional Hall elements has demonstrated a reduction in temperature sensitivity by up to 75% in laboratory settings.

Thin-film technology has revolutionized Hall sensor fabrication processes, enabling precise control over material properties at the nanoscale. Multi-layered structures utilizing alternating films of different materials with complementary thermal characteristics have shown remarkable stability across industrial temperature ranges (-40°C to 125°C). These engineered material stacks effectively neutralize temperature-induced variations through their balanced thermal response mechanisms.

Nanomaterials research has yielded particularly exciting results, with graphene-based Hall sensors exhibiting extraordinary temperature stability due to graphene's unique electronic properties. The two-dimensional carbon structure maintains consistent carrier mobility across a broad temperature spectrum, resulting in measurement variations below 0.1% per degree Celsius—a significant improvement over conventional sensors that typically experience drift rates of 0.3-0.5% per degree.

Advanced doping techniques have also contributed substantially to temperature stability improvements. Precisely controlled introduction of specific impurities can create compensation effects that counteract temperature-induced carrier concentration changes. For example, double-doped silicon structures utilizing both boron and phosphorus have demonstrated self-compensating characteristics that maintain stable sensitivity across automotive temperature ranges.

Industry Standards and Testing Protocols

Testing and performance evaluation of Hall effect sensors draw on several industry standards that bear on temperature behavior and accuracy. IEC 60068-2-14 (Test N: change of temperature) defines temperature cycling procedures for electronic components and provides a framework for evaluating Hall sensor stability under temperature variation. IEEE 1451.4 defines a mixed-mode smart transducer interface whose Transducer Electronic Data Sheet (TEDS) can carry calibration data, which supports temperature compensation of sensors in industrial applications.
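A temperature cycling test is, at its core, a chamber setpoint profile of ramps and dwells. The sketch below generates the breakpoints of a simple two-level cycle. The function name and all parameter values are hypothetical; the actual limits, dwell times, ramp rates, and cycle counts must come from the applicable test specification, not from this example.

```python
# Sketch: breakpoints of a simple two-level temperature cycling profile.
# Assumption: linear ramps at a constant rate, dwells at each extreme.

def cycling_profile(t_low, t_high, ramp_c_per_min, dwell_min, cycles,
                    t_start=25.0):
    """Return [(time_min, setpoint_c), ...]: ramp to t_low, dwell,
    ramp to t_high, dwell, repeat `cycles` times, then return to t_start."""
    if ramp_c_per_min <= 0 or t_low >= t_high or cycles < 1:
        raise ValueError("invalid profile parameters")
    points = [(0.0, float(t_start))]

    def ramp_and_hold(target, hold_min):
        t_now, temp_now = points[-1]
        t_end = t_now + abs(target - temp_now) / ramp_c_per_min
        points.append((t_end, float(target)))
        if hold_min > 0:
            points.append((t_end + hold_min, float(target)))

    for _ in range(cycles):
        ramp_and_hold(t_low, dwell_min)
        ramp_and_hold(t_high, dwell_min)
    ramp_and_hold(t_start, 0.0)  # return to start, no final dwell
    return points


if __name__ == "__main__":
    # Hypothetical: -40/+150 °C, 5 °C/min ramps, 30 min dwells, one cycle.
    for t, temp in cycling_profile(-40, 150, 5.0, 30, 1):
        print(f"{t:6.1f} min  {temp:6.1f} °C")
```

With these hypothetical inputs, one cycle takes 136 minutes from start back to 25°C. The breakpoint list can be fed to a chamber controller or used to timestamp sensor readings against setpoints.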

For automotive applications, AEC-Q100 (particularly Grade 0) covers an ambient operating range of -40°C to +150°C, and Hall effect sensors qualified to it must maintain their specified accuracy throughout this range. The qualification flow includes stress tests such as temperature cycling and high-temperature operating life, with electrical parameters checked at temperature before and after stress, which exposes temperature-coefficient behavior and stress-induced drift. ISO 26262 addresses functional safety: in safety-critical automotive systems, failure modes such as temperature-induced drift must be analyzed, and their mitigations, including temperature compensation, must be implemented and validated.
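Temperature coefficient characterization usually comes down to fitting sensitivity against temperature. The sketch below is an ordinary least-squares fit of the first-order model S(T) = S0·(1 + α·(T - T_ref)) plus a simple nonlinearity figure. It is an illustrative method, not a procedure prescribed by AEC-Q100; the function names and the 25°C reference are assumptions.

```python
# Sketch: first-order temperature coefficient from sensitivity data.

def fit_temperature_coefficient(temps_c, sens, t_ref=25.0):
    """Least-squares fit of S(T) = S0 * (1 + alpha * (T - t_ref)).

    temps_c: test temperatures in °C; sens: sensitivities (any unit).
    Returns (S0, alpha) with alpha in 1/°C.
    """
    n = len(temps_c)
    if n < 2 or n != len(sens):
        raise ValueError("need at least two paired points")
    x = [t - t_ref for t in temps_c]
    mx, my = sum(x) / n, sum(sens) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("temperatures must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, sens))
    slope = sxy / sxx              # dS/dT
    s0 = my - slope * mx           # fitted S at t_ref
    return s0, slope / s0          # alpha = (dS/dT) / S0


def max_nonlinearity_percent(temps_c, sens, t_ref=25.0):
    """Largest deviation from the linear fit, as a percent of S0."""
    s0, alpha = fit_temperature_coefficient(temps_c, sens, t_ref)
    return max(abs(s - s0 * (1 + alpha * (t - t_ref)))
               for t, s in zip(temps_c, sens)) / abs(s0) * 100.0
```

Usage, with synthetic (not measured) points generated from S0 = 50 and α = -500 ppm/°C at four temperatures spanning the Grade 0 range:

```python
temps = [-40.0, 25.0, 85.0, 150.0]
sens = [50.0 * (1 - 500e-6 * (t - 25.0)) for t in temps]
s0, alpha = fit_temperature_coefficient(temps, sens)
print(f"S0 = {s0:.3f}, alpha = {alpha * 1e6:.1f} ppm/°C")
```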

Testing protocols typically place sensors in a temperature chamber and ramp the temperature under control while monitoring their output continuously. A common approach sweeps temperature at 2-5°C per minute to reveal hysteresis effects and thermal response characteristics. When thermocouples serve as the temperature reference during Hall effect sensor testing, ASTM E230, which specifies emf-temperature tables and tolerances for standardized thermocouples, provides the basis for that reference measurement.
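Hysteresis from an up-and-down temperature sweep can be quantified by comparing readings taken at the same setpoints on the rising and falling ramps. The sketch below reports the largest difference and where it occurs. The dictionary-based input format and the sample readings are hypothetical.

```python
# Sketch: thermal hysteresis from rising and falling temperature sweeps.

def thermal_hysteresis(up_sweep, down_sweep):
    """Largest |up - down| at setpoints visited in both sweeps.

    up_sweep, down_sweep: dicts mapping setpoint (°C) -> sensor reading
    taken at that setpoint on the rising and falling ramps.
    Returns (max_abs_difference, setpoint).
    """
    common = sorted(set(up_sweep) & set(down_sweep))
    if not common:
        raise ValueError("sweeps share no setpoints")
    worst_t = max(common, key=lambda t: abs(up_sweep[t] - down_sweep[t]))
    return abs(up_sweep[worst_t] - down_sweep[worst_t]), worst_t


if __name__ == "__main__":
    # Hypothetical normalized readings, not measured data.
    up = {-40.0: 1.000, 25.0: 1.020, 150.0: 1.050}
    down = {150.0: 1.050, 25.0: 1.035, -40.0: 1.005}
    h, t = thermal_hysteresis(up, down)
    print(f"max hysteresis {h:.3f} at {t:.0f} °C")
```

Repeating the sweep at two ramp rates within the 2-5°C per minute window can help separate causes: a difference that shrinks at the slower rate points to thermal lag between chamber and die, while one that persists suggests genuine hysteresis in the sensor or package.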

For industrial automation applications, IEC 61000-4-2 (electrostatic discharge immunity) and IEC 61000-4-3 (radiated radio-frequency electromagnetic field immunity) define electromagnetic compatibility test methods. They are not temperature standards, so confirming that Hall sensors keep their accuracy under electromagnetic interference at various operating temperatures is a decision for the test plan. The immunity levels to be met are typically set by the applicable product or generic standard and can then be verified across the entire operating temperature range.

Quality management frameworks such as IATF 16949 for automotive suppliers require statistical process control of production characteristics, which for Hall sensors commonly include temperature-related drift. In practice this means validating the temperature compensation and recording temperature coefficient data for each production batch, so that manufacturers can demonstrate compliance through documented test results across the specified temperature ranges.
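As one illustration of statistical process control on batch-level temperature coefficient data, the sketch below computes Shewhart individuals (I-MR) chart limits and flags out-of-control batches. The choice of an I-MR chart is an assumption of this example, not something IATF 16949 prescribes; the constant 2.66 is 3/d2 with d2 = 1.128 for moving ranges of two points. The batch values are hypothetical.

```python
# Sketch: individuals (I-MR) control chart for per-batch TC values.

def individuals_chart_limits(values):
    """Return (LCL, center, UCL) estimated from the average moving range."""
    if len(values) < 2:
        raise ValueError("need at least two values")
    center = sum(values) / len(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = sum(moving_ranges) / len(moving_ranges)
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar


def flag_out_of_control(values, limits):
    """Indices of values outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


if __name__ == "__main__":
    # Hypothetical baseline batch TCs in ppm/°C, then new batches to check.
    baseline = [100.0, 102.0, 98.0, 101.0, 99.0]
    limits = individuals_chart_limits(baseline)
    print("limits:", [round(x, 3) for x in limits])
    print("flagged:", flag_out_of_control([100.0, 120.0, 95.0], limits))
```

Limits are computed once from a stable baseline and then applied to new batches. Recomputing them from data that includes a drifting batch would widen the limits and hide the drift.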

Recent standardization work includes emerging IEC standards for Industry 4.0 applications that address digital compensation and self-calibrating sensor networks. These newer protocols emphasize real-time temperature compensation and remote monitoring, reflecting the industry's shift from traditional analog methods toward more sophisticated digital compensation.
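Real-time digital compensation is often done by correcting offset and sensitivity with polynomials in the die temperature. The sketch below shows a second-order version. The coefficient structure, names, and values are hypothetical; in a real device the coefficients would come from end-of-line calibration and the temperature from an on-chip sensor.

```python
from dataclasses import dataclass

# Sketch: second-order offset and sensitivity correction.
# Assumed model: V_out = offset(T) + S(T) * B, with
# offset(T) and S(T) quadratic in dT = T - t_ref.


@dataclass
class CompensationCoeffs:
    t_ref: float                              # calibration reference, °C
    offset: tuple[float, float, float]        # mV: o0 + o1*dT + o2*dT^2
    sens: tuple[float, float, float]          # mV/mT: s0 + s1*dT + s2*dT^2


def poly2(c: tuple[float, float, float], dt: float) -> float:
    """Evaluate c0 + c1*dt + c2*dt^2."""
    return c[0] + c[1] * dt + c[2] * dt * dt


def compensate(v_out_mv: float, temp_c: float, k: CompensationCoeffs) -> float:
    """Field estimate in mT from raw output and die temperature."""
    dt = temp_c - k.t_ref
    return (v_out_mv - poly2(k.offset, dt)) / poly2(k.sens, dt)


if __name__ == "__main__":
    k = CompensationCoeffs(t_ref=25.0,
                           offset=(0.5, 0.002, 1e-5),
                           sens=(5.0, -0.0015, 2e-6))
    # Simulate the raw output for 10 mT at 100 °C using the same model.
    dt = 100.0 - k.t_ref
    v = poly2(k.offset, dt) + 10.0 * poly2(k.sens, dt)
    print(f"compensated field: {compensate(v, 100.0, k):.4f} mT")
```

Because the correction is just two short polynomials, it is cheap enough to run on every sample in sensor firmware, and the coefficients can be updated remotely if recalibration is needed.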
