In-Memory Computing For Financial Modeling And Risk Simulations

SEP 2, 2025 · 9 MIN READ

In-Memory Computing Evolution and Objectives

In-memory computing has evolved significantly over the past two decades, transforming from a niche technology into a critical component of high-performance computing infrastructure. The evolution began in the early 2000s with basic in-memory database systems primarily focused on improving query response times. By 2010, advancements in hardware technology, particularly the decreasing cost of RAM and the development of multi-core processors, accelerated the adoption of in-memory computing solutions across various industries.

The financial services sector recognized the potential of in-memory computing around 2012-2015, when institutions began implementing these systems for real-time analytics and risk calculations. This shift was largely driven by post-2008 financial crisis regulations that demanded more sophisticated and timely risk assessments. The technology's ability to process vast datasets without the latency associated with disk-based systems made it particularly valuable for complex financial modeling scenarios.

Recent developments have seen in-memory computing platforms evolve to incorporate distributed computing architectures, allowing for horizontal scalability across server clusters. This has been complemented by advancements in data compression techniques that optimize memory usage while maintaining computational efficiency. Additionally, the integration of specialized hardware accelerators such as GPUs and FPGAs has further enhanced performance for specific financial algorithms.

The primary objective of in-memory computing in financial modeling and risk simulations is to enable real-time or near-real-time analysis of complex financial scenarios. This includes Monte Carlo simulations, Value at Risk (VaR) calculations, and stress testing that traditionally required hours or days to complete. By reducing these computation times to minutes or seconds, financial institutions can make more informed decisions and respond more rapidly to market changes.
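
To make the workload concrete, here is a minimal sketch of a Monte Carlo VaR estimate in which every simulated scenario stays in memory. The function name `monte_carlo_var`, the two-asset portfolio, the volatilities, and the assumption of independent, zero-mean normal returns are all illustrative and do not come from any particular vendor system.

```python
import random

def monte_carlo_var(positions, vols, n_scenarios=50_000, confidence=0.99, seed=0):
    """Estimate one-day VaR from simulated P&L held entirely in a Python list.

    positions: market value per asset; vols: daily return volatility per asset.
    Assumes independent, zero-mean normal returns (an illustrative simplification).
    """
    rng = random.Random(seed)
    pnl = []
    for _ in range(n_scenarios):
        # One scenario: draw a return per asset and revalue the portfolio.
        pnl.append(sum(p * rng.gauss(0.0, v) for p, v in zip(positions, vols)))
    pnl.sort()
    # VaR is the loss at the (1 - confidence) quantile of the P&L distribution.
    index = int((1.0 - confidence) * n_scenarios)
    return -pnl[index]

# Hypothetical portfolio: two positions with 1% and 2% daily volatility.
var_99 = monte_carlo_var(positions=[1_000_000, 500_000], vols=[0.01, 0.02])
```

Under these assumptions the closed-form 99% VaR is about 2.326 × 14,142 ≈ 32,900, so the simulated figure should land close to that. A production engine would replace the normal draws with full instrument revaluation, which is where the hours-long runtimes come from.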

Another key objective is to support the increasing regulatory requirements for comprehensive risk assessment. Regulations such as FRTB (Fundamental Review of the Trading Book) and BCBS 239 demand granular risk calculations across multiple scenarios, which are computationally intensive and time-sensitive. In-memory computing provides the necessary performance to meet these requirements within operational timeframes.

Looking forward, the technology aims to facilitate more sophisticated risk models that incorporate machine learning algorithms and alternative data sources. The goal is to move beyond traditional statistical models to develop more predictive risk assessment capabilities that can identify emerging threats before they materialize into significant market events.

Financial Market Demand for High-Performance Computing

The financial services industry has witnessed an exponential growth in computational demands over the past decade, driven primarily by increasingly complex financial models, regulatory requirements for more comprehensive risk assessments, and the competitive advantage gained through faster analytics. Traditional computing architectures have reached their performance limits when processing the massive datasets and complex calculations required for modern financial operations.

High-frequency trading firms require microsecond-level response times to execute profitable trades, creating demand for systems that can process market data and execute trading algorithms with minimal latency. Even milliseconds of delay can translate to millions in lost opportunities. According to industry analyses, trading firms investing in high-performance computing infrastructure have demonstrated 15-30% performance advantages over competitors using conventional systems.

Risk management departments in major financial institutions now run Monte Carlo simulations with millions of scenarios daily, requiring teraflops of computing power. The 2008 financial crisis highlighted the inadequacy of existing risk models and computing infrastructure, prompting financial institutions to invest heavily in advanced computing capabilities. Post-crisis regulations like Basel III and FRTB (Fundamental Review of the Trading Book) have mandated more stringent stress testing and risk calculations, further driving demand for computational power.

Asset management firms increasingly employ quantitative strategies requiring real-time analysis of vast market datasets. The rise of algorithmic trading, which now accounts for over 70% of U.S. equity trading volume, has created sustained demand for high-performance computing solutions that can process market signals and execute trades with minimal latency.

Financial institutions are also exploring AI and machine learning applications for fraud detection, credit scoring, and investment strategies, all of which require significant computational resources. These applications often process petabytes of historical and real-time data to identify patterns and make predictions, necessitating computing architectures that can handle both the volume and velocity of financial data.

Cloud computing has emerged as a flexible solution for financial institutions seeking scalable computing resources without massive capital investments. However, concerns about data security, regulatory compliance, and consistent performance have led many institutions to maintain hybrid infrastructures combining on-premises high-performance computing clusters with cloud resources for peak demand periods.

The market for financial high-performance computing solutions continues to grow at approximately 9% annually, reflecting the industry's ongoing digital transformation and increasing reliance on data-driven decision making across all operational areas.

Current In-Memory Technologies and Limitations

In-memory computing has revolutionized financial modeling and risk simulations by dramatically reducing data access latency. Current technologies primarily include DRAM-based solutions, Non-Volatile Memory (NVM), and hybrid architectures that combine different memory types. DRAM-based in-memory databases like SAP HANA, Oracle TimesTen, and Redis have become industry standards, offering microsecond-level data access compared to milliseconds for disk-based systems—a critical advantage for real-time financial analytics.

Distributed in-memory data grids such as Apache Ignite and Hazelcast have gained prominence for their ability to scale horizontally across server clusters, enabling financial institutions to process massive datasets for complex risk simulations. These platforms provide essential features like data partitioning, replication, and fault tolerance that are crucial for mission-critical financial applications.
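
The partitioning and replication ideas can be sketched in a few lines. This is a toy model, not the Apache Ignite or Hazelcast API: the class `PartitionedGrid`, the node names, and the "next node holds the backup" placement rule are assumptions made for illustration.

```python
import hashlib

class PartitionedGrid:
    """Toy in-memory grid: keys are hash-partitioned, each with backup copies."""

    def __init__(self, node_names, backups=1):
        self.node_names = list(node_names)
        self.backups = backups
        # One dict per node stands in for that node's RAM.
        self.nodes = {name: {} for name in self.node_names}

    def owners(self, key):
        # Stable hash so every client maps a key to the same primary node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        primary = int.from_bytes(digest[:8], "big") % len(self.node_names)
        count = min(self.backups + 1, len(self.node_names))
        return [self.node_names[(primary + i) % len(self.node_names)]
                for i in range(count)]

    def put(self, key, value):
        # Write to the primary and to each backup owner.
        for name in self.owners(key):
            self.nodes[name][key] = value

    def get(self, key, failed=()):
        # Read from the first owner that has not failed.
        for name in self.owners(key):
            if name not in failed:
                return self.nodes[name][key]
        raise KeyError(key)

grid = PartitionedGrid(["node-a", "node-b", "node-c"])
grid.put("trade:1001", {"notional": 5_000_000, "desk": "rates"})
primary = grid.owners("trade:1001")[0]
survivor_copy = grid.get("trade:1001", failed={primary})
```

Because the backup sits on a different node, the read still succeeds when the primary is marked as failed. Real grids add rebalancing, consistency protocols, and network transport on top of this basic placement logic.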

Despite these advancements, current in-memory technologies face significant limitations. Memory volatility remains a primary concern, as DRAM-based solutions lose data during power failures, necessitating complex persistence mechanisms that can impact performance. This creates a challenging trade-off between data durability and processing speed that financial institutions must carefully navigate.
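
One common persistence mechanism is an append-only log that is written before the in-memory update. The sketch below is a simplified illustration, not any product's implementation. The class `DurableStore`, the JSON line format, and the file name are hypothetical.

```python
import json
import os
import tempfile

class DurableStore:
    """Dict held in RAM, with every write appended to a log file first."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()
        self.log = open(log_path, "a", encoding="utf-8")

    def _replay(self):
        # Rebuild the in-memory state after a restart by replaying the log.
        if os.path.exists(self.log_path):
            with open(self.log_path, encoding="utf-8") as f:
                for line in f:
                    record = json.loads(line)
                    self.data[record["key"]] = record["value"]

    def put(self, key, value, sync=True):
        self.log.write(json.dumps({"key": key, "value": value}) + "\n")
        self.log.flush()
        if sync:
            # fsync is the durability cost: it waits for the storage device.
            os.fsync(self.log.fileno())
        self.data[key] = value

    def close(self):
        self.log.close()

log_path = os.path.join(tempfile.mkdtemp(), "positions.log")
store = DurableStore(log_path)
store.put("AAPL", 1200)
store.put("AAPL", 900)
store.close()

recovered = DurableStore(log_path)  # simulates a restart after power loss
recovered_position = recovered.data["AAPL"]
recovered.close()
```

The `sync` flag is the trade-off in miniature: with it, each write waits on storage, and without it, writes are faster but the most recent ones can be lost in a crash.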

Cost constraints represent another major limitation. In-memory solutions require substantial hardware investments, with high-capacity server memory configurations costing significantly more than equivalent disk storage. This economic barrier often forces organizations to be selective about which data sets they maintain in memory, potentially limiting the scope of real-time risk analysis.

Scalability challenges emerge as data volumes grow exponentially. While distributed architectures help address this issue, they introduce complexity in data consistency, synchronization, and network latency. Financial models requiring atomic transactions across distributed memory nodes face particular difficulties maintaining both performance and accuracy.

Memory capacity limitations also restrict the size of financial models that can be processed entirely in memory. Although 64-bit architectures theoretically support vast memory spaces, practical server configurations typically cap at several terabytes, insufficient for the largest risk simulation scenarios that may require petabytes of data.
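
A rough sizing calculation shows how quickly a simulation outgrows a single server. The book size, scenario count, time steps, and the 4 TiB server capacity below are hypothetical inputs chosen for illustration.

```python
def scenario_matrix_bytes(n_positions, n_scenarios, n_time_steps=1, bytes_per_value=8):
    """Bytes needed to hold one value per position, scenario, and time step."""
    return n_positions * n_scenarios * n_time_steps * bytes_per_value

TIB = 1024 ** 4

# Hypothetical book: 1 million positions, 10,000 scenarios, 100 time steps, float64.
required = scenario_matrix_bytes(1_000_000, 10_000, 100)
required_tib = required / TIB
fits_in_4_tib_server = required <= 4 * TIB
```

With these inputs the full result cube needs 8 × 10^12 bytes, roughly 7.3 TiB, so it does not fit on an assumed 4 TiB server and must be partitioned, aggregated on the fly, or stored at lower precision.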

Security vulnerabilities present additional concerns, as in-memory data is potentially more exposed to certain attack vectors than encrypted disk storage. Cold boot attacks and memory scraping techniques can potentially extract sensitive financial data from memory, requiring additional security measures that may impact performance.

Existing In-Memory Architectures for Risk Analysis

01 Memory architecture for in-memory computing

In-memory computing architectures involve specialized memory designs that enable data processing directly within memory units rather than transferring data to the CPU. These architectures include non-volatile memory technologies, memory arrays with computational capabilities, and hybrid memory systems that optimize for both storage and processing. By processing data where it resides, these systems significantly reduce data movement bottlenecks and improve computational efficiency for data-intensive applications.

- Memory architecture for in-memory computing: In-memory computing architectures involve specialized memory designs that enable data processing directly within memory units rather than transferring data to the CPU. These architectures include processing-in-memory (PIM) technologies, memory-centric computing structures, and hybrid memory systems that combine different memory types (DRAM, SRAM, non-volatile memory) to optimize performance. By reducing data movement between memory and processing units, these architectures significantly decrease latency and power consumption while increasing computational throughput for data-intensive applications.

- In-memory database management systems: In-memory database management systems store and process data entirely in main memory rather than on disk storage. These systems implement specialized data structures, indexing methods, and query processing techniques optimized for in-memory operations. They feature real-time data processing capabilities, advanced caching mechanisms, and memory-optimized transaction management. Such systems significantly improve query performance and data access speeds for applications requiring rapid data analysis and transaction processing.

- Neural network acceleration using in-memory computing: In-memory computing provides efficient acceleration for neural network operations by performing matrix multiplications and other computations directly within memory arrays. This approach uses analog or digital computing elements integrated with memory cells to execute parallel operations for deep learning algorithms. Memory arrays can be configured to perform multiply-accumulate operations critical for neural network inference and training, significantly reducing energy consumption and improving throughput compared to conventional computing architectures.

- Security and fault tolerance in in-memory computing: Security and fault tolerance mechanisms for in-memory computing systems include data encryption techniques, secure memory partitioning, error detection and correction codes, and memory state preservation methods. These systems implement memory protection schemes to isolate sensitive data, checkpoint mechanisms to recover from failures, and redundancy techniques to ensure data integrity. Advanced security protocols protect against side-channel attacks and unauthorized access while maintaining the performance benefits of in-memory processing.

- Memory management and optimization techniques: Memory management and optimization techniques for in-memory computing include dynamic memory allocation, garbage collection, memory compression, and intelligent data placement strategies. These approaches optimize memory utilization through techniques such as data tiering, memory pooling, and workload-aware memory distribution. Advanced memory management systems implement predictive algorithms to anticipate data access patterns, reduce memory fragmentation, and balance computational loads across distributed memory resources, enhancing overall system efficiency and performance.

02 Processing-in-memory techniques

Processing-in-memory techniques involve implementing computational logic directly within memory arrays to perform operations on data without moving it to external processing units. These techniques include analog computing within memory cells, logic operations performed at the bit-line or word-line level, and specialized memory cells designed to support both storage and computation. Such approaches significantly reduce energy consumption and latency by minimizing data movement across the memory hierarchy.

03 In-memory computing for AI and machine learning

In-memory computing solutions specifically designed for artificial intelligence and machine learning workloads enable efficient neural network processing by performing matrix operations directly within memory. These implementations support parallel vector operations, weight storage with computational capabilities, and optimized data flow for neural network inference and training. By aligning the memory architecture with the computational patterns of AI algorithms, these systems achieve higher throughput and energy efficiency.

04 System-level integration for in-memory computing

System-level integration approaches for in-memory computing focus on how processing-in-memory components interact with the broader computing ecosystem. These include memory controllers designed for computational memory, software frameworks that can leverage in-memory processing capabilities, and system architectures that balance traditional computing with in-memory acceleration. Such integrations enable seamless adoption of in-memory computing while maintaining compatibility with existing software stacks.

05 Security and reliability in in-memory computing

Security and reliability mechanisms for in-memory computing address the unique challenges of systems where computation and data storage are tightly coupled. These include error detection and correction techniques for computational memory, secure execution environments for in-memory processing, and fault tolerance approaches that maintain data integrity during computation. Such mechanisms ensure that in-memory computing systems can be deployed in production environments with appropriate safeguards against data corruption and unauthorized access.

Leading Vendors in Financial In-Memory Solutions

In-Memory Computing for financial modeling and risk simulations is currently in a growth phase, with the market expanding rapidly due to increasing demands for real-time analytics in financial services. The global market size is projected to reach significant scale as financial institutions seek faster processing capabilities for complex risk calculations. Technology maturity varies across players, with established companies like Huawei, Oracle, and Samsung Electronics leading innovation through advanced hardware-software integration. Financial institutions such as ICBC and Huatai Securities are driving adoption through practical implementations, while research institutions like Fudan University and Duke University contribute to theoretical advancements. Specialized players including Taiwan Semiconductor and Macronix are developing optimized memory solutions specifically for financial workloads, creating a competitive ecosystem that balances innovation from technology providers with practical implementation requirements from financial institutions.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed a comprehensive in-memory computing architecture specifically for financial modeling and risk simulations. Their solution integrates FPGA-based hardware accelerators with their proprietary Kunpeng processors to create a high-throughput computing platform. The architecture employs a distributed memory model where financial data is stored directly in high-speed memory modules, eliminating traditional I/O bottlenecks. Huawei's implementation utilizes specialized memory-centric algorithms that perform calculations directly within memory units, reducing data movement and significantly accelerating complex financial calculations. Their system supports parallel processing of risk simulations, allowing financial institutions to run thousands of Monte Carlo simulations simultaneously for real-time risk assessment. The platform includes dedicated hardware for specific financial operations such as options pricing, Value-at-Risk calculations, and stress testing scenarios, with reported performance improvements of up to 10x compared to traditional computing architectures.

Strengths: Exceptional performance for complex financial calculations with significant reduction in latency; seamless integration with existing financial systems; strong security features for sensitive financial data. Weaknesses: Higher initial implementation costs compared to traditional systems; requires specialized expertise for optimization; potential vendor lock-in with proprietary hardware components.

Hitachi Ltd.

Technical Solution: Hitachi has pioneered an advanced in-memory computing solution tailored for financial risk management and modeling. Their architecture leverages persistent memory technologies combined with specialized hardware accelerators to create a unified memory-compute environment. Hitachi's system employs a hybrid approach that combines DRAM, Storage Class Memory (SCM), and flash storage in a tiered memory hierarchy optimized for financial workloads. The platform features real-time data processing capabilities that enable financial institutions to perform complex risk calculations with sub-millisecond latency. Hitachi's solution includes proprietary algorithms that decompose large financial models into smaller components that can be processed in parallel within memory, significantly reducing computation time for risk simulations. The system supports dynamic resource allocation, automatically adjusting memory and computing resources based on workload demands, which is particularly valuable for handling market volatility scenarios that require sudden increases in computational capacity.

Strengths: Highly scalable architecture that can handle growing datasets and increasingly complex models; excellent reliability with built-in redundancy features; comprehensive support for regulatory compliance requirements. Weaknesses: Complex implementation process requiring significant system integration; higher power consumption compared to traditional computing approaches; requires specialized training for IT staff.

Key Patents in Financial In-Memory Computing

In-memory calculation method, system and device based on 1T and storage medium

Patent (pending): CN119513031A

Innovation

- Weight mapping and storage are completed by designing the width-length ratio of the transistor, and the input signal is converted into an input voltage using a digital-to-analog converter. The input voltage is used to determine the leakage current of the transistor, and finally the result of matrix multiplication and addition is calculated through the leakage current.

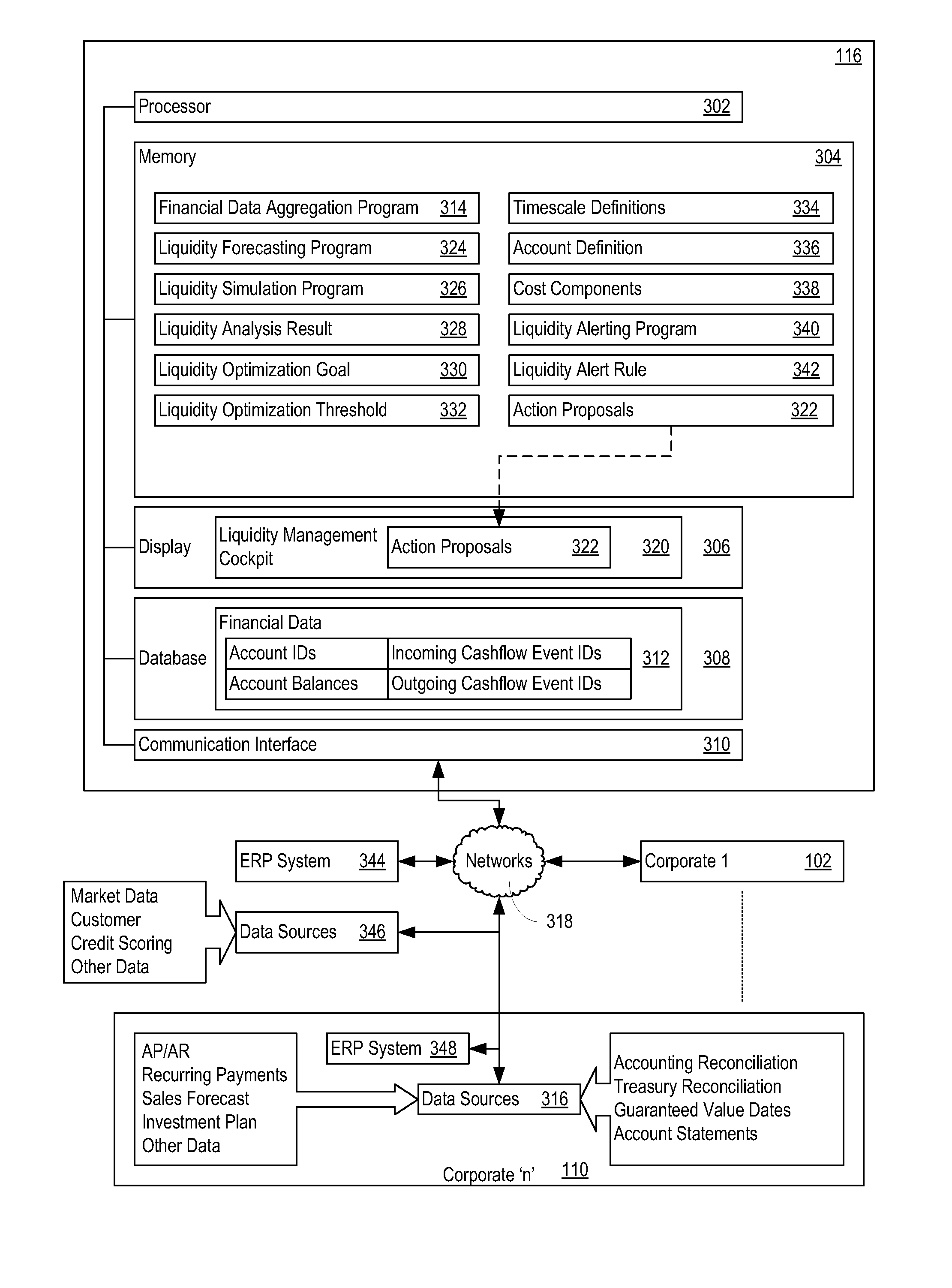

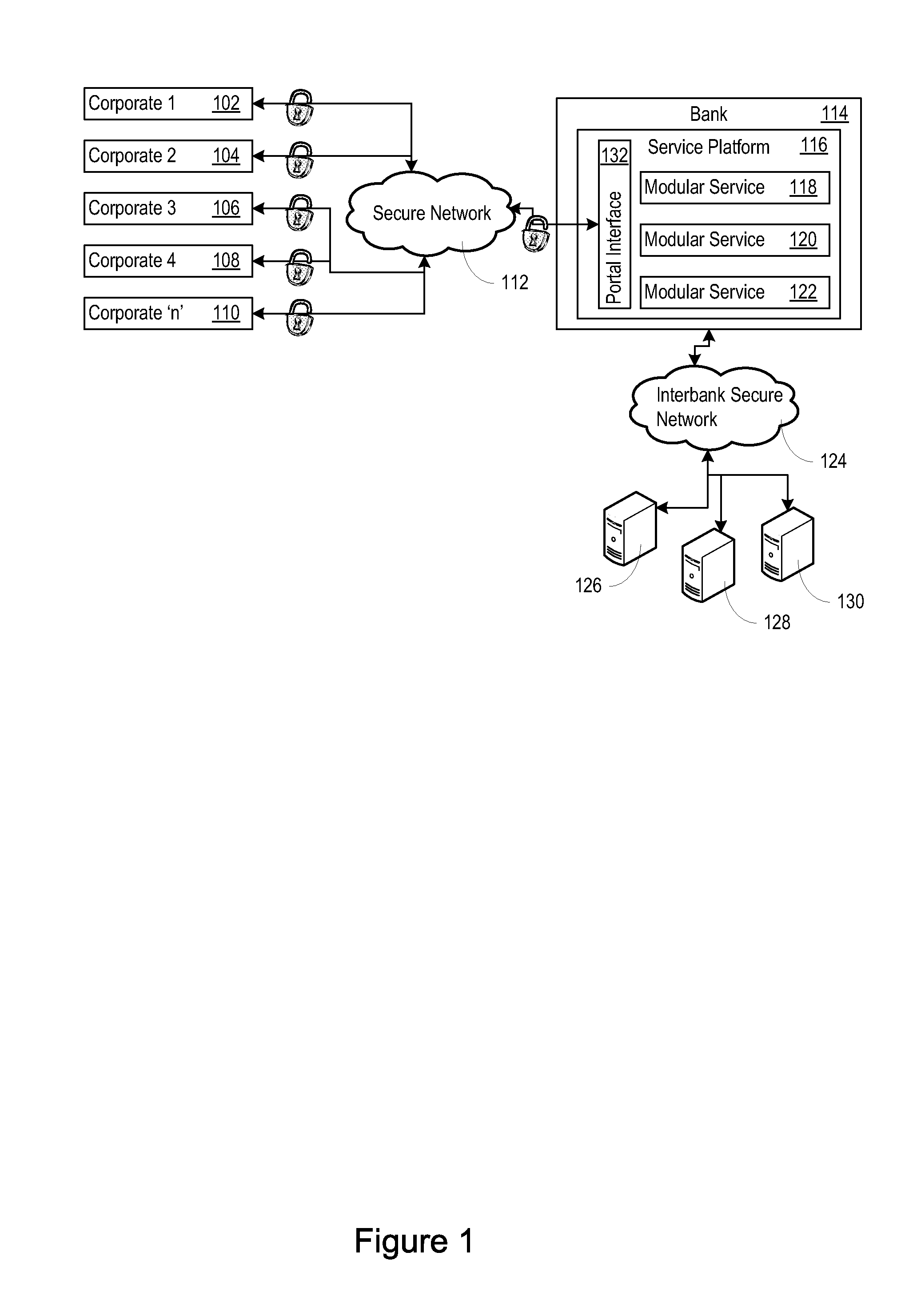

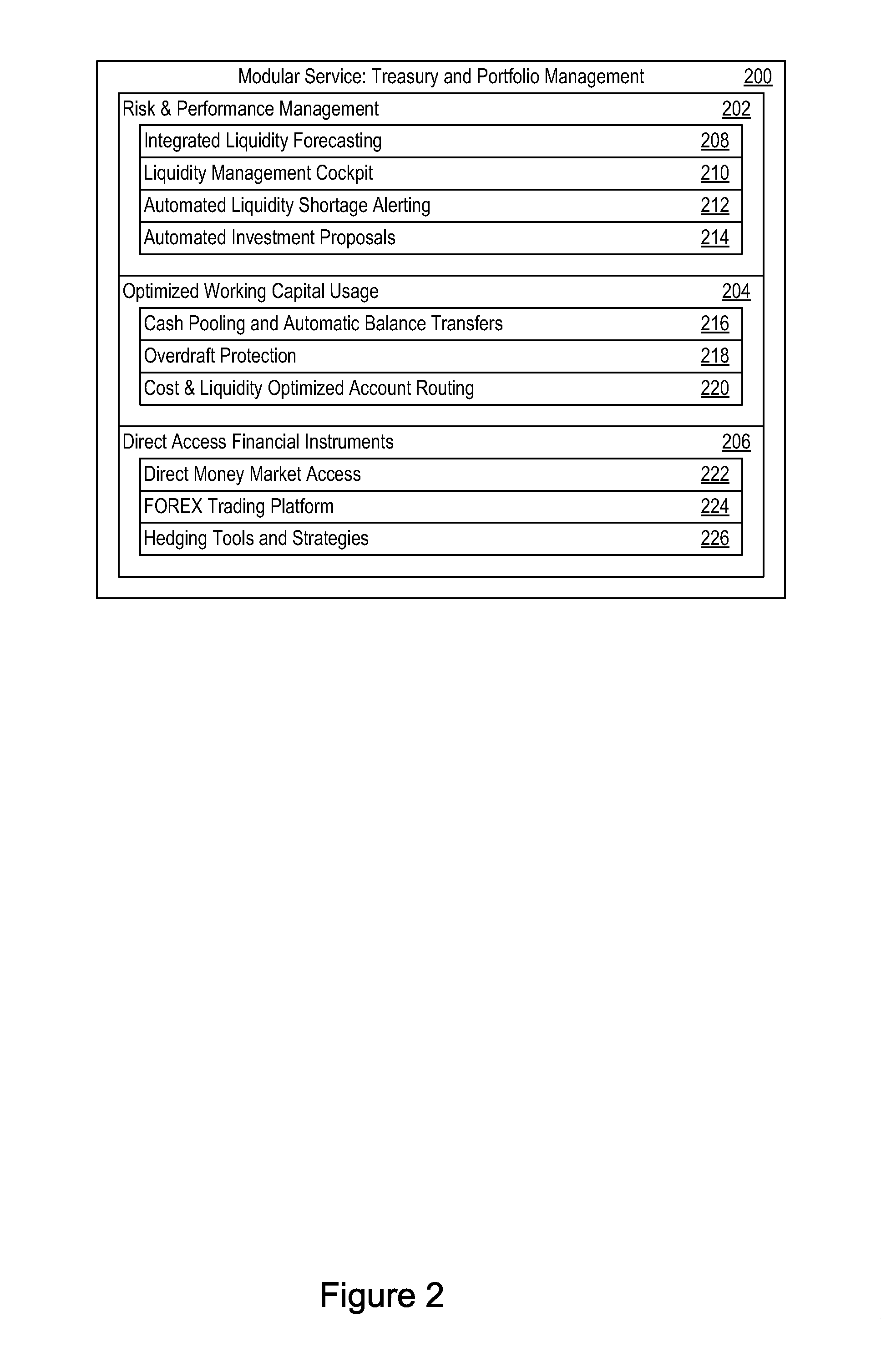
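
The multiply-accumulate idea described in the patent summary can be modeled numerically. The sketch below is an idealized software analogy, not the patented circuit. It assumes each cell's current is simply proportional to its stored weight times the input voltage, whereas real transistor leakage depends on voltage nonlinearly. The function name `analog_mac` and the sample values are hypothetical.

```python
def analog_mac(weights, inputs, current_per_unit=1.0):
    """Idealized in-memory multiply-accumulate: one output current per column.

    weights[row][col] stands in for a transistor's width-to-length ratio,
    inputs[row] for the voltage produced by the digital-to-analog converter.
    """
    n_rows, n_cols = len(weights), len(weights[0])
    outputs = [0.0] * n_cols
    for row in range(n_rows):
        voltage = inputs[row]
        for col in range(n_cols):
            # Each cell contributes a current proportional to weight * input;
            # currents on a shared column line add up (the accumulate step).
            outputs[col] += current_per_unit * weights[row][col] * voltage
    return outputs

weights = [[1, 2],
           [3, 4],
           [5, 6]]
inputs = [1.0, 0.5, 2.0]
column_currents = analog_mac(weights, inputs)
```

Each output is the dot product of the input vector with one weight column, which is the same operation as one row of a matrix-vector product. In hardware the summation happens physically on the column line rather than in a loop.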

Corporate payments, liquidity and cash management optimization service platform

Patent (inactive): US8190504B1

Innovation

- A corporate payment, liquidity, and cash management optimization service platform that integrates with corporate supply chains, providing real-time liquidity forecasting, optimization, and simulation services through a processor and memory-based system, enabling banks to serve multiple corporations with a single system, leveraging existing bank services and offering modular, configurable solutions.

Regulatory Compliance for Financial Technology

The financial services industry operates within one of the most heavily regulated environments globally, with regulatory frameworks constantly evolving in response to technological innovations. In-memory computing for financial modeling and risk simulations introduces significant regulatory considerations that financial institutions must address to maintain compliance while leveraging these advanced technologies.

Basel III and IV frameworks specifically impact in-memory computing implementations by requiring financial institutions to demonstrate robust risk management capabilities. These regulations mandate comprehensive stress testing and risk assessment methodologies that in-memory computing systems must support with appropriate audit trails and validation mechanisms. The ability to trace calculation methodologies becomes particularly critical when using high-speed in-memory systems.

The Financial Industry Regulatory Authority (FINRA) and Securities and Exchange Commission (SEC) in the United States impose additional requirements regarding system reliability and data integrity. In-memory computing solutions must incorporate safeguards against data corruption and system failures to meet these regulatory standards. This includes implementing appropriate backup mechanisms and disaster recovery protocols specifically designed for volatile memory environments.

Data privacy regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States present unique challenges for in-memory computing implementations. These regulations require careful management of personally identifiable information (PII) even in temporary memory structures, necessitating sophisticated data masking and encryption capabilities within in-memory systems.

Model validation requirements from regulators present another significant compliance consideration. Financial institutions must demonstrate that their in-memory computing models produce accurate, consistent results that align with regulatory expectations. This often requires parallel implementation of models across different computing architectures to validate outputs and ensure consistency.
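
A parallel-run check of this kind can be as simple as comparing two result sets within a tolerance. The sketch below is one possible shape for such a reconciliation. The function name `reconcile`, the tolerances, and the per-desk figures are hypothetical.

```python
import math

def reconcile(reference, candidate, rel_tol=1e-9, abs_tol=1e-6):
    """Return keys whose values differ between two model runs beyond tolerance."""
    breaks = []
    for key in sorted(set(reference) | set(candidate)):
        if key not in reference or key not in candidate:
            breaks.append(key)  # a result missing on one side is also a break
        elif not math.isclose(reference[key], candidate[key],
                              rel_tol=rel_tol, abs_tol=abs_tol):
            breaks.append(key)
    return breaks

# Hypothetical VaR figures per desk from a disk-based batch run and an in-memory run.
batch_run = {"rates": 1_250_000.00, "fx": 430_500.25, "credit": 980_010.10}
in_memory_run = {"rates": 1_250_000.00, "fx": 430_500.25, "credit": 980_310.10}
breaks = reconcile(batch_run, in_memory_run)
```

Here only the credit desk is flagged, because its two figures differ by 300. Tolerances matter in practice, since floating-point results can legitimately differ in the last digits when the order of operations changes between architectures.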

Regulatory reporting timelines have become increasingly demanding, with some jurisdictions requiring near real-time risk reporting capabilities. In-memory computing offers significant advantages in meeting these accelerated reporting requirements, but implementations must include appropriate controls to ensure reporting accuracy and completeness.

Emerging regulations around algorithmic trading and high-frequency trading activities directly impact in-memory computing applications in trading environments. These regulations focus on system stability, market fairness, and prevention of market manipulation, requiring sophisticated monitoring and control mechanisms within in-memory systems.

Basel III and IV frameworks specifically impact in-memory computing implementations by requiring financial institutions to demonstrate robust risk management capabilities. These regulations mandate comprehensive stress testing and risk assessment methodologies that in-memory computing systems must support with appropriate audit trails and validation mechanisms. The ability to trace calculation methodologies becomes particularly critical when using high-speed in-memory systems.

The Financial Industry Regulatory Authority (FINRA) and Securities and Exchange Commission (SEC) in the United States impose additional requirements regarding system reliability and data integrity. In-memory computing solutions must incorporate safeguards against data corruption and system failures to meet these regulatory standards. This includes implementing appropriate backup mechanisms and disaster recovery protocols specifically designed for volatile memory environments.

Data privacy regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States present unique challenges for in-memory computing implementations. These regulations require careful management of personally identifiable information (PII) even in temporary memory structures, necessitating sophisticated data masking and encryption capabilities within in-memory systems.

Model validation requirements from regulators present another significant compliance consideration. Financial institutions must demonstrate that their in-memory computing models produce accurate, consistent results that align with regulatory expectations. This often requires parallel implementation of models across different computing architectures to validate outputs and ensure consistency.

Regulatory reporting timelines have become increasingly demanding, with some jurisdictions requiring near real-time risk reporting capabilities. In-memory computing offers significant advantages in meeting these accelerated reporting requirements, but implementations must include appropriate controls to ensure reporting accuracy and completeness.

Cost-Benefit Analysis of In-Memory Implementation

Implementing in-memory computing solutions for financial modeling and risk simulations requires substantial initial investment, yet offers significant long-term returns. The upfront costs include hardware infrastructure (high-capacity RAM, specialized servers), software licensing for in-memory database platforms, and system integration expenses. Organizations typically face implementation costs ranging from $500,000 to several million dollars depending on scale and complexity, with enterprise-wide deployments at major financial institutions potentially exceeding $10 million.

Personnel costs represent another significant investment area, because these projects require specialized talent in both in-memory technologies and financial modeling. Training existing staff and hiring engineers with expertise in both domains is expensive, with senior specialists commanding annual salaries of $150,000-$250,000 in major financial centers.

Against these costs, the benefits of in-memory implementation are substantial and multifaceted. Performance improvements represent the most immediate return, with processing speeds typically 100-1000x faster than traditional disk-based systems. This translates to near real-time risk calculations that previously required overnight batch processing. Financial institutions report risk simulation processing times reduced from hours to minutes or seconds, enabling more frequent and comprehensive risk assessments.

Operational efficiency gains come from a smaller hardware footprint, even though the individual components are higher-specification. Consolidating computing resources leads to 30-40% lower data center costs through reduced power consumption, cooling requirements, and physical space needs. Maintenance costs typically decrease by 20-25% after the initial implementation period.

Business agility represents perhaps the most valuable though least quantifiable benefit. The ability to run complex scenarios in near real-time enables more responsive decision-making and creates competitive advantages in volatile markets. Financial institutions implementing in-memory solutions report 15-20% improvements in trading position management and risk mitigation.

Return on investment timelines vary by implementation scope, but most financial institutions report break-even periods of 18-36 months, with accelerating returns thereafter as operational efficiencies compound and business advantages materialize. Organizations that have fully embraced in-memory computing for financial modeling report 5-year ROI figures ranging from 150% to 300%, with the highest returns coming from institutions that have most effectively integrated the technology into their core business processes.
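The break-even and ROI arithmetic behind such figures can be sketched as follows. The inputs are illustrative assumptions chosen to fall inside the ranges quoted above (a $2 million investment and a flat $100,000 monthly net benefit); they are not reported data, and the flat-benefit model deliberately ignores the accelerating returns and discounting that a real business case would include.

```python
import math

def break_even_month(initial_cost: float, monthly_net_benefit: float):
    """First month in which cumulative net benefit covers the initial cost, or None."""
    if monthly_net_benefit <= 0:
        return None  # the investment is never recovered under this simple model
    return math.ceil(initial_cost / monthly_net_benefit)

def roi_percent(initial_cost: float, monthly_net_benefit: float, months: int) -> float:
    """Simple ROI over the horizon: (total benefit - cost) / cost, as a percentage."""
    total_benefit = monthly_net_benefit * months
    return 100.0 * (total_benefit - initial_cost) / initial_cost

# Illustrative assumptions only.
INITIAL_COST = 2_000_000
MONTHLY_NET_BENEFIT = 100_000

print(break_even_month(INITIAL_COST, MONTHLY_NET_BENEFIT))   # 20 months
print(roi_percent(INITIAL_COST, MONTHLY_NET_BENEFIT, 60))     # 200.0 percent over 5 years
```

Under these assumptions the break-even point lands at 20 months and the 5-year ROI at 200%, both within the ranges cited above.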
