What Role Does In-Memory Computing Play In Neuromorphic Architectures

SEP 2, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This field emerged in the late 1980s when Carver Mead introduced the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, neuromorphic computing has evolved significantly, transitioning from theoretical concepts to practical implementations that aim to overcome the limitations of traditional von Neumann architectures.

The evolution of neuromorphic systems has been driven by the increasing demand for energy-efficient computing solutions capable of handling complex cognitive tasks. Traditional computing architectures face fundamental bottlenecks in processing the massive datasets required for modern artificial intelligence applications, particularly in terms of power consumption and processing speed. Neuromorphic computing addresses these challenges by integrating memory and processing units, similar to how neurons and synapses function in biological systems.

Recent technological advancements have accelerated the development of neuromorphic hardware. The introduction of memristive devices, phase-change materials, and spintronic components has enabled more efficient implementation of neural network architectures in hardware. These technologies facilitate the creation of systems that can perform parallel processing, adapt to new information, and operate with significantly lower power requirements compared to conventional computing systems.

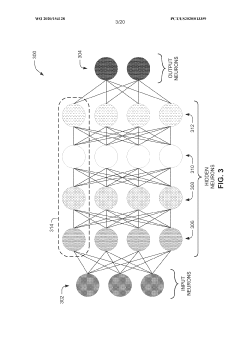

The primary objective of neuromorphic computing is to develop computational systems that can process information with the efficiency, adaptability, and robustness of biological neural networks. This includes achieving high levels of parallelism, fault tolerance, and energy efficiency while maintaining the ability to learn and adapt to new data patterns. Additionally, neuromorphic systems aim to support real-time processing of sensory data, enabling applications in robotics, autonomous vehicles, and advanced AI systems.

Looking forward, the field is trending toward more sophisticated integration of hardware and software components, with increasing focus on scalability and practical applications. Researchers are exploring hybrid approaches that combine the strengths of digital and analog computing, as well as developing new materials and fabrication techniques to enhance the performance of neuromorphic devices.

The convergence of neuromorphic computing with other emerging technologies, such as quantum computing and advanced AI algorithms, may open opportunities for substantial advances in computational capabilities. As these technologies mature, they could enable new applications in fields ranging from healthcare and scientific research to autonomous systems and personalized computing experiences.

Market Analysis for In-Memory Computing Solutions

The in-memory computing (IMC) solutions market is experiencing robust growth, driven primarily by the increasing demand for high-performance computing systems capable of handling complex neuromorphic architectures. Current market valuations indicate that the global IMC market reached approximately $3.2 billion in 2022 and is projected to grow at a compound annual growth rate of 29.3% through 2028, potentially reaching $14.8 billion by the end of the forecast period.
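
The projection above follows the standard compound-growth relation V_end = V_start × (1 + r)^n. The short check below assumes six compounding years (2022 as the base year, 2028 as the final year), which the text implies but does not state explicitly; it recomputes the 2028 value from the stated growth rate and the growth rate implied by the stated endpoints.

```python
def project(start: float, cagr: float, years: int) -> float:
    """Value after `years` of compound growth at rate `cagr` (0.293 means 29.3%)."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


YEARS = 2028 - 2022  # assumption: six compounding periods

projected_2028 = project(3.2, 0.293, YEARS)          # billions of USD
rate_from_endpoints = implied_cagr(3.2, 14.8, YEARS)

print(f"Projected 2028 market: ${projected_2028:.1f}B")
print(f"CAGR implied by $3.2B -> $14.8B: {rate_from_endpoints:.1%}")
```

The stated rate yields roughly $15.0 billion, and the stated endpoints imply about 29.1% per year, so the two figures agree to within rounding.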

The market demand for IMC solutions in neuromorphic computing is being fueled by several key factors. First, traditional von Neumann architectures face significant performance bottlenecks when processing the massive parallel operations required for neural network implementations. This "memory wall" challenge has created substantial market pull for alternative computing paradigms that can overcome these limitations.

Industry sectors showing the strongest demand include artificial intelligence hardware developers, autonomous vehicle manufacturers, advanced robotics companies, and high-performance computing research institutions. These segments collectively represent approximately 68% of the current market share for IMC solutions specifically tailored for neuromorphic applications.

Geographically, North America dominates the market with approximately 42% share, followed by Asia-Pacific at 31%, Europe at 22%, and the rest of the world accounting for the remaining 5%. China and South Korea are demonstrating particularly aggressive growth rates in this sector, with government-backed initiatives supporting domestic development of neuromorphic hardware utilizing in-memory computing principles.

From an application perspective, edge computing represents the fastest-growing segment for IMC-based neuromorphic solutions, with a projected growth rate of 34.7% annually. This is primarily driven by the need for energy-efficient AI processing in resource-constrained environments such as IoT devices, wearables, and autonomous systems.

The market is also witnessing significant shifts in customer preferences, with increasing demand for solutions that offer not only performance advantages but also substantial energy efficiency improvements. This trend aligns with a core benefit of neuromorphic architectures built on in-memory computing, which can potentially deliver 100-1000x improvements in energy efficiency over conventional computing approaches for neural network workloads.

Investment patterns further validate market growth potential, with venture capital funding for startups focused on IMC-based neuromorphic hardware exceeding $1.2 billion in 2022 alone, representing a 47% increase compared to the previous year. This influx of capital is accelerating commercialization timelines and expanding the range of available market solutions.

In-Memory Computing Status and Technical Challenges

In-memory computing (IMC) represents a paradigm shift in computing architecture that addresses the von Neumann bottleneck by integrating computation and memory functions within the same physical location. IMC technologies have reached varying levels of maturity across different implementation approaches. Memristive devices, including Resistive RAM (RRAM), Phase Change Memory (PCM), and Magnetic RAM (MRAM), have demonstrated promising characteristics for neuromorphic applications but face challenges in device variability and endurance. Deployment remains limited primarily to research prototypes and specialized applications.

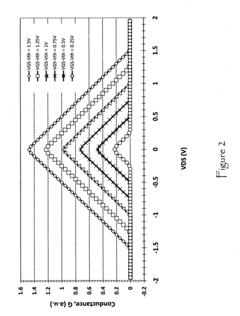

The technical landscape of IMC in neuromorphic architectures reveals several critical challenges. Device-level issues include non-linear conductance response, asymmetric weight updates, and limited precision, which complicate the implementation of accurate synaptic behavior. Cycle-to-cycle and device-to-device variations significantly impact the reliability and reproducibility of neural network operations. Most current memristive devices also exhibit limited endurance (roughly 10^4 to 10^9 write cycles), which is insufficient for long-term on-chip learning applications that require billions of weight updates.
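
The non-linear, asymmetric update behavior described above is often captured with a phenomenological "soft-bounds" device model, in which each programming pulse moves the conductance a fixed fraction of the way toward its bound. The sketch below uses that model with hypothetical parameters (normalized conductance, `alpha_up`, `alpha_down`); it is an illustration, not a model of any specific RRAM, PCM, or MRAM device.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SoftBoundsSynapse:
    """Toy memristive synapse with soft-bounds updates (hypothetical parameters)."""
    g: float                  # present conductance, normalized to [g_min, g_max]
    g_min: float = 0.0
    g_max: float = 1.0
    alpha_up: float = 0.10    # fraction of remaining headroom gained per SET pulse
    alpha_down: float = 0.15  # fraction of (g - g_min) lost per RESET pulse

    def potentiate(self) -> None:
        # Step size shrinks as g approaches g_max: a non-linear response.
        self.g += self.alpha_up * (self.g_max - self.g)

    def depress(self) -> None:
        # A different rate than potentiation: an asymmetric response.
        self.g -= self.alpha_down * (self.g - self.g_min)


def pulse_train(syn: SoftBoundsSynapse, n_up: int, n_down: int) -> List[float]:
    """Apply n_up SET pulses then n_down RESET pulses, recording g after each."""
    trace = [syn.g]
    for _ in range(n_up):
        syn.potentiate()
        trace.append(syn.g)
    for _ in range(n_down):
        syn.depress()
        trace.append(syn.g)
    return trace


trace = pulse_train(SoftBoundsSynapse(g=0.5), n_up=10, n_down=10)
print(f"start {trace[0]:.3f}, after SET {trace[10]:.3f}, after RESET {trace[-1]:.3f}")
```

Because the step size shrinks near the bounds and the SET and RESET rates differ, ten potentiating pulses followed by ten depressing pulses leave the device near 0.16 rather than back at 0.5, so a training rule that assumes symmetric ±Δw updates drifts unless the mismatch is compensated.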

From an architectural perspective, the integration of analog IMC arrays with digital CMOS peripherals creates significant design complexities. Signal conversion overhead between analog and digital domains can negate energy efficiency gains. Additionally, the lack of standardized design methodologies and tools specifically tailored for neuromorphic IMC systems impedes widespread adoption and development efficiency.

Scaling challenges persist as crossbar arrays increase in size, with issues such as sneak path currents, voltage drops, and parasitic effects degrading computational accuracy. These effects become more pronounced in larger arrays necessary for complex neural network implementations. The absence of established fabrication processes compatible with standard CMOS technology further complicates commercial viability.
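
To see why voltage (IR) drop worsens with array size, consider a single word line driven from one end and modeled as a resistor ladder: a wire segment of resistance `r_wire` between neighboring cells, and each cell acting as a conductance to a virtual-ground column. Kirchhoff's current law at every node yields a tridiagonal linear system, solved below with the Thomas algorithm. The read voltage, wire resistance, and cell conductance are hypothetical round numbers, and sneak paths through unselected cells are ignored.

```python
from typing import List, Sequence


def wordline_voltages(v_in: float, cell_g: Sequence[float], r_wire: float) -> List[float]:
    """
    Node voltages along one word line driven at one end with v_in.
    Segment resistance r_wire separates neighbouring cells; each cell is a
    conductance to a 0 V (virtual-ground) column. Solved with the Thomas algorithm.
    """
    n = len(cell_g)
    if n == 0:
        return []
    if r_wire == 0:
        return [v_in] * n                      # ideal wire: no IR drop
    gw = 1.0 / r_wire
    # Tridiagonal KCL system: a = sub-diagonal, b = diagonal, c = super-diagonal.
    a = [-gw] * n
    b = [2 * gw + g for g in cell_g]
    b[-1] = gw + cell_g[-1]                    # last node has no right neighbour
    c = [-gw] * n
    d = [0.0] * n
    d[0] = gw * v_in                           # the driver feeds the first node
    # Forward sweep.
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = c[0] / b[0]
    dp[0] = d[0] / b[0]
    for i in range(1, n):
        m = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / m
        dp[i] = (d[i] - a[i] * dp[i - 1]) / m
    # Back substitution.
    v = [0.0] * n
    v[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        v[i] = dp[i] - cp[i] * v[i + 1]
    return v


for n in (64, 256):
    v = wordline_voltages(0.2, [1e-5] * n, r_wire=2.5)   # 0.2 V read, 100 kOhm cells
    print(f"{n:4d} cells: far-end cell sees {v[-1]:.3f} V of 0.200 V")
```

Every added cell both lengthens the wire and adds load current, so the far-end voltage falls much faster than linearly with array width; this is one reason large arrays are usually split into smaller tiles.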

Energy efficiency, while theoretically superior to conventional architectures, faces practical limitations due to peripheral circuit overhead and the need for precise current sensing and voltage regulation. Current IMC implementations typically achieve 10-100x energy improvements over digital systems, falling short of theoretical projections of 1000x gains.
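
The gap between theoretical and measured gains follows an Amdahl-style argument: once the array itself is very cheap, fixed peripheral costs dominate. The sketch below normalizes energy per multiply-accumulate (MAC) to a digital baseline of 1.0 and treats `core_gain` and `periph_fraction` as hypothetical inputs rather than measured values.

```python
def system_gain(core_gain: float, periph_fraction: float) -> float:
    """
    Effective energy gain of an analog IMC macro over a digital baseline.
    core_gain:       how much cheaper the in-array MAC is than a digital MAC
    periph_fraction: periphery energy per MAC (DAC/ADC, sensing, regulation),
                     expressed as a fraction of the digital MAC energy
    """
    e_digital = 1.0                        # normalized baseline energy per MAC
    e_array = e_digital / core_gain
    e_periph = e_digital * periph_fraction
    return e_digital / (e_array + e_periph)


for f in (0.0, 0.005, 0.05):
    print(f"periphery = {f:.1%} of digital MAC energy -> {system_gain(1000, f):.0f}x overall")
```

With a 1000x in-array advantage, a periphery cost of only 0.5% of the digital MAC energy caps the overall gain near 167x, and 5% caps it near 20x, which is consistent with the 10-100x range reported for current implementations.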

From a global perspective, research efforts in IMC for neuromorphic computing are concentrated primarily in North America, Europe, and East Asia, with significant contributions from both academic institutions and industry leaders such as IBM, Intel, Samsung, and emerging startups. The technology readiness level varies significantly across different implementation approaches, with most solutions currently at TRL 3-5, indicating proof-of-concept demonstrations but limited commercial deployment.

Current In-Memory Computing Implementation Approaches

01 Memory architecture optimization for computing efficiency

Optimizing memory architecture is crucial for in-memory computing efficiency. This includes designing specialized memory structures that reduce data movement between processing units and memory, implementing hierarchical memory systems, and utilizing novel memory technologies and cell designs that support computational functions. These optimizations minimize latency and energy consumption while maximizing throughput for computational tasks performed directly within memory, particularly in data-intensive applications.

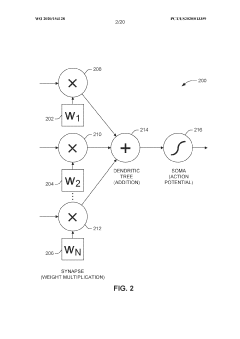

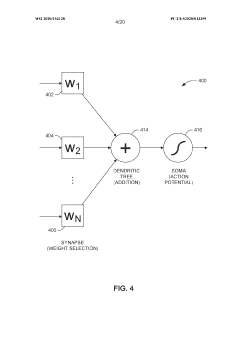

- Processing-in-memory techniques: Processing-in-memory (PIM) techniques integrate computational capabilities directly within memory arrays, enabling data processing where data resides. This approach eliminates the traditional bottleneck of data transfer between separate memory and processing units. PIM architectures can perform operations like vector calculations, neural network computations, and database operations directly in memory, significantly reducing energy consumption and increasing processing speed for parallel workloads.

- Power management strategies for in-memory computing: Effective power management is essential for in-memory computing systems to achieve optimal efficiency. This includes dynamic voltage and frequency scaling, selective activation of memory regions, power gating for inactive components, and thermal management techniques. Advanced power management strategies can significantly reduce energy consumption while maintaining computational performance, making in-memory computing more sustainable for various applications.

- Memory-centric algorithms and data structures: Specialized algorithms and data structures designed specifically for in-memory computing environments can dramatically improve computational efficiency. These include memory-aware sorting and search algorithms, optimized data layouts that minimize cache misses, compression techniques that reduce memory footprint, and data structures that exploit locality. By aligning software design with the characteristics of in-memory systems, these approaches maximize throughput and minimize resource utilization.

- Hardware acceleration for in-memory computing: Hardware accelerators designed specifically for in-memory computing can significantly enhance computational efficiency. These include specialized circuits for common operations like matrix multiplication, custom memory controllers that optimize data access patterns, and dedicated hardware for specific workloads such as neural network inference. By implementing frequently used operations directly in hardware, these accelerators reduce processing time and energy consumption compared to general-purpose computing approaches.

02 Processing-in-memory techniques

Processing-in-memory (PIM) techniques involve performing computational operations directly within memory arrays rather than transferring data to a separate processor. These techniques leverage memory's inherent parallelism to execute operations simultaneously across multiple memory cells, significantly reducing data movement overhead and power consumption. PIM architectures can be implemented using various memory technologies, including DRAM, SRAM, and emerging non-volatile memories.

03 Energy efficiency in in-memory computing

Energy efficiency is a critical aspect of in-memory computing systems. Various approaches are employed to reduce power consumption, including dynamic voltage and frequency scaling, selective activation of memory arrays, power gating unused components, and implementing energy-aware algorithms. These techniques help minimize energy usage while maintaining computational performance, making in-memory computing solutions more sustainable and practical for deployment in energy-constrained environments.

04 Parallel processing frameworks for in-memory computing

Parallel processing frameworks specifically designed for in-memory computing enable efficient execution of complex computational tasks. These frameworks distribute workloads across multiple memory units, implement sophisticated scheduling algorithms, and provide programming models that leverage the unique characteristics of in-memory architectures. By exploiting data locality and minimizing synchronization overhead, these frameworks significantly improve computational efficiency for data-intensive applications.

05 Memory-centric data management for computing efficiency

Memory-centric data management strategies optimize how data is organized, accessed, and processed within memory to maximize computing efficiency. These approaches include data layout optimization, compression techniques, intelligent caching mechanisms, and data prefetching algorithms. By minimizing data movement and ensuring that frequently accessed data remains close to processing elements, these strategies significantly reduce latency and improve overall system performance in in-memory computing environments.
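
The processing-in-memory and parallel-framework approaches above share one mechanism: compute where the data lives and move only results. The toy model below partitions an array across hypothetical memory banks, each with a small local adder, and counts how many words cross the memory bus for a global sum with and without in-bank reduction. `MemoryBank`, `partition`, and the bank count are illustrative assumptions, not a real PIM API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MemoryBank:
    """Hypothetical memory bank with a tiny local ALU."""
    words: List[int]

    def local_sum(self) -> int:
        # Executed inside the bank: only the result leaves the bank.
        return sum(self.words)


def partition(data: List[int], num_banks: int) -> List[MemoryBank]:
    """Contiguous block partition so each bank holds a local slice of the data."""
    if not data:
        return []
    size = -(-len(data) // num_banks)  # ceiling division
    return [MemoryBank(data[i:i + size]) for i in range(0, len(data), size)]


def host_sum(banks: List[MemoryBank]) -> Tuple[int, int]:
    """Conventional path: every word is read out to the processor."""
    total, moved = 0, 0
    for bank in banks:
        for word in bank.words:
            total += word
            moved += 1
    return total, moved


def pim_sum(banks: List[MemoryBank]) -> Tuple[int, int]:
    """PIM path: banks reduce locally (in parallel in hardware, sequentially here)."""
    partials = [bank.local_sum() for bank in banks]
    return sum(partials), len(partials)


banks = partition(list(range(1000)), num_banks=8)
print(host_sum(banks))  # (499500, 1000)
print(pim_sum(banks))   # (499500, 8)
```

Both paths return the same total, but the in-bank version moves 8 words instead of 1000; the trade-off is that only operations supported by the bank's local logic can be offloaded.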

Leading Organizations in Neuromorphic Computing

In-memory computing is emerging as a critical component in neuromorphic architectures, currently positioned at the early growth stage of industry development. The market is expanding rapidly, with projections suggesting significant growth as AI applications proliferate. While the technology remains in development, major players are making substantial advances. IBM leads with its TrueNorth and subsequent neuromorphic chips, while Samsung, Intel, and TSMC are developing hardware implementations that leverage in-memory computing principles. Academic institutions including Tsinghua University, Zhejiang University, and KAIST are contributing fundamental research. The technology's maturity varies across applications, with memory-centric computing showing promise for overcoming von Neumann bottlenecks in neuromorphic systems, though commercial deployment remains limited to specialized applications.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic architecture leverages in-memory computing through TrueNorth and subsequent systems that integrate memory and processing to mimic brain function. In TrueNorth, each core keeps its synaptic connectivity in local SRAM placed alongside the neuron circuits, so synaptic data does not travel to a separate memory. IBM's research also uses non-volatile memory technologies such as phase-change memory (PCM) and resistive RAM to perform computations directly within memory arrays. IBM's neuromorphic chips implement large numbers of "neurons" and "synapses" as electronic components that process information in parallel while storing memory locally. This architecture enables spike-based neural processing in which computation occurs only when needed, dramatically reducing power consumption compared to traditional von Neumann architectures. IBM has demonstrated energy efficiency improvements of 100-1000x over conventional computing systems for certain neural network tasks[1]. Under the DARPA SyNAPSE program, IBM developed chips with 1 million programmable neurons and 256 million configurable synapses, with each core containing memory, computation, and communication components integrated together rather than separated[2].

Strengths: Extremely low power consumption (about 70 mW for a TrueNorth chip in typical operation); highly scalable architecture; real-time sensory processing capabilities; mature technology with multiple generations of development. Weaknesses: Programming complexity requiring specialized knowledge; limited software ecosystem compared to traditional computing; challenges in adapting conventional algorithms to spiking neural network paradigms.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered neuromorphic computing solutions that integrate in-memory computing using their advanced memory technologies. Their approach centers on using High Bandwidth Memory (HBM) and Processing-in-Memory (PIM) architectures that place computing elements directly within memory arrays. Samsung's Aquabolt-XL HBM-PIM technology incorporates AI processing units within the memory subsystem, enabling computational operations to occur where data resides rather than shuttling information between separate memory and processing units[3]. For neuromorphic applications specifically, Samsung has developed resistive RAM (RRAM) and magnetoresistive RAM (MRAM) technologies that function as artificial synapses, allowing weight updates and neural computations to occur simultaneously within the memory elements. Their neuromorphic chips demonstrate power efficiency improvements of up to 120x compared to conventional GPU implementations for neural network inference tasks[4]. Samsung has also explored 3D stacking of memory and logic layers to further enhance the density and performance of their neuromorphic systems.

Strengths: Leverages Samsung's industry-leading memory manufacturing capabilities; high integration density; significant power efficiency gains; compatibility with existing semiconductor fabrication processes. Weaknesses: Still in relatively early commercialization stages compared to traditional computing architectures; requires specialized programming models; challenges in scaling to very large neural network implementations.

Key Technologies in Memory-Centric Neural Processing

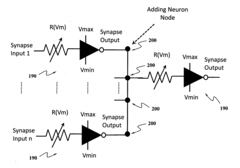

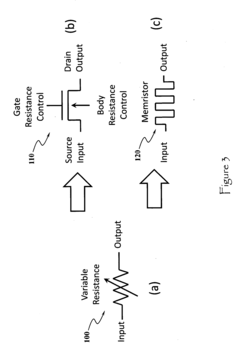

Neuromorphic computer

Patent US20110106742A1 (Active)

Innovation

- A neuromorphic computer architecture utilizing electronic devices with variable resistance circuits to represent synaptic connection strength and positive/negative output circuits to mimic excitatory and inhibitory responses, enabling high-density fabrication and brain-like computing.
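
A common way to realize signed weights with non-negative conductances is a differential pair: a weight w maps to two conductances (G+, G-), and the neuron receives the excitatory current minus the inhibitory current. The sketch below shows that convention as an assumption; it is not the specific circuit claimed in the patent, and `w_max` and `g_max` are hypothetical scaling parameters.

```python
from typing import Sequence, Tuple


def weight_to_pair(w: float, w_max: float, g_max: float) -> Tuple[float, float]:
    """Map a signed weight to (G_plus, G_minus), both non-negative and <= g_max."""
    w = max(-w_max, min(w_max, w))   # clip to the representable range
    g = abs(w) / w_max * g_max       # weight magnitude as a conductance
    return (g, 0.0) if w >= 0 else (0.0, g)


def neuron_input(voltages: Sequence[float], pairs: Sequence[Tuple[float, float]]) -> float:
    """Excitatory (positive) current minus inhibitory (negative) current for one neuron."""
    i_plus = sum(v * gp for v, (gp, _) in zip(voltages, pairs))
    i_minus = sum(v * gm for v, (_, gm) in zip(voltages, pairs))
    return i_plus - i_minus


weights = [0.5, -0.25, 1.0]
pairs = [weight_to_pair(w, w_max=1.0, g_max=1e-4) for w in weights]
current = neuron_input([0.2, 0.2, 0.1], pairs)   # amperes
```

The result equals (g_max / w_max) × Σ v·w, so the pair reproduces signed arithmetic with devices that can only conduct, at the cost of two cells per weight.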

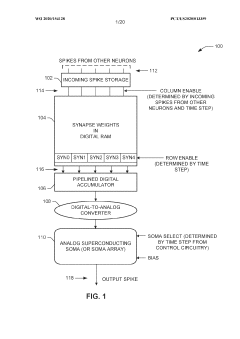

Superconducting neuromorphic core

Patent WO2020154128A1

Innovation

- A superconducting neuromorphic core is developed, incorporating a digital memory array for synapse weight storage, a digital accumulator, and analog soma circuitry to simulate multiple neurons, enabling efficient and scalable neural network operations with improved biological fidelity.

Energy Efficiency Considerations in Brain-Inspired Computing

Energy efficiency represents a critical consideration in brain-inspired computing systems, particularly as neuromorphic architectures aim to emulate the remarkable energy efficiency of biological brains. The human brain operates on approximately 20 watts of power while performing complex cognitive tasks, a level of efficiency that traditional von Neumann computing architectures cannot match. This efficiency gap has driven significant research into neuromorphic computing approaches that leverage in-memory computing paradigms.

In-memory computing fundamentally addresses the "memory wall" problem by reducing or eliminating the energy-intensive data movement between processing units and memory. Traditional computing architectures expend significant energy shuttling data between physically separated processing and memory components. Neuromorphic designs that incorporate in-memory computing can achieve dramatic energy savings by performing computations directly where data is stored.
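
A rough data-movement count makes the memory-wall argument concrete for a matrix-vector product y = Wx with a rows × cols weight matrix. The sketch assumes the worst case for the conventional design (weights re-fetched from memory for every input vector, no on-chip reuse) and, for the in-memory design, that weights are programmed into the array once and stay there; both are simplifying assumptions.

```python
def words_moved(rows: int, cols: int, batch: int, in_memory: bool) -> int:
    """
    Words crossing the memory/processor boundary for `batch` products y = W x,
    with W of shape rows x cols, under the simplified model described above.
    """
    activations = batch * (cols + rows)        # inputs in, outputs out
    if in_memory:
        return rows * cols + activations       # weights programmed into the array once
    return batch * rows * cols + activations   # weights re-fetched for every product


for batch in (1, 1000):
    conventional = words_moved(256, 256, batch, in_memory=False)
    imc = words_moved(256, 256, batch, in_memory=True)
    print(f"batch={batch:5d}: conventional={conventional:,}  in-memory={imc:,}")
```

For a single input vector the two are equal, because programming the array costs as much traffic as one fetch of W; over 1,000 input vectors the in-memory design moves roughly 114x fewer words.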

Published results report that neuromorphic systems utilizing in-memory computing can achieve energy efficiencies 100-1000x greater than conventional digital systems for certain neural network operations, although complete implementations typically realize 10-100x once peripheral circuit overhead is included. This efficiency stems from the elimination of redundant data transfers and the ability to perform massively parallel, low-precision computations that are well-suited to neural network operations.

Various material technologies support energy-efficient in-memory computing in neuromorphic systems. Resistive RAM (RRAM), phase-change memory (PCM), and magnetic RAM (MRAM) all offer non-volatile storage with the ability to perform computations within the memory array itself. These technologies enable analog computation that directly implements the weighted summations central to neural network operations: each cell's conductance acts as a weight, and the cell currents add up on a shared line, so no digital multiply-accumulate is needed inside the array. Inputs and outputs still cross the analog-digital boundary at the array periphery, which is where much of the remaining energy cost lies.
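
The weighted summation can be written as I_j = Σ_i V_i G_ij: Ohm's law gives each cell's current, and Kirchhoff's current law sums those currents on the column. The sketch below computes ideal column currents and then digitizes them with a uniform ADC, the peripheral step whose cost the energy figures above must include. The conductances, voltages, and the ADC full-scale current and resolution are hypothetical values.

```python
from typing import List, Sequence


def crossbar_mvm(v_in: Sequence[float], g: Sequence[Sequence[float]]) -> List[float]:
    """Ideal column currents I_j = sum_i V_i * G_ij (Ohm's law, then Kirchhoff's current law)."""
    n_rows, n_cols = len(g), len(g[0])
    if len(v_in) != n_rows:
        raise ValueError("one input voltage per row is required")
    return [sum(v_in[i] * g[i][j] for i in range(n_rows)) for j in range(n_cols)]


def adc(current: float, i_full_scale: float, bits: int) -> int:
    """Uniform ADC: clip to [0, full scale], then round to one of 2**bits codes."""
    levels = 2 ** bits - 1
    x = min(max(current, 0.0), i_full_scale) / i_full_scale
    return round(x * levels)


G = [[1e-5, 2e-5],
     [3e-5, 4e-5]]   # siemens; rows are driven inputs, columns are summed outputs
V = [0.1, 0.2]       # volts applied to the rows
currents = crossbar_mvm(V, G)
codes = [adc(i, i_full_scale=1e-5, bits=4) for i in currents]
```

The multiply and add happen "for free" in the physics of the array, but every output still needs one ADC conversion, and the ADC's resolution bounds the precision of the result.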

Spiking neural networks (SNNs) implemented with in-memory computing show particular promise for energy efficiency. By processing information only when neurons "fire" rather than in continuous operation, SNNs can dramatically reduce dynamic power consumption. When combined with in-memory computing, the energy advantages multiply as both computation and data movement are optimized simultaneously.
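
A minimal discrete-time leaky integrate-and-fire (LIF) neuron shows where the savings come from: synaptic work is done only for inputs that actually spike in a given step. The threshold, leak factor, weights, and spike pattern below are hypothetical, and a hardware implementation would typically apply the leak lazily when an event arrives rather than at every step.

```python
from typing import List, Sequence, Set, Tuple


def simulate_lif(input_spikes: Sequence[Set[int]], weights: Sequence[float],
                 v_th: float = 1.0, leak: float = 0.9) -> Tuple[List[int], int]:
    """
    Discrete-time LIF neuron. input_spikes[t] holds the presynaptic indices that
    spike at step t. Returns (output spike steps, synaptic operations performed).
    """
    v = 0.0
    out_spikes: List[int] = []
    syn_ops = 0
    for t, active in enumerate(input_spikes):
        v *= leak                   # membrane potential decays each step
        for i in active:            # event-driven: only spiking inputs cost work
            v += weights[i]
            syn_ops += 1
        if v >= v_th:               # fire, then reset
            out_spikes.append(t)
            v = 0.0
    return out_spikes, syn_ops


spikes = [{0}, set(), {0, 1}, set(), {2}]
fired, ops = simulate_lif(spikes, weights=[0.6, 0.5, 0.3])
dense_ops = len(spikes) * 3    # a dense layer would touch every weight every step
```

Here the neuron fires once, at step 2, after 4 synaptic operations, compared with 15 for a dense evaluation of the same inputs; the sparser the activity, the larger the gap.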

Looking forward, emerging technologies like photonic computing elements may further enhance energy efficiency in neuromorphic systems. These approaches leverage light rather than electricity for signal propagation and computation, potentially reducing energy requirements by additional orders of magnitude while increasing processing speed.

The ultimate goal remains achieving brain-like energy efficiency while maintaining computational capabilities suitable for complex AI tasks. Current neuromorphic systems with in-memory computing represent significant progress toward this goal, though challenges in scaling, precision, and programming models remain to be fully addressed.

Hardware-Software Co-Design for Neuromorphic Applications

Effective neuromorphic computing requires tight integration between hardware architectures and software frameworks. Hardware-software co-design approaches have emerged as critical methodologies for neuromorphic applications, particularly those that exploit in-memory computing, because the hardware's unconventional constraints must be reflected in the software that targets it. Together, these approaches address the memory-processor bottleneck that limits traditional von Neumann architectures.

The co-design process begins with hardware considerations that accommodate the unique requirements of neuromorphic algorithms. Memory-centric architectures featuring in-memory computing elements must be designed with specific neural network topologies and learning rules in mind. This includes determining optimal memory cell configurations, interconnect densities, and signal conversion mechanisms that support the parallel processing nature of neural networks.
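
Readout resolution is one concrete trade-off in choosing signal conversion mechanisms. Suppose a column sums N products of b_x-bit inputs and b_w-bit weights. The largest possible result is then N(2^b_x - 1)(2^b_w - 1), which needs about b_x + b_w + log2 N bits to represent exactly. Taller arrays and higher precision therefore push ADC requirements up. The helper below computes this bound under the simplifying assumption of unsigned integer inputs and weights; the function name is hypothetical.

```python
def adc_bits_for_lossless_readout(rows: int, input_bits: int, weight_bits: int) -> int:
    """Bits needed to represent the largest possible column sum exactly.

    Assumes unsigned integer inputs and weights (a simplifying assumption).
    """
    max_sum = rows * (2 ** input_bits - 1) * (2 ** weight_bits - 1)
    # bit_length() gives the number of bits needed for values 0..max_sum.
    return max_sum.bit_length()
```

For a 256-row array with 8-bit inputs and weights, this gives 24 bits. This is one reason practical designs often use lower-resolution ADCs and accept some truncation, or reduce input and weight precision instead.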

Software frameworks must then be developed to efficiently map neural algorithms onto these specialized hardware substrates. This involves creating abstraction layers that hide hardware complexities while exposing essential neuromorphic features. Programming models specifically designed for in-memory computing architectures enable developers to express neural computations without detailed knowledge of the underlying hardware implementation.
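
A typical job for such a mapping layer is to split a weight matrix that is larger than one physical crossbar into tiles and then sum the partial results. The sketch below assumes hypothetical tile dimensions and helper names (`tile_matrix`, `tiled_mvm`). It computes x^T W exactly, as an ideal set of crossbars with digital accumulation would.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]
TileId = Tuple[int, int]  # (row block, column block)


def tile_matrix(w: Matrix, tile_rows: int, tile_cols: int) -> Dict[TileId, Matrix]:
    """Partition w into blocks no larger than one (hypothetical) crossbar."""
    tiles: Dict[TileId, Matrix] = {}
    for r0 in range(0, len(w), tile_rows):
        for c0 in range(0, len(w[0]), tile_cols):
            block = [row[c0:c0 + tile_cols] for row in w[r0:r0 + tile_rows]]
            tiles[(r0 // tile_rows, c0 // tile_cols)] = block
    return tiles


def tiled_mvm(tiles: Dict[TileId, Matrix], x: List[float],
              tile_rows: int, tile_cols: int, n_cols: int) -> List[float]:
    """Compute x^T W from per-tile partial sums."""
    y = [0.0] * n_cols
    for (rb, cb), block in tiles.items():
        x_seg = x[rb * tile_rows: rb * tile_rows + len(block)]  # inputs driving this tile's rows
        for j in range(len(block[0])):
            # Each tile yields a partial column sum; tiles in the same column block add digitally.
            y[cb * tile_cols + j] += sum(x_seg[i] * block[i][j] for i in range(len(block)))
    return y
```

Because each tile only needs the slice of inputs that drives its rows, the same input segment can be broadcast to every tile in a row block.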

Compiler technologies play a crucial role in this co-design ecosystem by translating high-level neural network descriptions into optimized instructions for in-memory computing hardware. These compilers must account for unique constraints such as limited precision, device variability, and specialized instruction sets that differ significantly from conventional computing platforms.
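
One way a compiler might handle limited precision is a pass that snaps each trained weight to one of the few conductance levels a device can reliably hold. The following is a minimal sketch that assumes evenly spaced levels, symmetric about zero. Real devices may have non-uniform or asymmetric levels, and `quantize_to_levels` is a hypothetical name.

```python
from typing import List, Tuple


def quantize_to_levels(weights: List[float], n_levels: int) -> Tuple[List[int], List[float]]:
    """Snap weights to n_levels evenly spaced values spanning [-w_max, w_max].

    Returns (integer level codes to program, dequantized weights the hardware would realize).
    """
    if n_levels < 2:
        raise ValueError("need at least two levels")
    w_max = max(abs(w) for w in weights) or 1.0
    step = 2 * w_max / (n_levels - 1)
    # Nearest level; Python's round() sends exact ties to the even code.
    codes = [round((w + w_max) / step) for w in weights]
    realized = [c * step - w_max for c in codes]
    return codes, realized


def max_quantization_error(weights: List[float], realized: List[float]) -> float:
    """Largest absolute difference between trained and realized weights."""
    return max(abs(a - b) for a, b in zip(weights, realized))
```

The integer codes are what would be programmed into the array. The dequantized values let the compiler estimate the accuracy cost before deployment.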

Runtime systems complete the co-design stack by managing resource allocation, scheduling, and power management during execution. For neuromorphic systems utilizing in-memory computing, these runtime components must handle the dynamic nature of neural processing, including event-driven computation and adaptive learning mechanisms that modify network parameters during operation.
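
Event-driven execution is commonly organized around a time-ordered queue of spike events. The sketch below uses a binary heap (Python's `heapq`) to deliver spike arrivals in timestamp order to non-leaky integrate-and-fire neurons with per-synapse delays. The network format, `threshold`, and `t_max` are assumptions for illustration, not the interface of any particular neuromorphic runtime.

```python
import heapq
from collections import defaultdict
from typing import Dict, List, Tuple

Synapses = Dict[int, List[Tuple[int, float, float]]]  # neuron -> [(target, weight, delay)]


def run_event_driven(inputs: List[Tuple[float, int, float]], synapses: Synapses,
                     threshold: float = 1.0, t_max: float = 100.0) -> List[Tuple[float, int]]:
    """Process spike-arrival events in time order (non-leaky integrate-and-fire).

    inputs: (time, neuron, weight) arrivals injected from outside the network.
    Returns output spikes as (time, neuron) pairs, in firing order.
    """
    events = list(inputs)
    heapq.heapify(events)                  # min-heap keyed on arrival time
    potential: Dict[int, float] = defaultdict(float)
    spikes: List[Tuple[float, int]] = []
    while events:
        t, neuron, weight = heapq.heappop(events)
        if t > t_max:
            break                          # stop once past the simulation window
        potential[neuron] += weight        # work is done only when an event arrives
        if potential[neuron] >= threshold:
            potential[neuron] = 0.0        # reset after firing
            spikes.append((t, neuron))
            for target, w, delay in synapses.get(neuron, []):
                heapq.heappush(events, (t + delay, target, w))
    return spikes
```

Idle neurons cost nothing here: the loop touches a neuron only when an event addressed to it is popped from the queue.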

Simulation environments that accurately model both hardware and software behaviors are essential tools in the co-design process. These environments allow designers to evaluate performance, energy efficiency, and accuracy trade-offs before physical implementation, significantly reducing development cycles and optimization costs.
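
A very small example of the trade-offs such environments evaluate is the effect of device variability on computed outputs. This Monte Carlo sketch assumes a simple multiplicative Gaussian noise model for each programmed weight; real device statistics differ and are technology-specific. It reports the mean absolute output error for a given noise level `sigma`. All function names are hypothetical.

```python
import random
import statistics
from typing import List

Matrix = List[List[float]]


def noisy_mvm(w: Matrix, x: List[float], sigma: float, rng: random.Random) -> List[float]:
    """x^T W with each weight scaled by (1 + N(0, sigma)), an assumed device-variability model."""
    return [sum(x[i] * w[i][j] * (1.0 + rng.gauss(0.0, sigma)) for i in range(len(x)))
            for j in range(len(w[0]))]


def mean_abs_error(w: Matrix, x: List[float], sigma: float,
                   trials: int = 200, seed: int = 0) -> float:
    """Average, over Monte Carlo trials, of the mean absolute deviation from the ideal output."""
    rng = random.Random(seed)
    exact = [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]
    errors = []
    for _ in range(trials):
        y = noisy_mvm(w, x, sigma, rng)
        errors.append(statistics.fmean(abs(a - b) for a, b in zip(y, exact)))
    return statistics.fmean(errors)
```

Sweeping `sigma` against an application-level accuracy target is one way to decide how much variability a design can tolerate before it needs calibration or hardware-aware training.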

The co-design approach has yielded significant advances in neuromorphic applications, including real-time sensor processing, autonomous systems, and edge AI implementations. By considering hardware capabilities and software requirements together, researchers have reported orders-of-magnitude improvements in energy efficiency while maintaining the computational performance that complex neural processing tasks require.