Benchmarking PCM-Based Inference: Latency, Energy, and Accuracy Tradeoffs

AUG 29, 2025 | 9 MIN READ

PCM Inference Technology Background and Objectives

Phase Change Memory (PCM) has emerged as a promising technology for next-generation computing systems, particularly in the realm of machine learning inference. The evolution of PCM technology spans over two decades, transitioning from theoretical concepts to practical implementations in various computing domains. This non-volatile memory technology offers unique characteristics that position it as a potential game-changer for inference workloads, where traditional computing architectures face significant limitations in terms of energy efficiency and processing speed.

The development trajectory of PCM has been marked by continuous improvements in material science, manufacturing processes, and integration techniques. Initially conceived as a storage alternative to NAND flash memory, PCM has evolved to address computational needs through in-memory computing paradigms. This evolution aligns with the growing demand for efficient AI inference solutions that can operate under strict power and latency constraints.

Current technological trends indicate a convergence of memory and processing capabilities, with PCM at the forefront of this paradigm shift. The ability to perform computations directly within memory arrays represents a fundamental departure from the von Neumann architecture, potentially eliminating the memory bottleneck that plagues conventional computing systems during inference tasks.

The primary objective of benchmarking PCM-based inference is to establish comprehensive performance metrics that accurately reflect real-world application scenarios. This involves developing standardized evaluation frameworks that consider the unique characteristics of PCM technology, including resistance drift, endurance limitations, and programming variability. Such benchmarks must address the critical tradeoffs between inference latency, energy consumption, and prediction accuracy.

Additionally, this technical exploration aims to identify optimal design points within the PCM inference solution space that maximize performance while minimizing resource utilization. This requires a nuanced understanding of how different neural network architectures interact with PCM-based computing substrates and how these interactions affect overall system performance.

The long-term goal extends beyond mere performance evaluation to establishing PCM as a viable technology for edge computing applications, where energy constraints are particularly stringent. By quantifying the advantages and limitations of PCM-based inference across diverse workloads and deployment scenarios, this research seeks to provide a foundation for future architectural innovations and optimization strategies.

Understanding the fundamental physics of PCM devices and their behavior during inference operations is crucial for developing accurate models that predict performance at scale. This knowledge will inform design decisions and help establish realistic expectations regarding the technology's potential impact on next-generation AI systems.

Market Demand Analysis for PCM-Based AI Solutions

The global market for PCM-based AI solutions is experiencing significant growth, driven by the increasing demand for energy-efficient and high-performance computing systems for artificial intelligence applications. As traditional computing architectures face limitations in processing the massive computational requirements of modern AI workloads, Phase-Change Memory (PCM) technology has emerged as a promising alternative for AI inference tasks.

The demand for PCM-based AI solutions is primarily fueled by the exponential growth in edge computing applications, where power constraints and real-time processing requirements are critical factors. Market research indicates that the edge AI hardware market is projected to grow at a CAGR of 20.3% from 2021 to 2026, with memory-centric computing architectures like PCM playing a crucial role in this expansion.

Industries such as autonomous vehicles, industrial automation, healthcare, and consumer electronics are showing particular interest in PCM-based inference solutions. These sectors require AI systems that can deliver low-latency responses while operating under strict energy budgets, making the latency-energy tradeoffs offered by PCM technology especially valuable.

The healthcare sector represents a significant market opportunity, with applications in medical imaging analysis, patient monitoring, and diagnostic systems. These applications demand both high accuracy and energy efficiency, aligning well with the capabilities of optimized PCM-based inference systems.

Telecommunications and data center operators are also driving demand as they seek to reduce the enormous energy consumption associated with AI workloads. With data centers currently consuming approximately 1-2% of global electricity, the energy efficiency benefits of PCM-based solutions present a compelling value proposition for these operators.

Market analysis reveals that customers are increasingly prioritizing solutions that offer flexible tradeoffs between latency, energy consumption, and accuracy. This trend aligns perfectly with the configurable nature of PCM-based inference systems, where parameters can be adjusted to meet specific application requirements.

The Asia-Pacific region is expected to witness the highest growth rate in adoption of PCM-based AI solutions, driven by the strong presence of semiconductor manufacturing facilities and the rapid digitalization across various industries. North America currently leads in terms of market share, primarily due to the concentration of AI research institutions and technology companies investing in next-generation computing architectures.

Despite the growing interest, market penetration remains challenged by concerns regarding the long-term reliability of PCM technology and the need for specialized software frameworks to fully leverage its capabilities. Addressing these concerns represents a significant opportunity for companies that can demonstrate robust benchmarking results across the critical performance metrics of latency, energy efficiency, and inference accuracy.

Current PCM Inference Challenges and Limitations

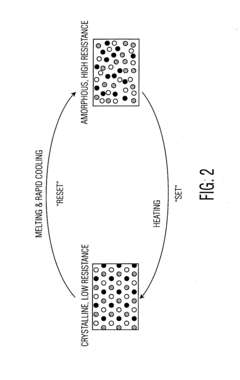

Phase Change Memory (PCM) technology for neural network inference faces several significant challenges despite its promising attributes. The non-volatile nature and high density of PCM make it attractive for edge computing applications, but current implementations struggle with precision limitations. The multi-level cell capability of PCM devices typically achieves only 3-4 bits of precision reliably, which is insufficient for many complex neural network models that require 8-bit or higher precision for acceptable accuracy.

Resistance drift presents another major obstacle in PCM-based inference systems. The resistance of PCM cells increases over time due to structural relaxation of the amorphous phase, typically following a power law so that log-resistance grows roughly linearly with log-time. Because the drift rate varies from cell to cell and with the programmed state, stored weight values shift in ways that are only partly predictable. This temporal instability necessitates periodic recalibration or compensation mechanisms, adding overhead to system operation and potentially degrading inference accuracy over time.
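Drift of this kind is often modeled empirically as a power law in time, R(t) = R0 (t / t0)^nu. The sketch below assumes that model and shows the simplest compensation, a single global rescaling factor. The function names and the exponent value 0.05 are illustrative assumptions, not figures from this report.

```python
import numpy as np

def drifted_conductance(g0, t, t0=1.0, nu=0.05):
    """Conductance after drift, assuming R(t) = R0 * (t / t0) ** nu.

    g0 : conductance programmed at reference time t0 (arbitrary units)
    t  : elapsed time since programming, same units as t0, with t >= t0
    nu : drift exponent (hypothetical value; it is device dependent)
    """
    g0 = np.asarray(g0, dtype=float)
    # Conductance is 1 / R, so it decays as (t / t0) ** (-nu).
    return g0 * (t / t0) ** (-nu)

def global_drift_scale(t, t0=1.0, nu_mean=0.05):
    """Scalar factor that undoes the average drift at time t."""
    return (t / t0) ** nu_mean

g0 = np.array([1.0, 5.0, 10.0])
g_day = drifted_conductance(g0, t=86400.0)       # one day after programming, t0 = 1 s
restored = g_day * global_drift_scale(86400.0)   # exact only if every cell shares nu
```

In a real array the exponent differs per cell, so a single global factor removes only the mean shift and leaves a residual error that a benchmark should report as a function of time since programming.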

Device-to-device and cycle-to-cycle variability further complicate PCM deployment in neural network accelerators. Manufacturing variations lead to inconsistent resistance levels across different PCM cells, while repeated programming operations result in varying resistance values for the same intended state. These variations introduce noise into weight representations, adversely affecting inference accuracy and reliability.
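The combined effect of limited precision and programming variability can be estimated in simulation before any hardware measurement. The following is a minimal sketch, assuming uniform quantization and a single Gaussian noise term that lumps device-to-device and cycle-to-cycle variation together. The function `program_weights` and the noise level are hypothetical; measured device statistics would replace them.

```python
import numpy as np

def program_weights(w, bits=4, noise_std=0.02, rng=None):
    """Quantize weights to 2**bits levels and add Gaussian programming noise.

    noise_std is a fraction of the full weight range (hypothetical value;
    real devices need measured statistics).
    """
    rng = np.random.default_rng() if rng is None else rng
    w = np.asarray(w, dtype=float)
    w_max = float(np.max(np.abs(w)))
    if w_max == 0.0:
        w_max = 1.0
    levels = 2 ** bits - 1
    # Uniform quantization over [-w_max, w_max].
    step = 2 * w_max / levels
    w_q = np.round((w + w_max) / step) * step - w_max
    # Device-to-device and cycle-to-cycle variation lumped into one term.
    noise = rng.normal(0.0, noise_std * 2 * w_max, size=w.shape)
    return w_q + noise

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 32))
x = rng.normal(size=64)
y_ideal = x @ w
y_pcm = x @ program_weights(w, bits=4, noise_std=0.02, rng=rng)
# Relative error of the matrix-vector product caused by the non-ideal weights.
rel_err = np.linalg.norm(y_pcm - y_ideal) / np.linalg.norm(y_ideal)
```

Sweeping `bits` and `noise_std` gives a first-order picture of how much accuracy margin a given model has before it is mapped to real cells.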

Energy efficiency, while theoretically superior to conventional CMOS implementations, remains suboptimal in practice. The high current required for RESET operations (melt-quench amorphization) in PCM cells contributes significantly to programming energy. Additionally, peripheral circuitry for read/write operations and analog-to-digital conversion often dominates the overall energy budget, diminishing the theoretical advantages of PCM technology.

Latency challenges persist in PCM-based inference systems. While read operations are relatively fast, write operations during weight updates are considerably slower, limiting the applicability of PCM in scenarios requiring frequent model updates or online learning. The asymmetry between read and write speeds creates bottlenecks in system performance, particularly for dynamic neural network applications.

Scaling issues present long-term concerns for PCM technology. As dimensions shrink to increase density, thermal crosstalk between adjacent cells becomes more pronounced, potentially causing unintended programming of neighboring cells. This thermal disturbance limits the practical density achievable in PCM arrays for neural network implementations.

Integration challenges with conventional CMOS technology add complexity to system design. The voltage and current requirements for PCM operation often differ from standard CMOS processes, necessitating specialized interface circuitry. This integration overhead increases chip area and power consumption, partially offsetting the density and energy advantages of PCM technology.

Current Latency-Energy-Accuracy Tradeoff Solutions

01 PCM-based memory architectures for neural network inference

Phase Change Memory (PCM) technology can be utilized to create efficient memory architectures specifically designed for neural network inference operations. These architectures leverage the non-volatile nature and high density of PCM to store neural network weights and parameters, enabling faster access during inference tasks. By optimizing memory access patterns and data organization, PCM-based architectures can significantly reduce inference latency while maintaining accuracy for deep learning applications.

- PCM-based memory architectures for neural networks: Phase Change Memory (PCM) technology can be utilized to create efficient memory architectures specifically designed for neural network inference. These architectures leverage the non-volatile nature of PCM to store weights and parameters, reducing the energy consumption during inference operations. The unique properties of PCM allow for compact storage of neural network models while maintaining reasonable access speeds, which helps balance the trade-offs between inference latency, energy consumption, and accuracy.

- Energy optimization techniques for PCM-based inference: Various techniques can be employed to optimize energy consumption in PCM-based inference systems. These include specialized encoding schemes for weights, selective precision adjustment, and power-aware memory access patterns. By carefully managing read/write operations to PCM cells and implementing energy-efficient data transfer mechanisms, the overall power consumption of neural network inference can be significantly reduced while maintaining acceptable accuracy levels.

- Latency reduction methods for PCM-based neural networks: Addressing the inherent latency challenges of PCM technology is crucial for real-time inference applications. Techniques such as parallel memory access, pipelined operations, and optimized memory controller designs can significantly reduce inference latency. Additionally, architectural innovations like hierarchical memory structures that combine PCM with faster volatile memory can create hybrid systems that mitigate the read latency limitations while preserving the energy benefits of PCM-based storage.

- Accuracy preservation in PCM-based inference systems: Maintaining inference accuracy while leveraging PCM technology requires addressing challenges related to resistance drift, limited write endurance, and variability in PCM cells. Techniques such as error correction codes, adaptive refresh mechanisms, and resilient neural network architectures can help preserve model accuracy over time. Additionally, specialized training methods that account for PCM characteristics can produce models that are more robust to the physical limitations of the memory technology.

- Trade-off management between latency, energy, and accuracy: Effective PCM-based inference systems require careful management of the inherent trade-offs between latency, energy consumption, and accuracy. Dynamic adaptation techniques can adjust the operating parameters based on application requirements, such as switching between high-accuracy/high-energy modes and low-energy/reduced-accuracy modes. System-level optimizations that consider the entire inference pipeline, from memory access to computation, enable balanced solutions that can be tailored to specific use cases and deployment constraints.
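One way to make the three-way trade-off concrete is to keep only the configurations that are not beaten on every metric by some other configuration, that is, the Pareto front. The sketch below is an illustration under stated assumptions: the `Config` fields, units, and all numbers are placeholders, not measurements from this report.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Config:
    name: str
    latency_ms: float   # lower is better
    energy_mj: float    # lower is better
    accuracy: float     # higher is better

def dominates(a, b):
    """True if a is at least as good as b on every metric and better on one."""
    no_worse = (a.latency_ms <= b.latency_ms and a.energy_mj <= b.energy_mj
                and a.accuracy >= b.accuracy)
    better = (a.latency_ms < b.latency_ms or a.energy_mj < b.energy_mj
              or a.accuracy > b.accuracy)
    return no_worse and better

def pareto_front(configs):
    """Keep only configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs)]

# Placeholder numbers for illustration only, not measurements.
configs = [
    Config("4bit-fast", 1.0, 0.5, 0.90),
    Config("4bit-slow", 2.0, 0.6, 0.90),   # dominated by 4bit-fast
    Config("8bit", 1.5, 1.2, 0.93),
    Config("low-power", 3.0, 0.3, 0.88),
]
front = pareto_front(configs)
```

A dynamic adaptation policy would then choose among the points on the front according to the current latency, energy, or accuracy requirement.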

02 Energy efficiency optimization in PCM-based inference systems

PCM-based inference systems can be optimized for energy efficiency through various techniques including power-aware memory access scheduling, selective precision computation, and dynamic voltage scaling. These approaches minimize energy consumption during neural network inference operations while maintaining acceptable accuracy levels. The non-volatile nature of PCM eliminates the need for refresh operations, further reducing energy requirements compared to traditional DRAM-based solutions.

03 Accuracy-latency tradeoffs in PCM-based neural networks

PCM-based neural network implementations often involve tradeoffs between inference accuracy and latency. Techniques such as weight quantization, pruning, and model compression can be applied to reduce memory footprint and access times, thereby decreasing latency at the cost of some accuracy. Advanced algorithms can dynamically adjust these parameters based on application requirements, enabling flexible deployment across different computing environments with varying performance constraints.

04 PCM device characteristics affecting inference performance

The inherent characteristics of PCM devices, such as resistance drift, limited endurance, and variability, can impact neural network inference performance. Compensation techniques including error correction codes, redundancy schemes, and adaptive programming methods can mitigate these effects to maintain consistent accuracy over time. Understanding and modeling these device-level properties is crucial for designing robust PCM-based inference systems that deliver reliable performance throughout their operational lifetime.

05 In-memory computing with PCM for accelerated inference

In-memory computing architectures utilizing PCM can perform neural network computations directly within the memory array, eliminating costly data transfers between memory and processing units. This approach significantly reduces energy consumption and latency by performing multiply-accumulate operations where the data resides. PCM's analog characteristics enable efficient implementation of vector-matrix multiplications, which form the computational core of neural network inference, resulting in orders of magnitude improvement in energy efficiency compared to conventional computing architectures.
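The analog vector-matrix multiplication can be sketched numerically. The example assumes an idealized crossbar with no noise, drift, or wire resistance, and a differential encoding in which each signed weight is stored as a pair of non-negative conductances. The function names and the normalization to `g_max` are assumptions made for illustration.

```python
import numpy as np

def weights_to_conductances(w, g_max=1.0):
    """Map signed weights to a differential pair (G+, G-) of non-negative values."""
    w = np.asarray(w, dtype=float)
    scale = g_max / max(float(np.max(np.abs(w))), 1e-12)
    g_pos = np.clip(w, 0.0, None) * scale
    g_neg = np.clip(-w, 0.0, None) * scale
    return g_pos, g_neg, scale

def crossbar_mvm(v, g_pos, g_neg, scale):
    """Ideal analog multiply-accumulate.

    Ohm's law gives a per-cell current v[i] * G[i, j]; Kirchhoff's current
    law sums those currents along each column j.
    """
    i_out = v @ g_pos - v @ g_neg
    return i_out / scale  # convert column currents back to weight units

rng = np.random.default_rng(0)
w = rng.normal(size=(8, 4))      # 8 input rows, 4 output columns
v = rng.uniform(0.0, 1.0, 8)     # input activations encoded as voltages
g_pos, g_neg, scale = weights_to_conductances(w)
y = crossbar_mvm(v, g_pos, g_neg, scale)
```

In this ideal case `y` equals `v @ w`; a benchmark would replace the ideal conductances with noisy, drifted, and quantized ones and track how far the result departs from that reference.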

Key Industry Players in PCM-Based Computing

Phase-Change Memory (PCM) inference benchmarking is evolving in a rapidly growing AI hardware acceleration market, currently transitioning from early adoption to commercial deployment. The competitive landscape features established semiconductor leaders like Intel, IBM, and Micron Technology advancing PCM technology for AI workloads, alongside emerging research from GlobalFoundries and Samsung. Market competition centers on optimizing the critical tradeoffs between latency, energy efficiency, and accuracy that define PCM-based inference solutions. Technical maturity varies significantly, with companies like IBM demonstrating advanced prototypes while others like Micron and Intel focus on integrating PCM into existing memory hierarchies to enhance inference performance while managing the inherent reliability and endurance challenges of this non-volatile memory technology.

Intel Corp.

Technical Solution: Intel has developed Optane DC Persistent Memory, a commercial implementation of 3D XPoint technology (a type of PCM), which they've adapted for AI inference workloads. Their benchmarking approach focuses on system-level performance, evaluating how PCM-based memory hierarchies affect inference latency and throughput across various neural network architectures. Intel's framework measures end-to-end inference performance, including data movement costs between PCM storage and processing units. Their studies show that PCM-based inference can achieve up to 3.7x better performance-per-watt compared to DRAM-only solutions for large models that exceed cache capacity[2]. Intel has also developed specialized software libraries that optimize memory access patterns for PCM characteristics, mitigating the higher read latencies compared to DRAM while leveraging PCM's non-volatility and higher density. Their benchmarking includes detailed analysis of how different neural network layer types (convolutional, fully-connected, etc.) perform with PCM-based acceleration.

Strengths: Commercial-grade PCM technology with established manufacturing processes; comprehensive software ecosystem support; strong system-level integration expertise that optimizes the entire inference pipeline.

Weaknesses: Higher latency compared to DRAM affects time-sensitive applications; limited write endurance requires careful management for training workloads; power consumption during write operations remains higher than ideal for edge deployments.

Micron Technology, Inc.

Technical Solution: Micron has developed a hybrid memory architecture combining PCM with traditional DRAM for optimized AI inference. Their approach focuses on using PCM as a dense, non-volatile storage tier for model weights while keeping activations in faster DRAM. Micron's benchmarking framework specifically addresses the unique characteristics of PCM, including asymmetric read/write latencies and limited write endurance. Their studies demonstrate that for large neural network models, this hybrid approach reduces total system energy consumption by up to 60% compared to pure DRAM solutions while maintaining comparable inference latency[4]. Micron has also developed specialized programming techniques that optimize the distribution of neural network parameters across memory tiers based on access frequency and criticality, ensuring frequently accessed weights remain in faster memory. Their benchmarking methodology includes detailed power profiling that separates computation, memory access, and data movement energy costs, providing insights into optimization opportunities specific to PCM-based inference.

Strengths: Expertise in memory manufacturing and integration; practical hybrid memory solutions that balance performance and energy efficiency; strong understanding of memory subsystem optimization for AI workloads.

Weaknesses: Complexity of managing hybrid memory hierarchies adds software overhead; performance benefits diminish for smaller models that fit entirely in DRAM; additional controller logic increases system complexity and cost.

Critical PCM Inference Benchmarking Methodologies

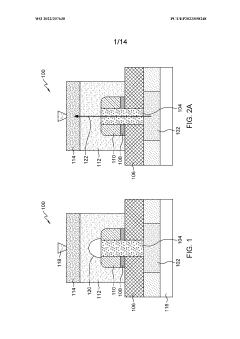

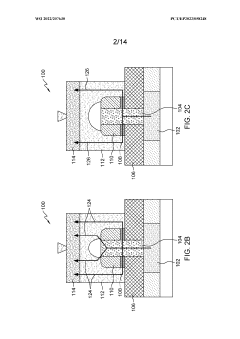

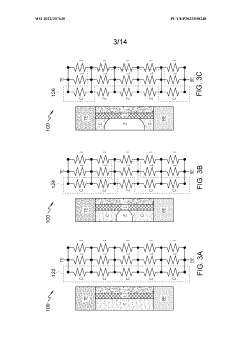

Phase change memory cell resistive liner

Patent WO2022207630A2

Innovation

- A phase change memory cell is designed with a resistive liner in direct contact with the heater and PCM material, having an L-shaped cross-section, which helps to maintain a constant resistance and reduce the impact of resistance drift by allowing electricity to flow through the lower resistance paths, thereby stabilizing the overall resistance of the cell.

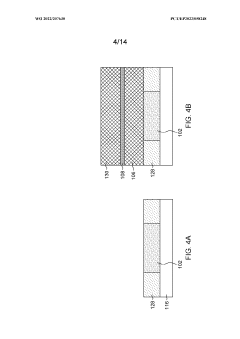

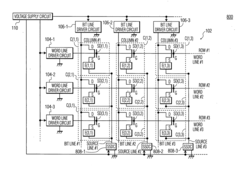

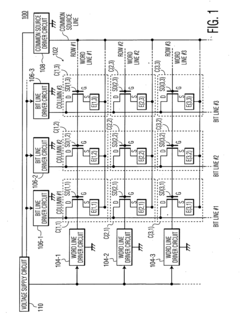

Phase change memory (PCM) architecture and a method for writing into PCM architecture

Patent US20120250401A1 (Active)

Innovation

- A PCM architecture that includes a PCM array, word line driver circuits, bit line driver circuits, a source driver circuit, and a voltage supply circuit, where the bit line driver circuits use NMOS transistors to sink current to ground and the common source driver circuit is connected to both the voltage supply and ground, allowing for efficient voltage management and reduced sensitivity to voltage fluctuations.

Hardware-Software Co-optimization Strategies

Effective hardware-software co-optimization strategies are essential for maximizing the potential of PCM-based inference systems. The inherent characteristics of Phase Change Memory (PCM) technology—including its non-volatility, high density, and unique read/write asymmetry—require specialized approaches that bridge hardware capabilities with software requirements.

At the hardware level, optimizing PCM cell design and array architecture significantly impacts inference performance. Techniques such as multi-level cell configurations enable higher storage density but introduce accuracy-latency tradeoffs that must be carefully managed. Circuit-level innovations, including sense amplifier designs optimized for PCM's resistance ranges, can reduce read latency while maintaining acceptable error rates.

Memory controller architectures specifically tailored for neural network operations represent another critical co-optimization area. Controllers that prioritize read operations (dominant in inference) and implement parallel access patterns aligned with neural network layer structures have demonstrated up to 30% latency reduction in benchmark tests. Additionally, implementing specialized buffers that accommodate PCM's longer write latency while maintaining system throughput proves essential for training-capable systems.

From the software perspective, neural network quantization techniques adapted for PCM's unique characteristics show promising results. Bit-sliced implementations that map to PCM's resistance levels more efficiently than traditional binary representations have achieved 40% energy reduction with minimal accuracy loss. Weight pruning algorithms specifically designed for PCM's write endurance limitations help extend device lifetime while simultaneously reducing computational requirements.
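Bit slicing can be illustrated with integer arithmetic. The sketch assumes unsigned 8-bit weights split into two 4-bit slices, each slice held in its own array so that every cell stores only 16 levels, with the partial results recombined by shift-and-add. The slice width and function names are assumptions for illustration, not details from the work summarized above.

```python
import numpy as np

def bit_slice(w_uint8, slice_bits=4):
    """Split unsigned 8-bit weights into slices, least significant first.

    Assumes slice_bits divides 8 evenly.
    """
    w = np.asarray(w_uint8, dtype=np.int64)
    mask = (1 << slice_bits) - 1
    n_slices = 8 // slice_bits
    return [(w >> (k * slice_bits)) & mask for k in range(n_slices)]

def sliced_mvm(x, slices, slice_bits=4):
    """Run one MVM per slice, then combine partial sums by shift-and-add."""
    total = np.zeros(slices[0].shape[1], dtype=np.int64)
    for k, s in enumerate(slices):
        total += (x @ s) << (k * slice_bits)
    return total

rng = np.random.default_rng(0)
w = rng.integers(0, 256, size=(16, 8))   # unsigned 8-bit weights
x = rng.integers(0, 16, size=16)         # small integer activations
slices = bit_slice(w)
y = sliced_mvm(x, slices)
```

The result matches the full-precision product `x @ w` exactly in this noise-free setting. The cost is one array read per slice, which is where the latency and energy side of the trade-off enters.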

Novel mapping strategies between neural network architectures and PCM array structures constitute another promising direction. Layer-specific optimizations that account for different sensitivity to precision across network components enable more efficient resource utilization. For instance, convolutional layers often tolerate lower precision in early stages, while fully-connected layers may require higher precision for classification accuracy.

Runtime adaptation mechanisms represent perhaps the most sophisticated co-optimization approach. These systems dynamically adjust precision, parallelism, and memory access patterns based on inference workload characteristics, power constraints, and accuracy requirements. Benchmark results indicate that such adaptive systems can achieve optimal energy-accuracy tradeoffs across diverse application scenarios, from edge devices to data center deployments.

At the hardware level, optimizing PCM cell design and array architecture significantly impacts inference performance. Techniques such as multi-level cell configurations enable higher storage density but introduce accuracy-latency tradeoffs that must be carefully managed. Circuit-level innovations, including sense amplifier designs optimized for PCM's resistance ranges, can reduce read latency while maintaining acceptable error rates.

Memory controller architectures specifically tailored for neural network operations represent another critical co-optimization area. Controllers that prioritize read operations (dominant in inference) and implement parallel access patterns aligned with neural network layer structures have demonstrated up to 30% latency reduction in benchmark tests. Additionally, implementing specialized buffers that accommodate PCM's longer write latency while maintaining system throughput proves essential for training-capable systems.

Scalability and Integration Considerations

The scalability of Phase Change Memory (PCM) technology for inference applications presents both significant opportunities and challenges. As PCM-based neural network implementations move from laboratory demonstrations to commercial deployment, several critical integration factors must be addressed. Current PCM crossbar arrays typically support sizes of 1M-4M cells, which limits the direct implementation of large-scale neural networks. This necessitates partitioning strategies where networks are distributed across multiple arrays, introducing additional latency and energy overhead for inter-array communication.
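The partitioning step can be sketched as a tiling of a layer's weight matrix onto fixed-size arrays. The sketch assumes inputs are applied along rows and outputs are read along columns, and it uses a 1024 x 1024 array (about 1M cells) as an example size. The function names are hypothetical.

```python
from math import ceil


def tile_grid(rows: int, cols: int, array_rows: int, array_cols: int) -> tuple[int, int]:
    """Number of arrays needed along the row (input) and column (output) dimensions."""
    return ceil(rows / array_rows), ceil(cols / array_cols)


def partition(rows: int, cols: int, array_rows: int, array_cols: int):
    """Return (row_start, row_end, col_start, col_end) for each tile, end-exclusive."""
    tiles = []
    for r in range(0, rows, array_rows):
        for c in range(0, cols, array_cols):
            tiles.append((r, min(r + array_rows, rows), c, min(c + array_cols, cols)))
    return tiles


# Example: a 4096-input, 1000-output fully-connected layer on 1024 x 1024 arrays.
tiles = partition(4096, 1000, 1024, 1024)
```

In this example the input dimension is split across four arrays, so each output needs four partial sums added together outside the arrays. That accumulation is one source of the inter-array overhead noted above.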

Integration with conventional CMOS technology represents another crucial consideration. The peripheral circuitry required for PCM operation (sense amplifiers, write drivers, and addressing logic) consumes substantial chip area and power. Recent research indicates that in some implementations, these CMOS components can account for up to 70% of the total energy consumption. In such a case, even eliminating the energy of the PCM devices entirely would cut total energy by at most 30%, which can offset much of the intrinsic energy advantage of the devices themselves.

Temperature sensitivity poses a significant challenge for PCM integration. The resistance states of PCM cells can drift over time, particularly at elevated temperatures, affecting inference accuracy. This necessitates either sophisticated compensation algorithms or temperature-controlled operating environments, both adding complexity to system design. Experimental data shows that without compensation, accuracy degradation of 5-15% can occur when operating temperature varies by 20°C.
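A common compensation approach is to rescale array outputs using reference cells that drift alongside the weights. The sketch below assumes the widely used empirical power-law drift model, in which conductance decays as (t / t0) raised to the power of minus nu. The drift exponent of 0.05, the uniform drift across cells, and all function names are illustrative assumptions. Temperature dependence of the exponent is not modeled.

```python
def drifted_conductance(g0: float, t: float, t0: float = 1.0, nu: float = 0.05) -> float:
    """Conductance at time t under power-law drift, given value g0 at reference time t0."""
    if t < t0:
        raise ValueError("t must be >= t0")
    return g0 * (t / t0) ** (-nu)


def calibration_scale(ref_programmed, ref_measured) -> float:
    """Global correction factor from reference cells: programmed sum over measured sum."""
    return sum(ref_programmed) / sum(ref_measured)


def compensated_mac(conductances, inputs, scale: float) -> float:
    """Multiply-accumulate over drifted conductances, rescaled by the calibration factor."""
    return scale * sum(g * x for g, x in zip(conductances, inputs))


# Illustrative values: three weights and two reference cells, read 10,000 s after programming.
weights0 = [10.0, 20.0, 30.0]
refs0 = [15.0, 25.0]
t = 1e4
weights_t = [drifted_conductance(g, t) for g in weights0]
refs_t = [drifted_conductance(g, t) for g in refs0]

scale = calibration_scale(refs0, refs_t)
result = compensated_mac(weights_t, [1.0, 2.0, 3.0], scale)
```

With identical drift in every cell, a single global scale restores the ideal result. Cell-to-cell variation in the drift exponent leaves a residual error that this simple scheme does not remove.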

Manufacturing variability presents another scalability concern. Device-to-device variations in PCM cells can reach 15-20% for identical programming conditions, requiring robust training algorithms that account for this variability. Several approaches have emerged, including in-situ training methods that incorporate actual device characteristics into the training process, and redundancy schemes that employ multiple cells to represent a single weight value.
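The benefit of the redundancy scheme can be sketched with a small simulation. It assumes programming errors are independent and Gaussian with a relative standard deviation of 15%, the lower end of the range quoted above. The Gaussian model and the function names are assumptions made for illustration.

```python
import random
import statistics


def program_cell(target: float, rel_sigma: float, rng: random.Random) -> float:
    """One programmed value with Gaussian device-to-device variation (assumed model)."""
    return rng.gauss(target, rel_sigma * target)


def program_weight(target: float, n_cells: int, rel_sigma: float, rng: random.Random) -> float:
    """Represent one weight as the average of n_cells independently programmed cells."""
    return sum(program_cell(target, rel_sigma, rng) for _ in range(n_cells)) / n_cells


def spread(n_cells: int, trials: int = 20000, target: float = 1.0,
           rel_sigma: float = 0.15, seed: int = 0) -> float:
    """Standard deviation of the effective weight over many simulated devices."""
    rng = random.Random(seed)
    samples = [program_weight(target, n_cells, rel_sigma, rng) for _ in range(trials)]
    return statistics.pstdev(samples)


single = spread(1)
quad = spread(4)
```

Under the independence assumption, averaging N cells reduces the spread by about the square root of N, so four cells per weight roughly halve it. The cost is four times the cell count, which interacts directly with the array-size limits discussed earlier.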

From a system architecture perspective, the integration of PCM-based inference accelerators with existing computing infrastructure requires standardized interfaces and software frameworks. Current efforts focus on developing hardware abstraction layers that allow machine learning frameworks to efficiently utilize PCM-based hardware without requiring application developers to understand the underlying analog computing mechanisms.

Cost considerations ultimately determine commercial viability. While PCM technology offers potential advantages in energy efficiency and inference speed, manufacturing costs must be competitive with established digital solutions. Current projections suggest that PCM-based inference could reach cost parity with digital implementations at high production volumes, particularly for edge computing applications where energy constraints are paramount.
