Comparative Study: PCM Versus RRAM For In-Memory Multiply-Accumulate

AUG 29, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

PCM and RRAM Technology Background and Objectives

Phase-change memory (PCM) and resistive random-access memory (RRAM) represent two of the most promising non-volatile memory technologies that have emerged over the past two decades. These technologies have evolved from simple storage elements to complex computational units capable of performing in-memory computing operations, particularly multiply-accumulate (MAC) functions that are fundamental to modern artificial intelligence and machine learning applications.

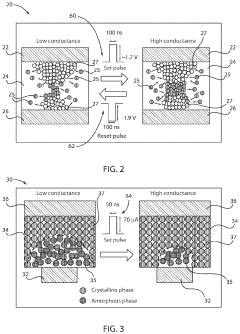

PCM technology, first conceptualized in the 1960s, exploits chalcogenide materials that can switch rapidly between amorphous and crystalline phases. The two phases differ markedly in electrical resistivity, enabling both binary and multi-level data storage. Commercial development of PCM accelerated in the 2000s, and in 2015 Intel and Micron announced 3D XPoint, a memory widely reported to be PCM-based, demonstrating the technology's viability for high-performance storage applications.

RRAM devices, often described as memristive devices, rely on resistance switching in metal-oxide thin films. Under appropriate voltage biases, conductive filaments form and dissolve within these films, creating stable resistance states. RRAM development gained momentum in the 2010s, with significant contributions from research institutions and from companies such as Crossbar, Weebit Nano, and TSMC, which recognized its potential for high-density, low-power memory applications.

The evolution of both technologies has been driven by the increasing demands of data-intensive computing paradigms and the physical limitations of conventional von Neumann architectures. The memory wall—the growing disparity between processor and memory speeds—has necessitated novel approaches to data processing, with in-memory computing emerging as a promising solution.

The technical objectives for both PCM and RRAM in the context of in-memory MAC operations include achieving high computational density, minimizing energy consumption per operation, ensuring computational accuracy through precise resistance control, and maintaining long-term reliability despite the inherent wear mechanisms of these technologies. Additionally, there is a focus on developing efficient peripheral circuitry and programming schemes that can fully exploit the analog nature of these devices for parallel MAC operations.

Recent research has demonstrated that both PCM and RRAM can perform vector-matrix multiplications directly within memory arrays, potentially offering orders-of-magnitude improvements in energy efficiency over conventional digital approaches. This capability positions them as key enablers for edge AI applications, where power constraints are particularly stringent.

The convergence of memory and computing functions in these technologies represents a paradigm shift in computer architecture, potentially overcoming fundamental bottlenecks in traditional computing systems and enabling more efficient implementation of neural network algorithms that underpin modern AI applications.

Market Demand Analysis for In-Memory Computing Solutions

The in-memory computing market is experiencing unprecedented growth driven by the increasing demands of data-intensive applications such as artificial intelligence, machine learning, and big data analytics. According to recent market research, the global in-memory computing market is projected to reach $25.5 billion by 2025, growing at a CAGR of 18.4% from 2020. This remarkable growth trajectory is primarily fueled by the need to overcome the von Neumann bottleneck in traditional computing architectures.
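As a sanity check on this projection, the compound-growth formula can be inverted to show the 2020 market size it implies. This is a sketch of the arithmetic only; it uses the two figures quoted above and no other data.

```python
# Back out the 2020 market size implied by the projection quoted above, using
# value_2025 = value_2020 * (1 + CAGR) ** years.
TARGET_2025_BUSD = 25.5  # projected 2025 market size in USD billions (from the text)
CAGR = 0.184             # 18.4% compound annual growth rate (from the text)
YEARS = 2025 - 2020      # number of compounding periods


def implied_base(target: float, rate: float, years: int) -> float:
    """Invert the compound-growth formula to recover the starting value."""
    return target / (1.0 + rate) ** years


base_2020 = implied_base(TARGET_2025_BUSD, CAGR, YEARS)
print(f"Implied 2020 market size: ${base_2020:.1f}B")
```

The result, roughly $11 billion, is simply what the quoted growth rate requires; it is not an independent market estimate.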

Organizations across various sectors are seeking solutions that can process massive datasets with minimal latency. Financial services companies require real-time fraud detection and risk assessment capabilities, while healthcare institutions need rapid analysis of patient data for improved diagnostics. Similarly, telecommunications providers are looking for ways to analyze network traffic patterns instantaneously to optimize service delivery.

In-memory multiply-accumulate operations, particularly those implemented using emerging non-volatile memory technologies like PCM (Phase Change Memory) and RRAM (Resistive Random Access Memory), are positioned to address these critical market needs. These technologies enable computational tasks to be performed directly within memory units, dramatically reducing data movement and energy consumption while increasing processing speed.

The demand for PCM and RRAM-based in-memory computing solutions is particularly strong in edge computing applications, where power efficiency and form factor are paramount concerns. Market surveys indicate that approximately 65% of IoT solution providers consider in-memory computing essential for next-generation edge devices that require local AI processing capabilities.

Enterprise data centers represent another significant market segment, with 78% of data center operators expressing interest in adopting in-memory computing solutions to accelerate database operations and analytics workloads. The ability of PCM and RRAM technologies to retain data without power makes them especially attractive for these applications, potentially reducing operational costs by up to 40%.

Automotive and aerospace industries are emerging as high-potential markets for in-memory computing solutions. Advanced driver-assistance systems (ADAS) and autonomous vehicles require real-time processing of sensor data, creating demand for low-latency, high-throughput computing solutions that can operate reliably in harsh environments. Market analysis shows that automotive computing solutions incorporating in-memory processing could grow at 22.7% CAGR through 2027.

Consumer electronics manufacturers are also showing increased interest in these technologies, with smartphone and wearable device makers exploring PCM and RRAM-based solutions to enable more sophisticated on-device AI capabilities while extending battery life. This segment is expected to adopt in-memory computing solutions rapidly once the technology reaches price parity with conventional memory solutions.

Current State and Challenges in Memory-Based MAC Operations

In-memory computing has emerged as a promising solution to overcome the von Neumann bottleneck in traditional computing architectures. Among various in-memory computing paradigms, memory-based Multiply-Accumulate (MAC) operations have gained significant attention, particularly for accelerating neural network computations. Currently, Phase Change Memory (PCM) and Resistive Random Access Memory (RRAM) represent two leading non-volatile memory technologies being explored for in-memory MAC operations.

The current state of PCM-based MAC operations leverages the analog nature of these devices, where conductance states can represent synaptic weights in neural networks. PCM devices exhibit multi-level cell capabilities, typically achieving 2-4 bits per cell in practical implementations. Recent advancements have demonstrated PCM crossbar arrays capable of performing vector-matrix multiplications with energy efficiency improvements of 10-100× compared to conventional digital approaches.
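The "bits per cell" figure translates directly into the number of distinguishable conductance levels, 2^b, and hence into how coarsely a weight can be stored. The sketch below assumes idealized, evenly spaced levels over a normalized conductance range of [0, 1]; real devices have unevenly spaced, noisy levels.

```python
def quantize_to_levels(w: float, bits: int) -> float:
    """Snap a normalized weight w to the nearest of 2**bits evenly spaced
    levels in [0, 1], as an idealized multi-level cell would store it."""
    levels = 2 ** bits
    step = 1.0 / (levels - 1)
    w = min(max(w, 0.0), 1.0)  # clip to the programmable range
    return round(w / step) * step


for bits in (2, 3, 4):
    levels = 2 ** bits
    worst_case_error = 0.5 / (levels - 1)  # half of one level spacing
    print(f"{bits} bits: {levels} levels, worst-case error {worst_case_error:.3f}, "
          f"0.4 stored as {quantize_to_levels(0.4, bits):.3f}")
```

Going from 2 to 4 bits per cell shrinks the worst-case storage error by a factor of five (from 1/6 to 1/30 of the full range) in this idealized model.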

RRAM technology, meanwhile, has demonstrated promising characteristics for MAC operations through crossbar architectures where the conductance of each cell represents a weight value. Current RRAM implementations can achieve switching speeds in the nanosecond range and retention times exceeding 10 years. Several research groups have demonstrated RRAM-based neural network accelerators with energy efficiencies approaching 10 TOPS/W (Tera Operations Per Second per Watt).

Despite these advancements, significant challenges persist in both technologies. For PCM, resistance drift poses a major obstacle, where the programmed resistance value changes over time due to structural relaxation of the amorphous phase. This drift follows a power-law behavior and can lead to significant accuracy degradation in neural network computations over extended periods.
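The power-law drift can be written as G(t) = G0 · (t / t0)^(-ν). The sketch below applies it to one crossbar column and then rescales the output using a reference cell programmed at the same time. It assumes a single, uniform drift coefficient ν for every device, which makes the compensation exact here; in practice ν varies between devices and programmed states, so this kind of global correction removes only the average drift. NU, T0, and all conductance and voltage values are illustrative assumptions.

```python
# Power-law conductance drift and a simple reference-cell compensation.
# NU and T0 are illustrative assumptions, not measured values.
NU = 0.05  # assumed drift coefficient
T0 = 1.0   # assumed time (s) after programming at which G0 is defined


def drifted_conductance(g0: float, t: float, nu: float = NU, t0: float = T0) -> float:
    """G(t) = G0 * (t / t0) ** (-nu), for t >= t0."""
    return g0 * (t / t0) ** (-nu)


def dot(conductances, voltages):
    """Column current of an ideal crossbar: sum of G_i * V_i."""
    return sum(g * v for g, v in zip(conductances, voltages))


weights_us = [10.0, 20.0, 5.0]  # programmed conductances, microsiemens
inputs_v = [0.2, 0.1, 0.3]      # read voltages, volts
REF_G0 = 10.0                   # reference cell programmed alongside the weights

t = 24 * 3600.0                 # one day after programming
ideal = dot(weights_us, inputs_v)
raw = dot([drifted_conductance(g, t) for g in weights_us], inputs_v)

# Compensation: rescale the output by how far the reference cell has drifted.
scale = REF_G0 / drifted_conductance(REF_G0, t)
compensated = raw * scale
print(f"ideal {ideal:.3f} uA, drifted {raw:.3f} uA, compensated {compensated:.3f} uA")
```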

Device-to-device and cycle-to-cycle variability represents another critical challenge for both PCM and RRAM technologies. This variability can reach 15-30% in practical implementations, severely limiting the precision of MAC operations and consequently affecting the accuracy of neural network inference tasks.
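A quick Monte Carlo sketch shows how per-cell variability of the magnitude quoted above propagates into a single column's MAC result. It assumes independent, zero-mean Gaussian errors on each weight (a simplification: real variability also has systematic and state-dependent components), a 128-row column, and uniform random weights and inputs.

```python
import random
import statistics


def noisy_dot(weights, inputs, sigma, rng):
    """Dot product in which every stored weight carries an independent
    Gaussian relative error of standard deviation sigma."""
    total = 0.0
    for w, x in zip(weights, inputs):
        g = max(0.0, w * (1.0 + rng.gauss(0.0, sigma)))  # conductance cannot be negative
        total += g * x
    return total


def mean_relative_error(n=128, sigma=0.15, trials=500, seed=0):
    """Average |noisy - ideal| / ideal over repeated reads of one random column."""
    rng = random.Random(seed)
    weights = [rng.random() for _ in range(n)]
    inputs = [rng.random() for _ in range(n)]
    ideal = sum(w * x for w, x in zip(weights, inputs))
    errors = [abs(noisy_dot(weights, inputs, sigma, rng) - ideal) / ideal
              for _ in range(trials)]
    return statistics.mean(errors)


for sigma in (0.15, 0.30):
    print(f"sigma = {sigma:.2f}: mean relative column error ~ {mean_relative_error(sigma=sigma):.2%}")
```

Because the errors in this model are independent and zero-mean, they partially average out along the column, so the column error ends up well below σ; correlated or systematic variation would not average out this way.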

The limited endurance of these memory technologies also presents a significant hurdle. PCM typically offers 10^6-10^8 write cycles, while RRAM has been reported at 10^9-10^11 cycles, roughly three orders of magnitude higher. However, both fall short of the requirements of training workloads that may demand 10^15 or more write operations over the lifetime of a system.
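To put these endurance figures in perspective, the sketch below converts a cycle budget into the time a single cell lasts at a constant write rate. The rate of 1,000 writes per second per cell is a hypothetical assumption for an update-heavy workload, not a figure from the text.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lifetime_years(endurance_cycles: float, writes_per_second: float) -> float:
    """Years until a single cell reaches its endurance limit at a constant write rate."""
    return endurance_cycles / writes_per_second / SECONDS_PER_YEAR


# Hypothetical per-cell update rate during on-chip training (assumption).
WRITES_PER_SECOND = 1_000.0

for label, cycles in [("PCM low", 1e6), ("PCM high", 1e8),
                      ("RRAM low", 1e9), ("RRAM high", 1e11)]:
    years = lifetime_years(cycles, WRITES_PER_SECOND)
    print(f"{label:9s} {cycles:.0e} cycles -> {years:.3g} years")
```

At this assumed rate, even the upper RRAM figure lasts only about three years, while the lower PCM figure is exhausted in under twenty minutes.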

Sneak path currents in crossbar arrays constitute another major challenge, particularly for RRAM implementations. These parasitic currents can cause read disturbances and increase power consumption, limiting the practical size of memory arrays that can be effectively utilized for MAC operations.
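The read disturbance caused by sneak paths can be illustrated with the smallest possible case: a selector-less 2x2 crossbar in which one row is driven, one column is sensed, and the remaining lines float. The only sneak path then runs through the other three cells in series. The resistance states and read voltage are assumptions; real arrays contain many parallel sneak paths, and selector devices (such as the transistor in a 1T1R cell) or biasing schemes are commonly used to suppress them.

```python
def read_current_2x2(v_read, r_target, r_01, r_11, r_10):
    """Currents into the sensed column of a selector-less 2x2 crossbar when only
    the target row is driven and the unselected lines float.

    The sneak path runs target row -> cell(0,1) -> column 1 -> cell(1,1)
    -> row 1 -> cell(1,0) -> sensed column, i.e. three cells in series.
    """
    i_target = v_read / r_target
    i_sneak = v_read / (r_01 + r_11 + r_10)
    return i_target, i_sneak


R_LRS, R_HRS = 10e3, 1e6  # assumed low/high resistance states, ohms
V_READ = 0.2              # assumed read voltage, volts

# Worst case: the target cell is in HRS while its neighbours are all in LRS.
i_t, i_s = read_current_2x2(V_READ, R_HRS, R_LRS, R_LRS, R_LRS)
apparent_r = V_READ / (i_t + i_s)
print(f"true {R_HRS / 1e3:.0f} kOhm, apparent {apparent_r / 1e3:.1f} kOhm")
```

With these values the sneak current is over thirty times the target current, so a 1 MOhm cell reads as roughly 29 kOhm, close enough to the low-resistance state to be misread.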

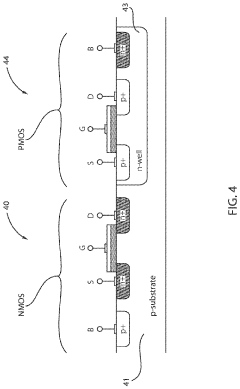

The integration of these memory technologies with CMOS peripherals also presents significant fabrication challenges, including thermal budget constraints, material compatibility issues, and the need for specialized sensing circuits to accurately detect the multi-level states required for high-precision MAC operations.

Current Technical Implementations of In-Memory MAC

01 PCM-based MAC operations for neural networks

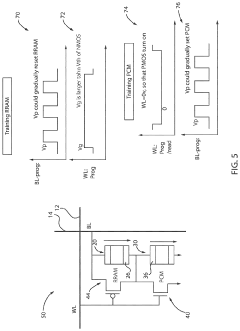

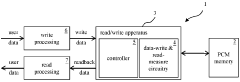

Phase Change Memory (PCM) can be utilized for multiply-accumulate operations in neural network implementations. PCM devices leverage their analog resistance states to perform parallel multiplication and accumulation, which is fundamental for neural network computations. This approach significantly reduces power consumption and increases computational efficiency compared to traditional digital implementations. The non-volatile nature of PCM allows for persistent storage of weights, enabling faster neural network processing.

- PCM-based MAC operations for neural networks: Phase Change Memory (PCM) can be utilized for multiply-accumulate operations in neural network implementations. The resistance states of PCM cells can represent weights in neural networks, allowing for efficient parallel computation. These memory devices enable in-memory computing where calculations are performed directly within the memory array, reducing data movement and improving energy efficiency for AI applications.

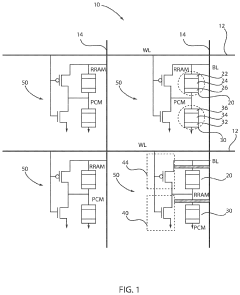

- RRAM crossbar arrays for matrix multiplication: Resistive Random Access Memory (RRAM) organized in crossbar arrays can perform matrix multiplication operations efficiently. By mapping matrix values to the conductance of RRAM cells and applying input voltages, the resulting currents represent multiplication results that can be accumulated along bitlines. This architecture enables highly parallel vector-matrix multiplication operations critical for deep learning and other computational tasks.

- Hybrid computing architectures combining memory and processing: Hybrid architectures integrate memory technologies like PCM and RRAM with conventional processing elements to accelerate multiply-accumulate operations. These systems leverage the non-volatile nature and analog computation capabilities of resistive memories while maintaining compatibility with digital systems. The approach reduces the memory-processor bottleneck by performing computations where data is stored.

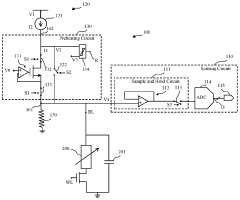

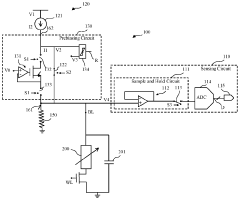

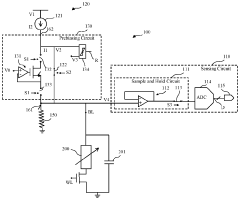

- Circuit designs for memory-based MAC operations: Specialized circuit designs enable efficient multiply-accumulate operations using memory devices. These circuits include sense amplifiers, analog-to-digital converters, and peripheral circuitry that support the conversion between resistance states and computational results. The designs address challenges such as device variability, precision limitations, and power consumption to enable practical implementation of memory-based computing.

- Algorithmic approaches for memory-based computing: Algorithmic techniques optimize the implementation of multiply-accumulate operations on PCM and RRAM devices. These approaches include quantization methods, error correction techniques, and mapping strategies that account for device non-idealities. By adapting algorithms to the characteristics of resistive memory technologies, these methods improve computational accuracy and efficiency while overcoming the inherent limitations of analog computing with memory devices.
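The crossbar principle described in these bullets can be written as I_j = Σ_i G_ij · V_i: Ohm's law produces a current G_ij · V_i in each cell, and Kirchhoff's current law sums those currents on each bit line. The sketch below models an ideal crossbar (no wire resistance, noise, sneak paths, or ADC), with inputs on rows and outputs on columns; the conductance and voltage values are arbitrary.

```python
from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Ideal crossbar read: conductances[i][j] is the cell between row i and column j.

    Ohm's law gives each cell current G[i][j] * V[i]; Kirchhoff's current law sums
    those currents on column j, so I[j] = sum_i G[i][j] * V[i].
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per row is required")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * voltages[i]
    return currents


G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]   # siemens
V = [0.1, 0.2, 0.3]  # volts
print(crossbar_mvm(G, V))
```

All column currents are produced in a single read step, which is where the parallelism of in-memory MAC comes from; in a real array each column current would then be digitized by the peripheral circuitry.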

02 RRAM-based in-memory computing for MAC operations

Resistive Random Access Memory (RRAM) can be configured to perform multiply-accumulate operations directly within memory arrays. This in-memory computing approach eliminates the need for data movement between processing and memory units, significantly reducing energy consumption and latency. RRAM cells store weights as conductance values and perform multiplication through Ohm's law, while accumulation is achieved through current summation. This architecture is particularly efficient for implementing convolutional neural networks and other AI applications.

03 Hybrid PCM/RRAM architectures for enhanced MAC performance

Combining PCM and RRAM technologies in hybrid architectures leverages the strengths of both memory types for more efficient multiply-accumulate operations. These hybrid systems can utilize PCM for weight storage due to its multi-level cell capability and RRAM for computation due to its lower power consumption. The integration enables more complex neural network implementations with improved energy efficiency, higher density, and better performance for AI workloads. Such hybrid approaches also address individual limitations of each technology.

04 Circuit designs for PCM/RRAM-based MAC operations

Specialized circuit designs enable efficient implementation of multiply-accumulate operations using PCM and RRAM technologies. These circuits include sense amplifiers, analog-to-digital converters, and peripheral control logic optimized for memory-based computing. The designs focus on minimizing parasitic effects, reducing read/write latency, and improving precision of MAC operations. Advanced circuit techniques also address challenges related to device variability, noise, and non-linearity in resistive memory elements to ensure computational accuracy.

05 Algorithms and methods for optimizing PCM/RRAM MAC operations

Various algorithms and methods have been developed to optimize multiply-accumulate operations in PCM and RRAM-based systems. These include weight quantization techniques, error compensation schemes, and training algorithms specifically designed for resistive memory characteristics. Optimization approaches address challenges such as limited precision, device-to-device variability, and resistance drift over time. Advanced mapping strategies ensure efficient utilization of memory arrays for parallel MAC operations while minimizing energy consumption and maximizing throughput.
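Conductances are non-negative, so mapping strategies need some way to represent signed weights. One common approach, shown here as an assumption rather than as any specific method referenced above, stores each weight as the difference of two devices, G+ and G-, combined with quantization to a fixed number of levels. The maximum conductance and bit width are illustrative.

```python
def map_signed_weight(w: float, w_max: float, g_max: float, bits: int):
    """Map a signed weight onto a (G_plus, G_minus) differential pair.

    |w| / w_max is clipped to [0, 1], snapped to one of 2**bits evenly spaced
    levels, and scaled to a conductance in [0, g_max]. Positive weights are
    placed on G_plus and negative weights on G_minus; the other device stays at 0.
    """
    steps = 2 ** bits - 1
    frac = min(abs(w) / w_max, 1.0)
    g = round(frac * steps) / steps * g_max
    return (g, 0.0) if w >= 0 else (0.0, g)


def effective_weight(pair, w_max: float, g_max: float) -> float:
    """Weight recovered from the column-current difference I_plus - I_minus."""
    g_plus, g_minus = pair
    return (g_plus - g_minus) / g_max * w_max


G_MAX = 20e-6  # assumed maximum programmable conductance, siemens
for w in (0.75, -0.4):
    pair = map_signed_weight(w, w_max=1.0, g_max=G_MAX, bits=4)
    print(w, pair, round(effective_weight(pair, 1.0, G_MAX), 4))
```

In a real array the two devices sit on separate columns, and the subtraction is done by the peripheral circuitry or after digitization.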

Key Industry Players in PCM and RRAM Development

The PCM versus RRAM in-memory multiply-accumulate technology landscape is currently in a growth phase, with market size expanding as AI applications drive demand for efficient computing solutions. PCM technology, championed by IBM, Micron, and KIOXIA, offers maturity advantages with established manufacturing processes. Meanwhile, RRAM technology, pursued by companies like Hefei Reliance Memory and SuperMem, presents promising energy efficiency and scaling potential despite lower maturity. Major semiconductor players including TSMC, Infineon, and Qualcomm are investing in both technologies, indicating industry recognition of their importance. Academic-industry collaborations involving Tsinghua University, Fudan University, and University of Michigan are accelerating development, suggesting the competitive landscape will continue evolving as these technologies approach broader commercialization.

International Business Machines Corp.

Technical Solution: IBM has pioneered significant advancements in both PCM and RRAM technologies for in-memory computing applications. Their PCM-based solution uses multi-level cells to perform multiply-accumulate operations directly within memory arrays. IBM's architecture utilizes a crossbar array structure where PCM elements at each crosspoint store weights as variable conductance states. For MAC operations, input vectors are applied as voltage pulses along word lines, and the resulting currents summed along bit lines represent the dot product computation[1]. IBM has demonstrated 8-bit precision in their PCM implementations, with an energy cost of approximately 1.4 pJ per MAC operation and a reported energy efficiency exceeding 20 TOPS/W in neuromorphic computing applications[2]. Their approach addresses drift compensation through periodic calibration techniques and reference cells, enabling reliable long-term operation despite PCM's inherent conductance drift.

Strengths: Superior retention characteristics compared to RRAM (>10 years at 85°C), higher endurance (>10^9 cycles demonstrated), better analog precision with more distinct resistance states. Weaknesses: Higher programming energy requirements than RRAM, slower SET operation speed, and susceptibility to resistance drift requiring compensation circuits that increase complexity.
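Energy per MAC and TOPS/W are reciprocal views of the same quantity, but sources differ on whether one MAC counts as one operation or two (a multiply plus an add). The conversion below makes that convention explicit and is also applied to the 0.8 pJ figure in the KIOXIA profile. Under either convention, 1.4 pJ per MAC corresponds to well under 20 TOPS/W, so the two IBM figures quoted above cannot both describe the same operating point.

```python
def tops_per_watt(pj_per_mac: float, ops_per_mac: int = 2) -> float:
    """Convert energy per MAC (picojoules) into efficiency (TOPS/W).

    1 W = 1 J/s, so operations per joule = ops_per_mac / (pj_per_mac * 1e-12);
    dividing by 1e12 expresses that in tera-operations per second per watt.
    """
    return ops_per_mac / (pj_per_mac * 1e-12) / 1e12


for pj in (1.4, 0.8):
    print(f"{pj} pJ/MAC -> {tops_per_watt(pj):.2f} TOPS/W (2 ops/MAC), "
          f"{tops_per_watt(pj, ops_per_mac=1):.2f} TOPS/W (1 op/MAC)")
```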

KIOXIA Corp.

Technical Solution: KIOXIA (formerly Toshiba Memory) has developed innovative RRAM-based computing-in-memory solutions targeting neural network acceleration. Their architecture employs a 1T1R (one transistor, one resistor) cell structure in a crossbar array configuration, where RRAM elements store synaptic weights as conductance values. For MAC operations, KIOXIA's approach applies input voltages to word lines while simultaneously sensing currents on bit lines, with the resulting analog values representing computed results[5]. Their implementation achieves 6-bit weight precision through careful programming algorithms that mitigate RRAM's inherent variability. KIOXIA has demonstrated energy efficiency of approximately 0.8 pJ per MAC operation in their 28nm process node implementations. Their technology incorporates innovative write-verify schemes to address RRAM's cycle-to-cycle variability, and employs reference cells for runtime calibration. Recent demonstrations have shown inference accuracy within 1% of software baselines for common CNN workloads while achieving >10x energy efficiency improvement compared to conventional digital implementations[6].

Strengths: Lower operating voltage requirements (typically 0.5-1.5V compared to PCM's 2-3V), faster switching speed particularly for RESET operations, and lower energy consumption per programming operation. Weaknesses: Higher device-to-device variability affecting computational precision, lower endurance (typically 10^6-10^7 cycles), and more pronounced read disturb effects requiring careful management.
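The write-verify idea mentioned above can be sketched as a generic program-and-verify loop: read the cell, stop once it is within a tolerance of the target, otherwise apply a SET or RESET pulse and repeat. KIOXIA's actual algorithm is not described in the text; the loop structure, the toy device model (`ToyRRAMCell`), and all parameter values below are hypothetical.

```python
import random


def write_verify(target, tolerance, read, pulse, max_iters=50):
    """Generic program-and-verify loop: read the cell, stop if within tolerance,
    otherwise pulse toward the target (SET to raise G, RESET to lower it).
    Returns the final conductance and the number of pulses applied."""
    for iteration in range(max_iters):
        g = read()
        error = target - g
        if abs(error) <= tolerance:
            return g, iteration
        pulse("SET" if error > 0 else "RESET")
    return read(), max_iters


class ToyRRAMCell:
    """Hypothetical device model: each pulse moves G by a random fraction of a
    nominal step, mimicking cycle-to-cycle variability."""

    def __init__(self, g, step, seed=0):
        self.g = g
        self.step = step
        self.rng = random.Random(seed)

    def read(self):
        return self.g

    def pulse(self, kind):
        delta = self.step * self.rng.uniform(0.3, 1.0)
        self.g += delta if kind == "SET" else -delta
        self.g = max(self.g, 0.0)  # conductance cannot go negative


cell = ToyRRAMCell(g=5e-6, step=1e-6, seed=42)
g_final, n_pulses = write_verify(target=12e-6, tolerance=0.5e-6,
                                 read=cell.read, pulse=cell.pulse)
print(f"final G = {g_final * 1e6:.2f} uS after {n_pulses} pulses")
```

Because the tolerance window here (1 uS wide) is at least as large as the largest possible step, the loop cannot overshoot the window; real schemes typically shrink pulse amplitude as the target is approached to achieve a similar effect.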

Core Innovations in PCM and RRAM for Computing Applications

Integrated resistive processing unit to avoid abrupt set of RRAM and abrupt reset of PCM

Patent (Active): US10784313B1

Innovation

- A cell structure is developed that includes a resistive random access memory (RRAM) device connected in series with a phase change memory (PCM) device, with a complementary metal oxide semiconductor (CMOS) inverter controlling the switching behaviors of both devices individually, using n-type and p-type metal oxide semiconductor circuits to selectively manage SET and RESET operations.

Determining a cell state of a resistive memory cell

Patent (Inactive): GB2524534A

Innovation

- A device comprising a sensing circuit, a settling circuit, and a prebiasing circuit, in which a parallel resistor reduces the effective resistance seen by the prebiasing circuit. This enables faster readout of voltage-based cell state metrics by minimizing settling time and improving noise tolerance.
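The benefit of the parallel resistor can be illustrated with a first-order RC model, in which a node settles with time constant τ = R_eff · C, and reaching within a fraction ε of the final value takes τ · ln(1/ε). The cell resistance, parallel resistor, and line capacitance below are assumptions chosen for illustration, not values from the patent.

```python
import math


def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def settling_time(r_eff: float, c: float, tolerance: float = 0.01) -> float:
    """Time for a first-order RC node to get within `tolerance` (a fraction)
    of its final value: t = R * C * ln(1 / tolerance)."""
    return r_eff * c * math.log(1.0 / tolerance)


R_CELL = 1e6      # assumed high-resistance cell, ohms
R_PAR = 100e3     # assumed parallel resistor, ohms
C_LINE = 100e-15  # assumed line capacitance, farads

t_without = settling_time(R_CELL, C_LINE)
t_with = settling_time(parallel(R_CELL, R_PAR), C_LINE)
print(f"settling without parallel R: {t_without * 1e9:.1f} ns, with: {t_with * 1e9:.1f} ns")
```

In this model the speed-up comes entirely from the smaller effective resistance: roughly 460 ns drops to about 42 ns for the assumed values.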

Energy Efficiency Comparison Between PCM and RRAM

Energy efficiency stands as a critical parameter in evaluating memory technologies for in-memory computing applications, particularly for multiply-accumulate operations that form the backbone of modern AI workloads. When comparing Phase Change Memory (PCM) and Resistive Random Access Memory (RRAM) technologies, several key energy consumption factors must be considered.

PCM typically demonstrates higher write energy requirements than RRAM because of its thermally driven phase-transition mechanism. The crystallization and amorphization processes in PCM require substantial Joule heating, with programming currents often in the range of 50-500 μA, resulting in energy consumption of approximately 10-100 pJ per cell operation. This higher energy demand is a notable disadvantage for PCM in energy-constrained applications.

RRAM, conversely, operates through ionic movement mechanisms that generally require lower programming currents, typically in the 10-100 μA range, translating to energy consumption of approximately 1-10 pJ per cell operation. This fundamental difference provides RRAM with a significant advantage in write energy efficiency, often by an order of magnitude compared to PCM.
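These energy figures follow from E = V · I · t for a programming pulse. The text gives only currents, so the voltages (taken from the operating ranges quoted in the KIOXIA comparison) and the 50 ns pulse width below are assumptions, and the rectangular-pulse approximation ignores pulse shaping and the voltage drop across access devices.

```python
def pulse_energy_pj(current_a: float, voltage_v: float, width_s: float) -> float:
    """Energy of a rectangular programming pulse, E = V * I * t, in picojoules."""
    return voltage_v * current_a * width_s * 1e12


# Hypothetical pulse parameters, chosen only to illustrate the arithmetic.
pcm_pj = pulse_energy_pj(current_a=200e-6, voltage_v=2.0, width_s=50e-9)
rram_pj = pulse_energy_pj(current_a=50e-6, voltage_v=1.0, width_s=50e-9)
print(f"PCM: {pcm_pj:.1f} pJ, RRAM: {rram_pj:.1f} pJ, ratio {pcm_pj / rram_pj:.0f}x")
```

With these assumptions PCM lands at 20 pJ and RRAM at 2.5 pJ, both inside the quoted ranges and close to the order-of-magnitude gap described above.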

For read operations, both technologies demonstrate comparable energy profiles, with read currents typically in the range of 1-10 μA. However, RRAM often exhibits slightly lower read energy due to its ability to operate at lower voltages, providing marginal advantages in read-intensive workloads.

When specifically examining multiply-accumulate operations in crossbar arrays, the energy efficiency comparison becomes more nuanced. PCM arrays benefit from long retention of programmed states, reducing the need for frequent refresh operations that would otherwise consume additional energy, although the conductance drift discussed earlier still requires compensation. This partially offsets PCM's higher write energy in applications where write operations are infrequent.

RRAM arrays typically demonstrate superior energy efficiency for dynamic workloads requiring frequent weight updates, with experimental implementations showing 3-5x better energy efficiency compared to PCM-based solutions. However, this advantage diminishes in inference-only scenarios where weight values remain static.
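Why the write-energy gap shrinks for inference can be seen from a simple per-weight energy budget, E = N_writes · E_write + N_reads · E_read. The per-operation energies below are hypothetical values inside the ranges quoted above, the read energy is assumed equal for both technologies for simplicity, and the write/read counts are illustrative.

```python
def workload_energy_pj(n_writes, n_reads, e_write_pj, e_read_pj):
    """Total energy spent on one weight: programming pulses plus MAC reads."""
    return n_writes * e_write_pj + n_reads * e_read_pj


# Hypothetical per-cell energies (assumptions, within the ranges quoted above).
PCM = {"e_write_pj": 30.0, "e_read_pj": 0.1}
RRAM = {"e_write_pj": 3.0, "e_read_pj": 0.1}

scenarios = {
    "training-like (1 write per 10 reads)": (100_000, 1_000_000),
    "inference-only (1 write, 1e6 reads)": (1, 1_000_000),
}
for name, (writes, reads) in scenarios.items():
    e_pcm = workload_energy_pj(writes, reads, **PCM)
    e_rram = workload_energy_pj(writes, reads, **RRAM)
    print(f"{name}: PCM/RRAM energy ratio = {e_pcm / e_rram:.2f}")
```

Once the weights are programmed and only read thereafter, the write term becomes negligible and the two technologies converge on whatever their read energies are.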

Scaling considerations further impact the energy efficiency comparison. PCM shows promising energy scaling with device miniaturization, with some research demonstrating sub-pJ operation at advanced nodes. RRAM scaling, while beneficial, faces challenges with variability at smaller dimensions that may require compensatory circuits, potentially offsetting some energy advantages.

Recent advancements in material engineering have narrowed the energy efficiency gap, with low-power PCM variants demonstrating programming energies approaching 1-5 pJ, while optimized RRAM structures have achieved sub-pJ operation in laboratory settings. These developments suggest a converging trend in energy requirements as both technologies mature.

PCM typically demonstrates higher write energy requirements compared to RRAM due to the thermally-driven phase transition mechanism. The crystallization and amorphization processes in PCM necessitate significant joule heating, with programming currents often in the range of 50-500 μA, resulting in energy consumption of approximately 10-100 pJ per cell operation. This higher energy demand represents a notable disadvantage for PCM in energy-constrained applications.

RRAM, conversely, operates through ionic movement mechanisms that generally require lower programming currents, typically in the 10-100 μA range, translating to energy consumption of approximately 1-10 pJ per cell operation. This fundamental difference provides RRAM with a significant advantage in write energy efficiency, often by an order of magnitude compared to PCM.

For read operations, the two technologies have comparable energy profiles, with read currents typically in the range of 1-10 μA. Some RRAM implementations can be read at lower voltages, which gives slightly lower read energy and a marginal advantage in read-intensive workloads.

When specifically examining multiply-accumulate operations in crossbar arrays, the energy comparison becomes more nuanced. Because PCM is non-volatile, programmed conductances are retained without refresh operations, so in applications where writes are infrequent, PCM's higher write energy is amortized over many MAC operations. This benefit is tempered by conductance drift in the amorphous phase, which gradually lowers programmed conductances and may require periodic recalibration or drift compensation.
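
In a crossbar the MAC happens in the analog domain: row voltages V_i drive a current G_ij × V_i through each cell (Ohm's law), and each column wire sums those currents (Kirchhoff's current law), giving I_j = Σ_i G_ij × V_i. The NumPy sketch below computes this and, as an assumption, applies the widely used empirical power-law drift model G(t) = G0 × (t / t0)^(-ν) to show how PCM conductance drift changes the result over time. The conductances, voltages and drift exponent ν = 0.05 are hypothetical values chosen for illustration.

```python
import numpy as np

def crossbar_mac(G: np.ndarray, v_in: np.ndarray) -> np.ndarray:
    """Column currents I_j = sum_i G[i, j] * v_in[i] (Ohm's law per cell,
    Kirchhoff's current law per column)."""
    return v_in @ G

def drifted(G0: np.ndarray, t: float, t0: float = 1.0, nu: float = 0.05) -> np.ndarray:
    """Empirical power-law drift G(t) = G0 * (t / t0) ** (-nu); nu is assumed."""
    return G0 * (t / t0) ** (-nu)

# Hypothetical 3x2 array of programmed conductances, converted from uS to S
G0 = np.array([[10.0, 20.0],
               [30.0, 5.0],
               [15.0, 25.0]]) * 1e-6
v_in = np.array([0.1, 0.2, 0.1])  # row read voltages, in volts

i_ideal = crossbar_mac(G0, v_in)  # 8.5 uA and 5.5 uA
for t in (1.0, 1e3, 1e6):  # seconds since programming
    i_t = crossbar_mac(drifted(G0, t), v_in)
    rel_err = np.abs(i_t / i_ideal - 1.0).max()
    print(f"t = {t:>9.0f} s  currents (uA) = {np.round(i_t * 1e6, 3)}  "
          f"max relative error = {rel_err:.3f}")
```

Because every cell drifts with the same ν in this sketch, the error is a common gain factor (about 0.71 at 10^3 s and 0.50 at 10^6 s) that a single global scale correction could undo. In real arrays ν varies from cell to cell, so drift also distorts the relative weights.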

RRAM arrays typically show better energy efficiency for dynamic workloads that require frequent weight updates, with reported experimental implementations achieving 3-5x better energy efficiency than PCM-based solutions. This advantage diminishes in inference-only scenarios, where weights are programmed once and then remain static, so read energy dominates.
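
A simple way to see why RRAM's advantage shrinks for inference is to split total energy into weight-write and MAC-read contributions. The sketch below assumes mid-range write energies of 30 pJ (PCM) and 3 pJ (RRAM) and an equal read energy of 0.01 pJ per cell access for both (roughly 0.2 V × 5 μA × 10 ns). All three numbers are hypothetical, and peripheral energy (DACs, ADCs, drivers) is ignored.

```python
# Minimal sketch with hypothetical per-cell energies (picojoules):
# workload energy = cell writes * write energy + cell reads * read energy.
E_WRITE_PJ = {"PCM": 30.0, "RRAM": 3.0}   # assumed mid-range write energies
E_READ_PJ = {"PCM": 0.01, "RRAM": 0.01}   # assumed equal read energy per access

def workload_energy_pj(tech: str, cell_writes: float, cell_reads: float) -> float:
    """Total energy for a given number of cell writes and cell reads."""
    return cell_writes * E_WRITE_PJ[tech] + cell_reads * E_READ_PJ[tech]

def rram_advantage(cell_writes: float, cell_reads: float) -> float:
    """How many times less energy RRAM uses than PCM for the same workload."""
    return (workload_energy_pj("PCM", cell_writes, cell_reads)
            / workload_energy_pj("RRAM", cell_writes, cell_reads))

# From update-heavy (few reads per write) to inference-only (one programming
# event amortized over a very large number of reads)
for reads_per_write in (10, 1_000, 100_000_000):
    print(f"{reads_per_write:>11} reads per write: "
          f"RRAM advantage = {rram_advantage(1, reads_per_write):.2f}x")
```

With these numbers the advantage falls from about 9.7x when writes dominate, to about 3x at 1,000 reads per write, to essentially 1x once a single programming event is amortized over many reads.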

Scaling considerations further impact the energy efficiency comparison. PCM shows promising energy scaling with device miniaturization, with some research demonstrating sub-pJ operation at advanced nodes. RRAM scaling, while beneficial, faces challenges with variability at smaller dimensions that may require compensatory circuits, potentially offsetting some energy advantages.

Recent advances in material engineering have narrowed the energy-efficiency gap: low-power PCM variants have demonstrated programming energies in the 1-5 pJ range, while optimized RRAM structures have achieved sub-pJ operation in laboratory settings. These developments suggest that the energy requirements of the two technologies are converging as both mature.

Scalability and Integration Challenges for Neuromorphic Systems

The integration of neuromorphic computing systems based on emerging memory technologies faces significant scalability challenges. For both PCM and RRAM, scaling to higher densities runs into fundamental physical limits. PCM devices suffer from thermal crosstalk between adjacent cells when scaled below 10 nm, which can unintentionally program neighboring cells during write operations. This thermal interference becomes more pronounced as device dimensions shrink, limiting the practical density of large-scale neuromorphic arrays.

RRAM faces different but equally challenging scaling issues. While theoretically capable of scaling to smaller dimensions than PCM, RRAM suffers from increasing variability in switching behavior at smaller nodes. This variability manifests as inconsistent resistance states, directly impacting the precision of multiply-accumulate operations critical for neural network computations. Additionally, the forming process required for RRAM initialization becomes less reliable at smaller dimensions, affecting manufacturing yield and device consistency.
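
The link between device variability and MAC precision can be illustrated with a small Monte Carlo experiment. The sketch below assumes that each programmed conductance is multiplied by an independent lognormal factor (a common but here purely assumed variability model) and measures the resulting relative error of the column currents. The array size, conductance range, read voltages and sigma values are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def mac_error(sigma: float, rows: int = 256, cols: int = 64, trials: int = 20) -> float:
    """Mean relative error of crossbar MAC outputs when each programmed
    conductance is multiplied by an independent lognormal factor whose
    underlying normal has standard deviation `sigma` (median factor 1)."""
    errors = []
    for _ in range(trials):
        G = rng.uniform(1e-6, 50e-6, size=(rows, cols))  # target conductances (S)
        v = rng.uniform(0.0, 0.2, size=rows)             # row read voltages (V)
        G_actual = G * rng.lognormal(mean=0.0, sigma=sigma, size=G.shape)
        ideal, actual = v @ G, v @ G_actual              # column currents (A)
        errors.append(np.mean(np.abs(actual - ideal) / ideal))
    return float(np.mean(errors))

for sigma in (0.02, 0.05, 0.1, 0.2):
    print(f"sigma = {sigma:.2f}  mean relative MAC error = {mac_error(sigma):.4f}")
```

Independent cell-to-cell errors partly average out along a long column, so the error grows with sigma but is diluted by summation; systematic shifts that move many cells in the same direction would not average out this way.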

Integration with CMOS technology is another significant challenge for both memory types. PCM requires relatively high programming currents, which calls for larger access transistors or selector devices and limits the effective density of the array. RRAM generally operates at lower currents but requires precise voltage control to maintain reliable resistance states, which complicates peripheral circuit design. The two also differ in back-end-of-line (BEOL) compatibility with standard CMOS processes: RRAM generally integrates more easily, although some of its material stacks raise contamination concerns.
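
One technique commonly used by peripheral circuits to reach a precise target state is an iterative program-and-verify loop: read the cell, apply a corrective pulse, and repeat until the conductance is within tolerance. The sketch below is a toy version of that loop, not a model of any specific chip. The device response (each pulse closes 30% of the remaining error, plus Gaussian cycle-to-cycle noise), the tolerance and the pulse budget are all hypothetical.

```python
import random

def program_and_verify(target_us: float, g_us: float = 5.0, tol_us: float = 0.5,
                       max_pulses: int = 100, seed: int = 1) -> tuple[float, int]:
    """Toy write-verify loop. Returns (final conductance in uS, pulses applied).

    Hypothetical device model: each pulse moves the conductance by 30% of the
    remaining error (SET if below target, RESET if above) plus Gaussian noise.
    """
    rng = random.Random(seed)
    for pulse in range(max_pulses):
        error = target_us - g_us                # verify step: read and compare
        if abs(error) <= tol_us:
            return g_us, pulse
        g_us += 0.3 * error                     # corrective SET/RESET pulse
        g_us += rng.gauss(0.0, 0.2)             # assumed cycle-to-cycle noise
        g_us = max(g_us, 0.0)                   # conductance cannot be negative
    return g_us, max_pulses

g, n = program_and_verify(target_us=25.0)
print(f"reached {g:.2f} uS after {n} pulses")
```

The pulse count is the cost of precision: a tighter tolerance or noisier device needs more verify reads and corrective pulses, which is where the added peripheral complexity and energy come from.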

3D integration approaches offer promising solutions to overcome density limitations. PCM has demonstrated successful implementation in 3D crosspoint architectures, though thermal management becomes increasingly complex in stacked configurations. RRAM shows particular promise for 3D integration due to its lower thermal budget during fabrication, potentially enabling higher stacking density. However, the yield and reliability of 3D-stacked RRAM remain significant challenges, particularly for the complex interconnect structures required.

Power consumption scaling is another critical challenge, especially for edge computing applications. PCM's high programming energy becomes increasingly problematic at scale, while RRAM offers better energy efficiency but, like any passive crossbar, suffers from sneak-path currents through unselected cells in large arrays. Both technologies therefore require sophisticated selector devices and peripheral circuitry to manage these issues, which adds complexity and may limit the practical scale of neuromorphic systems built on them.
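
The sneak-path problem is easiest to see in a 2x2 passive crossbar read with the unselected row and column left floating: besides the selected cell, current can flow through the other three cells in series, and that path appears in parallel with the selected cell. The sketch below computes the apparent resistance of a high-resistance cell surrounded by low-resistance cells. The resistance values and the `sneak_suppression` factor (a crude stand-in for a nonlinear selector that raises each cell's effective resistance at the reduced bias on the sneak path) are hypothetical.

```python
def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)

def read_2x2(r_sel: float, r_others: tuple[float, float, float],
             sneak_suppression: float = 1.0) -> float:
    """Apparent resistance of the selected cell in a 2x2 passive crossbar
    with floating unselected lines. The single sneak path runs through the
    other three cells in series; `sneak_suppression` is a hypothetical
    selector factor (1.0 means no selector)."""
    r_sneak = sum(r_others) * sneak_suppression
    return parallel(r_sel, r_sneak)

HRS, LRS = 1e6, 10e3  # assumed high and low resistance states, in ohms
print(f"no selector  : {read_2x2(HRS, (LRS, LRS, LRS)) / 1e3:.1f} kOhm")
print(f"with selector: {read_2x2(HRS, (LRS, LRS, LRS), 1e3) / 1e3:.1f} kOhm")
```

Without a selector, the 1 MΩ cell reads as roughly 29 kΩ because the 30 kΩ sneak path dominates, so it is easily mistaken for a low-resistance state; with the assumed selector factor it reads close to its true value. In larger arrays the number of parallel sneak paths grows, which makes the effect worse.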
