Hardware/Software Co-Verification Workflows For PCM Platforms

AUG 29, 2025 · 10 MIN READ

PCM Co-Verification Background and Objectives

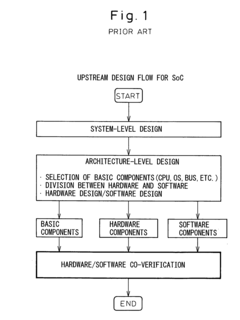

Platform Control Module (PCM) systems represent a critical intersection of hardware and software in modern embedded systems, particularly in automotive, industrial automation, and aerospace applications. The evolution of PCM technology has witnessed significant advancements from simple microcontroller-based systems to complex multi-core architectures integrating various communication protocols and safety mechanisms.

The co-verification of hardware and software components in PCM platforms has become increasingly important as system complexity grows exponentially. Historically, hardware and software verification were conducted separately, leading to integration issues discovered late in the development cycle. This traditional approach resulted in costly redesigns, delayed time-to-market, and potential safety concerns in critical applications.

Industry trends indicate a paradigm shift toward unified verification methodologies that simultaneously validate both hardware and software components. This shift is driven by the need for more efficient development cycles, higher quality standards, and compliance with stringent safety regulations such as ISO 26262 for automotive systems and DO-178C for aerospace applications.

The primary objective of Hardware/Software Co-Verification for PCM platforms is to establish a comprehensive workflow that enables early detection of integration issues between hardware and software components. This includes validating that software correctly interacts with hardware peripherals, ensuring proper handling of interrupts and exceptions, and verifying timing constraints across the hardware-software boundary.

Another critical goal is to reduce verification time while improving coverage metrics. Traditional sequential verification approaches often lead to extended development cycles, whereas parallel co-verification methodologies can significantly compress timelines while enhancing system reliability through more thorough testing of hardware-software interactions.

Technical objectives also include creating reusable verification environments that can adapt to evolving PCM architectures, supporting various abstraction levels from transaction-level modeling to register-transfer level implementations, and enabling seamless integration with continuous integration/continuous deployment (CI/CD) pipelines.

The emergence of virtual platforms and hardware emulation technologies has further transformed the co-verification landscape, allowing software development to begin before hardware availability and enabling more comprehensive system-level testing. These technologies facilitate the execution of complex test scenarios that would be difficult or impossible to recreate with physical hardware prototypes.

As PCM systems increasingly incorporate artificial intelligence and machine learning capabilities, co-verification workflows must also evolve to address the unique challenges posed by these technologies, including deterministic behavior verification and performance validation across diverse operational conditions.

Market Demand Analysis for HW/SW Co-Verification

The global market for Hardware/Software Co-Verification solutions is experiencing significant growth, driven by the increasing complexity of PCM platforms and the need for more efficient verification methodologies. Current market analysis indicates that the HW/SW co-verification segment is expanding at a compound annual growth rate exceeding 8%, with particularly strong demand in automotive, aerospace, and industrial automation sectors where PCM platforms are critical components.

This market expansion is primarily fueled by the escalating costs associated with late-stage detection of integration issues between hardware and software components. Industry reports suggest that fixing a bug discovered during system integration can cost up to 100 times more than if detected during the design phase, creating a compelling economic case for advanced co-verification solutions.

The demand for co-verification workflows specifically tailored to PCM platforms has intensified as these systems increasingly incorporate multi-core processors, specialized accelerators, and complex memory hierarchies. Market research indicates that over 70% of PCM platform developers now consider co-verification capabilities as essential rather than optional when selecting development tools and methodologies.

Regional analysis reveals that North America currently leads the market with approximately 40% share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is demonstrating the fastest growth rate, particularly in countries with rapidly expanding electronics manufacturing sectors such as China, Taiwan, and South Korea.

Customer surveys highlight several key requirements driving market demand. First, there is strong interest in solutions that can reduce time-to-market, with 85% of respondents citing verification bottlenecks as a significant factor in product delays. Second, there is growing demand for co-verification tools that support heterogeneous computing architectures commonly found in modern PCM platforms. Third, customers increasingly seek solutions that integrate seamlessly with existing development environments and workflows.

The market is also witnessing a shift toward cloud-based co-verification solutions, which offer scalability advantages and facilitate collaboration among geographically distributed teams. This trend is particularly relevant for PCM platform development, which often involves multiple stakeholders across hardware and software domains.

Industry analysts project that the total addressable market for PCM-specific co-verification tools will reach several billion dollars by 2027, representing a significant opportunity for tool vendors and methodology providers. This growth is further supported by the increasing adoption of formal verification methods and virtual prototyping techniques, which complement traditional simulation-based approaches in comprehensive co-verification workflows.

Current Challenges in PCM Platform Verification

Phase-Change Memory (PCM) platforms present significant verification challenges due to their unique architecture and operational characteristics. The integration of hardware and software components in these platforms creates a complex verification landscape that traditional methodologies struggle to address effectively.

The primary challenge lies in the dual-nature verification requirements of PCM platforms. Hardware verification must account for the non-volatile memory characteristics, including write endurance limitations, read disturbance effects, and thermal stability concerns. Simultaneously, software verification must ensure proper memory management, wear-leveling algorithms, and error correction mechanisms specifically designed for PCM technology.
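
The software side of this requirement can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: `PcmModel`, `LeastWornLeveler`, the block count, and the endurance budget are hypothetical and do not describe any real PCM device or driver. The sketch checks one co-verification property, namely that a wear-leveling policy keeps per-block write counts balanced even when software hammers a single logical address.

```python
from dataclasses import dataclass, field


@dataclass
class PcmModel:
    """Hypothetical behavioural hardware model: counts writes per physical block."""
    num_blocks: int
    endurance: int  # assumed write budget per block, illustrative only
    writes: list = field(init=False)

    def __post_init__(self):
        self.writes = [0] * self.num_blocks

    def write(self, block: int) -> None:
        if self.writes[block] >= self.endurance:
            raise RuntimeError(f"block {block} exceeded its endurance budget")
        self.writes[block] += 1


class LeastWornLeveler:
    """Toy software wear-leveler: redirects each write to the least-worn block."""

    def __init__(self, pcm: PcmModel):
        self.pcm = pcm

    def write(self, logical_addr: int) -> int:
        # logical_addr is ignored by this toy policy; a real one keeps a remap table.
        physical = min(range(self.pcm.num_blocks), key=lambda b: self.pcm.writes[b])
        self.pcm.write(physical)
        return physical


def wear_spread(pcm: PcmModel) -> int:
    """Difference between the most-worn and the least-worn block."""
    return max(pcm.writes) - min(pcm.writes)


pcm = PcmModel(num_blocks=8, endurance=1000)
leveler = LeastWornLeveler(pcm)
for _ in range(4000):
    leveler.write(logical_addr=0)  # worst case: one hot logical address
```

In a co-verification run, `wear_spread(pcm)` would be the checked quantity. Writing directly to one block of the model with no leveler in between would raise the endurance error after 1000 writes.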

Timing verification presents another critical challenge. PCM's asymmetric read/write latencies differ substantially from conventional memory technologies, creating complex timing dependencies that must be accurately modeled and verified across the hardware-software boundary. Current verification tools often lack the specialized capabilities to effectively simulate these unique timing characteristics.
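
A minimal sketch of how asymmetric latencies can affect a software timing budget is shown below. The latency constants and the function names are placeholders chosen for illustration, not measured PCM figures. The only point is that two access traces of equal length can differ sharply in total latency once writes cost more than reads.

```python
READ_NS = 50    # assumed read latency, placeholder value
WRITE_NS = 500  # assumed write latency, placeholder value


def trace_latency_ns(trace, read_ns=READ_NS, write_ns=WRITE_NS):
    """Sum the latency of a trace made of 'R' (read) and 'W' (write) accesses."""
    total = 0
    for op in trace:
        if op == "R":
            total += read_ns
        elif op == "W":
            total += write_ns
        else:
            raise ValueError(f"unknown access type: {op!r}")
    return total


def meets_deadline(trace, deadline_ns):
    """True if the whole trace completes within the software deadline."""
    return trace_latency_ns(trace) <= deadline_ns


read_heavy = "RRRRRRRW"   # 7 reads, 1 write
write_heavy = "WWWWWWWR"  # 7 writes, 1 read
```

With these placeholder numbers, both traces contain eight accesses, yet a 1000 ns deadline that the read-heavy trace meets is missed by the write-heavy one. A timing model that assumes symmetric latency would not expose that difference.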

Power consumption verification is increasingly problematic as PCM platforms are frequently deployed in energy-constrained environments. The verification process must accurately model power states, transitions, and consumption patterns across both hardware and software domains, which current methodologies struggle to integrate cohesively.

Fault tolerance verification represents a significant hurdle. PCM's susceptibility to specific failure modes requires comprehensive verification of error detection and correction mechanisms spanning both hardware and software layers. Current verification approaches often treat these domains separately, missing critical interaction points where failures might propagate.

Scalability issues further complicate verification efforts. As PCM capacities increase and architectures become more complex, verification environments struggle to maintain performance while providing adequate coverage. The exponential growth in state space makes exhaustive verification increasingly impractical, necessitating more sophisticated approaches.

Tool interoperability remains a persistent challenge. Hardware verification tools (like RTL simulators) and software verification environments (such as virtual platforms) often use incompatible models and interfaces, creating verification gaps at the hardware-software boundary. These disconnects frequently result in integration issues discovered only in physical prototypes, significantly increasing development costs and time-to-market.

Finally, the lack of standardized verification methodologies specifically tailored for PCM platforms forces verification teams to develop custom approaches, leading to inconsistent quality and coverage across the industry. This absence of standardization hampers knowledge sharing and best practice development in the PCM verification community.

Mainstream Co-Verification Approaches for PCM

01 Co-simulation frameworks for hardware and software verification

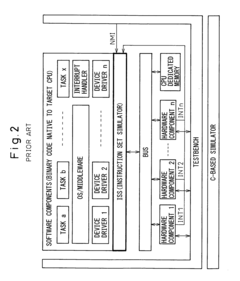

Co-simulation frameworks enable the simultaneous verification of hardware and software components by creating a unified environment where both can be tested together. These frameworks allow engineers to detect integration issues early in the development process by simulating the interaction between hardware and software components before physical implementation. This approach helps identify bugs that might only appear when hardware and software interact, reducing development time and costs while improving system reliability.

- Simulation-based co-verification methodologies: Simulation-based approaches enable hardware and software components to be verified together in a virtual environment before physical implementation. These methodologies typically involve creating simulation models of hardware components that can interact with software code, allowing developers to detect integration issues early in the development cycle. Advanced simulation techniques may include cycle-accurate modeling, transaction-level modeling, and event-driven simulation to balance verification thoroughness with execution speed.

- FPGA-based hardware/software co-verification: Field-Programmable Gate Array (FPGA) prototyping provides a hardware platform for co-verification by implementing hardware designs in reconfigurable logic while interfacing with actual software. This approach offers higher execution speeds than pure simulation and allows testing with real-world interfaces. FPGA-based co-verification enables developers to validate hardware and software interactions under conditions closer to the final product, helping identify timing issues and performance bottlenecks that might not be apparent in simulation.

- Automated test generation and verification frameworks: Automated frameworks generate test cases and verification scenarios to systematically exercise hardware-software interactions. These frameworks can include coverage-driven verification, constraint random testing, and formal verification techniques to ensure comprehensive testing of the integrated system. By automating the generation of test cases, these frameworks can explore edge cases and corner conditions that might be missed in manual testing approaches, improving the overall quality of verification.

- Virtual prototyping and emulation techniques: Virtual prototyping creates software models of hardware components that can execute at higher speeds than detailed RTL simulations. These models allow software development and verification to begin before hardware is finalized. Hardware emulation systems provide a middle ground between simulation and FPGA prototyping, offering faster execution than simulation while maintaining high visibility into the system's internal state. These approaches enable parallel development of hardware and software components, reducing time-to-market.

- Hardware/software co-design and verification methodologies: Integrated methodologies address hardware and software design and verification as a unified process rather than separate activities. These approaches include formal specification of hardware-software interfaces, partitioning strategies, and verification planning that considers the entire system. Co-design methodologies often incorporate model-based design techniques where high-level system models are progressively refined and verified throughout the development process, ensuring consistency between hardware and software implementations.
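
The constrained-random and coverage-driven techniques listed above can be sketched in a few lines of Python. The address window, the two regions, and all function names are assumptions made for this example; production flows typically use a dedicated verification language and library. The generator only emits transactions that satisfy its constraints (size-aligned, inside the window), and the loop stops once every coverage bin has been hit.

```python
import random

WINDOW = 0x8000          # assumed 32 KiB register/memory window
OPS = ("read", "write")
SIZES = (1, 2, 4)        # access sizes in bytes


def region_of(addr):
    """Split the window into two illustrative coverage regions."""
    return "low" if addr < WINDOW // 2 else "high"


def random_transaction(rng):
    """Draw one transaction that is size-aligned and stays inside the window."""
    op = rng.choice(OPS)
    size = rng.choice(SIZES)
    addr = rng.randrange(0, WINDOW // size) * size
    return op, addr, size


def run_until_covered(seed, max_tests=1000):
    """Generate tests until every (op, region) bin is hit or the budget runs out."""
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    bins = {(op, region): 0 for op in OPS for region in ("low", "high")}
    tests = []
    for _ in range(max_tests):
        op, addr, size = random_transaction(rng)
        tests.append((op, addr, size))
        bins[(op, region_of(addr))] += 1
        if all(bins.values()):
            break
    coverage = sum(1 for hits in bins.values() if hits) / len(bins)
    return tests, coverage


tests, coverage = run_until_covered(seed=1)
```

Seeding the generator is the design choice that matters most here: a random test that exposes a hardware-software interaction bug is only useful if it can be replayed.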

02 Emulation-based verification techniques

Emulation-based verification techniques use hardware emulators to mimic the behavior of actual hardware components while interfacing with software. This approach provides a more accurate representation of the final system compared to pure simulation, allowing for faster verification of complex systems. Emulation platforms can execute software at near-real-time speeds while providing detailed visibility into the hardware operation, enabling comprehensive testing of hardware-software interactions under realistic conditions.

03 Automated test generation and validation methods

Automated test generation and validation methods streamline the co-verification process by creating comprehensive test scenarios that exercise both hardware and software components. These methods use algorithms to generate test cases that target specific interaction points between hardware and software, ensuring thorough coverage of potential failure modes. By automating the test generation process, engineers can achieve higher test coverage with less manual effort, leading to more robust verification results.

04 Hardware-in-the-loop testing methodologies

Hardware-in-the-loop testing methodologies integrate actual hardware components with simulated software environments or vice versa to verify system behavior under realistic conditions. This approach bridges the gap between pure simulation and full system implementation by allowing engineers to test software against real hardware responses or test hardware with production software. These methodologies are particularly valuable for safety-critical systems where accurate verification of timing and performance characteristics is essential.

05 Formal verification methods for hardware-software interfaces

Formal verification methods apply mathematical techniques to prove the correctness of hardware-software interfaces and interactions. These methods use formal models to represent both hardware and software components and their interactions, allowing for exhaustive analysis of all possible behaviors. By mathematically proving that the hardware-software interface meets its specifications under all conditions, formal verification provides a higher level of assurance than testing alone, particularly for complex systems with numerous potential interaction scenarios.

Leading Vendors in Co-Verification Tools

The market for hardware/software co-verification workflows for PCM platforms is in a growth phase, with adoption increasing as system complexity drives demand for integrated verification solutions. The market is projected to reach approximately $500-700 million by 2025, expanding at a CAGR of 8-10%. Leading players include Synopsys and Cadence Design Systems, which dominate with comprehensive verification platforms, while IBM and Siemens Industry Software offer specialized enterprise solutions. Emerging competitors such as ARM and ZTE are gaining traction with targeted offerings. The technology is approaching maturity in traditional computing sectors but is still evolving for emerging applications such as IoT and automotive systems, where companies such as Robert Bosch and Google are developing customized co-verification methodologies to address domain-specific challenges.

International Business Machines Corp.

Technical Solution: IBM has developed an advanced Hardware/Software Co-Verification workflow for PCM platforms centered around their POWER architecture and OpenPOWER ecosystem. Their approach leverages a combination of simulation-based verification and hardware acceleration techniques. IBM's solution includes the Functional Verification (FV) suite that provides comprehensive verification capabilities across multiple abstraction levels. Their methodology incorporates formal verification techniques alongside traditional simulation to achieve higher verification coverage. IBM's co-verification platform supports both pre-silicon and post-silicon validation phases with seamless transition between them. The system includes specialized verification IP for memory subsystems that are critical in PCM architectures. IBM's approach emphasizes early detection of hardware-software interface issues through their "shift-left" verification methodology. Their platform includes advanced debug capabilities with hardware trace facilities and software debug tools that operate in a synchronized environment. IBM also leverages AI-assisted verification techniques to identify potential issues earlier in the development cycle[7][9].

Strengths: Deep integration with enterprise-class systems; excellent scalability for complex verification environments; strong formal verification capabilities. Weaknesses: More specialized toward IBM's own hardware architectures; potentially less flexible for diverse third-party components; higher complexity requiring specialized expertise.

Siemens Industry Software, Inc.

Technical Solution: Siemens (formerly Mentor Graphics) has developed the Veloce hardware-assisted verification platform specifically addressing hardware/software co-verification for PCM architectures. Their solution combines hardware emulation with virtual platform technologies to enable early software development and comprehensive system verification. The Veloce platform features a unique hybrid approach that allows hardware and software teams to work in parallel using their preferred environments and tools. Siemens' solution includes the Questa verification platform that integrates with Veloce to provide a complete verification continuum from virtual prototypes to emulation. Their technology supports advanced debug capabilities including save and restore functionality that allows engineers to capture specific system states for detailed analysis. The platform includes power-aware verification features essential for modern PCM designs where power consumption is critical. Siemens also offers the Catapult High-Level Synthesis tool that enables algorithmic C/C++ specifications to be directly implemented in hardware, streamlining the hardware-software co-design process[4][6].

Strengths: Excellent scalability for enterprise-level verification needs; strong integration between virtual and physical verification environments; comprehensive power analysis capabilities. Weaknesses: Complex deployment requiring specialized expertise; potentially higher cost structure; steeper learning curve for new users.

Key Technologies in HW/SW Interface Verification

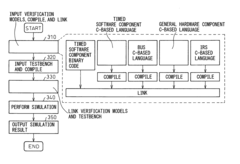

Hardware/software co-verification method

Patent · Inactive · US20050149897A1

Innovation

- Implementing a C-based native code simulation that eliminates per-instruction interpretation by converting untimed software components into timed components with execution time insertion statements, allowing for faster simulation without degrading timing accuracy.
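
The idea of execution time insertion can be illustrated with a small sketch. It is written in Python rather than C to stay self-contained, and it is not the patented implementation: `SimClock`, `checksum_timed`, and every cycle cost are assumptions invented for this example. The software function runs as ordinary native code, with no per-instruction interpretation, while inserted `consume` statements advance a simulated clock by the estimated cost of each code segment.

```python
class SimClock:
    """Simulated time base shared with the (not shown) hardware model."""

    def __init__(self):
        self.cycles = 0

    def consume(self, n):
        """Execution time insertion statement: advance simulated time by n cycles."""
        self.cycles += n


def checksum_untimed(data):
    """Original, untimed software component."""
    total = 0
    for byte in data:
        total = (total + byte) & 0xFF
    return total


def checksum_timed(data, clock):
    """Same logic with inserted timing statements (cycle costs are assumed)."""
    clock.consume(4)          # assumed cost of the function prologue
    total = 0
    for byte in data:
        total = (total + byte) & 0xFF
        clock.consume(3)      # assumed cost of one loop iteration
    clock.consume(2)          # assumed cost of the epilogue
    return total


clock = SimClock()
payload = bytes(range(10))
result = checksum_timed(payload, clock)
```

The timed and untimed versions return the same value; only the timed one also reports elapsed simulated cycles (4 + 3 × 10 + 2 = 36 for this payload), which a hardware model could use to schedule its own events.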

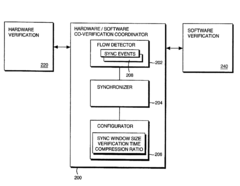

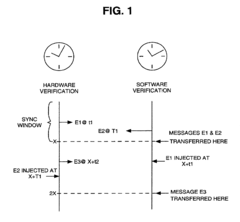

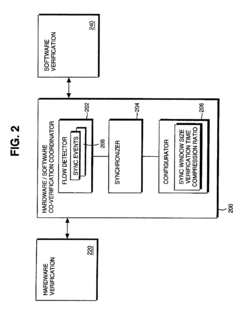

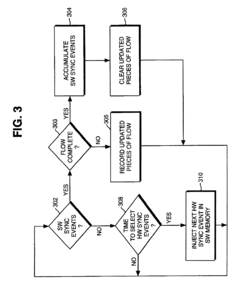

Hardware and software co-verification employing deferred synchronization

Patent · Inactive · US6356862B2

Innovation

- A co-verification coordinator with a flow detector, synchronizer, and configurator facilitates deferred synchronization, allowing periodic accumulation and injection of synchronization events to ensure consistent verification without locked-step limitations, providing flexibility in synchronization window size and reducing communication overhead.
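
A rough sketch of deferred synchronization, under simplifying assumptions, follows. It models only the accumulate-and-inject behavior; the flow detector and configurator mentioned above are not modeled, and `HardwareSim`, `DeferredSynchronizer`, and the event payloads are hypothetical names. Software-side events are buffered and handed to the hardware simulator once per synchronization window, so the number of cross-simulator exchanges drops as the window grows.

```python
class HardwareSim:
    """Hypothetical hardware-side simulator that accepts batched events."""

    def __init__(self):
        self.sync_calls = 0  # number of cross-simulator exchanges
        self.log = []        # events in the order the hardware saw them

    def inject(self, events):
        self.sync_calls += 1
        self.log.extend(sorted(events, key=lambda e: e[0]))


class DeferredSynchronizer:
    """Accumulates events and injects them once per window of `window` time units."""

    def __init__(self, hw, window):
        self.hw = hw
        self.window = window
        self.window_end = window
        self.pending = []

    def post(self, timestamp, payload):
        if timestamp >= self.window_end:
            self.flush()  # the previous window has closed
            self.window_end = (timestamp // self.window + 1) * self.window
        self.pending.append((timestamp, payload))

    def flush(self):
        if self.pending:
            self.hw.inject(self.pending)
            self.pending = []


def run(window, num_events=100):
    hw = HardwareSim()
    sync = DeferredSynchronizer(hw, window)
    for t in range(num_events):
        sync.post(t, f"reg_write_{t}")
    sync.flush()  # deliver whatever is left in the last window
    return hw


lock_step = run(window=1)   # one exchange per event
deferred = run(window=10)   # one exchange per 10 time units
```

Both runs deliver the same 100 events in the same order, but the deferred run needs 10 exchanges instead of 100. The trade-off is that the hardware side sees each event up to one window late, which is why the window size needs to be configurable.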

Performance Metrics and Benchmarking

Establishing effective performance metrics and benchmarking methodologies is crucial for evaluating Hardware/Software Co-Verification workflows in PCM (Phase Change Memory) platforms. These metrics provide quantifiable measures to assess the efficiency, accuracy, and reliability of verification processes across different implementation scenarios.

Verification time serves as a primary performance indicator, encompassing simulation runtime, compilation time, and overall verification cycle duration. For PCM platforms, where timing characteristics are particularly critical due to the unique read/write latencies of phase change materials, metrics must specifically address timing verification accuracy. Industry benchmarks indicate that advanced co-verification methodologies can reduce verification cycles by 30-45% compared to traditional sequential approaches.

Coverage metrics represent another essential dimension, including code coverage, functional coverage, and assertion coverage. PCM-specific coverage metrics should focus on memory state transitions, resistance drift compensation mechanisms, and multi-level cell verification. Leading verification environments typically aim for at least 95% functional coverage across all PCM operational modes and corner cases.
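
As an illustration of how such a functional coverage figure could be computed, the sketch below treats every (from, to) transition between cell levels as one coverage bin. The four level names and the 2-bit multi-level cell are assumptions for the example; the 95% target is the figure quoted above.

```python
from itertools import product

LEVELS = ("L0", "L1", "L2", "L3")        # assumed 2-bit multi-level cell states
ALL_BINS = set(product(LEVELS, LEVELS))  # every (from, to) transition: 16 bins
TARGET_PERCENT = 95.0


def functional_coverage(observed):
    """Percentage of transition bins hit by the observed (from, to) pairs."""
    hit = ALL_BINS & set(observed)
    return 100.0 * len(hit) / len(ALL_BINS)


def uncovered(observed):
    """Transition bins that no test has exercised yet."""
    return sorted(ALL_BINS - set(observed))


# Illustrative run: no test ever started a transition from level L3.
observed = [(src, dst) for src, dst in product(LEVELS, LEVELS) if src != "L3"]
percent = functional_coverage(observed)
meets_target = percent >= TARGET_PERCENT
```

Here 12 of 16 bins are hit, so coverage is 75% and the target is missed. `uncovered(observed)` lists the four missing transitions out of `L3`, which is the information a verification engineer would use to write the next directed test.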

Resource utilization metrics track computational resources required during verification, such as CPU usage, memory consumption, and license utilization. Recent benchmarks show that hardware-accelerated verification platforms can achieve 5-10x performance improvements for PCM verification compared to software-only solutions, though at increased infrastructure costs.

Defect detection efficiency measures the workflow's ability to identify hardware-software interface issues, particularly those unique to PCM technologies such as resistance drift, read disturb phenomena, and endurance limitations. Industry data suggests that integrated co-verification approaches detect approximately 25-30% more interface defects than separate hardware and software verification processes.

Standardized benchmarking suites specifically designed for PCM platforms have emerged, including PCM-Verify, MemVerify+, and NVRAM-Bench. These suites contain reference designs with known defects and performance characteristics, enabling objective comparison between different verification methodologies and tools. The PCM-Verify suite, for instance, includes 12 reference designs of varying complexity and focuses on multi-level cell verification challenges.

Correlation metrics between simulation and silicon results are particularly important for PCM platforms due to their analog characteristics and sensitivity to manufacturing variations. Leading verification methodologies demonstrate correlation coefficients exceeding 0.92 between simulated and measured performance parameters, including read latency distribution, write energy consumption, and data retention characteristics.
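
The correlation coefficient referred to here is commonly the Pearson coefficient, $r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2 \sum_i (y_i - \bar{y})^2}}$; the source does not say which coefficient is used, so treating it as Pearson is an assumption. A minimal sketch of the check follows, with made-up latency samples that are not measurement data.

```python
import math

THRESHOLD = 0.92  # figure quoted in the text


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


def correlates(simulated, measured, threshold=THRESHOLD):
    """True if simulation tracks silicon at least as well as the threshold."""
    return pearson(simulated, measured) >= threshold


# Made-up read-latency samples in nanoseconds, for illustration only.
simulated_ns = [50.0, 55.0, 60.0, 65.0, 70.0]
measured_ns = [52.0, 56.0, 63.0, 66.0, 73.0]
ok = correlates(simulated_ns, measured_ns)
```

A high coefficient shows that simulation and silicon move together, not that they agree in absolute terms. A constant offset between the two leaves `r` unchanged, so a separate error bound on the absolute values is usually checked alongside it.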

Industry benchmarking practices increasingly incorporate power and thermal verification metrics, as PCM operations—particularly write operations—can generate significant localized heating that affects both the memory cells and surrounding circuitry. Comprehensive co-verification workflows must therefore include thermal simulation accuracy as a key performance indicator.

Verification time serves as a primary performance indicator, encompassing simulation runtime, compilation time, and overall verification cycle duration. For PCM platforms, where timing characteristics are particularly critical due to the unique read/write latencies of phase change materials, metrics must specifically address timing verification accuracy. Industry benchmarks indicate that advanced co-verification methodologies can reduce verification cycles by 30-45% compared to traditional sequential approaches.

Coverage metrics represent another essential dimension, including code coverage, functional coverage, and assertion coverage. PCM-specific coverage metrics should focus on memory state transitions, resistance drift compensation mechanisms, and multi-level cell verification. Leading verification environments typically aim for at least 95% functional coverage across all PCM operational modes and corner cases.
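
To make the memory state transition metric concrete, the sketch below computes functional coverage over cell-state transitions. It assumes a 2-bit multi-level cell with four states named `L0` to `L3`; the state names and the observed transitions are illustrative, and a real environment would pull them from the simulator's coverage database.

```python
from itertools import product

# Assumed 2-bit multi-level cell: four resistance levels (hypothetical names).
STATES = ("L0", "L1", "L2", "L3")
ALL_TRANSITIONS = {(a, b) for a, b in product(STATES, repeat=2) if a != b}


def transition_coverage(observed):
    """Return (fraction of state transitions exercised, sorted list of missing ones)."""
    hit = ALL_TRANSITIONS & set(observed)
    missing = sorted(ALL_TRANSITIONS - hit)
    return len(hit) / len(ALL_TRANSITIONS), missing


# Illustrative run: every transition out of L0, L1 and L2, none out of L3.
observed = [(a, b) for a in ("L0", "L1", "L2") for b in STATES if a != b]

coverage, missing = transition_coverage(observed)
print(f"transition coverage: {coverage:.0%}")
print("missing:", missing)
print("meets 95% target:", coverage >= 0.95)
```

Reporting the missing transitions, not just the percentage, tells the team which directed tests to add to close the gap.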

Resource utilization metrics track computational resources required during verification, such as CPU usage, memory consumption, and license utilization. Recent benchmarks show that hardware-accelerated verification platforms can achieve 5-10x performance improvements for PCM verification compared to software-only solutions, though at increased infrastructure costs.

Integration with CI/CD Pipelines

The integration of Hardware/Software Co-Verification workflows into Continuous Integration/Continuous Deployment (CI/CD) pipelines represents a critical advancement for PCM (Phase Change Memory) platform development. Modern development environments demand rapid iteration cycles while maintaining high quality standards, making automated verification processes essential within the development pipeline.

CI/CD integration enables co-verification to be triggered automatically whenever changes are committed to either the hardware or the software repository. This automation reduces the manual effort that verification has traditionally required and lets development teams detect integration issues earlier in the development cycle. For PCM platforms specifically, where timing constraints and memory access patterns are critical, early detection helps avoid costly redesigns later in the development process.

A well-implemented CI/CD pipeline for PCM platform verification typically includes three key components. First, automated build systems compile hardware descriptions and software code upon each commit. Then, regression test suites execute pre-defined verification scenarios that target critical PCM behaviors such as write latency, read performance, and endurance. Finally, reporting mechanisms generate detailed results that highlight any deviations from expected behavior.
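
The build, regression, and reporting stages can be sketched as a small stage runner. This is an illustration under stated assumptions, not a real CI configuration: the stage functions are stubs, and the latency limits and measurements are invented. In a real pipeline the stubs would invoke the project's own build and simulation tools.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StageResult:
    name: str
    passed: bool
    details: list[str] = field(default_factory=list)


def run_pipeline(stages: list[tuple[str, Callable[[], list[str]]]]) -> list[StageResult]:
    """Run stages in order; each stage returns a list of failure messages.

    Execution stops after the first failing stage.
    """
    results = []
    for name, stage in stages:
        failures = stage()
        results.append(StageResult(name, not failures, failures))
        if failures:
            break
    return results


def report(results: list[StageResult]) -> str:
    """Format results, listing each deviation under its stage."""
    lines = []
    for r in results:
        lines.append(f"{'PASS' if r.passed else 'FAIL'} {r.name}")
        lines.extend(f"  - {d}" for d in r.details)
    return "\n".join(lines)


def build() -> list[str]:
    return []  # stub: assume hardware and software builds succeed


def regression() -> list[str]:
    # Invented limits and measurements, in nanoseconds.
    limits = {"write_latency_ns": 150.0, "read_latency_ns": 60.0}
    measured = {"write_latency_ns": 162.0, "read_latency_ns": 55.0}
    return [
        f"{key}: measured {measured[key]} exceeds limit {limits[key]}"
        for key in limits
        if measured[key] > limits[key]
    ]


results = run_pipeline([("build", build), ("regression", regression)])
print(report(results))
```

Having each stage return its deviations as data, not only an exit code, is what lets the reporting step highlight exactly which expectation was violated.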

The pipeline architecture must accommodate the unique characteristics of PCM verification. This includes managing the significant simulation time required for accurate PCM modeling, orchestrating parallel verification jobs to improve throughput, and implementing intelligent test selection algorithms that prioritize tests based on the nature of code changes. Cloud-based verification resources can be dynamically allocated to handle computation-intensive verification tasks, ensuring efficient resource utilization.
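
One simple form of change-based test selection maps path patterns to the tests they affect and falls back to the full suite when a changed file is not recognized. The directory layout and test names below are hypothetical; production systems often derive this mapping from dependency analysis instead of maintaining it by hand.

```python
from fnmatch import fnmatch

# Hypothetical mapping from repository paths to affected regression tests.
TEST_MAP = {
    "rtl/pcm_ctrl/*": {"write_latency", "read_perf", "endurance"},
    "rtl/ecc/*": {"read_perf", "ecc_inject"},
    "fw/driver/*": {"write_latency", "irq_handling"},
    "docs/*": set(),  # documentation changes need no simulation
}
FULL_SUITE = set().union(*TEST_MAP.values())


def select_tests(changed_files: list[str]) -> list[str]:
    """Pick tests for a commit; unknown paths trigger the full regression."""
    selected: set[str] = set()
    for path in changed_files:
        matches = [tests for pattern, tests in TEST_MAP.items() if fnmatch(path, pattern)]
        if not matches:
            return sorted(FULL_SUITE)  # conservative fallback
        for tests in matches:
            selected |= tests
    return sorted(selected)


print(select_tests(["rtl/ecc/decoder.sv"]))
print(select_tests(["docs/readme.md"]))
print(select_tests(["scripts/new_tool.py"]))
```

The conservative fallback trades some simulation time for safety: an unmapped file can never silently skip verification.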

Version control integration is particularly important for maintaining traceability between hardware and software components. Modern CI/CD systems for PCM platforms must track interdependencies between hardware models, firmware, and application software, ensuring that compatible versions are always verified together. This capability becomes essential when managing multiple PCM configurations or when supporting various system architectures simultaneously.
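
A minimal sketch of such interdependency tracking, assuming each component release declares the versions of the components it has been validated against. The component names, version numbers, and the `check_bundle` helper are invented for illustration.

```python
# Hypothetical compatibility table: (component, version) -> allowed dependency versions.
COMPAT = {
    ("fw", "2.1.0"): {"hw_model": {"1.4", "1.5"}},
    ("app", "3.0.2"): {"fw": {"2.1.0", "2.2.0"}},
}


def check_bundle(bundle: dict[str, str]) -> list[str]:
    """Return a list of incompatibilities in a proposed set of component versions."""
    problems = []
    for (component, version), requires in COMPAT.items():
        if bundle.get(component) != version:
            continue  # this rule applies to a different release
        for dep, allowed in requires.items():
            actual = bundle.get(dep)
            if actual not in allowed:
                problems.append(
                    f"{component} {version} requires {dep} in {sorted(allowed)}, got {actual}"
                )
    return problems


good = {"hw_model": "1.5", "fw": "2.1.0", "app": "3.0.2"}
bad = {"hw_model": "1.3", "fw": "2.1.0", "app": "3.0.2"}

print(check_bundle(good))
print(check_bundle(bad))
```

Running a check like this before any simulation starts prevents the pipeline from spending compute on a combination that was never meant to work together.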

Metrics collection throughout the verification process provides valuable insights into development efficiency. Key performance indicators such as verification coverage trends, defect detection rates, and time-to-resolution metrics help teams continuously improve their development processes. For PCM platforms, specialized metrics tracking power consumption profiles and reliability characteristics across temperature ranges can be automatically collected and analyzed.
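
As one example of acting on coverage trends, the sketch below flags builds whose coverage dropped by more than a tolerance relative to the previous build. The build identifiers, coverage values, and the 0.5-point tolerance are assumptions chosen for illustration.

```python
def coverage_regressions(history, tolerance=0.5):
    """Find builds where coverage fell by more than `tolerance` percentage points.

    history: list of (build_id, coverage_pct) tuples in chronological order.
    Returns a list of (build_id, previous_pct, current_pct).
    """
    return [
        (cur_id, prev_cov, cur_cov)
        for (_, prev_cov), (cur_id, cur_cov) in zip(history, history[1:])
        if prev_cov - cur_cov > tolerance
    ]


# Invented functional coverage percentages for four consecutive builds.
history = [("b101", 93.8), ("b102", 94.6), ("b103", 92.9), ("b104", 95.2)]

for build_id, before, after in coverage_regressions(history):
    print(f"{build_id}: coverage fell from {before}% to {after}%")
```

The same pattern applies to other tracked metrics, such as defect detection rate or power measurements at a given temperature.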

The ultimate goal of CI/CD integration for PCM platform co-verification is to establish a "shift-left" verification approach, where potential issues are identified and resolved as early as possible in the development cycle, significantly reducing overall development time while improving product quality and reliability.
