Software Tooling Requirements For PCM-Aware Machine Learning Teams

AUG 29, 2025 · 9 MIN READ

PCM-ML Integration Background and Objectives

The integration of Product Cost Management (PCM) and Machine Learning (ML) represents a significant evolution in how organizations approach cost optimization and decision-making processes. Historically, PCM has relied on traditional accounting methods and manual analysis, while ML has developed independently within the computer science domain. The convergence of these fields began gaining momentum around 2015-2018, as organizations recognized the potential of applying advanced analytics to cost management challenges.

The evolution of this integration has been driven by several factors: increasing data availability within enterprise systems, advancements in ML algorithms capable of handling complex cost variables, and growing competitive pressures demanding more precise cost predictions. Early implementations focused primarily on descriptive analytics, while recent developments have shifted toward predictive and prescriptive capabilities that can anticipate cost fluctuations and recommend mitigation strategies.

The primary objective of PCM-aware ML integration is to transform cost management from a retrospective accounting function to a forward-looking strategic capability. This involves developing systems that can automatically identify cost drivers, predict future cost behaviors across product lifecycles, and recommend design or process modifications to optimize costs while maintaining quality standards.

Technical goals include creating seamless data pipelines between enterprise resource planning (ERP) systems and ML environments, developing specialized algorithms that incorporate domain-specific cost accounting principles, and building interpretable models that provide actionable insights to non-technical stakeholders in product development and manufacturing.

A critical aim is establishing cross-functional software tooling that bridges the knowledge gap between cost accounting professionals and data scientists. This requires developing interfaces and workflows that translate between financial terminology and ML concepts, enabling collaborative model development and refinement.

Long-term objectives extend to creating autonomous cost optimization systems capable of continuously monitoring production data, identifying emerging cost patterns, and automatically implementing or suggesting adjustments to maintain optimal cost structures. These systems must balance immediate cost reduction with long-term value creation, considering factors such as sustainability, supply chain resilience, and total cost of ownership.

The ultimate vision is to establish a technological foundation that transforms cost management from a constraint to a competitive advantage, enabling organizations to rapidly respond to market changes while maintaining profitability through intelligent, data-driven cost strategies.

Market Analysis for PCM-Aware ML Tools

The market for PCM-aware machine learning tools is experiencing significant growth as organizations increasingly recognize the importance of product cost management (PCM) integration within their ML workflows. Current market size estimates indicate the specialized ML tools market exceeds $5 billion annually, with PCM-aware solutions representing an emerging segment growing at approximately 30% year-over-year.

Primary market drivers include the escalating costs of ML model development and deployment, with enterprises reporting average annual ML infrastructure costs between $1 million and $10 million depending on organization size. The integration of PCM capabilities addresses critical pain points around resource allocation, cost optimization, and ROI justification that traditional ML platforms leave unaddressed.

Market segmentation reveals three distinct customer profiles: enterprise organizations seeking comprehensive cost management across large-scale ML operations; mid-market companies requiring modular solutions with clear cost-benefit analysis capabilities; and research institutions needing granular resource tracking for grant-funded projects. Each segment demonstrates different purchasing behaviors and feature priorities.

Competitive analysis shows that few dedicated solutions are currently available. Major cloud providers (AWS, Azure, Google Cloud) offer basic cost monitoring tools but lack ML-specific PCM features. Specialized vendors like Weights & Biases and Determined AI have introduced preliminary cost tracking capabilities, but comprehensive PCM-aware ML platforms remain scarce, indicating significant market opportunity.

Regional analysis reveals North America dominating market share (approximately 45%), followed by Europe (30%) and Asia-Pacific (20%). The APAC region demonstrates the fastest growth trajectory at 35% annually, driven by rapid AI adoption in China, Japan, and Singapore.

Customer surveys indicate willingness to pay premiums of 15-25% for ML tools with integrated PCM capabilities compared to standard offerings. Key purchasing factors include integration with existing ML workflows, customizable cost allocation frameworks, and predictive cost modeling accuracy.

Market forecasts project the PCM-aware ML tools segment to reach $2.5 billion by 2026, representing a compound annual growth rate of 38%. This growth trajectory is supported by increasing regulatory pressure for AI cost transparency, the mainstream adoption of ML operations (MLOps) practices, and the continued expansion of enterprise AI initiatives requiring stricter financial governance.

Current Tooling Landscape and Technical Barriers

The current machine learning tooling landscape for PCM (Product Cost Management) integration presents a fragmented ecosystem with significant technical barriers. Traditional ML frameworks like TensorFlow, PyTorch, and scikit-learn lack native capabilities for cost-aware model development and deployment, creating a fundamental disconnect between model performance and business economics.

Existing MLOps platforms such as Kubeflow, MLflow, and SageMaker provide robust infrastructure for model lifecycle management but offer minimal support for tracking and optimizing cost metrics throughout the development process. This gap forces teams to develop custom solutions or rely on manual processes to incorporate cost considerations into their ML workflows.

Data management tools represent another critical barrier. While solutions like Databricks, Snowflake, and Delta Lake excel at data processing and storage, they typically lack integrated cost attribution mechanisms that would allow teams to understand the financial implications of different data preparation strategies. The inability to trace costs from raw data to model inference creates significant blind spots in economic optimization.

Monitoring and observability tools such as Prometheus, Grafana, and New Relic have evolved to track technical metrics but remain underdeveloped for PCM-specific concerns. Most platforms can track compute resource utilization but fail to translate these metrics into actionable cost insights or provide mechanisms for cost-based alerting and optimization.
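
The missing step described above, turning utilization metrics into cost figures and cost-based alerts, can be sketched in a few lines. This is an illustration only, not a feature of Prometheus, Grafana, or New Relic; the hourly rates, the `UsageSample` record, and the `budget_usd` threshold are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical hourly prices in USD; real rates depend on provider and contract.
HOURLY_RATE_USD = {"gpu": 2.50, "cpu": 0.05}


@dataclass
class UsageSample:
    """Resource usage for one job over a time window."""
    job: str
    resource: str  # key into HOURLY_RATE_USD
    units: int     # number of devices or cores in use
    hours: float   # duration of the window


def cost_usd(sample: UsageSample) -> float:
    """Translate a utilization sample into an estimated cost."""
    return sample.units * sample.hours * HOURLY_RATE_USD[sample.resource]


def jobs_over_budget(samples, budget_usd):
    """Sum estimated cost per job and return the jobs exceeding the budget."""
    totals = {}
    for s in samples:
        totals[s.job] = totals.get(s.job, 0.0) + cost_usd(s)
    return {job: total for job, total in totals.items() if total > budget_usd}


samples = [
    UsageSample("train-a", "gpu", units=4, hours=10.0),
    UsageSample("train-a", "cpu", units=16, hours=10.0),
    UsageSample("train-b", "gpu", units=1, hours=2.0),
]
# Only jobs whose summed estimate exceeds the budget are reported.
alerts = jobs_over_budget(samples, budget_usd=50.0)
print(alerts)
```

In practice the samples would come from whatever metrics store a team already runs, and the rate table from billing data; both are assumptions here.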

Experiment tracking systems like Weights & Biases and Neptune.ai have revolutionized model development by enabling comprehensive tracking of hyperparameters and performance metrics. However, these platforms rarely incorporate cost as a first-class optimization dimension, limiting teams' ability to make informed tradeoffs between model performance and economic efficiency.
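
One way to treat cost as a first-class dimension is to compare runs on accuracy and cost together and keep only those that are not beaten on both. The sketch below computes that Pareto front over hypothetical run records; the `Run` type and the numbers are assumptions for illustration and do not reflect the API of Weights & Biases or Neptune.ai.

```python
from typing import NamedTuple


class Run(NamedTuple):
    name: str
    accuracy: float  # higher is better
    cost_usd: float  # lower is better


def dominates(a: Run, b: Run) -> bool:
    """True if `a` is at least as good as `b` on both axes and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.cost_usd <= b.cost_usd
    strictly_better = a.accuracy > b.accuracy or a.cost_usd < b.cost_usd
    return no_worse and strictly_better


def pareto_front(runs):
    """Keep only the runs that no other run dominates."""
    return [r for r in runs if not any(dominates(o, r) for o in runs)]


runs = [
    Run("small", accuracy=0.90, cost_usd=10.0),
    Run("medium", accuracy=0.93, cost_usd=40.0),
    Run("wasteful", accuracy=0.92, cost_usd=60.0),  # dominated by "medium"
    Run("large", accuracy=0.95, cost_usd=200.0),
]
front = [r.name for r in pareto_front(runs)]
print(front)
```

The front leaves the final tradeoff to the team: it removes only the choices that are worse on both axes, such as the "wasteful" run here.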

Deployment and serving infrastructure tools such as TensorFlow Serving, Seldon Core, and Triton Inference Server focus primarily on technical performance metrics like throughput and latency. The absence of integrated cost monitoring and optimization capabilities forces organizations to implement separate systems for tracking the financial impact of model serving decisions.

The emerging field of ML efficiency tools, including frameworks like DeepSpeed and ONNX Runtime, primarily targets computational efficiency rather than holistic cost management. While these tools can reduce resource consumption, they typically lack the business context necessary to translate technical optimizations into financial outcomes aligned with organizational PCM objectives.

Existing Software Solutions for PCM-ML Integration

01 PCM-aware software monitoring and management tools

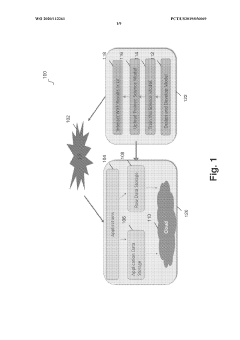

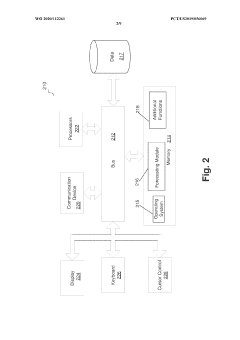

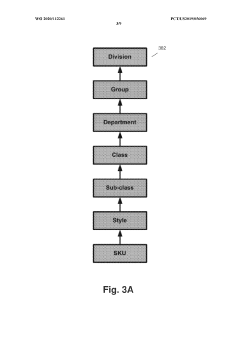

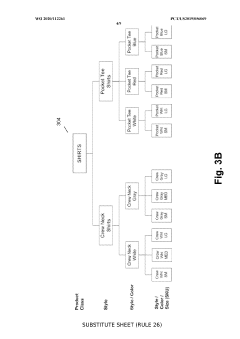

Software tools designed to monitor and manage Phase Change Memory (PCM) systems, providing awareness of PCM characteristics and performance metrics. These tools enable real-time monitoring of PCM operations, memory allocation, and system performance to optimize resource utilization and ensure efficient operation of PCM-based systems.

- PCM-aware software monitoring and management tools: Software tools designed specifically for monitoring and managing Phase Change Memory (PCM) systems. These tools provide real-time awareness of PCM states, performance metrics, and health indicators. They enable system administrators to optimize PCM usage, detect potential issues, and implement preventive maintenance strategies. The tools typically include dashboards for visualizing PCM performance data and interfaces for configuration adjustments.

- PCM-aware development frameworks and APIs: Development frameworks and Application Programming Interfaces (APIs) that are specifically designed with PCM-awareness capabilities. These tools provide developers with libraries and functions to optimize software for PCM characteristics, including wear-leveling, endurance management, and performance tuning. They abstract the complexities of PCM hardware while exposing key capabilities that allow applications to take advantage of PCM's unique properties.

- PCM-aware diagnostic and testing tools: Specialized software tools for diagnosing and testing PCM components and systems. These tools perform comprehensive assessments of PCM functionality, reliability, and performance characteristics. They can identify potential issues such as wear patterns, read/write errors, and latency problems. The diagnostic capabilities help in troubleshooting PCM-related issues and validating system configurations before deployment.

- PCM-aware system optimization software: Software tools designed to optimize system performance by leveraging PCM characteristics. These tools analyze workload patterns and data access behaviors to make intelligent decisions about data placement, caching strategies, and memory allocation. They implement algorithms that consider PCM's asymmetric read/write performance, endurance limitations, and non-volatility to enhance overall system efficiency and extend PCM lifespan.

- PCM-aware user interface and visualization tools: User interface and visualization tools that provide insights into PCM operation and performance. These tools present complex PCM data in intuitive, graphical formats that help users understand system behavior. They include real-time monitoring dashboards, historical performance graphs, and interactive configuration interfaces. The visualization capabilities enable better decision-making regarding PCM resource allocation and system tuning.

02 PCM-aware data processing and communication systems

Systems and methods for data processing and communication that are specifically designed to be aware of PCM characteristics. These solutions incorporate PCM-awareness into network protocols, data transmission mechanisms, and processing algorithms to optimize performance when working with PCM storage technologies.

03 PCM-aware diagnostic and testing tools

Diagnostic and testing software tools that are specifically designed to evaluate PCM performance, reliability, and behavior under various conditions. These tools help identify potential issues, validate PCM implementations, and ensure proper functioning of PCM-based systems through comprehensive testing methodologies.

04 PCM-aware development environments and frameworks

Software development environments, frameworks, and programming tools that incorporate PCM-awareness to help developers create applications optimized for PCM technologies. These tools provide APIs, libraries, and development interfaces that account for PCM characteristics during the software development process.

05 PCM-aware system integration and control software

Software solutions for integrating PCM technologies into larger systems and providing control mechanisms that are aware of PCM characteristics. These tools facilitate the integration of PCM with other system components, manage thermal aspects of PCM operation, and provide control interfaces for PCM-based systems.

Leading Vendors in PCM-ML Ecosystem

The PCM-aware machine learning tooling market is in its growth phase, characterized by increasing adoption across industries as organizations recognize the value of integrating product configuration management with ML workflows. The market size is expanding rapidly, projected to reach significant scale as enterprises seek to streamline their ML operations with proper version control and configuration management. From a technical maturity perspective, the landscape shows varying degrees of sophistication. Industry leaders like Microsoft, Google, IBM, and NVIDIA are at the forefront, offering comprehensive solutions that integrate ML frameworks with robust PCM capabilities. Companies such as Autodesk, Dassault Systèmes, and Siemens are leveraging their engineering expertise to develop specialized tooling for manufacturing and design applications, while newer entrants like Alteryx and Salesforce are focusing on business-oriented ML tooling with emerging PCM features.

International Business Machines Corp.

Technical Solution: IBM has developed Watson Studio as its flagship platform addressing PCM requirements for ML teams. The platform provides integrated tools for data preparation, model building, and deployment with strong governance features. IBM's approach emphasizes enterprise-grade PCM through Watson OpenScale, which provides automated bias detection, explainability, and drift monitoring capabilities. Their AutoAI functionality automates model selection and hyperparameter tuning while maintaining transparency throughout the process. IBM has implemented robust model governance features including approval workflows, version control, and detailed audit trails that satisfy regulatory requirements in complex ML deployments. Their Cloud Pak for Data extends these capabilities with containerized microservices architecture that enables consistent ML workflows across hybrid cloud environments. IBM's toolchain includes specialized components for industry-specific ML applications, with pre-built templates that accelerate development while maintaining compliance with domain-specific PCM requirements.

Strengths: Superior governance and compliance features; strong support for regulated industries; excellent integration with enterprise data systems. Weaknesses: More complex setup compared to newer platforms; enterprise focus may be overkill for smaller teams; higher cost structure compared to open-source alternatives.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has developed Azure Machine Learning, a comprehensive platform that addresses PCM requirements through its MLOps capabilities. The platform provides end-to-end ML lifecycle management with robust tooling for data preparation, model training, and deployment. Microsoft's approach emphasizes reproducibility through Azure ML pipelines that capture metadata at each stage of the ML workflow. Their system includes automated model registration, versioning, and lineage tracking to ensure compliance with PCM standards. Microsoft has integrated GitHub Actions specifically for ML workflows, enabling automated testing and validation of models before deployment. Their recent innovations include "responsible ML" tools that help teams monitor and understand model behavior in production, addressing the complexity of maintaining ML systems over time. Microsoft's toolchain also features integration with Power BI for visualization of model performance metrics, enabling stakeholders to track PCM-related KPIs.

Strengths: Strong enterprise integration with existing Microsoft tools; comprehensive governance features for regulated industries; excellent documentation and support ecosystem. Weaknesses: Tighter coupling with Microsoft ecosystem can limit flexibility; some advanced features require significant Azure investment; complexity in configuration for cross-platform deployments.

Key Technologies Enabling PCM-Aware ML

Real-time workflow injection recommendations

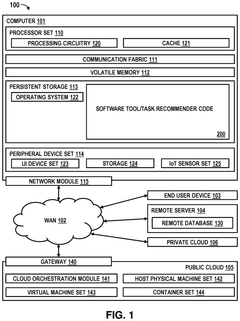

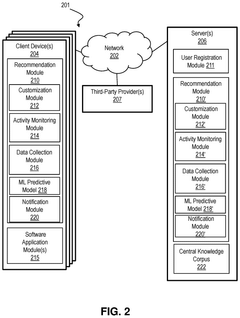

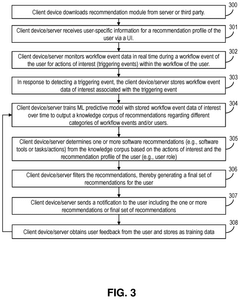

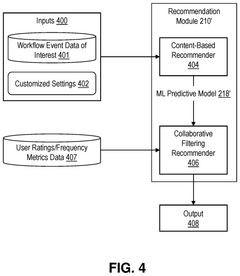

Patent · Pending · US20240330646A1

Innovation

- A computer-implemented method using machine learning predictive models trained with workflow event data from multiple devices to generate real-time software recommendations based on user-specific profiles, identifying actions of interest and suggesting optimal tools or tasks to improve workflow efficiency, while also updating the model with user feedback.

Extensible software tool with customizable machine prediction

Patent · WO2020112261A1

Innovation

- An extensible software tool that allows loading customizable trained machine learning models, enabling the use of various prediction models like neural networks and regression models, while maintaining confidentiality by allowing external sources to train models with confidential data without exposing it to the software tool.

DevOps Integration for PCM-ML Workflows

Integrating DevOps practices into PCM-aware machine learning workflows represents a critical advancement for organizations seeking to optimize their development pipelines. The convergence of DevOps methodologies with PCM-ML (Probabilistic Causal Models for Machine Learning) requires specialized tooling configurations that support both the iterative nature of ML development and the rigorous requirements of causal inference.

Traditional DevOps pipelines must be extended to accommodate the unique characteristics of PCM-ML workflows, including causal graph versioning, experimental tracking, and model validation against causal assumptions. CI/CD systems need modification to incorporate causal testing frameworks that verify not just predictive accuracy but causal validity across deployments.

Container orchestration platforms like Kubernetes can be configured with custom operators for PCM-ML workloads, enabling efficient resource allocation for computationally intensive causal discovery algorithms. These platforms should implement specialized monitoring for causal drift—detecting when underlying causal relationships in production data diverge from training assumptions.

Infrastructure-as-Code (IaC) templates specifically designed for PCM-ML environments must include provisions for reproducible causal inference, maintaining consistent computational environments across development, testing, and production. Tools like Terraform can be extended with custom modules that provision appropriate hardware accelerators for graph-based causal computations.

Automated testing frameworks require enhancement to validate causal relationships alongside traditional ML metrics. This includes implementing A/B testing infrastructures that can isolate causal effects in production environments and feed results back into the development cycle, creating a continuous causal validation loop.
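
As a minimal sketch of the estimate such an A/B infrastructure might feed back into development, the following computes a difference in means with a normal-approximation 95% interval. It assumes units were randomly assigned to the two arms, which is what licenses the causal reading; the function name and the sample values are hypothetical, and with samples this small the interval is only a rough guide.

```python
import math
from statistics import mean, variance


def average_treatment_effect(control, treatment):
    """Difference in means with a normal-approximation 95% interval.

    Interpretable as a causal effect only under random assignment.
    """
    effect = mean(treatment) - mean(control)
    # Standard error of the difference of two independent sample means.
    se = math.sqrt(
        variance(treatment) / len(treatment) + variance(control) / len(control)
    )
    return effect, (effect - 1.96 * se, effect + 1.96 * se)


# Hypothetical outcome metric observed in each arm.
control = [10.0, 12.0, 11.0, 13.0]
treatment = [14.0, 15.0, 13.0, 16.0]

effect, (low, high) = average_treatment_effect(control, treatment)
print(f"effect={effect:.2f}, 95% CI=({low:.2f}, {high:.2f})")
```

An interval that excludes zero is evidence that the intervention changed the outcome; an interval that includes zero suggests the causal assumption needs more data before it is relied on.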

Observability solutions must be augmented to track causal metrics alongside traditional ML performance indicators. This involves custom dashboards that visualize causal graph stability, intervention effects, and counterfactual accuracy over time, providing teams with insights into how their causal models perform in real-world scenarios.

Version control systems need extensions to handle the unique artifacts of PCM-ML workflows, including causal graph specifications, intervention designs, and counterfactual scenarios. Git-based workflows can be enhanced with hooks that trigger causal validation tests when changes to these artifacts are committed.
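
A validation step that such a hook could run is a check that a committed causal graph specification is still a directed acyclic graph. The sketch below uses Python's standard-library `graphlib` module (Python 3.9 or later); the edge-list format and the function name `validate_causal_graph` are assumptions for illustration, not an established specification format.

```python
from graphlib import CycleError, TopologicalSorter


def validate_causal_graph(edges):
    """Return a topological order of the variables, or raise ValueError on a cycle.

    `edges` is an iterable of (cause, effect) pairs.
    """
    predecessors = {}
    for cause, effect in edges:
        predecessors.setdefault(effect, set()).add(cause)
        predecessors.setdefault(cause, set())
    try:
        # static_order() yields each node after all of its predecessors.
        return list(TopologicalSorter(predecessors).static_order())
    except CycleError as exc:
        # The second element of args holds the nodes forming the cycle.
        raise ValueError(f"causal graph contains a cycle: {exc.args[1]}") from exc


# Hypothetical graph: season affects price and demand, price affects demand.
valid_edges = [("price", "demand"), ("season", "demand"), ("season", "price")]
order = validate_causal_graph(valid_edges)
print(order)
```

A pre-commit or CI hook could call this function on the changed specification and fail the commit when `ValueError` is raised.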

Deployment strategies for PCM-ML models should incorporate canary releases and feature flags specifically designed to test causal hypotheses in production environments with minimal risk. This allows teams to gradually validate causal assumptions against real-world data before full deployment.

Data Governance Standards for PCM-ML Systems

Effective data governance is essential for Private Computation Machine Learning (PCM-ML) systems to ensure data security, privacy compliance, and operational integrity. Organizations implementing PCM-aware machine learning solutions must establish comprehensive governance frameworks that address the unique challenges of processing sensitive data while maintaining analytical capabilities.

Data governance standards for PCM-ML systems should begin with clear data classification protocols. These protocols must identify different sensitivity levels of data assets, with special attention to personally identifiable information (PII), protected health information (PHI), and other regulated data types. Each classification level should trigger specific handling requirements within the PCM environment, ensuring appropriate controls are consistently applied.
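
The link between a classification level and its handling requirements can be made explicit in code. The following is a minimal sketch under stated assumptions: the three sensitivity levels, the column tags, and the control names are all hypothetical, and a real protocol would be defined by the organization's own policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    REGULATED = 2  # for example PII or PHI


# Hypothetical handling requirements triggered by each level.
CONTROLS = {
    Sensitivity.PUBLIC: set(),
    Sensitivity.INTERNAL: {"access_logging"},
    Sensitivity.REGULATED: {"access_logging", "encryption_at_rest", "approval_required"},
}

# Hypothetical column tags maintained by data owners.
COLUMN_TAGS = {
    "email": Sensitivity.REGULATED,
    "diagnosis_code": Sensitivity.REGULATED,
    "team_name": Sensitivity.INTERNAL,
    "product_sku": Sensitivity.PUBLIC,
}


def classify(columns):
    """A dataset is as sensitive as its most sensitive column.

    Untagged columns are treated as REGULATED so gaps fail safe.
    """
    levels = (COLUMN_TAGS.get(c, Sensitivity.REGULATED) for c in columns)
    return max(levels, default=Sensitivity.PUBLIC)


def required_controls(columns):
    """Look up the handling requirements for a dataset's classification."""
    return CONTROLS[classify(columns)]


print(classify(["product_sku", "team_name"]).name)
print(sorted(required_controls(["product_sku", "email"])))
```

Defaulting unknown columns to the strictest level is a design choice: it keeps a newly added field from silently bypassing controls until someone tags it.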

Access control mechanisms represent another critical component of PCM-ML governance. These should implement the principle of least privilege, ensuring team members can only access data necessary for their specific roles. Multi-factor authentication, role-based access controls, and time-limited permissions are particularly important when dealing with privacy-preserving computation techniques.
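
Least privilege and time-limited permissions can be combined in a single access check, sketched below. The role names, dataset names, and the eight-hour default lifetime are hypothetical, and the sketch leaves out authentication entirely.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical mapping of roles to the only datasets each role needs.
ROLE_PERMISSIONS = {
    "data_scientist": frozenset({"features_deidentified"}),
    "privacy_engineer": frozenset({"features_deidentified", "raw_records"}),
}


@dataclass(frozen=True)
class Grant:
    user: str
    role: str
    expires_at: datetime


def issue_grant(user, role, now, ttl=timedelta(hours=8)):
    """Create a grant that expires `ttl` after `now`."""
    return Grant(user, role, now + ttl)


def can_access(grant, dataset, now):
    """Allow access only for unexpired grants whose role lists the dataset."""
    if now >= grant.expires_at:
        return False
    return dataset in ROLE_PERMISSIONS.get(grant.role, frozenset())


start = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
grant = issue_grant("alice", "data_scientist", now=start)

# Permitted dataset inside the grant's lifetime.
print(can_access(grant, "features_deidentified", start + timedelta(hours=1)))
# Dataset outside the role's permissions.
print(can_access(grant, "raw_records", start + timedelta(hours=1)))
# Permitted dataset after the grant has expired.
print(can_access(grant, "features_deidentified", start + timedelta(hours=9)))
```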

Data lineage tracking must be implemented to maintain visibility throughout the data lifecycle. This includes documenting all transformations, encryptions, and anonymization processes applied to datasets. For PCM-ML systems, this tracking becomes more complex as data may undergo multiple privacy-preserving transformations that must be meticulously recorded while preserving confidentiality guarantees.
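
One way to record transformations without storing the data itself is an append-only log in which each entry's hash covers the previous entry, so later tampering is detectable. The sketch below is an assumption about how such a log could look, not a description of any particular lineage tool; the operation names and parameters are hypothetical.

```python
import hashlib
import json


def _digest(body):
    """Stable SHA-256 over a record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_step(log, operation, params):
    """Append a transformation record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else ""
    body = {"operation": operation, "params": params, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log


def verify(log):
    """Recompute every hash and check the chain; False if any record was altered."""
    prev_hash = ""
    for record in log:
        body = {k: record[k] for k in ("operation", "params", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True


# Only operation names and parameters are logged, never the data values.
log = []
append_step(log, "pseudonymize", {"column": "patient_id"})
append_step(log, "add_noise", {"epsilon": 1.0})
print(verify(log))
```

Because the log holds only metadata about each step, it can be shared with auditors without exposing the underlying records.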

Audit and compliance procedures should be formalized to demonstrate adherence to relevant regulations such as GDPR, HIPAA, or CCPA. These procedures must include regular assessment of privacy controls, documentation of processing activities, and mechanisms for responding to data subject requests without compromising the privacy-preserving nature of the system.

Data retention and disposal policies require special consideration in PCM-ML contexts. Organizations must establish clear timeframes for retaining both raw data and derived models, with automated enforcement mechanisms to ensure data is securely deleted when no longer needed. This is particularly challenging in federated learning or secure multi-party computation scenarios, where data is held by multiple parties and no single organization can directly verify deletion across all of them.
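Automated enforcement of retention timeframes might start with a scheduled check like the sketch below, which flags assets that have outlived their retention period. The asset kinds and the retention periods (365 days for raw data, 730 days for derived models) are hypothetical values chosen for illustration, and the actual secure deletion step is outside the scope of this example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed retention periods per asset kind; real values come from policy.
RETENTION = {
    "raw_data": timedelta(days=365),
    "derived_model": timedelta(days=730),
}


@dataclass(frozen=True)
class Asset:
    name: str
    kind: str
    created: date


def due_for_deletion(assets: list[Asset], today: date) -> list[str]:
    """Return names of assets whose age exceeds the retention period for their kind."""
    return [a.name for a in assets if today - a.created > RETENTION[a.kind]]


inventory = [
    Asset("raw_q1", "raw_data", date(2023, 1, 1)),
    Asset("model_v1", "derived_model", date(2024, 1, 1)),
]
print(due_for_deletion(inventory, date(2025, 1, 1)))  # ['raw_q1']
```

Tracking raw data and derived models as separate kinds reflects the point above that both need their own retention timeframes.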

Incident response protocols should outline specific procedures for addressing potential data breaches or privacy violations within PCM-ML systems. These protocols must balance transparency requirements with the need to maintain privacy guarantees, providing clear escalation paths and remediation steps tailored to privacy-preserving environments.

Finally, governance standards should include regular training requirements for all team members working with PCM-ML systems. This training must cover not only general data protection principles but also the specific technical and procedural safeguards unique to privacy-preserving computation methods.
