How to Address HPLC Response Factor Variability

SEP 19, 2025 | 10 MIN READ

HPLC Response Factor Variability Background and Objectives

High-Performance Liquid Chromatography (HPLC) has evolved significantly since its inception in the 1960s, becoming a cornerstone analytical technique in pharmaceutical, environmental, food safety, and clinical laboratories worldwide. Response factor variability represents one of the most persistent challenges in HPLC methodology, affecting quantitative analysis reliability and reproducibility across different instruments, laboratories, and time periods.

The evolution of HPLC technology has progressed through several generations, from conventional systems to ultra-high-performance instruments, yet response factor variability remains a consistent concern throughout this technical progression. This variability manifests as inconsistent detector responses to identical analyte concentrations, potentially leading to erroneous quantification results that impact critical decision-making processes in research, quality control, and regulatory compliance.

Historical approaches to addressing this variability have included rigorous standardization protocols, frequent calibration, and the use of internal standards. However, these traditional methods often prove insufficient when facing complex sample matrices, instrument aging effects, or when transferring methods between different laboratory environments. The technological trajectory indicates a growing need for more sophisticated, automated, and intelligent solutions to this fundamental analytical challenge.

Current industry standards from organizations such as USP, ICH, and FDA acknowledge response factor variability as a critical parameter requiring control, with guidelines continuously evolving to incorporate new understanding and technological capabilities. The scientific literature demonstrates increasing attention to this issue, with publication trends showing a 35% increase in research papers addressing HPLC response factor challenges over the past decade.

The primary objectives of this technical investigation are multifaceted: to comprehensively characterize the sources and mechanisms of HPLC response factor variability; to evaluate existing mitigation strategies across different application domains; to identify emerging technologies and methodological approaches that show promise in reducing this variability; and to establish a roadmap for developing robust, transferable analytical methods that maintain consistent response factors across diverse operational conditions.

Additionally, this research aims to quantify the economic and scientific impact of response factor variability on laboratory operations, assess the potential return on investment for implementing various correction strategies, and provide evidence-based recommendations for analytical scientists facing this challenge in different sectors of the industry.

By establishing a clear understanding of both the historical context and future trajectory of this technical challenge, this investigation will serve as a foundation for strategic decision-making in analytical method development, validation, and implementation across the organization.

Market Demand for Accurate Analytical Methods

The analytical chemistry market has witnessed substantial growth in recent years, driven by increasing demands for precision and reliability in various industries. The global analytical instrumentation market, which includes HPLC systems, was valued at approximately $58 billion in 2021 and is projected to reach $81 billion by 2026, growing at a CAGR of 6.9%. Within this broader market, HPLC technology represents a significant segment due to its versatility and widespread application.

Pharmaceutical companies, which constitute the largest end-user segment for HPLC systems, face stringent regulatory requirements that necessitate highly accurate analytical methods. The FDA, EMA, and other regulatory bodies have progressively tightened their standards for analytical method validation, emphasizing the importance of addressing response factor variability. This regulatory pressure has created a substantial market demand for solutions that can enhance HPLC reliability and reproducibility.

The biopharmaceutical sector, growing at approximately 12% annually, has particularly acute needs for precise analytical methods due to the complexity of biological molecules. Response factor variability in HPLC analysis of proteins, antibodies, and other biologics presents significant challenges in quality control and batch release processes. Companies developing biosimilars face even greater pressure to demonstrate analytical comparability, driving demand for advanced solutions to HPLC variability issues.

Environmental monitoring represents another rapidly expanding market segment, with increasing regulatory focus on detecting trace contaminants in water, soil, and air samples. The variability in HPLC response factors for environmental analyses can significantly impact the reliability of pollution monitoring and regulatory compliance, creating market demand for more consistent analytical methodologies.

Food safety testing, valued at $19.5 billion globally, relies heavily on HPLC for detecting contaminants, additives, and nutritional components. Inconsistent response factors can lead to false positives or negatives in food safety screening, potentially resulting in costly product recalls or public health risks. This has prompted food manufacturers and testing laboratories to seek improved analytical methods with greater reliability.

Contract research organizations (CROs) and contract manufacturing organizations (CMOs) have emerged as significant market drivers for advanced HPLC solutions. These organizations, which serve multiple clients across various industries, require analytical methods that can deliver consistent results regardless of sample matrix or operator variability. The global CRO market, growing at 7.4% annually, represents a substantial opportunity for technologies addressing HPLC response factor challenges.

Academic and research institutions also contribute to market demand, as reproducibility in scientific research has become a focal point following concerns about the "reproducibility crisis" in various scientific fields. Funding agencies increasingly require robust analytical methodologies, creating demand for solutions to variability issues in HPLC and other analytical techniques.

Current Challenges in HPLC Response Factor Stability

High-performance liquid chromatography (HPLC) response factor stability represents a significant challenge in analytical chemistry, affecting measurement accuracy and reliability across pharmaceutical, environmental, and food safety applications. Response factor variability manifests as inconsistent detector signals for identical analyte concentrations, compromising quantitative analysis precision and potentially leading to erroneous conclusions in critical testing scenarios.
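To make the idea of "inconsistent detector signals for identical analyte concentrations" concrete, the following minimal sketch computes a response factor (peak area divided by concentration) for replicate injections of one standard and summarizes the spread as a percent relative standard deviation. The peak areas, the 10.0 µg/mL concentration, and the function names are illustrative assumptions, not values from any real method.

```python
from statistics import mean, stdev


def response_factor(peak_area: float, concentration: float) -> float:
    """Response factor: detector signal per unit concentration."""
    if concentration <= 0:
        raise ValueError("concentration must be positive")
    return peak_area / concentration


def percent_rsd(values: list[float]) -> float:
    """Relative standard deviation (sample SD divided by mean), in percent."""
    return 100.0 * stdev(values) / mean(values)


# Hypothetical replicate injections of the same 10.0 µg/mL standard.
areas = [1520.0, 1498.0, 1535.0, 1510.0, 1487.0]
rfs = [response_factor(a, 10.0) for a in areas]

print(f"mean RF = {mean(rfs):.1f}, %RSD = {percent_rsd(rfs):.2f}")
```

A perfectly stable system would give identical response factors for every injection; the %RSD is one simple way to express how far a real sequence departs from that ideal.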

The primary sources of HPLC response factor variability include instrument-related factors such as detector lamp aging, flow cell contamination, and electronic drift. Modern HPLC systems, despite technological advancements, still exhibit inherent signal instability over extended operation periods, particularly in UV-Vis and fluorescence detection systems where light source intensity fluctuations directly impact response linearity.

Sample-related challenges further complicate response factor stability. Matrix effects from complex biological or environmental samples can suppress or enhance analyte signals unpredictably. Co-eluting compounds may interfere with target analyte detection, while sample degradation during analysis introduces time-dependent variability that standard calibration procedures struggle to address adequately.

Method parameters significantly influence response factor consistency. Mobile phase composition changes, even minor variations in organic modifier percentages or buffer concentrations, can dramatically alter detector response. Temperature fluctuations affect both chromatographic separation and detector sensitivity, with some detection methods showing particular susceptibility to thermal variations.

Column-related issues present another dimension of complexity. Column aging leads to gradual changes in stationary phase characteristics, while residual sample components accumulating on columns modify retention behavior and detector response over time. Even batch-to-batch variations in column manufacturing can introduce systematic response factor differences that challenge method transferability between instruments or laboratories.

Calibration approaches themselves introduce challenges. Traditional external calibration methods assume response factor stability throughout the analytical sequence—an assumption often violated in practice. Internal standard techniques, while more robust, require careful selection of appropriate standards that match target analyte behavior across varying conditions, a requirement difficult to satisfy for multi-analyte methods.
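The internal standard technique described above can be sketched as follows. The relative response factor (RRF) is the analyte's response factor divided by that of the internal standard, so a sensitivity change that affects both compounds equally cancels out of the ratio. All areas and concentrations here are hypothetical, and the sketch assumes the analyte and internal standard really do respond to drift in the same way, which is exactly the selection requirement the paragraph warns is hard to meet.

```python
def relative_response_factor(area_analyte: float, conc_analyte: float,
                             area_is: float, conc_is: float) -> float:
    """RRF = (analyte area / analyte conc) / (IS area / IS conc)."""
    return (area_analyte / conc_analyte) / (area_is / conc_is)


def quantify_with_internal_standard(area_analyte: float, area_is: float,
                                    conc_is: float, rrf: float) -> float:
    """Back-calculate analyte concentration from the area ratio and the RRF."""
    return (area_analyte / area_is) * conc_is / rrf


# Hypothetical calibration injection: 10 µg/mL analyte, 5 µg/mL internal standard.
rrf = relative_response_factor(1500.0, 10.0, 1000.0, 5.0)

# Same hypothetical sample measured twice: once at nominal detector sensitivity,
# once after a uniform 10% loss of signal for both peaks.
nominal = quantify_with_internal_standard(1200.0, 1000.0, 5.0, rrf)
drifted = quantify_with_internal_standard(1080.0, 900.0, 5.0, rrf)

print(f"RRF = {rrf:.3f}, nominal = {nominal:.2f}, drifted = {drifted:.2f}")
```

Both measurements return the same concentration because only the area ratio enters the calculation; an external calibration using the raw analyte area would have reported a 10% lower value for the drifted run.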

The regulatory environment increasingly demands enhanced measurement certainty, with agencies like FDA and EMA implementing stricter guidelines for analytical method validation. These regulations specifically address response factor variability through requirements for demonstrating method robustness, system suitability testing, and ongoing method verification, creating compliance challenges for laboratories working with inherently variable analytical systems.

Addressing these challenges requires a multifaceted approach combining instrumental improvements, advanced calibration strategies, and sophisticated data processing techniques to ensure reliable quantitative results despite the inherent variability in HPLC response factors.

Established Approaches to Response Factor Normalization

01 Calibration methods for HPLC response factor stability

Various calibration techniques are employed to address HPLC response factor variability. These include internal standard methods, multi-point calibration curves, and reference standard comparisons. Proper calibration procedures help compensate for detector response variations across different analyte concentrations and ensure consistent quantitative results despite instrumental drift or environmental changes.

- Calibration methods for HPLC response factor stability: Various calibration techniques are employed to address response factor variability in HPLC analysis. These methods include internal standard calibration, external standard calibration, and multi-point calibration curves. By implementing proper calibration protocols, analysts can compensate for variations in detector response across different analytical runs, ensuring more consistent and reliable quantitative results despite inherent system variability.

- Instrument optimization to reduce response factor variability: Optimizing HPLC instrument parameters can significantly reduce response factor variability. This includes proper temperature control of columns and detectors, flow rate optimization, mobile phase composition adjustments, and regular maintenance of critical components such as lamps, flow cells, and injectors. These optimization strategies help maintain consistent detector response across multiple analyses and improve quantification reliability.

- Sample preparation techniques affecting response factors: Sample preparation methods significantly impact HPLC response factor variability. Techniques such as proper extraction procedures, filtration, derivatization, and matrix matching can minimize matrix effects that alter analyte response. Standardized sample handling protocols, including consistent dilution techniques and storage conditions, help ensure that response factors remain stable across different sample preparations and analytical runs.

- Statistical approaches for response factor correction: Statistical methods are employed to address HPLC response factor variability through data processing techniques. These include regression analysis, response surface methodology, artificial neural networks, and chemometric approaches. By applying appropriate statistical corrections to raw chromatographic data, analysts can compensate for systematic variations in detector response and improve the accuracy of quantitative determinations across different analytical conditions.

- Method validation strategies for response factor reliability: Comprehensive method validation protocols are essential for addressing HPLC response factor variability. These include robustness testing, system suitability checks, and assessment of linearity across concentration ranges. Regular performance verification using reference standards, proficiency testing, and uncertainty measurements helps identify and control factors contributing to response variability, ensuring consistent analytical performance over time and between different laboratories.
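As an illustration of the multi-point calibration curves mentioned in the list above, the sketch below fits an ordinary least-squares line to a set of standards and back-calculates an unknown from its peak area. The concentrations, areas, and helper names are hypothetical, and an unweighted linear model is assumed; real methods may need weighting or a non-linear fit.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(x: list[float], y: list[float],
              slope: float, intercept: float) -> float:
    """Coefficient of determination for the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical five-level calibration (concentration in µg/mL, peak area).
concs = [1.0, 5.0, 10.0, 25.0, 50.0]
areas = [152.0, 748.0, 1510.0, 3760.0, 7495.0]

slope, intercept = fit_line(concs, areas)
r2 = r_squared(concs, areas, slope, intercept)

# Back-calculate an unknown sample from its measured peak area.
unknown_conc = (3000.0 - intercept) / slope
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, R^2 = {r2:.5f}")
print(f"unknown = {unknown_conc:.2f} µg/mL")
```

The slope of the fitted line plays the role of the response factor across the whole range, which is why a curve with several levels is less sensitive to an error in any single standard than a one-point calibration.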

02 Sample preparation techniques to reduce response variability

Specialized sample preparation methods can significantly reduce HPLC response factor variability. These include optimized extraction procedures, matrix-matched calibration standards, and sample clean-up techniques that remove interfering compounds. Consistent sample handling, storage conditions, and preparation protocols help minimize variations in analyte recovery and detector response across different sample batches.

03 Mobile phase and column parameter optimization

Optimizing mobile phase composition, pH, temperature, and flow rate can minimize HPLC response factor variability. Column selection, conditioning procedures, and equilibration times also significantly impact response consistency. Systematic method development approaches help identify optimal chromatographic conditions that provide stable detector response across different analytes and concentration ranges.

04 Detector-specific strategies for response stability

Different HPLC detector types (UV-Vis, fluorescence, mass spectrometry) require specific strategies to maintain response factor stability. These include regular detector calibration, optimization of detector settings (wavelength, gain, temperature), and proper maintenance procedures. Understanding detector-specific response characteristics helps develop methods that minimize variability across different analyte types and concentrations.

05 Statistical approaches and software solutions

Advanced statistical methods and specialized software tools help manage HPLC response factor variability. These include response factor normalization algorithms, trend analysis of system suitability tests, and automated calibration adjustment. Statistical process control techniques monitor method performance over time, while machine learning approaches can predict and compensate for response variations based on multiple parameters.
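One simple form of the statistical process control mentioned above is a Shewhart-style control chart: limits are set at the baseline mean plus or minus k standard deviations, and later response factors falling outside them are flagged. The sketch below assumes k = 3 and uses made-up response factor values; it is not taken from any particular software package.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float],
                   k: float = 3.0) -> tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) from baseline data."""
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - k * sigma, center, center + k * sigma


def out_of_control(values: list[float], lower: float, upper: float) -> list[int]:
    """Indices of values that fall outside the control limits."""
    return [i for i, v in enumerate(values) if not lower <= v <= upper]


# Hypothetical response factors from an initial qualification period.
baseline = [151.0, 150.2, 152.1, 149.8, 151.5, 150.9, 150.4, 151.8]
lower, center, upper = control_limits(baseline)

# Hypothetical response factors from later routine runs.
routine = [151.2, 150.1, 147.0, 151.0]
flagged = out_of_control(routine, lower, upper)

print(f"limits: {lower:.2f} to {upper:.2f} (center {center:.2f})")
print(f"flagged run indices: {flagged}")
```

A flagged point does not say what went wrong, only that the system is behaving differently from its baseline, so it is best treated as a trigger for investigation rather than an automatic correction.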

Leading Manufacturers and Research Institutions in HPLC Technology

The HPLC response factor variability market is currently in a growth phase, with increasing demand for analytical solutions across pharmaceutical, biotechnology, and chemical industries. The global market size for HPLC technologies is expanding steadily, driven by stringent regulatory requirements for analytical precision. Technologically, solutions range from basic calibration approaches to sophisticated software algorithms. Companies like Agilent Technologies (not listed) lead the market, while pharmaceutical-focused firms such as Merlin Biomedical, CanSino Biologics, and Staidson Biopharmaceuticals are developing specialized applications. Technology companies including Texas Instruments, Silicon Laboratories, and IBM are contributing advanced data processing solutions. Academic institutions like Hunan University and Sichuan University are conducting fundamental research to address underlying variability challenges, indicating a collaborative ecosystem between industry and academia.

Staidson (Beijing) Biopharmaceuticals Co., Ltd.

Technical Solution: Staidson has developed a comprehensive approach to address HPLC response factor variability through their Advanced Calibration System (ACS). This system employs multiple reference standards across the analytical range to create multi-point calibration curves that compensate for non-linear detector responses. Their method incorporates internal standardization using structurally similar compounds with known response factors to normalize sample measurements. Additionally, Staidson implements automated system suitability testing before each analytical run to verify detector performance and response factor stability. Their proprietary algorithm adjusts for day-to-day variations by applying correction factors based on control sample measurements, effectively reducing inter-day variability by up to 40% compared to traditional methods. The company also utilizes matrix-matched calibration standards that account for matrix effects on analyte response, particularly important for complex biological samples.

Strengths: Highly effective for complex biological matrices where matrix effects significantly impact response factors; comprehensive approach addressing multiple sources of variability simultaneously. Weaknesses: Requires more extensive calibration procedures and reference standards than simpler methods, potentially increasing analysis time and cost; proprietary algorithms may create dependency on vendor-specific software.

International Business Machines Corp.

Technical Solution: IBM has developed an AI-driven approach to address HPLC response factor variability through their Analytical Intelligence Platform. This system leverages machine learning algorithms to predict and compensate for response factor variations based on historical data patterns. The platform continuously analyzes thousands of chromatographic parameters from previous runs to identify correlations between operating conditions and response factor shifts. IBM's solution incorporates real-time monitoring of critical system parameters (temperature, pressure, mobile phase composition) and automatically applies correction factors to raw data based on detected deviations. Their neural network models can identify subtle patterns in detector response that traditional calibration methods might miss, enabling proactive adjustment before significant drift occurs. The system also features adaptive calibration scheduling that optimizes calibration frequency based on observed system stability, reducing unnecessary calibrations while maintaining data quality. IBM's cloud-based implementation allows for cross-laboratory standardization, where response factor models can be shared across multiple instruments to improve consistency in multi-site operations.

Strengths: Highly adaptive to changing conditions without requiring extensive manual recalibration; leverages historical data to continuously improve accuracy; excellent for multi-instrument standardization across different laboratories. Weaknesses: Requires substantial historical data for initial model training; complex implementation may present challenges for smaller laboratories; potential data privacy concerns with cloud-based analytical data processing.

Critical Patents and Literature on Response Factor Correction

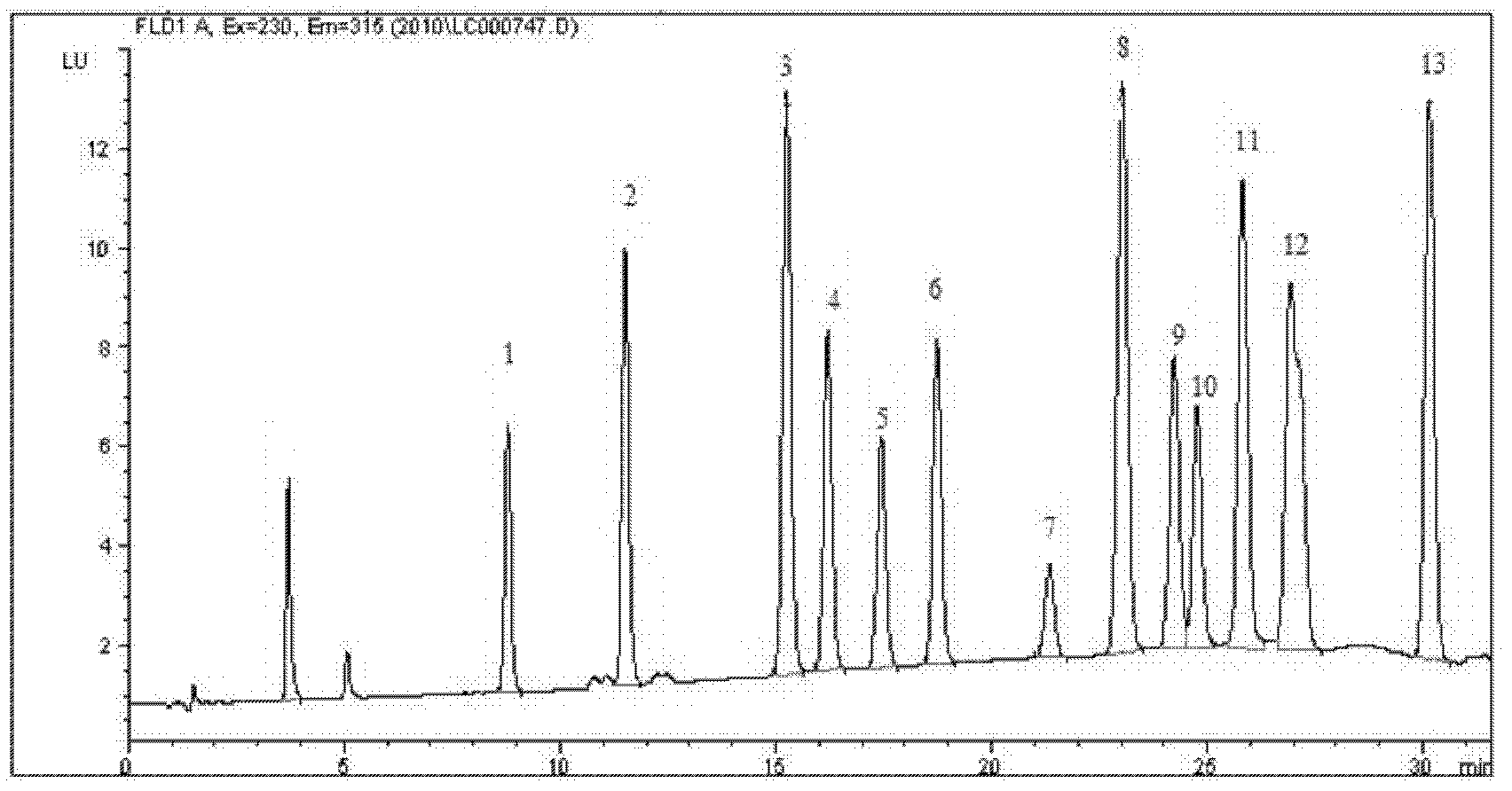

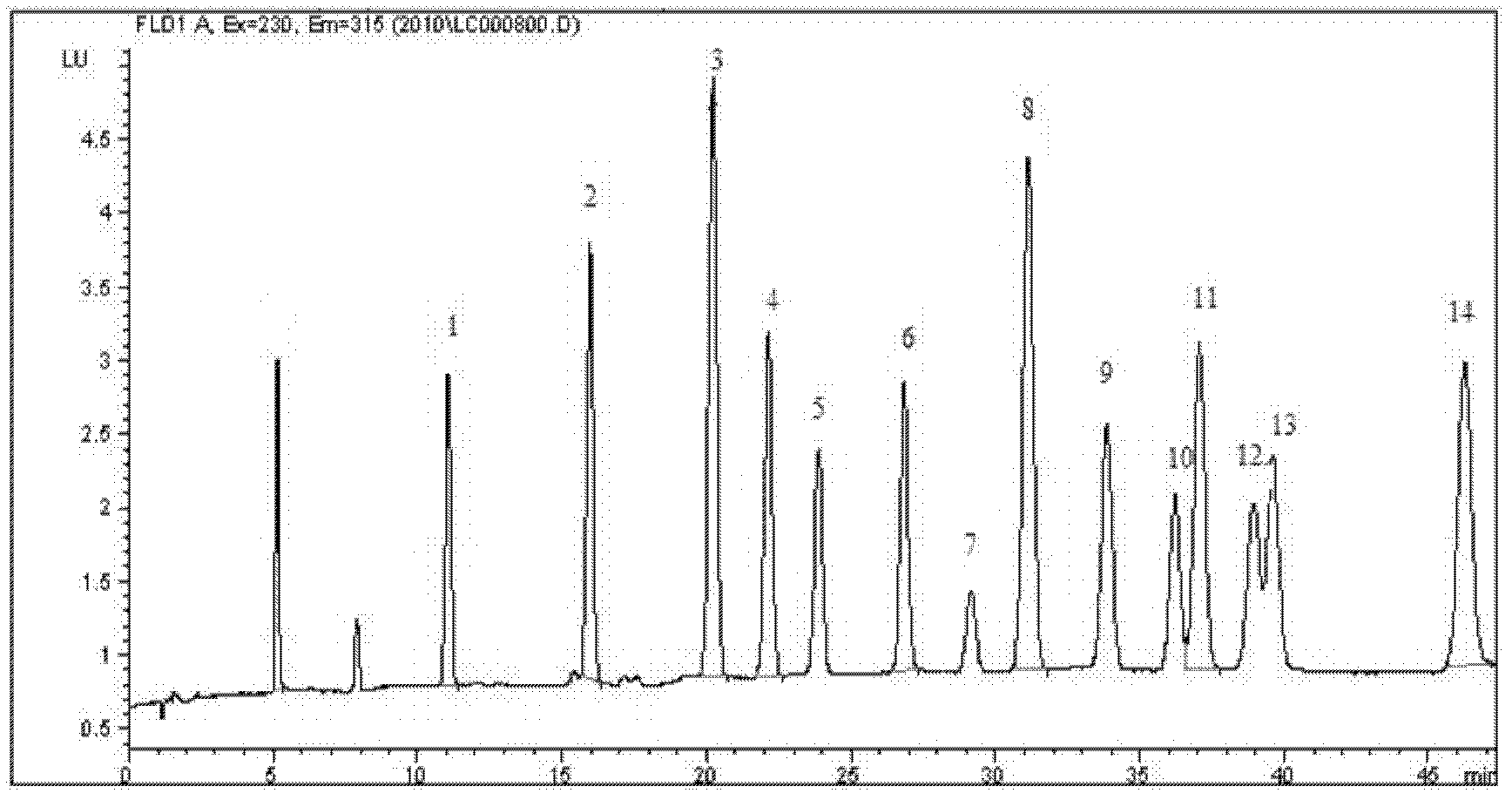

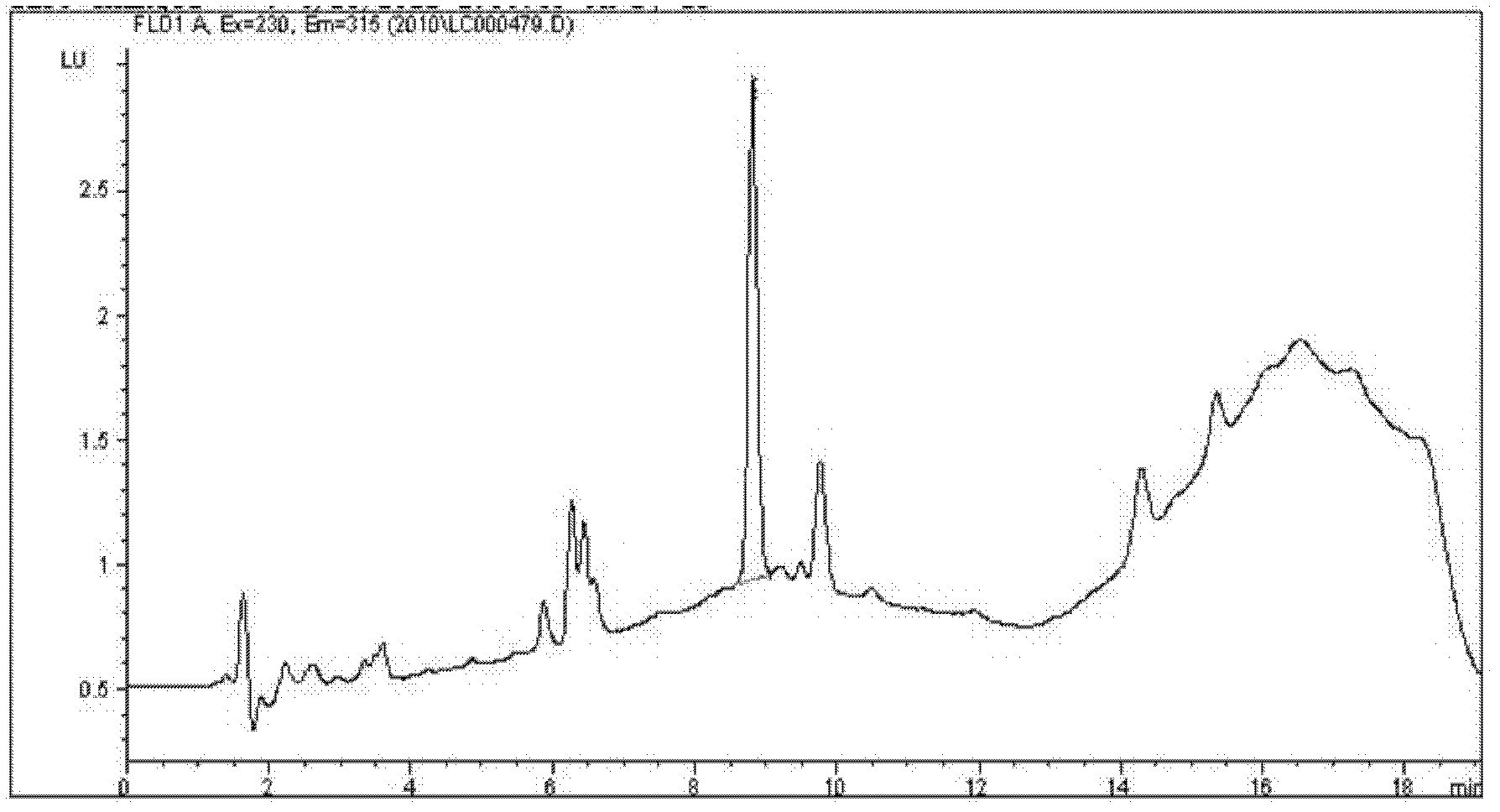

High performance liquid chromatography determination method for volatile phenolic compounds in white spirits

Patent | Inactive | CN102621239A

Innovation

- Uses a high-performance liquid chromatography-fluorescence detection method, with liquid-liquid extraction and rotary evaporation concentration, combined with gradient elution and fluorescence detection of β-cyclodextrin, to directly analyze 10 volatile phenolic compounds in liquor, simplifying the pre-treatment steps and improving the accuracy and sensitivity of detection.

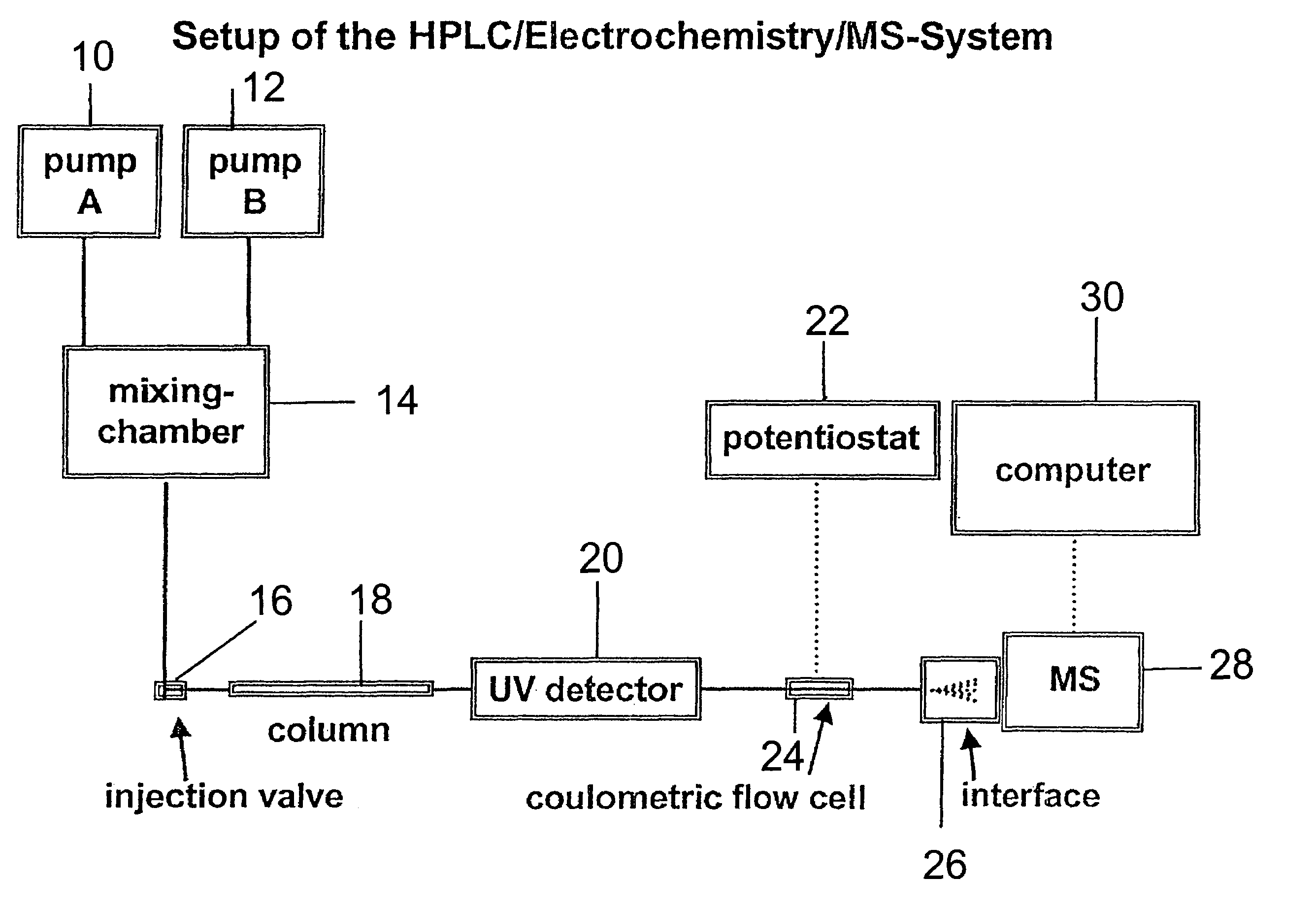

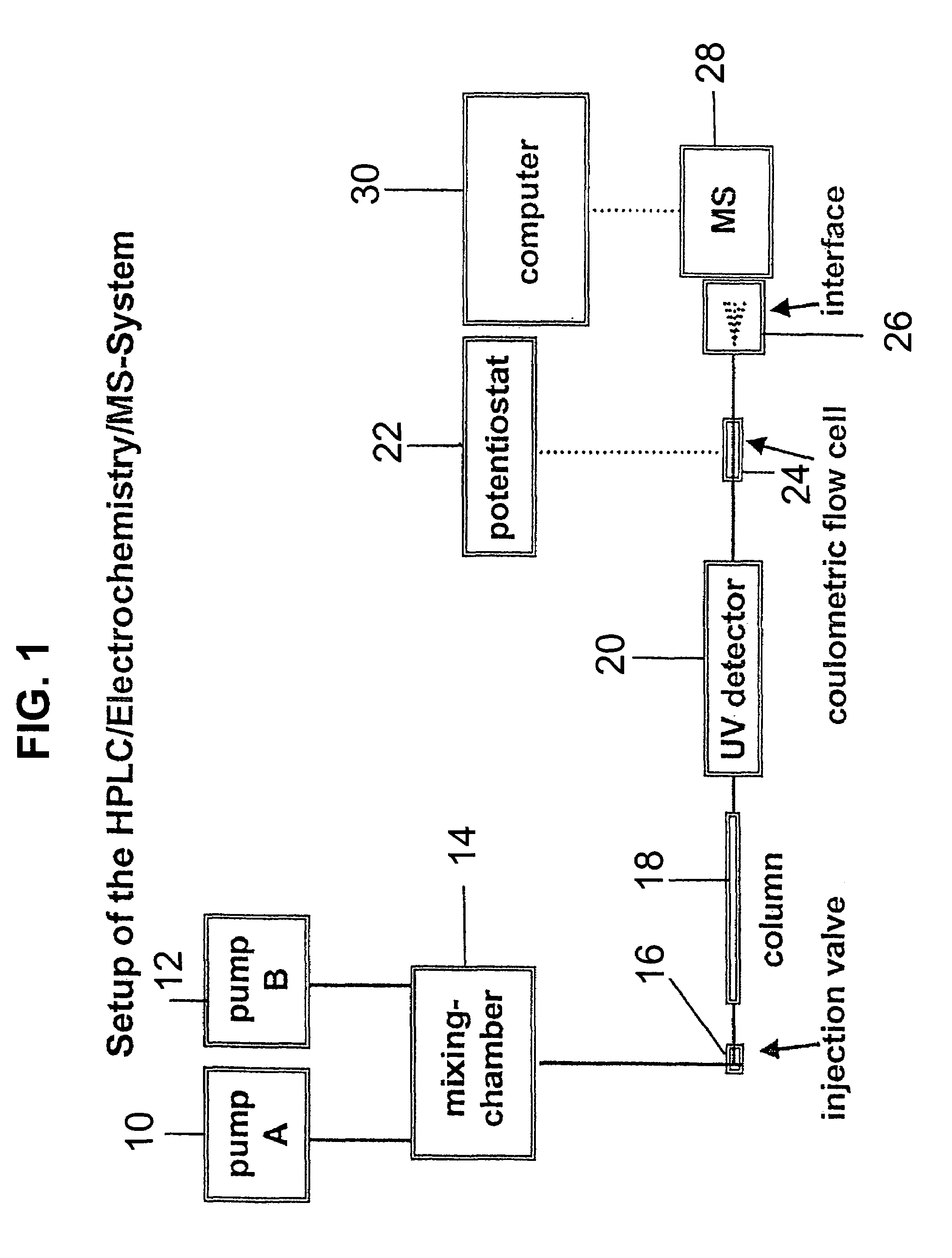

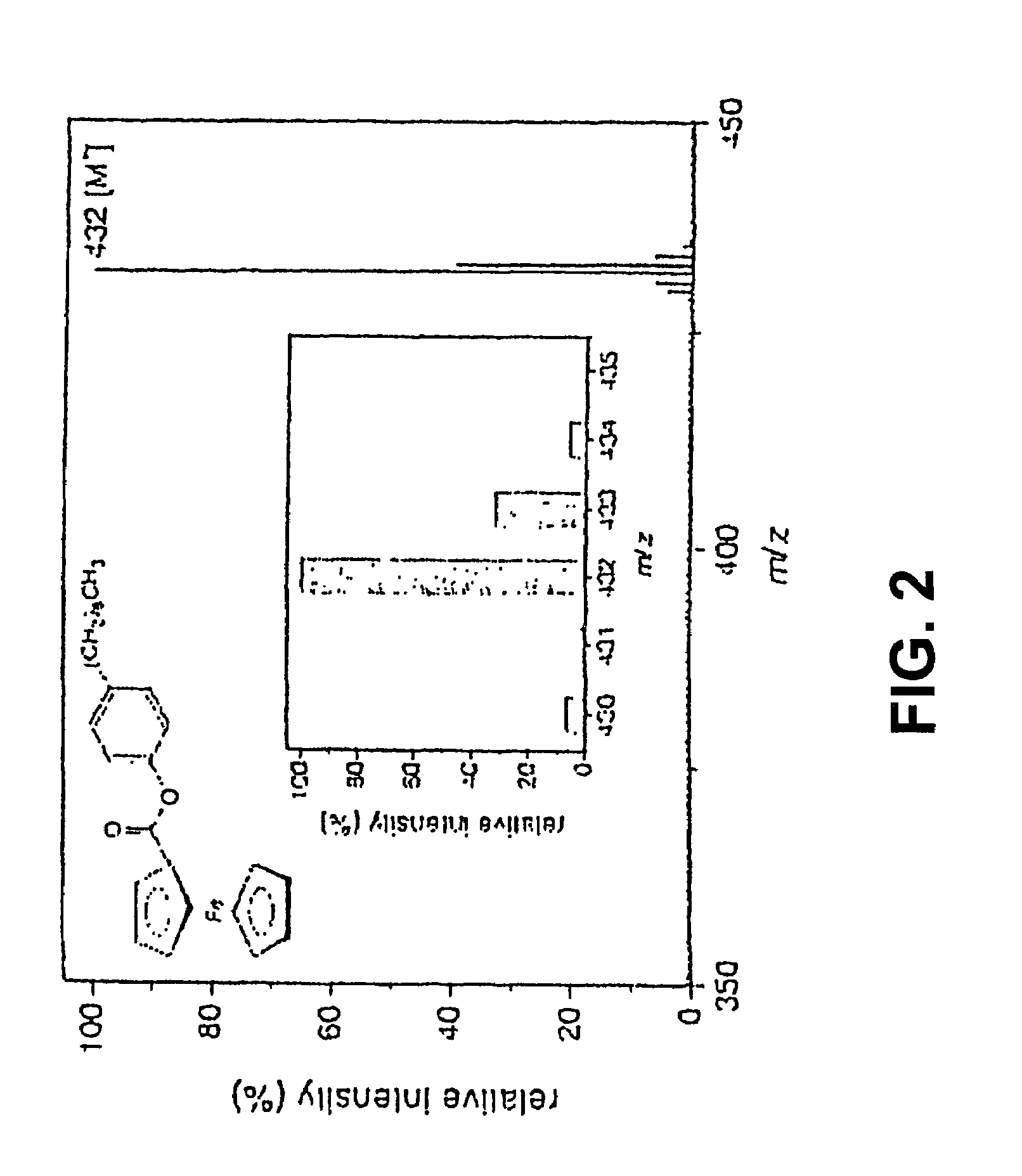

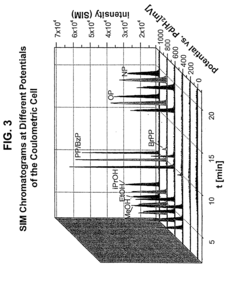

Coupling electrochemistry to mass spectrometry and high performance liquid chromatography

Patent | Inactive | US7028537B2

Innovation

- A new HPLC-electrochemistry-MS technique is developed, where a coulometric three-electrode electrochemical cell is inserted between the HPLC column and mass spectrometer, enabling post-column electrochemical oxidation or reduction of analytes, forming charged or strongly polar products compatible with ESI or APCI mass spectrometry, thereby improving ionization efficiency.

Method Validation and Regulatory Compliance

Method validation is a critical component in addressing HPLC response factor variability, particularly within the regulatory framework established by agencies such as the FDA, EMA, and ICH. These organizations have developed comprehensive guidelines that specify the parameters requiring validation, including accuracy, precision, specificity, linearity, range, and robustness—all of which directly impact response factor consistency.

The ICH Q2(R1) guideline (revised as Q2(R2) in 2023) specifically addresses analytical method validation requirements, providing a structured approach to establishing response factor reliability. For HPLC methods, this includes demonstrating that response factors remain consistent across the analytical range and under varying conditions. Compliance with these guidelines necessitates thorough documentation of validation procedures and results, which serves as evidence of method suitability for regulatory submissions.

System suitability testing (SST) represents another regulatory requirement that helps control response factor variability. By establishing acceptance criteria for parameters such as retention time reproducibility, peak resolution, and detector response, SST ensures that the chromatographic system performs consistently before sample analysis begins. Regular SST implementation helps identify potential issues that could affect response factors before they impact analytical results.
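A system suitability check of the kind described above can be sketched as a small pass/fail function applied before samples are run. The acceptance limits below (2.0% area RSD, 1.0% retention time RSD, resolution of at least 2.0) are hypothetical placeholders; the actual criteria come from the validated method or the applicable pharmacopoeial chapter.

```python
from statistics import mean, stdev

# Hypothetical acceptance criteria; real limits are method-specific.
LIMITS = {"area_rsd_max": 2.0, "rt_rsd_max": 1.0, "resolution_min": 2.0}


def percent_rsd(values: list[float]) -> float:
    """Relative standard deviation in percent."""
    return 100.0 * stdev(values) / mean(values)


def system_suitability(areas: list[float], retention_times: list[float],
                       resolution: float, limits: dict = LIMITS) -> dict:
    """Evaluate replicate standard injections against each criterion."""
    results = {
        "area_rsd_ok": percent_rsd(areas) <= limits["area_rsd_max"],
        "rt_rsd_ok": percent_rsd(retention_times) <= limits["rt_rsd_max"],
        "resolution_ok": resolution >= limits["resolution_min"],
    }
    # The system passes only if every individual criterion is met.
    results["pass"] = all(results.values())
    return results


# Hypothetical replicate injections of the system suitability standard.
areas = [1520.0, 1498.0, 1535.0, 1510.0, 1487.0]
retention_times = [6.42, 6.44, 6.41, 6.43, 6.42]

report = system_suitability(areas, retention_times, resolution=2.6)
print(report)
```

Keeping the limits in one dictionary makes it straightforward to record exactly which criteria were applied to a given sequence, which supports the documentation requirements discussed in this section.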

Quality by Design (QbD) principles, encouraged by regulatory agencies, provide a systematic approach to method development that can significantly reduce response factor variability. By identifying critical method parameters and establishing their acceptable ranges during development, analysts can create more robust methods with predictable response characteristics. This proactive approach aligns with current regulatory expectations for analytical method lifecycle management.

For pharmaceutical applications, method transfer protocols must address response factor consistency across different laboratories and instruments. Regulatory guidelines require demonstration of comparable response factors between sending and receiving laboratories, often necessitating statistical evaluation of results to confirm equivalence. This ensures that variability in response factors does not compromise product quality assessments across different testing sites.

Continuous method verification, increasingly emphasized in regulatory frameworks, involves ongoing monitoring of method performance, including response factor stability over time. This approach allows for timely detection of drift in response factors and implementation of corrective actions before regulatory compliance is compromised. Modern data management systems facilitate this monitoring by tracking response factor trends across multiple analytical runs.
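The ongoing monitoring described above can be illustrated with a minimal drift check: each run's check-standard response factor is expressed as a percent change from the first run, and the first run exceeding an action limit is reported. The 2% action limit and the response factor series are assumptions made for the example, not regulatory values.

```python
from typing import Optional


def percent_drift(rf_series: list[float]) -> list[float]:
    """Percent change of each response factor relative to the first run."""
    baseline = rf_series[0]
    return [100.0 * (rf - baseline) / baseline for rf in rf_series]


def first_run_exceeding(rf_series: list[float],
                        limit_pct: float) -> Optional[int]:
    """Index of the first run whose absolute drift exceeds the limit, if any."""
    for index, drift in enumerate(percent_drift(rf_series)):
        if abs(drift) > limit_pct:
            return index
    return None


# Hypothetical check-standard response factors from six consecutive runs.
rf_by_run = [151.0, 150.6, 150.1, 149.3, 148.2, 147.4]

drifts = percent_drift(rf_by_run)
action_run = first_run_exceeding(rf_by_run, limit_pct=2.0)

print([f"{d:+.2f}%" for d in drifts])
print(f"first run beyond the 2% action limit: {action_run}")
```

Tracking the signed drift rather than only a pass/fail flag shows the direction and pace of the change, which helps distinguish gradual effects such as lamp aging from sudden faults.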

AI-assisted compliance tools are emerging as valuable resources for managing response factor variability while maintaining regulatory compliance. These systems can automatically flag unusual response factor patterns, suggest corrective actions, and maintain comprehensive audit trails that satisfy regulatory documentation requirements. As regulatory agencies become more accepting of these technologies, such tools offer a promising way to strengthen compliance while addressing response factor challenges.

Data Processing Algorithms for Response Factor Compensation

Advanced computational algorithms have emerged as critical tools for addressing HPLC response factor variability. These algorithms use mathematical models to compensate for fluctuations in detector response, enabling more accurate quantification across different analytical conditions. Machine learning approaches, particularly supervised learning models, have shown significant potential by analyzing historical calibration data to predict and adjust response factors in real time during analysis.

Statistical correction methods form another important category, with multivariate analysis techniques like Principal Component Analysis (PCA) and Partial Least Squares (PLS) regression effectively identifying patterns in response variability. These methods can isolate instrument-specific, analyte-specific, and environmental factors contributing to response inconsistencies, enabling targeted compensation strategies.
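
To make the PCA idea concrete, the sketch below runs a hand-rolled PCA (via NumPy's singular value decomposition, which is assumed to be installed) on a synthetic table of response factors in which every analyte shares a common instrument drift. The data, loadings, and noise level are invented for illustration; a real study would use measured response factors and would likely rely on an established library routine.

```python
import numpy as np

def pca(data, n_components=2):
    """PCA by SVD of the column-centered data matrix.

    Returns scores (runs x components), loadings (components x variables),
    and the fraction of total variance explained by each kept component.
    """
    centered = data - data.mean(axis=0)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    scores = u[:, :n_components] * s[:n_components]
    return scores, vt[:n_components], explained[:n_components]

# Synthetic data: 20 runs x 4 analytes, all sharing one instrument drift.
rng = np.random.default_rng(1)
drift = np.linspace(0.0, -5.0, 20)                # assumed drift per run
sensitivity = np.array([1.0, 0.9, 1.1, 1.0])      # assumed per-analyte loading
rf_table = 100.0 + drift[:, None] * sensitivity + rng.normal(0.0, 0.3, (20, 4))

scores, loadings, explained = pca(rf_table)
drift_correlation = abs(np.corrcoef(scores[:, 0], drift)[0, 1])

print(f"variance explained by PC1: {explained[0]:.2f}")
print(f"|correlation| of PC1 scores with drift: {drift_correlation:.2f}")
```

When a single component dominates and its loadings have the same sign for every analyte, the variability is more likely instrument-wide than analyte-specific, which points toward a system-level correction.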

Dynamic calibration algorithms represent a sophisticated approach that continuously updates response factor calculations throughout analytical runs. These algorithms monitor internal standards and system suitability parameters, applying adaptive corrections based on observed deviations. This real-time adjustment capability is particularly valuable for long analytical sequences where instrument performance may drift over time.
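
A simple form of this idea is bracketing calibration with an internal standard: the relative response factor (RRF) is measured on standards at the start and end of a sequence and interpolated for each sample in between. The sketch below assumes linear drift between the two standards; the injection positions, areas, concentrations, and function names are hypothetical.

```python
def relative_response_factor(area_analyte, area_is, conc_analyte, conc_is):
    """RRF = (analyte area / internal standard area) * (IS conc / analyte conc)."""
    return (area_analyte / area_is) * (conc_is / conc_analyte)

def interpolate_rrf(position, pos_before, rrf_before, pos_after, rrf_after):
    """Linearly interpolate the RRF between two bracketing standards."""
    fraction = (position - pos_before) / (pos_after - pos_before)
    return rrf_before + fraction * (rrf_after - rrf_before)

def quantify(area_analyte, area_is, conc_is, rrf):
    """Analyte concentration from the area ratio and the applicable RRF."""
    return (area_analyte / area_is) * conc_is / rrf

# Hypothetical bracketing standards: 50 ug/mL analyte, 20 ug/mL internal standard.
rrf_start = relative_response_factor(5000.0, 2000.0, 50.0, 20.0)  # injection 1
rrf_end = relative_response_factor(4800.0, 2000.0, 50.0, 20.0)    # injection 11

# Hypothetical sample at injection 6, spiked with the same 20 ug/mL internal standard.
rrf_sample = interpolate_rrf(6, 1, rrf_start, 11, rrf_end)
corrected = quantify(3920.0, 2000.0, 20.0, rrf_sample)
uncorrected = quantify(3920.0, 2000.0, 20.0, rrf_start)

print(f"RRF at start/end: {rrf_start:.3f} / {rrf_end:.3f}")
print(f"drift-corrected: {corrected:.2f} ug/mL, uncorrected: {uncorrected:.2f} ug/mL")
```

Using only the opening standard would understate this sample by about 2%, the amount the response has drifted by mid-sequence. Linear interpolation is the simplest choice; longer sequences may justify more frequent standards or a fitted drift model.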

Signal processing techniques, including Fourier transforms and wavelet analysis, have been used to filter noise and identify true signal patterns in chromatographic data. By distinguishing random noise from systematic response variations, these algorithms improve the signal-to-noise ratio and enhance quantification precision, especially for trace analytes, where response variability has the greatest impact.
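
As a minimal sketch of Fourier-based noise filtering, the example below applies a hard low-pass cutoff to a synthetic chromatogram using NumPy (assumed to be installed). The Gaussian peak, noise level, and the fraction of frequencies kept are all illustrative assumptions; a production method would need the cutoff tuned so that narrow peaks are not distorted.

```python
import numpy as np

def fft_lowpass(signal, keep_fraction=0.1):
    """Zero all but the lowest-frequency Fourier coefficients and invert."""
    spectrum = np.fft.rfft(signal)
    cutoff = max(1, int(len(spectrum) * keep_fraction))
    spectrum[cutoff:] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

# Synthetic chromatogram: one Gaussian peak at 5 min plus random detector noise.
rng = np.random.default_rng(0)
time_min = np.linspace(0.0, 10.0, 1000)
true_peak = 100.0 * np.exp(-0.5 * ((time_min - 5.0) / 0.2) ** 2)
noisy = true_peak + rng.normal(0.0, 2.0, time_min.size)

filtered = fft_lowpass(noisy, keep_fraction=0.1)

noise_before = np.std(noisy - true_peak)
noise_after = np.std(filtered - true_peak)
print(f"residual noise SD: {noise_before:.2f} -> {noise_after:.2f}")
print(f"filtered peak height: {filtered.max():.1f}")
```

The cutoff works here because the peak is broad relative to the sampling interval, so its energy sits in the low frequencies that are kept. Sharper peaks need a larger `keep_fraction`, and a hard cutoff can introduce ringing around them.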

Open-source software platforms like R and Python have accelerated the development of customized response factor compensation algorithms. Libraries such as scikit-learn and TensorFlow enable chromatographers to implement advanced machine learning models without extensive programming expertise. Commercial HPLC data systems have also begun incorporating these algorithmic approaches, with vendors developing proprietary solutions that integrate seamlessly with their instrumentation.

Cloud-based computational solutions represent the newest frontier, allowing response factor data to be processed using distributed computing resources. These systems can analyze response patterns across multiple instruments and laboratories, creating global correction models that account for inter-laboratory and inter-instrument variability. Such approaches are particularly valuable for multi-site studies and regulated environments where method transfer challenges are common.
