Regulatory expectations for AI/ML tools used in process control and decision-making in GMP environments

AI/ML in GMP: Background and Objectives

Artificial Intelligence (AI) and Machine Learning (ML) technologies have evolved significantly over the past decade, transforming many industries, including pharmaceutical manufacturing. Integrating these technologies into Good Manufacturing Practice (GMP) environments marks a significant shift in how pharmaceutical companies approach process control and decision-making. Historically, GMP environments have relied on traditional statistical process control methods, limited automation, and predominantly manual oversight of critical decisions.

The evolution of AI/ML in regulated industries has progressed from basic rule-based systems to deep learning models capable of handling complex, multivariate data sets. This technological progression has coincided with increasing regulatory attention to digital technologies in pharmaceutical manufacturing, particularly following the FDA's 2011 Process Validation guidance and related initiatives promoting continuous manufacturing and Quality by Design (QbD) principles.

The primary objective of implementing AI/ML tools in GMP environments is to enhance product quality, manufacturing consistency, and regulatory compliance while reducing human error. These technologies offer capabilities for real-time monitoring, predictive maintenance, anomaly detection, and process optimization that are difficult to achieve with traditional rule-based or univariate statistical methods. Additionally, they enable more robust analysis of multivariate process data, which can yield deeper insight into manufacturing processes.

Regulatory agencies worldwide, including the FDA, EMA, and WHO, have begun developing frameworks to address the unique challenges posed by AI/ML systems in regulated environments. These challenges include validation of algorithms, data integrity, system transparency, and ongoing performance monitoring. The FDA's 2019 discussion paper proposing a regulatory framework for AI/ML-based Software as a Medical Device (SaMD) offers initial insight into regulatory thinking, and CDER's 2023 discussion paper "Artificial Intelligence in Drug Manufacturing" addresses manufacturing directly, though formal guidance for manufacturing applications remains under development.

Current technological objectives focus on developing AI/ML systems that are not only powerful but also interpretable, validated, and compliant with GMP requirements. This includes creating robust validation methodologies for AI/ML models, establishing appropriate change control procedures for learning algorithms, and developing standards for data quality and integrity in AI-driven systems.

The intersection of cutting-edge technology with stringent regulatory requirements creates a complex landscape that requires careful navigation. As these technologies continue to mature, the goal is to establish a balance between innovation and compliance that allows pharmaceutical manufacturers to leverage AI/ML capabilities while maintaining the high standards of safety and quality expected in GMP environments.

Market Demand Analysis for AI/ML in Pharmaceutical Manufacturing

The pharmaceutical manufacturing industry is seeing a significant surge in demand for AI/ML technologies, driven primarily by the need for greater efficiency, quality control, and regulatory compliance. Recent market analyses project that the global market for AI in pharmaceuticals will grow at a CAGR of 28.5% through 2027, reaching over $5.8 billion. This growth is expected to be particularly pronounced in GMP environments, where process control and decision-making applications are becoming increasingly critical.

Manufacturing efficiency remains a primary market driver, with pharmaceutical companies seeking AI solutions that can optimize production processes, reduce downtime, and minimize waste. Studies show that AI-enabled predictive maintenance alone can reduce machine downtime by up to 30% and extend equipment life by 20-40%, representing substantial cost savings in an industry where production interruptions can cost millions per day.

Quality assurance represents another significant market segment, with demand for real-time monitoring and anomaly detection systems growing at 32% annually. Pharmaceutical manufacturers are increasingly looking for AI tools that can detect subtle deviations in production parameters before they result in quality issues, thereby reducing batch rejections and recalls, which typically cost between $10 million and $30 million per incident.

Regulatory compliance is perhaps the most compelling market driver. With the FDA and EMA increasingly acknowledging the role of advanced analytics in pharmaceutical manufacturing, companies are investing heavily in AI/ML systems that can demonstrate consistent compliance with GMP standards. Market research indicates that approximately 65% of pharmaceutical manufacturers cite regulatory compliance as their primary motivation for AI/ML adoption.

Regional analysis reveals varying adoption rates, with North America leading at 42% market share, followed by Europe at 28% and Asia-Pacific at 22%. However, the Asia-Pacific region is experiencing the fastest growth rate at 34% annually, driven by rapid industrialization and increasing regulatory sophistication in countries like China and India.

By application segment, process optimization represents 36% of the market, quality control 29%, predictive maintenance 18%, and supply chain optimization 17%. The fastest-growing application is real-time decision support, which is expanding at 38% annually as manufacturers seek more responsive production environments.

Customer surveys indicate that pharmaceutical manufacturers are increasingly prioritizing AI/ML solutions that offer clear validation pathways and transparent decision-making processes, reflecting the industry's need to balance innovation with regulatory expectations in GMP environments.

Current Regulatory Landscape and Technical Challenges

The regulatory landscape for AI/ML tools in GMP environments is still evolving, with various regulatory bodies developing frameworks to address the unique challenges these technologies pose. The FDA has taken a lead role through its proposed regulatory framework for modifications to AI/ML-based Software as a Medical Device (SaMD), which emphasizes a "total product lifecycle" approach. Although written for medical devices rather than drug manufacturing, this framework acknowledges the dynamic nature of AI/ML systems and proposes mechanisms for continuous monitoring and updates without compromising safety and efficacy.

In Europe, EU GMP Annex 11 (Computerised Systems) provides validation principles that, while not specific to AI/ML, apply to these technologies. The EU AI Act, adopted in 2024, introduces a risk-based approach that may classify some AI systems used in pharmaceutical manufacturing as "high-risk", depending on their intended use, which would require stringent compliance measures.

The International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use (ICH) has yet to release specific guidelines for AI/ML in GMP environments, creating a significant gap in international harmonization. This lack of standardized global regulations presents a major challenge for multinational pharmaceutical companies implementing AI/ML solutions across different jurisdictions.

Technical challenges in implementing AI/ML tools in GMP environments are multifaceted. Data integrity remains paramount, with concerns about the quality, completeness, and representativeness of training data. GMP environments demand rigorous validation of all systems, but traditional validation approaches are ill-suited for self-learning systems that continuously evolve based on new data inputs.

Explainability and transparency present another significant hurdle. Regulatory bodies increasingly require that decisions made by AI systems be interpretable by humans, particularly in critical manufacturing processes. However, many advanced AI algorithms, especially deep learning models, function as "black boxes," making their decision-making processes difficult to articulate or justify to regulators.
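For models that are linear in their inputs, one simple and fully transparent explanation is to attribute each prediction to per-feature contributions relative to a reference operating point. The sketch below assumes a hypothetical linear soft sensor with invented coefficients and feature names; it illustrates the idea only, and deep learning models would need dedicated attribution methods rather than this decomposition.

```python
from typing import Dict


def predict(coefficients: Dict[str, float], intercept: float, sample: Dict[str, float]) -> float:
    """Prediction of a linear model: intercept + sum of coefficient * input."""
    return intercept + sum(coef * sample[name] for name, coef in coefficients.items())


def explain_linear_prediction(
    coefficients: Dict[str, float],
    baseline: Dict[str, float],
    sample: Dict[str, float],
) -> Dict[str, float]:
    """Per-feature contributions coef * (x - baseline).

    For a linear model these sum exactly to predict(sample) - predict(baseline),
    so the explanation accounts for the whole change in the output.
    """
    return {name: coef * (sample[name] - baseline[name]) for name, coef in coefficients.items()}


# Hypothetical soft sensor: predicted assay (%) from two process parameters.
coefs = {"granulation_time_min": 0.4, "inlet_temp_C": -0.2}
intercept = 95.0
baseline = {"granulation_time_min": 10.0, "inlet_temp_C": 60.0}  # validated set point
sample = {"granulation_time_min": 12.0, "inlet_temp_C": 65.0}

contrib = explain_linear_prediction(coefs, baseline, sample)
# Largest contributions first, so reviewers see the main drivers of the deviation.
for name, value in sorted(contrib.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

Because the contributions add up to the full change in prediction, a reviewer can check the explanation arithmetically rather than taking it on trust.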

System validation and change control pose unique challenges for AI/ML systems that learn and adapt over time. Traditional validation paradigms assume static systems, whereas AI/ML systems may change their behavior as they process new data. Establishing appropriate validation protocols that accommodate this dynamic nature while maintaining compliance with GMP principles remains problematic.
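A common way to reconcile adaptive models with traditional change control is to deploy a "locked" model and treat any change to its parameters as a change requiring review and revalidation. A minimal sketch, assuming the model's parameters can be serialized to JSON: a SHA-256 fingerprint is recorded at release, and the model is blocked if its current fingerprint no longer matches. The function names, exception class, and parameter structure are hypothetical.

```python
import hashlib
import json


def model_fingerprint(params: dict) -> str:
    """Return a SHA-256 digest of the model parameters in canonical JSON form."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ChangeControlError(RuntimeError):
    """Raised when a deployed model differs from its validated version."""


def check_against_validated(params: dict, validated_fingerprint: str) -> None:
    """Block use of a model whose parameters no longer match the validated release."""
    current = model_fingerprint(params)
    if current != validated_fingerprint:
        raise ChangeControlError(
            f"Model fingerprint {current[:12]} does not match validated "
            f"{validated_fingerprint[:12]}; route through change control."
        )


# Fingerprint recorded in a (hypothetical) validation report at release.
validated_params = {"version": "1.0.0", "weights": [0.4, -0.2], "bias": 95.0}
released = model_fingerprint(validated_params)

check_against_validated(validated_params, released)  # passes silently

retrained = dict(validated_params, weights=[0.41, -0.2])  # e.g. after retraining
try:
    check_against_validated(retrained, released)
except ChangeControlError as err:
    print(err)
```

Canonical JSON (sorted keys, fixed separators) keeps the fingerprint stable across runs, so only a real parameter change alters it.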

Cybersecurity vulnerabilities represent another critical concern, as AI/ML systems may be susceptible to adversarial attacks or data poisoning that could compromise product quality or patient safety. Implementing robust security measures without impeding system performance requires careful balance.
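One basic, and by itself insufficient, safeguard against manipulated or corrupted inputs is to refuse to score data that fall outside the ranges over which the model was validated. The sketch below assumes hypothetical input names and validated ranges; it does not protect against adversarial perturbations that stay within range, nor against poisoning of the training data, which need separate controls.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical validated operating ranges (low, high) for each model input.
VALIDATED_RANGES: Dict[str, Tuple[float, float]] = {
    "inlet_temp_C": (55.0, 70.0),
    "granulation_time_min": (8.0, 14.0),
}


def screen_inputs(sample: Dict[str, float]) -> List[str]:
    """Return a list of problems; an empty list means the sample may be scored."""
    problems = []
    for name, (low, high) in VALIDATED_RANGES.items():
        if name not in sample:
            problems.append(f"{name}: missing")
            continue
        value = sample[name]
        if not math.isfinite(value):
            problems.append(f"{name}: non-finite value {value}")
        elif not low <= value <= high:
            problems.append(f"{name}: {value} outside validated range [{low}, {high}]")
    # Inputs the model was never validated on are rejected rather than ignored.
    for name in sorted(set(sample) - set(VALIDATED_RANGES)):
        problems.append(f"{name}: unexpected input")
    return problems


print(screen_inputs({"inlet_temp_C": 62.0, "granulation_time_min": 10.0}))  # []
print(screen_inputs({"inlet_temp_C": 90.0, "granulation_time_min": float("nan")}))
```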

Current Validation Approaches for AI/ML in GMP Settings

01 Regulatory frameworks for AI/ML in healthcare

Regulatory bodies are establishing frameworks to evaluate and approve AI/ML tools in healthcare settings. These frameworks focus on ensuring safety, efficacy, and ethical use of AI technologies in medical applications. Key considerations include validation protocols, clinical performance metrics, and risk management strategies for AI-based diagnostic and therapeutic tools. Regulatory expectations emphasize transparency in algorithm development and continuous monitoring of AI systems after deployment.

- Regulatory compliance frameworks for AI/ML in healthcare: Regulatory bodies have established specific frameworks for AI/ML tools used in healthcare applications. These frameworks address validation requirements, clinical evaluation protocols, and risk management strategies for AI-based medical devices. They include guidelines for demonstrating safety and efficacy through appropriate clinical studies and technical documentation, as well as requirements for continuous monitoring and updating of AI algorithms in medical applications.

- Explainability and transparency requirements for AI systems: Regulatory expectations increasingly emphasize the need for explainable AI, requiring developers to provide transparency in how AI/ML tools make decisions. This includes documenting model architecture, training methodologies, and decision-making processes. Regulators expect AI systems to provide interpretable outputs, especially in high-risk applications, so that users and regulators can understand how conclusions are reached and accountability for automated decisions is maintained.

- Data privacy and security standards for AI/ML applications: Regulatory frameworks establish strict standards for data privacy and security in AI/ML tools, particularly regarding the collection, storage, and processing of sensitive information. These regulations require robust data protection measures, including anonymization techniques, secure data transmission protocols, and consent management systems. Compliance with data protection laws such as GDPR and HIPAA is mandatory where they apply, with specific provisions addressing the unique challenges posed by AI systems that process large volumes of personal data.

- Validation and testing requirements for AI/ML algorithms: Regulatory bodies are defining validation and testing expectations for AI/ML algorithms to ensure reliability and performance. These include comprehensive testing across diverse datasets, validation of algorithm performance against predefined metrics, and demonstration of robustness against adversarial attacks. Regulators expect documentation of testing methodologies, performance benchmarks, and limitations of the AI systems, with particular emphasis on validating the generalizability of algorithms across different populations and use cases.

- Ongoing monitoring and update management for AI systems: Regulatory expectations include requirements for continuous monitoring and management of AI/ML systems throughout their lifecycle. This involves establishing processes for detecting and addressing performance drift, implementing controlled update mechanisms, and maintaining audit trails of system changes. Regulations mandate post-market surveillance systems to track real-world performance, with specific provisions for reporting adverse events and implementing corrective actions when necessary. These requirements ensure that AI systems remain safe and effective as they evolve over time.
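Validating algorithm performance against predefined metrics can be illustrated with k-fold cross-validation checked against an acceptance criterion fixed before testing. This sketch assumes a one-variable least-squares model, synthetic data, interleaved folds, and a hypothetical limit of an RMSE of at most 0.5 in every fold; actual metrics and limits would come from the validation protocol.

```python
import math
from typing import List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def k_fold_rmse(xs: List[float], ys: List[float], k: int) -> List[float]:
    """Held-out RMSE for each of k interleaved folds (index i goes to fold i % k)."""
    scores = []
    for fold in range(k):
        test_idx = [i for i in range(len(xs)) if i % k == fold]
        train_idx = [i for i in range(len(xs)) if i % k != fold]
        a, b = fit_line([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        sq_errors = [(ys[i] - (a * xs[i] + b)) ** 2 for i in test_idx]
        scores.append(math.sqrt(sum(sq_errors) / len(sq_errors)))
    return scores


# Hypothetical acceptance criterion, fixed in the validation protocol before testing.
MAX_FOLD_RMSE = 0.5

# Synthetic data: y = 2x + 1 with alternating +/-0.1 "measurement noise".
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 + (0.1 if i % 2 else -0.1) for i, x in enumerate(xs)]

fold_scores = k_fold_rmse(xs, ys, k=5)
passed = all(score <= MAX_FOLD_RMSE for score in fold_scores)
print([round(s, 3) for s in fold_scores], "PASS" if passed else "FAIL")
```

Requiring every fold, not just the average, to meet the limit is a deliberately conservative choice; a protocol could equally specify a mean and a worst-case bound.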

02 Data privacy and security compliance for AI systems

AI/ML tools must comply with stringent data privacy and security regulations across different jurisdictions. Regulatory expectations include proper data anonymization techniques, secure data storage protocols, and transparent data handling practices. AI developers must implement measures to protect sensitive information while maintaining the utility of training datasets. Compliance frameworks require documentation of data governance policies and regular security assessments of AI systems that process personal or sensitive information.

03 Explainability and transparency requirements

Regulatory bodies increasingly require AI/ML tools to provide explainable outputs and transparent decision-making processes. This includes documentation of model architecture, training methodologies, and performance limitations. AI systems, particularly those used in high-risk applications, must be able to provide understandable explanations for their recommendations or decisions. Regulations emphasize the importance of human oversight and the ability to audit AI systems to ensure accountability and trust in automated processes.

04 Validation and performance standards for AI/ML tools

Regulatory expectations include rigorous validation protocols and performance standards for AI/ML tools before market approval. These standards encompass requirements for demonstrating statistical validity, clinical relevance, and robustness across diverse populations. AI developers must provide evidence of appropriate testing methodologies, including cross-validation techniques and performance metrics relevant to the intended use. Continuous monitoring and periodic re-validation are expected to ensure ongoing performance as data distributions and clinical practices evolve.

05 Lifecycle management and post-market surveillance

Regulatory frameworks require comprehensive lifecycle management strategies for AI/ML tools, including post-market surveillance and update protocols. Developers must establish processes for monitoring real-world performance, detecting and addressing algorithmic drift, and implementing version control for model updates. Regulatory expectations include predefined protocols for reporting adverse events related to AI systems and mechanisms for implementing corrective actions. Documentation of change management procedures and impact assessments for algorithm modifications is an essential compliance requirement.
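Detecting algorithmic drift usually means comparing the distribution of recent production data with the data the model was validated on. The sketch below uses the Population Stability Index (PSI), one of several possible drift statistics and not one prescribed by the frameworks described here; the bin edges and data are hypothetical, and the 0.25 threshold is a widely quoted industry rule of thumb rather than a regulatory limit.

```python
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values falling in each bin; edges are sorted interior cut points.

    Empty bins get a small floor (eps) so the logarithm in the PSI stays finite.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v > e for e in edges)] += 1  # bin index = number of edges below v
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(reference, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


PSI_ACTION = 0.25  # common rule of thumb, not a regulatory limit

# Hypothetical data: a model input during validation vs. recent production.
reference = [float(i % 10) for i in range(100)]
shifted = [v + 3.0 for v in reference]
edges = [2.5, 5.0, 7.5]

for label, data in (("unchanged", reference), ("shifted", shifted)):
    psi = population_stability_index(reference, data, edges)
    status = "investigate" if psi > PSI_ACTION else "stable"
    print(f"{label}: PSI={psi:.3f} ({status})")
```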

Key Stakeholders in Pharmaceutical AI/ML Implementation

The AI/ML regulatory landscape in GMP environments is evolving rapidly, currently in a growth phase with increasing adoption across pharmaceutical and healthcare sectors. The market is expanding significantly as companies integrate AI tools into manufacturing processes, quality control, and decision-making systems. Technology maturity varies considerably among key players: established automation leaders like Siemens, Honeywell, and Fisher-Rosemount (Emerson) offer mature GMP-compliant solutions, while specialized AI firms such as DataRobot, UiPath, and Retrocausal are developing targeted applications. Pharmaceutical companies like Regeneron and technology consultancies including Accenture are actively implementing AI/ML solutions while navigating complex regulatory frameworks. Commissioning Agents and AspenTech provide specialized validation expertise critical for GMP compliance in this emerging field.

Fisher-Rosemount Systems, Inc.

Accenture Global Solutions Ltd.

Critical Technical Standards and Regulatory Guidance

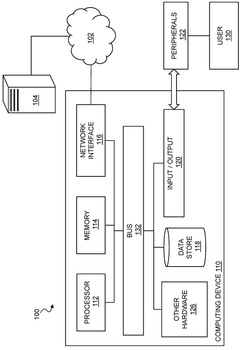

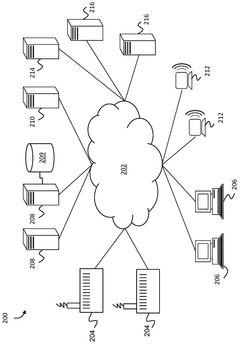

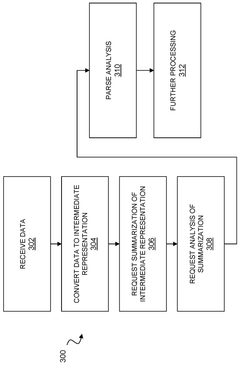

- A system that converts manufacturing data into near-natural-language representations, allowing inference engines and large language models to generate analyses, recommendations, and solutions; it also incorporates sensor data and user descriptions to provide actionable insights for process improvement.
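Purely to illustrate what a "near natural language" representation of manufacturing data might look like, the sketch below renders hypothetical sensor readings and an operator's free-text note as plain sentences that could be passed to a language model. The tag names, ranges, and sentence template are invented, and this is not the patented system's actual method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    tag: str          # hypothetical instrument tag
    description: str  # plain-language name of the measured quantity
    value: float
    unit: str
    low: float        # lower limit of the normal operating range
    high: float       # upper limit of the normal operating range


def to_sentence(r: Reading) -> str:
    """Render one reading, including its position relative to the normal range."""
    if r.value < r.low:
        status = f"below its normal range of {r.low} to {r.high} {r.unit}"
    elif r.value > r.high:
        status = f"above its normal range of {r.low} to {r.high} {r.unit}"
    else:
        status = "within its normal range"
    return f"The {r.description} ({r.tag}) is {r.value} {r.unit}, {status}."


def describe(readings: List[Reading], operator_note: str = "") -> str:
    """Combine sensor sentences and an optional user description into one text."""
    parts = [to_sentence(r) for r in readings]
    if operator_note:
        parts.append(f"Operator note: {operator_note}")
    return " ".join(parts)


readings = [
    Reading("TT-101", "dryer inlet temperature", 72.5, "degC", 55.0, 70.0),
    Reading("PT-204", "granulator pressure", 1.2, "bar", 1.0, 1.5),
]
print(describe(readings, "Noticed condensation on the dryer window."))
```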

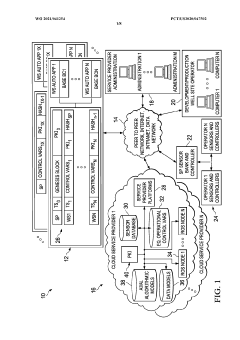

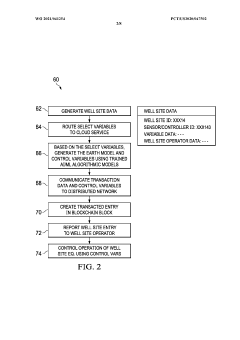

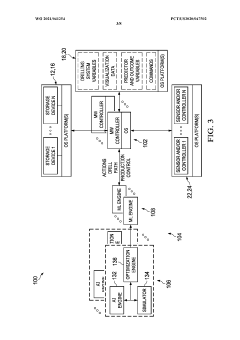

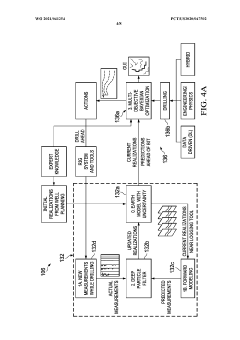

- A system integrating AI/ML engines, predictive engines, and blockchain technology for secure data storage and management, utilizing distributed computing to handle Big Data analytics efficiently, and optimizing earth model variables for controlling well site operations.

Risk Management Frameworks for AI/ML in GMP Processes

Effective risk management frameworks are essential for the successful implementation of AI/ML tools in GMP environments. These frameworks must align with regulatory expectations while addressing the unique challenges posed by AI/ML technologies. The ICH Q9 principles provide a foundation for risk management in pharmaceutical manufacturing, but require adaptation to accommodate the dynamic nature of AI/ML systems.

A comprehensive risk management framework for AI/ML in GMP processes should incorporate a lifecycle approach, beginning with risk identification during the design phase. This includes evaluating data quality, algorithm selection, and potential failure modes. Organizations must document their risk assessment methodologies, clearly defining risk acceptance criteria specific to AI/ML applications in process control and decision-making contexts.
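ICH Q9 lists failure mode and effects analysis (FMEA) among its example risk management tools. A minimal sketch of scoring AI/ML failure modes with a risk priority number (RPN = severity × occurrence × detectability) against documented acceptance criteria follows; the 1 to 10 scales, the RPN limit of 100, the severity override, and the example failure modes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    severity: int       # 1 (negligible) to 10 (potential patient harm)
    occurrence: int     # 1 (remote) to 10 (frequent)
    detectability: int  # 1 (almost certainly detected) to 10 (undetectable)

    @property
    def rpn(self) -> int:
        """Risk priority number."""
        return self.severity * self.occurrence * self.detectability


RPN_LIMIT = 100     # hypothetical acceptance criterion
SEVERITY_LIMIT = 9  # hypothetical: severity at or above this always needs mitigation


def needs_mitigation(fm: FailureMode) -> bool:
    return fm.rpn > RPN_LIMIT or fm.severity >= SEVERITY_LIMIT


register = [
    FailureMode("Training data not representative of new raw-material lot", 7, 4, 6),
    FailureMode("Model output not aligned with batch record timestamp", 4, 3, 2),
    FailureMode("Silent model drift leads to release of out-of-spec batch", 10, 2, 5),
]

for fm in sorted(register, key=lambda f: f.rpn, reverse=True):
    flag = "MITIGATE" if needs_mitigation(fm) else "accept"
    print(f"{fm.rpn:4d}  {flag:8s}  {fm.description}")
```

The severity override means a failure mode that could harm patients is always mitigated, even when a low occurrence score keeps its RPN at or below the limit.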

Risk mitigation strategies should be proportionate to the identified risks, with higher scrutiny applied to AI/ML systems directly impacting critical quality attributes or patient safety. Continuous monitoring mechanisms must be established to detect performance drift, data anomalies, and unexpected behaviors in AI/ML models. These monitoring systems should include predefined alert thresholds and escalation procedures.
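Predefined alert thresholds with escalation can be sketched as follows, assuming the monitored quantity is the model's absolute prediction error against a reference laboratory result. The limits use the mean plus two and three standard deviations of validation-run errors, a common control-chart convention for warning and action limits; the data, level names, and escalation steps are hypothetical, and a real baseline would need far more than eight points.

```python
from enum import Enum
from statistics import mean, stdev
from typing import Sequence, Tuple


class Level(Enum):
    NORMAL = "no action"
    ALERT = "notify process owner and model owner; increase review frequency"
    ACTION = "suspend automated use, revert to manual control, open deviation, inform QA"


def control_limits(baseline: Sequence[float]) -> Tuple[float, float]:
    """Upper alert and action limits at mean + 2 and mean + 3 standard deviations."""
    m, s = mean(baseline), stdev(baseline)
    return m + 2 * s, m + 3 * s


def classify(value: float, alert_limit: float, action_limit: float) -> Level:
    if value > action_limit:
        return Level.ACTION
    if value > alert_limit:
        return Level.ALERT
    return Level.NORMAL


# Hypothetical absolute prediction errors observed during validation runs.
validation_errors = [0.20, 0.25, 0.18, 0.22, 0.30, 0.24, 0.21, 0.19]
alert, action = control_limits(validation_errors)

for batch_error in (0.25, 0.32, 0.45):
    level = classify(batch_error, alert, action)
    print(f"error={batch_error:.2f} -> {level.name}: {level.value}")
```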

Validation protocols for AI/ML systems in GMP environments should incorporate risk-based testing approaches, focusing resources on high-risk components. The validation strategy must address both initial validation and ongoing performance verification, with particular attention to change management processes for model updates and retraining events.

Documentation requirements within the risk management framework should include detailed risk registers, mitigation plans, and verification activities. Traceability matrices linking identified risks to control measures and testing protocols provide evidence of a systematic approach to risk management, which regulators increasingly expect to see.
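A traceability matrix can be checked mechanically for gaps. This sketch assumes a hypothetical register in which each risk maps to control measures and each control maps to verification tests, and it reports any risk without a control and any control without a test.

```python
from typing import Dict, List

# Hypothetical register: risk ID -> control IDs, control ID -> verification test IDs.
risk_to_controls: Dict[str, List[str]] = {
    "R-01": ["C-01"],          # unrepresentative training data
    "R-02": ["C-02", "C-03"],  # undetected model drift
    "R-03": [],                # unauthorized model change
}
control_to_tests: Dict[str, List[str]] = {
    "C-01": ["T-01"],          # data acceptance review
    "C-02": ["T-02", "T-03"],  # drift monitoring
    "C-03": [],                # periodic re-validation
}


def trace_gaps(risks: Dict[str, List[str]], controls: Dict[str, List[str]]) -> List[str]:
    """List every risk without a control and every control without a verification test."""
    gaps = []
    for risk, control_ids in risks.items():
        if not control_ids:
            gaps.append(f"{risk}: no control measure")
        for control in control_ids:
            if not controls.get(control):  # missing entry or empty test list
                gaps.append(f"{risk} -> {control}: no verification test")
    return gaps


for gap in trace_gaps(risk_to_controls, control_to_tests):
    print(gap)
```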

Cross-functional governance structures are becoming a regulatory expectation for AI/ML implementation in GMP environments. These structures should include representation from quality, manufacturing, IT, and data science disciplines to ensure comprehensive risk evaluation. Regular risk reviews should be conducted, with the frequency determined by the criticality of the AI/ML application and its potential impact on product quality.

Regulatory agencies are increasingly emphasizing the importance of explainability in AI/ML risk management frameworks. Organizations must demonstrate an understanding of how their AI/ML systems make decisions, particularly for high-risk applications. This includes maintaining appropriate documentation of model architecture, training methodologies, and performance metrics to support regulatory inspections and audits.

Data Integrity and Audit Trail Requirements

Data integrity and audit trail requirements are critical components of AI/ML systems deployed in GMP environments. Regulatory bodies, including FDA, EMA, and WHO, have established stringent data integrity expectations for how data is collected, processed, stored, and protected, and these expectations apply equally when artificial intelligence tools are used for process control and decision-making. Meeting them ensures that AI-driven decisions maintain the same level of reliability and traceability as those made by traditional systems.

The ALCOA+ principles (Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available) form the foundation of data integrity requirements for AI/ML systems in pharmaceutical manufacturing. When implementing machine learning algorithms, organizations must ensure that training data sets maintain complete audit trails documenting their origin, preprocessing steps, and any transformations applied. This documentation must be maintained throughout the entire data lifecycle.
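Documenting the origin and every transformation of a training dataset can be sketched as a lineage log that records who did what, when, and a SHA-256 hash of the data before and after each step. The class, field names, and example data below are hypothetical; the transforms return new rows so the original data stay unmodified.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable, List

Rows = List[dict]


def digest(rows: Rows) -> str:
    """SHA-256 of the dataset in canonical JSON form."""
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode("utf-8")).hexdigest()


def _now() -> str:
    return datetime.now(timezone.utc).isoformat()


class LineageLog:
    """Records the origin of a training dataset and every transformation applied to it."""

    def __init__(self, source: str, user: str, rows: Rows) -> None:
        self.rows = rows
        self.steps = [{"step": "ingest", "source": source, "user": user,
                       "utc": _now(), "n_rows": len(rows), "sha256": digest(rows)}]

    def apply(self, name: str, user: str, transform: Callable[[Rows], Rows]) -> None:
        """Apply a transform that returns new rows (inputs are not modified) and log it."""
        input_hash = digest(self.rows)
        self.rows = transform(self.rows)
        self.steps.append({"step": name, "user": user, "utc": _now(),
                           "n_rows": len(self.rows), "input_sha256": input_hash,
                           "sha256": digest(self.rows)})


raw = [{"batch": "B001", "temp_C": 61.2}, {"batch": "B002", "temp_C": 60.8}]
log = LineageLog("process historian export (hypothetical)", "jdoe", raw)
log.apply("convert temperature to kelvin", "jdoe",
          lambda rows: [{"batch": r["batch"], "temp_K": round(r["temp_C"] + 273.15, 2)}
                        for r in rows])
print(json.dumps(log.steps, indent=2))
```

Chaining each step's input hash to the previous step's output hash lets a reviewer confirm that no undocumented change happened between steps.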

For AI systems making GMP-critical decisions, comprehensive audit trail mechanisms must capture all system activities, including algorithm training sessions, model updates, parameter adjustments, and decision outputs. These audit trails must be time-stamped, user-attributed, and protected from unauthorized modification. Regulators also expect the rationale behind AI-driven decisions to remain transparent and traceable, even for complex deep learning models where interpretability presents challenges.
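One technique for making audit trail tampering detectable is hash chaining: each entry stores the hash of the previous entry, so editing any past record breaks verification. The sketch below is a minimal illustration under that assumption; the class and field names are hypothetical, and a production system would also need access control, a trusted time source, and protected storage, since hash chaining detects but does not prevent modification.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log where each entry embeds the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _hash(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user: str, action: str, details: dict) -> dict:
        """Append a time-stamped, user-attributed entry linked to its predecessor."""
        entry = {
            "utc": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry makes this return False."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._hash(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("jdoe", "model_training", {"model": "assay-softsensor", "version": "1.1.0"})
trail.record("asmith", "decision_output", {"batch": "B001", "decision": "hold", "score": 0.82})
print(trail.verify())  # True

trail.entries[1]["details"]["decision"] = "release"  # simulated unauthorized edit
print(trail.verify())  # False
```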

Data used for AI model training and validation must be stored in their original form with appropriate controls preventing unauthorized alterations. When data transformations occur during preprocessing, these operations must be documented with justifications for any data exclusions or modifications. Regulatory bodies increasingly expect technical controls that can detect and prevent data manipulation that might compromise model integrity.
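Documenting data exclusions with justifications can be built into preprocessing itself. In the sketch below, each hypothetical exclusion rule carries an ID and a justification, excluded rows are recorded together with the rules that removed them, and the original list is never modified.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class ExclusionRule:
    rule_id: str
    justification: str
    excludes: Callable[[dict], bool]  # returns True if the row must be excluded


def apply_exclusions(rows: List[dict], rules: List[ExclusionRule]) -> Tuple[List[dict], List[dict]]:
    """Split rows into kept and excluded without modifying the original list.

    Every excluded row is recorded with the IDs and justifications of the rules that removed it.
    """
    kept, excluded = [], []
    for row in rows:
        hits = [rule for rule in rules if rule.excludes(row)]
        if hits:
            excluded.append({
                "row": dict(row),
                "rule_ids": [rule.rule_id for rule in hits],
                "justification": "; ".join(rule.justification for rule in hits),
            })
        else:
            kept.append(row)
    return kept, excluded


rules = [
    ExclusionRule("EX-01", "Sensor flagged faulty in calibration record",
                  lambda r: not r["sensor_ok"]),
    ExclusionRule("EX-02", "Batch under open deviation investigation",
                  lambda r: r["batch"] in {"B003"}),
]
original = [
    {"batch": "B001", "sensor_ok": True, "yield_pct": 97.1},
    {"batch": "B002", "sensor_ok": False, "yield_pct": 88.4},
    {"batch": "B003", "sensor_ok": True, "yield_pct": 91.0},
]
kept, excluded = apply_exclusions(original, rules)
for record in excluded:
    print(record["row"]["batch"], record["rule_ids"], record["justification"])
```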

Review processes for AI-generated data require human oversight mechanisms with clearly defined roles and responsibilities. The system must maintain records of who reviewed AI outputs, when reviews occurred, and what actions resulted. For continuous learning systems that adapt over time, special audit trail requirements apply to document evolutionary changes in algorithm behavior and performance metrics.

Risk-based approaches to data integrity should be implemented, with more stringent controls applied to high-risk AI applications directly impacting product quality or patient safety. Periodic data integrity assessments must evaluate the entire AI/ML ecosystem, including interfaces with other GMP systems, data transfer processes, and backup procedures. These assessments should verify that audit trails remain complete and that data integrity controls function as intended throughout the AI system lifecycle.