Advances In SLAM For Humanoid Robots

SEP 12, 2025 | 9 MIN READ

SLAM Evolution and Humanoid Navigation Goals

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly over the past three decades, transforming from theoretical concepts to practical applications across various robotic platforms. For humanoid robots specifically, SLAM represents a critical enabling technology that allows these complex systems to navigate and interact within dynamic environments. The evolution of SLAM algorithms has progressed through several distinct phases, beginning with filter-based approaches in the 1990s, advancing to graph-based optimization methods in the 2000s, and more recently incorporating deep learning techniques that enhance perception capabilities.

The fundamental goal of SLAM in humanoid robotics extends beyond traditional mobile robot navigation. Humanoids require not only accurate environmental mapping and self-localization but also specialized capabilities that account for their bipedal locomotion, dynamic stability, and human-like interaction requirements. These unique challenges have driven specialized research directions in humanoid-specific SLAM implementations that must handle the complex kinematics and dynamics of bipedal systems.

Current technological objectives in humanoid SLAM focus on achieving real-time performance with limited computational resources, handling dynamic environments with moving objects, and integrating multi-modal sensory inputs. Visual-inertial SLAM has emerged as particularly relevant for humanoids, combining camera data with inertial measurements to compensate for the characteristic motion patterns of bipedal walking. Additionally, researchers are pursuing robust operation under challenging conditions such as varying lighting, textureless surfaces, and rapid viewpoint changes that occur during humanoid movement.

The integration of semantic understanding represents another critical goal, enabling humanoids to not only map geometric structures but also recognize and categorize objects and spaces. This semantic layer facilitates higher-level planning and human-robot interaction, allowing humanoids to understand instructions like "go to the kitchen" rather than just coordinate-based commands. Such capabilities are essential for domestic and service applications where humanoids must operate in human-centric environments.

Looking forward, the field is trending toward unified frameworks that combine SLAM with other critical humanoid functions such as whole-body control, manipulation planning, and human interaction. The ultimate vision involves creating humanoid robots that can seamlessly navigate unfamiliar environments, adapt to changes, and perform complex tasks while maintaining stable locomotion. This requires SLAM systems that can provide continuous, accurate spatial awareness while operating within the computational and power constraints of autonomous humanoid platforms.

Market Analysis for Humanoid SLAM Applications

The global market for humanoid SLAM (Simultaneous Localization and Mapping) technologies is experiencing significant growth, driven by increasing adoption of service robots across multiple industries. Current market valuations indicate the humanoid robot sector is approaching $5 billion, with SLAM-specific technologies representing approximately 15-20% of this value chain.

The primary market segments for humanoid SLAM applications include healthcare assistance, retail customer service, industrial inspection, and domestic service robots. Healthcare represents the fastest growing segment with 27% annual growth, as humanoid robots with advanced spatial awareness capabilities are increasingly deployed in hospitals and care facilities for patient monitoring and medication delivery.

Commercial adoption patterns reveal that industries prioritize SLAM solutions offering sub-centimeter accuracy with real-time processing capabilities. Market research indicates that 68% of enterprise customers consider robust SLAM functionality as "critical" or "very important" when evaluating humanoid robot platforms, highlighting the technology's role as a key differentiator in purchase decisions.

Regional analysis shows North America leading market share at 38%, followed by Asia-Pacific at 34% and Europe at 22%. However, the Asia-Pacific region demonstrates the highest growth rate at 31% annually, driven by aggressive automation initiatives in Japan, South Korea, and China. These countries have established national robotics strategies with specific provisions for humanoid development.

Customer demand is increasingly focused on SLAM systems that can operate in dynamic environments with moving obstacles, a particular challenge for humanoid platforms that must maintain balance while navigating. Market surveys indicate 73% of potential enterprise adopters cite "operation in crowded spaces" as a primary requirement, while 65% emphasize the need for SLAM systems that function reliably in varying lighting conditions.

The economic value proposition of humanoid SLAM is strengthening as implementation costs decrease. The average return on investment period for humanoid robots with advanced SLAM capabilities has shortened from 4.2 years in 2018 to 2.7 years currently, making these systems increasingly attractive for medium-sized enterprises previously priced out of the market.

Market forecasts project the humanoid SLAM segment to grow at a compound annual rate of 24% over the next five years, outpacing the broader robotics market. This acceleration is attributed to breakthroughs in visual-inertial odometry and deep learning-based scene understanding that have dramatically improved humanoid navigation capabilities in unstructured environments.
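As a rough check on what a 24% compound annual rate implies, the sketch below assumes the rate holds constant for all five years. The symbols V_0 (the segment's value today) and V_5 (its value after five years) are introduced here for illustration and are not figures from the forecast.

```latex
V_5 = V_0 \,(1 + 0.24)^5 \approx 2.93\, V_0
```

Under that assumption, the segment would be a little under three times its current size at the end of the forecast window.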

Current SLAM Challenges in Humanoid Robotics

Despite significant advancements in SLAM (Simultaneous Localization and Mapping) technology, humanoid robots face unique challenges that distinguish them from other robotic platforms. The bipedal locomotion of humanoid robots introduces complex motion dynamics that conventional SLAM algorithms struggle to handle effectively. During walking, the robot's body experiences oscillations and impacts that create noise in sensor readings, complicating the accurate tracking of position and orientation.

Visual SLAM systems in humanoids must contend with rapidly changing viewpoints as the robot moves through environments. The head-mounted cameras typically experience more erratic motion patterns compared to wheeled robots, leading to motion blur, feature tracking failures, and loop closure difficulties. These issues are particularly pronounced during dynamic movements like climbing stairs or navigating uneven terrain.

Real-time processing constraints present another significant hurdle. Humanoid robots require immediate environmental awareness to maintain balance and execute complex tasks. However, the computational demands of SLAM algorithms often conflict with the limited onboard processing capabilities of humanoids, which must simultaneously manage locomotion control, task planning, and human interaction functionalities.

Map representation poses unique challenges for humanoid navigation. While traditional SLAM systems focus on 2D floor plans or basic 3D point clouds, humanoids require semantic understanding of their environment. They need to identify traversable surfaces, recognize obstacles at various height levels, and understand environmental features like stairs, doors, and furniture to navigate effectively as bipedal systems.
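One simple way to picture "traversable surfaces" is a height grid in which a biped can only move between neighbouring cells whose height difference stays within its step limit. The sketch below is illustrative only: the function name `reachable_cells`, the 0.15 m step limit, and the sample grid are assumptions, not values from any particular humanoid platform.

```python
from collections import deque

def reachable_cells(heights, start, max_step=0.15):
    """Breadth-first search over a height grid (metres).

    A move between 4-connected cells is allowed only when the height
    change is at most max_step, a stand-in for the robot's step limit.
    """
    rows, cols = len(heights), len(heights[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or (nr, nc) in seen:
                continue
            if abs(heights[nr][nc] - heights[r][c]) <= max_step:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen

# Hypothetical 3x4 patch: a gentle ramp along the top and right edges,
# plus a 0.9 m obstacle that no single step can climb.
grid = [
    [0.0, 0.0, 0.1, 0.2],
    [0.0, 0.0, 0.9, 0.3],
    [0.0, 0.0, 0.9, 0.4],
]
cells = reachable_cells(grid, start=(0, 0))
```

Here the ramp cells are reachable in small increments while the two obstacle cells are not, which is the kind of distinction a flat 2D floor plan cannot express.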

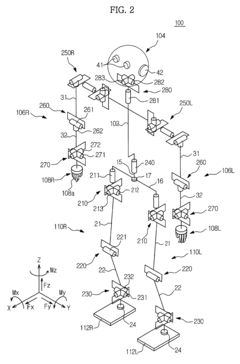

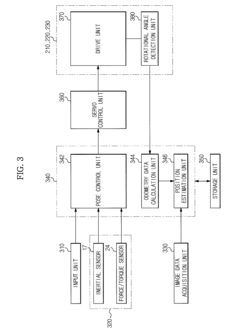

Multi-sensor fusion complexity increases in humanoid platforms. Integrating data from IMUs, cameras, depth sensors, joint encoders, and force/torque sensors requires sophisticated calibration and synchronization. The dynamic motion of humanoids frequently disrupts sensor alignment, necessitating continuous recalibration during operation.
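Synchronization is often the first practical hurdle: sensors run at different rates, so each camera frame has to be paired with the closest inertial sample. A minimal sketch of nearest-timestamp matching follows; the function name `match_nearest`, the microsecond timestamps, and the 3 ms tolerance are all assumptions chosen for illustration.

```python
from bisect import bisect_left

def match_nearest(camera_ts, imu_ts, tol):
    """Pair each camera timestamp with the index of the nearest IMU sample.

    imu_ts must be sorted ascending. Returns None for a frame when no
    IMU sample lies within tol (same time unit as the timestamps).
    """
    matches = []
    for t in camera_ts:
        i = bisect_left(imu_ts, t)
        # Only the samples just before and just after t can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(imu_ts)]
        best = min(candidates, key=lambda j: abs(imu_ts[j] - t), default=None)
        if best is not None and abs(imu_ts[best] - t) <= tol:
            matches.append(best)
        else:
            matches.append(None)
    return matches

# Hypothetical timestamps in microseconds: IMU at 200 Hz, three camera frames.
imu = [0, 5000, 10000, 15000, 20000]
cam = [4000, 12400, 50000]
pairs = match_nearest(cam, imu, tol=3000)  # [1, 2, None]
```

The last frame has no IMU sample nearby and is left unmatched rather than paired with stale data; a real pipeline would typically interpolate between samples instead of picking one.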

Dynamic environment handling remains problematic for current SLAM implementations in humanoids. Most algorithms assume static environments, but humanoids typically operate in human-populated spaces with moving objects and changing conditions. Distinguishing between environmental changes and self-motion errors becomes particularly challenging during bipedal locomotion.
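A common heuristic for the static-world problem is to predict where each tracked point should appear given the robot's own motion and flag points that disagree. The sketch below assumes a 2D robot frame, pure translation, and a trusted ego-motion estimate, which is exactly the assumption that breaks down when self-motion errors are large; `flag_dynamic` and the sample values are hypothetical.

```python
import math

def flag_dynamic(prev_pts, curr_pts, ego_dx, ego_dy, thresh):
    """Return True for each point whose motion is not explained by ego motion.

    Points are (x, y) in the robot frame. If the robot translates by
    (ego_dx, ego_dy), a static point should shift by the opposite amount.
    """
    flags = []
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        expected_x, expected_y = px - ego_dx, py - ego_dy
        residual = math.hypot(cx - expected_x, cy - expected_y)
        flags.append(residual > thresh)
    return flags

# Hypothetical observations: the robot moved 0.5 m forward along x.
prev = [(2.0, 0.0), (3.0, 1.0), (1.0, -1.0)]
curr = [(1.5, 0.0), (2.5, 1.0), (1.0, -0.5)]
dynamic = flag_dynamic(prev, curr, ego_dx=0.5, ego_dy=0.0, thresh=0.1)
# dynamic == [False, False, True]
```

Only the third point moved in a way the robot's own translation cannot explain, so it would be excluded from pose estimation in this simplified scheme.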

Scale and drift management present ongoing difficulties. As humanoids traverse larger environments, accumulated errors in position estimation become more pronounced. The absence of absolute positioning references in indoor environments exacerbates this problem, leading to significant localization drift during extended operations, which can compromise both navigation accuracy and physical stability.
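To illustrate how quickly uncorrected errors compound, the sketch below simulates dead reckoning along a straight line with a small constant heading bias added at every step. The function name, the 0.5 m step length, and the 0.001 rad per-step bias are illustrative assumptions, not measurements from a real robot.

```python
import math

def dead_reckoning_error(steps, step_len=0.5, heading_bias=0.001):
    """Position error (metres) after walking straight with a biased heading.

    The true path runs along the x axis. The estimate integrates a heading
    that picks up heading_bias radians of uncorrected error on every step.
    """
    x = y = heading = 0.0
    for _ in range(steps):
        heading += heading_bias
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
    true_x = steps * step_len
    return math.hypot(x - true_x, y)

error_50 = dead_reckoning_error(50)
error_100 = dead_reckoning_error(100)
```

Doubling the distance roughly quadruples the error in this toy model, which is why loop closure or some other external correction becomes more important as the traversed area grows.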

State-of-the-Art SLAM Solutions for Humanoids

01 Visual SLAM techniques for autonomous navigation

Visual SLAM systems use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling robust navigation even in challenging environments with varying or low lighting, repetitive visual patterns, or dynamic objects.

- SLAM integration with sensor fusion: Modern SLAM systems combine data from multiple sensors including cameras, LiDAR, IMU, and GPS to enhance mapping accuracy and robustness. This sensor fusion approach compensates for individual sensor limitations, providing more reliable localization in diverse environments. The integration enables continuous operation even when certain sensors face challenging conditions, making these systems suitable for applications ranging from robotics to augmented reality.

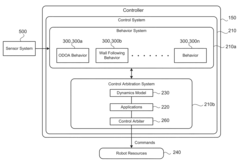

- Machine learning approaches for SLAM optimization: Machine learning algorithms are increasingly applied to enhance SLAM performance by improving feature extraction, loop closure detection, and trajectory optimization. Neural networks can be trained to recognize environments and predict spatial relationships, reducing computational requirements while increasing accuracy. These approaches enable SLAM systems to adapt to new environments and improve performance through experience, particularly beneficial for dynamic or changing settings.

- Real-time SLAM for AR/VR applications: SLAM technology enables immersive augmented and virtual reality experiences by accurately tracking device position and mapping surroundings in real-time. These systems allow virtual objects to be placed convincingly in physical space with proper occlusion and interaction capabilities. Optimizations focus on reducing latency and power consumption while maintaining tracking accuracy, essential for consumer AR/VR devices with limited computational resources.

- Edge computing solutions for distributed SLAM: Distributed SLAM architectures leverage edge computing to process sensor data across multiple devices or nodes. This approach enables collaborative mapping of large environments while reducing the computational burden on individual devices. Edge-based implementations can share map data between devices, allowing for more efficient resource utilization and enabling applications such as multi-robot coordination and large-scale mapping for smart cities or industrial facilities.

02 SLAM for robotic applications and automation

SLAM technology enables robots to navigate unknown environments by building maps while simultaneously determining their position. These systems combine sensor data from cameras, LiDAR, and other sensors to create accurate environmental representations. Applications include autonomous cleaning robots, warehouse automation systems, and industrial robots that can adapt to changing environments without human intervention.

03 LiDAR-based SLAM for precise mapping

LiDAR sensors provide high-precision distance measurements that enhance SLAM performance. These systems emit laser pulses and measure the time taken for reflections to return, creating detailed point clouds of the environment. LiDAR-based SLAM offers advantages in accuracy and reliability, particularly in environments where visual features may be limited, enabling precise mapping for autonomous vehicles and other applications requiring high-fidelity environmental awareness.

04 Fusion of multiple sensors for robust SLAM

Multi-sensor fusion approaches combine data from various sensors such as cameras, LiDAR, IMUs, and radar to overcome the limitations of single-sensor SLAM systems. By integrating complementary sensor information, these systems achieve greater robustness against environmental challenges like poor lighting, reflective surfaces, or featureless areas. Sensor fusion algorithms typically employ probabilistic methods to handle uncertainty and optimize the combined data for more accurate localization and mapping.

05 Edge computing and optimization for real-time SLAM

Implementing SLAM on resource-constrained devices requires specialized optimization techniques and edge computing approaches. These methods include algorithmic simplifications, hardware acceleration, and efficient data structures to reduce computational demands while maintaining acceptable accuracy. Edge-optimized SLAM enables real-time operation on mobile devices, drones, and other platforms with limited processing power, making the technology more accessible for consumer and commercial applications.

Leading Companies and Research Institutions in Humanoid SLAM

SLAM technology for humanoid robots is currently in a growth phase, with market size expanding rapidly as applications extend beyond research into commercial sectors. Technology maturity varies significantly across players: academic institutions like Guangdong University of Technology, Beijing Institute of Technology, and Chongqing University are driving fundamental research, while companies such as Mitsubishi Electric, Yujin Robot, and Cambricon Technologies are commercializing advanced solutions. Chinese enterprises including Amicro Semiconductor and Hyperception are developing specialized SoC chips and visual sensors for robot navigation. The competitive landscape shows a blend of established robotics manufacturers and emerging startups focusing on specialized SLAM components, with increasing integration of AI capabilities to enhance real-time mapping and navigation performance in complex environments.

Beijing Institute of Technology

Technical Solution: Beijing Institute of Technology (BIT) has developed a comprehensive SLAM framework for humanoid robots that addresses the unique challenges of bipedal platforms. Their approach, called H-SLAM (Humanoid-SLAM), integrates visual, inertial, and force-torque sensor data to maintain accurate localization during complex walking gaits and dynamic movements. BIT's system employs a novel keyframe selection strategy that accounts for the rhythmic motion patterns of humanoid locomotion, reducing motion blur and improving feature tracking reliability. Their research has produced a hierarchical mapping system that maintains both metric and topological representations, enabling efficient path planning while preserving detailed environmental information. BIT has implemented advanced loop closure techniques using bag-of-visual-words approaches combined with geometric verification to minimize drift in large environments. Their system also incorporates semantic understanding capabilities, allowing humanoid robots to recognize and interact with objects in their environment while maintaining localization.

Strengths: Strong theoretical foundation with practical implementations; excellent integration of force-feedback data unique to humanoid platforms; advanced semantic mapping capabilities.

Weaknesses: Some solutions remain in research prototype stage rather than commercial products; may require substantial computational resources for full functionality.
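The bag-of-visual-words step mentioned in the technical solution above can be pictured as comparing word-count histograms between the current frame and stored keyframes. The following is a generic sketch of that idea, not BIT's implementation: the function names, the 0.8 score threshold, and the toy word IDs are assumptions, and any candidate it returns would still need geometric verification.

```python
import math
from collections import Counter

def cosine_similarity(a, b):
    """Cosine similarity between two visual-word histograms (Counters)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def loop_candidates(query, keyframes, min_score=0.8):
    """Indices of keyframes whose histogram is similar enough to the query."""
    return [i for i, kf in enumerate(keyframes)
            if cosine_similarity(query, kf) >= min_score]

# Hypothetical histograms: keys are visual-word IDs, values are counts.
keyframes = [
    Counter({1: 3, 2: 1}),
    Counter({7: 2, 8: 2}),
    Counter({1: 3, 2: 1, 3: 1}),
]
query = Counter({1: 3, 2: 1})
candidates = loop_candidates(query, keyframes)  # [0, 2]
```

Production systems usually weight words by how distinctive they are (for example with TF-IDF) and use an inverted index rather than a linear scan; both are omitted here for brevity.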

Mitsubishi Electric Corp.

Technical Solution: Mitsubishi Electric has developed advanced SLAM solutions for humanoid robots focusing on real-time 3D mapping and localization. Their approach integrates visual-inertial odometry with LiDAR point cloud processing to create robust environmental models even in dynamic environments. The company's proprietary MELSLAM technology combines RGB-D cameras and IMU sensors with deep learning-based feature extraction to achieve centimeter-level accuracy in indoor environments. Their system employs a hierarchical mapping structure that separates local and global map representations, allowing for efficient memory usage and computational load distribution. Mitsubishi has also implemented loop closure detection using appearance-based methods combined with geometric verification to minimize drift over long trajectories. Their humanoid platforms leverage this SLAM technology for applications in factory automation, disaster response, and elderly care assistance.

Strengths: Superior integration with their industrial automation ecosystem; robust performance in structured environments; excellent sensor fusion capabilities.

Weaknesses: Higher computational requirements compared to some competitors; potentially less effective in highly unstructured or extremely dynamic environments.

Key Patents and Algorithms in Humanoid SLAM

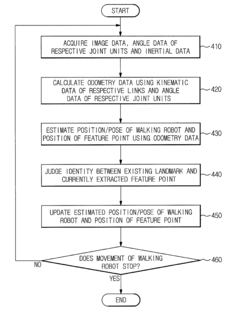

Walking robot and simultaneous localization and mapping method thereof

Patent US8873831B2 (Active)

Innovation

- Odometry data, derived from kinematic and rotational angle data, is integrated with image-based SLAM to improve the accuracy and convergence of localization. Inertial data is fused with the odometry data to further improve the precision of position estimation and mapping.
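The patent text does not spell out its fusion rule here, so the following is only a generic illustration of combining two noisy estimates of the same quantity: inverse-variance weighting, the scalar core of a Kalman update. The function name and the example numbers are assumptions.

```python
def fuse_estimates(mean_a, var_a, mean_b, var_b):
    """Inverse-variance fusion of two independent scalar estimates.

    Returns (fused_mean, fused_variance). The estimate with the smaller
    variance receives the larger weight.
    """
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused_mean = fused_var * (weight_a * mean_a + weight_b * mean_b)
    return fused_mean, fused_var

# Hypothetical forward displacement over one stride, in metres:
# leg odometry says 1.00 (variance 0.04), inertial integration says 1.20 (variance 0.01).
mean, var = fuse_estimates(1.00, 0.04, 1.20, 0.01)
# mean is about 1.16 and var about 0.008
```

The fused variance is smaller than either input variance, which is the basic reason combining odometry with inertial data can tighten a position estimate.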

Simultaneous localization and mapping for a mobile robot

Patent US9400501B2 (Active)

Innovation

- The method initializes a particle model on a controller, synchronizes sensor data with robot pose changes, and accumulates that data. Particles are updated based on localization quality using odometry and inertial measurements, a range sensor model is applied to correct occupancy probabilities, and particles are resampled to maintain localization accuracy.
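The resampling step in a particle filter can be sketched with systematic resampling, one widely used scheme. This is not taken from the patent; the function name is an assumption, and the fixed `offset` argument stands in for the uniform random draw a real filter would use so that the example is reproducible.

```python
def systematic_resample(weights, offset):
    """Pick particle indices in proportion to their weights.

    weights: non-negative particle weights (need not be normalized).
    offset:  a value in [0, 1); normally drawn at random once per resample.
    Returns a list of len(weights) indices into the particle set.
    """
    n = len(weights)
    total = sum(weights)
    indices = []
    j = 0
    cumulative = weights[0] / total
    for i in range(n):
        position = (offset + i) / n  # evenly spaced sampling points
        while position > cumulative and j < n - 1:
            j += 1
            cumulative += weights[j] / total
        indices.append(j)
    return indices

# Hypothetical weights: particle 2 explains the sensor data far better than the rest.
picked = systematic_resample([1, 1, 7, 1], offset=0.5)  # [1, 2, 2, 2]
```

The heavily weighted particle is duplicated while low-weight particles tend to drop out, which concentrates the filter on the most plausible poses.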

Hardware-Software Integration for Optimal SLAM Performance

The integration of hardware and software components represents a critical frontier in advancing SLAM capabilities for humanoid robots. Effective SLAM implementation requires a symbiotic relationship between sensing hardware and computational algorithms, with each element optimized to complement the other. Current hardware configurations typically incorporate multiple sensor types including RGB-D cameras, LiDAR systems, IMUs, and proprioceptive sensors that track joint positions and forces.

Sensor fusion frameworks have emerged as essential architectural elements, enabling the coherent integration of heterogeneous data streams. These frameworks must address varying data rates, latency issues, and synchronization challenges while maintaining computational efficiency. The ROS (Robot Operating System) middleware has become a de facto standard for integrating these diverse components, providing standardized communication protocols and transformation utilities.
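The "transformation utilities" mentioned above boil down to chaining coordinate frames, for example from a camera through the head to the robot base. The sketch below shows the underlying math for planar poses in plain Python; it deliberately does not use the ROS API, and the frame names, function names, and offsets are assumptions.

```python
import math

def compose(a, b):
    """Chain two planar poses (x, y, theta): the result maps b's child frame into a's parent frame."""
    ax, ay, a_theta = a
    bx, by, b_theta = b
    return (ax + math.cos(a_theta) * bx - math.sin(a_theta) * by,
            ay + math.sin(a_theta) * bx + math.cos(a_theta) * by,
            a_theta + b_theta)

def transform_point(pose, point):
    """Express a point given in the pose's child frame in its parent frame."""
    x, y, theta = pose
    px, py = point
    return (x + math.cos(theta) * px - math.sin(theta) * py,
            y + math.sin(theta) * px + math.cos(theta) * py)

# Hypothetical frames: the head is turned 90 degrees left relative to the base,
# and the camera sits 0.1 m in front of the head's rotation axis.
base_T_head = (0.0, 0.0, math.pi / 2)
head_T_camera = (0.1, 0.0, 0.0)
base_T_camera = compose(base_T_head, head_T_camera)

# A landmark 1 m straight ahead of the camera lands about 1.1 m to the robot's left.
landmark_in_base = transform_point(base_T_camera, (1.0, 0.0))
```

On a humanoid the head and torso joints move continuously, so these transforms have to be recomputed from joint encoder readings at each timestamp rather than treated as fixed calibration values.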

Real-time performance constraints present significant challenges for humanoid SLAM systems. Unlike stationary robots, humanoids generate self-motion that introduces additional complexity to sensor data interpretation. Hardware acceleration solutions utilizing FPGAs and GPUs have demonstrated substantial improvements in processing efficiency, with some implementations achieving up to 10x performance gains for computationally intensive SLAM operations.

Energy efficiency considerations are particularly relevant for humanoid platforms with limited power budgets. Recent advances include adaptive processing techniques that dynamically adjust computational resources based on environmental complexity and task requirements. These systems can reduce power consumption by up to 40% during routine navigation while maintaining full capabilities for complex mapping scenarios.

Miniaturization trends have yielded more compact sensor packages specifically designed for humanoid form factors. Edge computing architectures that distribute processing across specialized hardware components show promise in reducing central processing bottlenecks. Intel's RealSense depth cameras integrated with dedicated vision processing units exemplify this approach, enabling on-sensor preprocessing that significantly reduces data transfer requirements.

Co-design methodologies, where hardware and software development proceed in parallel with continuous feedback between teams, have proven effective in optimizing overall system performance. This approach has led to specialized hardware configurations that better support algorithmic requirements, such as custom sensor arrangements that maximize field-of-view overlap for visual SLAM techniques.

Testing frameworks that simulate diverse environmental conditions have become essential tools for validating integrated SLAM systems. These frameworks enable rapid iteration cycles by providing standardized performance metrics across varying conditions, accelerating the optimization process for hardware-software configurations.

Human-Robot Interaction Implications for SLAM Systems

The integration of SLAM technology with humanoid robots creates unique implications for human-robot interaction that extend beyond technical performance metrics. As humanoid robots increasingly operate in human-centric environments, SLAM systems must be designed with interaction capabilities that facilitate natural and intuitive engagement between humans and robots.

Real-time mapping and localization let humanoid robots navigate social spaces while maintaining appropriate proxemics, that is, the spatial relationships between robots and humans. When paired with people detection and tracking, advanced SLAM systems can interpret human movement patterns, predict trajectories, and adjust the robot's navigation accordingly, which makes interactions more comfortable. This spatial awareness is fundamental to establishing trust between humans and humanoid robots in shared environments.
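A minimal sketch of this trajectory-aware proxemics check follows. It assumes a tracker already supplies each person's 2D position and velocity in the robot's map frame, uses a constant-velocity prediction, and compares the predicted position with the robot's current position. The function names, the one-second horizon, and the 1.2 m comfort distance are assumptions made for illustration, not values from the text.

```python
import math


def predict_position(position_xy, velocity_xy, horizon_s):
    """Constant-velocity prediction of a 2D position after horizon_s seconds."""
    return (position_xy[0] + velocity_xy[0] * horizon_s,
            position_xy[1] + velocity_xy[1] * horizon_s)


def violates_personal_space(robot_xy, person_xy, person_velocity_xy,
                            horizon_s=1.0, min_distance_m=1.2):
    """Return True if the person's predicted position comes too close.

    horizon_s and min_distance_m are hypothetical defaults; a planner
    would normally test its own planned poses, not only the current one.
    """
    px, py = predict_position(person_xy, person_velocity_xy, horizon_s)
    distance = math.hypot(px - robot_xy[0], py - robot_xy[1])
    return distance < min_distance_m
```

A navigation layer could call this check for every tracked person and slow down or replan whenever it returns `True`.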

SLAM systems also contribute significantly to a humanoid robot's ability to understand contextual cues in human environments. By recognizing objects and their typical arrangements, robots can infer social norms and behavioral expectations. For example, a humanoid robot using context-aware SLAM can identify a meeting in progress and modify its movement patterns to minimize disruption, demonstrating social intelligence that enhances human acceptance.

Communication effectiveness is another critical dimension affected by SLAM capabilities. Perception pipelines that combine visual SLAM with face and body-posture detection enable robots to maintain appropriate eye contact and body orientation during interactions. These non-verbal communication elements, often taken for granted in human-human interaction, significantly affect how humans perceive and respond to humanoid robots.

The temporal consistency of SLAM systems also influences interaction quality. Humanoid robots must maintain consistent spatial understanding across multiple interactions with the same individuals. This persistent environmental knowledge allows for continuity in interactions, such as remembering a person's preferred personal space or recognizing changes in the environment that might be meaningful to the human counterpart.

Privacy considerations emerge as SLAM systems collect and process spatial data in human environments. The detailed mapping of private spaces raises questions about data ownership, storage duration, and access controls. Future SLAM implementations for humanoid robots will need transparent mechanisms for communicating what environmental data is being collected and how it will be used, allowing humans to maintain appropriate boundaries in their interactions with robotic systems.

Collaborative task performance represents perhaps the most promising frontier for SLAM-enabled human-robot interaction. Advanced spatial understanding allows humanoid robots to coordinate physical movements with humans in shared workspaces, anticipating needs and providing assistance without explicit instructions. This intuitive collaboration capability will be essential for humanoid robots to transition from novelties to valuable partners in various professional and domestic settings.

