How Do Probabilistic Methods Enhance SLAM Accuracy?

SEP 12, 2025

Probabilistic SLAM Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) has evolved significantly since its inception in the 1980s, transforming from deterministic approaches to probabilistic frameworks that better handle real-world uncertainties. The integration of probability theory into SLAM represents a paradigm shift in how autonomous systems perceive and interact with their environments. Early SLAM implementations struggled with sensor noise, environmental ambiguities, and computational limitations, leading to inaccurate mapping and localization in complex scenarios.

Probabilistic methods emerged as a solution by explicitly modeling uncertainties in sensor measurements and robot motion. The evolution began with Extended Kalman Filter (EKF) implementations in the 1990s, progressed through particle filters in the early 2000s, and has now advanced to sophisticated graph-based optimization techniques. Each evolutionary stage has addressed specific limitations of previous approaches while expanding the applicability of SLAM systems across diverse environments.

The fundamental objective of probabilistic SLAM is to estimate the joint posterior probability distribution over robot poses and landmark positions given sensor observations and control inputs. This Bayesian approach makes the uncertainty of every estimate explicit, and representations such as particle filters can additionally maintain multiple hypotheses about the environment state rather than committing to a single, potentially erroneous estimate. By representing uncertainty mathematically, probabilistic SLAM can weigh evidence consistently and make principled decisions even with imperfect information.
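For reference, this posterior can be written in standard textbook notation (the symbols are assumed here, since the source defines none): $x_t$ is the robot pose at time $t$, $m$ the map (landmark positions), $z_{1:t}$ the observations and $u_{1:t}$ the control inputs. Full SLAM targets the posterior over the whole trajectory, while online SLAM tracks only the current pose and updates it recursively with the Bayes filter, assuming a static map and Markovian motion and measurement models:

$$
p(x_{1:t}, m \mid z_{1:t}, u_{1:t})
$$

$$
p(x_t, m \mid z_{1:t}, u_{1:t}) = \eta \, p(z_t \mid x_t, m) \int p(x_t \mid x_{t-1}, u_t) \, p(x_{t-1}, m \mid z_{1:t-1}, u_{1:t-1}) \, dx_{t-1}
$$

Here $\eta$ is a normalizing constant. EKF and particle-filter SLAM can be read as different approximations of this recursion, while graph-based methods typically compute the most likely configuration under the full posterior.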

Current research trends focus on enhancing SLAM accuracy through improved uncertainty representation, efficient inference algorithms, and robust loop closure detection. The field is moving toward systems that can quantify their own uncertainty and make reliability assessments about their mapping and localization performance. This self-awareness is crucial for safety-critical applications like autonomous vehicles and medical robots.

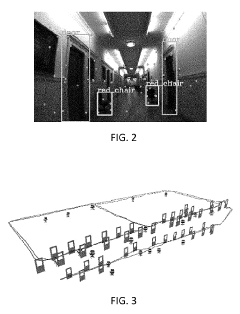

Another significant trend is the integration of semantic understanding with probabilistic frameworks, allowing SLAM systems to reason about object classes and relationships rather than just geometric features. This semantic-probabilistic fusion promises more intelligent environmental interaction and improved long-term mapping stability.

The technical objectives driving probabilistic SLAM development include achieving consistent mapping in dynamic environments, reducing computational requirements for real-time operation on resource-constrained platforms, and developing unified frameworks that can seamlessly incorporate heterogeneous sensor data. These objectives align with the broader goal of creating autonomous systems that can operate reliably in increasingly complex and unpredictable real-world scenarios.

Market Applications and Demand Analysis for Accurate SLAM

The market for accurate SLAM (Simultaneous Localization and Mapping) technology has expanded significantly across multiple sectors, driven by the increasing demand for autonomous navigation systems. The global SLAM market was valued at approximately $200 million in 2020 and is projected to reach $1.5 billion by 2027, growing at a CAGR of over 30%. This remarkable growth is fueled by applications across diverse industries seeking precise spatial awareness capabilities.

In the automotive sector, accurate SLAM technology forms the backbone of autonomous driving systems, with major manufacturers investing heavily in this technology to achieve Level 4 and Level 5 autonomy. The demand is particularly strong as probabilistic SLAM methods have demonstrated superior performance in handling dynamic environments and varying lighting conditions, critical factors for safe autonomous navigation.

The robotics industry represents another significant market segment, with warehouse automation leading adoption. Companies like Amazon and Alibaba have deployed thousands of autonomous mobile robots for warehouse operations, a segment in which SLAM-based navigation, including probabilistic approaches, is increasingly used; reported benefits include operational cost reductions of up to 20% and picking-accuracy improvements of around 40%. The market for warehouse robotics alone is expected to grow at 15% annually through 2025.

Consumer electronics manufacturers have also recognized the potential of accurate SLAM technology in augmented reality applications. Apple's LiDAR-equipped devices leverage probabilistic SLAM algorithms to create persistent AR experiences, while Facebook (now Meta) and Microsoft continue to develop spatial computing platforms requiring precise environmental mapping capabilities.

Healthcare applications represent an emerging market with substantial growth potential. Surgical robots employing probabilistic SLAM techniques for precise navigation during minimally invasive procedures have shown improved outcomes in clinical trials. The medical robotics market segment is projected to grow at 17% annually, with SLAM technology being a key enabler.

Smart cities and infrastructure monitoring constitute another expanding application area. Drones equipped with probabilistic SLAM systems are increasingly used for automated inspection of bridges, buildings, and other critical infrastructure, reducing inspection costs by up to 70% while improving detection of structural issues.

Market analysis indicates that companies offering SLAM solutions with robust probabilistic frameworks command premium pricing, with customers willing to pay 30-40% more for systems demonstrating superior accuracy in challenging environments. This price premium underscores the significant market value placed on the accuracy improvements that probabilistic methods bring to SLAM technology.

Current Probabilistic SLAM Techniques and Limitations

Probabilistic methods form the backbone of modern SLAM (Simultaneous Localization and Mapping) systems, providing robust frameworks for handling uncertainty in sensor measurements and environmental representations. Currently, the field employs several established probabilistic techniques, each with distinct advantages and limitations.

Extended Kalman Filter (EKF) SLAM remains one of the most widely implemented approaches. It linearizes non-linear motion and observation models around the current estimate so that the joint belief over robot pose and landmark positions can be kept as a single Gaussian with a closed-form update. While EKF provides a mathematically elegant solution for this joint estimation, each update costs O(n²) in the number of landmarks n, because the full joint covariance matrix must be maintained and updated. Additionally, linearization errors can accumulate over time, causing filter inconsistency and divergence in highly non-linear environments.
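The predict/update cycle of EKF-SLAM can be sketched as follows. This is a minimal illustration, assuming a planar robot with a unicycle motion model, range-bearing observations, and known landmark identities (data association is not handled). The function names and noise values are hypothetical and do not come from any particular system.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


def ekf_predict(mu, Sigma, u, dt, R_pose):
    """Propagate the pose with a unicycle model; landmarks are assumed static."""
    v, w = u
    theta = mu[2]
    mu = mu.copy()
    mu[0] += v * dt * np.cos(theta)
    mu[1] += v * dt * np.sin(theta)
    mu[2] = wrap_angle(theta + w * dt)
    n = mu.size
    G = np.eye(n)                       # motion Jacobian: identity except the pose block
    G[0, 2] = -v * dt * np.sin(theta)
    G[1, 2] = v * dt * np.cos(theta)
    Q = np.zeros((n, n))
    Q[:3, :3] = R_pose                  # process noise enters the pose only
    # Dense product for clarity; an optimized version touches only pose rows/columns.
    return mu, G @ Sigma @ G.T + Q


def ekf_update(mu, Sigma, z, j, Q_meas):
    """Fuse a range-bearing observation z = (r, phi) of landmark j (known identity)."""
    n = mu.size
    ix = 3 + 2 * j                      # index of landmark j's x coordinate
    dx, dy = mu[ix] - mu[0], mu[ix + 1] - mu[1]
    q = dx**2 + dy**2
    r = np.sqrt(q)
    z_hat = np.array([r, wrap_angle(np.arctan2(dy, dx) - mu[2])])
    H = np.zeros((2, n))                # nonzero only for the pose and landmark j
    H[:, :3] = [[-dx / r, -dy / r, 0.0], [dy / q, -dx / q, -1.0]]
    H[:, ix:ix + 2] = [[dx / r, dy / r], [-dy / q, dx / q]]
    S = H @ Sigma @ H.T + Q_meas        # innovation covariance (2 x 2)
    K = Sigma @ H.T @ np.linalg.inv(S)  # Kalman gain (n x 2)
    innov = z - z_hat
    innov[1] = wrap_angle(innov[1])
    mu = mu + K @ innov
    mu[2] = wrap_angle(mu[2])
    # The correction K (H Sigma) changes every entry of the n x n covariance, so even
    # an efficient implementation costs O(n^2) per observation; dense form for clarity.
    Sigma = (np.eye(n) - K @ H) @ Sigma
    return mu, Sigma


mu = np.array([0.0, 0.0, 0.0, 5.0, 0.0])          # pose (x, y, theta) + one landmark
Sigma = np.diag([0.01, 0.01, 0.01, 1.0, 1.0])
mu, Sigma = ekf_predict(mu, Sigma, (1.0, 0.0), 1.0, np.diag([0.05, 0.05, 0.01]))
mu, Sigma = ekf_update(mu, Sigma, np.array([4.0, 0.0]), 0, np.diag([0.1, 0.01]))
```

In this usage example the observation matches the prediction exactly, so the mean is unchanged, but the landmark's variance shrinks because the measurement adds information.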

Particle Filter SLAM techniques, particularly FastSLAM and its variants, address some EKF limitations by representing the posterior distribution using a set of weighted particles. This approach handles non-linear models more effectively and scales better with map size. However, particle depletion remains a significant challenge, especially in high-dimensional state spaces, often requiring sophisticated resampling strategies to maintain diversity in the particle set.
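Two widely used mitigations for particle depletion are resampling only when the effective sample size falls below a threshold, and using low-variance (systematic) resampling. A minimal sketch with NumPy follows; the 0.5·N threshold is a common heuristic assumed here, not a value from the source, and the function names are illustrative.

```python
import numpy as np


def effective_sample_size(weights):
    """N_eff = 1 / sum(w_i^2) for normalized weights; low values signal degeneracy."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return 1.0 / np.sum(w**2)


def systematic_resample(weights, rng):
    """Return particle indices drawn with systematic (low-variance) resampling."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    n = w.size
    positions = (rng.random() + np.arange(n)) / n  # one random offset, evenly spaced
    cumulative = np.cumsum(w)
    cumulative[-1] = 1.0                           # guard against round-off
    return np.searchsorted(cumulative, positions)


def maybe_resample(particles, weights, rng, threshold=0.5):
    """Resample only when N_eff drops below threshold * N (adaptive resampling)."""
    n = len(weights)
    if effective_sample_size(weights) < threshold * n:
        idx = systematic_resample(weights, rng)
        return particles[idx], np.full(n, 1.0 / n)
    return particles, np.asarray(weights, dtype=float) / np.sum(weights)


rng = np.random.default_rng(0)
particles = np.arange(4)                  # stand-ins for particle states
weights = np.array([0.97, 0.01, 0.01, 0.01])
new_particles, new_weights = maybe_resample(particles, weights, rng)
```

Because one particle holds almost all the weight, the effective sample size is close to 1 and resampling fires, replacing the set mostly with copies of that particle. This loss of diversity is exactly the depletion problem, which is why resampling is usually deferred until it is needed.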

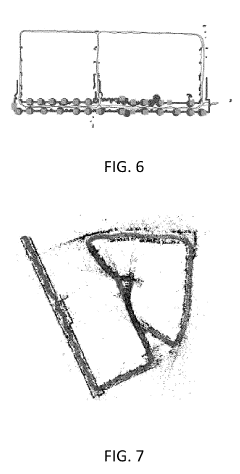

Graph-based SLAM has gained prominence for its ability to efficiently represent the full SLAM problem as a sparse graph optimization problem. Libraries such as g2o and GTSAM formulate SLAM as maximum likelihood (or maximum a posteriori) estimation over a graph of pose and landmark variables connected by measurement constraints (a factor graph, in GTSAM's terminology), enabling efficient optimization even for large-scale environments. Despite these advantages, graph-based approaches remain sensitive to outliers such as false loop closures, and can struggle with loop closure detection in ambiguous environments.
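The core idea can be shown on a toy one-dimensional pose graph, where maximum likelihood estimation under Gaussian noise reduces to weighted least squares. This is a hypothetical sketch in plain NumPy; it does not use g2o or GTSAM, and the measurements are invented for illustration.

```python
import numpy as np


def solve_pose_graph_1d(n_poses, edges, prior_sigma=1e-3):
    """Weighted least squares over a 1D pose graph.

    edges: list of (i, j, z_ij, sigma_ij) meaning x_j - x_i is measured as z_ij.
    A tight prior pins x_0 = 0 to remove the global translation freedom.
    """
    H = np.zeros((n_poses, n_poses))   # information matrix (sparse in practice)
    g = np.zeros(n_poses)
    H[0, 0] += 1.0 / prior_sigma**2    # prior factor on x_0 (target value 0)
    for i, j, z, sigma in edges:
        w = 1.0 / sigma**2
        # Residual r = (x_j - x_i) - z has Jacobian -1 for x_i and +1 for x_j.
        H[i, i] += w
        H[j, j] += w
        H[i, j] -= w
        H[j, i] -= w
        g[i] -= w * z
        g[j] += w * z
    return np.linalg.solve(H, g)       # normal equations H x = g


odometry = [(k, k + 1, 1.1, 0.1) for k in range(4)]   # drifting odometry (true step 1.0)
loop_closure = [(0, 4, 4.0, 0.05)]                    # revisit: x_4 is 4.0 from x_0
x_odom_only = solve_pose_graph_1d(5, odometry)
x_with_loop = solve_pose_graph_1d(5, odometry + loop_closure)
```

Odometry alone places the last pose at 4.4; adding the loop closure pulls it to about 4.02 and spreads the correction over the whole chain. Because the loop closure is weighted by its stated sigma, a wrong loop closure with a small sigma would pull the trajectory just as strongly, which is the outlier sensitivity noted above; robust cost functions (for example a Huber kernel) are a common mitigation.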

Information filter approaches, particularly the Sparse Extended Information Filter (SEIF), exploit the natural sparsity in the SLAM information matrix. While these methods offer computational advantages for large-scale mapping, maintaining sparsity often requires approximations that can compromise accuracy.
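The sparsity that information filters exploit comes from the fact that, in information form (Ω = Σ⁻¹, ξ = Ω μ), a linear measurement update is purely additive and only touches the variables the measurement involves. The sketch below shows only this exact linear update and checks it against the covariance-form Kalman update; SEIF's sparsification approximations are not shown. The state layout and numbers are hypothetical.

```python
import numpy as np


def info_update(Omega, xi, H, R, z):
    """Linear measurement update in information form: purely additive."""
    R_inv = np.linalg.inv(R)
    return Omega + H.T @ R_inv @ H, xi + H.T @ R_inv @ z


def kf_update(mu, Sigma, H, R, z):
    """The same update in covariance (Kalman) form, for comparison."""
    S = H @ Sigma @ H.T + R
    K = Sigma @ H.T @ np.linalg.inv(S)
    return mu + K @ (z - H @ mu), (np.eye(mu.size) - K @ H) @ Sigma


# State: [robot x, landmark a, landmark b] in 1D.
mu = np.array([0.0, 2.0, 5.0])
Sigma = np.diag([0.5, 1.0, 1.0])
Omega = np.linalg.inv(Sigma)
xi = Omega @ mu

H = np.array([[-1.0, 1.0, 0.0]])   # observe landmark a relative to the robot
R = np.array([[0.1]])
z = np.array([2.3])

Omega2, xi2 = info_update(Omega, xi, H, R, z)
mu2, Sigma2 = kf_update(mu, Sigma, H, R, z)
```

Both forms yield the same posterior, but the information update added entries only between the robot and landmark a, leaving landmark b's row of Ω untouched. Recovering the mean, however, requires solving Ω μ = ξ, which is where information-form methods pay their cost.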

Recent advances in probabilistic SLAM have focused on hybrid approaches that combine multiple techniques. For instance, visual-inertial odometry systems often integrate filter-based methods for real-time state estimation with periodic graph optimization for global consistency. These hybrid systems aim to balance computational efficiency with accuracy requirements.

A significant limitation across all probabilistic SLAM techniques is the challenge of representing and propagating non-Gaussian uncertainties. Real-world sensor noise and environmental ambiguities often exhibit complex, multi-modal distributions that are inadequately captured by Gaussian approximations. This fundamental limitation affects data association reliability, particularly in feature-poor or repetitive environments.
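A toy one-dimensional example makes this limitation concrete. It assumes a robot that could be at either of two identical-looking doorways (all numbers are invented for illustration) and compares the true two-mode belief with the single moment-matched Gaussian that a Gaussian filter would carry.

```python
import numpy as np


def gaussian_pdf(x, mean, std):
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2 * np.pi))


# Two equally likely hypotheses for the robot's position along a corridor,
# e.g. two identical-looking doorways at -2 m and +2 m.
means = np.array([-2.0, 2.0])
stds = np.array([0.5, 0.5])
weights = np.array([0.5, 0.5])


def mixture_pdf(x):
    """True (bimodal) belief density at position x."""
    return np.sum(weights * gaussian_pdf(x, means, stds))


# Moment-matched single Gaussian: same mean and variance as the mixture.
fit_mean = np.sum(weights * means)
fit_var = np.sum(weights * (stds**2 + means**2)) - fit_mean**2

print(f"Gaussian fit: mean={fit_mean:.2f}, std={np.sqrt(fit_var):.2f}")
print(f"true density at fit mean: {mixture_pdf(fit_mean):.4f}")
print(f"true density at a mode:   {mixture_pdf(2.0):.4f}")
```

The fitted Gaussian centers the robot at 0 m, between the doorways, where the true belief assigns almost no probability. Any data association decision made from that estimate would be unreliable, which is the failure mode described for repetitive environments.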

Computational constraints continue to challenge probabilistic SLAM implementation on resource-constrained platforms like mobile robots and drones, necessitating careful tradeoffs between accuracy and processing requirements. Additionally, most current techniques struggle with dynamic environments where the static world assumption is violated.

State-of-the-Art Probabilistic Solutions for SLAM

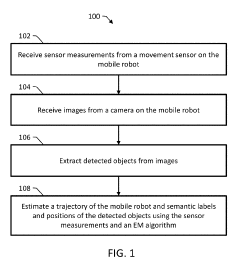

01 Bayesian filtering and probabilistic estimation in SLAM

Bayesian filtering techniques are widely used in SLAM (Simultaneous Localization and Mapping) to improve accuracy by handling uncertainties in sensor measurements and motion models. These methods include Extended Kalman Filters (EKF), Particle Filters, and Unscented Kalman Filters that maintain probabilistic representations of robot poses and map features. By incorporating probabilistic estimation, these approaches can better account for noise and uncertainties, leading to more robust localization and mapping in dynamic environments.

- Bayesian Filtering for SLAM Accuracy Enhancement: Bayesian filtering methods, including Kalman filters and particle filters, are employed to improve the accuracy of SLAM systems by handling uncertainties in sensor measurements and motion models. These probabilistic approaches enable robust state estimation by continuously updating beliefs based on new observations while accounting for noise and environmental variability. The integration of Bayesian frameworks allows SLAM systems to maintain consistent mapping and localization even in dynamic or complex environments.

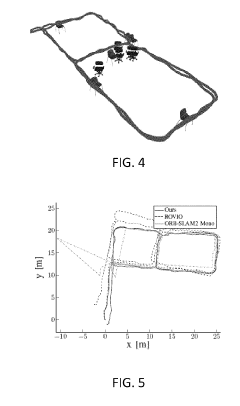

- Graph-Based Optimization for SLAM Precision: Graph-based optimization techniques represent SLAM problems as networks of constraints, where nodes correspond to poses or landmarks and edges represent spatial relationships. These methods improve accuracy by globally optimizing the entire trajectory and map structure through probabilistic error minimization. By formulating SLAM as a graph optimization problem, systems can achieve higher precision through techniques like maximum likelihood estimation and loop closure detection, resulting in more consistent and accurate mapping solutions.

- Machine Learning Integration for Uncertainty Estimation: Machine learning approaches enhance SLAM accuracy by improving uncertainty estimation and feature extraction. Neural networks and probabilistic models can be trained to predict measurement reliability, detect outliers, and adapt to changing environments. These techniques enable more robust performance by learning complex error patterns and environmental characteristics that traditional probabilistic methods might struggle to model explicitly, resulting in more accurate localization and mapping outcomes.

- Multi-Sensor Fusion with Probabilistic Methods: Probabilistic sensor fusion techniques combine data from multiple sensors (cameras, LiDAR, IMU, etc.) to improve SLAM accuracy. By modeling the uncertainty characteristics of each sensor and using methods like covariance intersection or factor graphs, these approaches enable more robust state estimation. The integration of complementary sensor information through probabilistic frameworks helps overcome limitations of individual sensors and provides more reliable mapping and localization in diverse environmental conditions.

- Loop Closure and Global Consistency Techniques: Probabilistic methods for loop closure detection and global map consistency significantly enhance SLAM accuracy. These techniques identify when a system revisits previously mapped areas and correct accumulated errors through probabilistic data association and pose graph optimization. By incorporating uncertainty models into the loop closure process, SLAM systems can maintain global consistency over extended operations, reducing drift and improving overall mapping accuracy even in large-scale environments.
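The multi-sensor fusion item above mentions covariance intersection, which fuses two estimates whose cross-correlation is unknown (common when both derive partly from shared data) without becoming overconfident. A minimal sketch follows; choosing the weight ω by grid search to minimize the trace is an illustrative simplification (a 1D optimizer is more typical), and the input estimates are hypothetical.

```python
import numpy as np


def covariance_intersection(a, Pa, b, Pb, n_grid=101):
    """Fuse two estimates with unknown cross-correlation (covariance intersection).

    P^-1 = w Pa^-1 + (1 - w) Pb^-1, with w chosen by grid search to minimize
    trace(P). The result stays consistent whatever the cross-correlation,
    provided both inputs are themselves consistent.
    """
    Ia, Ib = np.linalg.inv(Pa), np.linalg.inv(Pb)
    best = None
    for w in np.linspace(0.0, 1.0, n_grid):
        P = np.linalg.inv(w * Ia + (1.0 - w) * Ib)
        if best is None or np.trace(P) < best[0]:
            x = P @ (w * Ia @ a + (1.0 - w) * Ib @ b)
            best = (np.trace(P), w, x, P)
    _, w, x, P = best
    return x, P, w


# Hypothetical 2D position estimates: one precise in x, the other precise in y.
a, Pa = np.array([1.0, 2.0]), np.diag([0.1, 1.0])
b, Pb = np.array([1.2, 1.8]), np.diag([1.0, 0.1])
x, P, w = covariance_intersection(a, Pa, b, Pb)
```

Here the fused estimate lies close to the more precise input along each axis. Unlike a standard Kalman fusion, it does not assume the two errors are independent, so it is more conservative when they happen to be uncorrelated.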

02 Graph-based optimization for SLAM accuracy

Graph-based optimization approaches represent SLAM problems as graphs where nodes correspond to robot poses and landmarks, while edges represent spatial constraints from measurements. These methods use probabilistic techniques to optimize the node estimates (poses and landmark positions), minimizing errors between predicted and observed measurements. Factor graphs and pose graphs are common implementations that leverage sparse optimization algorithms to efficiently solve large-scale SLAM problems, significantly improving accuracy especially in loop closure scenarios.

03 Machine learning and probabilistic inference for SLAM enhancement

Machine learning techniques combined with probabilistic inference methods are increasingly used to enhance SLAM accuracy. Deep neural networks can learn complex sensor noise models and environmental patterns that traditional probabilistic approaches might miss. These hybrid approaches use probabilistic frameworks to incorporate learned features and uncertainties, allowing systems to adapt to different environments and conditions while maintaining robust performance estimates. This integration helps in handling dynamic objects and changing environments more effectively.

04 Multi-sensor fusion using probabilistic methods

Probabilistic approaches for fusing data from multiple sensors (cameras, LiDAR, IMU, GPS) improve SLAM accuracy by leveraging the strengths of each sensor while mitigating their individual weaknesses. Techniques such as covariance intersection, factor graph fusion, and probabilistic data association enable robust integration of heterogeneous sensor data with different noise characteristics and sampling rates. These methods maintain consistent uncertainty estimates across sensor modalities, leading to more accurate and reliable mapping and localization results.

05 Loop closure and global consistency using probabilistic techniques

Probabilistic methods for loop closure detection and correction are essential for maintaining global consistency in SLAM systems. These approaches use probabilistic data association to identify revisited places and update the map accordingly. Techniques such as probabilistic place recognition, Markov Random Fields, and Bayesian inference help in detecting and validating loop closures while properly distributing accumulated errors throughout the map. This significantly improves long-term accuracy and enables consistent mapping of large environments.
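A common building block for validating associations, assumed here as an illustration rather than taken from the techniques named above, is chi-square gating: a candidate match is accepted only if its squared Mahalanobis distance falls below a chi-square quantile. The sketch uses 2D measurements, for which the quantile has the closed form −2 ln(1 − p). The place identifiers and covariances are hypothetical.

```python
import numpy as np


def chi2_threshold_2dof(p):
    """Chi-square quantile for 2 degrees of freedom, where CDF(x) = 1 - exp(-x / 2)."""
    return -2.0 * np.log(1.0 - p)


def gate_candidates(z, candidates, p=0.99):
    """Nearest-neighbour association with a Mahalanobis (chi-square) gate.

    z: observed 2D measurement.
    candidates: list of (id, predicted_measurement, innovation_covariance_S).
    Returns the id of the closest candidate inside the gate, or None.
    """
    threshold = chi2_threshold_2dof(p)
    best_id, best_d2 = None, np.inf
    for cand_id, z_hat, S in candidates:
        nu = z - z_hat
        d2 = float(nu @ np.linalg.solve(S, nu))   # squared Mahalanobis distance
        if d2 < threshold and d2 < best_d2:
            best_id, best_d2 = cand_id, d2
    return best_id


S = np.diag([0.04, 0.04])                         # 0.2 m standard deviation per axis
candidates = [("place_A", np.array([1.0, 1.0]), S),
              ("place_B", np.array([3.0, 0.5]), S)]
print(gate_candidates(np.array([1.1, 0.9]), candidates))   # close to place_A
print(gate_candidates(np.array([6.0, 6.0]), candidates))   # outside every gate
```

Rejecting matches that fall outside every gate, rather than forcing the nearest one, is what keeps a single spurious loop closure from entering the optimization.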

Leading Research Groups and Companies in Probabilistic SLAM

Probabilistic SLAM technology is currently in a growth phase, with the market expanding rapidly due to increasing applications in robotics, autonomous vehicles, and AR/VR. Market forecasts vary widely by source and by how the market is defined, with some projecting several billion dollars by 2025, driven by demand for precise navigation solutions. Technologically, SLAM is maturing, with companies like iRobot and Sony leading commercial applications through robust probabilistic algorithms that enhance accuracy in dynamic environments. Academic institutions such as the University of Electronic Science & Technology of China and the National University of Defense Technology are advancing theoretical frameworks, while companies like GoerTek and SAIC GM Wuling are implementing SLAM in consumer products and autonomous systems, demonstrating the technology's transition from research to practical deployment.

iRobot Corp.

Technical Solution: iRobot has developed a probabilistic SLAM framework called vSLAM (Visual Simultaneous Localization and Mapping) that integrates visual data with other sensor inputs to enhance mapping accuracy in their Roomba robot vacuum cleaners. Their approach employs Bayesian filtering techniques, particularly Extended Kalman Filters (EKF) and particle filters, to handle sensor uncertainties and environmental noise. The system continuously updates probability distributions of robot positions and landmark locations, allowing for robust navigation even in dynamic home environments. iRobot's implementation includes loop closure detection algorithms that recognize previously visited locations, significantly reducing cumulative drift errors that plague deterministic SLAM approaches. Their latest iterations incorporate machine learning to improve feature extraction and matching in visually challenging environments such as low-light conditions or highly repetitive patterns.

Strengths: Robust performance in dynamic home environments with moving obstacles; computationally efficient implementation suitable for consumer robots with limited processing power. Weaknesses: Visual-based approach may struggle in low-light conditions; system optimization focuses on indoor structured environments rather than complex outdoor scenarios.

National University of Defense Technology

Technical Solution: The National University of Defense Technology has developed a sophisticated probabilistic SLAM framework called ProbSLAM that specifically addresses uncertainty in military and defense applications. Their approach employs Rao-Blackwellized Particle Filters (RBPF) to factorize the SLAM posterior into a product of conditional landmark distributions and a distribution over robot trajectories. This technique allows for efficient sampling in high-dimensional state spaces typical in complex military environments. The university's research has produced innovations in adaptive resampling strategies that maintain particle diversity while focusing computational resources on high-probability regions of the state space. Their system incorporates robust loop closure detection using probabilistic appearance models that can function in adversarial environments where visual features may be deliberately obscured or altered. Additionally, they've developed information-theoretic approaches to active SLAM that enable autonomous vehicles to plan paths that maximize information gain while minimizing localization uncertainty, critical for reconnaissance missions.

Strengths: Highly robust in challenging and adversarial environments; sophisticated uncertainty handling suitable for mission-critical applications. Weaknesses: Computational intensity may require specialized hardware; classified nature of some research limits commercial applications and open collaboration.
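The Rao-Blackwellized factorization described above can be written in standard FastSLAM-style notation (assumed here, not taken from the NUDT work), with $x_{1:t}$ the trajectory, $m_1, \dots, m_N$ the landmarks, and data associations treated as known and omitted for brevity:

$$
p(x_{1:t}, m_1, \dots, m_N \mid z_{1:t}, u_{1:t}) = p(x_{1:t} \mid z_{1:t}, u_{1:t}) \prod_{k=1}^{N} p(m_k \mid x_{1:t}, z_{1:t})
$$

Each particle samples a trajectory for the first factor. Because the landmarks are conditionally independent given that trajectory, each particle carries $N$ small independent landmark estimators (2×2 EKFs in the planar case) instead of one joint covariance. For the information-theoretic active SLAM mentioned above, a common uncertainty measure is the entropy of a $d$-dimensional Gaussian belief with covariance $\Sigma$:

$$
H = \tfrac{1}{2} \ln\!\left( (2 \pi e)^{d} \, \lvert \Sigma \rvert \right)
$$

Information gain along a candidate path can then be scored as the expected reduction in this entropy.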

Key Algorithms and Mathematical Frameworks Analysis

Probabilistic data association for simultaneous localization and mapping

Patent (active): US20190219401A1

Innovation

- The formulation of an optimization problem that integrates metric and semantic information, decomposing it into continuous and discrete optimization sub-problems to estimate data associations and landmark class probabilities, allowing for robust loop closure recognition and improved localization in complex environments.

Patent

Innovation

- Integration of probabilistic methods with SLAM algorithms to handle uncertainty in sensor measurements and environmental factors, resulting in more robust mapping and localization in dynamic environments.

- Development of adaptive particle filters that dynamically adjust the number of particles based on environmental complexity, optimizing computational resources while maintaining accuracy in SLAM systems.

- Implementation of Bayesian inference frameworks that effectively fuse data from heterogeneous sensors (cameras, LiDAR, IMU) to improve state estimation and reduce drift in long-term SLAM operations.
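The source does not say how these adaptive particle filters choose their particle count. One well-known approach, swapped in here purely as an illustration, is KLD-sampling. It draws particles until the count reaches a bound that grows with the number of occupied histogram bins k, so spread-out (more complex) beliefs get more particles. The function name and default ε and δ values are assumptions.

```python
import math
from statistics import NormalDist


def kld_particle_count(k_bins, epsilon=0.05, delta=0.01):
    """Particle count from the KLD-sampling bound (Wilson-Hilferty approximation).

    With probability 1 - delta, the KL divergence between the sample-based
    and true posterior stays below epsilon.
    k_bins: number of histogram bins currently occupied by particles.
    """
    if k_bins <= 1:
        return 1
    z = NormalDist().inv_cdf(1.0 - delta)    # upper (1 - delta) standard-normal quantile
    a = 2.0 / (9.0 * (k_bins - 1))
    return math.ceil((k_bins - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3)


# A tightly localized belief occupies few bins; a global-localization belief many.
for k in (10, 200):
    print(k, "occupied bins ->", kld_particle_count(k), "particles")
```

A practical implementation recomputes k while sampling and typically enforces a minimum particle count as well.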

Computational Efficiency and Real-time Performance Challenges

Probabilistic SLAM methods face significant computational challenges that directly impact their real-time performance capabilities. The computational complexity of probabilistic SLAM algorithms typically scales with the dimensionality of the state space and the number of landmarks being tracked. For EKF-SLAM, the cost of each update grows quadratically, O(n²), with the number of landmarks n, because every observation modifies the full joint covariance matrix; this creates substantial processing demands in large-scale environments. This computational burden becomes particularly problematic when deploying SLAM systems on platforms with limited computational resources, such as mobile robots, drones, or augmented reality devices.

Real-time performance represents a critical requirement for many SLAM applications, especially in dynamic environments where rapid decision-making is essential. The probabilistic nature of these methods introduces additional computational overhead due to the need for uncertainty propagation and covariance matrix updates. Particle filter implementations face the challenge of maintaining sufficient particle diversity while keeping computational costs manageable. The trade-off between accuracy and computational efficiency remains a central challenge, as more accurate probabilistic representations typically demand greater computational resources.

Memory constraints further complicate the implementation of probabilistic SLAM methods in resource-constrained systems. The storage requirements for covariance matrices in EKF-SLAM or particle sets in FastSLAM can quickly exceed available memory in embedded systems. This limitation necessitates careful algorithm design and optimization strategies to ensure efficient memory utilization without compromising accuracy.
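A back-of-the-envelope calculation shows how quickly a dense EKF-SLAM covariance outgrows embedded memory. The sketch assumes a planar state (3 pose variables plus 2 per landmark) stored as 64-bit floats with no sparsity exploited; the helper name is hypothetical.

```python
def ekf_slam_covariance_bytes(n_landmarks, pose_dim=3, landmark_dim=2, bytes_per_entry=8):
    """Memory for a dense EKF-SLAM covariance matrix (float64 by default)."""
    state_dim = pose_dim + landmark_dim * n_landmarks
    return state_dim * state_dim * bytes_per_entry


for n in (100, 1_000, 10_000):
    gib = ekf_slam_covariance_bytes(n) / 2**30
    print(f"{n:>6} landmarks -> {gib:8.3f} GiB")
```

At 10,000 landmarks the state has 20,003 dimensions and the covariance alone needs roughly 3 GiB, before counting the O(n²) work to update it.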

Several optimization approaches have emerged to address these challenges. Sparse approximation techniques reduce computational complexity by exploiting the inherent sparsity in SLAM problems. Information-form filters leverage sparsity in the information matrix to achieve more efficient updates. Submapping strategies partition the environment into manageable segments, allowing for localized updates that reduce overall computational demands.

Parallel computing architectures, including multi-core CPUs and GPUs, offer promising avenues for accelerating probabilistic SLAM computations. GPU implementations of particle filters have demonstrated significant speedups for certain SLAM variants. Additionally, algorithmic innovations such as incremental smoothing and mapping (iSAM) provide efficient solutions by performing incremental updates to the probabilistic representation rather than full recomputations at each step.

The development of specialized hardware accelerators for probabilistic operations represents another frontier in addressing computational challenges. FPGA implementations of key SLAM components have shown potential for significant performance improvements while maintaining low power consumption, making them suitable for mobile robotic platforms with strict energy constraints.

Real-time performance represents a critical requirement for many SLAM applications, especially in dynamic environments where rapid decision-making is essential. The probabilistic nature of these methods introduces additional computational overhead due to the need for uncertainty propagation and covariance matrix updates. Particle filter implementations face the challenge of maintaining sufficient particle diversity while keeping computational costs manageable. The trade-off between accuracy and computational efficiency remains a central challenge, as more accurate probabilistic representations typically demand greater computational resources.

Memory constraints further complicate the implementation of probabilistic SLAM methods in resource-constrained systems. The storage requirements for covariance matrices in EKF-SLAM or particle sets in FastSLAM can quickly exceed available memory in embedded systems. This limitation necessitates careful algorithm design and optimization strategies to ensure efficient memory utilization without compromising accuracy.

Several optimization approaches have emerged to address these challenges. Sparse approximation techniques reduce computational complexity by exploiting the inherent sparsity in SLAM problems. Information-form filters leverage sparsity in the information matrix to achieve more efficient updates. Submapping strategies partition the environment into manageable segments, allowing for localized updates that reduce overall computational demands.

Integration with Sensor Fusion Technologies

The integration of probabilistic methods with sensor fusion technologies represents a significant advancement in enhancing SLAM accuracy. Sensor fusion combines data from multiple sensors to achieve more reliable and precise environmental mapping and localization. When the fusion is performed probabilistically, each sensor's uncertainty is modeled explicitly, so the system can weigh sources against one another and cross-check them, a capability that single-sensor solutions cannot match.

Multi-sensor systems typically employ combinations of LiDAR, cameras, IMUs, GPS, and radar. Each sensor type offers unique strengths while exhibiting distinct limitations. LiDAR provides excellent depth information but degrades in adverse weather such as rain, fog, or snow. Cameras deliver rich visual data but struggle in low-light environments. IMUs offer high-frequency motion data but drift over time because integrated noise and bias accumulate. Probabilistic methods weight these inputs according to their estimated reliability in each context; when measurement noise is independent and Gaussian, weighting each measurement by the inverse of its variance yields the minimum-variance fused estimate.
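Inverse-variance weighting is simple enough to show directly. This sketch assumes independent scalar estimates of the same quantity with known variances; the LiDAR and camera readings are made-up numbers for illustration.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent scalar estimates [(value, variance), ...].
    Each weight is 1/variance; the fused variance is 1 / sum(weights)."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    mean = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return mean, 1.0 / total


# Hypothetical readings of the same range in meters: LiDAR is trusted more than camera depth.
lidar = (10.0, 1.0)
camera = (12.0, 4.0)
print(fuse_inverse_variance([lidar, camera]))  # (10.4, 0.8)
```

The fused estimate lands closer to the more certain LiDAR reading, and its variance (0.8) is lower than either input's, which is the quantitative sense in which fusion improves accuracy.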

Bayesian fusion frameworks have emerged as particularly effective approaches for integrating heterogeneous sensor data. These frameworks maintain probabilistic representations of both the environment and sensor characteristics, allowing the system to dynamically adjust confidence levels in different data sources. For instance, when visual features become unreliable due to lighting changes, the system can automatically increase reliance on LiDAR or IMU data while maintaining a coherent probabilistic representation.
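The "increase reliance on other sensors" behavior falls out of a Bayesian update once a sensor's noise model reflects its current reliability. The sketch below uses a scalar Kalman update: inflating the camera's measurement variance shrinks the Kalman gain, so the camera reading barely moves the estimate. The feature-count rule in `camera_variance` and its threshold are hypothetical, chosen only to make the effect visible.

```python
def kalman_update(mean, var, z, meas_var):
    """Scalar Kalman measurement update with observation model z = x + noise."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (z - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var, gain


def camera_variance(base_var, num_features, min_features=50):
    """Hypothetical reliability rule: inflate the camera's noise variance as the
    number of tracked features drops below min_features."""
    ratio = max(num_features, 1) / min_features
    return base_var if ratio >= 1.0 else base_var / ratio ** 2


prior_mean, prior_var = 0.0, 1.0  # e.g. a prediction from the motion model
for label, n_feat in [("daylight", 200), ("dusk", 5)]:
    r = camera_variance(0.25, n_feat)
    _, _, gain = kalman_update(prior_mean, prior_var, 2.0, r)
    print(label, round(gain, 3))
# daylight 0.8
# dusk 0.038
```

Because the down-weighting happens through the noise model, the estimate stays a coherent posterior rather than the output of an ad hoc sensor switch.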

Factor graph optimization has become a widely used foundation for multi-sensor SLAM. A factor graph represents each sensor measurement as a probabilistic constraint (a factor) between state variables, and the most probable state is found by jointly optimizing over all factors, regardless of which sensor produced them. Because every factor carries its own noise model, the graph can incorporate sensor-specific uncertainty, and factors whose residuals are unusually large relative to that uncertainty can reveal sensor failures or challenging environmental conditions.
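A minimal sketch of both ideas, joint optimization and inconsistency detection, follows. It assumes a linear one-dimensional graph (a prior, two odometry factors, and one GPS-like absolute factor) so that a single weighted least-squares solve suffices; real multi-sensor SLAM is nonlinear and is usually solved iteratively with libraries such as GTSAM, g2o, or Ceres Solver. The factor tuple layout and the 3-sigma threshold are illustrative choices.

```python
import numpy as np


def solve_linear_factor_graph(factors, num_vars):
    """Stack whitened factor rows and solve the weighted least-squares problem.
    Each factor is (variable indices, coefficients, measurement, sigma)."""
    rows, rhs = [], []
    for idx, coeffs, z, sigma in factors:
        row = np.zeros(num_vars)
        row[list(idx)] = coeffs
        rows.append(row / sigma)  # whitening: scale by 1/sigma of this sensor
        rhs.append(z / sigma)
    A, b = np.array(rows), np.array(rhs)
    x = np.linalg.lstsq(A, b, rcond=None)[0]
    return x, A @ x - b  # residuals in units of each factor's own sigma


def flag_inconsistent(whitened_residuals, threshold=3.0):
    """Indices of factors whose normalized residual exceeds the threshold."""
    return [k for k, r in enumerate(whitened_residuals) if abs(r) > threshold]


def make_factors(gps_reading):
    return [
        ((0,), [1.0], 0.0, 0.1),          # prior on x0
        ((0, 1), [-1.0, 1.0], 1.0, 0.1),  # odometry: x1 - x0
        ((1, 2), [-1.0, 1.0], 1.0, 0.1),  # odometry: x2 - x1
        ((2,), [1.0], gps_reading, 1.0),  # GPS-like absolute fix on x2
    ]


x_ok, r_ok = solve_linear_factor_graph(make_factors(2.0), 3)
x_bad, r_bad = solve_linear_factor_graph(make_factors(7.0), 3)
print(flag_inconsistent(r_ok))   # []
print(flag_inconsistent(r_bad))  # [3]
```

With a consistent GPS reading every residual is near zero; with a faulty one the GPS factor's normalized residual is about 4.9 sigma and is flagged, while the tightly constrained odometry chain keeps the pose estimate close to its own prediction.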

Adaptive covariance estimation is another crucial advancement in probabilistic sensor fusion. Rather than using fixed uncertainty models, modern systems estimate measurement covariances online from current operating conditions. This can substantially improve robustness in challenging scenarios, such as dynamic environments or transitions between indoor and outdoor settings, where sensor characteristics change dramatically.
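One established way to estimate measurement noise online is innovation-based adaptive estimation; it is shown here as one possible approach, not as the method any particular system uses. For a consistent scalar filter with observation matrix H = 1, the expected squared innovation equals the predicted state variance plus the measurement variance R, so R can be estimated from a sliding window of recent innovations. The window length, the variance floor, and the function name are assumptions.

```python
from collections import deque

import numpy as np


def estimate_measurement_variance(innovations, predicted_vars, floor=1e-6):
    """Scalar innovation-based estimate with H = 1.
    For a consistent filter E[nu^2] = P_pred + R, so R ~ mean(nu^2) - mean(P_pred)."""
    nu = np.asarray(innovations, dtype=float)
    p = np.asarray(predicted_vars, dtype=float)
    return max(float(np.mean(nu ** 2) - np.mean(p)), floor)  # floor keeps R positive


# Sliding window of (innovation, predicted variance) pairs; the length is a tuning choice.
window = deque(maxlen=4)
for nu in [2.0, -2.0, 2.0, -2.0]:
    window.append((nu, 1.0))
innovs, pred_vars = zip(*window)
print(estimate_measurement_variance(innovs, pred_vars))  # 3.0
```

A short window reacts quickly to changes such as an indoor-outdoor transition but yields noisier estimates; a long window is smoother but slower to adapt.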

Real-time implementation of probabilistic sensor fusion remains computationally challenging. Recent research has focused on efficient approximation methods that maintain probabilistic rigor while meeting real-time constraints. Rao-Blackwellized particle filters, which sample only the robot trajectory and estimate the map analytically for each sample, and incremental smoothing algorithms have shown promise in balancing computational efficiency with probabilistic accuracy, making these approaches viable for deployment on platforms with limited processing capabilities.
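The Rao-Blackwellization idea can be sketched in the style of FastSLAM with a deliberately tiny model: a 1D robot, one 1D landmark, and the measurement model z = m − x + noise. Poses are sampled from the motion model, while each particle tracks the landmark with its own scalar Kalman filter, so only the pose dimension is sampled. All noise levels, the particle count, and the simulated trajectory are hypothetical.

```python
import numpy as np


def gaussian_pdf(x, var):
    """Density of N(0, var) evaluated at x (vectorized)."""
    return np.exp(-0.5 * x * x / var) / np.sqrt(2.0 * np.pi * var)


def rbpf_step(poses, lm_mean, lm_var, weights, u, z, motion_var, meas_var, rng):
    n = len(poses)
    # 1. Sample each particle's pose from the motion model x' = x + u + noise.
    poses = poses + u + rng.normal(0.0, np.sqrt(motion_var), n)
    if lm_mean is None:
        # 2a. First sighting: initialize each particle's landmark from its own pose.
        lm_mean = poses + z
        lm_var = np.full(n, meas_var)
    else:
        # 2b. Per-particle Kalman update of the landmark, conditioned on the sampled pose.
        innovation = z - (lm_mean - poses)  # measurement model: z = m - x + noise
        s = lm_var + meas_var               # innovation variance (H = 1 w.r.t. m)
        gain = lm_var / s
        lm_mean = lm_mean + gain * innovation
        lm_var = (1.0 - gain) * lm_var
        # 3. Weight particles by how well they predicted the measurement.
        weights = weights * gaussian_pdf(innovation, s)
        weights = weights / weights.sum()
    # 4. Resample when the effective sample size drops below N/2.
    if 1.0 / np.sum(weights ** 2) < 0.5 * n:
        idx = rng.choice(n, size=n, p=weights)
        poses, lm_mean, lm_var = poses[idx], lm_mean[idx], lm_var[idx]
        weights = np.full(n, 1.0 / n)
    return poses, lm_mean, lm_var, weights


# Usage: robot moves +1 per step past a landmark at 5.0 (all values hypothetical).
rng = np.random.default_rng(0)
n = 200
poses, weights = np.zeros(n), np.full(n, 1.0 / n)
lm_mean = lm_var = None
true_x, true_m = 0.0, 5.0
for _ in range(10):
    true_x += 1.0
    z = true_m - true_x + rng.normal(0.0, 0.1)
    poses, lm_mean, lm_var, weights = rbpf_step(
        poses, lm_mean, lm_var, weights, 1.0, z, 0.05 ** 2, 0.1 ** 2, rng
    )
landmark_estimate = float(np.sum(weights * lm_mean))
pose_estimate = float(np.sum(weights * poses))
```

Handling the landmark analytically means the particles only need to cover the pose space, which is what keeps the particle count, and therefore the cost, manageable as maps grow.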

The integration of deep learning with probabilistic sensor fusion is at the frontier of current research. Neural networks can learn complex sensor characteristics and environmental patterns that are difficult to model explicitly, while probabilistic frameworks provide the mathematical foundation for principled uncertainty quantification. This hybrid approach shows particular promise for handling the complex, non-Gaussian noise distributions encountered in real-world SLAM applications.
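A common building block for combining the two is to have a network predict an uncertainty alongside each estimate and train it with a negative log-likelihood loss. The sketch below uses a Gaussian likelihood with a predicted log-variance and replaces the network with a single shared parameter fitted by gradient descent; with that simplification the learned variance converges to the mean squared residual. The residual values are made up, and handling genuinely non-Gaussian noise would call for a different likelihood (for example Laplace or a mixture) trained on the same principle.

```python
import numpy as np


def gaussian_nll(residuals, log_var):
    """Mean Gaussian negative log-likelihood with the constant 0.5*log(2*pi) dropped.
    Predicting log-variance (not variance) keeps sigma^2 positive without constraints."""
    r = np.asarray(residuals, dtype=float)
    s = np.asarray(log_var, dtype=float)
    return float(np.mean(0.5 * (s + r ** 2 * np.exp(-s))))


def nll_grad_shared(residuals, log_var):
    """Gradient of the mean NLL w.r.t. a single log-variance shared by all samples."""
    r = np.asarray(residuals, dtype=float)
    return 0.5 * (1.0 - np.mean(r ** 2) * np.exp(-log_var))


# Stand-in for a network head: learn one log-variance by gradient descent.
residuals = np.array([1.0, -1.0, 2.0, -2.0])  # hypothetical prediction errors
log_var = 0.0
for _ in range(200):
    log_var -= 0.5 * nll_grad_shared(residuals, log_var)
print(round(float(np.exp(log_var)), 6))  # 2.5, the mean squared residual
```

In a real model the log-variance would be a per-input network output, so the learned uncertainty can grow in, say, low light or heavy rain, and the resulting variances can feed directly into the fusion weights discussed above.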
