The Role Of SLAM In Augmented Reality Systems

SEP 12, 2025 · 9 MIN READ

SLAM Technology Evolution and AR Integration Goals

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly over the past two decades, moving from a theoretical concept to a practical solution for spatial awareness in autonomous systems. Filter-based approaches such as the Extended Kalman Filter (EKF) were the mainstream through the early 2000s. By the 2010s, more robust optimization-based methods such as bundle adjustment and graph-based SLAM had largely taken their place. This trajectory has been driven by increasing computational capabilities and the growing demand for precise spatial understanding in various applications.

The integration of SLAM into Augmented Reality (AR) represents a critical technological convergence that addresses fundamental challenges in creating immersive and spatially aware AR experiences. Traditional AR systems relied on marker-based tracking or simple feature detection, which limited their application scope and robustness. SLAM technology has revolutionized this landscape by enabling markerless tracking and real-time environmental mapping, allowing AR content to interact naturally with physical surroundings.

The primary technical goal of SLAM in AR systems is to achieve precise real-time localization and mapping with minimal computational overhead. This involves accurately determining the position and orientation of the user's device while simultaneously building and updating a map of the surrounding environment. The challenge lies in maintaining tracking accuracy under varying lighting conditions, handling dynamic objects, and operating across diverse environments from indoor spaces to outdoor landscapes.

Another crucial objective is reducing latency between physical movement and virtual content adjustment, because even minor delays can break immersion and cause user discomfort. Modern SLAM algorithms aim to process sensor data at frame rate (30-60 Hz) while maintaining sub-centimeter positional accuracy and comparably tight orientation accuracy.
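The sketch below makes the frame-rate figures concrete. It converts the 30-60 Hz range into per-frame time budgets and checks a pipeline split against them. The stage names and millisecond costs are hypothetical, not measurements from any particular system.

```python
def frame_budget_ms(rate_hz: float) -> float:
    """Time available per frame, in milliseconds, at a given processing rate."""
    return 1000.0 / rate_hz

# Hypothetical per-frame cost of each pipeline stage in ms (illustrative only).
STAGES_MS = {
    "feature_tracking": 6.0,
    "pose_estimation": 3.0,
    "local_mapping_share": 4.0,
    "rendering": 5.0,
}

def fits_budget(stages: dict, rate_hz: float) -> bool:
    """True if the summed stage costs fit within one frame period."""
    return sum(stages.values()) <= frame_budget_ms(rate_hz)

for rate in (30, 60):
    print(f"{rate} Hz -> {frame_budget_ms(rate):.1f} ms per frame, "
          f"fits: {fits_budget(STAGES_MS, rate)}")
```

With these assumed costs (18 ms total), the pipeline fits the 33.3 ms budget at 30 Hz but not the 16.7 ms budget at 60 Hz. This is one reason systems such as PTAM and ORB-SLAM run map building in a separate thread from per-frame tracking.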

Power efficiency represents another significant goal, particularly for mobile AR applications. As SLAM algorithms are computationally intensive, optimizing their performance for battery-powered devices without sacrificing accuracy remains a persistent challenge. Recent developments focus on lightweight SLAM implementations that can run efficiently on smartphones and AR headsets.

Looking forward, the evolution of SLAM technology for AR aims to achieve persistent mapping capabilities, allowing systems to recognize previously visited locations and update environmental maps over time. This persistence will enable more consistent AR experiences and facilitate shared experiences where multiple users can interact with the same virtual content anchored to physical locations.
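Anchoring is what keeps content attached to a physical location while the map is refined. A common approach (assumed here; platforms differ in detail) stores each content pose relative to an anchor. The anchor's current world pose is then composed with that offset every frame, so when loop closure or relocalization corrects the anchor, the attached content moves with it. The sketch uses 2D poses to keep the arithmetic readable, whereas real systems use 3D rigid transforms. The `Pose2D` type and all values are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Pose2D:
    """Planar rigid transform: rotation by theta (radians), then translation."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self * other, i.e. `other` expressed in self's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            self.theta + other.theta,
        )

# Hypothetical anchor pose in the world map, and a virtual object placed
# 0.5 m from the anchor (stored relative to the anchor, not the world).
anchor_in_world = Pose2D(2.0, 1.0, 0.0)
object_in_anchor = Pose2D(0.5, 0.0, 0.0)
print(anchor_in_world.compose(object_in_anchor))

# An (exaggerated) map correction rotates the anchor by 90 degrees; the object's
# world pose is recomputed from the same stored relative offset.
corrected_anchor = Pose2D(2.0, 1.0, math.pi / 2)
print(corrected_anchor.compose(object_in_anchor))
```

The object's world position moves from (2.5, 1.0) to approximately (2.0, 1.5) without the application editing the object itself. Only the anchor was corrected.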

Market Analysis for SLAM-Enabled AR Applications

The SLAM-enabled AR applications market is experiencing significant growth, driven by the increasing adoption of augmented reality technologies across various industries. The global AR market is projected to reach $88.4 billion by 2026, with SLAM technology serving as a critical enabler for advanced spatial computing applications. This represents a compound annual growth rate of 31.5% from 2021 to 2026, highlighting the robust expansion potential in this sector.
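The stated figures are linked by the standard compound annual growth rate (CAGR) formula, end = start × (1 + CAGR)^years. The sketch below back-calculates the 2021 market size implied by the $88.4 billion 2026 projection and the 31.5% CAGR. The result is derived arithmetic, not a figure reported by the source.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert end = start * (1 + cagr) ** years to recover the starting value."""
    return end_value / (1.0 + cagr) ** years

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures stated in the text: $88.4B in 2026 at a 31.5% CAGR from 2021.
start_2021 = implied_start_value(88.4, 0.315, 2026 - 2021)
print(f"Implied 2021 market size: ${start_2021:.1f}B")
print(f"Round-trip CAGR check: {cagr(start_2021, 88.4, 5):.3f}")
```

The implied 2021 base is roughly $22.5 billion. That means the projection assumes the market roughly quadruples over five years.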

Consumer applications currently account for the largest share of the market, with gaming and retail experiences leading adoption. The gaming sector alone accounts for approximately 40% of AR application revenue, with SLAM technology enabling more immersive and spatially aware experiences. Retail applications come second at about 25% of the market, as businesses implement virtual try-on solutions and interactive shopping experiences that rely on precise spatial mapping.

Enterprise applications are showing the fastest growth trajectory, particularly in manufacturing, healthcare, and education sectors. Manufacturing implementations of SLAM-enabled AR are growing at 38% annually, driven by applications in assembly line instruction, maintenance procedures, and quality control. The healthcare segment is expanding at 35% annually, with surgical navigation and medical training representing key use cases that benefit from SLAM's precise spatial understanding.

Regional analysis reveals North America currently holds the largest market share at 42%, followed by Asia-Pacific at 31% and Europe at 22%. However, the Asia-Pacific region is demonstrating the highest growth rate at 36% annually, fueled by rapid technology adoption in China, Japan, and South Korea, particularly in manufacturing and consumer electronics sectors.

Market demand is increasingly focused on solutions that offer centimeter-level accuracy, low-latency processing (under 20 ms), and power efficiency for mobile devices. This has created distinct market segments based on performance tiers, with high-end solutions commanding premium pricing in professional applications, while consumer-grade implementations prioritize cost-effectiveness and battery efficiency.

Key market drivers include the proliferation of AR-capable smartphones, declining costs of depth sensors, increasing enterprise digital transformation initiatives, and growing consumer acceptance of immersive technologies. Barriers to wider adoption include processing power limitations on mobile devices, battery life constraints, and the need for more intuitive user interfaces that leverage spatial understanding without requiring technical expertise.

The market is expected to undergo significant consolidation over the next three years, as larger technology companies acquire specialized SLAM solution providers to enhance their AR capabilities and secure competitive advantages in the rapidly evolving spatial computing landscape.

Current SLAM Implementation Challenges in AR

Despite significant advancements in SLAM (Simultaneous Localization and Mapping) technology, its implementation in augmented reality systems continues to face substantial challenges. One of the primary obstacles is computational resource constraints. AR applications, particularly on mobile devices, must balance SLAM processing requirements with limited CPU/GPU capabilities while maintaining real-time performance. This creates a fundamental tension between accuracy and efficiency that developers constantly struggle to optimize.

Environmental variability presents another significant challenge. SLAM algorithms perform inconsistently across different lighting conditions, textureless surfaces, reflective materials, and dynamic environments. These variations can cause tracking failures, drift accumulation, and map degradation, severely impacting user experience in AR applications. The unpredictability of real-world environments remains one of the most difficult aspects to address in commercial AR implementations.

Scale and drift management continues to challenge SLAM systems in AR contexts. As users move through environments, accumulated errors in position and orientation estimation can cause virtual content to appear misaligned with the physical world. This drift becomes particularly problematic in large-scale environments or during extended usage sessions, where even minor errors compound over time.
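A toy dead-reckoning model shows why small errors compound. Each frame's relative motion estimate carries a small random heading error, so the accumulated heading error performs a random walk. The resulting position error then grows faster than the distance travelled. The step length, noise level, and seeds below are arbitrary assumptions for illustration.

```python
import math
import random

def simulate_drift(steps: int, step_len_m: float, heading_noise_rad: float,
                   seed: int = 0) -> float:
    """Dead-reckon along a straight line using noisy relative headings and
    return the final position error (metres) versus ground truth."""
    rng = random.Random(seed)
    x = y = 0.0
    heading = 0.0  # estimated heading; the true heading is always 0
    for _ in range(steps):
        # Each frame adds a small independent heading error, so the
        # accumulated heading error performs a random walk.
        heading += rng.gauss(0.0, heading_noise_rad)
        x += step_len_m * math.cos(heading)
        y += step_len_m * math.sin(heading)
    true_x = steps * step_len_m
    return math.hypot(x - true_x, y)

# Assumed: 1 cm of motion per frame and 0.05 degrees of heading noise per frame.
noise = math.radians(0.05)
for steps in (300, 3000, 30000):
    # Average over a few seeds so the trend is not an artefact of one run.
    err = sum(simulate_drift(steps, 0.01, noise, s) for s in range(5)) / 5
    print(f"{steps * 0.01:5.0f} m travelled -> {err:.3f} m mean error")
```

Per-frame noise stays constant in this model, yet relative error still rises with session length. This is why long sessions rely on loop closure and relocalization to bound drift, rather than on better per-frame accuracy alone.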

Feature detection and matching reliability represents another critical challenge. Current SLAM implementations struggle with scenes containing repetitive patterns, homogeneous textures, or sudden changes in viewpoint. These scenarios often lead to incorrect feature associations, resulting in mapping errors that compromise the spatial understanding necessary for convincing AR experiences.
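A common guard against the ambiguous matches produced by repetitive patterns is Lowe's ratio test, originally proposed for SIFT. A match is accepted only if its best descriptor distance is clearly smaller than the second-best. The sketch applies the test to binary descriptors compared by Hamming distance. The 0.8 threshold and the toy 8-bit descriptors are assumptions; real ORB descriptors are 256 bits.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def ratio_test_matches(query: list, train: list, ratio: float = 0.8) -> list:
    """Return (query_idx, train_idx) pairs that pass Lowe's ratio test.

    A query descriptor is matched only if its nearest neighbour in `train`
    is clearly closer than the second nearest; ambiguous matches, typical
    of repetitive textures, are discarded.
    """
    matches = []
    if len(train) < 2:
        return matches
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (best_d, best_i), (second_d, _) = dists[0], dists[1]
        if best_d < ratio * second_d:
            matches.append((qi, best_i))
    return matches

# Toy 8-bit descriptors. Train entries 1 and 2 are identical, mimicking a
# repeated pattern, so a query resembling them is ambiguous.
train = [0b11110000, 0b10101010, 0b10101010, 0b00001111]
query = [0b11110001, 0b10101011]
print(ratio_test_matches(query, train))
```

Query 0 is kept because it matches train entry 0 at distance 1 against a runner-up at 5. Query 1 is rejected because two train entries tie at distance 1. This is exactly the repeated-pattern case that would otherwise produce a wrong association.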

Power consumption remains a significant constraint, especially for wearable AR devices. SLAM algorithms typically require continuous sensor data processing and complex computations that drain battery life rapidly. This limitation restricts the practical deployment of sophisticated SLAM solutions in consumer AR products, forcing developers to implement compromises that affect overall system performance.

Integration challenges between SLAM and other AR subsystems further complicate implementation. SLAM must work seamlessly with rendering engines, interaction systems, and content management frameworks. The lack of standardized interfaces between these components creates integration difficulties and optimization challenges across different hardware platforms and software environments.

Finally, robustness to user behavior presents ongoing difficulties. AR users often move unpredictably, rotate devices rapidly, or transition between dramatically different environments. Current SLAM implementations struggle to maintain tracking stability under these conditions, leading to jarring experiences when virtual content suddenly loses proper positioning or disappears entirely.

Mainstream SLAM Algorithms for AR Systems

01 Visual SLAM techniques for autonomous navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced algorithms handle feature extraction, matching, and optimization to ensure accurate positioning even in dynamic environments. This approach is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- SLAM integration with machine learning and AI: Machine learning and artificial intelligence enhance SLAM systems by improving pattern recognition, prediction capabilities, and adaptability. Neural networks can be trained to identify objects, predict movement trajectories, and optimize mapping processes. These AI-enhanced SLAM systems can better handle complex, changing environments and make more intelligent navigation decisions. The integration allows for more robust performance in challenging conditions where traditional algorithms might fail.

- Multi-sensor fusion for robust SLAM implementation: Multi-sensor fusion combines data from various sensors such as cameras, LiDAR, radar, IMUs, and GPS to create more accurate and reliable SLAM systems. By integrating complementary sensor data, these systems can overcome limitations of individual sensors, such as camera performance in low light or LiDAR range constraints. Sensor fusion algorithms synchronize and weight inputs from different sources to produce comprehensive environmental mapping and precise localization across diverse conditions.

- Real-time SLAM optimization techniques: Real-time optimization techniques for SLAM focus on improving computational efficiency while maintaining accuracy. These methods include loop closure detection to correct accumulated errors, graph-based optimization to refine map consistency, and parallel processing architectures to handle intensive calculations. Advanced algorithms reduce computational complexity through selective feature processing and hierarchical mapping approaches, enabling SLAM to operate effectively on devices with limited processing power.

- SLAM applications in augmented reality and mobile devices: SLAM technology enables compelling augmented reality experiences by accurately placing virtual content in real environments. Mobile device implementations of SLAM use onboard sensors to create spatial maps and track device position without external infrastructure. These systems must balance accuracy with power consumption and processing limitations of consumer devices. Optimized algorithms allow for persistent AR experiences where virtual objects maintain proper position and scale relative to the physical world even as users move around.
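Most feature-based visual SLAM systems judge how well a pose and map explain the images through reprojection error. A 3D map point is transformed into the camera frame, projected through the camera model, and compared with the pixel where its matched feature was detected. Bundle adjustment minimizes the sum of these squared errors over poses and points. The sketch below assumes an ideal pinhole camera with no lens distortion and made-up intrinsics.

```python
import math

def project(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates (metres) to pixel coordinates
    with an ideal pinhole model (no lens distortion)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)

def transform(R, t, p):
    """Apply a rigid transform p_cam = R @ p_world + t (R as 3x3 nested lists)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def reprojection_error(R, t, landmark_world, observed_px, K):
    """Pixel distance between where a map point should appear and where its
    matched feature was actually detected."""
    u, v = project(transform(R, t, landmark_world), *K)
    return math.hypot(u - observed_px[0], v - observed_px[1])

# Hypothetical intrinsics (fx, fy, cx, cy) for a 640x480 camera.
K = (500.0, 500.0, 320.0, 240.0)
R_identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
landmark = (0.2, -0.1, 2.0)
# Feature observed from the identity pose, then re-checked from a pose
# estimate that is off by 1 cm along x.
obs = project(landmark, *K)
print(reprojection_error(R_identity, (0.0, 0.0, 0.0), landmark, obs, K))
print(reprojection_error(R_identity, (0.01, 0.0, 0.0), landmark, obs, K))
```

A 1 cm pose error shows up as a 2.5-pixel residual for a point 2 m away (500 × 0.01 / 2). The optimizer reduces residuals of this kind when it refines poses and map points.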

02 Machine learning integration with SLAM systems

Machine learning algorithms are being integrated with SLAM systems to enhance mapping accuracy and object recognition capabilities. Neural networks can improve feature detection, classification of environmental elements, and prediction of movement patterns. These AI-enhanced SLAM systems can better handle challenging scenarios like changing lighting conditions or dynamic obstacles. The combination of traditional SLAM techniques with deep learning approaches results in more robust localization and mapping solutions.

03 SLAM for augmented and virtual reality applications

SLAM technology enables precise tracking for augmented and virtual reality experiences by mapping physical spaces and accurately positioning virtual content within them. These systems process sensor data to create spatial anchors that allow virtual objects to maintain consistent positions relative to the real world. The technology supports immersive experiences by enabling virtual elements to interact naturally with physical surroundings, creating convincing mixed reality environments for gaming, education, and industrial applications.

04 Multi-sensor fusion approaches for robust SLAM

Multi-sensor fusion approaches combine data from various sensors like cameras, LiDAR, IMUs, and radar to create more robust SLAM systems. By integrating complementary sensor information, these systems can overcome limitations of individual sensors, such as camera performance in low light or LiDAR challenges with reflective surfaces. Sensor fusion algorithms synchronize and weight inputs based on reliability, creating comprehensive environmental models that maintain accuracy across diverse conditions and scenarios.

05 Optimization techniques for real-time SLAM performance

Optimization techniques focus on improving computational efficiency to enable real-time SLAM performance on resource-constrained devices. These approaches include sparse mapping methods that selectively process key features, loop closure detection to correct accumulated errors, and parallel processing architectures that distribute computational loads. Advanced algorithms minimize memory usage while maintaining mapping accuracy, allowing SLAM systems to operate effectively on mobile devices, embedded systems, and other platforms with limited processing capabilities.
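Loop closure correction can be illustrated with the smallest possible pose graph: a 1D chain of poses linked by odometry constraints, plus one loop-closure constraint back to the start. Solving the weighted least-squares problem spreads the accumulated error over the whole chain instead of moving only the last pose. Real systems optimize 3D (SE(3) or Sim(3)) poses with sparse nonlinear solvers such as g2o, GTSAM, or Ceres. This linear 1D version, with made-up measurements and weights, only demonstrates the principle.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_chain(edges, num_poses):
    """Weighted linear least squares over 1D poses, with pose 0 fixed at 0.

    Each edge (i, j, measured, weight) encodes the constraint x_j - x_i = measured.
    Builds the normal equations H x = g and solves them.
    """
    n = num_poses - 1  # unknowns are x_1 .. x_{num_poses - 1}
    H = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    for i, j, measured, w in edges:
        # Residual x_j - x_i - measured has derivative -1 w.r.t. x_i and +1
        # w.r.t. x_j; the fixed pose 0 contributes no unknown.
        terms = [(k - 1, s) for k, s in ((i, -1.0), (j, 1.0)) if k != 0]
        for a, sa in terms:
            g[a] += w * sa * measured
            for c, sc in terms:
                H[a][c] += w * sa * sc
    return [0.0] + solve_linear(H, g)


# Four odometry steps each measured as 1.0 m (weight 1), plus a trusted
# loop-closure constraint saying pose 4 is actually 3.6 m from the start.
odometry = [(k, k + 1, 1.0, 1.0) for k in range(4)]
loop_closure = [(0, 4, 3.6, 100.0)]
print("odometry only:", [f"{x:.2f}" for x in optimize_pose_chain(odometry, 5)])
print("with loop:    ", [f"{x:.2f}" for x in optimize_pose_chain(odometry + loop_closure, 5)])
```

The 0.4 m discrepancy ends up spread almost evenly across the four odometry edges, each of which shrinks to about 0.90 m. This is the behaviour that keeps the whole map consistent after a loop is detected.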

Leading Companies in SLAM-AR Ecosystem

The SLAM (Simultaneous Localization and Mapping) technology in augmented reality systems is currently in a growth phase, with the market expected to expand significantly as AR applications proliferate across consumer and enterprise sectors. Major players like Sony, Microsoft, Qualcomm, and Samsung are driving technological advancement through mature SLAM implementations, while emerging companies such as Auki Labs, Hiscene, and Chengdu Ideal Realm Technology are introducing innovative approaches. Academic institutions including Zhejiang University and the Institute of Automation Chinese Academy of Sciences contribute fundamental research. The competitive landscape features both hardware manufacturers (BOE, GoerTek) and software developers (SenseTime, Tencent) creating integrated solutions. As SLAM technology matures from experimental to commercial applications, we're seeing increased focus on real-time performance, accuracy, and power efficiency to enable seamless AR experiences.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed an integrated SLAM solution for AR applications across their mobile devices and upcoming AR glasses. Their approach combines visual SLAM with sensor fusion from IMUs and depth sensors when available. Samsung's SLAM technology employs a hierarchical mapping system that creates both detailed local maps for immediate interaction and sparse global maps for extended navigation. Their system features real-time surface reconstruction that enables virtual objects to interact naturally with the physical environment, including occlusion and physics-based interactions. Samsung has implemented efficient feature extraction and tracking algorithms optimized for their Exynos processors, balancing performance with power consumption. Their SLAM solution includes relocalization capabilities that allow AR experiences to persist across sessions, with users able to return to previously mapped environments. Samsung's technology incorporates cloud-based map sharing for collaborative AR experiences, while maintaining privacy through selective data sharing. Their system also features adaptive processing that adjusts computational resources based on scene complexity and device capabilities.

Strengths: Well-integrated with Samsung's ecosystem, good balance of performance and power efficiency, and strong hardware integration across diverse devices. Weaknesses: Best performance limited to Samsung's premium devices, and some advanced features may not be available on older hardware.

QUALCOMM, Inc.

Technical Solution: Qualcomm has developed a comprehensive SLAM solution for AR through their Snapdragon XR platform. Their approach integrates visual-inertial SLAM with their dedicated AI processing units to deliver efficient and accurate spatial tracking. Qualcomm's SLAM technology utilizes a multi-sensor fusion approach that combines camera data, IMU readings, and when available, depth information to create robust environmental mapping. Their system features a hardware-accelerated feature detection and tracking pipeline that minimizes power consumption while maintaining high performance. Qualcomm has implemented a distributed SLAM architecture that can offload certain processing tasks to edge computing resources when available, further enhancing performance. Their technology includes advanced drift correction mechanisms and relocalization capabilities that allow AR applications to maintain spatial consistency over extended periods. Qualcomm's SLAM solution also incorporates semantic understanding of environments, enabling more contextual and interactive AR experiences by recognizing surfaces, objects, and spaces.

Strengths: Highly optimized for mobile processors, excellent power efficiency, and widespread adoption across multiple device manufacturers. Weaknesses: Performance can vary across different device implementations, and advanced features may require newer hardware generations.

Key Patents and Research in Visual-Inertial SLAM

Place recognition algorithm

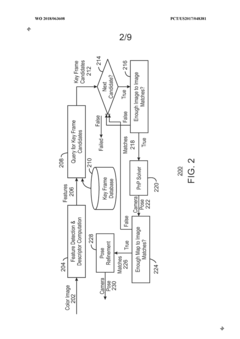

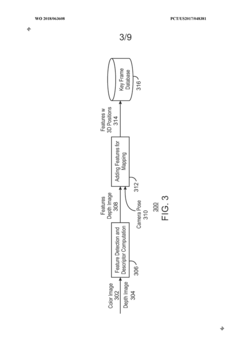

Patent WO2018063608A1

Innovation

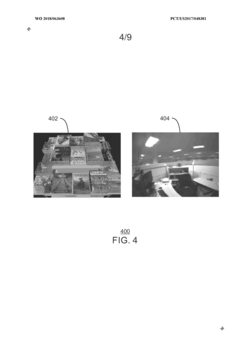

- A place recognition algorithm that first extracts a small subset of candidate keyframes and then performs pairwise matching in a two-stage process. Keyframes are added based solely on image content, and the use of ORB binary features with a hierarchical bag-of-words model enables real-time camera pose determination.
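The two-stage idea can be sketched as below: a cheap global similarity check shortlists candidate keyframes, and expensive pairwise descriptor matching runs on the shortlist only. This is not the patented method. The flat visual-word histograms stand in for a hierarchical vocabulary. The other elements are also assumptions for illustration: the L1-based score (the form used by DBoW2-style vocabularies), the Hamming threshold, the toy 8-bit descriptors, and the keyframe data.

```python
from collections import Counter

def bow_score(words_a, words_b):
    """L1-based similarity of two L1-normalised bag-of-words histograms
    (1.0 = identical word distributions, 0.0 = no words in common)."""
    ha, hb = Counter(words_a), Counter(words_b)
    na, nb = sum(ha.values()), sum(hb.values())
    l1 = sum(abs(ha[k] / na - hb[k] / nb) for k in set(ha) | set(hb))
    return 1.0 - 0.5 * l1

def hamming(a, b):
    return bin(a ^ b).count("1")

def count_matches(desc_a, desc_b, max_dist):
    """Count descriptors in A that have a neighbour in B within max_dist bits."""
    return sum(1 for d in desc_a if min(hamming(d, e) for e in desc_b) <= max_dist)

def recognize_place(query, keyframes, top_k=2, max_dist=1, min_matches=2):
    """Return the id of the best-matching keyframe, or None.

    `query` and each keyframe are dicts with 'words' (visual word ids) and
    'desc' (binary descriptors as ints); both are hypothetical stand-ins for
    ORB features quantised by a vocabulary tree.
    """
    # Stage 1: shortlist keyframes by bag-of-words similarity (cheap).
    ranked = sorted(keyframes, key=lambda kf: bow_score(query["words"], kf["words"]),
                    reverse=True)[:top_k]
    # Stage 2: pairwise descriptor matching on the shortlist only (expensive).
    best_id, best_count = None, 0
    for kf in ranked:
        n = count_matches(query["desc"], kf["desc"], max_dist)
        if n >= min_matches and n > best_count:
            best_id, best_count = kf["id"], n
    return best_id

keyframes = [
    {"id": "kf_kitchen", "words": [1, 1, 2, 3], "desc": [0b1100_0011, 0b1010_1010, 0b0000_1111]},
    {"id": "kf_hallway", "words": [4, 5, 5, 6], "desc": [0b1111_0000, 0b0011_0011, 0b0101_0101]},
    {"id": "kf_office", "words": [1, 2, 7, 8], "desc": [0b1000_0001, 0b0110_0110, 0b1110_0111]},
]
query = {"words": [1, 2, 3, 3], "desc": [0b1100_0010, 0b1010_1011, 0b1111_1111]}
print(recognize_place(query, keyframes))
```

In this toy data the hallway keyframe shares no visual words with the query, so it never reaches the costly matching stage. The kitchen keyframe then wins with two descriptor matches.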

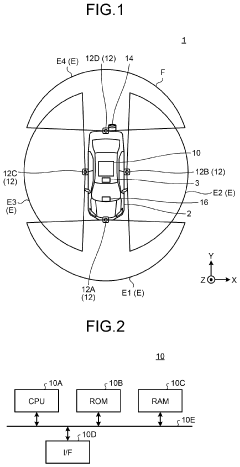

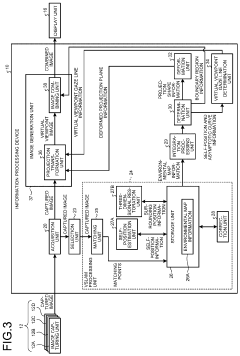

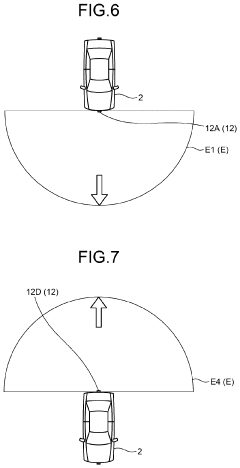

Information processing device, information processing method, and information processing program

Patent (pending) US20240144499A1

Innovation

- An information processing device equipped with multiple image capturing units that capture images in different directions. Point cloud information from the front and rear VSLAM processing is aligned and integrated into a stable, comprehensive environmental map, and the alignment step also corrects positional information.
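Combining point clouds from two VSLAM pipelines requires estimating the rigid transform between their coordinate frames. A standard tool for this, assumed here rather than taken from the patent, is least-squares rigid alignment of corresponding points (the Kabsch/Umeyama method). The 2D version below has a closed-form rotation angle. The correspondences and the test transform are made up for illustration.

```python
import math

def align_2d(src, dst):
    """Least-squares rigid transform (theta, tx, ty) mapping src points onto
    dst points, given one-to-one correspondences (2D Kabsch solution)."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The angle maximising sum(b . R a) is atan2(sum of crosses, sum of dots).
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def transform_points(theta, tx, ty, pts):
    """Rotate by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]

# Hypothetical landmarks seen by the rear pipeline, and the same landmarks in
# the front pipeline's frame (rotated 30 degrees and shifted).
rear = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (-0.5, 1.0)]
front = transform_points(math.radians(30), 0.4, -0.2, rear)
theta, tx, ty = align_2d(rear, front)
print(f"theta={math.degrees(theta):.1f} deg, t=({tx:.2f}, {ty:.2f})")
```

With noise-free correspondences, the sketch recovers the 30-degree rotation and the (0.4, -0.2) shift exactly. Once the transform is known, the rear cloud can be mapped into the front frame and merged. Real data adds noise and outliers, so this step is usually wrapped in a robust scheme such as RANSAC.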

Hardware Requirements for SLAM-Based AR Systems

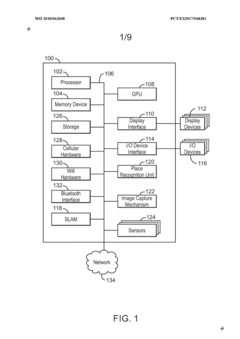

Effective SLAM-based AR systems require specialized hardware configurations to deliver seamless integration of virtual content with the real world. The primary sensing components include cameras, which serve as the fundamental visual input devices. Monocular cameras offer cost-effectiveness but struggle with absolute scale determination, while stereo camera setups provide depth perception through triangulation, enhancing spatial mapping accuracy. Depth cameras, including structured light and time-of-flight sensors, directly capture depth information, significantly improving 3D reconstruction capabilities in varying lighting conditions.
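The difference between monocular and stereo setups comes down to triangulation. In a rectified stereo pair, depth follows from disparity as Z = f * B / d, where f is the focal length in pixels, B the baseline, and d the disparity in pixels. A single moving camera can also triangulate, but the distance it moved between views (its effective baseline) is not measured, so depth is known only up to scale. The focal length, baseline, and disparity error below are assumed values.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (metres) of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (point at finite depth)")
    return focal_px * baseline_m / disparity_px

def depth_uncertainty(focal_px, baseline_m, depth_m, disparity_err_px=0.5):
    """First-order depth error from a disparity error: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px

# Assumed: 500 px focal length and a 6 cm baseline (a compact headset-like rig).
F, B = 500.0, 0.06
for d in (30.0, 10.0, 3.0):
    z = stereo_depth(F, B, d)
    print(f"disparity {d:4.1f} px -> depth {z:.2f} m "
          f"(+/- {depth_uncertainty(F, B, z):.2f} m)")
```

Depth error grows with the square of distance, so a small-baseline stereo rig is accurate up close but degrades quickly for distant surfaces. Direct depth sensors help in exactly this regime.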

Inertial Measurement Units (IMUs) represent another critical hardware component, combining accelerometers, gyroscopes, and sometimes magnetometers to track device orientation and movement. The fusion of visual and inertial data through visual-inertial odometry (VIO) substantially improves tracking robustness during rapid movements and in visually challenging environments. High-quality IMUs with low drift characteristics are essential for maintaining accurate pose estimation over extended usage periods.
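A deliberately simplified 1D Kalman filter shows the benefit of visual-inertial fusion. Integrating a biased accelerometer makes position error grow with the square of time, while periodic camera position fixes pull the estimate back. This toy linear model is not a VIO implementation. The bias, noise density, fix rate, and fix accuracy are assumptions chosen for illustration.

```python
def track_stationary_device(duration_s=5.0, dt=0.01, accel_bias=0.05,
                            accel_noise=0.1, fix_every_s=None, fix_std=0.02):
    """Estimate the position of a device that is actually stationary at x = 0.

    The IMU reports the true acceleration (zero) plus a constant bias, which is
    integrated in a 2-state (position, velocity) Kalman filter. If `fix_every_s`
    is set, a camera position fix (noise-free here, but modelled with `fix_std`)
    is fused at that interval. Returns the absolute position error at the end.
    """
    x, v = 0.0, 0.0
    p00, p01, p11 = 0.0, 0.0, 0.0        # symmetric 2x2 covariance
    qc = accel_noise ** 2                # continuous white-noise acceleration
    steps = int(round(duration_s / dt))
    fix_interval = int(round(fix_every_s / dt)) if fix_every_s else 0
    for k in range(1, steps + 1):
        a = 0.0 + accel_bias             # true acceleration (0) plus IMU bias
        # Predict with constant-acceleration kinematics (F = [[1, dt], [0, 1]]).
        x += v * dt + 0.5 * a * dt * dt
        v += a * dt
        p00 += 2 * dt * p01 + dt * dt * p11 + qc * dt ** 3 / 3
        p01 += dt * p11 + qc * dt ** 2 / 2
        p11 += qc * dt
        # Update with a camera position fix (H = [1, 0]).
        if fix_interval and k % fix_interval == 0:
            s = p00 + fix_std ** 2                 # innovation variance
            k_pos, k_vel = p00 / s, p01 / s        # Kalman gain
            innovation = 0.0 - x                   # measured position is 0
            x += k_pos * innovation
            v += k_vel * innovation
            p00, p01, p11 = ((1 - k_pos) * p00, (1 - k_pos) * p01,
                             p11 - k_vel * p01)
    return abs(x)

print(f"IMU only:     {track_stationary_device():.3f} m")
print(f"IMU + camera: {track_stationary_device(fix_every_s=0.5):.3f} m")
```

The IMU-only error matches the closed form 0.5 × bias × t² = 0.625 m after 5 s. The fused estimate stays far smaller because each camera fix also corrects the velocity state through the position-velocity cross-covariance.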

Processing hardware constitutes the computational backbone of SLAM-based AR systems. Mobile AR applications typically rely on energy-efficient ARM processors with dedicated neural processing units (NPUs) to handle real-time SLAM computations while managing power consumption. More sophisticated AR experiences may leverage GPU acceleration to process complex environmental data and render high-fidelity augmentations simultaneously. The hardware must balance computational capability with thermal management and power efficiency to maintain sustained performance.

Display technology is another critical hardware element. Optical see-through displays (like those in Microsoft HoloLens) offer direct real-world visibility overlaid with digital content, while video see-through displays (common in smartphone AR) present a camera feed augmented with virtual elements. The display's field of view, resolution, and refresh rate significantly impact the immersiveness and utility of the AR experience.

Connectivity components enable multi-user AR experiences and cloud-assisted processing. Low-latency wireless connections facilitate offloading computationally intensive SLAM operations to edge servers, expanding the capabilities beyond what on-device processing alone could achieve. Additionally, specialized hardware such as depth-sensing LiDAR modules, found in newer tablets and smartphones, provides precise environmental mapping capabilities that enhance SLAM accuracy and speed.
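Whether offloading pays off is a latency-budget question. The remote path must cover upload, network round trip, and server compute within the same deadline the local path has to meet. The sketch compares the two paths. The `OffloadCase` type and all numbers (payload size, uplink rate, RTT, compute times) are hypothetical, not measurements.

```python
from dataclasses import dataclass

@dataclass
class OffloadCase:
    payload_kb: float   # data sent per request (e.g. a compressed keyframe)
    uplink_mbps: float  # available uplink bandwidth
    rtt_ms: float       # network round-trip time
    server_ms: float    # processing time on the edge server
    local_ms: float     # processing time on the device

    def remote_ms(self) -> float:
        """Upload time + round trip + server compute (result assumed small)."""
        upload_ms = self.payload_kb * 8 / self.uplink_mbps  # kbit / (Mbit/s) = ms
        return upload_ms + self.rtt_ms + self.server_ms

    def choose(self, deadline_ms: float) -> str:
        """Pick the faster path that meets the deadline, else 'defer'."""
        options = [(self.local_ms, "local"), (self.remote_ms(), "remote")]
        feasible = [o for o in options if o[0] <= deadline_ms]
        return min(feasible)[1] if feasible else "defer"

# A heavy map-optimization task versus a light per-frame tracking task.
heavy = OffloadCase(payload_kb=50, uplink_mbps=20, rtt_ms=15, server_ms=25, local_ms=120)
light = OffloadCase(payload_kb=50, uplink_mbps=20, rtt_ms=15, server_ms=25, local_ms=8)
print(heavy.remote_ms(), heavy.choose(100), heavy.choose(33), light.choose(33))
```

Under these assumptions the heavy task (60 ms remote versus 120 ms local) is worth offloading under a 100 ms deadline. Neither path meets a 33 ms per-frame deadline, and per-frame tracking stays on the device. This matches the common split of keeping tracking local and offloading only slower map-level work.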

The miniaturization trend continues to drive hardware evolution, with manufacturers working to integrate increasingly powerful components into smaller, lighter form factors suitable for wearable AR devices. This progression toward compact yet capable hardware represents one of the most significant engineering challenges in advancing SLAM-based AR toward mainstream adoption.

Cross-Platform SLAM Standardization Efforts

The standardization of SLAM technologies across different platforms represents a critical frontier in advancing augmented reality systems. Industry leaders including Microsoft, Google, Apple, and Meta have recognized that fragmentation in SLAM implementations creates significant barriers to widespread AR adoption. These efforts aim to establish common frameworks, APIs, and data exchange formats that enable SLAM algorithms to function consistently across diverse hardware configurations and operating systems.

The OpenXR standard, managed by the Khronos Group, has emerged as a promising initiative in this space. Its core specification defines tracked spaces and pose queries, and extensions add environment-understanding features such as plane detection and scene understanding, which together address cross-platform SLAM functionality. By providing a unified interface for developers, OpenXR reduces the complexity of implementing AR applications that must operate across multiple device ecosystems while maintaining consistent spatial awareness capabilities.

Another significant development is the AR Common API initiative, which focuses specifically on standardizing how SLAM systems interact with applications. This effort seeks to abstract the underlying SLAM implementation details, allowing developers to write code once that can leverage platform-specific SLAM capabilities without modification. The initiative has gained traction among major technology companies seeking to expand the AR application ecosystem.
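The write-once idea behind such abstraction layers can be illustrated with an interface that application code depends on, with per-platform adapters behind it. This sketch is not the API of OpenXR or of the AR Common API initiative; `SlamBackend`, `ReplayBackend`, `Pose`, and `place_label` are hypothetical names chosen for illustration, and the (x, y, z, w) quaternion order and +y-up convention are assumptions.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Pose:
    position: Tuple[float, float, float]            # metres, world frame
    orientation: Tuple[float, float, float, float]  # unit quaternion (x, y, z, w)


class SlamBackend(ABC):
    """Hypothetical platform-neutral interface; each platform supplies an adapter."""

    @abstractmethod
    def tracking_ok(self) -> bool:
        """True while the underlying tracker reports a usable pose."""

    @abstractmethod
    def latest_pose(self) -> Optional[Pose]:
        """Most recent device pose, or None if unavailable."""


class ReplayBackend(SlamBackend):
    """Hypothetical adapter that replays recorded poses (useful for testing).
    A production adapter would wrap a vendor SDK or an OpenXR runtime instead."""

    def __init__(self, poses: Iterable[Pose]) -> None:
        self._poses = list(poses)
        self._index = 0

    def tracking_ok(self) -> bool:
        return self._index < len(self._poses)

    def latest_pose(self) -> Optional[Pose]:
        if not self.tracking_ok():
            return None
        pose = self._poses[self._index]
        self._index += 1
        return pose


def place_label(backend: SlamBackend, height: float = 0.3) -> Optional[Tuple[float, float, float]]:
    """Application code written once against the interface: anchor a label
    `height` metres above the device (world +y assumed to be up)."""
    if not backend.tracking_ok():
        return None  # degrade gracefully instead of drawing at a stale pose
    pose = backend.latest_pose()
    if pose is None:
        return None
    x, y, z = pose.position
    return (x, y + height, z)


if __name__ == "__main__":
    backend = ReplayBackend([Pose((0.0, 1.5, 0.0), (0.0, 0.0, 0.0, 1.0))])
    print(place_label(backend, height=0.5))  # (0.0, 2.0, 0.0)
    print(place_label(backend))              # None: replay exhausted
```

Because `place_label` sees only `SlamBackend`, swapping the replay adapter for one backed by a real platform SDK would leave the application logic unchanged, which is the property these standardization efforts aim for.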

Open-source efforts such as OpenSLAM.org, which hosts the source code of many published SLAM algorithms, and the ORB-SLAM family of systems have also contributed to standardization by providing reference implementations that can be built on multiple platforms. These implementations serve as baselines against which proprietary SLAM systems can be measured and offer a foundation for companies developing their own cross-platform solutions.
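Measuring a SLAM system against a reference implementation usually relies on trajectory metrics. One common choice is absolute trajectory error (ATE), reported as the root-mean-square of per-pose position errors. Below is a minimal sketch, assuming the estimated and ground-truth positions are already time-associated and expressed in the same frame; established evaluation tools also align the trajectories (rigidly, or with scale for monocular systems) before computing the error, a step omitted here. `ate_rmse` and the sample trajectories are hypothetical.

```python
import math
from typing import Sequence, Tuple

Position = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Position], ground_truth: Sequence[Position]) -> float:
    """Root-mean-square absolute trajectory error (translation only).

    Assumes both trajectories are already time-associated (one estimate per
    ground-truth sample) and expressed in the same frame; no alignment is done.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = 0.0
    for est, gt in zip(estimated, ground_truth):
        squared += sum((e - g) ** 2 for e, g in zip(est, gt))  # squared Euclidean error
    return math.sqrt(squared / len(estimated))


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.4)]
    print(f"ATE RMSE: {ate_rmse(est, gt):.3f} m")  # ATE RMSE: 0.289 m
```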

Hardware manufacturers have begun collaborating on sensor standardization efforts that directly impact SLAM performance. The establishment of common specifications for depth sensors, IMUs, and cameras facilitates more consistent SLAM behavior across devices. This hardware-level standardization complements software API efforts by ensuring that the underlying data feeding into SLAM algorithms maintains comparable quality and characteristics regardless of device manufacturer.
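Part of what sensor standardization offers is that SLAM code can consume a single device-independent sample format. The sketch below shows only the simplest layer of that, unit normalization, assuming hypothetical vendors that report timestamps in nanoseconds or seconds, angular rate in deg/s or rad/s, and acceleration in g or m/s². The unit labels, `ImuSample`, and `normalize_imu` are illustrative rather than part of any published specification; a real specification would likely also need to cover noise density, bias stability, axis conventions, and timestamp synchronization.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

STANDARD_GRAVITY = 9.80665  # m/s^2, conventional value of 1 g

# Hypothetical vendor unit labels mapped to SI scale factors.
TIME_SCALE = {"s": 1.0, "ms": 1e-3, "us": 1e-6, "ns": 1e-9}
GYRO_SCALE = {"rad/s": 1.0, "deg/s": math.pi / 180.0}
ACCEL_SCALE = {"m/s^2": 1.0, "g": STANDARD_GRAVITY}


@dataclass(frozen=True)
class ImuSample:
    """Device-independent IMU sample in SI units."""
    t_s: float
    gyro_rad_s: Tuple[float, float, float]
    accel_m_s2: Tuple[float, float, float]


def normalize_imu(
    t: float,
    gyro: Sequence[float],
    accel: Sequence[float],
    *,
    time_unit: str,
    gyro_unit: str,
    accel_unit: str,
) -> ImuSample:
    """Convert one raw vendor sample to SI units; unknown unit labels raise KeyError."""
    ts, gs, acs = TIME_SCALE[time_unit], GYRO_SCALE[gyro_unit], ACCEL_SCALE[accel_unit]
    gx, gy, gz = gyro
    ax, ay, az = accel
    return ImuSample(t * ts, (gx * gs, gy * gs, gz * gs), (ax * acs, ay * acs, az * acs))


if __name__ == "__main__":
    # A device at rest, rotating about x at 90 deg/s, as reported by a hypothetical vendor.
    sample = normalize_imu(1_500_000_000, (90.0, 0.0, 0.0), (0.0, 0.0, 1.0),
                           time_unit="ns", gyro_unit="deg/s", accel_unit="g")
    print(sample)
```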

Challenges remain in balancing standardization with innovation. Companies must navigate competitive interests while recognizing that a fragmented ecosystem ultimately limits market growth. Recent industry consortiums have formed to address this tension, working to define core SLAM capabilities that should be standardized while leaving room for proprietary advancements in areas like efficiency and accuracy.
