SLAM Algorithms For High-Speed Autonomous Driving

SEP 5, 2025 · 9 MIN READ

SLAM Evolution and Objectives in Autonomous Driving

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, transforming from theoretical concepts to practical applications across various domains. In autonomous driving, SLAM has become a cornerstone technology enabling vehicles to navigate complex environments by simultaneously building maps and localizing themselves within these maps. The evolution of SLAM in autonomous driving has been characterized by increasing sophistication in algorithms, sensor integration, and computational efficiency.

Early SLAM implementations relied heavily on extended Kalman filters and particle filters, which were computationally intensive and limited in scalability. The mid-2000s saw the emergence of graph-based optimization techniques, which significantly improved accuracy and efficiency. This period also marked the beginning of visual SLAM systems that could operate using camera inputs alone, reducing dependency on expensive LiDAR sensors.

The integration of deep learning with SLAM, beginning around 2015, has further advanced the field by improving feature extraction, loop closure detection, and semantic understanding of environments. Modern SLAM systems also use multi-sensor fusion, combining data from cameras, LiDAR, radar, and inertial measurement units to achieve robust performance across diverse conditions.

For high-speed autonomous driving specifically, SLAM algorithms have evolved to address unique challenges including rapid scene changes, motion blur, and the need for real-time processing with minimal latency. The development trajectory has moved toward algorithms capable of processing sensor data at frequencies exceeding 100 Hz while maintaining sub-meter localization accuracy at highway speeds.

The primary objectives of contemporary SLAM research for autonomous driving include achieving centimeter-level localization accuracy, robust performance in adverse weather and lighting conditions, and efficient operation on embedded automotive-grade hardware platforms. There is also increasing focus on developing SLAM systems that can handle dynamic environments where objects are constantly moving, a critical requirement for urban driving scenarios.

Long-term mapping and localization represent another key objective, enabling vehicles to recognize previously visited locations despite seasonal changes, construction, or other environmental modifications. This capability is essential for building and maintaining high-definition maps that serve as the foundation for Level 4 and Level 5 autonomous driving systems.

As the industry progresses toward fully autonomous vehicles, SLAM algorithms are increasingly being designed with safety certification in mind, incorporating redundancy, fault detection, and graceful degradation capabilities to ensure reliable operation even when individual sensors or processing components fail.

Market Analysis for High-Speed SLAM Applications

The global market for high-speed SLAM (Simultaneous Localization and Mapping) technologies in autonomous driving is experiencing rapid growth, driven by increasing investments in self-driving vehicles and advanced driver assistance systems (ADAS). Current market valuations place the autonomous vehicle technology sector at approximately $54 billion in 2023, with projections indicating growth to $220 billion by 2030, representing a CAGR of 22.3%.
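The quoted growth rate can be checked with the standard compound-annual-growth-rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the $54 billion (2023) and $220 billion (2030) figures. It gives roughly 22.2%, which is consistent with the stated 22.3% once rounding in the underlying market figures is allowed for.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market figures quoted above (USD billions), 2023 -> 2030.
rate = cagr(54.0, 220.0, 2030 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```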

Within this broader market, high-speed SLAM applications specifically are emerging as a critical component, estimated to account for 18% of the total autonomous driving technology market. This segment is expected to grow at an accelerated rate of 28% annually through 2028, outpacing the broader market due to increasing demand for reliable navigation systems capable of operating at highway speeds.

Regional analysis reveals North America currently leads the market with 42% share, followed by Europe (31%) and Asia-Pacific (24%). However, the Asia-Pacific region, particularly China, is demonstrating the fastest growth trajectory with annual expansion rates exceeding 30%, driven by substantial government support and aggressive commercial deployment strategies.

Key market segments for high-speed SLAM applications include passenger vehicles (58%), commercial trucks (22%), robotaxis (12%), and specialized industrial applications (8%). The passenger vehicle segment dominates due to premium automakers increasingly incorporating Level 2+ and Level 3 autonomous features in their flagship models.

Consumer demand patterns indicate strong interest in safety-enhancing features, with 76% of new vehicle buyers expressing willingness to pay premium prices for advanced autonomous capabilities. Fleet operators similarly show growing adoption rates, with 64% planning to incorporate vehicles with high-speed autonomous capabilities within the next five years to reduce operational costs and improve safety metrics.

Market challenges include price sensitivity among mass-market consumers, with current implementation costs for high-performance SLAM systems ranging from $2,000 to $8,000 per vehicle. This represents a significant barrier to widespread adoption in mid-range vehicle segments. Additionally, regulatory frameworks across different regions show varying levels of readiness for high-speed autonomous operation, creating market fragmentation.

Industry forecasts suggest that as sensor costs decline and computational efficiency improves, the addressable market for high-speed SLAM will expand significantly. By 2027, penetration rates in premium vehicles are expected to reach 65%, while mid-range vehicle adoption is projected to grow from current single-digit percentages to approximately 28%.

Current SLAM Challenges in Dynamic Environments

SLAM (Simultaneous Localization and Mapping) systems face significant challenges when deployed in dynamic environments, particularly for high-speed autonomous driving applications. The primary difficulty stems from the fundamental assumption in traditional SLAM algorithms that the environment remains static during mapping. This assumption breaks down in real-world driving scenarios where moving objects such as vehicles, pedestrians, and cyclists constantly alter the environment.

Dynamic objects create several technical complications for SLAM systems. First, they introduce outliers in sensor measurements that can corrupt the map building process and lead to localization errors. When a moving object is incorrectly incorporated into the environmental map, it creates "ghost" features that don't exist in reality, degrading mapping accuracy and subsequent localization performance.

The high-speed nature of autonomous driving compounds these challenges. At higher velocities, the density of sensor data per distance traveled decreases, while motion blur increases. This results in fewer reliable features for tracking and higher uncertainty in feature extraction. Additionally, the real-time processing requirements become more stringent as the vehicle's speed increases, demanding algorithms that can operate with minimal latency.
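The effect of speed on data density is simple arithmetic. The Python sketch below computes how far the vehicle travels between consecutive sensor frames and approximates the image blur of a static point under pure forward motion with a pinhole camera. The 10 Hz scan rate, 1000-pixel focal length, 10 ms exposure, and point 3 m to the side at 10 m depth are illustrative assumptions, not values from this report.

```python
def metres_per_frame(speed_kmh: float, rate_hz: float) -> float:
    """Distance the vehicle travels between two consecutive sensor frames."""
    return (speed_kmh / 3.6) / rate_hz


def forward_blur_px(focal_px: float, lateral_m: float, depth_m: float,
                    speed_kmh: float, exposure_s: float) -> float:
    """Approximate image-space blur of a static point during one exposure.

    Pinhole model with pure forward motion along the optical axis: a point at
    lateral offset X and depth Z projects to u = f * X / Z, and during the
    exposure its depth shrinks by v * t_exp.
    """
    travel = speed_kmh / 3.6 * exposure_s
    if travel >= depth_m:
        raise ValueError("the point would be passed during the exposure")
    return focal_px * lateral_m * (1.0 / (depth_m - travel) - 1.0 / depth_m)


for kmh in (30.0, 80.0, 130.0):
    gap = metres_per_frame(kmh, rate_hz=10.0)
    blur = forward_blur_px(1000.0, 3.0, 10.0, kmh, exposure_s=0.01)
    print(f"{kmh:5.0f} km/h: {gap:4.2f} m between 10 Hz frames, {blur:5.2f} px blur")
```

With these assumptions the vehicle covers about 3.6 m between scans at 130 km/h, and the chosen point smears across roughly 11 pixels, compared with under 3 pixels at 30 km/h.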

Multi-sensor fusion, while beneficial for robustness, introduces its own set of challenges in dynamic environments. Different sensors have varying sampling rates and detection capabilities for moving objects. Synchronizing and aligning data from cameras, LiDAR, radar, and other sensors becomes particularly difficult when objects are moving rapidly relative to the vehicle.
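Temporal alignment is a common first step: measurements from one stream are interpolated to the timestamp of another. At 30 m/s, a 5 ms timing mismatch already corresponds to 15 cm of travel. The minimal Python sketch below linearly interpolates buffered positions to a camera timestamp. It assumes a shared, already-synchronized clock and handles positions only; orientation would need spherical interpolation.

```python
from bisect import bisect_left


def interpolate_position(stamps, positions, query_t):
    """Linearly interpolate a 3D position at query_t from time-sorted samples.

    stamps: ascending timestamps in seconds; positions: matching (x, y, z).
    """
    if not stamps[0] <= query_t <= stamps[-1]:
        raise ValueError("query time outside the buffered interval")
    i = bisect_left(stamps, query_t)          # first sample with stamp >= query_t
    if stamps[i] == query_t:
        return tuple(positions[i])
    t0, t1 = stamps[i - 1], stamps[i]
    alpha = (query_t - t0) / (t1 - t0)        # fraction of the way from t0 to t1
    p0, p1 = positions[i - 1], positions[i]
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))


# 100 Hz pose samples of a vehicle moving at 30 m/s; camera frame at 15 ms.
stamps = [0.00, 0.01, 0.02]
positions = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0)]
print(interpolate_position(stamps, positions, 0.015))
```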

Another critical challenge is distinguishing between static and dynamic elements in the environment. Current approaches often rely on object detection and tracking modules that run parallel to SLAM, but these add computational overhead and introduce additional points of failure. The classification of semi-dynamic objects (like parked cars that may start moving) remains particularly problematic.
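One common way to separate static from dynamic points is to test whether each point's apparent motion is explained by the vehicle's own motion. The Python sketch below assumes that point associations between two frames and an ego-motion estimate (R, t) are already available, and flags points whose residual exceeds a fixed threshold. The 0.3 m threshold is a hypothetical value; practical systems typically use sensor noise models and estimate ego-motion robustly (for example with RANSAC), since dynamic points would otherwise bias it.

```python
import numpy as np


def flag_dynamic_points(prev_pts, curr_pts, R, t, threshold_m=0.3):
    """Return a boolean mask marking points inconsistent with ego-motion.

    prev_pts, curr_pts: (N, 3) arrays holding the same N points observed in
    the previous and current sensor frames (association already done).
    R, t: rigid transform mapping previous-frame coordinates into the current
    frame, so a static point satisfies curr ~= R @ prev + t.
    """
    predicted = prev_pts @ R.T + t          # where static points should appear
    residual = np.linalg.norm(curr_pts - predicted, axis=1)
    return residual > threshold_m


# Ego vehicle moved 1 m forward (+x), so static points shift by -1 m in x.
R = np.eye(3)
t = np.array([-1.0, 0.0, 0.0])
prev_pts = np.array([[10.0, 0.0, 0.0], [20.0, 5.0, 0.0], [15.0, -3.0, 0.0]])
curr_pts = np.array([[9.0, 0.0, 0.0], [19.0, 5.0, 0.0], [15.0, -3.0, 0.0]])
print(flag_dynamic_points(prev_pts, curr_pts, R, t))  # third point moves with us
```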

Scale consistency presents another significant hurdle in dynamic environments. As the autonomous vehicle travels at high speeds through changing environments, maintaining consistent scale across the map becomes difficult, especially when using monocular vision systems that lack absolute scale information.
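The monocular scale problem follows directly from the pinhole projection model: multiplying every scene point and the camera translation by the same factor s leaves every image coordinate unchanged, because u = f·X/Z + c_x is invariant when X and Z are scaled together. The Python sketch below demonstrates this numerically with arbitrary, hypothetical camera parameters. This is why a monocular system needs an external cue (IMU, wheel odometry, stereo, or known object sizes) to fix metric scale.

```python
import numpy as np


def project(points_world, R, t, f=800.0, cx=640.0, cy=360.0):
    """Pinhole projection of (N, 3) world points for a camera pose (R, t)."""
    cam = points_world @ R.T + t                 # world -> camera coordinates
    u = f * cam[:, 0] / cam[:, 2] + cx
    v = f * cam[:, 1] / cam[:, 2] + cy
    return np.stack([u, v], axis=1)


rng = np.random.default_rng(0)
points = rng.uniform([-5.0, -2.0, 8.0], [5.0, 2.0, 40.0], size=(50, 3))
theta = np.deg2rad(5.0)                          # small yaw between the views
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([0.5, 0.0, -2.0])

uv = project(points, R, t)
for s in (0.5, 3.0, 10.0):
    # Same image coordinates, different metric scene: scale is unobservable.
    print(s, np.allclose(uv, project(s * points, R, s * t)))
```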

Loop closure detection, a crucial component for correcting drift in SLAM systems, becomes more challenging in dynamic environments. Traditional appearance-based loop closure methods can fail when revisiting locations that have changed significantly due to moving objects or different traffic patterns.
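Appearance-based loop closure usually starts by comparing a compact global descriptor of the current keyframe with those of earlier keyframes. The Python sketch below assumes L2-normalised descriptors (in practice produced by, for example, a bag-of-words model or a learned place-recognition network; here they are random test data) and skips recent keyframes. The similarity threshold and exclusion window are hypothetical. Because traffic and lighting change a place's appearance, candidates would normally still pass a geometric consistency check before being accepted.

```python
import numpy as np


def loop_closure_candidates(descriptors, query_idx, min_gap=50, min_score=0.9):
    """Return (keyframe index, cosine similarity) pairs for likely revisits.

    descriptors: (N, D) array of L2-normalised global descriptors, one per
    keyframe in temporal order. Keyframes within min_gap of the query are
    skipped because consecutive frames are trivially similar.
    """
    last = query_idx - min_gap
    if last <= 0:
        return []
    scores = descriptors[:last] @ descriptors[query_idx]   # cosine similarity
    order = np.argsort(scores)[::-1]                       # best match first
    return [(int(i), float(scores[i])) for i in order if scores[i] >= min_score]


# Synthetic test: keyframe 200 revisits the place seen at keyframe 10.
rng = np.random.default_rng(1)
desc = rng.normal(size=(300, 64))
desc[200] = desc[10] + 0.05 * rng.normal(size=64)
desc /= np.linalg.norm(desc, axis=1, keepdims=True)
print(loop_closure_candidates(desc, 200))
```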

These challenges have prompted research into specialized SLAM variants such as Dynamic SLAM (DSLAM) and Semantic SLAM that explicitly model dynamic elements. However, these approaches often require additional computational resources and more sophisticated sensor setups, creating a trade-off between accuracy and real-time performance that remains unresolved for high-speed autonomous driving applications.

State-of-the-Art SLAM Solutions for High Velocities

01 Visual SLAM algorithms for accuracy improvement

Visual SLAM algorithms use camera data to build maps and localize the vehicle within them. They rely on feature extraction, tracking, and matching, and advanced implementations add loop closure detection and bundle adjustment to minimize drift and improve accuracy across environments. Recent developments integrate deep learning to better handle dynamic scenes and varying lighting conditions. These approaches are particularly valuable for autonomous navigation systems that require high precision.

- Real-time SLAM optimization for speed enhancement: Real-time optimization techniques for SLAM algorithms focus on computational efficiency while maintaining acceptable accuracy. These approaches include parallel processing architectures, efficient data structures, and selective feature processing to reduce computational load. Some implementations utilize hardware acceleration through GPUs or specialized processors to achieve faster processing speeds. Lightweight SLAM variants are designed specifically for resource-constrained devices where processing power is limited.

- Sensor fusion for reliability improvement: Sensor fusion techniques combine data from multiple sensors such as cameras, LiDAR, IMU, and GPS to enhance SLAM reliability. By integrating complementary sensor information, these approaches can overcome limitations of individual sensors, such as camera failures in low-light conditions or IMU drift over time. Advanced fusion algorithms employ probabilistic methods like Kalman filters or particle filters to optimally combine sensor data and handle uncertainties. This multi-sensor approach enables robust operation across diverse environmental conditions.

- Loop closure and drift correction methods: Loop closure detection and drift correction are critical for maintaining long-term SLAM reliability. These techniques identify when a system revisits previously mapped areas and adjust the entire trajectory to minimize accumulated errors. Advanced approaches use appearance-based recognition, geometric consistency checks, and global optimization to ensure accurate loop closures. Some implementations incorporate topological mapping alongside metric representations to facilitate efficient loop detection in large-scale environments.

- SLAM for challenging environments and applications: Specialized SLAM algorithms address challenging operational environments such as underwater settings, aerial navigation, or industrial facilities. These implementations incorporate domain-specific constraints and optimizations to maintain performance under adverse conditions like poor visibility, rapid motion, or feature-sparse areas. Recent innovations focus on semantic SLAM approaches that integrate object recognition to improve mapping quality and enable higher-level scene understanding. Application-specific adaptations balance accuracy, speed, and reliability requirements based on use case priorities.
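The probabilistic fusion described in the sensor fusion item above can be illustrated with a linear Kalman filter, the simplest member of that family. The Python sketch below fuses a 100 Hz acceleration signal (standing in for an IMU) with 10 Hz absolute position fixes (standing in for GNSS) along a single axis. It is a one-dimensional toy: the noise levels, rates, and constant 30 m/s trajectory are hypothetical, and a production system would typically run an extended or error-state filter over the full 3D pose.

```python
import numpy as np


class ConstantVelocityKF:
    """Linear Kalman filter on the state x = [position, velocity] along one axis.

    Prediction uses a measured acceleration (IMU-like) as a control input;
    updates use absolute position fixes (GNSS-like).
    """

    def __init__(self, accel_noise=0.5, pos_noise=1.5):
        self.x = np.zeros(2)                 # [position m, velocity m/s]
        self.P = np.eye(2) * 10.0            # initial uncertainty
        self.q = accel_noise ** 2            # acceleration measurement variance
        self.r = pos_noise ** 2              # position fix variance
        self.H = np.array([[1.0, 0.0]])      # only position is observed

    def predict(self, accel, dt):
        F = np.array([[1.0, dt], [0.0, 1.0]])
        B = np.array([0.5 * dt ** 2, dt])
        self.x = F @ self.x + B * accel
        # Noise on the measured acceleration enters the state through B.
        self.P = F @ self.P @ F.T + np.outer(B, B) * self.q

    def update(self, z):
        y = z - self.H @ self.x                  # innovation, shape (1,)
        S = self.H @ self.P @ self.H.T + self.r  # innovation covariance, (1, 1)
        K = self.P @ self.H.T / S                # Kalman gain, (2, 1)
        self.x = self.x + K[:, 0] * y[0]
        self.P = (np.eye(2) - K @ self.H) @ self.P


def simulate(seconds=10.0, imu_noise=0.5, gnss_noise=1.5, seed=0):
    """Vehicle at a constant 30 m/s: IMU-like input at 100 Hz, fixes at 10 Hz."""
    rng = np.random.default_rng(seed)
    kf = ConstantVelocityKF()
    dt, speed = 0.01, 30.0
    for step in range(1, int(round(seconds / dt)) + 1):
        kf.predict(rng.normal(0.0, imu_noise), dt)   # true acceleration is zero
        if step % 10 == 0:
            true_pos = speed * step * dt
            kf.update(true_pos + rng.normal(0.0, gnss_noise))
    return kf


kf = simulate()
print(f"position {kf.x[0]:.1f} m, velocity {kf.x[1]:.2f} m/s")
```

Neither sensor alone gives a good velocity here (the acceleration signal carries no velocity information and the position fixes are noisy), but the filter recovers it by weighting each source by its stated uncertainty.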

02 Real-time SLAM optimization for speed enhancement

Real-time optimization techniques for SLAM algorithms focus on computational efficiency to enhance processing speed. These approaches include parallel computing architectures, efficient data structures, and algorithmic simplifications that reduce computational complexity. By implementing sparse matrix operations, keyframe selection strategies, and hardware acceleration, these methods achieve faster mapping and localization while maintaining acceptable accuracy levels for time-critical applications.

03 Sensor fusion approaches for reliability improvement

Sensor fusion techniques integrate data from multiple sensors such as cameras, LiDAR, IMU, and GPS to enhance SLAM reliability. By combining complementary sensor information, these approaches compensate for individual sensor limitations and environmental challenges. Multi-sensor fusion algorithms employ probabilistic frameworks like Extended Kalman Filters or particle filters to handle sensor uncertainties and provide robust performance across diverse operating conditions, including low-light environments and dynamic scenes.

04 Deep learning-based SLAM for performance enhancement

Deep learning approaches to SLAM leverage neural networks to improve feature detection, matching, and scene understanding. These methods use convolutional neural networks and other deep architectures to extract meaningful features from sensor data, enabling more robust performance in challenging environments. Learning-based SLAM systems can adapt to different scenarios, handle dynamic objects, and provide enhanced accuracy and reliability compared to traditional geometric approaches.

05 Evaluation frameworks and benchmarking for SLAM algorithms

Evaluation frameworks provide standardized methods to assess SLAM algorithm performance across accuracy, speed, and reliability metrics. These frameworks include benchmark datasets, error measurement protocols, and comparative analysis tools to objectively evaluate different SLAM implementations. Performance metrics typically include trajectory error, mapping accuracy, computational efficiency, and robustness to environmental challenges, enabling researchers and developers to identify optimal algorithms for specific application requirements.
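A widely used trajectory-error metric is the absolute trajectory error (ATE): the estimated trajectory is rigidly aligned to ground truth, and the root-mean-square of the remaining position differences is reported. The Python sketch below uses the Kabsch (SVD) solution for the alignment. It assumes time-associated position pairs and a rigid (rotation plus translation) alignment; monocular results are usually aligned with an additional scale factor (Umeyama's similarity alignment) instead.

```python
import numpy as np


def align_rigid(est, gt):
    """Rotation R and translation t minimising sum ||gt_i - (R @ est_i + t)||^2."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))           # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est, gt):
    """Absolute trajectory error: RMSE of positions after rigid alignment."""
    R, t = align_rigid(est, gt)
    residuals = gt - (est @ R.T + t)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


# Synthetic check: the same path expressed in a rotated, shifted frame.
rng = np.random.default_rng(2)
gt = np.cumsum(rng.normal(size=(200, 3)), axis=0)
yaw = np.deg2rad(30.0)
Rz = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
               [np.sin(yaw), np.cos(yaw), 0.0],
               [0.0, 0.0, 1.0]])
est = gt @ Rz.T + np.array([5.0, -2.0, 1.0])
print(ate_rmse(est, gt))                               # frame change only
print(ate_rmse(est + rng.normal(scale=0.1, size=est.shape), gt))
```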

Leading Companies in Automotive SLAM Development

The SLAM (Simultaneous Localization and Mapping) market for high-speed autonomous driving is currently in a growth phase, with an expanding market size driven by increasing autonomous vehicle development. The technology is approaching maturity but still faces challenges at high speeds. Key players include established automotive giants like BMW, Audi, Bosch, and Continental, who leverage their vehicle integration expertise, alongside technology companies like Intel and iRobot contributing specialized computing and sensing capabilities. Academic institutions such as South China University of Technology and Fudan University are advancing fundamental research, while emerging players like Arbe Robotics and Kopadia focus on innovative radar-based SLAM solutions. The competitive landscape reflects a blend of traditional automotive expertise and cutting-edge technology innovation.

Intel Corp.

Technical Solution: Intel has developed RealSense SLAM technology optimized for high-speed autonomous driving applications. The solution uses Intel's hardware accelerators and vision processing units (VPUs) to achieve real-time performance at highway speeds. It combines visual-inertial odometry with LiDAR point cloud processing into a localization and mapping system that maintains accuracy at speeds up to 80 mph (about 129 km/h). A multi-threaded architecture parallelizes sensor data processing, feature extraction, and map optimization across multiple cores. The implementation includes motion blur compensation, which is critical for maintaining visual feature tracking at high speeds, and predictive mapping techniques that anticipate environmental changes based on vehicle velocity and trajectory, allowing more responsive navigation decisions. Intel has also integrated the SLAM solution with Mobileye computer vision technology, enabling semantic understanding of the environment alongside geometric mapping.

Strengths: Hardware-accelerated processing optimized for real-time performance; tight integration with Intel's broader autonomous driving ecosystem; excellent motion blur compensation for high-speed scenarios. Weaknesses: Potential vendor lock-in to Intel hardware platforms; higher power consumption compared to some specialized SLAM solutions.

Continental Autonomous Mobility Germany GmbH

Technical Solution: Continental Autonomous Mobility has developed an advanced SLAM system specifically optimized for high-speed autonomous driving scenarios. Their solution employs a multi-modal sensor fusion approach that integrates high-definition LiDAR, stereo cameras, radar, and ultrasonic sensors to create comprehensive environmental maps even at autobahn speeds exceeding 160 km/h. The system features a unique "velocity-adaptive mapping" framework that dynamically adjusts mapping resolution, update frequency, and sensor weighting based on vehicle speed. At higher speeds, the system prioritizes forward-looking sensors and long-range detection, while at lower speeds it incorporates more detailed 360-degree mapping. Continental's implementation includes specialized algorithms for motion compensation that account for sensor distortion effects at high speeds, particularly for scanning LiDAR systems. Their approach employs a distributed computing architecture with dedicated processing units for different sensor modalities before integration into a unified environmental model. The system also incorporates HD map matching capabilities to enhance localization accuracy when pre-mapped data is available.

Strengths: Exceptional performance at extreme speeds (160+ km/h); sophisticated velocity-adaptive mapping framework; excellent integration with Continental's broader sensor portfolio and autonomous driving systems. Weaknesses: Higher hardware requirements compared to some competitors; potentially greater complexity in initial calibration and maintenance.
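Motion compensation for scanning LiDAR (often called deskewing) can be sketched generically. A 10 Hz rotating sensor needs about 100 ms per sweep, and at 160 km/h (about 44 m/s) the vehicle moves roughly 4.4 m in that time, so points captured early and late in one sweep are expressed in sensor frames several metres apart. The Python sketch below re-expresses every point in the sensor frame at the end of the sweep under a planar constant-velocity assumption. It is a generic technique, not Continental's implementation, and it approximates the translation as a straight line along the heading at capture time.

```python
import numpy as np


def deskew_scan(points, stamps, scan_end_time, velocity, yaw_rate):
    """Motion-compensate one LiDAR sweep to the sensor pose at scan_end_time.

    points: (N, 3) in the sensor frame at the moment each point was captured.
    stamps: (N,) capture times in seconds.
    velocity: forward speed in m/s; yaw_rate in rad/s (planar motion assumed).
    """
    dt = scan_end_time - stamps                # time from capture to scan end
    yaw = yaw_rate * dt                        # heading change after capture
    dx = velocity * dt                         # straight-line forward travel
    # Express each point relative to the sensor at scan end:
    # p_end = R(-yaw) @ (p_capture - [dx, 0, 0])
    shifted = points - np.stack([dx, np.zeros_like(dx), np.zeros_like(dx)], axis=1)
    c, s = np.cos(-yaw), np.sin(-yaw)
    out = shifted.copy()
    out[:, 0] = c * shifted[:, 0] - s * shifted[:, 1]
    out[:, 1] = s * shifted[:, 0] + c * shifted[:, 1]
    return out


# A static point 20 m ahead, seen at the start and at the end of a 100 ms sweep.
pts = np.array([[20.0, 0.0, 0.0], [20.0, 0.0, 0.0]])
stamps = np.array([0.0, 0.1])
print(deskew_scan(pts, stamps, scan_end_time=0.1, velocity=30.0, yaw_rate=0.0))
```

In the usage example, the early observation is moved 3 m closer (the distance driven during the sweep), so both observations of the same static point coincide after compensation.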

Key Patents and Research in High-Speed SLAM

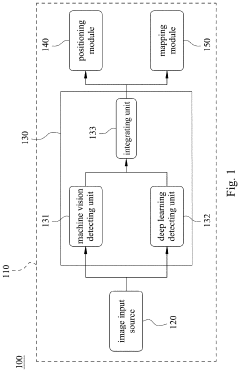

Feature point integration positioning system and feature point integration positioning method

Patent · Active · US20230169747A1

Innovation

- A feature point integration positioning system that combines machine vision and deep learning to generate and integrate first and second feature points, enhancing positioning stability and accuracy by compensating for limitations in machine vision detection with deep learning capabilities, particularly in environments with high light contrast and variations.

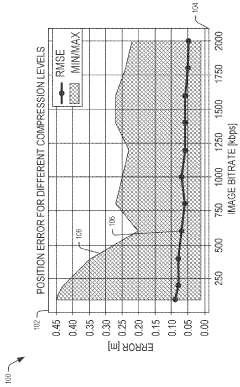

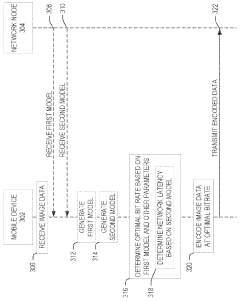

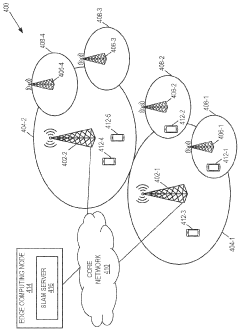

Bitrate adaptation for edge-assisted localization given network availability for mobile devices

Patent · WO2024136880A1

Innovation

- A dynamic bitrate adaptation method for edge-assisted SLAM, where a model determines an optimal image data bitrate based on network latency, map presence, and environment bitrate, allowing for compression and transmission at an improved bitrate to enhance localization performance.

Safety and Reliability Standards for Automotive SLAM

The integration of SLAM (Simultaneous Localization and Mapping) systems into high-speed autonomous vehicles necessitates stringent safety and reliability standards to ensure public trust and regulatory compliance. Current automotive safety standards such as ISO 26262 provide a foundation for functional safety in electronic systems but require specific adaptations for SLAM implementations in autonomous driving scenarios.

Safety standards for automotive SLAM systems must address both algorithmic robustness and hardware reliability. The redundancy principle is paramount: critical SLAM components should draw on multiple sensor modalities (LiDAR, cameras, radar) with independent processing pathways so that no single point of failure exists. Industry standards increasingly require demonstrable fault tolerance, with systems retaining minimal operational capability even when primary sensors or processing units malfunction.

Performance validation frameworks for automotive SLAM have evolved significantly, with standardized testing protocols now evaluating localization accuracy under various environmental conditions. These protocols typically mandate sub-10cm positional accuracy and sub-1° orientation accuracy at highway speeds, with degradation characteristics clearly defined for adverse conditions. The European New Car Assessment Programme (Euro NCAP) has begun incorporating SLAM reliability metrics into its safety ratings, creating market incentives for robust implementations.
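Checking a pose estimate against thresholds such as those quoted above is straightforward, with one detail that is easy to get wrong: heading errors must be wrapped, so that 359.5° versus 0.3° counts as a 0.8° error rather than 359.2°. The Python sketch below applies the sub-10 cm and sub-1° limits to a single 2D pose; the function and parameter names are illustrative.

```python
import math


def within_accuracy(est_xy, gt_xy, est_yaw_deg, gt_yaw_deg,
                    max_pos_m=0.10, max_yaw_deg=1.0):
    """True if one pose meets the position and heading thresholds."""
    pos_err = math.dist(est_xy, gt_xy)
    # Wrap the heading difference into [-180, 180) before comparing.
    yaw_err = (est_yaw_deg - gt_yaw_deg + 180.0) % 360.0 - 180.0
    return pos_err < max_pos_m and abs(yaw_err) < max_yaw_deg


print(within_accuracy((0.0, 0.0), (0.05, 0.05), 359.5, 0.3))
```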

Real-time monitoring requirements constitute another critical aspect of safety standards. Modern automotive SLAM systems must implement continuous self-diagnostics that detect degraded performance, together with graceful degradation protocols that maintain safe operation during partial system failures. The industry has converged on the "3σ rule", requiring SLAM systems to maintain 99.7% reliability within specified operational parameters; 99.7% is the share of a normally distributed error that lies within three standard deviations (3σ) of the mean.
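The 99.7% figure comes from the normal distribution: the probability that a Gaussian error lies within ±kσ of its mean is erf(k/√2). The short Python sketch below evaluates this for k = 1, 2, 3. It also makes the underlying assumption explicit: the correspondence between "3σ" and 99.7% holds only if the error really is Gaussian, and heavy-tailed errors (for example from outlier measurements) fall outside 3σ more often.

```python
import math


def normal_coverage(k: float) -> float:
    """Probability that a normally distributed error lies within +/- k sigma."""
    return math.erf(k / math.sqrt(2.0))


for k in (1, 2, 3):
    print(f"{k} sigma: {normal_coverage(k):.2%}")
```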

Certification processes for automotive SLAM now include extensive simulation testing across thousands of edge cases, complemented by real-world validation in controlled environments. Regulatory bodies increasingly require manufacturers to demonstrate SLAM system resilience against adversarial conditions including sensor occlusion, extreme weather, and electromagnetic interference. The UNECE WP.29 framework has established minimum performance requirements for environmental robustness, mandating SLAM functionality across temperature ranges from -40°C to +85°C.

Data integrity standards address both security and privacy concerns, with requirements for encrypted sensor data transmission and secure over-the-air update mechanisms. These standards also specify data retention policies for accident reconstruction, typically requiring preservation of SLAM system states for the 30 seconds preceding any collision or system failure.
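A retention requirement of this kind maps naturally onto a time-windowed ring buffer. The Python sketch below keeps only the states from the most recent 30 seconds, so that they can be persisted when a collision or system failure is detected. The class name and interface are hypothetical.

```python
from collections import deque


class StateRecorder:
    """Keep only the SLAM states from the most recent `window_s` seconds.

    Hypothetical helper: states are arbitrary objects stamped with a
    monotonically increasing time in seconds.
    """

    def __init__(self, window_s: float = 30.0):
        self.window_s = window_s
        self._buffer = deque()

    def record(self, stamp: float, state) -> None:
        self._buffer.append((stamp, state))
        # Drop entries that have fallen out of the retention window.
        while self._buffer and self._buffer[0][0] < stamp - self.window_s:
            self._buffer.popleft()

    def snapshot(self):
        """Copy of the retained history, e.g. to persist after a trigger event."""
        return list(self._buffer)


recorder = StateRecorder(window_s=30.0)
for i in range(1000):                      # 100 s of states at 10 Hz
    recorder.record(i * 0.1, {"frame": i})
history = recorder.snapshot()
print(len(history), history[0][0], history[-1][0])
```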

ISO 21448 (SOTIF, Safety of the Intended Functionality), first issued as ISO/PAS 21448 in 2019 and published as a full international standard in 2022, specifically addresses the challenges of perception systems such as SLAM, focusing on minimizing risks from performance limitations even when all components function as designed. This represents an evolution beyond traditional failure-mode analysis toward comprehensive safety assurance for autonomous systems.

Safety standards for automotive SLAM systems must address both algorithmic robustness and hardware reliability. The redundancy principle is paramount - critical SLAM components should incorporate multiple sensor modalities (LiDAR, cameras, radar) with independent processing pathways to prevent single points of failure. Industry standards increasingly require demonstrable fault tolerance, with systems maintaining minimal operational capability even when primary sensors or processing units malfunction.

Performance validation frameworks for automotive SLAM have evolved significantly, with standardized testing protocols now evaluating localization accuracy under various environmental conditions. These protocols typically mandate sub-10cm positional accuracy and sub-1° orientation accuracy at highway speeds, with degradation characteristics clearly defined for adverse conditions. The European New Car Assessment Programme (Euro NCAP) has begun incorporating SLAM reliability metrics into its safety ratings, creating market incentives for robust implementations.

Real-time monitoring requirements are another critical aspect of safety standards. Automotive SLAM systems must implement continuous self-diagnostics that detect degraded performance, together with graceful degradation protocols that keep operation safe during partial system failures. A frequently cited criterion is the "3σ rule": localization error must stay within the specified tolerance at least 99.7% of the time under specified operating conditions, which is the fraction of a Gaussian error distribution lying within three standard deviations of the mean.
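
The 99.7% figure is the probability mass of a Gaussian within ±3σ, erf(3/√2) ≈ 0.9973. The following minimal sketch checks a validation run against that coverage. It assumes per-sample errors against ground truth have already been computed, and `passes_3sigma` is a hypothetical helper, not part of any standard.

```python
import math


def three_sigma_coverage() -> float:
    """Probability that a zero-mean Gaussian error lies within +/-3 sigma."""
    return math.erf(3 / math.sqrt(2))


def passes_3sigma(errors_m, tolerance_m, required=None):
    """True if the share of errors within tolerance meets the 3-sigma coverage.

    errors_m: localization errors from a validation run (metres).
    """
    if required is None:
        required = three_sigma_coverage()  # about 0.9973
    if not errors_m:
        raise ValueError("no samples")
    within = sum(1 for e in errors_m if abs(e) <= tolerance_m)
    return within / len(errors_m) >= required
```

With 10,000 samples, 20 out-of-tolerance errors (99.8% coverage) pass, while 30 (exactly 99.7%, just below 0.9973) fail. This is an empirical coverage check only and does not by itself provide a statistical confidence bound.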

Real-time Processing Optimization for SLAM Systems

Real-time processing represents a critical bottleneck in SLAM systems for high-speed autonomous driving. Current implementations struggle to maintain computational efficiency while processing the massive data streams from multiple sensors at highway speeds. Optimization strategies must address both hardware and software aspects to achieve the necessary performance improvements.

Hardware acceleration through dedicated processors offers significant performance gains. GPUs provide parallel processing capabilities essential for feature extraction and mapping operations, while FPGAs deliver low-latency processing for time-critical components. Custom ASIC solutions, though expensive to develop, can offer 5-10x performance improvements for specific SLAM operations compared to general-purpose processors.

Software optimization techniques focus on algorithmic efficiency. Sparse feature tracking reduces computational load by processing only the most informative visual features. Dynamic resolution adjustment scales processing detail according to vehicle speed and environmental complexity. Predictive processing uses motion models to anticipate the next pose and the resulting sensor observations, narrowing the search for correspondences; reported latency reductions are on the order of 15-30% in typical driving scenarios.
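
Predictive processing usually starts with a motion model that propagates the last pose forward by one frame interval, so the matcher only searches where features are expected to reappear. The sketch below uses a planar constant turn rate and velocity (CTRV) model. That model choice is an assumption made for illustration, and `Pose2D` and `predict_ctrv` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # radians


def predict_ctrv(pose: Pose2D, v_mps: float, yaw_rate: float, dt: float) -> Pose2D:
    """Propagate a planar pose assuming constant speed and constant yaw rate."""
    if abs(yaw_rate) < 1e-6:
        # Near-straight motion: use the straight-line limit to avoid dividing by ~0.
        return Pose2D(pose.x + v_mps * dt * math.cos(pose.yaw),
                      pose.y + v_mps * dt * math.sin(pose.yaw),
                      pose.yaw)
    r = v_mps / yaw_rate  # turning radius
    new_yaw = pose.yaw + yaw_rate * dt
    return Pose2D(pose.x + r * (math.sin(new_yaw) - math.sin(pose.yaw)),
                  pose.y - r * (math.cos(new_yaw) - math.cos(pose.yaw)),
                  new_yaw)
```

At 30 m/s and 10 Hz, the prediction advances the pose 3 m between frames. Without it, the matcher would have to search that displacement blindly.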

Memory management optimizations significantly affect real-time performance. Compact, contiguous data structures reduce memory fragmentation and access times. Cache-aware algorithms lay out data to maximize cache hits, while memory pooling pre-allocates buffers to avoid costly allocations at runtime. Together, these techniques have been reported to reduce memory-related bottlenecks by up to 40%.
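
Memory pooling moves all allocation to start-up, so the per-frame loop only hands out and takes back buffers it already owns. Below is a minimal Python sketch of the pattern. It assumes fixed-size frame buffers, and `BufferPool` is a hypothetical class. In practice the same idea is usually implemented in C or C++, where allocator cost and fragmentation are far more visible than in Python.

```python
from collections import deque


class BufferPool:
    """Fixed pool of pre-allocated byte buffers for sensor frames.

    All buffers are created in __init__; acquire/release only move
    references, so the steady-state loop allocates no new buffers.
    """

    def __init__(self, count: int, size_bytes: int):
        self.size_bytes = size_bytes
        self._free = deque(bytearray(size_bytes) for _ in range(count))

    def acquire(self) -> bytearray:
        if not self._free:
            # Pool exhausted: fail loudly instead of silently allocating.
            raise RuntimeError("buffer pool exhausted")
        return self._free.popleft()

    def release(self, buf: bytearray) -> None:
        if len(buf) != self.size_bytes:
            raise ValueError("buffer does not belong to this pool")
        self._free.append(buf)

    @property
    def available(self) -> int:
        return len(self._free)
```

Raising an error when the pool is exhausted is a deliberate choice: an undersized pool shows up during testing instead of quietly falling back to runtime allocation.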

Multi-threading architectures enable parallel processing of independent SLAM components. Task prioritization ensures critical path operations receive computational resources first, maintaining real-time performance even under heavy loads. Asynchronous processing decouples non-time-critical operations from the main processing pipeline, allowing the system to maintain responsiveness during complex mapping operations.
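
A common way to keep mapping off the critical path is to run tracking inline and pass frames to a background mapping thread through a bounded queue. Below is a minimal sketch using Python's standard `threading` and `queue` modules. `track` and `map_update` are hypothetical callbacks. Dropping mapping jobs when the queue is full is one assumed policy; alternatives include blocking or coalescing jobs.

```python
import queue
import threading


def run_pipeline(frames, track, map_update):
    """Tracking runs inline; map updates run asynchronously on a worker thread.

    track(frame) -> pose must be fast; map_update(frame, pose) may be slow.
    Returns (poses in frame order, map_update results in submission order).
    """
    jobs = queue.Queue(maxsize=8)  # bounded, so backlog cannot grow without limit
    mapped = []

    def mapper():
        while True:
            item = jobs.get()
            if item is None:  # sentinel: shut down
                break
            frame, pose = item
            mapped.append(map_update(frame, pose))

    worker = threading.Thread(target=mapper, daemon=True)
    worker.start()

    poses = []
    for frame in frames:
        pose = track(frame)  # critical path: never waits on mapping
        poses.append(pose)
        try:
            jobs.put_nowait((frame, pose))
        except queue.Full:
            pass  # drop this mapping job rather than stall tracking
    jobs.put(None)  # at shutdown it is acceptable to wait for queue space
    worker.join()
    return poses, mapped
```

Because `put_nowait` never blocks, a slow mapping step can only reduce how many frames are mapped. It cannot delay pose output.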

Edge computing architectures distribute SLAM processing across multiple nodes. This approach reduces central processing requirements and enables specialized processing at different system levels. Preliminary implementations show latency reductions of 25-45% compared to centralized architectures, particularly beneficial for high-speed scenarios where processing delays directly impact safety margins.
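
The safety-margin argument becomes concrete when latency is converted into the distance the vehicle travels before a processed result is available. A minimal sketch follows. The 120 km/h speed and the 100 ms baseline latency used below are illustrative assumptions, not measurements.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while one sensor frame is still being processed."""
    speed_mps = speed_kmh / 3.6  # km/h to m/s
    return speed_mps * latency_ms / 1000.0
```

At 120 km/h, 100 ms of latency corresponds to about 3.3 m of travel. A 35% reduction to 65 ms, within the range quoted above, shrinks this to about 2.2 m.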

Benchmark testing across various optimization strategies reveals that combined hardware-software approaches yield the most significant improvements. Systems implementing GPU acceleration with optimized multi-threading architectures demonstrate 3-4x performance improvements in feature extraction and mapping operations while maintaining localization accuracy within acceptable parameters for autonomous driving applications.
