SLAM For Autonomous Ground Vehicles In Harsh Environments

SEP 5, 2025 | 9 MIN READ

SLAM Evolution and Objectives in Harsh Environments

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, transforming from theoretical concepts to practical applications across various domains. The evolution of SLAM for autonomous ground vehicles (AGVs) has been particularly notable, with early systems relying on simple feature extraction and sparse mapping techniques that performed adequately only in controlled environments. These initial implementations struggled with dynamic obstacles, varying lighting conditions, and computational limitations.

From the 1990s through the mid-2000s, probabilistic approaches such as Extended Kalman Filter (EKF) SLAM and particle filter methods became established, improving robustness by accounting for sensor uncertainties. Subsequently, graph-based optimization techniques gained prominence, enabling more accurate map representations and trajectory estimates. The integration of visual sensors alongside LiDAR and radar systems further enhanced SLAM capabilities, allowing for richer environmental perception.

Recent advancements have focused on addressing the unique challenges posed by harsh environments, which include extreme weather conditions (snow, rain, fog), unstructured terrains (off-road, construction sites), poor visibility scenarios, and electromagnetic interference. These conditions significantly degrade sensor performance and challenge traditional SLAM algorithms that assume relatively stable environmental conditions.

The primary objective of modern SLAM research for AGVs in harsh environments is to develop systems that maintain localization accuracy and mapping fidelity despite adverse conditions. This includes creating algorithms that can handle sensor degradation, identify and filter unreliable data, and adapt to rapidly changing environmental conditions. Multi-sensor fusion approaches have become increasingly important, combining complementary sensing modalities to compensate for individual sensor weaknesses.

Another critical objective is computational efficiency, as harsh environments often require more complex processing while vehicles may have limited computational resources. Real-time performance remains essential for practical deployment, necessitating optimized algorithms and hardware acceleration techniques. Additionally, modern SLAM systems aim to incorporate semantic understanding, enabling vehicles to not only map their surroundings but also comprehend the environment's structure and identify potential hazards specific to challenging conditions.

Looking forward, the trajectory of SLAM development for harsh environments points toward more adaptive and learning-based approaches. Systems that can dynamically adjust their parameters based on environmental conditions and leverage historical data to improve performance in recurring scenarios represent the frontier of research. The ultimate goal remains creating SLAM solutions that provide consistent, reliable performance regardless of environmental challenges, thereby expanding the operational domain of autonomous ground vehicles.

Market Analysis for Robust AGV Navigation Systems

The autonomous ground vehicle (AGV) navigation market is experiencing significant growth, driven by increasing demand for automation across multiple industries. The global market for AGV systems was valued at approximately $3.64 billion in 2022 and is projected to reach $9.5 billion by 2030, growing at a CAGR of 12.8%. Within this broader market, robust navigation systems capable of operating in harsh environments represent a rapidly expanding segment with particularly strong growth potential.
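
As a quick consistency check on the figures quoted above, the growth rate implied by the two market values can be recomputed. The snippet below is only an arithmetic sketch; the function names `cagr` and `project` are illustrative and not taken from any market report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate, returned as a fraction (0.12 means 12%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


YEARS = 2030 - 2022  # eight compounding periods between the two quoted years

# Growth rate implied by $3.64 billion (2022) and $9.5 billion (2030).
implied_rate = cagr(3.64, 9.5, YEARS)

# 2030 value implied by the quoted 12.8% rate, in billions of dollars.
projected_value = project(3.64, 0.128, YEARS)
```

Under these inputs the implied rate comes out at about 12.7% and the projected 2030 value at about $9.5 billion, so the three quoted numbers agree to within rounding.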

Industrial sectors including manufacturing, logistics, mining, agriculture, and construction constitute the primary demand drivers for harsh-environment AGV navigation solutions. The warehouse automation segment currently holds the largest market share at 38%, followed by manufacturing at 27%, with mining and construction collectively representing about 18% of the market. These industries increasingly require vehicles that can navigate reliably in conditions involving extreme temperatures, poor visibility, uneven terrain, and electromagnetic interference.

Regional analysis reveals North America currently leads the market with approximately 35% share, followed closely by Europe at 30% and Asia-Pacific at 25%. However, the Asia-Pacific region is expected to witness the fastest growth rate of 15.2% annually through 2030, driven by rapid industrialization in China, Japan, South Korea, and emerging economies like India and Vietnam.

Customer requirements analysis indicates five critical market needs: reliability in GPS-denied environments (cited by 87% of potential customers), real-time adaptation to changing conditions (82%), multi-sensor fusion capabilities (79%), reduced maintenance requirements (76%), and cost-effectiveness (72%). The market increasingly favors solutions that combine visual SLAM with LiDAR, radar, and inertial navigation systems to ensure redundancy and resilience.

Competitive pricing analysis shows current robust navigation systems for harsh environments typically cost between $8,000 and $25,000 per unit, representing 15-30% of total AGV system costs. However, price sensitivity varies significantly by industry, with mining and defense willing to pay premium prices for maximum reliability, while logistics and manufacturing segments demonstrate higher price sensitivity.

Market barriers include high initial investment costs, technical complexity requiring specialized expertise, regulatory compliance challenges particularly in safety-critical applications, and integration difficulties with existing infrastructure. Despite these challenges, the market demonstrates strong growth potential as technological advancements continue to address these limitations and expand the range of viable applications for harsh-environment AGV navigation systems.

SLAM Challenges in Adverse Conditions

SLAM systems in harsh environments face significant challenges that can severely impact their performance and reliability. Adverse weather conditions such as heavy rain, snow, fog, and dust storms drastically reduce sensor visibility and create noise in data acquisition. These conditions cause light scattering, refraction, and absorption, leading to degraded image quality for visual SLAM systems and unreliable point cloud data for LiDAR-based approaches.

Extreme lighting variations present another major obstacle. Low-light conditions in tunnels or during nighttime operations reduce feature visibility for visual SLAM, while high-contrast scenarios like sudden transitions from dark to bright environments can overwhelm camera sensors. Direct sunlight can cause sensor saturation and glare, creating blind spots in the perception system.

Dynamic environments with moving objects, such as pedestrians, animals, or other vehicles, introduce additional complexity. These moving elements can be misinterpreted as static landmarks, corrupting the map and causing localization drift. In agricultural or construction settings, the environment itself may change rapidly as work progresses, invalidating previously built maps.

Terrain challenges significantly impact SLAM performance in ground vehicles. Uneven surfaces, loose gravel, mud, or snow can cause wheel slippage and unpredictable vehicle motion, invalidating motion models that assume smooth movement. This introduces errors in odometry estimates that accumulate over time, leading to significant drift in position tracking.
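
One common way to limit the damage from wheel slippage is to cross-check wheel odometry against an inertial measurement and trust the odometry less when the two disagree. The following is a minimal sketch for a differential-drive vehicle; the track width, slip threshold, and inflation factor are assumed values chosen for illustration, and `OdometryReading` and `odometry_noise_scale` are hypothetical names rather than part of any particular SLAM stack.

```python
from dataclasses import dataclass


@dataclass
class OdometryReading:
    v_left: float         # left wheel speed in m/s
    v_right: float        # right wheel speed in m/s
    gyro_yaw_rate: float  # yaw rate from the IMU gyroscope in rad/s


def wheel_yaw_rate(v_left, v_right, track_width):
    """Yaw rate predicted by the wheels under a no-slip differential-drive model."""
    return (v_right - v_left) / track_width


def odometry_noise_scale(reading, track_width=0.6, slip_threshold=0.1, inflation=10.0):
    """Return (slip_detected, scale) where scale multiplies the odometry noise.

    All default parameters are assumptions for this sketch, not tuned values.
    """
    predicted = wheel_yaw_rate(reading.v_left, reading.v_right, track_width)
    residual = abs(predicted - reading.gyro_yaw_rate)
    slipping = residual > slip_threshold
    return slipping, (inflation if slipping else 1.0)


# Wheels and gyro agree: odometry noise is left unchanged.
firm_ground = odometry_noise_scale(OdometryReading(1.0, 1.06, 0.1))

# The right wheel spins in mud while the gyro reports almost no rotation.
in_mud = odometry_noise_scale(OdometryReading(1.0, 1.6, 0.1))
```

Inflating the odometry noise rather than discarding the reading lets the estimator keep using wheel data as a weak constraint while other sensors carry more of the load.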

Sensor degradation and failure rates increase dramatically in harsh conditions. Mud splashes on cameras, dust accumulation on LiDAR sensors, or ice formation on radar units can temporarily or permanently impair sensor functionality. Vibrations from rough terrain can misalign carefully calibrated sensor arrays, compromising the fusion of multi-sensor data.

Computational constraints become more pronounced in adverse conditions. The need for more robust algorithms to filter noise and handle uncertainty increases computational load, while real-time performance requirements remain strict for autonomous navigation. This creates a challenging trade-off between processing power and algorithm sophistication.

Communication limitations in remote areas can restrict cloud computing assistance and map updates. Without reliable connectivity, SLAM systems must operate independently with onboard resources, limiting their ability to leverage external data or computational power for complex environmental interpretation.

Current SLAM Architectures for Extreme Conditions

- Visual SLAM techniques for autonomous navigation: Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced algorithms handle feature extraction, matching, and optimization to ensure accurate positioning even in dynamic environments. This approach is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- SLAM integration with sensor fusion: Sensor fusion approaches combine data from multiple sensors like cameras, LiDAR, IMU, and radar to enhance SLAM performance. By integrating complementary sensor data, these systems achieve more robust localization and mapping across varying environmental conditions. The fusion of different sensor modalities compensates for individual sensor limitations, providing more accurate and reliable spatial awareness for autonomous systems operating in complex real-world scenarios.

- Machine learning approaches for SLAM optimization: Machine learning techniques are being applied to SLAM systems to improve performance and adaptability. Deep neural networks can enhance feature detection, scene understanding, and prediction capabilities. These approaches enable SLAM systems to learn from experience, improving mapping accuracy and robustness in challenging environments. Machine learning optimization helps address traditional SLAM limitations such as dynamic object handling, changing lighting conditions, and computational efficiency.

- SLAM for augmented and virtual reality applications: SLAM technology enables precise spatial tracking for augmented and virtual reality experiences. By accurately mapping physical environments and tracking device movement, SLAM allows digital content to be convincingly anchored to the real world. These systems support immersive experiences by maintaining spatial consistency between virtual elements and physical surroundings, enabling applications in gaming, education, industrial training, and remote collaboration.

- Edge computing and real-time SLAM processing: Edge computing architectures optimize SLAM processing for resource-constrained devices by distributing computational workloads efficiently. These approaches enable real-time SLAM on mobile and embedded systems through algorithm optimization, hardware acceleration, and efficient memory management. By processing data locally rather than relying on cloud services, these systems reduce latency and bandwidth requirements while improving privacy and reliability for applications like mobile robotics and wearable devices.

01 Visual SLAM techniques for autonomous navigation

Visual SLAM systems use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real-time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or scenes with repetitive patterns.

02 SLAM for robotic applications

SLAM technology enables robots to navigate unknown environments by building maps while simultaneously determining their position. These systems typically combine data from multiple sensors including cameras, LiDAR, and inertial measurement units to achieve accurate localization and mapping. The technology is crucial for autonomous robots in applications ranging from household cleaning to industrial automation, allowing them to operate without pre-defined maps.

03 SLAM optimization algorithms

Advanced optimization algorithms improve SLAM performance by efficiently processing sensor data and reducing computational requirements. These methods include graph-based optimization, bundle adjustment, and filter-based approaches that minimize mapping errors while maintaining real-time performance. Recent innovations focus on loop closure detection to correct accumulated errors and create globally consistent maps, particularly important for large-scale environments.

04 AR/VR applications of SLAM

SLAM technology enables augmented and virtual reality systems to anchor digital content in physical space by tracking device movement and mapping surroundings. These implementations often use lightweight algorithms optimized for mobile devices with limited processing power. The technology allows for persistent AR experiences where virtual objects maintain their position in the real world even as users move around, creating more immersive and interactive experiences.

05 LiDAR-based SLAM systems

LiDAR sensors provide precise distance measurements that enhance SLAM accuracy, particularly in environments where visual features are limited. These systems create detailed point cloud representations of surroundings and are less affected by lighting conditions compared to camera-only approaches. Recent developments focus on fusing LiDAR data with other sensor inputs to create more robust mapping solutions for autonomous vehicles and mobile robots operating in complex environments.

Leading SLAM Solution Providers and Research Groups

The market for SLAM in autonomous ground vehicles operating in harsh environments is currently in a growth phase, with demand driven by the expanding autonomous vehicle sector and by industries adopting the technology for challenging operational environments. Technical maturity varies across players, with established automotive companies like Honda Motor, Ford Global Technologies, and Continental Autonomous Mobility leading commercial applications. Research institutions including China University of Mining & Technology, Northwestern Polytechnical University, and Jilin University are advancing fundamental SLAM capabilities for extreme conditions. Specialized organizations such as TRX Systems and the research institute ETRI are developing niche solutions for GPS-denied environments, while companies like CRRC Technology Innovation focus on industrial applications requiring robust navigation in adverse conditions.

Continental Autonomous Mobility Germany GmbH

Technical Solution: Continental has developed a robust SLAM system specifically designed for autonomous ground vehicles operating in harsh environments. Their solution integrates multiple sensor modalities including LiDAR, radar, cameras, and inertial measurement units to create redundancy and resilience. The system employs advanced sensor fusion algorithms that dynamically adjust weightings based on environmental conditions, giving preference to sensors that perform better in specific harsh scenarios. For instance, when visibility is poor due to fog, rain, or snow, the system relies more heavily on radar data. Continental's SLAM technology incorporates deep learning-based feature extraction methods that are trained to recognize landmarks even when partially obscured or altered by environmental factors. Their system also implements a probabilistic mapping approach that accounts for uncertainty in sensor readings caused by harsh conditions, maintaining localization accuracy even when landmarks are temporarily unavailable.

Strengths: Superior multi-sensor fusion capabilities allowing for reliable operation across diverse harsh environments; robust against sensor degradation through intelligent sensor prioritization; extensive automotive industry experience providing real-world validation. Weaknesses: Higher system complexity and cost compared to single-sensor solutions; requires significant computational resources which may impact power efficiency in some vehicle platforms.

Ford Global Technologies LLC

Technical Solution: Ford has pioneered a SLAM system for autonomous vehicles designed specifically to handle harsh environmental conditions. Their approach combines traditional geometric SLAM techniques with deep learning-based environmental adaptation modules. The system features a multi-layered environmental perception framework that separates static infrastructure elements from dynamic and weather-affected features. Ford's technology employs specialized algorithms for detecting and filtering out noise caused by precipitation, extreme lighting conditions, and other environmental challenges. A key innovation is their "environmental context awareness" system that automatically detects changing conditions and adjusts SLAM parameters accordingly. For instance, in snowy conditions, the system modifies feature extraction thresholds and matching criteria to maintain reliability. Ford has also developed proprietary hardware solutions including heated and self-cleaning sensor housings to ensure physical sensor reliability in harsh environments. Their SLAM system incorporates temporal consistency checking that tracks the evolution of landmarks over time to distinguish between actual changes in the environment and sensor errors.

Strengths: Extensive real-world testing across diverse climate conditions; robust environmental adaptation capabilities; integration with vehicle systems for enhanced sensor protection and maintenance. Weaknesses: Higher power consumption due to sensor heating and cleaning systems; potentially greater dependence on high-definition pre-mapped data compared to some competitors.

Key Patents in Robust SLAM Implementation

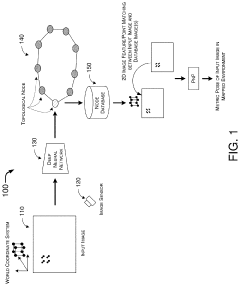

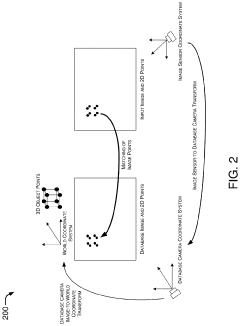

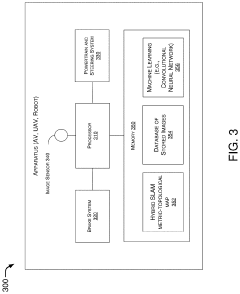

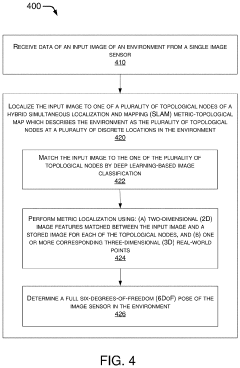

Hybrid Metric-Topological Camera-Based Localization

Patent US20210082145A1 (Active)

Innovation

- A hybrid SLAM approach that uses stereo vision or depth sensors for initial mapping and a single camera or image sensor for localization. It employs deep learning-based image classification and geometric Perspective-n-Point (PnP) techniques to build a continuously updated hybrid metric-topological map, reducing memory requirements and cost by using a single camera instead of LiDAR.

Methods and system of navigation using terrain features

Patent EP2133662A3 (Inactive)

Innovation

- A method and system that utilize terrain features by correlating current navigational data with prior data from a terrain mapping database using sensors like vision or laser-based systems, filtering with a SLAM framework to provide driftless navigation updates based on inertial measurement unit data.

Sensor Fusion Strategies for Reliability Enhancement

Sensor fusion represents a critical approach for enhancing SLAM reliability in harsh environments where autonomous ground vehicles (AGVs) operate. The integration of multiple sensor modalities creates redundancy and complementary information streams that can overcome the limitations of individual sensors. In particularly challenging conditions such as extreme weather, poor visibility, or rugged terrain, no single sensor can provide consistently reliable data.

Multi-modal sensor fusion architectures typically combine data from LiDAR, radar, cameras, IMUs, and GNSS receivers. Each sensor contributes unique strengths: LiDAR offers precise 3D mapping but struggles in precipitation; radar penetrates adverse weather conditions but provides lower resolution; cameras deliver rich visual information but degrade in low-light scenarios; IMUs provide motion data independent of external conditions; and GNSS offers absolute positioning when available.

Advanced fusion strategies employ both early and late fusion paradigms. Early fusion combines raw sensor data before feature extraction, creating a unified representation for subsequent processing. Late fusion processes each sensor stream independently before merging results at the decision level. Hybrid approaches leverage benefits from both methodologies, adapting to environmental conditions dynamically.

Kalman filter variants remain fundamental to sensor fusion implementations, with Extended Kalman Filters (EKF) and Unscented Kalman Filters (UKF) handling non-linear vehicle dynamics. Factor graph optimization has emerged as a powerful alternative, representing sensor measurements as constraints in a probabilistic framework that can be solved to obtain optimal state estimates.
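
To make the factor graph idea concrete, the sketch below solves a toy one-dimensional pose graph: three odometry constraints between consecutive poses plus one loop-closure constraint back to the start. It is a deliberately simplified linear case written in plain Python under assumed measurements and weights; real systems work with nonlinear 2D or 3D poses and typically rely on dedicated solvers such as GTSAM or g2o. The names `optimize_pose_graph` and `solve_linear` are illustrative.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def optimize_pose_graph(num_poses, edges):
    """Weighted least squares over 1D poses; pose 0 is anchored at 0.0.

    Each edge (i, j, meas, weight) states that x_j - x_i should equal meas.
    """
    n = num_poses - 1
    h = [[0.0] * n for _ in range(n)]  # normal-equation matrix J^T W J
    g = [0.0] * n                      # right-hand side J^T W z
    for i, j, meas, w in edges:
        terms = ((i, -1.0), (j, 1.0))  # Jacobian entries of x_j - x_i
        for a, ja in terms:
            if a == 0:
                continue  # the anchored pose is not an unknown
            g[a - 1] += w * ja * meas
            for b, jb in terms:
                if b != 0:
                    h[a - 1][b - 1] += w * ja * jb
    return [0.0] + solve_linear(h, g)


# Odometry claims three 1.0 m steps; the loop closure measures 2.7 m in total.
edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (0, 3, 2.7, 1.0)]
poses = optimize_pose_graph(4, edges)
```

With equal weights, the 0.3 m disagreement between odometry and the loop closure is spread evenly over the four constraints, which is the behaviour that lets loop closures pull accumulated drift back out of a trajectory.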

Context-aware fusion algorithms represent the cutting edge, dynamically adjusting sensor weighting based on environmental conditions. These systems can detect when specific sensors become unreliable—such as cameras in fog or LiDAR in heavy rain—and reconfigure fusion parameters accordingly. Machine learning approaches further enhance this capability by learning optimal fusion strategies from extensive training data across diverse environmental conditions.
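
A simple way to picture condition-dependent weighting is inverse-variance fusion in which each sensor's assumed noise is scaled by a factor that depends on the detected environment. The sketch below fuses three range estimates of the same landmark; the baseline variances and degradation multipliers are invented for illustration and do not come from any sensor datasheet or vendor system.

```python
# Assumed baseline measurement variances in m^2 (illustrative values only).
BASE_VARIANCE = {"lidar": 0.01, "radar": 0.25, "camera": 0.04}

# Assumed variance multipliers per detected condition (illustrative values only).
DEGRADATION = {
    "clear": {"lidar": 1.0, "radar": 1.0, "camera": 1.0},
    "fog": {"lidar": 100.0, "radar": 1.0, "camera": 200.0},
}


def fuse(estimates, condition):
    """Inverse-variance weighted mean of per-sensor estimates.

    Returns the fused value and the normalized weight given to each sensor.
    """
    raw = {
        name: 1.0 / (BASE_VARIANCE[name] * DEGRADATION[condition][name])
        for name in estimates
    }
    total = sum(raw.values())
    fused = sum(raw[name] * estimates[name] for name in estimates) / total
    weights = {name: w / total for name, w in raw.items()}
    return fused, weights


# Range to the same landmark as reported by each sensor, in metres.
estimates = {"lidar": 10.02, "radar": 10.30, "camera": 9.95}

fused_clear, weights_clear = fuse(estimates, "clear")
fused_fog, weights_fog = fuse(estimates, "fog")
```

Under these assumed numbers LiDAR dominates the fused estimate in clear weather, while radar takes over in fog. A learned model could replace the fixed multiplier table, but the weighting step itself would stay the same.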

Fault detection and isolation (FDI) mechanisms form an essential component of robust fusion systems, continuously monitoring sensor health and identifying anomalous measurements. When sensor failures are detected, the system can gracefully degrade by reconfiguring the fusion architecture to rely more heavily on functioning sensors, maintaining operational capability even with partial sensor suite availability.
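
A widely used building block for this kind of monitoring is innovation gating: each measurement is compared with the filter's prediction, and measurements whose normalized error is statistically implausible are rejected. The sketch below handles a scalar measurement and flags a sensor as faulty after several consecutive rejections. The class name `InnovationMonitor` and the rejection count are assumptions for illustration; the gate value is the 95% chi-square quantile for one degree of freedom.

```python
CHI2_95_1DOF = 3.841  # 95% chi-square quantile, one degree of freedom


class InnovationMonitor:
    """Tracks gated measurements for one sensor (hypothetical helper)."""

    def __init__(self, max_consecutive_rejections=3):
        self.max_rejections = max_consecutive_rejections
        self.rejections = 0

    def check(self, z, z_pred, innovation_var):
        """Return True if measurement z is consistent with prediction z_pred.

        innovation_var is the predicted variance of (z - z_pred), i.e. the
        measurement noise plus the projected state uncertainty.
        """
        nis = (z - z_pred) ** 2 / innovation_var  # normalized innovation squared
        accepted = nis <= CHI2_95_1DOF
        self.rejections = 0 if accepted else self.rejections + 1
        return accepted

    @property
    def faulty(self):
        """True once the sensor has failed the gate several times in a row."""
        return self.rejections >= self.max_rejections


monitor = InnovationMonitor()

# A plausible reading passes the gate.
first_ok = monitor.check(z=10.1, z_pred=10.0, innovation_var=0.04)

# Three wildly inconsistent readings in a row, e.g. from an iced-over sensor.
later = [monitor.check(z=15.0, z_pred=10.0, innovation_var=0.04) for _ in range(3)]
sensor_flagged = monitor.faulty
```

Once a sensor is flagged, a fusion layer can drop it from the update step or inflate its noise, which is one way to realize the graceful degradation described above.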

Safety Standards and Certification Requirements

The implementation of SLAM systems for autonomous ground vehicles in harsh environments necessitates adherence to rigorous safety standards and certification requirements. ISO 26262, the international standard for functional safety in road vehicles, provides a comprehensive framework for ensuring that electronic and electrical systems maintain acceptable safety levels. For SLAM systems operating in challenging conditions, compliance with Automotive Safety Integrity Level (ASIL) classifications—ranging from A (lowest) to D (highest)—is essential, with most autonomous navigation systems requiring ASIL C or D certification due to their critical safety functions.

Beyond ISO 26262, UL 4600 specifically addresses autonomous vehicle safety, outlining requirements for validation and verification of perception systems like SLAM. This standard emphasizes the need for robust testing in diverse environmental conditions, particularly relevant for harsh environment operations where sensor degradation and algorithmic failures present heightened risks.

Regional regulatory frameworks impose additional requirements. The European Union's type-approval regulations for automated driving systems mandate extensive testing in adverse weather conditions. In the United States, the Department of Transportation's Automated Vehicles Comprehensive Plan is a policy framework rather than a binding rule, so demonstrating system reliability across varied environmental scenarios rests largely with developers. China's Intelligent Connected Vehicle Road Test Management Specification similarly emphasizes performance validation in challenging conditions.

IEEE 2846, developed under the project name P2846, addresses the assumptions used in safety-related models for automated driving systems, chiefly assumptions about the reasonably foreseeable behavior of other road users. Those models depend on perception and localization inputs, but the standard does not itself set thresholds for them. Developers therefore still need to define and justify the localization accuracy and mapping fidelity that must be maintained when environmental factors compromise sensor performance.

Certification processes for harsh-environment SLAM systems typically involve multi-stage validation. This includes simulation testing with environmental stress factors, controlled field testing in representative harsh conditions, and long-term durability assessment. Documentation requirements are particularly stringent, necessitating detailed failure mode analyses and mitigation strategies for environmental challenges like extreme temperatures, precipitation, dust, and electromagnetic interference.

Recent regulatory developments indicate a trend toward performance-based standards rather than prescriptive requirements. This shift acknowledges the rapid evolution of SLAM technologies and allows for innovation while maintaining safety. However, it also places greater responsibility on developers to demonstrate system robustness through comprehensive testing methodologies that specifically address harsh environment operation.

Compliance with these standards represents a significant engineering challenge but is essential for market acceptance and liability protection. Organizations developing SLAM systems for harsh environments must integrate safety considerations throughout the development lifecycle, from initial requirements specification through to post-deployment monitoring and updates.
